- Introduction

- The foundation: Neural networks and deep learning

- The evolution: Sequence models and transformers

- The breakthrough: Large language models and the scaling hypothesis

- From theory to application: Foundation models and fine-tuning

- The road ahead: Understanding limitations and future directions

- Conclusion: Building your AI learning journey

"We're not creating intelligent machines. We're creating machines that augment human intelligence in extraordinary ways." — Fei-Fei Li, Professor of Computer Science at Stanford University

Introduction

The rapid advancement of generative AI technologies has transformed our digital landscape, with tools like ChatGPT, DALL-E, and Midjourney capturing the imagination of millions. Behind these seemingly magical capabilities lies a fascinating progression of scientific breakthroughs and engineering innovations.

For learners interested in understanding how these systems work—beyond simply using them—there's never been a better time to explore the fundamental concepts powering generative AI and large language models (LLMs). Whether you're a student beginning your journey into data science or a professional looking to understand the technology reshaping your industry, gaining insight into how these models function opens doors to countless opportunities.

This guide breaks down the science behind modern AI systems, from their foundational building blocks to the sophisticated architectures that enable today's most powerful generative models. More importantly, we'll highlight excellent courses that can help you build expertise in each area, creating a clear learning path that evolves with your understanding.

The foundation: Neural networks and deep learning

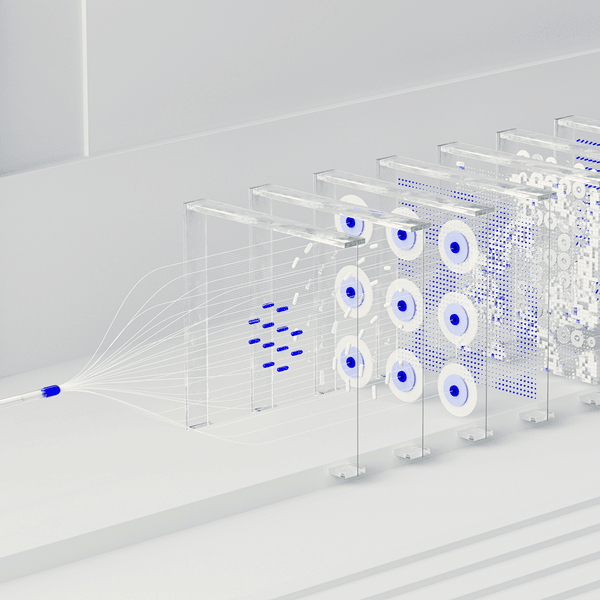

At the heart of modern AI lies a concept inspired by the human brain: neural networks. These computational structures consist of interconnected nodes (neurons) organized in layers, each performing simple mathematical operations that, when combined, can recognize patterns in data with remarkable accuracy.
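To make "simple mathematical operations" concrete, here is a minimal sketch of a two-layer network written in plain Python. The weights, the biases, and the names `dense_layer` and `forward` are hypothetical values chosen for illustration; a real network would learn its weights from data.

```python
import math

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense_layer(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of the inputs,
    adds a bias, and applies a nonlinearity."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

# Hypothetical weights for a network with 2 inputs, 2 hidden neurons, 1 output.
hidden_weights = [[0.5, -0.5], [0.25, 0.75]]
hidden_biases = [0.0, 0.0]
output_weights = [[1.0, -1.0]]
output_biases = [0.0]

def forward(inputs):
    """Pass the inputs through the hidden layer, then the output layer."""
    hidden = dense_layer(inputs, hidden_weights, hidden_biases)
    return dense_layer(hidden, output_weights, output_biases)

prediction = forward([1.0, 1.0])
print(prediction)
```

Each neuron only multiplies, adds, and squashes. The pattern-recognition ability comes from stacking many of them and tuning the weights.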

Deep learning—the approach powering today's most impressive AI systems—expanded on this foundation by introducing neural networks with many layers (hence "deep"), enabling models to learn increasingly abstract representations of data. This breakthrough allowed AI systems to move beyond simple pattern recognition to understand complex relationships in:

- Images: Recognizing not just edges and shapes, but objects, scenes, and even artistic styles

- Text: Identifying not just words, but grammar, context, and semantic meaning

- Audio: Detecting not just sounds, but speech patterns, emotions, and musical structures

The evolution from basic neural networks to deep learning represented a pivotal moment in AI development, laying the groundwork for everything that followed. What made this possible wasn't just theoretical advancement but the convergence of three critical factors: vast amounts of digital data, significant improvements in computing power (especially GPUs), and algorithmic refinements that made backpropagation, the standard method for training networks, efficient on deep architectures.
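Backpropagation is, at its core, the chain rule used to work out how each weight should change to reduce the error. The sketch below shows the simplest possible case, a "network" with a single weight fitted by gradient descent. The toy data, the learning rate, and the function names are assumptions made for illustration.

```python
# Toy data generated from y = 2 * x; the model is a single weight w.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

def mean_squared_error(w):
    """Average squared gap between predictions w * x and targets y."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

def gradient(w):
    """d(loss)/dw by the chain rule: 2 * (prediction - target) * x, averaged."""
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)

w = 0.0
learning_rate = 0.05
for _ in range(100):
    # Step against the gradient to reduce the error.
    w -= learning_rate * gradient(w)

print(w, mean_squared_error(w))
```

Deep networks repeat this same update for millions or billions of weights, with the chain rule passing the error signal backward layer by layer.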

Understanding these foundations is essential for anyone seeking to grasp how modern generative AI works. The journey from these basic concepts to today's sophisticated LLMs reflects a fascinating progression of ideas, each building upon what came before.

For many learners, a comprehensive course on neural networks and deep learning is the strongest starting point for future exploration. Through OpenCourser, you can find courses that match your preferred learning style, whether you thrive with hands-on programming exercises, theoretical lectures, or a balanced approach that combines both. The platform's detailed course summaries and review highlights make it easy to identify which programs best fit your specific needs and prior experience level.

The evolution: Sequence models and transformers

While deep neural networks revolutionized AI's capabilities with static data like images, they initially struggled with sequential information where context and order matter—text, speech, time series data, and more. This limitation gave rise to specialized architectures designed specifically for handling sequences: Recurrent Neural Networks (RNNs), Long Short-Term Memory networks (LSTMs), and eventually, transformers.

RNNs introduced a novel concept: the ability to "remember" previous inputs while processing current ones. By maintaining a hidden state that carries information forward, these networks could theoretically capture long-range dependencies in sequences. However, in practice, they suffered from the vanishing gradient problem: the training signal shrinks as it is propagated back through many time steps, which limited their effectiveness with longer sequences.
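A rough sketch of the idea, using a single-number hidden state and hypothetical weights (`w_hidden`, `w_input`), shows both the memory and its weakness. It measures how much the first input still affects the final hidden state after a short gap and after a long one. That influence is a product of many small factors, the same shrinking product that makes gradients vanish during training.

```python
import math

def rnn_step(hidden, x, w_hidden, w_input):
    """One recurrent update: mix the previous hidden state with the new input."""
    return math.tanh(w_hidden * hidden + w_input * x)

def run_rnn(inputs, w_hidden=0.5, w_input=1.0):
    """Process a sequence one element at a time and return the final hidden state."""
    hidden = 0.0
    for x in inputs:
        hidden = rnn_step(hidden, x, w_hidden, w_input)
    return hidden

# In each pair, the two sequences differ only in their first element.
short_gap = run_rnn([1.0] + [0.0] * 2) - run_rnn([0.0] + [0.0] * 2)
long_gap = run_rnn([1.0] + [0.0] * 20) - run_rnn([0.0] + [0.0] * 20)

print(short_gap, long_gap)
```

With these illustrative weights, the first input is still clearly visible after two steps but has almost no effect after twenty.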

LSTMs and their variants (like GRUs) addressed this limitation through more sophisticated memory mechanisms that could selectively remember or forget information, significantly improving performance on language tasks. These architectures powered the first generation of practical language models and translation systems, representing a major step forward in AI's ability to work with sequential data.

The true paradigm shift came in 2017 with the introduction of the transformer architecture in the landmark paper "Attention Is All You Need." This breakthrough approach:

- Eliminated recurrence, so every position in a sequence can be processed in parallel during training, which dramatically sped up training

- Introduced the multi-head attention mechanism, enabling models to focus on different parts of the input sequence simultaneously

- Created more direct pathways for information flow, helping preserve context across very long sequences

The transformer's elegant design addressed fundamental limitations of previous sequence models while significantly improving performance, establishing it as the foundation for virtually all state-of-the-art language models that followed.
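The core attention computation is compact enough to sketch in plain Python. This is a simplified, single-head version without the learned projection matrices a real transformer applies first, and the token vectors are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        # Score this query against every key, then normalize the scores.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of all value vectors.
        outputs.append([
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ])
    return outputs

# Three hypothetical token vectors; self-attention uses them as Q, K, and V.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextualized = attention(tokens, tokens, tokens)
print(contextualized)
```

Every token's output depends on every other token through a single weighted average. Nothing in the loop over queries depends on a previous iteration, which is why the work can be done in parallel.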

Understanding the progression from basic sequence models to transformers provides crucial insight into why modern language models are so capable. Courses focused on this evolution typically combine theoretical explanations with practical implementations, allowing you to build working models that demonstrate these concepts in action. OpenCourser's detailed syllabus previews help you identify which courses include hands-on programming assignments that reinforce theoretical learning—a crucial factor for many learners trying to master these complex topics.

The breakthrough: Large language models and the scaling hypothesis

The true explosion in generative AI capabilities came with the development of large language models trained on unprecedented amounts of data. This advancement was driven largely by the "scaling hypothesis"—the observation that continuously increasing model size (parameters), training data, and computational resources leads to emergent capabilities not present in smaller models.
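Scaling trends are often summarized as a power law, in which loss falls smoothly as the parameter count grows. The sketch below assumes that shape. The constants `scale` and `exponent` are made-up illustrative values, not measurements from any real model, and the curve describes only the smooth trend in loss, not the emergence of specific abilities.

```python
def power_law_loss(parameters, scale=1.0e13, exponent=0.08):
    """Illustrative loss curve: loss falls as a power of the parameter count.
    Both constants are hypothetical and chosen only for demonstration."""
    return (scale / parameters) ** exponent

sizes = [1e8, 1e9, 1e10, 1e11]
losses = [power_law_loss(n) for n in sizes]

# Under a power law, every 10x increase in size multiplies loss by the same factor.
ratios = [later / earlier for earlier, later in zip(losses, losses[1:])]

for n, loss in zip(sizes, losses):
    print(f"{n:.0e} parameters -> illustrative loss {loss:.3f}")
```

The practical consequence of a curve like this is predictability: if the trend holds, the benefit of a larger model can be estimated before paying to train it.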

The progression from BERT and GPT-2 to models like GPT-4, Claude, and Llama 2 demonstrated that scale unlocks surprising new abilities:

- Few-shot learning: The ability to perform new tasks from just a few examples

- In-context learning: Using information provided in the prompt to solve problems

- Reasoning: Breaking down complex questions into logical steps

- Multimodality: Processing and generating multiple types of content (text, images, code) when the training data and input pipeline include them
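Few-shot and in-context learning both come down to what you put in the prompt. As a minimal sketch, the hypothetical helper below assembles an instruction, a couple of worked examples, and a new input into one piece of text. It does not call any model, and the "Review:" and "Sentiment:" labels are just one possible layout.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble an instruction, worked examples, and a new input into one prompt."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # End with the new input and an empty slot for the model to complete.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [
        ("I loved this course.", "positive"),
        ("The lectures were confusing.", "negative"),
    ],
    "Clear explanations and great exercises.",
)
print(prompt)
```

No weights change here: the examples live only in the prompt, and the model is expected to continue the pattern.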

What makes this scaling approach so powerful is that it doesn't require fundamental architectural changes—the same transformer design, when implemented at sufficient scale and trained on diverse data, naturally develops increasingly sophisticated capabilities.

This revelation changed how researchers think about AI development: instead of hand-engineering specific capabilities, the focus shifted to creating conditions (larger models, better data, more efficient training) that allow these abilities to emerge organically through learning from examples.

For learners looking to understand modern LLMs, courses that specifically address scaling effects and emergent abilities provide crucial context that more general AI courses might miss. OpenCourser's "Traffic Lights" section can be particularly helpful here, highlighting whether courses cover cutting-edge topics or focus more on established concepts. For working professionals, the platform's "Activities" recommendations can suggest complementary projects that help solidify understanding of these complex systems through hands-on exploration.

From theory to application: Foundation models and fine-tuning

The development of general-purpose "foundation models" trained on broad data has fundamentally changed the AI development landscape. Rather than building specialized models from scratch, practitioners now typically start with pre-trained foundation models and adapt them to specific tasks through techniques like:

- Fine-tuning: Continuing training on domain-specific data to specialize the model

- RLHF (Reinforcement Learning from Human Feedback): Aligning model outputs with human preferences

- Parameter-efficient fine-tuning: Methods like LoRA that adapt models with minimal resource requirements

- Prompting strategies: Techniques for effectively communicating with models to elicit desired behaviors
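To give a feel for why parameter-efficient methods are cheap, here is a rough sketch of the arithmetic behind LoRA. The original weight matrix stays frozen, and only two small matrices whose product forms a low-rank update are trained. The matrix sizes, values, and function names are assumptions for illustration; this is not the API of any particular library.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_weight(w_frozen, lora_b, lora_a, alpha, rank):
    """Effective weight: the frozen W plus the scaled low-rank update B @ A."""
    update = matmul(lora_b, lora_a)
    scale = alpha / rank
    return [
        [w + scale * u for w, u in zip(w_row, u_row)]
        for w_row, u_row in zip(w_frozen, update)
    ]

# Hypothetical 4x4 frozen weight (an identity matrix) with a rank-1 adapter.
w_frozen = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
lora_b = [[0.0], [0.0], [0.0], [0.0]]  # 4x1, starts at zero
lora_a = [[0.1, 0.2, 0.3, 0.4]]        # 1x4

adapted = lora_weight(w_frozen, lora_b, lora_a, alpha=1.0, rank=1)

trainable = len(lora_b) * len(lora_b[0]) + len(lora_a) * len(lora_a[0])
frozen = len(w_frozen) * len(w_frozen[0])
print(trainable, frozen)
```

Because `lora_b` starts at zero, the adapted model initially behaves exactly like the original. The saving looks modest at this toy size, but the adapter grows with 2 × dimension × rank while the full matrix grows with dimension squared, so the gap widens quickly for large layers.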

This shift represents a democratization of AI capabilities, allowing organizations without massive computational resources to leverage state-of-the-art models for specific applications. It's especially significant for learners, as it means you can build practical AI systems without necessarily mastering every detail of model architecture or training.

The application landscape for these models spans virtually every industry:

- Healthcare: Medical text analysis, diagnostic assistance, drug discovery

- Education: Personalized tutoring, content creation, assessment

- Creative fields: Writing assistance, image generation, music composition

- Business: Document analysis, customer service automation, market research

For many learners, especially those focused on practical applications, starting with courses on fine-tuning and prompting can provide immediate value while building towards deeper technical understanding. OpenCourser's "Career Center" section helps connect these learning paths to specific professional roles, showing how these skills translate to career opportunities. The platform's "Reading List" recommendations can also supplement online courses with foundational texts that provide deeper theoretical context.

The road ahead: Understanding limitations and future directions

While current generative AI systems demonstrate remarkable capabilities, understanding their limitations is just as important as appreciating their strengths. These models face several significant challenges:

- Hallucinations: Generating plausible-sounding but factually incorrect information

- Context windows: Limited ability to process very long documents or conversations

- Reasoning limitations: Struggling with complex logical or mathematical reasoning

- Alignment challenges: Ensuring models behave according to human values and intentions

- Computational efficiency: The environmental and economic costs of training and running large models

Researchers are actively addressing these limitations through approaches like retrieval-augmented generation (connecting models to external knowledge sources), specialized reasoning techniques, more efficient architectures, and improved alignment methods.
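Retrieval-augmented generation can be sketched in a few lines: find the most relevant document, then place it in the prompt ahead of the question. The example below is a toy version under stated assumptions. Word counts stand in for the learned embeddings a real system would use, the two documents are invented, and no model is called.

```python
import math
from collections import Counter

def embed(text):
    """Toy 'embedding': lowercase word counts. Real systems use learned vectors."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(question, documents):
    """Return the document most similar to the question."""
    q = embed(question)
    return max(documents, key=lambda doc: cosine(q, embed(doc)))

documents = [
    "LoRA adapts a model by training small low-rank matrices.",
    "Transformers use attention to relate tokens in a sequence.",
]
question = "How do transformers use attention?"

context = retrieve(question, documents)
augmented_prompt = f"Context: {context}\n\nQuestion: {question}\nAnswer:"
print(augmented_prompt)
```

Grounding the answer in retrieved text gives the model something to quote rather than guess, which is why this approach is used against hallucinations.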

The field continues to evolve rapidly, with emerging research directions including:

- Multimodal models that combine language, vision, and other modalities

- Models with improved reasoning capabilities for complex tasks

- More computationally efficient architectures that reduce resource requirements

- Methods for ensuring model safety, fairness, and alignment with human values

For learners seeking to stay at the cutting edge, courses that specifically address current limitations and research frontiers provide valuable perspective. OpenCourser's regular updates ensure that course recommendations reflect the latest developments in this rapidly evolving field. The platform's "Save to list" feature allows you to bookmark courses of interest as you map out your personal learning journey through this fascinating landscape.

Conclusion: Building your AI learning journey

Understanding generative AI and LLMs requires navigating a complex landscape of interconnected concepts, from foundational deep learning principles to specialized architectures and training techniques. Rather than attempting to master everything at once, consider building your knowledge progressively:

- Start with solid fundamentals in neural networks and deep learning

- Progress to sequence models and transformer architectures

- Explore large language models and scaling effects

- Learn about fine-tuning and application development

- Study current limitations and future research directions

This structured approach allows you to build intuition at each stage before tackling more advanced concepts. Remember that theoretical understanding and practical application reinforce each other—courses that combine both elements often provide the most effective learning experience.

Through OpenCourser's comprehensive search capabilities, you can find courses that match your specific interests, learning style, and prior experience level. The platform's detailed course information, review summaries, and supplementary resources help ensure that you invest your valuable learning time wisely.

As generative AI continues to transform industries and create new opportunities, developing a deep understanding of how these systems work positions you not just to use these tools effectively, but potentially to contribute to their advancement. Whether you're pursuing a career in AI development or simply seeking to understand the technology reshaping our world, the journey of learning is both intellectually rewarding and professionally valuable.

Begin your exploration today by saving courses that interest you to your OpenCourser list, and take the first step on your path to mastering the science behind modern AI.