Statistical Learning: A Comprehensive Guide

Statistical learning is a field that sits at the intersection of statistics and computer science, focused on developing methods to make sense of complex datasets. At a high level, it involves building mathematical models to understand data, identify patterns, and make predictions or decisions. For those new to the area, think of it as teaching computers to learn from data in much the same way humans learn from experience, but with a rigorous mathematical and statistical underpinning. It's a discipline that empowers us to extract meaningful insights from the vast amounts of information generated in our modern world.

Working in statistical learning can be intellectually stimulating. It offers the chance to solve challenging puzzles hidden within data, leading to discoveries that can have a real-world impact across diverse sectors. Furthermore, the field is dynamic and constantly evolving, providing continuous learning opportunities as new algorithms, techniques, and applications emerge. The ability to transform raw data into actionable knowledge is a powerful skill, making roles in this domain both engaging and highly sought after.

Introduction to Statistical Learning

This section aims to provide a clear understanding of what statistical learning entails, how it relates to and differs from associated fields, its practical applications, and the foundational knowledge beneficial for anyone looking to explore this area further. It is designed to be accessible, especially for those who are new to the concepts or are considering a career shift into data-oriented roles.

Defining Statistical Learning and Its Scope

Statistical learning theory is a framework for machine learning that draws on statistics and functional analysis and deals with the problem of finding a predictive function based on data. More broadly, statistical learning refers to a set of tools and techniques for understanding data. These tools can be broadly categorized into supervised and unsupervised learning methods, which we will explore later. The primary goal is often to build models that can predict an output based on a set of inputs or to discover underlying patterns and structures within the data itself.

The scope of statistical learning is vast and continues to expand. It ranges from relatively simple tasks like predicting house prices based on features (e.g., size, location) to complex applications like identifying cancerous cells from medical images or powering recommendation engines on e-commerce websites. It is a foundational element of data science and plays a crucial role in the development of artificial intelligence systems. The emphasis is on creating models that are not only accurate but also interpretable and generalizable to new, unseen data.

Its applications span across numerous industries, including finance (fraud detection, algorithmic trading), healthcare (disease diagnosis, drug discovery), marketing (customer segmentation, targeted advertising), technology (search engines, natural language processing), and scientific research (genomics, climate modeling). The ability to learn from data and make predictions is a powerful capability that drives innovation and efficiency in many domains.

Statistical Learning, Machine Learning, and Traditional Statistics: Understanding the Nuances

While the terms "statistical learning," "machine learning," and "traditional statistics" overlap and are sometimes used loosely, there are subtle but important distinctions. Traditional statistics often focuses on inference: drawing conclusions about a population based on a sample of data. This typically involves hypothesis testing, confidence intervals, and understanding the underlying probability distributions. The emphasis is often on understanding the relationships between variables and the significance of these relationships.

Machine learning, on the other hand, is a broader field within computer science that gives computers the ability to learn without being explicitly programmed. While it heavily utilizes statistical methods, its primary focus is often on prediction accuracy and performance on new data. Machine learning encompasses a wide array of algorithms, some of which may not have strong statistical underpinnings but demonstrate high predictive power.

Statistical learning can be seen as a subfield of statistics that has a significant overlap with machine learning. It brings the rigor of statistical modeling to the predictive tasks often associated with machine learning. It emphasizes understanding the trade-offs in model complexity, assessing model uncertainty, and ensuring that models generalize well. While machine learning might sometimes prioritize predictive power even with "black box" models, statistical learning often strives for models that are both predictive and interpretable.

Real-World Impact: Applications Across Industries

The principles of statistical learning are not just theoretical constructs; they have tangible impacts across a multitude of industries, transforming how businesses operate and how scientific discoveries are made. In the financial sector, statistical learning algorithms are crucial for credit scoring, assessing the risk of loan defaults, detecting fraudulent transactions in real-time, and developing sophisticated algorithmic trading strategies.

Healthcare has also been revolutionized by statistical learning. It's used in medical diagnosis, for instance, to analyze patient data and medical images (like MRIs or X-rays) to identify diseases such as cancer at earlier stages. Personalized medicine, which aims to tailor treatments based on an individual's genetic makeup and other characteristics, heavily relies on statistical learning models to predict treatment efficacy and potential side effects. Pharmaceutical companies use these methods in drug discovery and clinical trial design.

In the technology sphere, statistical learning powers many of the services we use daily. Search engines use it to rank search results, e-commerce platforms use it for product recommendations, and social media platforms use it to personalize content feeds. Spam filters in email clients are a classic example of a classification problem solved using statistical learning. Furthermore, areas like natural language processing (NLP) for chatbots and translation services, and computer vision for image and facial recognition, all have strong foundations in statistical learning techniques.

Other sectors also benefit significantly. Retailers use statistical learning for demand forecasting and inventory management. Manufacturing industries apply it for predictive maintenance to anticipate equipment failures. In environmental science, it's used to model climate change and predict weather patterns. The versatility and power of statistical learning make it an indispensable tool for extracting value and knowledge from data in nearly every field imaginable.

Foundational Knowledge for Aspiring Learners

Embarking on a journey into statistical learning requires a grasp of certain fundamental concepts. A solid understanding of basic mathematics is crucial. This includes linear algebra (vectors, matrices, matrix operations), calculus (derivatives, gradients), and probability theory (random variables, distributions, Bayes' theorem). These mathematical tools form the language in which statistical learning models are expressed and understood.

Some familiarity with basic statistics is also highly beneficial. Concepts like mean, median, variance, standard deviation, hypothesis testing, and p-values provide a good starting point. Understanding different types of data (categorical, numerical) and basic data exploration techniques is also important. While deep statistical expertise can be developed over time, a foundational layer will make the learning process smoother.

Finally, a basic understanding of programming concepts can be very helpful, as practical application of statistical learning often involves writing code. Languages like Python and R are widely used in the field due to their extensive libraries and tools for data analysis and machine learning. While you don't need to be an expert programmer from day one, being comfortable with variables, loops, functions, and data structures will enable you to implement and experiment with different statistical learning methods.

Core Concepts in Statistical Learning

To truly appreciate and apply statistical learning, one must become familiar with its core concepts. These ideas form the bedrock upon which more advanced techniques and applications are built. This section delves into these fundamental principles, aiming to provide clarity for students and practitioners alike, while keeping the explanations accessible.

Supervised vs. Unsupervised Learning: Two Main Paradigms

Statistical learning methods are broadly categorized into two main types: supervised learning and unsupervised learning. The distinction lies in the nature of the data available and the goals of the learning task.

In supervised learning, the algorithm learns from a labeled dataset, meaning each data point (or observation) is tagged with a known outcome or label. The goal is to learn a mapping function that can predict the output variable for new, unseen input data. Think of it as learning with a "teacher" who provides the correct answers during the training phase. Supervised learning problems can be further divided into:

- Regression problems: Where the output variable is a continuous value. For example, predicting the price of a house based on its features (size, number of bedrooms, location) or forecasting stock prices.

- Classification problems: Where the output variable is a categorical value, meaning it belongs to one of several predefined classes or groups. Examples include identifying whether an email is spam or not spam, or classifying an image as containing a cat or a dog.

Unsupervised learning, on the other hand, deals with unlabeled data. The algorithm tries to learn patterns, structures, or relationships directly from the input data without any predefined output labels. There is no "teacher" providing correct answers. The objective is often to explore the data and discover hidden insights. Common unsupervised learning tasks include:

- Clustering: Grouping similar data points together based on their characteristics. For example, segmenting customers into different groups based on their purchasing behavior.

- Dimensionality reduction: Reducing the number of variables (features) in a dataset while preserving important information. This can simplify models, reduce computational cost, and help with visualization. Principal Component Analysis (PCA) is a well-known dimensionality reduction technique.

- Association rule mining: Discovering interesting relationships or associations among variables in large datasets. A classic example is "market basket analysis," which identifies products that are frequently purchased together.

Understanding the difference between these two paradigms is crucial as it dictates the type of algorithms used and the approach taken to solve a particular data problem.

Evaluating Success: Model Metrics and Validation

Building a statistical learning model is only half the battle; evaluating its performance is equally critical. How do we know if a model is good? We use various model evaluation metrics and validation techniques. The choice of metric depends heavily on the type of problem (regression or classification) and the specific goals of the application.

For regression problems, common metrics include:

- Mean Squared Error (MSE): Measures the average squared difference between the predicted values and the actual values. Lower MSE indicates a better fit.

- Root Mean Squared Error (RMSE): The square root of MSE, which brings the metric back to the original units of the output variable, making it more interpretable.

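- R-squared (Coefficient of Determination): Represents the proportion of the variance in the dependent variable that is predictable from the independent variables. For a least-squares linear model with an intercept, evaluated on its training data, it ranges from 0 to 1, with higher values indicating a better fit. On held-out data it can be negative when the model predicts worse than simply using the mean.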
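
As a minimal sketch of how these three regression metrics are computed, the following pure-Python snippet uses made-up values. The function name `regression_metrics` and the sample lists `y_true` and `y_pred` are illustrative assumptions, not taken from any particular library or dataset.

```python
import math

def regression_metrics(y_true, y_pred):
    """Return (MSE, RMSE, R-squared) for two equal-length sequences."""
    n = len(y_true)
    mean_y = sum(y_true) / n
    # Residual sum of squares: squared prediction errors.
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    # Total sum of squares: squared deviations from the mean of y_true.
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    mse = ss_res / n
    rmse = math.sqrt(mse)
    r2 = 1 - ss_res / ss_tot
    return mse, rmse, r2

# Illustrative values only.
y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.0, 5.0, 8.0, 9.0]
mse, rmse, r2 = regression_metrics(y_true, y_pred)
print(mse, rmse, r2)
```

Because R-squared here compares the model's squared error with that of always predicting the mean, a model that does worse than the mean yields a negative value.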

For classification problems, metrics often revolve around a confusion matrix, which summarizes the correct and incorrect predictions. Key metrics include:

- Accuracy: The proportion of correctly classified instances. While intuitive, it can be misleading for imbalanced datasets (where one class is much more frequent than others).

- Precision: Of all instances predicted as positive, what proportion was actually positive? (True Positives / (True Positives + False Positives)). Important when the cost of false positives is high.

- Recall (Sensitivity or True Positive Rate): Of all actual positive instances, what proportion was correctly predicted as positive? (True Positives / (True Positives + False Negatives)). Important when the cost of false negatives is high.

- F1-Score: The harmonic mean of precision and recall, providing a single score that balances both. Useful for imbalanced classes.

- Area Under the ROC Curve (AUC-ROC): The ROC curve plots the true positive rate against the false positive rate at various threshold settings. AUC represents the probability that the classifier will rank a randomly chosen positive instance higher than a randomly chosen negative one.
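
The confusion-matrix metrics above can be sketched in a few lines of plain Python. The labels below are invented to mimic an imbalanced dataset, and returning 0.0 when a denominator is zero is a convention assumed here for simplicity, not a universal rule.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    accuracy = (tp + tn) / len(pairs)
    # Assumed convention: report 0.0 when a ratio is undefined.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Illustrative imbalanced data: 3 positives out of 10 observations.
y_true = [1, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [1, 0, 0, 0, 0, 0, 0, 1, 0, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

In this toy example accuracy looks respectable while recall is low, which is the kind of gap that makes accuracy misleading on imbalanced data.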

Beyond metrics, validation techniques are essential to ensure that a model generalizes well to new, unseen data and isn't just memorizing the training data (a phenomenon called overfitting). A common technique is cross-validation, such as k-fold cross-validation. Here, the data is split into 'k' subsets (folds). The model is trained on k-1 folds and evaluated on the remaining fold. This process is repeated k times, with each fold used as the test set once. The average performance across the k folds gives a more robust estimate of the model's performance on unseen data.
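
The k-fold procedure can be sketched as follows. To keep the example self-contained, the "model" is a deliberately trivial one that predicts the mean of the training targets. The helper names `k_fold_indices` and `cross_validate_mean_model` are hypothetical, and the folds are contiguous and unshuffled, whereas in practice the data is usually shuffled before splitting.

```python
def k_fold_indices(n, k):
    """Split range(n) into k contiguous folds whose sizes differ by at most one."""
    base, extra = divmod(n, k)
    folds, start = [], 0
    for i in range(k):
        size = base + (1 if i < extra else 0)
        folds.append(list(range(start, start + size)))
        start += size
    return folds

def cross_validate_mean_model(y, k):
    """Average held-out MSE of a model that always predicts the training mean."""
    scores = []
    for held_out in k_fold_indices(len(y), k):
        held = set(held_out)
        # Train on the other k-1 folds.
        train = [y[i] for i in range(len(y)) if i not in held]
        prediction = sum(train) / len(train)
        # Evaluate on the fold that was left out.
        mse = sum((y[i] - prediction) ** 2 for i in held_out) / len(held_out)
        scores.append(mse)
    return sum(scores) / k

# Illustrative targets only.
y = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
folds = k_fold_indices(len(y), 3)
cv_mse = cross_validate_mean_model(y, 3)
print(folds, cv_mse)
```

Swapping the mean predictor for a real model changes only the two lines that fit and predict; the splitting and averaging logic stays the same.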

The Balancing Act: Bias-Variance Tradeoff and Preventing Overfitting

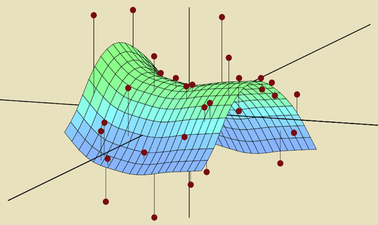

A central challenge in statistical learning is managing the bias-variance tradeoff. This concept is fundamental to building models that perform well not just on the data they were trained on, but also on new, unseen data.

Bias refers to the error introduced by approximating a real-world problem, which may be complex, by a much simpler model. Models with high bias pay little attention to the training data and oversimplify the underlying patterns. This leads to underfitting, where the model performs poorly on both the training data and new data because it fails to capture the true relationships.

Variance, on the other hand, refers to the amount by which the model's learned function would change if it were trained on a different training dataset. Models with high variance pay too much attention to the training data, capturing not only the underlying patterns but also the noise. This leads to overfitting, where the model performs very well on the training data but poorly on new, unseen data because it has essentially memorized the training set, including its idiosyncrasies.

The bias-variance tradeoff describes the tension between these two sources of error. Generally, as you decrease a model's bias (e.g., by making it more complex), its variance tends to increase, and vice versa. The goal is to find a sweet spot: a model complexity that minimizes the expected prediction error, which for squared-error loss is the sum of squared bias, variance, and irreducible error (the inherent noise in the data that no model can eliminate).
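
For readers who prefer a formula, the decomposition can be written out under one common set of assumptions: the data follow y = f(x) + ε with noise of mean zero and variance σ², and the expectation is taken over both the noise and the random training set used to fit the estimate. The symbols f, f̂, x₀, y₀, and σ² are notation introduced here for illustration.

```latex
\mathbb{E}\big[(y_0 - \hat{f}(x_0))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat{f}(x_0)] - f(x_0)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathrm{Var}\big(\hat{f}(x_0)\big)}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible error}}
```

The first two terms depend on the chosen model and can be traded against each other; the last term is a floor that no model can go below.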

Preventing overfitting is a key aspect of managing this tradeoff. Techniques to combat overfitting include:

- Cross-validation: As mentioned earlier, helps in assessing how well the model generalizes.

- Regularization: Adding a penalty term to the model's objective function to discourage overly complex models. Common types include L1 (Lasso) and L2 (Ridge) regularization.

- Pruning (for tree-based models): Simplifying decision trees by removing branches that contribute little to predictive power on unseen data.

- Early stopping: Stopping the training process before the model starts to overfit, often monitored by performance on a validation set.

- Using more data: Generally, more training data can help models generalize better.

- Feature selection: Choosing only the most relevant features to reduce model complexity and noise.
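
To make the idea of a regularization penalty concrete, here is a minimal sketch of L2 (ridge) regularization for the simplest possible case: one feature and no intercept. Minimizing the sum of squared errors plus `lam` times the squared slope gives the closed form used below. The function name `ridge_slope`, the parameter `lam`, and the data are assumptions made for illustration.

```python
def ridge_slope(x, y, lam):
    """Slope w minimizing sum((y - w*x)^2) + lam * w^2 (one feature, no intercept)."""
    sxy = sum(a * b for a, b in zip(x, y))
    sxx = sum(a * a for a in x)
    # The penalty adds lam to the denominator, shrinking the slope toward zero.
    return sxy / (sxx + lam)

# Illustrative data lying exactly on y = 2x.
x = [1.0, 2.0, 3.0]
y = [2.0, 4.0, 6.0]

penalties = (0.0, 1.0, 14.0)
slopes = [ridge_slope(x, y, lam) for lam in penalties]
for lam, w in zip(penalties, slopes):
    print(f"lam={lam}: slope={w:.4f}")
```

With no penalty the fit recovers the unregularized slope, and larger penalties pull it progressively toward zero, trading a little bias for lower variance.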

Effectively navigating the bias-variance tradeoff is crucial for developing robust and reliable statistical learning models.

A Glimpse into Common Algorithms

The world of statistical learning is populated by a diverse array of algorithms, each suited for different types of problems and data. Here's a brief introduction to some of the most common categories:

Regression Algorithms:

- Linear Regression: One of the simplest and most widely used regression algorithms. It models the relationship between a dependent variable and one or more independent variables by fitting a linear equation to the observed data.

- Polynomial Regression: An extension of linear regression that models the relationship as an nth degree polynomial, allowing for curved relationships.

- Support Vector Regression (SVR): An adaptation of Support Vector Machines for regression tasks. It fits a function that ignores errors smaller than a chosen threshold (epsilon) and penalizes only the deviations that exceed it.
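
As a small illustration of the first item in this list, simple linear regression with one predictor has a closed-form least-squares solution. The sketch below assumes a single feature and invented data; `fit_line` is a hypothetical helper name.

```python
def fit_line(x, y):
    """Least-squares slope and intercept for a single predictor."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Slope = covariance(x, y) / variance(x), up to a common factor of n.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    slope = sxy / sxx
    # The fitted line passes through the point of means.
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Illustrative data lying exactly on y = 2x + 1.
x = [1.0, 2.0, 3.0, 4.0]
y = [3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_line(x, y)
print(slope, intercept)
```

With several predictors the same idea is expressed with matrices, which is where the linear algebra mentioned earlier comes in.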

Classification Algorithms:

- Logistic Regression: Despite its name, logistic regression is a classification algorithm. It models the probability of a binary outcome (e.g., yes/no, 0/1) using a logistic function.

- k-Nearest Neighbors (k-NN): A non-parametric algorithm that classifies a new data point based on the majority class of its 'k' closest neighbors in the feature space.

- Support Vector Machines (SVM): A powerful algorithm that finds an optimal hyperplane that best separates data points belonging to different classes in a high-dimensional space.

- Decision Trees: Tree-like models where each internal node represents a "test" on an attribute, each branch represents the outcome of the test, and each leaf node represents a class label. They are intuitive and easy to interpret.

- Random Forests: An ensemble learning method that builds many decision trees during training, each on a bootstrap sample of the data with a random subset of features considered at each split. For classification, the forest predicts the class chosen by the most trees; for regression, it averages the trees' predictions. Random forests often outperform single decision trees and are considerably less prone to overfitting, although they can still overfit noisy data.

- Naive Bayes Classifiers: A family of probabilistic classifiers based on applying Bayes' theorem with strong (naive) independence assumptions between the features.
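
Of the classifiers listed, k-nearest neighbors is perhaps the easiest to write from scratch. The following is a minimal sketch, assuming Euclidean distance and a simple majority vote; the points, labels, and the function name `knn_predict` are made up for illustration. It requires Python 3.8 or later for `math.dist`.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Pair each training point's distance to the query with its label.
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    # most_common(1) returns the label with the highest vote count.
    return Counter(nearest_labels).most_common(1)[0][0]

# Illustrative 2-D data forming two well-separated groups.
points = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 6), (6, 7)]
labels = ["a", "a", "a", "b", "b", "b"]

pred_near_a = knn_predict(points, labels, (2, 2))
pred_near_b = knn_predict(points, labels, (6, 5))
print(pred_near_a, pred_near_b)
```

Choosing an odd `k` for two-class problems avoids tied votes, and in practice features are usually scaled first so that no single feature dominates the distance.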

Clustering Algorithms:

- k-Means Clustering: An iterative algorithm that partitions a dataset into 'k' distinct, non-overlapping clusters. It aims to minimize the variance within each cluster.

- Hierarchical Clustering: Builds a hierarchy of clusters, either agglomerative (bottom-up, starting with individual points and merging them) or divisive (top-down, starting with all points and splitting them).

- DBSCAN (Density-Based Spatial Clustering of Applications with Noise): A density-based clustering algorithm that can find arbitrarily shaped clusters and identify noise points.
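
The iterative nature of k-means can be seen in a short sketch of the standard assign-then-update loop (often called Lloyd's algorithm). To keep it brief, this version assumes one-dimensional data, caller-supplied starting centroids, and a fixed number of iterations rather than a convergence check; `k_means_1d` is a hypothetical name.

```python
def k_means_1d(points, centroids, iterations=10):
    """Run assign/update steps of k-means on 1-D data from given start centroids."""
    centroids = list(centroids)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        # An empty cluster keeps its previous centroid (assumed convention).
        centroids = [sum(c) / len(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return centroids, clusters

# Illustrative data with two obvious groups and a poor starting guess.
points = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
centroids, clusters = k_means_1d(points, centroids=[1.0, 2.0])
print(centroids, clusters)
```

Results depend on the starting centroids, which is why practical implementations typically run the algorithm from several random initializations and keep the best result.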

This is just a small sample, and many other algorithms and variations exist. The choice of algorithm depends on the specific problem, the nature of the data, computational resources, and the desired level of interpretability. Exploring these fundamental techniques is an important step in mastering statistical learning.

Historical Development of Statistical Learning

The field of statistical learning, as we know it today, did not emerge in a vacuum. It is the culmination of centuries of thought in statistics, mathematics, and, more recently, computer science. Understanding its historical development provides valuable context for appreciating its current state and future trajectory, particularly for those engaged in academic research or pursuing advanced studies.

Pioneering Steps: Early Statistical Foundations (18th-20th Century)

The roots of statistical learning can be traced back to early work in probability theory and statistics. Figures like Thomas Bayes (18th century) laid groundwork with concepts like Bayes' theorem, which is fundamental to many modern machine learning algorithms. The early 19th century saw significant advancements with Adrien-Marie Legendre and Carl Friedrich Gauss developing the method of least squares, a cornerstone of linear regression. Later in the century, Francis Galton and Karl Pearson pioneered correlation and regression analysis, laying essential groundwork for understanding relationships within data.

The early to mid-20th century was a fertile period for statistical theory. Ronald A. Fisher made monumental contributions, including the development of analysis of variance (ANOVA), likelihood-based inference, and experimental design. Jerzy Neyman and Egon Pearson developed the framework for hypothesis testing. In the 1960s and 1970s, John Tukey championed exploratory data analysis, emphasizing the importance of visualizing and understanding data before formal modeling. These classical statistical methods provided the intellectual tools and conceptual frameworks that would later be adapted and scaled in the computational era.

Many of these foundational statistical concepts, though developed long before modern computers, remain deeply relevant. The emphasis on rigorous inference, understanding uncertainty, and model adequacy are principles that continue to inform best practices in statistical learning. The challenges of that era, such as dealing with limited data and manual computation, spurred the development of efficient and robust statistical techniques that are still valuable today.

The Computational Revolution: Enabling Modern Methods

While the theoretical foundations of many statistical learning methods were laid decades, or even centuries, ago, their widespread application and the development of more complex algorithms were largely impractical without significant computational power. The advent and proliferation of electronic computers from the mid-20th century onwards marked a transformative turning point for the field.

Increased processing speeds, larger memory capacities, and more efficient data storage solutions allowed researchers and practitioners to handle datasets of unprecedented size and complexity. Algorithms that were once computationally prohibitive, such as iterative optimization techniques or methods involving extensive matrix operations, became feasible. This computational revolution directly enabled the shift from primarily inferential statistics to more predictive and algorithmic approaches, characteristic of modern statistical learning and machine learning.

The development of high-level programming languages also played a crucial role. Languages like Fortran, and later C, followed by specialized statistical software packages like S (and its open-source successor R), and general-purpose languages with strong data science libraries like Python, made it easier for statisticians and scientists to implement and experiment with complex models. This democratization of computational tools accelerated research and innovation, allowing the field to rapidly evolve and tackle increasingly sophisticated problems.

Landmarks and Turning Points: Key Papers and Paradigm Shifts

The evolution of statistical learning has been marked by several key academic papers and conceptual breakthroughs that shifted the field's trajectory. While a comprehensive list is extensive, some influential developments highlight these paradigm shifts. For instance, the work on classification and regression trees (CART) by Breiman, Friedman, Olshen, and Stone in the 1980s provided a powerful and interpretable non-parametric approach to modeling.

The development of Support Vector Machines (SVMs) by Vapnik and others, stemming from statistical learning theory (or VC theory), offered a new way to think about classification problems, particularly in high-dimensional spaces. The concept of boosting, notably AdaBoost developed by Freund and Schapire, demonstrated how combining many "weak" learners could create a powerful "strong" learner, leading to significant performance improvements in many tasks.

More recently, neural networks have seen a resurgence with the rise of deep learning. Although deep learning is often considered a distinct branch of machine learning, it draws heavily on statistical principles for optimization, regularization, and evaluation. The "No Free Lunch" theorems for optimization and search, while not specific to statistical learning, remind practitioners that no single algorithm is universally best for all problems, emphasizing the need for a diverse toolkit and careful model selection based on the data and task at hand. These, and many other contributions, have collectively shaped statistical learning into the rich and dynamic field it is today.

Pushing Boundaries: Current Research Frontiers

Statistical learning is far from a static field; it is an area of active and vibrant research, continually pushing the boundaries of what is possible. Several frontiers are currently attracting significant attention from researchers. One major area is the development of more robust and interpretable models. While complex models like deep neural networks can achieve high predictive accuracy, understanding *why* they make certain predictions remains a challenge. Research into explainable AI (XAI) and interpretable machine learning aims to address this, which is crucial for applications in sensitive domains like healthcare and finance.

Another active research area is causal inference within statistical learning frameworks. Moving beyond correlation to understand causation is a critical step for making effective interventions and policy decisions. Integrating causal reasoning with predictive modeling is a complex but highly impactful endeavor. Research also continues in areas like reinforcement learning, federated learning (training models on decentralized data while preserving privacy), and automated machine learning (AutoML), which aims to automate the process of applying machine learning to real-world problems.

The challenges posed by "Big Data" also continue to drive research, including the development of scalable algorithms, methods for handling streaming data, and techniques for dealing with high-dimensional and unstructured data (e.g., text, images, graphs). Furthermore, ensuring fairness, accountability, and transparency in algorithmic decision-making is a critical research frontier, addressing the ethical and societal implications of deploying statistical learning models at scale.

Formal Education Pathways

For individuals aspiring to build a career in statistical learning, a formal education often provides a structured and comprehensive foundation. Academic programs at the undergraduate and graduate levels offer rigorous training in the theoretical underpinnings and practical applications of the field. This section explores typical educational routes and what they entail.

Building a Base: Undergraduate Coursework Recommendations

At the undergraduate level, a strong foundation in mathematics and statistics is paramount. Students typically pursue majors in Statistics, Mathematics, Computer Science, or a related quantitative field. Key coursework often includes multiple semesters of calculus, linear algebra, probability theory, and mathematical statistics. These courses equip students with the essential tools to understand the derivations and mechanics of various statistical learning algorithms.

Introductory programming courses, often in languages like Python or R, are also crucial. Data structures and algorithms courses from a computer science curriculum can be highly beneficial, providing an understanding of computational efficiency and how to implement models effectively. Courses specifically titled "Statistical Learning," "Machine Learning," or "Data Mining" at the undergraduate level will provide a direct introduction to the core concepts and methods. Furthermore, courses in applied statistics, regression analysis, and experimental design can offer practical experience in analyzing data and interpreting results.

Many universities also offer interdisciplinary programs or specializations that combine elements from statistics, computer science, and domain-specific knowledge (e.g., bioinformatics, econometrics). These can provide a well-rounded education tailored to particular career interests within the broader field of data analysis. Exploring options on platforms like OpenCourser's Data Science category can reveal the types of foundational courses available.

Advancing Knowledge: Graduate Programs and Research Opportunities

For those seeking deeper expertise or careers in research and development, a graduate degree (Master's or Ph.D.) is often a prerequisite. Master's programs in Statistics, Data Science, Machine Learning, Business Analytics, or related fields typically offer more advanced coursework and specialized tracks. These programs often delve deeper into theoretical aspects, advanced algorithms, and practical applications through capstone projects or internships.

Ph.D. programs are geared towards individuals interested in conducting original research and contributing to the advancement of the field. These programs involve intensive study of advanced statistical theory, machine learning algorithms, and computational methods, culminating in a dissertation that presents novel research. Research opportunities at the graduate level are diverse, ranging from developing new algorithms and theoretical frameworks to applying statistical learning methods to solve complex problems in various scientific and industrial domains.

When selecting a graduate program, it's important to consider factors such as faculty research interests, available specializations, industry connections, and computational resources. Many programs also offer opportunities for interdisciplinary research, collaborating with experts in fields like biology, medicine, engineering, or social sciences. Such collaborations can lead to impactful work at the intersection of statistical learning and other domains.

Deep Dives: PhD-Level Specialization Areas

At the PhD level, specialization becomes key. Students often focus on a particular subfield of statistical learning, aiming to become experts and contribute novel research. Specialization areas can be theoretical, methodological, or applied. Theoretical specializations might involve developing new foundations for statistical inference in high-dimensional settings, understanding the properties of complex algorithms, or exploring the limits of learnability.

Methodological specializations focus on creating new algorithms or refining existing ones. This could include work in areas like deep learning architectures, reinforcement learning, causal inference from observational data, graphical models, non-parametric Bayesian methods, or privacy-preserving machine learning. Researchers in these areas often publish in top-tier statistics, machine learning, and artificial intelligence journals and conferences.

Applied specializations involve leveraging statistical learning techniques to address specific challenges in other disciplines. Examples include bioinformatics and computational biology (analyzing genomic data, predicting protein structures), computational neuroscience (modeling brain activity), econometrics and finance (financial forecasting, risk modeling), natural language processing (machine translation, sentiment analysis), or computer vision (image recognition, object detection). These interdisciplinary specializations often require a deep understanding of both statistical learning and the domain of application.

The choice of specialization is often guided by a student's interests, the expertise of faculty advisors, and emerging trends in the field. A PhD in a statistical learning-related area equips graduates for careers in academia, industrial research labs, and advanced roles in data-driven organizations.


Bridging Fields: Interdisciplinary Connections

Statistical learning does not exist in isolation; it thrives on its connections with various other disciplines. Computer Science is a primary partner, providing the algorithmic foundations, data structures, and computational infrastructure necessary to implement and scale statistical learning methods. Areas like algorithm design, database management, and distributed computing are all relevant.

Applied Mathematics is another crucial connection, offering the tools for optimization, numerical analysis, and understanding the mathematical properties of models. Fields like signal processing and information theory also share conceptual and methodological links with statistical learning. The ability to translate real-world problems into mathematical formulations and then solve them computationally is a hallmark of the field.

Beyond these foundational connections, statistical learning is increasingly integrated with virtually every scientific and engineering discipline. In biology and medicine, it underpins much of modern bioinformatics and complements biostatistics. In economics, it increasingly sits alongside econometrics and quantitative finance. In engineering, it's used for control systems, robotics, and materials science. In the social sciences, it helps analyze survey data, model social networks, and understand human behavior. These interdisciplinary ties not only provide rich application areas for statistical learning but also inspire new methodological developments as unique challenges arise from different domains.

Self-Directed Learning Strategies

For career changers, professionals looking to upskill, or individuals who prefer learning at their own pace, self-directed learning offers a flexible and increasingly viable path into statistical learning. With a wealth of online resources available, a structured approach and dedication can lead to significant skill development. OpenCourser's Learner's Guide provides many articles on how to maximize the benefits of online learning.

Charting Your Course: Structured Learning Roadmaps

Embarking on a self-learning journey in statistical learning can feel daunting without a clear plan. Creating a structured learning roadmap tailored to your goals is essential. Start by defining what you want to achieve: Are you aiming for a foundational understanding, the ability to apply common algorithms, or expertise in a specific niche like natural language processing or computer vision?

A typical roadmap might begin with strengthening mathematical prerequisites: linear algebra, calculus, probability, and basic statistics. Online courses and textbooks abound for these topics. Next, move into introductory statistical learning or machine learning courses that cover core concepts like supervised and unsupervised learning, model evaluation, and the bias-variance tradeoff. As you progress, you can delve into specific algorithms (e.g., regression, decision trees, SVMs, neural networks) and their implementations, often using Python or R.

Consider breaking down your learning into manageable modules, setting realistic timelines, and tracking your progress. Supplement theoretical learning with practical exercises and coding assignments. Platforms like OpenCourser allow you to save courses to a list, which can help you organize your chosen curriculum and track your learning path effectively. Remember to revisit earlier concepts periodically to reinforce your understanding.


Learning by Doing: Project-Based Skill Development

Theoretical knowledge is crucial, but practical application is where true understanding and skill mastery develop in statistical learning. A project-based approach is one of the most effective ways for self-directed learners to solidify concepts and build a portfolio that showcases their abilities. Start with small, well-defined projects and gradually tackle more complex challenges as your confidence grows.

Look for publicly available datasets from sources like Kaggle, UCI Machine Learning Repository, or government open data portals. Choose datasets that interest you, as this will help maintain motivation. Early projects could involve tasks like performing exploratory data analysis, implementing a simple linear regression model, or building a classifier for a binary outcome. Document your process thoroughly: data cleaning steps, feature engineering choices, model selection rationale, and how you evaluated performance.
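As a rough illustration of what a first project might look like, the sketch below fits a one-feature linear regression by hand using only the Python standard library. The data (`hours`, `scores`) is made up for illustration, and the function names are hypothetical; on a real project you would more likely load a public dataset and use an established library.

```python
# Ordinary least squares for a single feature, written out by hand.
# The numbers below are an invented toy example, not a real dataset.

def fit_simple_linear_regression(xs, ys):
    """Return (intercept, slope) minimizing squared error for y ~ a + b*x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

def r_squared(xs, ys, intercept, slope):
    """Share of the variance in ys explained by the fitted line."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1 - ss_res / ss_tot

hours = [1.0, 2.0, 3.0, 4.0, 5.0]        # hypothetical input feature
scores = [52.0, 55.0, 61.0, 64.0, 68.0]  # hypothetical outcome

intercept, slope = fit_simple_linear_regression(hours, scores)
fit_quality = r_squared(hours, scores, intercept, slope)
print(f"intercept={intercept:.2f}, slope={slope:.2f}, R^2={fit_quality:.3f}")
```

Writing the fit out once by hand, then reproducing it with a library, is a simple way to document both the method and the evaluation step in a portfolio project.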

As you gain experience, you can tackle more ambitious projects, perhaps participating in online data science competitions or developing an end-to-end application that involves data collection, model training, and deployment. These projects not only reinforce your learning but also provide tangible evidence of your skills to potential employers. Sharing your projects on platforms like GitHub can further enhance your visibility within the community.


Tapping into the Ecosystem: Open-Source Tools and Communities

The statistical learning landscape is rich with open-source tools and vibrant online communities that are invaluable resources for self-directed learners. Familiarizing yourself with these tools is essential for practical application. Python and R are the two dominant programming languages in the field, each with a vast ecosystem of libraries specifically designed for data analysis and machine learning.

For Python, libraries such as NumPy (for numerical computation), Pandas (for data manipulation and analysis), Matplotlib and Seaborn (for data visualization), and Scikit-learn (a comprehensive library for machine learning algorithms) are fundamental. For more advanced applications, particularly in deep learning, TensorFlow and PyTorch are widely used. R, on the other hand, has a strong tradition in statistical modeling and visualization, with packages like `dplyr` for data manipulation, `ggplot2` for visualization, and `caret` or `mlr3` for machine learning workflows.

Beyond tools, online communities offer immense support. Websites like Stack Overflow are indispensable for troubleshooting coding issues and getting answers to specific technical questions. Forums and communities associated with specific tools (e.g., the Scikit-learn or TensorFlow communities) or platforms like Reddit (e.g., r/MachineLearning, r/datascience) provide spaces for discussion, sharing resources, and learning from peers. Engaging with these communities can accelerate your learning, expose you to new ideas, and help you stay updated with the latest developments.


Finding Equilibrium: Theory and Practical Implementation

A successful journey in statistical learning, especially for self-learners, hinges on finding the right balance between understanding the underlying theory and gaining hands-on practical experience. It can be tempting to jump directly into coding and applying algorithms without grasping the "why" behind them. Conversely, getting bogged down in purely theoretical details without practicing implementation can hinder skill development.

Strive for a synergistic approach. When learning a new algorithm, take the time to understand its mathematical basis, its assumptions, its strengths, and its limitations. What kind of problems is it well-suited for? When might it perform poorly? Complement this theoretical study by implementing the algorithm on real or toy datasets. Experiment with different parameters, observe how the results change, and try to interpret the outputs.
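To make "experiment with different parameters" concrete, here is a minimal sketch of a k-nearest-neighbors classifier on an invented one-dimensional toy dataset. The data, including one deliberately noisy label, and the helper names are assumptions made for illustration only.

```python
from collections import Counter

def knn_predict(train, query, k):
    """Majority vote among the k training points nearest to `query`."""
    nearest = sorted(train, key=lambda pair: abs(pair[0] - query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

def accuracy(train, test, k):
    """Fraction of test points whose predicted label matches the true label."""
    hits = sum(knn_predict(train, x, k) == y for x, y in test)
    return hits / len(test)

# Hypothetical toy data: class 0 sits near 1-3, class 1 near 6-8,
# and the point at 2.5 carries a deliberately "noisy" label.
train = [(1.0, 0), (2.0, 0), (2.5, 1), (3.0, 0), (6.0, 1), (7.0, 1), (8.0, 1)]
test = [(1.5, 0), (2.6, 0), (6.5, 1), (7.5, 1)]

# Try a very small, a moderate, and a very large neighborhood.
results = {k: accuracy(train, test, k) for k in (1, 3, 7)}
for k, acc in results.items():
    print(f"k={k}: accuracy={acc:.2f}")
```

On this toy data, `k=1` is misled by the noisy point, `k=7` ignores the input entirely and always predicts the majority class, and `k=3` sits in between. Seeing that pattern and then asking why it occurs is exactly the loop between practice and theory described above.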

This iterative process of learning theory, applying it in practice, observing outcomes, and then revisiting the theory to understand those outcomes is crucial for building deep understanding. Don't be afraid to make mistakes; they are often the best learning opportunities. The goal is not just to know *how* to run a piece of code, but to understand *what* that code is doing and *why* it's the appropriate approach for a given problem. This balanced approach will build a robust and adaptable skillset.


Statistical Learning in Industry Applications

The true power of statistical learning is realized when its methods are applied to solve real-world business problems and drive decision-making across various industries. Companies are increasingly leveraging data to gain competitive advantages, optimize operations, and create innovative products and services. This section explores how statistical learning translates into tangible value in different sectors and discusses the demand for these skills.

Transforming Business: Case Studies in Finance, Healthcare, and Technology

Statistical learning has become indispensable in the finance industry. Banks and financial institutions use it extensively for credit risk assessment, determining the likelihood that a borrower will default on a loan. Sophisticated fraud detection systems employ statistical learning to identify unusual patterns in transaction data that may indicate fraudulent activity, saving institutions and customers millions. Algorithmic trading strategies, which make automated trading decisions based on market data and predictive models, are another prominent application.

In healthcare, statistical learning is revolutionizing patient care and medical research. Predictive models help in early disease detection by analyzing patient records, medical imaging, and even genetic information. For example, algorithms can identify subtle patterns in mammograms that might indicate early-stage breast cancer. Pharmaceutical companies use statistical learning to accelerate drug discovery and optimize clinical trial design. Hospitals can use predictive analytics for resource allocation, such as forecasting patient admissions to manage staffing levels effectively.

The technology sector is perhaps one of the most visible adopters of statistical learning. Search engines like Google use complex algorithms to rank web pages and provide relevant search results. E-commerce giants like Amazon rely heavily on recommendation systems, built using techniques like collaborative filtering, to suggest products to users. Social media platforms use statistical learning to personalize news feeds, suggest connections, and detect inappropriate content. Furthermore, the development of self-driving cars, virtual assistants, and advanced robotics all lean heavily on statistical learning and machine learning principles.

These are just a few examples, and the applications continue to grow as more industries recognize the potential of data-driven insights. According to a report by McKinsey, AI adoption is widespread, and organizations are already reporting meaningful business outcomes from its use, with many applications rooted in statistical learning principles.

Measuring Impact: Return on Investment (ROI) for Business Implementations

Businesses invest in statistical learning initiatives with the expectation of a tangible return on investment (ROI). This ROI can manifest in various forms, such as increased revenue, reduced costs, improved efficiency, enhanced customer satisfaction, or better risk management. For example, a retail company implementing a statistical learning model for demand forecasting can reduce overstocking and stockouts, leading to lower inventory holding costs and fewer lost sales.

Calculating the ROI of statistical learning projects involves comparing the benefits derived from the implementation against the costs incurred. Costs can include data acquisition and preparation, software and hardware infrastructure, salaries for data scientists and engineers, and training. Benefits might be direct, like increased sales from a targeted marketing campaign powered by predictive analytics, or indirect, such as improved operational efficiency from optimizing a supply chain.
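One common way to express this comparison is ROI = (total benefits - total costs) / total costs. The sketch below applies that formula to entirely hypothetical annual figures for a demand-forecasting project; the cost and benefit categories mirror those mentioned above, but every number is invented for illustration.

```python
def roi(total_benefit, total_cost):
    """Simple return on investment: net benefit divided by cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost

# Hypothetical annual figures (illustrative only, not from any real project).
costs = {
    "data_preparation": 40_000,
    "infrastructure": 25_000,
    "salaries": 180_000,
    "training": 5_000,
}
benefits = {
    "lower_inventory_holding": 210_000,
    "fewer_lost_sales": 140_000,
}

project_roi = roi(sum(benefits.values()), sum(costs.values()))
print(f"ROI: {project_roi:.0%}")
```

In practice the hard part is usually estimating the benefit side credibly, for example by comparing against a holdout region or a pre-deployment baseline, rather than the arithmetic itself.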

Demonstrating ROI is crucial for securing ongoing investment and support for statistical learning projects within an organization. It often requires careful planning, clear metrics for success, and effective communication of results to stakeholders. As businesses become more data-driven, the ability to quantify the value generated by statistical learning initiatives becomes increasingly important for strategic decision-making.

The Hunt for Talent: Market Demand for Statistical Learning Skills

The demand for professionals with statistical learning skills has been robust and is projected to continue growing. As organizations across industries collect ever-increasing volumes of data, they need skilled individuals who can turn that data into actionable insights and predictive models. Roles such as Data Scientist, Machine Learning Engineer, Statistician, and Data Analyst are consistently in high demand.

According to the U.S. Bureau of Labor Statistics (BLS), employment of data scientists is projected to grow 36 percent from 2023 to 2033, which is much faster than the average for all occupations. This growth is driven by the increasing need for data-driven decision-making across all sectors of the economy. The BLS also notes that about 20,800 openings for data scientists are projected each year, on average, over the decade. Similar growth trends are observed for related roles that heavily utilize statistical learning techniques.

This high demand translates into competitive salaries and numerous career opportunities for individuals with the right qualifications. Employers look for a combination of strong analytical skills, proficiency in programming languages like Python or R, experience with machine learning libraries, and the ability to communicate complex findings effectively. Continuous learning is also critical, as the field is rapidly evolving with new tools and techniques emerging regularly.


At the Forefront: Emerging Industry-Specific Methodologies

While core statistical learning principles are broadly applicable, many industries are seeing the development of specialized methodologies tailored to their unique challenges and data types. For instance, in finance, there's ongoing research into sophisticated models for high-frequency trading, credit risk modeling that incorporates alternative data sources, and explainable AI for regulatory compliance in lending decisions.

In healthcare, an emerging area is the application of statistical learning to electronic health records (EHRs) for predictive diagnostics and personalized treatment pathways. Federated learning techniques are gaining traction to enable model training across multiple hospitals without sharing sensitive patient data. The analysis of genomic data and its integration with clinical data also presents unique methodological challenges and opportunities.

The technology sector continues to push boundaries with advancements in natural language processing for more nuanced human-computer interaction, computer vision for more accurate image and video analysis (e.g., in autonomous vehicles), and reinforcement learning for optimizing complex systems like recommendation engines or robotics. As industries mature in their adoption of statistical learning, the demand for these specialized, domain-aware methodologies and the experts who can develop and apply them is likely to increase.


Career Progression and Roles

A career in statistical learning offers diverse pathways and significant opportunities for growth. As individuals gain experience and expertise, they can progress through various roles, each with increasing responsibility and impact. Understanding these potential trajectories can help aspiring professionals and those already in the field to plan their career development effectively.

Starting Out: Entry-Level Positions and Required Competencies

Entry-level positions in statistical learning often carry titles like Junior Data Scientist, Data Analyst, Quantitative Analyst, or Machine Learning Engineer (Associate/Junior level). These roles typically require a bachelor's or master's degree in a quantitative field such as Statistics, Mathematics, Computer Science, Economics, or a related engineering discipline. Some employers may prefer or require a master's degree, especially for data scientist roles.

Key competencies for entry-level positions include a solid understanding of fundamental statistical concepts and machine learning algorithms (e.g., regression, classification, clustering). Proficiency in programming languages commonly used for data analysis, such as Python or R, along with experience with relevant libraries (e.g., Scikit-learn, Pandas, NumPy for Python; dplyr, ggplot2, caret for R) is essential. Familiarity with database querying languages like SQL is also highly valued.

Beyond technical skills, employers look for strong problem-solving abilities, analytical thinking, attention to detail, and good communication skills. The ability to understand business problems, translate them into analytical questions, and present findings clearly to both technical and non-technical audiences is crucial. Internships, capstone projects, and participation in data science competitions can provide valuable experience and make a candidate more competitive. Many learners begin their journey by exploring foundational topics through resources available on OpenCourser's Data Science section.

Growing Expertise: Mid-Career Specialization Paths

As professionals gain a few years of experience in statistical learning, they often begin to specialize. Mid-career roles might include titles like Data Scientist, Senior Data Scientist, Machine Learning Engineer, or Statistician. At this stage, individuals are expected to have a deeper understanding of various algorithms, model tuning, feature engineering, and deployment processes. They typically take on more complex projects, lead smaller initiatives, and may start mentoring junior team members.

Specialization paths can diverge based on interests and organizational needs. Some may choose to deepen their technical expertise, becoming specialists in areas like deep learning, natural language processing (NLP), computer vision, reinforcement learning, or MLOps (Machine Learning Operations, focusing on the deployment and maintenance of models). Others might focus on specific industry domains, such as finance, healthcare, e-commerce, or cybersecurity, developing deep subject matter expertise alongside their statistical learning skills.

Another path involves focusing on the research and development of new algorithms and techniques, often requiring a Ph.D. or extensive research experience. Stronger programming skills, experience with big data technologies (e.g., Spark, Hadoop), and cloud computing platforms (e.g., AWS, Azure, GCP) become increasingly important. Continuous learning is vital at this stage to keep up with the rapidly evolving landscape of tools and methodologies.

Leading the Way: Leadership Roles in Data-Driven Organizations

With significant experience and a proven track record, professionals in statistical learning can advance to leadership positions. These roles might include Lead Data Scientist, Principal Data Scientist, Manager of Data Science/Analytics, Director of AI/Machine Learning, or even Chief Data Officer (CDO) or Chief Analytics Officer (CAO) in larger organizations. Leadership roles involve not only deep technical expertise but also strong strategic thinking, people management, and communication skills.

Leaders in this domain are typically responsible for setting the technical vision and strategy for their teams, overseeing the development and deployment of complex machine learning systems, and ensuring that data-driven insights translate into business value. They manage teams of data scientists and engineers, mentor talent, and foster a culture of innovation and collaboration. They also play a key role in communicating with executive leadership, advocating for data-driven initiatives, and ensuring alignment with overall business objectives.

A crucial aspect of leadership is staying abreast of emerging trends in statistical learning and AI, evaluating new technologies and methodologies, and guiding the organization in adopting best practices. Ethical considerations, data governance, and ensuring the responsible use of AI also become significant responsibilities at this level. These roles require a blend of technical depth, business acumen, and leadership qualities.

Forging Your Own Path: Freelance and Consulting Opportunities

For experienced statistical learning professionals, freelance and consulting work offers an alternative career path with greater autonomy and flexibility. Many organizations, particularly small and medium-sized enterprises (SMEs) or those in niche industries, may not have the resources or consistent need to hire full-time senior data scientists or machine learning engineers. They often turn to consultants or freelancers for specific projects or expert advice.

Successful consultants in statistical learning typically have a strong portfolio of completed projects, deep expertise in one or more specialized areas, and excellent client management and communication skills. They might help businesses develop data strategies, build custom machine learning models, provide training, or offer guidance on adopting new technologies. Networking, building a strong professional brand, and the ability to effectively market one's services are crucial for success in consulting.

Freelancing platforms and professional networks can provide opportunities, but many consultants also build their client base through referrals and direct outreach. This path allows individuals to work on a diverse range of problems across different industries, offering continuous learning and a high degree of control over their work-life balance, though it also comes with the responsibilities of managing a business, including client acquisition and project scoping.

Ethical Considerations in Statistical Learning

As statistical learning models become increasingly powerful and pervasive, influencing decisions in critical areas from loan applications to medical diagnoses and criminal justice, the ethical implications of their use demand careful consideration. It is crucial for practitioners, researchers, and policymakers to address these challenges to ensure that technology serves humanity responsibly.

Unmasking Unfairness: Algorithmic Bias and Fairness Metrics

Algorithmic bias is a significant ethical concern. Statistical learning models are trained on data, and if that data reflects existing societal biases (e.g., racial, gender, or socioeconomic biases), the models can inadvertently learn and even amplify these biases. This can lead to discriminatory outcomes, where certain groups are unfairly disadvantaged. For example, a hiring algorithm trained on historical data from a male-dominated field might unfairly penalize female applicants, or a facial recognition system might perform less accurately for individuals with darker skin tones if it was predominantly trained on images of lighter-skinned individuals.

Addressing algorithmic bias requires a multi-faceted approach. It starts with careful examination of training data for potential biases and efforts to collect more representative and diverse datasets. Researchers are also developing fairness metrics to quantify bias in models. These metrics can assess whether a model's predictions or error rates differ significantly across different demographic groups. Examples include demographic parity (ensuring the likelihood of a positive outcome is the same across groups) and equalized odds (ensuring true positive and false positive rates are similar across groups).
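The two metrics named above can be computed directly from a model's predictions. The following is a minimal sketch on a tiny invented dataset with two groups, `a` and `b`; the labels, predictions, and function names are assumptions for illustration, and a real audit would use far more data and report uncertainty.

```python
def rate(values):
    """Mean of a list of 0/1 values (NaN if the list is empty)."""
    return sum(values) / len(values) if values else float("nan")

def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, true positive rate, and false positive rate."""
    out = {}
    for g in sorted(set(groups)):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        out[g] = {
            "selection_rate": rate([y_pred[i] for i in idx]),
            "tpr": rate([y_pred[i] for i in idx if y_true[i] == 1]),
            "fpr": rate([y_pred[i] for i in idx if y_true[i] == 0]),
        }
    return out

def gap(rates, key):
    """Largest difference in a given rate between any two groups."""
    vals = [r[key] for r in rates.values()]
    return max(vals) - min(vals)

# Hypothetical labels, predictions, and group membership.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = group_rates(y_true, y_pred, groups)
# Demographic parity compares how often each group receives a positive outcome.
demographic_parity_gap = gap(rates, "selection_rate")
# Equalized odds compares both TPR and FPR; here we report the larger gap.
equalized_odds_gap = max(gap(rates, "tpr"), gap(rates, "fpr"))
print(rates)
print(demographic_parity_gap, equalized_odds_gap)
```

A gap of zero would mean the groups are treated identically under that metric. Summarizing equalized odds by the larger of the two gaps is one convention among several.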

Mitigation techniques can be applied at different stages: pre-processing (modifying the training data), in-processing (modifying the learning algorithm to incorporate fairness constraints), or post-processing (adjusting the model's predictions). However, defining and achieving "fairness" is complex, as there are multiple, sometimes conflicting, mathematical definitions of fairness, and the appropriate definition can depend on the societal context and the specific application. Continuous monitoring and auditing of models in deployment are also essential to detect and address emergent biases.

Organizations like the National Institute of Standards and Technology (NIST) provide resources and frameworks like the AI Risk Management Framework (AI RMF) to help manage risks associated with AI, including bias and fairness.

Guarding Secrets: Privacy-Preserving Techniques

Statistical learning models often require large amounts of data to train effectively, and this data can frequently contain sensitive personal information. Protecting individual privacy is a paramount ethical and legal obligation. There's a growing focus on developing and deploying privacy-preserving machine learning (PPML) techniques that allow for data analysis and model training without compromising the confidentiality of the underlying data.

One set of techniques falls under the umbrella of differential privacy. Differential privacy provides a formal mathematical guarantee that the output of an analysis will not significantly change if any single individual's data is added to or removed from the dataset. This is typically achieved by adding carefully calibrated noise to the data or the model's outputs, making it difficult to infer information about specific individuals. Other approaches include homomorphic encryption, which allows computations to be performed directly on encrypted data without decrypting it first, and secure multi-party computation (SMPC), which enables multiple parties to jointly compute a function over their inputs while keeping those inputs private.
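The "carefully calibrated noise" idea can be sketched with the Laplace mechanism applied to a counting query. This is an illustrative toy, assuming an invented list of ages and a hypothetical `private_count` helper; naive floating-point implementations like this one are known to have subtle weaknesses, so production systems should rely on a vetted differential privacy library.

```python
import random

def laplace_noise(scale, rng):
    """Sample from Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy.

    Adding or removing one record changes a count by at most 1
    (sensitivity 1), so Laplace noise with scale 1/epsilon is used.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical records: ages of seven individuals.
ages = [23, 35, 41, 52, 67, 29, 44]
rng = random.Random(42)  # seeded only to make the demo repeatable

noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
print(f"noisy count of people aged 40+: {noisy:.2f}")
```

Smaller values of `epsilon` mean more noise and stronger privacy; larger values give more accurate answers with weaker guarantees.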

Federated learning is another emerging paradigm where models are trained on decentralized datasets (e.g., on users' mobile devices or at different hospitals) without the raw data ever leaving its local environment. Only model updates or aggregated parameters are shared with a central server, reducing the risk of direct data exposure. These techniques are crucial for building trust and ensuring that the benefits of statistical learning can be realized without sacrificing individual privacy.
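A minimal sketch of the idea, in the style of federated averaging, is shown below. Each hypothetical client fits a one-parameter model `y ~ w * x` on its own data and sends back only the updated weight, which the server averages in proportion to each client's dataset size. The client data, learning rate, and function names are all assumptions for illustration; real systems add secure aggregation, client sampling, and much more.

```python
def local_update(w, data, lr=0.01, epochs=5):
    """Run a few gradient-descent steps on one client's private data."""
    for _ in range(epochs):
        # Gradient of the mean squared error for the model y ~ w * x.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_round(w_global, clients):
    """One round: clients train locally, server averages by dataset size."""
    total = sum(len(data) for data in clients)
    return sum(local_update(w_global, data) * len(data) for data in clients) / total

# Hypothetical private datasets held by three clients (roughly y = 3x).
clients = [
    [(1.0, 3.1), (2.0, 5.9)],
    [(3.0, 9.2), (4.0, 11.8), (5.0, 15.1)],
    [(1.5, 4.4)],
]

w = 0.0
for _ in range(50):
    # Only the scalar weight travels between clients and server.
    w = federated_round(w, clients)
print(f"global weight after 50 rounds: {w:.3f}")
```

Note that the raw `(x, y)` pairs never leave `local_update`; only the updated weight is shared. Model updates can still leak information, which is why federated learning is often combined with the other techniques in this section.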

Navigating the Rules: Regulatory Compliance Challenges

The rapid advancement of statistical learning and AI has outpaced the development of comprehensive legal and regulatory frameworks in many jurisdictions. However, governments and regulatory bodies worldwide are increasingly recognizing the need to establish rules and guidelines for the development and deployment of these technologies, particularly in high-risk applications.

Regulations like the European Union's General Data Protection Regulation (GDPR) have significant implications for how personal data can be collected, processed, and used for training statistical learning models. GDPR emphasizes principles like data minimization, purpose limitation, and individuals' rights to access and control their data. In the United States, various sectoral regulations (e.g., HIPAA for healthcare, FCRA for credit reporting) and emerging state-level privacy laws like the California Consumer Privacy Act (CCPA) also impose obligations.

Compliance challenges include ensuring data governance, maintaining records of data processing activities, conducting impact assessments for high-risk AI systems, and implementing appropriate security measures. The "black box" nature of some complex models can also make it difficult to demonstrate compliance with requirements for explainability or non-discrimination. Organizations deploying statistical learning models must stay informed about evolving regulatory landscapes and proactively integrate compliance considerations into their design and development processes.

Peeking Inside the Box: Transparency and Model Interpretability

Many powerful statistical learning models, especially complex ones like deep neural networks or large ensemble models, can operate as "black boxes." While they may achieve high predictive accuracy, understanding *how* they arrive at their decisions can be challenging. This lack of transparency and interpretability poses significant ethical concerns, particularly when models are used to make decisions that have serious consequences for individuals (e.g., loan approvals, medical diagnoses, parole decisions).

If a model denies someone a loan, the applicant reasonably expects an explanation, and in some jurisdictions the lender is legally required to provide one. If a model makes an incorrect medical diagnosis, doctors need to be able to understand the model's reasoning to identify the error. Transparency is also crucial for debugging models, identifying biases, and building trust with users and stakeholders. There is a growing field of research focused on developing techniques for model interpretability and eXplainable AI (XAI).

Interpretability methods fall into two broad categories. Global methods aim to make the entire model transparent, either by using inherently interpretable models such as linear regression or decision trees, or by developing techniques to understand overall model behavior. Local methods explain individual predictions; examples include LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations), which identify the features that contributed most to a specific prediction. Striving for greater transparency and interpretability is essential for the responsible and ethical deployment of statistical learning systems.
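To give a feel for how a per-prediction explanation works, the sketch below computes exact Shapley values by brute force for a small, invented scoring function. This is the textbook Shapley definition with absent features replaced by a baseline value, not the SHAP library itself, and it is only feasible for a handful of features because the number of coalitions grows exponentially. The `score` function, `applicant`, and `baseline` are hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(predict, x, baseline):
    """Attribute predict(x) - predict(baseline) to each feature.

    Features outside a coalition are set to their baseline value.
    """
    n = len(x)

    def value(coalition):
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi

# Hypothetical scoring function with an income-by-years interaction.
def score(features):
    income, debt, years = features
    return 0.5 * income - 0.8 * debt + 0.1 * years + 0.05 * income * years

applicant = [4.0, 2.0, 10.0]  # the prediction being explained
baseline = [3.0, 1.0, 5.0]    # reference point, e.g. an "average" applicant

phi = shapley_values(score, applicant, baseline)
for name, contribution in zip(["income", "debt", "years"], phi):
    print(f"{name}: {contribution:+.3f}")
```

The contributions add up to the difference between the applicant's score and the baseline score, which is what makes this style of explanation easy to communicate: each feature gets a signed share of the outcome.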


Emerging Trends in Statistical Learning

Statistical learning is a field characterized by rapid innovation and evolution. New techniques, tools, and applications are constantly emerging, driven by advances in computational power, the availability of vast datasets, and ongoing research efforts. Staying abreast of these trends is crucial for researchers, practitioners, and anyone looking to understand the future direction of this dynamic domain.

Synergy and Power: Integration with Deep Learning Architectures

One of the most significant trends in recent years has been the increasing integration of statistical learning principles with deep learning architectures. Deep learning, a subfield of machine learning based on artificial neural networks with many layers (deep neural networks), has achieved state-of-the-art performance in complex tasks like image recognition, natural language processing, and speech recognition.

While deep learning models are incredibly powerful, they often benefit from the rigor and theoretical grounding of statistical learning. Concepts from statistical learning, such as regularization techniques (e.g., L1/L2 regularization, dropout) to prevent overfitting, methods for model selection and hyperparameter tuning, and frameworks for uncertainty quantification, are increasingly being applied to deep learning models. Bayesian deep learning, for example, combines Bayesian methods with deep learning to provide more robust uncertainty estimates for predictions.

This synergy flows both ways. Statistical learning practitioners are also exploring how techniques and architectures from deep learning, such as the use of embeddings for representing categorical data or attention mechanisms for handling sequential data, can enhance traditional statistical models. This cross-pollination is leading to more powerful, robust, and interpretable models that combine the strengths of both approaches.
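
To make the regularization idea mentioned above more concrete, here is a minimal sketch of L2 (ridge) regularization in the simplest possible setting: a single feature, no intercept, and a closed-form solution. Minimizing sum((y - w*x)^2) + lam * w^2 gives w = sum(x*y) / (sum(x*x) + lam), so a larger penalty lam pulls the fitted slope toward zero. The data points and the function name ridge_slope are made up for illustration.

```python
def ridge_slope(xs, ys, lam):
    """Slope w minimizing sum((y - w*x)^2) + lam * w^2 (no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


# Toy data that roughly follows y = 2x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

for lam in (0.0, 1.0, 10.0):
    print(f"lam={lam:5.1f}  slope={ridge_slope(xs, ys, lam):.4f}")
```

With lam set to 0 this reduces to ordinary least squares; the same shrinkage principle underlies weight decay in neural network training.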

The Rise of Automation: Automated Machine Learning (AutoML) Developments

Automated Machine Learning (AutoML) is another rapidly emerging trend that aims to automate the end-to-end process of applying machine learning to real-world problems. Building effective machine learning models often involves a series of time-consuming and expertise-intensive tasks, including data preprocessing, feature engineering, model selection, hyperparameter optimization, and model deployment. AutoML tools seek to automate these steps, making machine learning more accessible to non-experts and increasing the productivity of data scientists.

AutoML systems typically employ various techniques, such as sophisticated search algorithms (e.g., Bayesian optimization, evolutionary algorithms) to explore different combinations of models and hyperparameters, and meta-learning to leverage experience from previous tasks to speed up learning on new tasks. The goal is to automatically discover the best-performing machine learning pipeline for a given dataset and problem with minimal human intervention.
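
The search component can be sketched in a few lines. The example below uses plain random search, a much simpler stand-in for the Bayesian optimization and evolutionary algorithms named above, to pick the number of neighbors k for a one-dimensional k-nearest-neighbors regressor by validation error. The synthetic data, the function names, and the candidate range for k are assumptions made for illustration; real AutoML systems search over entire pipelines, not a single hyperparameter.

```python
import random


def knn_predict(train, x, k):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    return sum(y for _, y in nearest) / len(nearest)


def validation_mse(train, valid, k):
    """Mean squared error on the held-out validation set."""
    return sum((knn_predict(train, x, k) - y) ** 2 for x, y in valid) / len(valid)


def random_search(train, valid, k_choices, n_trials, seed=0):
    """Try n_trials randomly chosen values of k and keep the best one."""
    rng = random.Random(seed)
    best_k, best_mse = None, float("inf")
    for _ in range(n_trials):
        k = rng.choice(k_choices)
        mse = validation_mse(train, valid, k)
        if mse < best_mse:
            best_k, best_mse = k, mse
    return best_k, best_mse


# Synthetic data: y = x^2 plus Gaussian noise, split into train and validation.
data_rng = random.Random(42)
data = [(i / 10, (i / 10) ** 2 + data_rng.gauss(0, 0.1)) for i in range(50)]
data_rng.shuffle(data)
train, valid = data[:35], data[35:]

best_k, best_mse = random_search(train, valid, k_choices=list(range(1, 16)), n_trials=10)
print("best k:", best_k, "validation MSE:", round(best_mse, 4))
```

Random search only evaluates the candidates it happens to sample, so it may miss the best setting; smarter search strategies use earlier results to decide what to try next.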

While AutoML is still an evolving field, it holds significant promise for democratizing machine learning and accelerating its adoption across industries. However, it's important to note that AutoML is not a complete replacement for human expertise. Domain knowledge, understanding the problem context, and the ability to interpret and validate model results remain crucial. AutoML is best viewed as a tool that can augment the capabilities of data scientists, allowing them to focus on higher-level strategic tasks.

Learning on the Go: Edge Computing and Resource-Constrained Implementations

Traditionally, statistical learning models, especially large and complex ones, have been trained and deployed on powerful servers or cloud computing infrastructure. However, there is a growing trend towards deploying models directly on edge devices – such as smartphones, wearables, IoT sensors, and autonomous vehicles. This is known as edge computing or edge AI.

Deploying models on the edge offers several advantages, including lower latency (as data doesn't need to be sent to a central server for processing), reduced bandwidth consumption, enhanced privacy (as sensitive data can be processed locally), and offline functionality. However, edge devices typically have limited computational resources (processing power, memory, energy) compared to servers. This presents challenges for running complex statistical learning models.

Research in this area focuses on developing techniques for model compression (e.g., pruning, quantization) to reduce the size and computational requirements of models, designing efficient model architectures tailored for resource-constrained environments (e.g., MobileNets, along with the broader TinyML effort to run models on microcontrollers), and developing on-device learning techniques that allow models to adapt and update locally. The growth of IoT and the increasing demand for real-time intelligent applications are driving innovation in edge computing for statistical learning.
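
The two compression techniques named above can be sketched on a plain list of weights. The example below shows one simple variant of each: symmetric linear quantization to signed 8-bit integers with a single scale factor, and magnitude pruning that zeroes out small weights. The weight values, the threshold, and the function names are illustrative assumptions; production toolchains use more elaborate schemes, such as per-channel scales and calibration data.

```python
def quantize(weights, num_bits=8):
    """Map floats to signed integers that share one scale factor."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer level and clamp to the representable range.
    quantized = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer levels."""
    return [q * scale for q in quantized]


def prune(weights, threshold):
    """Magnitude pruning: zero out weights smaller than the threshold."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]


weights = [0.82, -0.41, 0.03, -1.27, 0.56]
quantized, scale = quantize(weights)
restored = dequantize(quantized, scale)
errors = [abs(w - r) for w, r in zip(weights, restored)]

print("integer levels:", quantized)
print("max reconstruction error:", max(errors))
print("pruned:", prune(weights, threshold=0.1))
```

Storing each weight as one byte instead of a 32-bit float cuts memory roughly fourfold, at the cost of a rounding error of at most half the scale factor per weight.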

Interdisciplinary Fusion: Cross-Pollination with Other Scientific Disciplines

Statistical learning has always been an interdisciplinary field, drawing from statistics, computer science, and mathematics. However, the trend of cross-pollination with other scientific disciplines is accelerating, leading to novel applications and methodological advancements.

In the physical sciences, statistical learning is being used to analyze data from large-scale experiments (e.g., in particle physics), model complex physical systems, and accelerate scientific discovery. In the social sciences, it's being applied to analyze large survey datasets, model social networks, and understand human behavior from digital traces. The humanities are also beginning to explore statistical learning for tasks like analyzing large text corpora (digital humanities) or classifying artistic styles.

This cross-disciplinary fusion is mutually beneficial. Other scientific fields gain powerful new tools for data analysis and modeling, while statistical learning benefits from new types of data, unique problem structures, and domain-specific insights that can inspire the development of new methods. As data becomes more ubiquitous across all areas of research and industry, the importance of these interdisciplinary collaborations will only continue to grow, pushing the frontiers of both statistical learning and the disciplines it intersects with.

Frequently Asked Questions

As you consider a path in statistical learning, many questions may arise regarding prerequisites, career paths, and the nature of the field. This section aims to address some of the most common inquiries from individuals exploring this exciting and evolving domain.

What are the essential mathematical prerequisites for entering the field?

A solid mathematical foundation is indeed crucial for statistical learning. The key areas include:

- Linear Algebra: Understanding vectors, matrices, matrix operations (multiplication, inversion, decomposition), eigenvalues, and eigenvectors is fundamental. Many statistical learning algorithms are expressed and implemented using linear algebra.

- Calculus: Concepts from differential calculus, particularly derivatives and gradients, are essential for understanding how many algorithms are optimized (e.g., gradient descent). Integral calculus is also useful for probability theory.

- Probability Theory: This is at the heart of statistics. Key concepts include random variables, probability distributions (e.g., Gaussian, binomial, Poisson), conditional probability, Bayes' theorem, expectation, and variance.

- Basic Statistics: Familiarity with descriptive statistics (mean, median, mode, standard deviation), inferential statistics (hypothesis testing, confidence intervals, p-values), and concepts like correlation and regression will provide a strong starting point.

While you don't need to be a pure mathematician, a good conceptual grasp and the ability to apply these mathematical tools are important for understanding how algorithms work, why they are chosen, and how to interpret their results. Many online courses specifically cover the mathematics for machine learning to help learners build this foundation.
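
As a small example of how the calculus prerequisite shows up in practice, the sketch below runs gradient descent on the one-variable function f(w) = (w - 3)^2, whose derivative is 2(w - 3). Each step moves w a little in the direction opposite to the derivative. The function, starting point, learning rate, and step count are arbitrary choices for illustration.

```python
def gradient(w):
    """Derivative of f(w) = (w - 3)^2 with respect to w."""
    return 2 * (w - 3)


def gradient_descent(start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient to reduce f(w)."""
    w = start
    for _ in range(steps):
        w -= learning_rate * gradient(w)
    return w


w_final = gradient_descent(start=0.0)
print("estimated minimizer:", round(w_final, 6))
```

Training most statistical learning models follows the same pattern, with the single number w replaced by a vector of parameters and the derivative replaced by a gradient, which is where linear algebra comes in.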

How do academic and industry career trajectories compare in statistical learning?

Both academic and industry career paths in statistical learning offer rewarding opportunities, but they differ in their focus and typical activities.

Academic careers (e.g., university professor, research scientist at a non-profit institute) primarily focus on research and education. This involves developing new statistical learning theories and methods, publishing research in academic journals and conferences, teaching courses, mentoring students, and securing research funding through grants. The emphasis is often on pushing the frontiers of knowledge and contributing to the fundamental understanding of the field. A Ph.D. is typically required for tenure-track academic positions.

Industry careers (e.g., Data Scientist, Machine Learning Engineer, Quantitative Analyst in a company) are generally focused on applying statistical learning techniques to solve specific business problems and create value for the organization. This involves tasks like data collection and cleaning, feature engineering, model building and deployment, and communicating insights to stakeholders. While research can be a component of some industry roles (especially in R&D labs of large tech companies), the primary driver is often practical impact and achieving business objectives. Educational requirements vary, with bachelor's, master's, or Ph.D. degrees all being viable entry points depending on the role and company.

There can be overlap, with academics sometimes consulting for industry and industry professionals publishing research or teaching. The choice between them often depends on individual preferences for research focus versus application focus, and the desired work environment.

How can I retain skills in such a rapidly evolving discipline?

Statistical learning is indeed a fast-moving field, and continuous learning is essential for skill retention and staying current. Here are some strategies:

- Follow Key Publications and Conferences: Keep an eye on major journals (e.g., Journal of Machine Learning Research, Annals of Applied Statistics) and conferences (e.g., NeurIPS, ICML, KDD) to see the latest research. Many papers and presentations are available online.

- Engage with Online Communities: Participate in forums, blogs, and social media groups dedicated to statistical learning, data science, and machine learning. These are great places to learn about new tools, techniques, and discussions.

- Take Online Courses and Workshops: Platforms like OpenCourser list numerous courses, including advanced and specialized topics. Periodically taking a new course or workshop can help refresh existing knowledge and introduce new skills.

- Work on Projects: Continuously apply your skills to new datasets and problems. Personal projects or contributing to open-source projects can be excellent ways to practice and learn.

- Read Books and Technical Blogs: Stay updated by reading new books in the field and following blogs from leading researchers and practitioners.

- Attend Meetups and Webinars: Local meetups (if available) and online webinars offer opportunities to learn from others and network.

- Teach or Mentor Others: Explaining concepts to others is a great way to solidify your own understanding.

The key is to cultivate a mindset of lifelong learning and curiosity. Dedicate regular time to exploring new developments and practicing your skills.

Where are the geographic hotspots for statistical learning careers?

While statistical learning skills are in demand globally, certain geographic regions have a higher concentration of job opportunities, often driven by the presence of major technology companies, research institutions, and venture capital investment.

In the United States, prominent hotspots include Silicon Valley/San Francisco Bay Area, Seattle, New York City, Boston, and Austin. These areas are home to numerous tech giants, innovative startups, and leading universities with strong data science programs. Other cities with growing tech scenes also offer increasing opportunities.

Internationally, cities like London (UK), Toronto and Montreal (Canada), Berlin (Germany), Paris (France), Amsterdam (Netherlands), Shanghai and Beijing (China), Bangalore (India), and Singapore are also recognized as significant hubs for AI, data science, and statistical learning talent and job opportunities.

However, the rise of remote work has also broadened geographic possibilities. Many companies are now more open to hiring talent regardless of physical location, which can create opportunities for skilled professionals in a wider range of areas. It's advisable to research job markets based on your specific career interests and lifestyle preferences.

What are the typical salary expectations across different experience levels?

Salaries in statistical learning and related fields like data science are generally competitive, reflecting the high demand for these skills. However, actual figures can vary significantly based on factors such as geographic location, industry, company size, specific role, years of experience, and educational qualifications.

According to the U.S. Bureau of Labor Statistics (BLS), the median annual wage for data scientists was $112,590 in May 2024. Entry-level positions typically command lower salaries, while senior professionals and managers with extensive experience can earn significantly more. BLS data from its Occupational Employment and Wage Statistics survey shows a wide range: as of May 2023, the 10th percentile annual wage for data scientists was $61,070 and the 90th percentile was $147,670. Because these percentile figures come from an earlier reporting period than the median above, and definitions can vary slightly between BLS reports, the numbers are not directly comparable. Some industry reports suggest that top-tier data scientists and machine learning engineers in high-demand areas can earn well into six figures, with compensation packages often including bonuses and stock options.

It's important to research salary benchmarks for specific roles and locations using resources like the BLS Occupational Outlook Handbook, industry salary surveys (e.g., from Burtch Works, Glassdoor, Levels.fyi), and job postings. Remember that negotiation and demonstrating a strong skillset and portfolio can also influence salary outcomes.

How resilient is a career in statistical learning against automation trends?