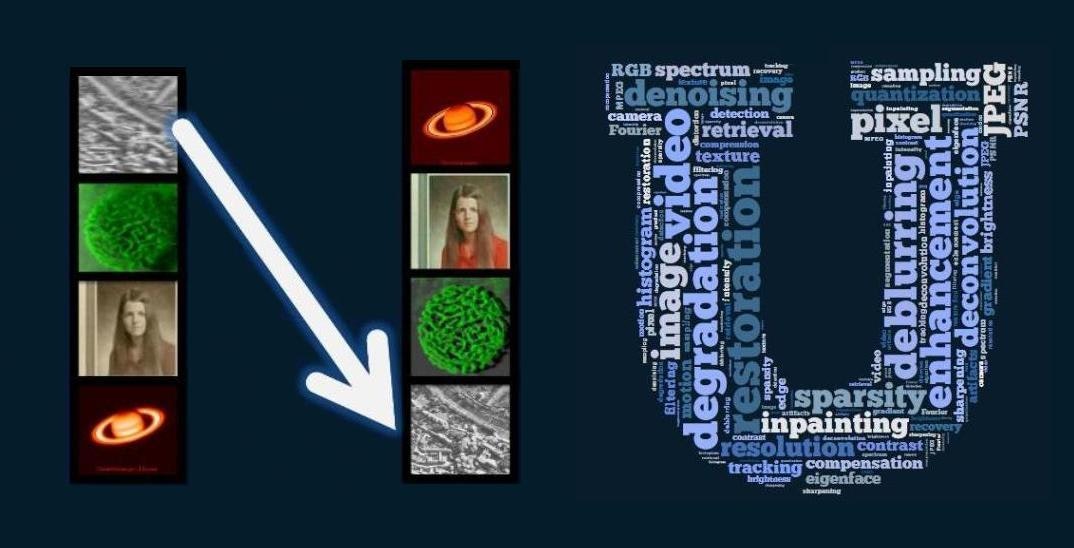

Image Enhancement

Comprehensive Guide to Image Enhancement

Image enhancement is the process of adjusting digital images to make them more suitable for display or further image analysis. The primary goal is to improve the visual quality of an image, making it easier to interpret, or to extract useful information. This can involve a variety of operations such as increasing contrast, reducing noise, sharpening details, or adjusting colors. Think of it as giving an image a "makeover" to bring out its best features or to prepare it for more specialized tasks.

Working in image enhancement can be quite engaging. Imagine the satisfaction of transforming a blurry, indistinct medical scan into a clear image that aids in a crucial diagnosis. Or consider the excitement of developing algorithms that can restore faded historical photographs, bringing lost details back to life. The field also constantly evolves with advancements in artificial intelligence, offering continuous learning and innovation opportunities.

Introduction to Image Enhancement

Image enhancement focuses on improving the visual appearance of an image or transforming it into a representation that is better suited for subsequent automated processing. The goal is to make images clearer, more interpretable, or more visually appealing. This might involve making subtle adjustments to brightness and contrast, similar to how you might tweak a personal photograph on your smartphone, or it could involve more complex algorithms to reveal hidden details or remove unwanted artifacts.

Defining Image Enhancement and Its Goals

At its core, image enhancement aims to improve an image's interpretability or the perception of information within it. This doesn't necessarily mean making an image subjectively "prettier," although that can be a goal in fields like photography. More often, especially in scientific and technical applications, the goal is to accentuate specific features, reduce ambiguities, or prepare an image for further analysis by either humans or machines.

The primary objectives of image enhancement include improving visual quality for human viewers and preparing images for automated tasks. For human viewers, this could mean increasing the contrast to make details more apparent or reducing noise to make the image less distracting. For automated systems, such as those used in object recognition or medical diagnosis, enhancement might involve highlighting edges or specific textures that are important for the system's algorithms.

Ultimately, the "enhancement" is often application-dependent. An enhancement that works well for one type of image or purpose might be unsuitable for another. For example, smoothing an image to reduce noise might be beneficial for a medical scan but could be detrimental if the goal is to preserve fine textural details in a satellite image.

Image Enhancement vs. Image Restoration

It's common to confuse image enhancement with image restoration, but they are distinct concepts with different objectives. Image enhancement is generally a subjective process. Its aim is to modify an image to make it more suitable or pleasing for a specific task or viewer, without necessarily being concerned about the fidelity to the original, uncorrupted image. Think of it as applying filters on a social media app – the goal is a better-looking result, not necessarily a more "accurate" one.

Image restoration, on the other hand, is an objective process that attempts to reconstruct or recover an image that has been degraded. This degradation could be due to factors like blur, noise, or geometric distortions. The goal of restoration is to model the degradation and apply an inverse process to recover the original, pristine image as accurately as possible. It's more about repairing damage than simply improving appearance.

While both can improve image quality, enhancement is about making an image look better for a specific purpose, while restoration is about undoing damage. For instance, increasing the contrast of a washed-out photo is an enhancement. Trying to remove the blur from a shaky photo by estimating the motion and reversing its effect would be image restoration.

The Importance of Image Enhancement in Various Fields

Image enhancement is not just about making vacation photos look better; it plays a crucial role in a vast array of fields. In medical imaging, for instance, enhancing X-rays, MRIs, or CT scans can help doctors make more accurate diagnoses by making subtle abnormalities more visible. This can involve reducing noise or sharpening details to improve the visual representation of anatomical areas.

In remote sensing, satellite and aerial imagery are enhanced for better mapping, environmental monitoring, and agricultural planning. Techniques like contrast stretching can reveal subtle variations in land cover or vegetation health. Security and forensics rely heavily on image enhancement to clarify surveillance footage or extract crucial details from crime scene photographs. Furthermore, in industrial settings, enhanced images are used for automated quality control, helping to identify defects in manufactured goods.

Even in everyday consumer applications, from digital cameras to social media platforms, image enhancement algorithms work behind the scenes to improve the photos we take and share. The ability to transform images to provide a better representation of details makes it a fundamental tool for researchers and practitioners across many disciplines.

A Simple, Relatable Example of Image Enhancement

Perhaps the most relatable example of image enhancement is adjusting the brightness and contrast of a photograph. Imagine you've taken a photo on a cloudy day, and it looks a bit dull and flat. The subject might be hard to see, and the details are lost in the shadows or overly bright areas.

Using even basic photo editing software on your phone or computer, you can increase the brightness to make the overall image lighter. Then, by adjusting the contrast, you can increase the difference between the light and dark areas, making the objects in the photo stand out more clearly. You might also apply a sharpening filter to make edges appear crisper. These simple adjustments are all forms of image enhancement. They don't necessarily make the photo a more "accurate" representation of the scene as a sensor captured it, but they make it more visually appealing and easier to interpret for the human eye.

This fundamental idea of manipulating pixel values to improve visual quality or highlight information is at the heart of all image enhancement techniques, whether simple adjustments or complex algorithms.

History and Evolution

The journey of image enhancement is deeply intertwined with the broader history of image processing. Its roots can be traced back to the mid-20th century, with early applications driven by necessities in fields like photojournalism and, significantly, the space program. The desire to improve the quality of images transmitted from space was a major catalyst for the development of digital image processing techniques.

Origins in Early Digital Imaging and Space Exploration

The earliest forms of image enhancement were often manual and analog. However, the advent of digital computers opened new frontiers. One of the first and most notable applications of digital image processing, including enhancement, was in the American space program in the 1960s. Images of the moon transmitted by Ranger 7 in 1964, for example, were processed by computers at the Jet Propulsion Laboratory (JPL) to correct for distortions and enhance contrast, revealing details that would have otherwise been missed.

These early efforts were pioneering, dealing with significant limitations in computational power and storage. The techniques developed laid the groundwork for many of the methods still in use today. The need to extract maximum information from precious, hard-won images from remote sources was a powerful driver of innovation.

Key Milestones and Breakthroughs

Several key milestones mark the evolution of image enhancement. The invention of the Charge-Coupled Device (CCD) in 1969 at Bell Labs was a pivotal moment, revolutionizing digital image acquisition and providing higher quality inputs for processing. During the 1970s and 1980s, fundamental techniques such as histogram equalization, spatial filtering (like mean and median filters), and frequency domain methods built on the Fast Fourier Transform (FFT, an algorithm published in 1965) matured into a robust toolkit for image manipulation.

The standardization of image formats and the increasing affordability of digital cameras and scanners brought image processing capabilities to a wider audience. More recently, the rise of machine learning, particularly deep learning and Convolutional Neural Networks (CNNs) starting in the 2010s, has led to another paradigm shift. These techniques can learn complex transformations directly from data, achieving state-of-the-art results in tasks like denoising, super-resolution, and colorization.

Understanding these breakthroughs is crucial for appreciating the current state and future potential of image enhancement.

Transition from Analog to Digital Techniques

Before the widespread adoption of digital computers, image enhancement was primarily an analog process. This involved photographic darkroom techniques, such as dodging and burning to adjust local brightness, or using special lenses and filters during image capture. While effective in skilled hands, these methods were often laborious, difficult to reproduce consistently, and offered limited flexibility.

The transition to digital techniques, beginning in earnest in the latter half of the 20th century, brought about a sea change. Digital images, represented as matrices of pixel values, could be manipulated with mathematical precision using computer algorithms. This allowed for a much wider range of operations, greater consistency, and the ability to automate complex enhancement workflows. The ease of copying and modifying digital images without degradation also fundamentally changed how images were handled and processed.

This shift democratized image enhancement to some extent, as powerful tools became accessible beyond specialized labs. The core principles of manipulating image properties remained, but the methods became far more versatile and powerful.

Impact of Computational Power and Digital Sensors

The evolution of image enhancement is inextricably linked to advancements in computational power and digital sensor technology. Early digital image processing tasks were computationally intensive and slow, limited by the processing capabilities of computers at the time. An operation that takes milliseconds on a modern smartphone might have taken hours or even days on early mainframes.

The exponential growth in processing power, often associated with Moore's Law (the observation that the number of transistors on a chip doubles roughly every two years), has enabled the development and practical application of increasingly sophisticated enhancement algorithms. Techniques that were once purely theoretical due to computational cost, such as complex iterative methods or large-scale neural network training, are now feasible. Specialized hardware, particularly Graphics Processing Units (GPUs), has further accelerated tasks like deep learning-based enhancement.

Simultaneously, improvements in digital sensor technology, found in everything from satellites to consumer cameras, have led to higher resolution images with better dynamic range and lower noise. While better sensors reduce the need for some types of enhancement (e.g., extreme noise reduction), they also provide richer data for more advanced enhancement techniques to work with, pushing the boundaries of what's possible.

Core Concepts and Principles

To truly grasp image enhancement, one must first understand some fundamental building blocks of digital images and the common issues that affect their quality. These core concepts provide the vocabulary and the conceptual framework necessary to discuss and apply various enhancement techniques effectively. From the smallest element of an image to the way colors are represented, these principles are universal.

Pixels, Image Resolution, and Color Spaces

At the most basic level, a digital image is a grid of tiny elements called pixels (short for picture elements). Each pixel has a specific location and a value that represents its intensity or color. The more pixels an image has, the finer the detail it can represent. This is directly related to image resolution, which often refers to the dimensions of the pixel grid (e.g., 1920 pixels wide by 1080 pixels high). Higher resolution generally means more detail, but also larger file sizes and more data to process.

The way color is represented in a pixel is defined by a color space. Common color spaces include:

- RGB (Red, Green, Blue): This is an additive color model where red, green, and blue light are combined in various proportions to reproduce a broad array of colors. It's widely used in digital displays and cameras.

- Grayscale: In a grayscale image, each pixel has a single value representing its intensity, typically ranging from black (lowest value) to white (highest value), with various shades of gray in between.

- HSV (Hue, Saturation, Value): This color space represents color in a way that is often more intuitive to humans. Hue defines the pure color (e.g., red, green, blue), saturation defines the intensity or "purity" of that color (from gray to vivid), and value defines the brightness.

Understanding these concepts is crucial because many enhancement techniques operate by directly manipulating pixel values or by transforming the image between different color spaces to isolate specific attributes like luminance or chrominance for targeted adjustments.
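
To make the idea of switching between color spaces concrete, here is a minimal sketch using NumPy and Python's standard `colorsys` module. The three-pixel test image, the helper names, and the choice of Rec. 601 luma weights for the grayscale conversion are all assumptions made for illustration; other weightings are in common use.

```python
import colorsys
import numpy as np

# A tiny 1x3 RGB image: pure red, mid gray, and a pale blue (values 0-255).
rgb = np.array([[[255, 0, 0], [128, 128, 128], [100, 150, 250]]], dtype=np.uint8)

def rgb_to_gray(image):
    """Weighted sum of R, G, B (Rec. 601 luma weights, an illustrative choice)."""
    weights = np.array([0.299, 0.587, 0.114])
    return np.rint(image.astype(np.float64) @ weights).astype(np.uint8)

def rgb_to_hsv(image):
    """Per-pixel HSV via the standard library; every output channel lies in [0, 1]."""
    scaled = image.astype(np.float64) / 255.0
    flat = [colorsys.rgb_to_hsv(*pixel) for pixel in scaled.reshape(-1, 3)]
    return np.array(flat).reshape(image.shape)

gray = rgb_to_gray(rgb)
hsv = rgb_to_hsv(rgb)
print(gray)   # one intensity per pixel
print(hsv)    # hue, saturation, value per pixel
```

Notice that the gray pixel ends up with zero saturation in HSV: its brightness lives entirely in the value channel, which is why brightness adjustments are often applied there rather than to R, G, and B separately.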

Image Histograms and Their Use in Enhancement

An image histogram is a graphical representation of the tonal distribution in a digital image. It plots the number of pixels for each tonal value. For a grayscale image, the x-axis represents the different gray levels (e.g., 0 to 255), and the y-axis represents the number of pixels in the image that have that specific gray level. For color images, histograms can be generated for each color channel (e.g., separate histograms for Red, Green, and Blue) or for overall intensity.

Histograms provide valuable information about an image's contrast and brightness. For example:

- A histogram clustered towards the left side indicates a dark image.

- A histogram clustered towards the right side indicates a bright image.

- A histogram concentrated in a narrow range suggests low contrast.

- A histogram spread out over a wide range suggests high contrast.

Image enhancement techniques frequently use histograms. For instance, histogram equalization is a method that redistributes the pixel intensities to make the histogram flatter, which often has the effect of increasing global contrast in images that appear washed out. Analyzing an image's histogram can help decide which enhancement techniques might be most effective.
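
The reading rules above can be sketched in a few lines of NumPy. The function names, the synthetic test images, and especially the cut-off values used to label an image "dark" or "low contrast" are assumptions chosen for illustration, not standard thresholds.

```python
import numpy as np

def gray_histogram(image, levels=256):
    """Count how many pixels take each gray level 0..levels-1."""
    return np.bincount(image.ravel(), minlength=levels)

def describe(image, levels=256):
    """Rough brightness/contrast summary; the cut-offs are illustrative assumptions."""
    hist = gray_histogram(image, levels)
    occupied = np.nonzero(hist)[0]                 # gray levels that actually occur
    spread = int(occupied[-1] - occupied[0])
    mean_level = float(image.mean())

    if mean_level < levels / 3:
        brightness = "dark"
    elif mean_level > 2 * levels / 3:
        brightness = "bright"
    else:
        brightness = "mid-toned"

    if spread < levels / 4:
        contrast = "low contrast"
    elif spread > 3 * levels / 4:
        contrast = "high contrast"
    else:
        contrast = "moderate contrast"
    return brightness, contrast

rng = np.random.default_rng(0)
dark_flat = rng.integers(20, 60, size=(64, 64), dtype=np.uint8)    # clustered on the left
full_range = rng.integers(0, 256, size=(64, 64), dtype=np.uint8)   # spread across the axis
print(describe(dark_flat))
print(describe(full_range))
```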

Common Image Degradations

Images are often imperfect and suffer from various forms of degradation that image enhancement aims to address. Some of the most common degradations include:

- Noise: This refers to random variations in brightness or color information in an image. Noise can originate from various sources, including the image sensor (especially in low light), electronic interference during transmission, or even quantization effects. Common types of noise include:

  - Gaussian noise: Characterized by a bell-shaped probability distribution, where pixel values are slightly perturbed from their true values.

  - Salt-and-pepper noise: Appears as sparsely occurring white and black pixels (or bright and dark spots) randomly distributed over the image.

- Blur: Blur results in a loss of sharpness and detail. It can be caused by camera motion during exposure, an out-of-focus lens, atmospheric disturbances, or object movement.

- Low Contrast: This occurs when the range of intensity values in an image is limited, making it difficult to distinguish features. Images might appear "flat" or "washed out." This can be due to poor lighting conditions during capture or limitations of the imaging sensor.

Many image enhancement techniques are specifically designed to counteract these degradations, for example, by using filters to reduce noise, deblurring algorithms to sharpen images, or contrast stretching to improve visibility.
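
When experimenting with enhancement methods, it helps to be able to produce these degradations on purpose. The sketch below simulates Gaussian noise, salt-and-pepper noise, and low contrast with NumPy. The function names, default parameters, and the flat gray test image are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(42)
clean = np.full((100, 100), 128, dtype=np.uint8)   # flat mid-gray test image

def add_gaussian_noise(image, sigma=10.0):
    """Perturb every pixel by a zero-mean normal sample, then clip back to 0..255."""
    noisy = image.astype(np.float64) + rng.normal(0.0, sigma, image.shape)
    return np.clip(np.rint(noisy), 0, 255).astype(np.uint8)

def add_salt_and_pepper(image, fraction=0.05):
    """Set roughly `fraction` of the pixels to pure black or pure white."""
    noisy = image.copy()
    u = rng.random(image.shape)
    noisy[u < fraction / 2] = 0          # pepper
    noisy[u > 1 - fraction / 2] = 255    # salt
    return noisy

def reduce_contrast(image, factor=0.3):
    """Squeeze intensities toward mid-gray to imitate a washed-out capture."""
    squeezed = 128 + factor * (image.astype(np.float64) - 128)
    return np.clip(np.rint(squeezed), 0, 255).astype(np.uint8)

gaussian = add_gaussian_noise(clean)
speckled = add_salt_and_pepper(clean)
print(gaussian.std())                  # close to the requested sigma
print(np.mean(speckled != clean))      # close to the requested fraction
```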

Spatial Domain vs. Frequency Domain Processing

Image enhancement techniques can be broadly categorized into two main domains: spatial domain methods and frequency domain methods.

Spatial domain methods involve direct manipulation of the pixel values in an image. Operations are performed on the image plane itself. For example, adjusting the brightness of an image by adding a constant value to every pixel is a spatial domain operation. Spatial filters, which operate on a neighborhood of pixels, are also a key part of spatial domain processing. These methods are often intuitive and computationally simpler for many tasks.

Frequency domain methods, on the other hand, operate on the Fourier transform of an image. The image is first transformed from its spatial representation (pixels) into a representation based on frequencies. Different frequencies correspond to different rates of change in pixel intensities; for example, sharp edges and fine details correspond to high frequencies, while smooth regions correspond to low frequencies. Filtering is then performed by modifying these frequency components (e.g., attenuating high frequencies to smooth the image or boosting them to sharpen it). After filtering, an inverse Fourier transform is applied to convert the image back to the spatial domain. Frequency domain techniques can be very powerful for certain types of filtering and analysis, especially when dealing with periodic noise or when precise control over frequency components is needed.

The choice between spatial and frequency domain techniques often depends on the specific problem, the desired outcome, and computational considerations. Some operations are easier or more efficient in one domain than the other.

Fundamental Techniques for Image Enhancement

A variety of fundamental techniques form the bedrock of image enhancement. These methods, primarily operating in the spatial and frequency domains, address common issues like poor contrast, noise, and lack of sharpness. Understanding these core techniques is essential for anyone looking to delve deeper into the field, as they are widely used and often serve as components in more advanced approaches.

Point Processing Operations

Point processing operations are among the simplest forms of image enhancement. In these operations, the new value of each pixel depends only on its original value, not on the values of its neighbors. This means each pixel is modified independently. Common point processing techniques include:

- Contrast Stretching: This technique expands the range of intensity values in an image to span a wider portion of the available dynamic range. It's often used to improve images that appear washed out or have low contrast. By mapping a narrow range of input intensities to a wider range of output intensities, details in both dark and bright areas can become more apparent.

- Brightness Adjustment: This involves uniformly increasing or decreasing the intensity values of all pixels in an image, making the entire image appear lighter or darker. It's a straightforward way to correct for over or under-exposure.

- Thresholding: Thresholding converts a grayscale image into a binary image (black and white). A threshold value is chosen, and all pixels with intensity values above the threshold are set to white, while those below are set to black (or vice-versa). This is often used as a preliminary step in image segmentation to separate objects from the background.

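- Gamma Correction: This is a non-linear operation that adjusts the luminance of an image by raising each intensity, normalized to the range 0 to 1, to a power: output = input^gamma. It is used to compensate for the non-linear response of displays and for the non-linear way human vision perceives brightness. Under this convention, a gamma value less than 1 brightens the image, most noticeably in the darker regions, while a gamma value greater than 1 darkens it; pure black and pure white are left unchanged in both cases. Some tools define gamma as the reciprocal of this exponent, which reverses the direction, so it is worth checking which convention is in use.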

These techniques are computationally inexpensive and can provide significant improvements in image quality with relatively simple implementations.
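
The four operations listed above can each be written in a line or two of NumPy. This is a minimal sketch for 8-bit grayscale images; the function names and the 2x2 test image are assumptions made for illustration, and the gamma function uses the convention output = input^gamma on intensities scaled to 0..1.

```python
import numpy as np

def stretch_contrast(image):
    """Linearly map [min, max] of the input onto the full 0..255 range."""
    lo, hi = float(image.min()), float(image.max())
    if hi == lo:                      # flat image: nothing to stretch
        return image.copy()
    out = (image.astype(np.float64) - lo) * 255.0 / (hi - lo)
    return np.rint(out).astype(np.uint8)

def adjust_brightness(image, offset):
    """Add a constant to every pixel, clipping instead of wrapping around."""
    return np.clip(image.astype(np.int16) + offset, 0, 255).astype(np.uint8)

def threshold(image, t):
    """Pixels above t become white (255); the rest become black (0)."""
    return np.where(image > t, 255, 0).astype(np.uint8)

def gamma_correct(image, gamma):
    """Power law on intensities normalized to [0, 1]: out = in ** gamma."""
    normalized = image.astype(np.float64) / 255.0
    return np.rint(255.0 * normalized ** gamma).astype(np.uint8)

img = np.array([[50, 100], [150, 200]], dtype=np.uint8)
print(stretch_contrast(img))        # values 0, 85, 170, 255
print(adjust_brightness(img, 80))   # values 130, 180, 230, 255 (the last one clipped)
print(threshold(img, 120))          # values 0, 0, 255, 255
print(gamma_correct(img, 0.5))      # every pixel brighter than in img
```

Converting to a wider type before adding the brightness offset matters: adding directly to `uint8` values would wrap around past 255 rather than saturate.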

Spatial Filtering

Spatial filtering involves modifying a pixel's value based on its own value and the values of its neighboring pixels. This is typically done by convolving the image with a small matrix called a filter or kernel. The kernel slides across the image, and at each position, a new pixel value is calculated based on the weighted sum of the pixels in the neighborhood defined by the kernel. Spatial filters are broadly classified into smoothing and sharpening filters.

- Smoothing Filters (Low-pass filters): These filters are used to reduce noise, at the cost of some blurring. They work by replacing each pixel with a summary of its neighborhood, such as the average or the median, which tends to smooth out sharp variations, including noise.

  - Mean Filter: Replaces each pixel value with the average of the pixel values in its neighborhood. It's simple but can blur edges significantly.

  - Median Filter: Replaces each pixel value with the median of the pixel values in its neighborhood. Unlike the mean filter, it is a non-linear filter computed by ranking the neighborhood values rather than by convolution. It's particularly effective at removing salt-and-pepper noise while preserving edges better than the mean filter.

- Sharpening Filters (High-pass filters): These filters are designed to enhance edges and fine details in an image. They work by emphasizing high-frequency components, which correspond to abrupt changes in intensity, like edges.

  - Laplacian Filter: This filter uses a second-order derivative to highlight regions of rapid intensity change. It tends to produce sharp edges but can also amplify noise.

  - Gradient-based Filters (e.g., Sobel, Prewitt): These filters use first-order derivatives to detect edges and their orientations. While primarily used for edge detection, the resulting gradient magnitude can be used to sharpen an image.

The choice of filter and kernel size depends on the specific type of noise or the desired level of sharpening.
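
The smoothing and sharpening filters described above can be sketched with NumPy alone. The helper names, the 3x3 default size, the edge-replicating border handling, and the particular 4-neighbor Laplacian kernel are assumptions made for illustration; libraries differ in how they treat borders and which kernel variant they use.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def neighborhoods(image, size=3):
    """Return every size x size neighborhood, replicating edge pixels at the border."""
    pad = size // 2
    padded = np.pad(image.astype(np.float64), pad, mode="edge")
    return sliding_window_view(padded, (size, size))    # shape (H, W, size, size)

def mean_filter(image, size=3):
    """Average of each neighborhood."""
    return neighborhoods(image, size).mean(axis=(-2, -1))

def median_filter(image, size=3):
    """Median of each neighborhood (a ranking operation, not a weighted sum)."""
    return np.median(neighborhoods(image, size), axis=(-2, -1))

def laplacian_sharpen(image, strength=1.0):
    """Subtract the 4-neighbor Laplacian response to steepen edges."""
    kernel = np.array([[0, 1, 0], [1, -4, 1], [0, 1, 0]], dtype=np.float64)
    laplacian = (neighborhoods(image, 3) * kernel).sum(axis=(-2, -1))
    return np.clip(image - strength * laplacian, 0, 255)

flat = np.full((5, 5), 100.0)
flat[2, 2] = 255.0                      # a single "salt" pixel
print(median_filter(flat)[2, 2])        # 100.0: the outlier is rejected
print(mean_filter(flat)[2, 2])          # about 117.2: the outlier is smeared into the average
```

The single bright pixel shows the difference between the two smoothing filters: the median discards it entirely, while the mean spreads it across the neighborhood.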

Histogram-Based Methods

As discussed earlier, an image histogram provides a summary of the tonal distribution. Histogram-based enhancement methods modify an image by altering its histogram. Two prominent techniques are:

- Histogram Equalization: This technique aims to produce an output image with a more uniform (flatter) histogram. It works by mapping each intensity through the image's cumulative distribution function, which spreads out the most frequent intensity values and effectively stretches the contrast of the image. Histogram equalization is a global technique, meaning it applies the same transformation to all parts of the image. While often effective for improving overall contrast, it can sometimes result in an unnatural appearance or amplify noise in relatively uniform regions.

- Histogram Specification (or Histogram Matching): This is a more flexible technique where, instead of aiming for a flat histogram, the goal is to transform the input image's histogram to match the shape of a specified target histogram. This allows for more control over the output image's appearance. For instance, one could try to make an image's histogram look like that of another image known to have good visual quality.

Adaptive versions of these techniques apply the equalization locally, on small regions of the image, which can often yield better results by preserving local details. Contrast Limited Adaptive Histogram Equalization (CLAHE) additionally clips each local histogram before equalizing, which limits the over-amplification of noise in uniform regions.
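
As a minimal sketch of global histogram equalization, the function below builds a lookup table from the cumulative histogram and applies it to every pixel. The function name, the synthetic washed-out image, and the particular normalization (subtracting the cumulative count at the darkest occupied level so the output starts at 0) are assumptions made for illustration; implementations vary in these details.

```python
import numpy as np

def equalize_histogram(image, levels=256):
    """Global histogram equalization for an 8-bit grayscale image."""
    hist = np.bincount(image.ravel(), minlength=levels)
    cdf = hist.cumsum()
    cdf_min = cdf[np.nonzero(hist)[0][0]]          # cumulative count at the darkest level present
    total = image.size
    if total == cdf_min:                           # single gray level: nothing to spread
        return image.copy()
    # Map each level through the normalized CDF, rescaled to 0..levels-1.
    lut = np.rint((cdf - cdf_min) / (total - cdf_min) * (levels - 1))
    lut = np.clip(lut, 0, levels - 1).astype(np.uint8)
    return lut[image]                              # same mapping for every pixel (global)

rng = np.random.default_rng(1)
washed_out = rng.integers(100, 140, size=(64, 64), dtype=np.uint8)
equalized = equalize_histogram(washed_out)
print(washed_out.min(), washed_out.max())   # narrow input range
print(equalized.min(), equalized.max())     # output spans 0 to 255
```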

Frequency Domain Filtering Basics

Frequency domain filtering involves transforming an image into the frequency domain using techniques like the Fourier Transform, manipulating its frequency components, and then transforming it back to the spatial domain. The core idea is that different image features correspond to different frequencies.

- Low-pass Filters: These filters attenuate or remove high-frequency components while allowing low-frequency components to pass through. Since high frequencies often correspond to noise and fine details, low-pass filtering results in a smoother, less noisy image (blurring). Examples include the Ideal Low-pass Filter and the Gaussian Low-pass Filter.

- High-pass Filters: These filters do the opposite: they attenuate or remove low-frequency components and allow high-frequency components to pass. Since low frequencies represent the slowly varying, smooth parts of an image, and high frequencies represent edges and sharp details, high-pass filtering tends to sharpen the image and enhance edges. Examples include the Ideal High-pass Filter and the Gaussian High-pass Filter.

- Band-pass Filters: These filters allow a specific range (band) of frequencies to pass while attenuating frequencies outside this band. They can be useful for isolating features that exist within a particular frequency range.

Filtering in the frequency domain can be more intuitive for certain tasks, especially when dealing with periodic noise that manifests as distinct spikes in the frequency spectrum. However, it often involves more computational steps (forward transform, filtering, inverse transform) compared to direct spatial domain filtering for simple operations.
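
The forward transform, filtering, and inverse transform steps can be sketched with NumPy's FFT routines. The function names, the Gaussian mask parameterized by a `cutoff` in cycles per pixel, and the sinusoidal test pattern are assumptions made for illustration. Note that the FFT treats the image as periodic, so real images may need padding to avoid wrap-around effects at the borders.

```python
import numpy as np

def gaussian_lowpass(image, cutoff):
    """Filter in the frequency domain: FFT, multiply by a Gaussian mask, inverse FFT."""
    rows, cols = image.shape
    # Frequency coordinates (cycles per pixel) in the same layout np.fft.fft2 uses.
    fy = np.fft.fftfreq(rows)[:, None]
    fx = np.fft.fftfreq(cols)[None, :]
    mask = np.exp(-(fx ** 2 + fy ** 2) / (2.0 * cutoff ** 2))   # 1 at zero frequency, small at high
    spectrum = np.fft.fft2(image)
    return np.real(np.fft.ifft2(spectrum * mask))

def gaussian_highpass(image, cutoff):
    """The complementary filter: whatever the low-pass removes."""
    return image - gaussian_lowpass(image, cutoff)

rng = np.random.default_rng(0)
x = np.arange(64)
pattern = np.tile(np.sin(2 * np.pi * x / 64), (64, 1))    # one slow cycle across the width
noisy = pattern + rng.normal(0.0, 0.2, pattern.shape)
smooth = gaussian_lowpass(noisy, cutoff=0.1)
print(np.std(noisy - pattern), np.std(smooth - pattern))  # error before and after filtering
```

Because the slow sinusoid sits at a low frequency and the noise is spread across all frequencies, attenuating the high frequencies removes most of the noise while leaving the pattern nearly intact.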

Advanced Techniques and Modern Approaches

Beyond the fundamental techniques, the field of image enhancement has seen significant advancements, particularly with the rise of sophisticated mathematical models and machine learning. These advanced approaches often provide more powerful and nuanced ways to improve image quality, tackling complex degradations and enabling entirely new enhancement capabilities. They are at the forefront of research and are increasingly found in specialized applications.

Variational Methods and Partial Differential Equations (PDEs)

Variational methods and Partial Differential Equations (PDEs) represent a mathematically rigorous approach to image enhancement. In this framework, an enhanced image is often formulated as the solution to an optimization problem (variational method) or as the outcome of an evolutionary process described by a PDE. These methods can be very effective for tasks like denoising, deblurring, and inpainting (filling in missing parts of an image).

The core idea is often to define an "energy functional" that measures the quality of an image, incorporating terms that penalize noise or deviation from desired properties (like smoothness in certain regions while preserving edges). The enhanced image is then the one that minimizes this energy. PDEs can be derived from these energy functionals or formulated directly to describe how pixel values should change over "time" (an iterative process) to achieve enhancement. For example, anisotropic diffusion is a PDE-based technique that smooths an image more in relatively uniform regions and less near strong edges, thus preserving important structural details while reducing noise.

These methods offer a high degree of control and can incorporate sophisticated prior knowledge about image characteristics. However, they are often computationally intensive and require a good understanding of advanced mathematics.
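
To give a feel for the PDE viewpoint, here is a minimal sketch of anisotropic diffusion in the style of the Perona-Malik scheme, one well-known formulation. The function name, the exponential edge-stopping function, and the default values for `iterations`, `kappa`, and `step` are assumptions made for illustration and would need tuning for real images.

```python
import numpy as np

def anisotropic_diffusion(image, iterations=20, kappa=30.0, step=0.2):
    """Perona-Malik style diffusion: smooth flat regions, slow down across strong edges."""
    u = image.astype(np.float64).copy()
    for _ in range(iterations):
        padded = np.pad(u, 1, mode="edge")           # replicated borders: no flux across them
        # Differences to the four nearest neighbors.
        d_north = padded[:-2, 1:-1] - u
        d_south = padded[2:, 1:-1] - u
        d_west = padded[1:-1, :-2] - u
        d_east = padded[1:-1, 2:] - u
        # Edge-stopping weight: near 1 for small differences, near 0 across large ones.
        update = sum(np.exp(-(d / kappa) ** 2) * d
                     for d in (d_north, d_south, d_west, d_east))
        u += step * update                           # step <= 0.25 keeps this explicit scheme stable
    return u

rng = np.random.default_rng(0)
step_edge = np.hstack([np.full((32, 16), 50.0), np.full((32, 16), 200.0)])
noisy = step_edge + rng.normal(0.0, 5.0, step_edge.shape)
denoised = anisotropic_diffusion(noisy)
print(np.std(noisy[:, :12]), np.std(denoised[:, :12]))       # noise in the flat region drops
print(denoised[:, 16].mean() - denoised[:, 15].mean())       # the edge stays close to 150
```

Each loop iteration plays the role of a small step in "time". The parameter `kappa` sets how large an intensity difference must be before it is treated as an edge to preserve rather than noise to smooth.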

Wavelet Transforms

The wavelet transform is a powerful mathematical tool that decomposes an image into different frequency components at different resolutions. Unlike the Fourier transform, which describes the frequency content of the whole image without indicating where in the image each frequency occurs, the wavelet transform provides both frequency and spatial (location) information. This multi-resolution analysis makes wavelets particularly well-suited for image enhancement tasks like denoising and feature enhancement.

In wavelet-based denoising, an image is transformed into the wavelet domain. Noise tends to be spread out across many small wavelet coefficients, while significant image features are represented by a few large coefficients. By thresholding or shrinking the small coefficients (assumed to be noise) and then performing an inverse wavelet transform, one can often achieve effective noise reduction while preserving important image details better than traditional frequency domain filters. Wavelets have also been used for contrast enhancement and sharpening by manipulating specific wavelet subbands.

The ability to analyze and manipulate image information at different scales is a key advantage of wavelet-based methods.
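
The thresholding idea can be sketched with a single level of the Haar wavelet, the simplest wavelet, written directly in NumPy. The function names, the soft-thresholding rule, the threshold value, and the blocky test image are assumptions made for illustration. Practical denoisers typically use several decomposition levels, smoother wavelets, and a dedicated library.

```python
import numpy as np

def haar2d(image):
    """One level of the orthonormal 2-D Haar transform (even height and width assumed)."""
    a = (image[0::2, :] + image[1::2, :]) / np.sqrt(2)    # row-pair averages
    d = (image[0::2, :] - image[1::2, :]) / np.sqrt(2)    # row-pair differences
    ll = (a[:, 0::2] + a[:, 1::2]) / np.sqrt(2)           # coarse approximation
    lh = (a[:, 0::2] - a[:, 1::2]) / np.sqrt(2)           # three detail subbands
    hl = (d[:, 0::2] + d[:, 1::2]) / np.sqrt(2)
    hh = (d[:, 0::2] - d[:, 1::2]) / np.sqrt(2)
    return ll, lh, hl, hh

def inverse_haar2d(ll, lh, hl, hh):
    """Exact inverse of haar2d."""
    rows, cols = ll.shape
    a = np.empty((rows, 2 * cols))
    d = np.empty((rows, 2 * cols))
    a[:, 0::2], a[:, 1::2] = (ll + lh) / np.sqrt(2), (ll - lh) / np.sqrt(2)
    d[:, 0::2], d[:, 1::2] = (hl + hh) / np.sqrt(2), (hl - hh) / np.sqrt(2)
    out = np.empty((2 * rows, 2 * cols))
    out[0::2, :], out[1::2, :] = (a + d) / np.sqrt(2), (a - d) / np.sqrt(2)
    return out

def soft_threshold(coeffs, t):
    """Shrink coefficients toward zero; anything smaller than t in magnitude becomes 0."""
    return np.sign(coeffs) * np.maximum(np.abs(coeffs) - t, 0.0)

def haar_denoise(image, t):
    """Threshold the detail subbands, keep the approximation, and reconstruct."""
    ll, lh, hl, hh = haar2d(image.astype(np.float64))
    return inverse_haar2d(ll, *(soft_threshold(c, t) for c in (lh, hl, hh)))

rng = np.random.default_rng(0)
clean = np.kron(np.array([[50.0, 200.0], [120.0, 80.0]]), np.ones((16, 16)))  # four flat blocks
noisy = clean + rng.normal(0.0, 10.0, clean.shape)
denoised = haar_denoise(noisy, t=30.0)
print(np.std(noisy - clean), np.std(denoised - clean))    # error before and after
```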

The Rise of Deep Learning: CNNs for Enhancement

The most significant recent advancements in image enhancement have been driven by deep learning, particularly Convolutional Neural Networks (CNNs). CNNs are a class of neural networks that are highly effective at learning hierarchical features from grid-like data, such as images. Instead of relying on handcrafted rules or explicit mathematical models, deep learning approaches learn to perform enhancement tasks directly from large datasets of example images.

CNNs have achieved state-of-the-art results in a wide range of enhancement tasks:

- Denoising: CNNs can learn to distinguish noise from underlying image content with remarkable accuracy, even for complex noise patterns.

- Super-Resolution: This involves generating a high-resolution image from a low-resolution input. CNNs can learn to hallucinate plausible high-frequency details, leading to significantly sharper and more detailed upscaled images than traditional interpolation methods.

- Colorization: Given a grayscale image, CNNs can learn to add realistic colors by understanding the semantic content of the image. For example, they can learn that grass is typically green and skies are typically blue.

- Deblurring, Dehazing, and Low-Light Enhancement: Deep learning models have also shown great success in removing various types of image degradation.

The power of deep learning lies in its ability to learn complex, non-linear mappings from input to output images, often capturing subtle image characteristics that are difficult to model explicitly. However, these methods require large amounts of training data and significant computational resources for training.

Generative Adversarial Networks (GANs) for Realistic Enhancement

Generative Adversarial Networks (GANs) are a specific type of deep learning architecture that has shown exceptional promise for generating highly realistic images. A GAN consists of two neural networks, a generator and a discriminator, that are trained simultaneously in a competitive manner.

- The generator tries to create realistic enhanced images from degraded inputs.

- The discriminator tries to distinguish between real (ground truth) enhanced images and those created by the generator.

Through this adversarial process, the generator learns to produce images that are increasingly difficult for the discriminator to identify as fake, leading to very realistic and perceptually convincing results. GANs have been particularly successful in tasks like super-resolution (where they can produce sharper and more natural-looking textures than traditional CNNs alone) and style transfer (e.g., making a photo look like a painting by a famous artist). They are also used for tasks like image inpainting and artifact removal.

While GANs can produce impressive visual quality, they can also be challenging to train stably and may sometimes introduce subtle artifacts or "hallucinate" details that were not present in the original low-quality input.
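
The generator/discriminator competition described above can be sketched numerically. The snippet below assumes the discriminator outputs a probability that an image is real and uses binary cross-entropy with the common "non-saturating" generator loss; both are assumptions made for illustration, and practical enhancement GANs usually add a content or pixel loss as well.

```python
import numpy as np

def bce(pred, target, eps=1e-12):
    """Mean binary cross-entropy between predicted probabilities and 0/1 targets."""
    pred = np.clip(pred, eps, 1 - eps)
    return float(-np.mean(target * np.log(pred) + (1 - target) * np.log(1 - pred)))

def discriminator_loss(d_real, d_fake):
    # The discriminator wants real images scored as 1 and generated images as 0.
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))

def generator_loss(d_fake):
    # The generator wants its outputs to be scored as real (1) by the discriminator.
    return bce(d_fake, np.ones_like(d_fake))

# Hypothetical discriminator scores for two real and two generated images.
d_real = np.array([0.9, 0.8])
d_fake = np.array([0.2, 0.1])
d_loss = discriminator_loss(d_real, d_fake)
g_loss = generator_loss(d_fake)
```

With these made-up scores the discriminator is winning, so its loss is small while the generator's loss is large; training alternates updates so that neither side stays ahead for long.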

High Dynamic Range (HDR) Imaging and Tone Mapping

High Dynamic Range (HDR) imaging is a set of techniques that aim to capture and represent a greater range of luminosity levels than is possible with standard digital imaging. Real-world scenes often have a very wide dynamic range – from very dark shadows to very bright highlights. Standard cameras can only capture a limited portion of this range, leading to loss of detail in either the dark or bright areas (or both).

HDR techniques typically involve capturing multiple images of the same scene at different exposure levels and then combining them into a single HDR image that contains information from the entire range. However, most display devices (monitors, prints) have a lower dynamic range than HDR images. This is where tone mapping comes in. Tone mapping is the process of converting an HDR image into a Low Dynamic Range (LDR) image suitable for display, while trying to preserve as much of the detail and contrast from the original HDR scene as possible. The goal is to produce an image that looks natural and reveals details in both shadows and highlights, mimicking how the human visual system adapts to different brightness levels.

HDR and tone mapping are crucial in photography and computer graphics for creating more realistic and visually rich images.
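
As one illustration of tone mapping, the sketch below applies a simple global operator in the style of Reinhard: luminance is scaled relative to the scene's log-average and then compressed with L / (1 + L). The `key` value of 0.18 and the function name are assumptions for this example; many other operators exist.

```python
import numpy as np

def reinhard_tone_map(hdr_luminance, key=0.18, eps=1e-6):
    """Compress HDR luminance into the range [0, 1) with a global Reinhard-style curve."""
    lum = np.asarray(hdr_luminance, dtype=np.float64)
    # Log-average luminance gives a robust estimate of overall scene brightness.
    log_avg = np.exp(np.mean(np.log(lum + eps)))
    scaled = key * lum / log_avg
    # Bright values are compressed strongly; dark values stay almost linear.
    return scaled / (1.0 + scaled)

# Hypothetical scene luminances spanning six orders of magnitude.
hdr = np.array([[0.01, 1.0], [100.0, 10000.0]])
ldr = reinhard_tone_map(hdr)
```

The curve is monotonic, so the ordering of brightness levels is preserved while the extreme highlights are pulled into a displayable range.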

Applications Across Industries

Image enhancement is not an abstract academic pursuit; its techniques are applied across a multitude of industries, solving real-world problems and creating significant value. From peering inside the human body to monitoring our planet from space, and from ensuring the safety of products to bringing stories to life on screen, enhanced images are indispensable. Understanding these applications highlights the versatility and impact of this field.

Medical Imaging

In medical imaging, clarity and detail are paramount for accurate diagnosis and treatment planning. Image enhancement techniques are routinely applied to various medical modalities, including:

- X-rays: Enhancing contrast in X-ray images can help reveal subtle fractures or abnormalities in bone and soft tissue.

- MRIs (Magnetic Resonance Imaging): Noise reduction and edge enhancement in MRI scans can improve the visualization of brain structures, tumors, and other soft tissue details.

- CT (Computed Tomography) Scans: Sharpening and contrast adjustment can aid in identifying lesions, organ damage, or vascular issues.

- Ultrasound: Speckle noise reduction is a common enhancement in ultrasound imaging to improve the visibility of anatomical structures.

By improving the visual representation of medical images, enhancement techniques assist radiologists and clinicians in detecting diseases earlier and more accurately, ultimately leading to better patient outcomes.
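
As a small, generic illustration of the noise reduction mentioned above, the following sketch implements a median filter in NumPy. It is a basic technique chosen for this example only; clinical pipelines typically use more specialised despeckling and denoising methods.

```python
import numpy as np

def median_filter(img, size=3):
    """Replace each pixel with the median of its size x size neighbourhood."""
    h, w = img.shape
    pad = size // 2
    padded = np.pad(img, pad, mode="edge")
    # Collect every shifted copy of the image so the median can be taken per pixel.
    shifted = [padded[dy:dy + h, dx:dx + w] for dy in range(size) for dx in range(size)]
    return np.median(np.stack(shifted, axis=0), axis=0)

# Synthetic flat region with a single noisy spike in the middle.
scan = np.full((7, 7), 100.0)
scan[3, 3] = 255.0
cleaned = median_filter(scan)
```

Because the median ignores isolated outliers, the spike is removed without blurring the surrounding values the way an averaging filter would.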

Remote Sensing

Remote sensing involves acquiring information about the Earth's surface (or other planets) from a distance, typically using satellites or aircraft. The imagery obtained often requires significant enhancement to be useful. Applications include:

- Mapping: Enhancing satellite images helps in creating accurate maps of land cover, urban areas, and transportation networks. Contrast stretching and spatial filtering can highlight features like roads and rivers.

- Environmental Monitoring: Enhanced imagery is crucial for tracking deforestation, monitoring water quality, assessing the impact of natural disasters (like floods or wildfires), and studying climate change effects. Color enhancement can help differentiate between healthy and stressed vegetation.

- Agriculture: Farmers and agronomists use enhanced multispectral or hyperspectral imagery to assess crop health, identify areas needing irrigation or fertilization, and predict yields.

- Resource Management: Geological features can be enhanced to aid in mineral exploration or water resource management.

Techniques like contrast enhancement, noise reduction, and edge sharpening are vital for extracting meaningful information from raw remote sensing data.
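
Contrast stretching, mentioned above for highlighting roads and rivers, can be sketched as a percentile-based linear stretch of a single band. The 2% and 98% cut-offs are a common convention but are an assumption here, as is the function name.

```python
import numpy as np

def contrast_stretch(band, low_pct=2.0, high_pct=98.0):
    """Linearly map the [low_pct, high_pct] percentile range of a band onto [0, 1]."""
    band = np.asarray(band, dtype=np.float64)
    lo, hi = np.percentile(band, [low_pct, high_pct])
    if hi <= lo:
        # A constant band has no contrast to stretch.
        return np.zeros_like(band)
    return np.clip((band - lo) / (hi - lo), 0.0, 1.0)

# Synthetic band whose values occupy only a narrow slice of the sensor range.
band = np.linspace(100, 140, 256).reshape(16, 16)
stretched = contrast_stretch(band)
```

Clipping at percentiles rather than at the absolute minimum and maximum keeps a few extreme pixels from dominating the stretch.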

Photography and Cinematography

The world of photography and filmmaking heavily relies on image enhancement, both for corrective and creative purposes.

- Consumer Photography: Everyday users benefit from automated enhancement algorithms in smartphones and digital cameras that adjust brightness, contrast, color saturation, and sharpness to produce more pleasing photos. Software like Adobe Photoshop and GIMP offer a vast array of manual enhancement tools.

- Professional Photography: Photographers use sophisticated techniques for retouching portraits, enhancing landscapes, and achieving specific artistic looks. This includes noise reduction for low-light shots, detail enhancement, and precise color grading.

- Film Restoration: Old films often suffer from scratches, dust, color fading, and instability. Image enhancement and restoration techniques are used to digitally clean up and restore these films for modern audiences.

- Special Effects (VFX): In cinematography, image enhancement is part of the visual effects pipeline, used for compositing, color matching, and ensuring that computer-generated elements blend seamlessly with live-action footage.

From basic adjustments to complex artistic manipulations, image enhancement is integral to visual storytelling and aesthetic quality in these creative fields.

Security and Forensics

In security and forensic science, image enhancement can be critical for investigation and evidence gathering.

- Surveillance Footage: Video from security cameras is often low quality, poorly lit, or blurry. Enhancement techniques like noise reduction, sharpening, and contrast adjustment can help clarify details such as faces, license plates, or specific actions.

- Forensic Evidence Analysis: Enhancing photographs from crime scenes can reveal latent fingerprints, footprints, or other subtle evidence that might otherwise be invisible. Sharpening can clarify details in bite marks or tool marks.

- Document Examination: Enhancing scanned or photographed documents can help in deciphering faded or altered text.

The goal in these applications is often to extract as much objective information as possible from an image, which can be crucial for solving crimes and in legal proceedings. However, it's also an area where ethical considerations about manipulation are particularly important.
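
Sharpening of the kind described above is often done with unsharp masking: blur the image, then add back a scaled copy of the detail the blur removed. The sketch below uses a box blur for simplicity (a Gaussian blur is more usual), and the names and parameters are illustrative.

```python
import numpy as np

def box_blur(img, size=3):
    """Average each pixel with its size x size neighbourhood (edges are replicated)."""
    h, w = img.shape
    pad = size // 2
    padded = np.pad(img.astype(np.float64), pad, mode="edge")
    acc = np.zeros((h, w))
    for dy in range(size):
        for dx in range(size):
            acc += padded[dy:dy + h, dx:dx + w]
    return acc / (size * size)

def unsharp_mask(img, amount=1.0, size=3):
    """Sharpen by adding back the difference between the image and its blurred copy."""
    img = img.astype(np.float64)
    detail = img - box_blur(img, size)
    return np.clip(img + amount * detail, 0, 255)

# Synthetic frame with a soft vertical edge between two gray levels.
frame = np.full((8, 8), 100.0)
frame[:, 4:] = 150.0
sharp = unsharp_mask(frame)
```

Note that this only exaggerates contrast that is already present near edges; it cannot recover detail the camera never recorded, which matters when enhanced images are used as evidence.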

Industrial Inspection

Automated industrial inspection systems rely on machine vision, and image enhancement is a key preprocessing step to ensure reliable performance.

- Quality Control: On assembly lines, cameras capture images of products, and these images are analyzed to detect defects such as cracks, scratches, misalignments, or missing components. Enhancing the image by increasing contrast or sharpening edges can make these defects easier for an automated system to identify.

- Defect Detection: In industries like electronics manufacturing, enhancement techniques help in inspecting circuit boards for soldering errors or component flaws. In textiles, they can identify weaving imperfections.

- Process Monitoring: Enhanced images can be used to monitor ongoing manufacturing processes, ensuring they are operating within specified tolerances.

By improving the clarity and consistency of images used in automated inspection, enhancement techniques contribute to higher product quality, reduced waste, and increased manufacturing efficiency.
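
A toy version of the "enhance edges, then detect defects" idea might look like the sketch below, which computes a Sobel gradient magnitude and flags pixels above a threshold. The threshold value and the helper names are hypothetical; production inspection systems are considerably more elaborate.

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=np.float64)
SOBEL_Y = SOBEL_X.T

def filter3x3(img, kernel):
    """Apply a 3x3 kernel to the image by cross-correlation with replicated edges."""
    h, w = img.shape
    padded = np.pad(img.astype(np.float64), 1, mode="edge")
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def edge_strength(img):
    """Gradient magnitude from horizontal and vertical Sobel responses."""
    return np.hypot(filter3x3(img, SOBEL_X), filter3x3(img, SOBEL_Y))

def flag_defects(img, threshold):
    """Return a boolean mask of pixels whose edge strength exceeds the threshold."""
    return edge_strength(img) > threshold

# Synthetic uniform surface with one dark scratch.
surface = np.full((8, 8), 200.0)
surface[4, 2:6] = 50.0
defect_mask = flag_defects(surface, threshold=100.0)
```

On a clean, uniform surface the gradient is zero everywhere, so nothing is flagged; the scratch produces strong responses along its border.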

Tools and Technologies

A wide array of tools and technologies is available for performing image enhancement, ranging from comprehensive programming libraries for those who want to build custom solutions, to user-friendly standalone software for direct manipulation, and even cloud-based services. The choice of tool often depends on the complexity of the task, the user's programming skills, and the scale of the operation.

Popular Programming Libraries

For developers and researchers who need to implement custom image enhancement algorithms or integrate them into larger applications, several powerful programming libraries are widely used:

- OpenCV (Open Source Computer Vision Library): Perhaps the most popular library for computer vision and image processing, OpenCV offers a vast collection of algorithms for image enhancement, filtering, feature detection, object recognition, and much more. It has interfaces for C++, Python, Java, and MATLAB, making it highly versatile. You can explore OpenCV courses on OpenCourser.

- Scikit-image: A Python-based library, Scikit-image provides a comprehensive set of algorithms for image processing, including various filters, segmentation techniques, feature extraction, and more. It integrates well with other scientific Python libraries like NumPy and SciPy.

- Pillow (PIL Fork): Pillow is a friendly fork of the Python Imaging Library (PIL). It provides extensive file format support and basic image manipulation capabilities, including point operations, filtering with a set of built-in convolution kernels, and color space conversions. It's often used for simpler image tasks in Python applications.

- MATLAB Image Processing Toolbox: For those working within the MATLAB environment, the Image Processing Toolbox offers a rich set of functions and apps for image analysis, processing, visualization, and algorithm development. It's widely used in academic research and engineering. Many learners seek MATLAB image processing courses to get started.

These libraries provide the building blocks for creating sophisticated image enhancement workflows.
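
To give a feel for what working with one of these libraries looks like, here is a short sketch using Pillow on a synthetic image, so no input file is needed. It assumes Pillow is installed; the image contents and the enhancement factor of 1.5 are arbitrary choices for illustration.

```python
from PIL import Image, ImageEnhance, ImageFilter, ImageOps

# Build a low-contrast horizontal gradient (gray levels 100..150) in memory.
pixels = bytes(100 + (x * 50) // 63 for y in range(64) for x in range(64))
img = Image.frombytes("L", (64, 64), pixels)

# Point operation: stretch the histogram to use the full 0..255 range.
stretched = ImageOps.autocontrast(img)

# Point operation: increase contrast around the mean gray level by a factor of 1.5.
boosted = ImageEnhance.Contrast(img).enhance(1.5)

# Filtering with one of Pillow's built-in convolution kernels.
sharpened = img.filter(ImageFilter.SHARPEN)
```

In a real workflow the image would come from `Image.open(...)` and the results would be written out with `save(...)`; OpenCV and Scikit-image offer comparable operations on NumPy arrays.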

Widely Used Standalone Software

For users who prefer a graphical interface and don't need to write code, several standalone software packages offer powerful image enhancement capabilities:

- Adobe Photoshop: The industry standard for professional image editing, Photoshop provides an extensive suite of tools for enhancement, retouching, compositing, and much more. It supports layers, masks, adjustment layers, and a wide variety of filters.

- GIMP (GNU Image Manipulation Program): A free and open-source alternative to Photoshop, GIMP offers a comprehensive set of tools for image retouching, composition, and image authoring. It's highly extensible through plugins and scripts. [xy44kf]

- ImageJ: A public domain, Java-based image processing program developed at the National Institutes of Health. ImageJ is particularly popular in the scientific community, especially in biology and medicine, due to its extensive plugin architecture and tools for analysis and measurement.

These tools are excellent for hands-on learning and for applying enhancement techniques without needing to delve into programming. Many introductory and advanced courses focus on mastering these software packages.

Role of Specialized Hardware (GPUs)

Many advanced image enhancement techniques, especially those based on deep learning, are very computationally intensive. Training a deep neural network can involve processing vast amounts of image data and performing billions of calculations. While modern CPUs are powerful, they are often not optimized for the kind of parallel processing that these tasks demand.

This is where Graphics Processing Units (GPUs) come into play. Originally designed for rendering graphics in video games, GPUs have highly parallel architectures with thousands of smaller cores. This makes them exceptionally well-suited for the matrix and vector operations that are fundamental to deep learning and many other image processing algorithms. Using GPUs can reduce training times for deep learning models from weeks or months to days or even hours, making these advanced techniques practical. Many deep learning libraries have built-in GPU support, allowing researchers and practitioners to leverage this specialized hardware.

For tasks involving real-time image enhancement, such as in video processing or autonomous systems, GPUs are often essential to achieve the required processing speeds.

Cloud Platforms Offering Image Processing Services

Cloud computing platforms like Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure are increasingly offering specialized image processing and machine learning services. These platforms provide scalable infrastructure, pre-trained models, and APIs that allow developers to easily integrate sophisticated image enhancement capabilities into their applications without needing to manage the underlying hardware or develop models from scratch.

Services might include automated image tagging, object detection, facial recognition, and even custom model training. For image enhancement, cloud platforms can offer solutions for tasks like automated background removal, image upscaling (super-resolution), and content-aware filtering. They also provide the computational resources (including GPUs) needed for training and deploying custom deep learning models for enhancement.

Using cloud-based services can be a cost-effective way to access cutting-edge image processing technologies, especially for businesses or individuals who may not have the resources or expertise to build and maintain their own systems.

Formal Education Pathways

For those aspiring to a career deeply rooted in image enhancement, particularly in research, development, or advanced applications, a strong formal education is often beneficial. This typically involves university-level studies that provide a solid theoretical foundation in the underlying mathematics, computer science, and engineering principles. While self-study and online courses can offer valuable skills, a formal degree program often provides a more structured and comprehensive learning experience, along with research opportunities and academic credentials that are valued by many employers in specialized roles.

Relevant Undergraduate Majors

Several undergraduate majors can provide a strong foundation for a career involving image enhancement. The most common and relevant ones include:

- Computer Science: This major provides essential programming skills, algorithm design, data structures, and often includes specializations or elective courses in computer graphics, computer vision, and artificial intelligence, all of which are highly relevant to image enhancement.

- Electrical Engineering (or Computer Engineering): These programs often cover signal processing in depth, which is the mathematical backbone of image processing. Courses in digital signal processing, systems and control, and embedded systems can be very pertinent.

- Applied Mathematics or Statistics: A strong mathematical background is crucial for understanding and developing advanced image enhancement algorithms. Majors focusing on areas like linear algebra, multivariable calculus, differential equations, probability, statistics, and numerical analysis are excellent preparation.

- Physics or Biomedical Engineering: For those interested in specific application domains like medical imaging or scientific imaging, a major in a related science or engineering field, coupled with strong computational coursework, can be advantageous.

Regardless of the specific major, it's beneficial to take courses that emphasize programming, data analysis, and mathematical modeling.

Typical University Courses

Within these majors, certain university courses are particularly central to building expertise in image enhancement:

- Digital Image Processing: This is usually the core course, covering fundamental concepts like image representation, sampling, quantization, image transforms (Fourier, Wavelet), spatial and frequency domain filtering, image restoration, and basic enhancement techniques like histogram equalization and contrast stretching. [87yjxs]

- Computer Vision: This field is closely related to image processing and often builds upon it. Courses cover topics like feature detection, image segmentation, object recognition, 3D reconstruction, and motion analysis. Many advanced enhancement techniques are developed within the context of computer vision problems.

- Machine Learning / Artificial Intelligence: Given the increasing importance of deep learning in image enhancement, courses in machine learning are essential. These cover topics like supervised and unsupervised learning, neural networks, convolutional neural networks (CNNs), and generative adversarial networks (GANs).

- Signal Processing: Courses on digital signal processing provide a deep understanding of concepts like convolution, filtering, and transforms, which are fundamental to both spatial and frequency domain image processing. [i2pg0p]

- Numerical Methods / Scientific Computing: These courses teach techniques for solving mathematical problems computationally, which is important for implementing and optimizing image processing algorithms.

These foundational courses provide a comprehensive understanding of the principles and techniques used in image enhancement.
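
Histogram equalization, one of the basic techniques named in the Digital Image Processing item above, is a typical first exercise in such a course. A minimal sketch for 8-bit grayscale images, with an illustrative function name, could look like this:

```python
import numpy as np

def equalize_histogram(img):
    """Equalize an 8-bit grayscale image using its cumulative histogram."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[cdf > 0][0]  # cumulative count at the darkest gray level present
    total = img.size
    if total == cdf_min:
        # Constant image: nothing to equalize.
        return img.copy()
    # Map each gray level through the normalized cumulative distribution.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[img]

# Synthetic low-contrast image using only gray levels 100..131.
dull = (np.arange(256).reshape(16, 16) // 8 + 100).astype(np.uint8)
equalized = equalize_histogram(dull)
```

The lookup table spreads the occupied gray levels across the full 0 to 255 range, which is why the technique is effective on low-contrast images.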

Graduate Studies (Master's, PhD)

For individuals aiming for research positions, academic roles, or highly specialized R&D roles in industry, graduate studies (Master's or PhD) are often necessary. Graduate programs allow for deeper specialization in specific areas of image enhancement and computer vision.

- Master's Degree: A Master's program typically involves advanced coursework and often a research project or thesis. It can provide more specialized knowledge and skills than an undergraduate degree, preparing students for more advanced engineering or development roles. Many Master's programs offer specializations in areas like machine learning, computer vision, or signal processing.

- PhD (Doctor of Philosophy): A PhD is a research-focused degree that involves conducting original research culminating in a dissertation. PhD programs are essential for those who wish to become independent researchers, lead research teams, or teach at the university level. Research areas in image enhancement at the PhD level might include developing novel deep learning architectures, exploring new mathematical models for image representation, or pioneering techniques for specific application domains (e.g., medical image enhancement, remote sensing analysis).

Graduate studies provide the opportunity to contribute to the cutting edge of the field and to develop a deep expertise that is highly valued for roles requiring significant innovation and problem-solving.

Importance of Strong Mathematical Foundations

A recurring theme in formal education for image enhancement is the critical importance of a strong mathematical foundation. Many image processing techniques are inherently mathematical.

- Linear Algebra: Images are often represented as matrices, and operations like transformations, filtering, and feature extraction heavily rely on concepts from linear algebra (e.g., vectors, matrices, eigenvalues, singular value decomposition).

- Calculus: Differential calculus is used in edge detection (gradients, Laplacians) and in optimization methods common in advanced techniques like variational approaches and deep learning (gradient descent). Integral calculus underlies convolution, which is defined as an integral in the continuous case and as a sum for digital images.

- Probability and Statistics: These are essential for understanding noise models, statistical approaches to image restoration and enhancement, and for evaluating the performance of algorithms. Machine learning, in particular, is heavily grounded in statistical principles.

- Fourier Analysis and Differential Equations: Understanding transforms (like Fourier and Wavelet) is key for frequency domain processing, and differential equations are central to PDE-based enhancement methods.

While it's possible to use existing tools and libraries without a deep mathematical understanding, developing new algorithms, truly understanding why certain techniques work, or troubleshooting complex problems often requires a solid grasp of these mathematical underpinnings.
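
As a concrete case of the mathematics at work, the sketch below performs frequency domain filtering with NumPy's FFT: it transforms the image, keeps only the frequencies within a chosen radius of the zero frequency, and transforms back. The ideal (hard-edged) mask and the `cutoff` parameter are simplifying assumptions; smoother masks such as Gaussian ones reduce ringing.

```python
import numpy as np

def fft_low_pass(img, cutoff):
    """Remove spatial frequencies farther than `cutoff` from the zero frequency."""
    h, w = img.shape
    # Shift the spectrum so the zero-frequency term sits at the centre.
    spectrum = np.fft.fftshift(np.fft.fft2(img))
    yy, xx = np.ogrid[:h, :w]
    dist = np.hypot(yy - h // 2, xx - w // 2)
    mask = dist <= cutoff
    filtered = np.fft.ifft2(np.fft.ifftshift(spectrum * mask))
    return filtered.real

# Constant image corrupted by a checkerboard pattern at the highest spatial frequency.
yy, xx = np.indices((16, 16))
noisy = 10.0 + np.where((yy + xx) % 2 == 0, 1.0, -1.0)
smooth = fft_low_pass(noisy, cutoff=4)
```

The checkerboard lives entirely at the highest frequency, so the low-pass mask removes it and leaves the constant background.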

Online Learning and Self-Study

While formal education provides a structured path, online learning and self-study have become increasingly viable and valuable avenues for acquiring knowledge and skills in image enhancement. The wealth of resources available online, from comprehensive courses to practical tutorials and open-source projects, empowers learners to master this field at their own pace, supplement traditional education, or even pivot careers. OpenCourser itself is a testament to the power of online learning, offering a vast catalog of Computer Science courses and Data Science courses that can build a strong foundation.

Availability and Types of Online Courses

The landscape of online courses for image enhancement is diverse, catering to different learning goals and prior levels of experience. You can find:

- Conceptual Overviews: These courses focus on explaining the fundamental principles of image processing and enhancement without necessarily diving deep into the mathematics or programming. They are great for beginners or those seeking a general understanding.

- Programming-Focused Courses: Many courses teach image enhancement by focusing on practical implementation using popular libraries like OpenCV in Python or tools like MATLAB. [87yjxs, imzojw] These often include coding exercises and projects.

- Theory-Intensive Courses: Some online courses, often from universities, delve deeper into the mathematical foundations of image processing, covering topics like signal processing theory, Fourier analysis, and statistical methods.

- Specialized Deep Learning Courses: With the rise of AI, numerous online courses now focus specifically on applying deep learning (e.g., CNNs, GANs) to image enhancement tasks like super-resolution, denoising, and colorization.

Platforms like Coursera, Udemy, edX, and others host a wide range of such courses, often taught by university professors or industry experts. OpenCourser’s browsing features can help you discover courses tailored to your specific interests within image processing and related fields like Artificial Intelligence.

Using Online Resources for Practical Learning

Beyond structured courses, a plethora of online resources can facilitate practical learning in image enhancement:

- Tutorials and Blogs: Websites like Towards Data Science, Medium, and individual blogs by experts often feature step-by-step tutorials on implementing specific image enhancement techniques or using particular libraries. OpenCourser Notes, the official blog of OpenCourser, also provides insights and guidance.

- Documentation: The official documentation for libraries like OpenCV, Scikit-image, and TensorFlow is an invaluable resource, providing detailed explanations of functions, parameters, and often including example code.

- Open-Source Projects: Platforms like GitHub host countless open-source image enhancement projects. Studying the code of these projects, or even contributing to them, can be an excellent way to learn practical implementation details and see how different algorithms are put together.

- Research Papers: Websites like arXiv.org provide free access to the latest research papers. While often advanced, reading these papers can give insights into cutting-edge techniques and current research directions.

Combining these resources with online courses can create a rich and flexible learning experience.

Building a Portfolio Through Personal Projects

For anyone learning image enhancement, especially those aiming for a career in the field, building a portfolio of personal projects is crucial. Theoretical knowledge is important, but employers and collaborators want to see that you can apply that knowledge to solve real problems. Personal projects demonstrate practical skills, initiative, and passion.

Some ideas for portfolio projects include:

- Enhancing Personal Photos: Start by applying fundamental techniques (contrast adjustment, sharpening, noise reduction) to your own photographs. Try to implement some of these algorithms from scratch.

- Implementing Classic Algorithms: Choose a well-known algorithm (e.g., histogram equalization, a specific spatial filter, or even a basic denoising CNN) and implement it yourself using a programming language like Python. Compare your results to those from standard libraries.

- Replicating a Research Paper: Find an interesting image enhancement paper (perhaps an older, foundational one to start) and try to replicate its results. This is a challenging but highly rewarding way to learn.

- Developing a Niche Application: Think of a specific problem that could be solved or improved with image enhancement (e.g., enhancing old scanned documents, improving underwater photos, creating an artistic filter) and build a small tool or application around it.

- Contributing to Open-Source: Find an open-source image processing project on GitHub and contribute by fixing bugs, adding features, or improving documentation.

Document your projects well, explaining the problem, your approach, the techniques used, and the results (with visual examples). A well-curated portfolio hosted on a platform like GitHub can be a powerful asset in a job search. The OpenCourser Learner's Guide offers tips on how to structure self-learning and stay motivated.

Supplementing Formal Education or Facilitating a Career Pivot

Online learning is not just for those starting from scratch; it's also an excellent way for individuals with formal education to supplement their knowledge or for professionals to facilitate a career pivot.

Students enrolled in university programs can use online courses to get a different perspective on a topic, learn a new programming language or tool relevant to their studies (e.g., a specific deep learning framework), or explore specialized areas not covered in their curriculum. Online resources can also help with homework and projects.

For professionals looking to transition into image enhancement from a different field, online courses provide a flexible and often more affordable way to gain the necessary skills. Someone with a background in software development, for example, could take online courses in digital image processing fundamentals and machine learning to reorient their career. A curated learning path, perhaps saved and managed using OpenCourser's "Save to List" feature (accessible via My Lists), can guide this transition. Building a strong portfolio of projects (as mentioned above) will be especially important for career changers to demonstrate their new capabilities.

Even for those already working in the field, the rapid pace of development, particularly in AI-driven enhancement, means continuous learning is essential. Online courses and resources are perfect for staying updated with the latest techniques and tools.

Online Communities and Forums for Support

Learning, especially self-study, can sometimes be challenging. Online communities and forums provide invaluable support, opportunities for collaboration, and a way to connect with fellow learners and experts.

- Stack Overflow: A question-and-answer website for programmers. If you get stuck on a coding problem related to image enhancement, chances are someone has asked a similar question, or you can ask your own.

- Reddit: Subreddits like r/computervision, r/imageprocessing, and r/MachineLearning are active communities where people discuss concepts, share projects, ask for help, and post news about the latest developments.

- Kaggle: While known for machine learning competitions, Kaggle also has forums and datasets relevant to image processing. Participating in competitions can be a great way to learn.

- Specialized Forums: Many online courses have their own dedicated forums where students can interact with each other and with instructors or teaching assistants.

- GitHub: Beyond hosting projects, GitHub's issue trackers and discussion sections for various libraries and tools serve as de facto forums for those specific technologies.

Engaging with these communities can help you overcome hurdles, get feedback on your work, learn from others' experiences, and stay motivated throughout your learning journey.

Career Paths and Opportunities

A strong foundation in image enhancement opens doors to a variety of exciting and impactful career paths across numerous industries. As digital imagery becomes increasingly central to technology, science, and communication, professionals with the skills to manipulate, analyze, and improve images are in growing demand. The career landscape is diverse, ranging from engineering and research roles to more application-focused positions.

Common Job Titles

While specific titles can vary by company and industry, some common job titles for professionals working with image enhancement include:

- Image Processing Engineer/Scientist: This is a core role focused on designing, developing, and implementing algorithms and systems for acquiring, processing, analyzing, and enhancing digital images.

- Computer Vision Engineer/Scientist: Computer vision is a broader field that includes image enhancement as a crucial component. These roles involve developing systems that enable computers to "see" and interpret visual information from the world.

- Machine Learning Engineer (specializing in Vision): With the rise of AI, many roles focus on applying machine learning, particularly deep learning, to solve image-related problems, including enhancement tasks like super-resolution, denoising, and colorization.

- Research Scientist (Imaging): Typically found in academic institutions or corporate R&D labs, these roles involve conducting cutting-edge research to advance the theory and practice of image processing and enhancement.

- Software Engineer (with Image Processing focus): Many software development roles, especially in areas like mobile app development, medical imaging software, or multimedia applications, require expertise in integrating and optimizing image enhancement features.

- Data Scientist (with Vision expertise): In roles that involve analyzing large datasets of images, skills in image processing and enhancement are valuable for preparing data and extracting features.

- Photo Editor/Digital Imaging Specialist: These roles are more focused on the practical application of existing tools (like Photoshop or GIMP) to enhance and retouch images, often in creative industries like photography, media, or marketing.

The specific responsibilities can range from algorithm development and software engineering to research and application-specific problem-solving.

Key Skills Sought by Employers

Employers in the field of image enhancement typically look for a combination of technical and soft skills:

- Programming Proficiency: Strong skills in languages commonly used for image processing, such as Python (with libraries like OpenCV, scikit-image, TensorFlow, and PyTorch) and C++, are often essential. Experience with MATLAB is also valued in some research and engineering environments.

- Algorithm Development: The ability to understand, design, and implement image processing and enhancement algorithms (e.g., filters, transforms, segmentation, feature extraction).

- Mathematics: A solid understanding of linear algebra, calculus, probability, and statistics is crucial for many roles, especially those involving algorithm development or research.

- Machine Learning/Deep Learning: Expertise in machine learning concepts and experience with deep learning frameworks are increasingly in demand, particularly for advanced enhancement tasks.

- Specific Tool Knowledge: Familiarity with image editing software (Photoshop, GIMP), image processing libraries, and version control systems (like Git) can be important.

- Domain Knowledge: For roles in specific industries (e.g., medical imaging, remote sensing, forensics), understanding the unique characteristics and challenges of images in that domain is a significant asset.

- Problem-Solving and Analytical Skills: The ability to analyze complex problems, devise innovative solutions, and critically evaluate results.

- Communication Skills: The ability to explain technical concepts clearly, both verbally and in writing, and to collaborate effectively with team members.

Continuously updating these skills is important, as the field is dynamic and new techniques are constantly emerging.

Potential Career Progression

Career progression in image enhancement can follow various paths, depending on individual interests, skills, and the type of organization. A typical trajectory might look like this:

- Entry-Level Positions: Graduates might start as Junior Image Processing Engineers, Software Engineers with an imaging focus, or Research Assistants. These roles often involve implementing and testing existing algorithms, contributing to software development projects, or assisting senior researchers.

- Mid-Level Positions: With experience, individuals can advance to roles like Image Processing Engineer, Computer Vision Engineer, or Data Scientist. Responsibilities may include leading smaller projects, designing new algorithms or system components, and mentoring junior team members.

- Senior/Lead Roles: Senior Engineers, Lead Scientists, or Principal Investigators take on more responsibility for technical direction, project management, architectural design, and innovation. They may lead teams, define research agendas, or be responsible for key product features.

- Management/Specialist Tracks: From senior roles, individuals might move into management positions (e.g., Engineering Manager, Research Director) or choose to deepen their technical expertise as a distinguished engineer, staff scientist, or subject matter expert.

- Entrepreneurship: Some experienced professionals may choose to start their own companies, developing specialized image enhancement products or services.

Continuous learning, building a strong portfolio, networking, and potentially pursuing advanced degrees or certifications can all contribute to career advancement. The Career Development resources on OpenCourser can provide further guidance.

Opportunities for Internships, Co-ops, and Research Assistantships

For students and recent graduates, internships, co-operative education (co-op) programs, and research assistantships are excellent ways to gain practical experience, apply academic knowledge, and make connections in the field of image enhancement.

- Internships: Many tech companies, research labs, and even government agencies offer internships focused on image processing, computer vision, or machine learning. These provide hands-on experience with real-world projects and tools.

- Co-op Programs: Co-op programs typically involve multiple work terms alternating with academic study, providing more extensive industry experience than a single internship.

- Research Assistantships: Universities often employ undergraduate and graduate students as research assistants in labs working on image enhancement and related topics. This is a great way to get involved in cutting-edge research, work closely with faculty, and potentially co-author publications.

These opportunities not only enhance a resume but also help individuals explore different career paths within the field and develop valuable professional skills. Actively seeking out these experiences during one's education can significantly improve job prospects upon graduation.

Industries with High Demand

The demand for image enhancement skills is widespread and growing across various industries. Some of the sectors with particularly high demand include:

- Technology (Software and Hardware): Companies developing operating systems, mobile devices, social media platforms, search engines, and camera technology all employ image enhancement experts.

- Healthcare and Medical Devices: The medical imaging industry (MRI, CT, X-ray, ultrasound manufacturers) and companies developing healthcare AI solutions heavily rely on image enhancement for diagnostics and analysis.

- Automotive: The development of Advanced Driver-Assistance Systems (ADAS) and autonomous vehicles requires sophisticated image processing and computer vision for tasks like lane detection, object recognition, and pedestrian detection, often in challenging visual conditions that necessitate enhancement.

- Aerospace and Defense: Applications in satellite imaging, reconnaissance, target tracking, and remote sensing drive demand in these sectors.

- Security and Surveillance: Companies providing security systems, video analytics, and forensic tools need expertise in enhancing often low-quality imagery.

- Entertainment and Media: The film, television, and gaming industries use image enhancement for visual effects, post-production, and content creation.

- E-commerce and Retail: Enhancing product photography for online stores is crucial for attracting customers.

- Industrial Automation and Robotics: Manufacturing and logistics companies use machine vision systems (which often include image enhancement) for quality control, inspection, and robot guidance.

As AI and imaging technologies continue to advance, the range of industries seeking these skills is likely to expand further.

Ethical Considerations and Challenges

While image enhancement offers powerful capabilities for improving and analyzing visual information, it also brings a host of ethical considerations and practical challenges. The ability to alter images, sometimes imperceptibly, raises questions about authenticity, bias, and potential misuse. Furthermore, the subjective nature of "enhancement" and the limitations of current technologies present ongoing hurdles for researchers and practitioners.

Potential for Misuse (e.g., Creating Misleading Images, Deepfakes)

One of the most significant ethical concerns is the potential for image enhancement techniques to be used to create misleading or outright false images. While basic photo manipulation has existed for a long time, advanced techniques, particularly AI-based methods such as Generative Adversarial Networks (GANs), can generate highly realistic alterations or entirely fabricated images (often called "deepfakes").

This capability can be misused in various ways:

- Disinformation and Propaganda: Altered images can be used to spread false narratives, manipulate public opinion, or damage reputations.

- Fraud: Forged documents or manipulated evidence can be created for financial gain or to obstruct justice.

- Non-consensual Pornography: Deepfake technology has been infamously used to create explicit images of individuals without their consent.

- Erosion of Trust: The proliferation of manipulated images can lead to a general distrust of visual media, making it harder to discern truth from falsehood.

Addressing these misuses requires a multi-faceted approach, including developing technologies for detecting manipulated images, promoting media literacy, and establishing legal and ethical guidelines for the creation and dissemination of altered visual content.

Algorithmic Bias in Enhancement Techniques

Algorithmic bias is a serious concern in many AI applications, and image enhancement is no exception. Bias can creep into enhancement algorithms in several ways:

- Biased Training Data: If the datasets used to train deep learning models for enhancement are not representative of the diversity of human appearance (e.g., skewed towards certain skin tones, genders, or age groups), the resulting models may perform poorly or produce undesirable artifacts for underrepresented groups. For example, an enhancement algorithm trained primarily on images of light-skinned individuals might not work as well for darker skin tones, or could even introduce color distortions.

- Flawed Algorithmic Design: The choices made by developers when designing algorithms, such as the features they choose to prioritize or the assumptions embedded in their models, can inadvertently lead to biased outcomes.

The consequences of algorithmic bias in image enhancement range from aesthetically unpleasing results for certain demographics to more serious harms. In medical imaging, enhancement may perform differently across patient populations; in security, facial recognition systems that rely on enhanced images may be less accurate for some groups. Ensuring fairness and equity in image enhancement technologies requires careful attention to data collection, model design, and rigorous testing across diverse populations.
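
One simple way to make "testing across diverse populations" concrete is to compute a quality score separately for each group and compare the averages. The sketch below is only an illustration of that idea: the group labels, the scores, and the helper name `per_group_scores` are all hypothetical, and a real audit would need a carefully chosen metric and a representative test set.

```python
from collections import defaultdict
from statistics import mean


def per_group_scores(records):
    """Average a quality score per group and report the largest gap.

    `records` is an iterable of (group_label, score) pairs. Both the grouping
    and the score (for example, PSNR in dB) are placeholders for illustration.
    """
    by_group = defaultdict(list)
    for group, score in records:
        by_group[group].append(score)
    if not by_group:
        raise ValueError("no records to evaluate")
    averages = {group: mean(scores) for group, scores in by_group.items()}
    # A large gap between the best and worst group suggests uneven performance.
    gap = max(averages.values()) - min(averages.values())
    return averages, gap


# Made-up scores, for illustration only.
records = [
    ("group_a", 32.0),
    ("group_a", 34.0),
    ("group_b", 27.0),
    ("group_b", 29.0),
]
averages, gap = per_group_scores(records)
print(averages, gap)
```

A gap on its own does not prove bias, but a consistently lower score for one group is a signal to look more closely at the training data and the model.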

Challenge of Objectively Evaluating Enhancement Quality

A fundamental challenge in image enhancement is that the notion of "better" quality is often subjective and context-dependent. Objective metrics such as Peak Signal-to-Noise Ratio (PSNR) and the Structural Similarity Index (SSIM) exist, but they compare the result against a reference ("ground truth") image, which is unavailable in many real-world scenarios. Even when a reference exists, these metrics do not always correlate well with human perception of visual quality, especially for enhancements that aim for aesthetic improvement rather than strict fidelity.
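
To show what a metric like PSNR actually measures, here is a minimal sketch using NumPy. It assumes 8-bit images (maximum value 255) and uses a randomly generated toy image with added Gaussian noise; the function name `psnr` and the test data are illustrative, not taken from any particular library.

```python
import numpy as np


def psnr(reference, enhanced, max_value=255.0):
    """Peak Signal-to-Noise Ratio in decibels between two same-shaped images."""
    reference = np.asarray(reference, dtype=np.float64)
    enhanced = np.asarray(enhanced, dtype=np.float64)
    if reference.shape != enhanced.shape:
        raise ValueError("images must have the same shape")
    # Mean squared error over all pixels.
    mse = np.mean((reference - enhanced) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


# Toy data: a random 8-bit "image" and a noisy copy of it.
rng = np.random.default_rng(0)
reference = rng.integers(0, 256, size=(64, 64)).astype(np.uint8)
noisy = np.clip(reference + rng.normal(0, 10, reference.shape), 0, 255)
print(f"PSNR of noisy copy: {psnr(reference, noisy):.2f} dB")
```

Because PSNR depends only on pixel-wise error, two results with the same score can look very different to a human viewer, which is one reason it is usually reported alongside other measures.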

What one person considers a good enhancement, another might find over-processed or unnatural. The "best" enhancement often depends on the specific application and the intended viewer. For example, an enhancement that highlights subtle details for a medical diagnosis might look overly sharpened or artificial for a casual photograph.

This subjectivity makes it difficult to create universal benchmarks for evaluating enhancement algorithms. Researchers often rely on subjective evaluations by human observers, but these can be time-consuming, expensive, and prone to their own biases. Developing more robust and perceptually relevant objective quality assessment methods for enhanced images remains an active area of research.

Limitations of Current Techniques and Areas Needing Improvement

Despite significant progress, current image enhancement techniques still have limitations and areas where improvement is needed:

- Handling Extreme Degradations: Modern methods can handle moderate levels of noise or blur, but severely degraded images (e.g., extreme low light, severe motion blur, very high noise) remain difficult to enhance without introducing significant artifacts or losing detail.

- Artifact Generation: Advanced techniques, particularly deep learning models, can introduce subtle (or not-so-subtle) artifacts that were not present in the original image. This is a particular concern with GANs, which may "hallucinate" plausible but incorrect details.

- Generalization: Models trained on specific types of images or degradations may not generalize well to new, unseen data. An algorithm that works well for enhancing natural outdoor scenes might perform poorly on medical images or satellite imagery.

- Computational Cost: Many state-of-the-art techniques, especially deep learning approaches, are computationally expensive, requiring significant processing power (often GPUs) and time. This can limit their applicability in resource-constrained environments or real-time applications.

- Interpretability and Controllability: Many deep learning models operate as "black boxes," making it difficult to understand why they produce a particular output or to control the enhancement process in a fine-grained way.

Ongoing research aims to address these limitations by developing more robust, efficient, and interpretable enhancement algorithms.

Environmental Impact of Large-Scale Computation

Training large-scale deep learning models, which are increasingly used for advanced image enhancement, requires substantial computational resources. This consumes significant amounts of electrical energy, which contributes to carbon emissions, especially when the energy does not come from renewable sources. Data centers housing powerful GPUs for training these models have a considerable energy footprint.
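
A rough back-of-the-envelope estimate can make this footprint more tangible. The sketch below multiplies GPU power draw by training time, scales it by a data center overhead factor (power usage effectiveness, or PUE), and converts the result to emissions using a grid carbon intensity. Every number in the example call is a made-up placeholder, not a measurement, and the function name `training_footprint` is hypothetical.

```python
def training_footprint(num_gpus, gpu_power_watts, hours, pue, kg_co2_per_kwh):
    """Return (energy in kWh, emissions in kg CO2e) for a training run.

    All inputs are assumptions supplied by the caller; real values vary
    widely by hardware, data center, and electricity grid.
    """
    # Energy drawn by the GPUs themselves, converted from Wh to kWh.
    it_energy_kwh = num_gpus * gpu_power_watts * hours / 1000.0
    # Scale by PUE to account for cooling and other facility overhead.
    facility_energy_kwh = it_energy_kwh * pue
    # Convert energy to emissions using the grid's carbon intensity.
    emissions_kg = facility_energy_kwh * kg_co2_per_kwh
    return facility_energy_kwh, emissions_kg


# Placeholder inputs for illustration only.
energy, emissions = training_footprint(
    num_gpus=8,
    gpu_power_watts=300,
    hours=100,
    pue=1.5,
    kg_co2_per_kwh=0.4,
)
print(f"{energy:.0f} kWh, {emissions:.0f} kg CO2e")
```

The estimate ignores CPUs, storage, networking, and repeated experiments, so it is better treated as a lower bound than as an accurate accounting.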