Approximation Algorithms

Approximation Algorithms: Finding Good Enough Solutions When Perfection is Too Costly

Approximation algorithms are a fascinating and vital area within computer science and mathematics. At a high level, these are algorithms designed to find solutions to optimization problems that are "good enough," even if they aren't perfectly optimal. This approach becomes crucial when finding the absolute best solution would take an impractical amount of time or computational resources, a common scenario for a class of problems known as NP-hard problems. Imagine trying to plan the shortest possible route for a delivery driver visiting hundreds of locations: finding the single best route might take a supercomputer weeks, but an approximation algorithm could find a route that's very close to optimal in just minutes.

The world of approximation algorithms offers intellectually stimulating challenges and the opportunity to make a real-world impact. Developing these algorithms often involves clever problem-solving and a deep understanding of mathematical concepts. Furthermore, these algorithms are the engines behind solutions to critical practical problems, from designing efficient communication networks and scheduling complex manufacturing processes to optimizing resource allocation in logistics and even refining machine learning models. The ability to devise an algorithm that provides a near-optimal solution quickly can translate into significant savings in time, money, and resources for businesses and organizations.

For those intrigued by computational problem-solving and its practical applications, exploring approximation algorithms can be a rewarding journey. OpenCourser offers a variety of resources, including online courses and books, to help you build a solid foundation. You can easily browse through thousands of courses in Computer Science to find options that suit your learning style and goals. Don't forget to use the "Save to list" feature to keep track of interesting courses and refer back to them later using the manage list page.

What Exactly Are Approximation Algorithms?

To truly appreciate approximation algorithms, it's helpful to understand what sets them apart from their "exact" counterparts and why they are so essential in many real-world situations.

Definition and Purpose of Approximation Algorithms

An approximation algorithm is a method for solving optimization problems that aims to find a solution close to the optimal one, within a provable bound, in an amount of time that is considered efficient (typically polynomial time). The core purpose is to tackle problems where finding the exact best solution is computationally infeasible, often because the problem is NP-hard. Instead of getting stuck trying to find the perfect answer, which might take an impractical amount of time, an approximation algorithm provides a workable, near-optimal solution quickly.

Think of it like packing a backpack of limited capacity with items of different sizes and values so that the total value carried is as large as possible (the knapsack problem, a classic example). A brute-force exact algorithm would try every combination of items, which becomes incredibly time-consuming as the number of items grows. An approximation algorithm, on the other hand, might use a simpler rule, like "pack items in order of value per unit of size, skipping any that no longer fit." On its own this rule can do poorly on some inputs, but comparing its result with the single most valuable item and keeping the better of the two is guaranteed to reach at least half of the optimal value, and it runs much faster.

The "provable bound" is a key feature. This means that for a given approximation algorithm, we can mathematically guarantee how far its solution will be from the optimal one, at worst. This guarantee is often expressed as an approximation ratio or performance guarantee.
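The knapsack analogy can be made concrete with a short sketch. The code below is one greedy variant that carries a provable bound: it packs items by value per unit of weight and then compares the result with the best single item, which guarantees at least half of the optimal value. The function name `greedy_knapsack` and the `(value, weight)` input format are assumptions made for illustration.

```python
from typing import List, Tuple


def greedy_knapsack(items: List[Tuple[int, int]], capacity: int) -> Tuple[int, List[int]]:
    """items are (value, weight) pairs with positive weights.

    Returns (total value, chosen indices). The value is at least half
    of the optimal 0/1 knapsack value.
    """
    # Items that do not fit on their own can never be packed.
    fitting = [i for i, (_, w) in enumerate(items) if w <= capacity]

    # Greedy pass: highest value per unit of weight first.
    order = sorted(fitting, key=lambda i: items[i][0] / items[i][1], reverse=True)
    chosen, total_value, remaining = [], 0, capacity
    for i in order:
        value, weight = items[i]
        if weight <= remaining:
            chosen.append(i)
            total_value += value
            remaining -= weight

    # Safeguard: the single most valuable item may beat the greedy pack.
    if fitting:
        best = max(fitting, key=lambda i: items[i][0])
        if items[best][0] > total_value:
            return items[best][0], [best]
    return total_value, chosen


# Optimal value here is 220 (the second and third items); greedy returns 160.
value, picked = greedy_knapsack([(60, 10), (100, 20), (120, 30)], capacity=50)
```

In this example the greedy answer is not optimal, but it stays within the promised factor of 2, which is exactly what a worst-case guarantee means.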

Key Differences from Exact Algorithms

The primary distinction between approximation algorithms and exact algorithms lies in their goals and guarantees. Exact algorithms are designed to find the absolute optimal solution to a problem. They leave no stone unturned and, if given enough time, will produce the best possible answer. However, for many important problems (NP-hard problems), "enough time" can mean an astronomically large amount, rendering exact algorithms impractical for all but the smallest instances.

Approximation algorithms, in contrast, trade a bit of optimality for efficiency. They don't promise the perfect solution, but they do promise a solution that is within a certain factor of the optimal, and they promise to find it in a reasonable (polynomial) amount of time. This makes them invaluable for large-scale, real-world applications where a good solution now is better than a perfect solution too late (or never).

So, while an exact algorithm for the traveling salesman problem would aim for the single shortest route, an approximation algorithm for the metric version of the problem (where distances obey the triangle inequality) would aim for a route that is, for example, guaranteed to be no more than 1.5 times the length of the shortest route, but findable in a fraction of the time.

Real-World Scenarios Requiring Approximation Methods

The need for approximation algorithms is widespread across numerous industries and scientific disciplines. Any situation involving complex optimization where finding the perfect solution is too slow or costly is a candidate for approximation techniques.

Consider network design: telecommunication companies need to lay cables to connect many points with minimal cost. This is a variation of the Steiner tree problem or facility location problems, which are NP-hard. Approximation algorithms help design networks that are cost-effective and built in a timely manner.

In scheduling and resource allocation, think about a busy factory trying to schedule jobs on machines to minimize completion time, or an airline assigning crews to flights. These are complex optimization tasks where finding the absolute best schedule might be impossible within practical timeframes. Approximation algorithms provide good, workable schedules quickly. The bin packing problem, where items of different sizes must be packed into a minimum number of bins, is another classic example with applications in logistics and resource management.

Even in machine learning, approximation can play a role. For instance, some complex models might be approximated by simpler ones to reduce computation time during inference or to make them deployable on resource-constrained devices. Additionally, training some machine learning models involves solving large optimization problems where approximation techniques can speed up the process.

Other examples include the vertex cover problem (finding a minimum set of vertices in a graph that "covers" all edges), which has applications in areas like circuit design and network security, and the set cover problem (finding a minimum number of sets whose union covers all elements in a universe), relevant to problems like facility location and database query optimization.

These are just a few examples, but they illustrate the broad applicability and critical importance of approximation algorithms in solving real-world computational challenges efficiently.

Key Concepts in Approximation Algorithms

To navigate the world of approximation algorithms effectively, it's essential to grasp some of the core theoretical concepts that underpin their design and analysis. These concepts provide the language and mathematical tools for understanding how well these algorithms perform and how they are categorized.

Performance Ratios and Approximation Factors

The cornerstone of evaluating an approximation algorithm is its performance ratio, also known as the approximation factor or approximation ratio. This ratio quantifies the quality of the solution returned by the approximation algorithm compared to the optimal solution. For a minimization problem (where the goal is to find the smallest possible value, like the shortest path), the performance ratio ρ (rho) is defined as C / C*, where C is the cost of the solution found by the approximation algorithm, and C* is the cost of the optimal solution. Since C will always be greater than or equal to C*, ρ will always be greater than or equal to 1. A ratio of 1 means the algorithm found the optimal solution.

For a maximization problem (where the goal is to find the largest possible value, like the maximum profit), the ratio is often defined as C* / C, where C is the value of the solution found by the approximation algorithm and C* is the optimal value. Here, 0 < C ≤ C*, so this ratio is also greater than or equal to 1. Alternatively, it can be expressed as C / C*, which would be a value between 0 and 1, with 1 being optimal. The key is to be consistent in interpretation. An algorithm that guarantees a performance ratio of ρ is called a ρ-approximation algorithm.

For example, a 2-approximation algorithm for a minimization problem guarantees that the solution it finds will be at most twice the cost of the optimal solution. This guarantee is a worst-case bound; the algorithm might perform much better on average or on specific instances, but it will never be worse than that factor of 2. Understanding these ratios is crucial for choosing the right algorithm when faced with a trade-off between solution quality and computation time.
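To see a performance ratio measured on a concrete instance, the sketch below runs the classic matching-based 2-approximation for (unweighted) vertex cover and compares it with a brute-force optimum on a tiny graph. The function names and the star-graph example are illustrative choices; the brute-force search is only practical for very small inputs.

```python
from itertools import combinations


def matching_vertex_cover(edges):
    """2-approximation: for every edge not yet covered, take both endpoints."""
    cover = set()
    for u, v in edges:
        if u not in cover and v not in cover:
            cover.update((u, v))
    return cover


def optimal_vertex_cover_size(edges):
    """Exact optimum by trying all subsets in increasing size (exponential time)."""
    vertices = sorted({v for e in edges for v in e})
    for k in range(len(vertices) + 1):
        for subset in combinations(vertices, k):
            chosen = set(subset)
            if all(u in chosen or v in chosen for u, v in edges):
                return k


# A star graph: the centre alone covers every edge, so C* = 1.
edges = [(0, 1), (0, 2), (0, 3), (0, 4)]
cover = matching_vertex_cover(edges)                    # {0, 1}, so C = 2
ratio = len(cover) / optimal_vertex_cover_size(edges)   # C / C* = 2.0
```

The star graph is a worst case for this algorithm: the ratio hits the bound of 2 exactly. On many other graphs the same algorithm does better, which illustrates that ρ is a ceiling rather than a typical value.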

Classes of Approximation Algorithms (e.g., PTAS, FPTAS)

Approximation algorithms can be further classified based on the nature of their approximation ratios. Two important classes are Polynomial Time Approximation Schemes (PTAS) and Fully Polynomial Time Approximation Schemes (FPTAS).

A Polynomial Time Approximation Scheme (PTAS) is an algorithm that can achieve an approximation ratio of (1 + ε) for any ε > 0 for minimization problems (or (1 - ε) for maximization problems). The crucial part is that for any fixed ε, the algorithm runs in polynomial time with respect to the input size 'n'. However, the running time might depend exponentially on 1/ε (e.g., n^(1/ε)). This means that as you demand better and better approximations (smaller ε), the running time can increase dramatically, though it remains polynomial in 'n' for that fixed, desired level of accuracy.

A Fully Polynomial Time Approximation Scheme (FPTAS) is even more powerful. Like a PTAS, it can achieve a (1 + ε) approximation. However, an FPTAS requires that the algorithm's running time is polynomial in both the input size 'n' and 1/ε. This is a much stronger guarantee, meaning that even as you demand very high precision (very small ε), the algorithm remains efficient. Problems that admit an FPTAS are considered to be "easier" to approximate to a high degree of accuracy compared to those that only have a PTAS or a constant-factor approximation.

Not all NP-hard optimization problems admit a PTAS or FPTAS. The existence of such schemes depends on the specific structure of the problem.
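The 0/1 knapsack problem is a standard example of a problem that does admit an FPTAS. The sketch below follows the usual value-scaling idea: round item values down to multiples of ε·v_max/n, then run an exact dynamic program over the (now small) scaled values. It assumes positive integer values and weights; the name `knapsack_fptas` and the input format are illustrative, and storing the chosen indices in each table entry costs an extra factor of n that a more careful implementation would avoid.

```python
from fractions import Fraction


def knapsack_fptas(items, capacity, eps):
    """items: list of (value, weight) pairs with positive integer values.

    Returns (total value, chosen indices); the value is at least
    (1 - eps) times the optimal value.
    """
    # Items that cannot fit alone can never be packed.
    usable = [(i, v, w) for i, (v, w) in enumerate(items) if w <= capacity]
    if not usable:
        return 0, []
    n = len(usable)
    vmax = max(v for _, v, _ in usable)

    # Scaling keeps the number of distinct scaled totals at O(n^2 / eps).
    scale = Fraction(eps) * vmax / n

    # best[s] = (min weight, indices) over subsets whose scaled value is exactly s.
    best = {0: (0, [])}
    for i, v, w in usable:
        scaled_value = v // scale  # floor(v / scale), an int
        # Iterate over a snapshot so each item is used at most once.
        for s, (weight, chosen) in list(best.items()):
            ns, nw = s + scaled_value, weight + w
            if nw <= capacity and (ns not in best or nw < best[ns][0]):
                best[ns] = (nw, chosen + [i])

    _, chosen = best[max(best)]
    return sum(items[i][0] for i in chosen), chosen


value, chosen = knapsack_fptas([(60, 10), (100, 20), (120, 30)], capacity=50, eps=0.1)
```

The running time grows polynomially in both n and 1/ε, which is what separates an FPTAS from a PTAS: halving ε roughly doubles the table size instead of changing the exponent on n.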

Reduction Techniques and Hardness of Approximation

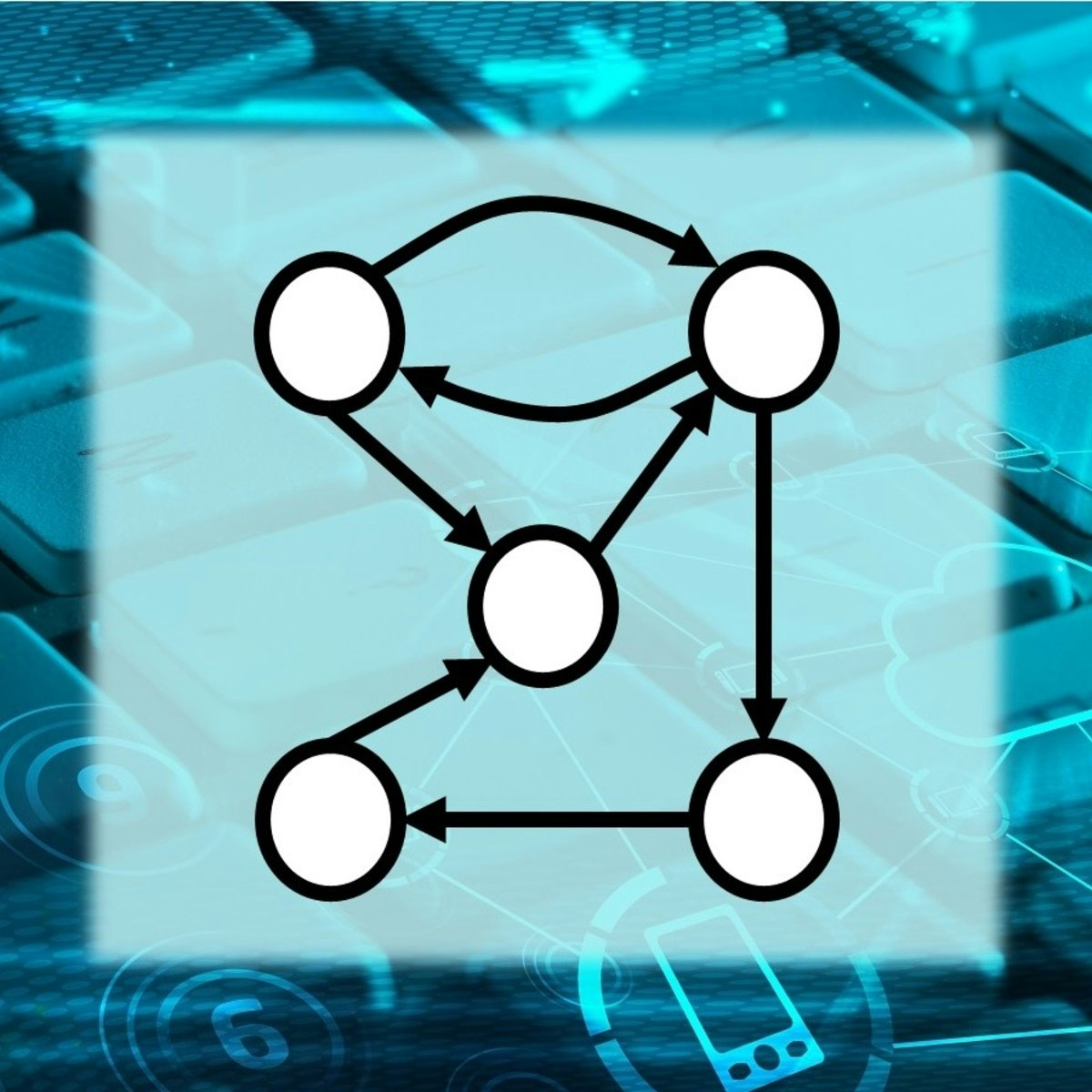

Reduction is a fundamental concept in complexity theory. If problem A can be "reduced" to problem B, it means that an algorithm for solving B can be used (with some polynomial-time transformations) to solve A. This is often used to prove that a new problem is NP-hard by showing that a known NP-hard problem can be reduced to it.

In the context of approximation algorithms, reductions can also be used to transfer approximability results. If problem A can be reduced to problem B in an "approximation-preserving" way and B has a ρ-approximation, then A is also approximable with a related factor. Read in the other direction, any proven limit on how well A can be approximated carries over to B.

Conversely, there's the concept of hardness of approximation (also known as inapproximability). This field aims to prove limits on how well certain NP-hard problems can be approximated in polynomial time, assuming P ≠ NP. For some problems, it has been proven that achieving an approximation ratio better than a certain threshold is itself NP-hard. This means that unless P=NP (which is widely believed to be false), no polynomial-time algorithm can guarantee a better approximation.

The PCP theorem (Probabilistically Checkable Proofs theorem) is a landmark result in this area, providing a powerful tool for proving hardness of approximation results for many problems. For instance, it has been used to show that for some problems, the approximation ratios achieved by well-known simple algorithms are essentially the best possible unless P=NP. Understanding hardness of approximation is crucial because it tells us when to stop searching for better approximation algorithms and perhaps focus on heuristics or exact algorithms for smaller instances if higher precision is absolutely required.

Historical Development

The field of approximation algorithms didn't emerge in a vacuum. Its development is intertwined with the broader history of computer science, particularly the advancements in complexity theory and the persistent efforts to tackle computationally hard problems. Understanding this evolution provides context to the current state of the art.

Milestones in the 20th Century (e.g., Traveling Salesman Problem)

The seeds of approximation algorithms were sown in the mid-20th century as researchers began to grapple with problems that resisted efficient exact solutions. While the formal theory of NP-completeness was yet to be fully developed, the intractability of certain problems was becoming apparent. Early work in operations research and graph theory laid some of the groundwork.

A significant milestone was the work by Graham in 1966, who analyzed the performance of a simple greedy algorithm for a scheduling problem, providing one of the first worst-case performance ratio analyses. This was a precursor to the formal study of approximation ratios.

The Traveling Salesman Problem (TSP), a classic NP-hard problem, has been a driving force in the development of approximation algorithms. The challenge of finding the shortest tour visiting a set of cities and returning to the start has spurred numerous algorithmic innovations. In the 1970s, after the theory of NP-completeness solidified the understanding of such problems' difficulty, the focus on finding good approximate solutions intensified. Christofides' algorithm, developed in 1976, provided a 1.5-approximation for the TSP under the triangle inequality (metric TSP), a landmark result that remains influential. Around the same time, similar independent work was conducted by Serdyukov in the USSR.

Other problems like bin packing and set cover also saw early approximation algorithms developed during this period, often with analyses of their worst-case behavior. The book "Computers and Intractability: A Guide to the Theory of NP-Completeness" by Garey and Johnson in 1979 became a cornerstone, cataloging many NP-complete problems and further motivating the search for approximation techniques.

Impact of Complexity Theory Advancements

The formalization of NP-completeness by Cook (1971) and Karp (1972) was a watershed moment. It provided a rigorous framework for classifying problems as "hard" and fueled the understanding that for these NP-hard problems, seeking exact solutions in polynomial time was likely futile (assuming P ≠ NP). This realization directly spurred the growth of approximation algorithms as a legitimate and necessary field of study. If you can't solve it perfectly and quickly, how well can you solve it approximately and quickly?

Further advancements in complexity theory, particularly in the 1990s with the development of the PCP theorem (Probabilistically Checkable Proofs), revolutionized the understanding of the limits of approximation. The PCP theorem and related results allowed researchers to prove "hardness of approximation" or "inapproximability" results. These results establish lower bounds on the approximation ratios achievable in polynomial time for certain problems, unless P=NP. This meant that for some problems, researchers could identify thresholds beyond which further improvement in the approximation guarantee was computationally as hard as finding an exact solution.

This interplay between finding better approximation algorithms and proving limits on approximability has been a defining characteristic of the field. It has led to a much deeper understanding of the fine-grained complexity of NP-hard problems.

Key Contributors and Landmark Papers

Many brilliant minds have contributed to the field of approximation algorithms. Beyond those already mentioned (Graham, Christofides, Cook, Karp, Garey, Johnson), numerous researchers have developed foundational techniques and solved long-standing open problems.

The development of techniques like linear programming relaxations, randomized rounding, and the primal-dual method involved contributions from a wide array of scientists over several decades. For instance, the work of Goemans and Williamson in the 1990s on using semidefinite programming for the Max-Cut problem was a significant breakthrough, achieving an approximation ratio that was notably better than previously known methods and connecting approximation algorithms to new mathematical tools.

Several influential textbooks have also shaped the field. "Approximation Algorithms for NP-Hard Problems" edited by Dorit S. Hochbaum (1997), "Approximation Algorithms" by Vijay V. Vazirani (2001), and "The Design of Approximation Algorithms" by David P. Williamson and David B. Shmoys (2011) are considered seminal texts that have educated generations of students and researchers.

The history of approximation algorithms is a story of persistent inquiry, clever insights, and the power of mathematical rigor in tackling some of the most challenging computational problems. It's a field that continues to evolve as new problems emerge and new algorithmic techniques are discovered.

Algorithm Design Techniques

Designing effective approximation algorithms requires a toolkit of established techniques. These methods provide structured approaches to crafting algorithms that are both efficient and offer provable guarantees on solution quality. While the specifics vary by problem, several core strategies are frequently employed.

Greedy Algorithms and Local Search

Greedy algorithms are among the simplest and most intuitive design techniques. A greedy algorithm builds a solution step-by-step, at each point making the choice that seems best at that moment (the "locally optimal" choice) without regard for future consequences. While this approach doesn't always yield a globally optimal solution (and sometimes can be very far off), for many problems, a carefully designed greedy strategy can provide a good approximation ratio. Greedy rules are occasionally exact: Kruskal's and Prim's algorithms for Minimum Spanning Trees and the value-per-weight rule for the fractional knapsack problem all find the true optimum. For NP-hard problems such as set cover, the greedy algorithm instead gives an approximation, in that case within a factor of roughly ln n of optimal for a universe of n elements. The challenge often lies in proving the approximation guarantee of a greedy choice.
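As an illustration of the greedy paradigm, here is a minimal sketch of the standard greedy rule for set cover: repeatedly pick the subset that covers the most still-uncovered elements. The function name and the representation of subsets as Python sets are assumptions for this example.

```python
def greedy_set_cover(universe, subsets):
    """Return indices of chosen subsets whose union is the universe.

    subsets is a list of Python sets. Each step picks the subset that
    covers the largest number of still-uncovered elements.
    """
    uncovered = set(universe)
    chosen = []
    while uncovered:
        # Locally optimal choice: maximise newly covered elements.
        best = max(range(len(subsets)), key=lambda i: len(uncovered & subsets[i]))
        if not uncovered & subsets[best]:
            raise ValueError("the subsets do not cover the universe")
        chosen.append(best)
        uncovered -= subsets[best]
    return chosen


universe = {1, 2, 3, 4, 5, 6}
subsets = [{1, 2, 3, 4}, {1, 2, 5}, {3, 4, 6}, {5, 6}]
picked = greedy_set_cover(universe, subsets)  # [0, 3]
```

The rule never looks ahead, yet the number of subsets it picks is known to be within a logarithmic factor of the minimum.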

Local search algorithms take a different approach. They start with an initial feasible solution and then iteratively try to improve it by making small, "local" changes. For example, in the Traveling Salesman Problem, a local change might involve swapping the order of two cities in the tour. If a local change leads to a better solution, the algorithm adopts it and continues searching from the new solution. This process repeats until no local change can improve the current solution, at which point a "local optimum" is reached. While a local optimum isn't necessarily the global optimum, local search can be quite effective. The quality of the approximation often depends on how the "neighborhood" (the set of possible local changes) is defined and the strategy for exploring it. Analyzing the approximation ratio of local search algorithms can be complex, often involving arguments about the structure of local optima.
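Local search is easiest to analyse on a problem simpler than TSP, so the sketch below uses Max-Cut as a stand-in (an assumption made for illustration, not an example from the text above). A "local change" moves one vertex to the other side of the cut. At a local optimum every vertex has at least half of its edges crossing the cut, so the cut contains at least half of all edges and is therefore at least half of the optimum. The code assumes a simple graph with no self-loops.

```python
def local_search_max_cut(n, edges):
    """Vertices are 0..n-1; edges is a list of (u, v) pairs without self-loops.

    Returns (side, cut_size) where side[v] is 0 or 1. At termination the
    cut contains at least half of all edges.
    """
    side = [0] * n  # initial feasible solution: everything on one side
    improved = True
    while improved:
        improved = False
        for v in range(n):
            incident = [(a, b) for a, b in edges if v in (a, b)]
            same = sum(1 for a, b in incident if side[a] == side[b])
            cross = len(incident) - same
            # Moving v swaps its "same" and "cross" edges, so move only if it helps.
            if same > cross:
                side[v] ^= 1
                improved = True
    cut_size = sum(1 for a, b in edges if side[a] != side[b])
    return side, cut_size


# Triangle 0-1-2 with a pendant vertex 3 attached to vertex 2.
edges = [(0, 1), (1, 2), (2, 0), (2, 3)]
side, cut_size = local_search_max_cut(4, edges)
```

Every accepted move increases the cut by at least one edge, so the loop stops after at most |E| moves. The argument about the structure of the local optimum is what turns this heuristic into an approximation algorithm.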

Linear Programming Relaxations

Many combinatorial optimization problems can be formulated as integer linear programs (ILPs), where the goal is to optimize a linear objective function subject to linear constraints, with the added condition that variables must be integers. Solving ILPs exactly is generally NP-hard.

The technique of linear programming (LP) relaxation involves dropping the integer requirement on the variables, allowing them to take on fractional values. The resulting LP can be solved optimally in polynomial time. The solution to the LP relaxation provides a bound on the optimal ILP solution (e.g., for a minimization problem, the LP solution value will be less than or equal to the ILP optimal value). The key step is then to "round" the fractional LP solution to a feasible integer solution for the original problem without losing too much in terms of solution quality. Various rounding techniques exist, and the success of this approach hinges on how well the rounding preserves the quality indicated by the LP solution. This method is powerful because it leverages the mature theory and efficient solvers for linear programming.
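A worked example may help. Weighted vertex cover, with nonnegative vertex weights $w_v$, is a common textbook illustration of relax-and-round; the notation below is chosen for this sketch.

```latex
% Integer program for weighted vertex cover on a graph G = (V, E)
\begin{align*}
\min\ & \sum_{v \in V} w_v x_v \\
\text{s.t.}\ & x_u + x_v \ge 1 && \forall (u, v) \in E, \\
& x_v \in \{0, 1\} && \forall v \in V.
\end{align*}

% LP relaxation: replace x_v \in \{0,1\} by 0 \le x_v \le 1.
% Let x^* be an optimal LP solution and round at threshold 1/2:
\[
S = \{\, v \in V : x^*_v \ge \tfrac{1}{2} \,\}.
\]

% S is a cover: each edge has x^*_u + x^*_v \ge 1, so at least one endpoint is \ge 1/2.
% Cost bound, using 1 \le 2 x^*_v for every v \in S:
\[
\sum_{v \in S} w_v \;\le\; 2 \sum_{v \in S} w_v x^*_v
\;\le\; 2 \sum_{v \in V} w_v x^*_v
\;=\; 2\,\mathrm{LP}^* \;\le\; 2\,\mathrm{OPT}.
\]
```

The last inequality is the bound the LP provides for a minimization problem, and the factor of 2 is the price paid for rounding.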

Randomized Rounding and Primal-Dual Methods

Randomized rounding is a technique often used in conjunction with LP relaxations. Instead of deterministically rounding a fractional variable to an integer, randomized rounding treats the fractional value as a probability. For example, if a variable x_i in the LP solution has a value of 0.7, it might be rounded to 1 with probability 0.7 and to 0 with probability 0.3. The properties of the resulting integer solution are then analyzed in expectation. This can often lead to algorithms with good approximation ratios, sometimes simpler or better than deterministic rounding schemes.
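The following sketch applies randomized rounding to set cover. It assumes a fractional solution `x` is already available from an LP solver (solving the LP is outside the scope of this example) and that it is fractionally feasible, meaning the `x` values of the subsets containing any given element sum to at least 1. The function name and the repeat-until-covered loop are illustrative choices.

```python
import random


def randomized_round_set_cover(universe, subsets, x, rng):
    """Round a fractional set cover solution x to an integral cover.

    x[i] in [0, 1] is the LP value of subsets[i]. In each round, subset i
    is picked independently with probability x[i]; rounds repeat until
    every element is covered.
    """
    chosen = set()
    uncovered = set(universe)
    while uncovered:
        for i, prob in enumerate(x):
            if rng.random() < prob:
                chosen.add(i)
                uncovered -= subsets[i]
    return sorted(chosen)


universe = {1, 2, 3}
subsets = [{1, 2}, {2, 3}, {1, 3}]
x = [0.5, 0.5, 0.5]  # fractionally feasible: every element gets total weight 1
cover = randomized_round_set_cover(universe, subsets, x, random.Random(0))
```

Each round costs the LP value in expectation and leaves any given element uncovered with probability at most 1/e, so the expected number of rounds is logarithmic in the number of elements. That is where the O(log n) approximation factor of this approach comes from.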

The primal-dual method is another sophisticated technique rooted in the theory of linear programming duality. Many optimization problems have a "primal" LP formulation and a corresponding "dual" LP formulation. For a minimization primal, the value of any feasible dual solution is a lower bound on the optimal primal value (weak duality), and when both LPs have optimal solutions their optimal values are equal (strong duality). The primal-dual method for approximation algorithms typically involves iteratively constructing a feasible integral primal solution and a feasible dual solution simultaneously, using the dual solution to guide the construction of the primal. The algorithm ensures that the cost of the primal solution is at most some factor α times the value of the dual solution. Because the dual value never exceeds the true optimum, the primal solution costs at most α times the optimum. This technique has been successfully applied to a variety of problems, particularly in network design and covering problems.
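A compact instance of the primal-dual idea is the well-known 2-approximation for weighted vertex cover, sketched below. Each edge has a dual variable that is raised until one of its endpoints becomes "tight" (its weight is fully paid for by the duals on its edges); tight vertices form the cover. The function name is illustrative, and the code assumes positive integer weights so that the tightness test can use exact equality.

```python
def primal_dual_vertex_cover(weights, edges):
    """weights: dict vertex -> positive integer weight; edges: list of (u, v).

    Returns (cover, y) where y maps each processed edge to its dual value.
    The cover's weight is at most twice the sum of the dual values, and
    hence at most twice the optimum.
    """
    slack = dict(weights)  # weight of v not yet paid for by its edges' duals
    y = {}
    cover = set()
    for u, v in edges:
        if u in cover or v in cover:
            continue  # edge already covered, its dual stays at 0
        # Raise this edge's dual until an endpoint becomes tight.
        raise_by = min(slack[u], slack[v])
        y[(u, v)] = raise_by
        slack[u] -= raise_by
        slack[v] -= raise_by
        if slack[u] == 0:
            cover.add(u)
        if slack[v] == 0:
            cover.add(v)
    return cover, y


# A path 0-1-2-3 with vertex weights 3, 1, 2, 4.
weights = {0: 3, 1: 1, 2: 2, 3: 4}
edges = [(0, 1), (1, 2), (2, 3)]
cover, y = primal_dual_vertex_cover(weights, edges)  # cover == {1, 2}
```

No LP is ever solved here: the dual values are built by hand and serve only as a certificate. Each dual value is charged to at most two cover vertices, which gives the factor of 2.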

These design techniques are not mutually exclusive and are often combined. The choice of technique depends heavily on the specific structure of the problem at hand. Mastering these methods is a key part of becoming proficient in designing approximation algorithms.

Applications in Industry

The theoretical power of approximation algorithms translates into significant practical value across a multitude of industries. Businesses and organizations continually face complex optimization challenges where finding the absolute best solution is either too slow or computationally prohibitive. In these scenarios, approximation algorithms provide the means to achieve near-optimal results efficiently, leading to cost savings, improved performance, and better resource utilization.

Network Design and Optimization

One of the most prominent application areas for approximation algorithms is in network design and optimization. This encompasses a wide range of problems, from designing physical networks like telecommunication systems, computer networks, and transportation routes, to optimizing flows and connectivity within existing networks. Problems such as the Steiner Tree Problem (finding the minimum cost tree connecting a set of required terminals), various facility location problems (deciding where to place facilities like warehouses or cell towers to serve clients optimally), and network routing problems often rely on approximation algorithms.

For instance, when a telecommunications company plans to lay fiber optic cables to connect multiple cities and is free to route cable through intermediate junction points, finding the absolute minimum amount of cable is an NP-hard problem (the Steiner tree problem). An approximation algorithm can design a network topology that is provably close to the minimum cost, enabling the company to make economically sound decisions in a practical timeframe. Similarly, in wireless network design, algorithms help determine the placement of base stations to maximize coverage while minimizing interference and cost, often using approximation techniques for problems like set cover or maximum coverage.

Scheduling and Resource Allocation

Scheduling and resource allocation problems are ubiquitous in manufacturing, logistics, computing, and project management. These problems involve assigning tasks to resources (e.g., jobs to machines, appointments to time slots, personnel to projects) in a way that optimizes some objective, such as minimizing completion time, maximizing throughput, or minimizing costs. Many scheduling problems are NP-hard, especially when dealing with multiple constraints, dependencies between tasks, and diverse resources.

Approximation algorithms are crucial for developing effective scheduling systems. For example, in a factory, an algorithm might schedule production runs on different machines to minimize the overall time it takes to complete all orders (makespan minimization). In cloud computing, algorithms allocate virtual machines and other resources to users in a way that balances load and ensures quality of service. The job shop scheduling problem is a well-known example where approximation is often necessary.
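Makespan minimization on identical machines has a particularly simple approximation: the greedy list-scheduling rule analysed by Graham, which assigns each job in turn to the machine that is currently least loaded. Its makespan is known to be at most (2 - 1/m) times the optimum on m machines. The sketch below is a minimal version; the function name and the list-of-durations input are assumptions for illustration.

```python
import heapq


def list_schedule(durations, machines):
    """Assign jobs, in the given order, to the currently least-loaded machine.

    Returns (makespan, assignment) where assignment[m] lists the job
    indices placed on machine m.
    """
    # Min-heap of (current load, machine index).
    loads = [(0, m) for m in range(machines)]
    heapq.heapify(loads)
    assignment = [[] for _ in range(machines)]
    for job, duration in enumerate(durations):
        load, m = heapq.heappop(loads)
        assignment[m].append(job)
        heapq.heappush(loads, (load + duration, m))
    makespan = max(load for load, _ in loads)
    return makespan, assignment


# Six jobs on three machines; the optimal makespan for this instance is 7.
makespan, assignment = list_schedule([2, 3, 4, 6, 2, 2], machines=3)  # makespan == 8
```

The rule needs no knowledge of future jobs, so it also works when jobs arrive one at a time, which is part of why it is attractive in practice.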

Airlines use sophisticated optimization techniques, often involving approximation, for crew scheduling, aircraft routing, and gate assignments. These algorithms must handle a vast number of variables and constraints to produce efficient and cost-effective operational plans.

Machine Learning Model Approximation

While not always the first area that comes to mind, approximation techniques also find applications in machine learning. Sometimes, very large and complex machine learning models, while accurate, may be too slow for real-time prediction or too resource-intensive for deployment on devices with limited computational power (like mobile phones or embedded systems). In such cases, model approximation or model compression techniques can be used. These techniques aim to create a smaller, faster model that mimics the behavior of the larger, more complex model, with only a small loss in accuracy. This can involve methods like pruning (removing less important connections in a neural network), quantization (using lower-precision numbers for weights), or knowledge distillation (training a smaller model to reproduce the outputs of a larger one).

Furthermore, the training process of some machine learning models itself involves solving large-scale optimization problems. While exact solutions to these optimization problems might be sought, sometimes approximation methods or stochastic gradient descent variants (which can be seen as a form of iterative approximation) are used to find good model parameters in a feasible amount of time, especially with massive datasets.

The ability of approximation algorithms to deliver high-quality solutions efficiently makes them indispensable tools in these and many other industrial domains, driving innovation and operational excellence.

Academic Pathways in Approximation Algorithms

For those inspired to delve deep into the world of approximation algorithms, a structured academic path can provide the necessary theoretical foundations and research skills. This journey typically involves a strong grounding in computer science and mathematics, often culminating in specialized graduate-level study.

Core Courses in Theoretical Computer Science

A solid understanding of approximation algorithms begins with foundational coursework in theoretical computer science. Undergraduate students interested in this area should focus on courses such as:

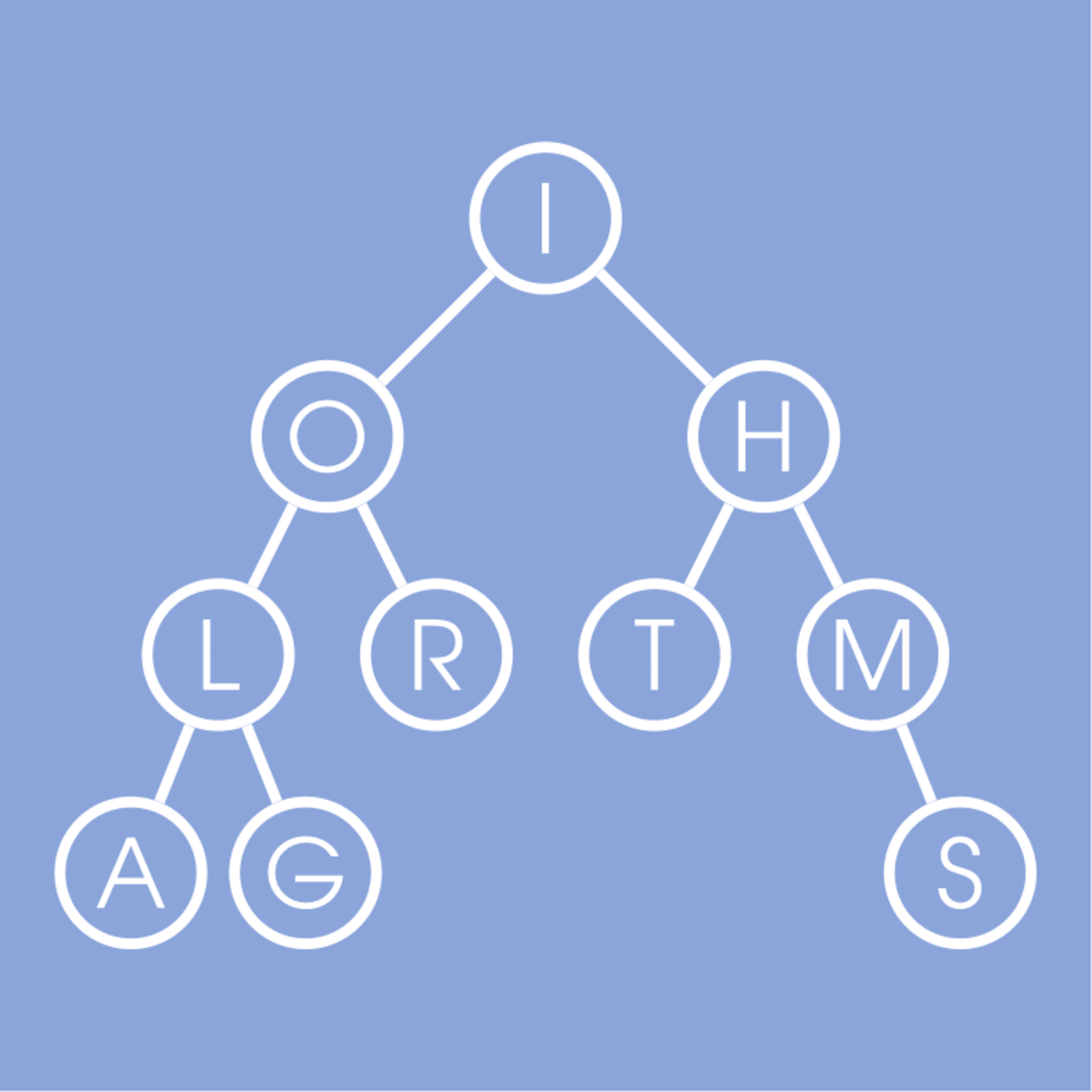

- Data Structures and Algorithms: This is fundamental. A deep understanding of common data structures (arrays, lists, trees, graphs, hash tables) and standard algorithm design paradigms (divide and conquer, dynamic programming, greedy algorithms) is essential. You'll learn how to analyze algorithms for correctness and efficiency (time and space complexity).

- Discrete Mathematics: This area provides the mathematical language for computer science, including logic, set theory, combinatorics, graph theory, and proof techniques. Graph theory, in particular, is central to many approximation algorithm problems.

- Theory of Computation / Automata Theory: These courses introduce formal models of computation (like Turing machines), explore the limits of what can be computed, and define complexity classes like P, NP, and NP-complete. Understanding NP-completeness is key to appreciating why approximation algorithms are needed.

- Linear Algebra and Probability & Statistics: Many advanced approximation techniques, such as those based on linear programming relaxations or randomized algorithms, require a good grasp of linear algebra and probability theory.

At the graduate level, students would then take more specialized courses, potentially including:

- Advanced Algorithms: Building on undergraduate algorithms, this would cover more sophisticated techniques and analyses, often with a greater emphasis on randomized algorithms, amortized analysis, and more complex data structures.

- Approximation Algorithms: A dedicated course focusing specifically on the design and analysis techniques discussed earlier (greedy, local search, LP rounding, primal-dual, etc.), performance ratios, and hardness of approximation.

- Complexity Theory: A deeper dive into complexity classes, reductions, and topics like the PCP theorem and its implications for inapproximability.

- Combinatorial Optimization: This field is closely related and often overlaps with approximation algorithms, focusing on finding optimal solutions to problems defined on discrete structures.

- Linear and Semidefinite Programming: Understanding the theory and application of these optimization techniques is vital for many modern approximation algorithms.

Research Areas for PhD Candidates

For those pursuing a PhD, approximation algorithms offer a rich landscape of research opportunities. Some active research areas include:

- Developing new approximation algorithms: There are still many NP-hard problems for which the best possible approximation ratio is unknown, or where existing algorithms are complex and simpler/faster alternatives are sought. This includes work on classic problems like the Traveling Salesman Problem or graph coloring, as well as newer problems arising from areas like machine learning or computational biology.

- Proving hardness of approximation (inapproximability) results: Establishing tight bounds on how well a problem can be approximated is as important as finding good algorithms. This often involves sophisticated mathematical tools and connections to complexity theory, such as the Unique Games Conjecture.

- Approximation algorithms for dynamic or online problems: In many real-world scenarios, the input to a problem arrives sequentially, or changes over time. Designing approximation algorithms that can handle such dynamic inputs efficiently and maintain good performance guarantees is a challenging area.

- Approximation in the presence of uncertainty: Dealing with problems where input data is noisy, incomplete, or stochastic. This often involves robust optimization or stochastic approximation techniques.

- Connections to other fields: Applying approximation algorithm techniques to problems in areas like algorithmic game theory (e.g., designing mechanisms with good approximation properties for social welfare), bioinformatics (e.g., sequence alignment, phylogenetic tree construction), machine learning, and quantum computing.

- Parameterized approximation: This combines ideas from parameterized complexity and approximation algorithms, seeking to find algorithms whose approximation ratio or running time depends favorably on some structural parameter of the input instance, rather than just its overall size.

Collaboration Opportunities with Industry

While deeply theoretical, the field of approximation algorithms has strong ties to industry. Many companies, particularly in tech, logistics, finance, and manufacturing, encounter large-scale optimization problems where approximation algorithms are directly applicable. This creates opportunities for collaboration:

- Internships and sponsored research: PhD students and faculty often collaborate with industry research labs on problems of mutual interest. Companies can provide real-world problem instances and data, while academic researchers bring cutting-edge algorithmic expertise.

- Consulting: Experts in approximation algorithms may consult for companies looking to optimize specific processes or develop custom algorithmic solutions.

- Startups: New companies are sometimes formed around novel algorithmic solutions to challenging optimization problems in specific domains.

- Open source projects: Contributing to or utilizing open-source libraries that implement optimization algorithms can be a form of collaboration and knowledge sharing.

The demand for individuals who can bridge the gap between theory and practice in optimization is significant. A strong academic background in approximation algorithms can open doors to impactful roles in both academia and industry.

Online Learning and Self-Study Resources

For individuals who are self-taught developers, career changers, or those looking to supplement their formal education, the world of online learning offers a wealth of resources to understand and master approximation algorithms. With dedication and the right approach, you can build a strong foundation in this field without necessarily following a traditional academic route.

One of the great advantages of online learning is its flexibility. You can learn at your own pace, revisit complex topics as needed, and often choose from a variety of teaching styles and platforms. OpenCourser is an excellent starting point, as it aggregates courses from numerous providers, allowing you to search for specific topics like "approximation algorithms" and compare different offerings. You can also explore broader categories like Algorithms to build foundational knowledge.

Open-Source Textbooks and Lecture Notes

Many universities and individual academics generously make their course materials available online. This often includes full textbooks, comprehensive lecture notes, and even video recordings of lectures. Searching for "approximation algorithms lecture notes PDF" or "open source algorithms textbook" can yield high-quality academic resources.

These materials often form the basis of university courses and can provide a rigorous, in-depth understanding of the subject. While they might be challenging at times, they offer unparalleled depth. Look for materials from reputable computer science departments. Some well-known books, like "Approximation Algorithms" by Vijay V. Vazirani or "The Design of Approximation Algorithms" by Williamson and Shmoys, are standard references and parts of their content or related notes might be found through academic channels.

When using these resources, it's helpful to be disciplined. Treat it like a real course: set a schedule, work through examples, and try to solve the exercises provided. This active engagement is crucial for learning theoretical material.

Interactive Coding Platforms

While approximation algorithms are often theoretical, implementing them and related foundational algorithms can greatly enhance understanding. Interactive coding platforms provide environments where you can practice problem-solving and coding skills. Websites like LeetCode, HackerRank, and TopCoder feature a vast array of algorithmic problems, ranging from basic to very advanced. While not all problems will directly be about designing a novel approximation algorithm, they will sharpen your skills in algorithm design, complexity analysis, and coding – all of which are essential.

Some platforms might have specific sections or contests focused on optimization problems where approximate solutions are relevant. Participating in these challenges can be a great way to apply what you're learning. Even working on problems that require exact solutions for smaller inputs (like many NP-hard problems on these platforms) helps you understand the structure of these problems, which is crucial for designing approximation algorithms for larger instances.

OpenCourser can also point you to courses that incorporate coding exercises. Look for courses that emphasize practical application alongside theory. You can explore courses in broader areas like Data Structures or Programming to build the necessary coding proficiency.

MOOC Specializations in Algorithms

Massive Open Online Courses (MOOCs) offered by platforms like Coursera, edX, and others provide structured learning paths, often in the form of "Specializations" or "MicroMasters" programs. These consist of a series of related courses that build upon each other, culminating in a deeper understanding of a subject area like algorithms.

Look for specializations in "Algorithms," "Data Structures and Algorithm Design," or "Theoretical Computer Science." While a specialization might not focus exclusively on approximation algorithms, it will likely cover NP-completeness, core algorithmic techniques (greedy, dynamic programming, graph algorithms), and complexity analysis, all of which are prerequisites for understanding approximation algorithms. Some advanced specializations or individual courses within them may indeed delve directly into approximation techniques.

These structured programs often include video lectures, readings, quizzes, and programming assignments, providing a comprehensive learning experience. Many also offer certificates upon completion, which can be a valuable addition to your profile. OpenCourser features many such courses and specializations, making it easier to find and compare them. For instance, you might find courses like "Approximation Algorithms Part I" and "Approximation Algorithms Part II" that are directly relevant.

For self-learners, remember that persistence is key. Theoretical computer science can be challenging, but the rewards in terms of problem-solving ability and understanding are immense. Don't hesitate to use online forums, study groups, and the resources available on OpenCourser's Learner's Guide to support your learning journey. The guide offers tips on creating a study plan, staying motivated, and making the most of online courses.

Career Progression and Roles

A strong foundation in approximation algorithms, and algorithms in general, can open doors to a variety of intellectually stimulating and financially rewarding career paths. While "Approximation Algorithm Specialist" might not be a common standalone job title, the skills developed in this area are highly valued in roles that involve complex problem-solving, optimization, and efficient computation. For those new to the field or considering a career pivot, it's important to have realistic expectations while also recognizing the significant opportunities available.

The journey into such roles often requires dedication and continuous learning. It's a field where a deep understanding of theoretical concepts translates directly into practical problem-solving capabilities. If you find yourself drawn to the elegance of algorithmic solutions and the challenge of tackling hard computational problems, this path can be immensely satisfying. Remember that every expert was once a beginner, and consistent effort in learning and applying these concepts will build your expertise over time.

Entry-Level Positions (e.g., Algorithm Engineer)

For individuals starting their careers with a good grasp of algorithms (including approximation concepts, often as part of a broader algorithms skillset), entry-level roles like Algorithm Engineer, Software Engineer (with a focus on backend or systems), or Data Scientist (with an algorithmic focus) are common. In these roles, you might be involved in:

- Designing and implementing algorithms for specific product features or internal tools.

- Analyzing the performance of existing systems and identifying bottlenecks that could be addressed with more efficient algorithms.

- Working with datasets to develop algorithmic solutions for tasks like recommendation, search, or anomaly detection.

- Collaborating with senior engineers and researchers to translate theoretical algorithmic ideas into practical code.

A bachelor's or master's degree in Computer Science, Mathematics, or a related field is typically expected. Strong programming skills (Python, C++, Java are common) and a solid understanding of data structures, algorithm analysis, and possibly machine learning fundamentals are crucial. Companies in sectors like tech (search engines, social media, e-commerce), finance (algorithmic trading, risk analysis), and logistics often hire for these roles.

The initial years are about building practical experience, applying theoretical knowledge to real-world constraints, and learning from more experienced colleagues. Don't be discouraged if the problems seem daunting at first; this is a field where continuous learning and problem-solving are part of the job. Your ability to break down complex problems and think algorithmically will be your greatest asset.

Advanced Roles in R&D and Optimization

With experience and potentially further education (like a PhD or specialized Master's), professionals can move into more advanced roles focused on research and development (R&D) or specialized optimization. Titles might include Research Scientist (Optimization), Senior Algorithm Engineer, Principal Engineer (Algorithms), or Optimization Specialist.

In these roles, responsibilities often involve:

- Leading the design of novel algorithms (including approximation algorithms) for challenging, often open-ended, business or scientific problems.

- Conducting research to stay at the forefront of algorithmic advancements and identifying opportunities to apply new techniques.

- Working on problems where standard solutions are insufficient, requiring deep theoretical understanding and creative problem-solving.

- Publishing research findings or developing intellectual property.

- Mentoring junior engineers and setting the technical direction for algorithmic development within a team or organization.

These positions often require a very strong theoretical background, a proven track record of solving hard problems, and sometimes a portfolio of publications or patents. Industries that rely heavily on cutting-edge optimization, such as large tech companies, quantitative finance, advanced manufacturing, and research institutions, are primary employers. A PhD is often preferred, or even required, for roles that are heavily research-focused.

Transitioning to Leadership in Tech Firms

Individuals who excel in algorithmic roles and also demonstrate strong communication, strategic thinking, and people management skills can transition into leadership positions within tech firms. This could include roles like Engineering Manager, Director of Engineering, or even more senior technical leadership roles like Chief Technology Officer (CTO) or VP of Engineering in algorithmically-driven companies.

In these leadership roles, the focus shifts from direct algorithm design to:

- Building and managing teams of engineers and researchers.

- Defining the technical vision and strategy for algorithmic products or platforms.

- Making high-level architectural decisions.

- Balancing technical innovation with business objectives and product roadmaps.

- Fostering a culture of technical excellence and continuous learning.

While day-to-day coding might decrease, a deep understanding of algorithms remains crucial for making informed decisions, guiding technical teams effectively, and understanding the core technologies that drive the business. The ability to communicate complex technical ideas to both technical and non-technical audiences becomes increasingly important.

Embarking on a career that leverages expertise in approximation algorithms is a commitment to lifelong learning and intellectual challenge. It's a path that can be incredibly rewarding, offering the chance to solve some of the most interesting and impactful problems in technology and science. OpenCourser provides resources like the Career Development section to help you plan your professional growth.

Challenges and Limitations

While approximation algorithms offer powerful solutions for tackling computationally hard problems, they are not without their own set of challenges and limitations. Understanding these aspects is crucial for both algorithm designers and practitioners who deploy these algorithms in real-world systems. It's important to approach their use with a clear understanding of the trade-offs involved.

Trade-offs Between Accuracy and Efficiency

The very essence of an approximation algorithm involves a trade-off: sacrificing some degree of solution optimality in exchange for computational efficiency (typically, polynomial runtime). The central challenge is finding the right balance. An algorithm that is very fast but provides a very poor approximation (a high approximation ratio for a minimization problem) might not be useful in practice. Conversely, an algorithm that offers an excellent approximation guarantee but is still too slow for the specific application's needs is also not ideal.

For some problems, there's a known spectrum of algorithms offering different points on this trade-off curve. For example, a problem might have a fast 2-approximation algorithm, a slower 1.5-approximation algorithm, and perhaps even a Polynomial Time Approximation Scheme (PTAS), which delivers a (1+ε)-approximation for any fixed ε > 0 and so can get arbitrarily close to optimal, but whose runtime increases significantly as ε decreases. Choosing the appropriate algorithm requires a careful consideration of the specific application's tolerance for error versus its constraints on computation time and resources.
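To make the role of ε concrete, here is a minimal sketch of the textbook value-scaling scheme for the 0/1 knapsack problem. Knapsack is a maximization problem, so the guarantee reads "at least (1 - ε) times optimal" rather than (1+ε). The function names and the sample instance are assumptions made for illustration, and item values are assumed to be positive.

```python
from itertools import combinations

def knapsack_fptas(values, weights, capacity, eps):
    """Return (total_value, chosen_indices) with total_value >= (1 - eps) * OPT."""
    items = [i for i in range(len(values)) if weights[i] <= capacity]
    if not items:
        return 0, []
    n = len(items)
    # Coarser scaling (larger eps) means fewer distinct scaled values and a smaller table.
    scale = eps * max(values[i] for i in items) / n
    scaled = {i: int(values[i] // scale) for i in items}
    # best[s] = (minimum weight, chosen items) reaching scaled value exactly s
    best = {0: (0, [])}
    for i in items:
        # Iterate over a snapshot so each item is used at most once.
        for s, (w, chosen) in list(best.items()):
            ns, nw = s + scaled[i], w + weights[i]
            if nw <= capacity and (ns not in best or nw < best[ns][0]):
                best[ns] = (nw, chosen + [i])
    _, chosen = best[max(best)]
    return sum(values[i] for i in chosen), chosen

def knapsack_exact(values, weights, capacity):
    """Brute-force optimum, exponential in the number of items; for comparison only."""
    n = len(values)
    best = 0
    for r in range(n + 1):
        for combo in combinations(range(n), r):
            if sum(weights[i] for i in combo) <= capacity:
                best = max(best, sum(values[i] for i in combo))
    return best

if __name__ == "__main__":
    values, weights, capacity = [60, 100, 120], [10, 20, 30], 50
    opt = knapsack_exact(values, weights, capacity)
    for eps in (0.5, 0.1):
        approx, chosen = knapsack_fptas(values, weights, capacity, eps)
        print(eps, approx, opt, chosen)
```

The table size grows roughly in proportion to 1/ε, which is the price paid for a tighter guarantee. Because the runtime here is polynomial in 1/ε as well as in the input size, this scheme is a fully polynomial special case of a PTAS.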

Furthermore, the "worst-case" guarantee provided by the approximation ratio might not always reflect typical performance. An algorithm might perform much better on average than its worst-case bound suggests. However, relying solely on average-case performance without understanding the worst-case can be risky in critical applications.
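The gap between a worst-case bound and typical behavior can be checked empirically on small instances. The sketch below is illustrative and not taken from any particular course or library: it uses the textbook maximal-matching 2-approximation for minimum vertex cover, compares it with a brute-force optimum on small random graphs, and collects the observed ratios. The function names, graph size, edge probability, and seed are all assumptions chosen for the example.

```python
import random
from itertools import combinations

def matching_vertex_cover(edges):
    """Take both endpoints of every edge that is not yet covered (a maximal matching)."""
    cover = set()
    for u, v in edges:
        if u not in cover and v not in cover:
            cover.update((u, v))
    return cover

def optimal_vertex_cover_size(n, edges):
    """Brute force over subsets by increasing size; only sensible for tiny graphs."""
    for k in range(n + 1):
        for subset in combinations(range(n), k):
            chosen = set(subset)
            if all(u in chosen or v in chosen for u, v in edges):
                return k
    return n

def empirical_ratios(trials=20, n=8, p=0.4, seed=0):
    """Observed (heuristic size / optimal size) on random graphs with n vertices."""
    rng = random.Random(seed)
    ratios = []
    for _ in range(trials):
        edges = [(u, v) for u, v in combinations(range(n), 2) if rng.random() < p]
        if not edges:
            continue  # an empty graph has optimum 0, so the ratio is undefined
        opt = optimal_vertex_cover_size(n, edges)
        ratios.append(len(matching_vertex_cover(edges)) / opt)
    return ratios

if __name__ == "__main__":
    ratios = empirical_ratios()
    print("worst observed:", max(ratios), "mean:", sum(ratios) / len(ratios))
```

On a graph made only of disjoint edges the heuristic returns exactly twice the optimum, so the bound of 2 is attained. On random instances the observed ratios may sit well below it, though that depends entirely on the instance distribution.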

Handling Dynamic or Adversarial Inputs

Many classical approximation algorithms are designed for static problem instances where the entire input is known upfront. However, real-world systems often deal with dynamic inputs, where data arrives incrementally or changes over time. For example, in network routing, link capacities or travel times can change, or new routing requests can arrive. Designing approximation algorithms that can efficiently adapt to such changes while maintaining good performance guarantees is a significant challenge. This often falls under the umbrella of "online algorithms" or "dynamic algorithms."

Another challenge arises from adversarial inputs. The worst-case approximation ratio is defined over all possible inputs. An adversary could, in theory, construct an input instance specifically designed to make a particular approximation algorithm perform poorly (up to its proven worst-case bound). While this is largely a theoretical concern, it is a reminder that the performance guarantee describes the worst case, not the typical one. In some security-sensitive applications or game-theoretic settings, considering how an algorithm performs against strategic or adversarial behavior can be important.
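Both ideas, inputs arriving one at a time and inputs chosen to hurt the algorithm, show up in a classic scheduling example. The sketch below assumes the standard greedy list-scheduling rule (give each arriving job to the least-loaded of m identical machines), whose makespan is known to be at most 2 - 1/m times optimal. The function names and the choice m = 4 are assumptions made for illustration.

```python
import heapq

def list_schedule(jobs, m):
    """Assign each job, in arrival order, to the currently least-loaded machine.

    Returns the makespan (the largest machine load).
    """
    loads = [0] * m
    heapq.heapify(loads)
    for processing_time in jobs:
        lightest = heapq.heappop(loads)
        heapq.heappush(loads, lightest + processing_time)
    return max(loads)

def adversarial_jobs(m):
    """m * (m - 1) unit jobs followed by one long job of length m."""
    return [1] * (m * (m - 1)) + [m]

if __name__ == "__main__":
    m = 4
    jobs = adversarial_jobs(m)
    # Unit jobs spread evenly first, then the long job lands on top of a full machine.
    print("adversarial order:", list_schedule(jobs, m))
    # The same jobs with the long one first fit perfectly.
    print("long job first:", list_schedule(list(reversed(jobs)), m))
```

With m = 4 the adversarial order yields a makespan of 2m - 1 = 7, while an optimal schedule achieves m = 4, so the 2 - 1/m bound is met exactly. The algorithm never sees the long job coming, which is precisely the handicap an online algorithm works under.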

Robust approximation algorithms aim to perform well even under uncertainty or perturbations in the input data, which is a related and increasingly important area of research.

Ethical Implications of Approximate Decision-Making

When approximation algorithms are used to make decisions that affect people or allocate resources, significant ethical implications can arise. Because these algorithms, by definition, may not find the optimal solution, the "approximations" they make can lead to outcomes that are unfair, biased, or discriminatory, even if unintentionally.

Consider resource allocation problems, such as assigning limited medical supplies, scheduling public services, or screening candidates in hiring processes. If an approximation algorithm is used, the deviation from optimality could mean that some individuals or groups are systematically disadvantaged. For example, an algorithm designed to approximately optimize delivery routes might inadvertently lead to certain neighborhoods receiving slower service if the "approximation" consistently deprioritizes them to achieve overall efficiency gains.

The choice of what objective function to approximate, and how "closeness" to optimal is defined, can embed biases. If historical data used to tune or design the algorithm reflects existing societal biases, the approximation algorithm might perpetuate or even amplify these biases. Ensuring fairness, transparency, and accountability when using approximation algorithms for decision-making is a critical challenge that requires careful consideration beyond just the mathematical performance guarantees. This often involves interdisciplinary collaboration with ethicists, social scientists, and domain experts.

Navigating these challenges requires a nuanced understanding of both the mathematical properties of the algorithms and the context in which they are applied. It underscores the responsibility of algorithm designers and users to be mindful of the potential broader impacts of their work.

Future Trends and Research Frontiers

The field of approximation algorithms is dynamic and continually evolving, driven by new computational paradigms, the increasing complexity of real-world problems, and cross-disciplinary fertilization of ideas. Researchers are actively exploring several exciting frontiers that promise to expand the capabilities and applicability of approximation techniques.

Quantum Approximation Algorithms

The advent of quantum computing has opened up new possibilities for algorithm design, and this extends to approximation algorithms. Quantum approximation algorithms aim to leverage the principles of quantum mechanics (like superposition and entanglement) to potentially achieve better approximation ratios or faster running times for certain NP-hard problems than what is believed to be possible with classical algorithms.

Research in this area is still in its relatively early stages, and building practical, fault-tolerant quantum computers remains a significant engineering challenge. However, theoretical work is exploring how quantum phenomena could be harnessed. For example, algorithms like the Quantum Approximate Optimization Algorithm (QAOA) are being investigated for their potential to solve combinatorial optimization problems. Identifying problems where quantum computers could offer a significant advantage in approximation is a key research direction. This often involves understanding the interplay between quantum algorithms and classical approximation techniques, and determining which parts of a problem are best suited for quantum versus classical processing.

Integration with AI-Driven Optimization

Artificial intelligence (AI), particularly machine learning (ML), is increasingly being integrated with traditional optimization techniques, including approximation algorithms. This synergy, often referred to as AI-driven optimization or learning-augmented algorithms, holds considerable promise.

One direction involves using ML to guide or improve existing approximation algorithms. For instance, ML models could learn to make better heuristic choices within a greedy or local search framework, potentially leading to better solution quality or faster convergence. ML could also be used to predict problem parameters or structures that allow for the selection of the most suitable approximation algorithm from a portfolio of options.

Conversely, approximation algorithms can play a role in making AI systems more efficient or robust. As mentioned earlier, model compression can be viewed as an approximation problem. Furthermore, the design of efficient training algorithms for large-scale ML models often involves techniques related to optimization and approximation.

The challenge lies in developing hybrid approaches that combine the rigorous performance guarantees of traditional approximation algorithms with the adaptive learning capabilities of AI, ensuring that the resulting systems are both effective and reliable.
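A toy setting often used to illustrate this hybrid goal is ski rental with a prediction: renting costs 1 per day, buying costs a fixed amount, and a learned model guesses how many days the season will last. The sketch below is a simplified illustration rather than a production method. The names `ski_rental_cost`, `offline_optimum`, and the `trust` parameter (a value in (0, 1], where smaller means relying more on the prediction) are assumptions introduced here.

```python
import math

def ski_rental_cost(actual_days, predicted_days, buy_cost, trust):
    """Total cost when the purchase day depends on a (possibly wrong) prediction."""
    if predicted_days >= buy_cost:
        # Prediction says the season is long: buy early.
        buy_day = math.ceil(trust * buy_cost)
    else:
        # Prediction says the season is short: delay buying, but not forever.
        buy_day = math.ceil(buy_cost / trust)
    if actual_days < buy_day:
        return actual_days  # the season ended before the purchase day
    return (buy_day - 1) + buy_cost  # rented for buy_day - 1 days, then bought

def offline_optimum(actual_days, buy_cost):
    """Best cost in hindsight: rent throughout or buy on day one."""
    return min(actual_days, buy_cost)

if __name__ == "__main__":
    buy_cost, trust = 10, 0.5
    for actual, predicted in [(30, 30), (30, 2), (3, 3), (3, 40)]:
        cost = ski_rental_cost(actual, predicted, buy_cost, trust)
        print(actual, predicted, cost, offline_optimum(actual, buy_cost))
```

Under these assumptions, an accurate prediction keeps the cost within a factor 1 + trust of the hindsight optimum, while even an arbitrarily bad prediction cannot push it beyond a factor 1 + 1/trust. That pairing of a good case driven by learning with a bounded worst case is the kind of guarantee hybrid approaches aim for.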

Cross-Disciplinary Applications in Bioinformatics

Bioinformatics and computational biology have long been fertile ground for the application and development of algorithmic techniques, and approximation algorithms are no exception. Many fundamental problems in bioinformatics are computationally hard, making approximation a necessity.

Examples include:

- Sequence Alignment: Finding the best alignment between DNA, RNA, or protein sequences can be computationally intensive for very long sequences or large numbers of sequences. Approximation algorithms can help find near-optimal alignments efficiently.

- Phylogenetic Tree Reconstruction: Determining the evolutionary relationships between species by constructing phylogenetic trees from molecular data is often an NP-hard problem. Approximation algorithms are used to find trees that are good representations of the evolutionary history.

- Protein Folding and Structure Prediction: Predicting the 3D structure of a protein from its amino acid sequence is a grand challenge. While often tackled with heuristics and simulation, approximation concepts can inform the search for good conformational states.

- Genome Assembly: Reconstructing a full genome from fragmented sequence reads is a complex combinatorial puzzle where approximation algorithms can play a role in finding consistent and accurate assemblies.

The massive datasets generated by modern biological experiments continue to drive the need for highly efficient and scalable algorithms. The interplay between computer science, mathematics, and biology in this area is leading to novel approximation techniques tailored to the specific structures and constraints of biological problems.

These research frontiers highlight the ongoing vitality of approximation algorithms as a field. As computational challenges grow in scale and complexity across various domains, the need for innovative and effective approximation techniques will only increase.

Ethical Considerations in Approximation

As approximation algorithms become increasingly integrated into decision-making processes across various sectors, it is paramount to address the ethical considerations that arise from their use. While these algorithms offer efficiency in solving complex problems, their inherent nature of providing "good enough" rather than perfect solutions can lead to unintended and potentially unfair consequences if not carefully managed. Tech ethicists, product managers, policymakers, and algorithm designers all share a responsibility in navigating these complexities.

Bias in Resource Allocation Algorithms

One of the most significant ethical concerns is the potential for bias in resource allocation algorithms. Many real-world applications of approximation algorithms involve distributing limited resources, such as healthcare services, educational opportunities, financial loans, or even the routing of public services. If the data used to train or design these algorithms reflects existing societal biases, or if the objective function being approximated inadvertently favors certain groups over others, the resulting "approximate" solution can perpetuate or even amplify these inequities.

For example, an algorithm designed to optimize the placement of new public facilities (like parks or libraries) might, due to its approximation, consistently under-serve certain neighborhoods if historical data or proxy variables for "need" or "impact" are biased. Similarly, in scheduling systems, an approximation algorithm might lead to consistently less favorable shifts or routes for certain demographics if fairness metrics are not explicitly incorporated into the design and evaluation.

It is crucial to critically examine the objective functions being optimized and the data sources used. Developers must ask: "An approximation of what, for whom, and with what potential disparate impacts?" Simply achieving a good mathematical approximation ratio is not sufficient if the outcome is systematically unfair.

Transparency of Approximation Guarantees

The transparency of approximation guarantees and the behavior of the algorithm is another key ethical consideration. While an algorithm might come with a proven worst-case performance ratio (e.g., a 1.5-approximation, meaning that for a minimization problem its cost is never more than 1.5 times the optimal cost), this mathematical guarantee might be difficult for non-experts or affected individuals to understand. What does it practically mean for a service to be "up to 1.5 times worse than optimal" in a given context?

Moreover, the worst-case guarantee might not be representative of typical performance, or the algorithm might behave very differently on different subsets of the input space. If an algorithm consistently performs closer to its worst-case bound for certain demographic groups while performing near-optimally for others, this disparity needs to be understood and addressed, even if the overall worst-case guarantee is met for all.

Efforts towards algorithmic transparency should include not only explaining the mathematical guarantees in accessible terms but also providing insights into how the algorithm makes its "choices" and where the approximations might lead to suboptimal outcomes for specific cases or groups. This is particularly important when the decisions made by the algorithm have significant consequences for individuals.
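One simple way to surface such disparities is to report the observed ratio per group rather than a single overall figure. The sketch below assumes a minimization problem and a hypothetical log of records, each holding a group label, the cost the algorithm achieved, and the optimal (or best known) cost for that case. The record layout, the function name `ratio_by_group`, and the sample numbers are made up for illustration.

```python
from collections import defaultdict

def ratio_by_group(records):
    """Summarize observed approximation ratios per group.

    records: iterable of (group, algorithm_cost, optimal_cost) tuples,
    with optimal_cost > 0.
    """
    ratios = defaultdict(list)
    for group, algorithm_cost, optimal_cost in records:
        ratios[group].append(algorithm_cost / optimal_cost)
    return {
        group: {"mean": sum(values) / len(values), "worst": max(values)}
        for group, values in ratios.items()
    }

if __name__ == "__main__":
    # Hypothetical numbers: both groups stay within a 1.5 guarantee,
    # yet one group sits consistently near the bound.
    sample = [("A", 10, 10), ("A", 11, 10), ("B", 15, 10), ("B", 14, 10)]
    for group, summary in ratio_by_group(sample).items():
        print(group, summary)
```

A report like this does not explain why a gap exists, but it turns an abstract guarantee into per-group numbers that can be discussed with affected stakeholders.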

Regulatory Compliance in Critical Systems

When approximation algorithms are deployed in critical systems (such as those in healthcare, finance, autonomous vehicles, or public safety), ensuring regulatory compliance and accountability becomes even more vital. Regulations governing the use of automated decision-making systems already exist in some areas and are being developed in others, particularly concerning fairness, non-discrimination, safety, and reliability.

For approximation algorithms, this means that their design and deployment must consider how their "approximate" nature aligns with these regulatory requirements. Can an approximate solution meet the safety standards required for an autonomous system? Does an approximately fair allocation of resources satisfy non-discrimination laws? Proving compliance can be challenging because the deviation from optimality, even if bounded, introduces a degree of uncertainty or potential sub-optimality that needs to be carefully assessed against legal and ethical standards.

Mechanisms for auditing algorithmic decisions, explaining outcomes, and providing recourse for individuals adversely affected by these decisions are essential. This may involve developing new methodologies for testing and validating approximation algorithms not just for their mathematical performance but also for their adherence to ethical principles and regulatory frameworks.

Addressing these ethical considerations requires a proactive and multidisciplinary approach. It involves ongoing dialogue between algorithm developers, domain experts, ethicists, policymakers, and the public to ensure that the pursuit of computational efficiency does not come at the cost of fairness, justice, and human well-being.

While specific courses on the ethics of approximation algorithms are rare, foundational knowledge in algorithms and awareness of AI ethics are crucial.

Exploring broader literature on AI ethics and responsible technology development is also highly recommended for anyone working with or impacted by these algorithms. Consider browsing topics related to Public Policy or Philosophy on OpenCourser for related discussions.

Frequently Asked Questions (Career Focus)

Embarking on or transitioning into a career that leverages knowledge of approximation algorithms can bring up many practical questions. Here, we address some common queries to provide actionable insights for career planning in algorithm-focused roles. Remember, the field is challenging but also highly rewarding for those with a passion for problem-solving.

What industries hire approximation algorithm specialists?

While "Approximation Algorithm Specialist" isn't a typical job title, professionals with strong skills in designing, analyzing, and implementing algorithms (including approximation techniques) are sought after in a variety of industries. Key sectors include:

- Technology Companies: This is a major area, encompassing software companies, internet giants (search, social media, e-commerce), cloud computing providers, and hardware manufacturers. They need algorithmic expertise for everything from search result ranking and recommendation systems to network optimization, distributed systems, and compiler design.

- Finance: The financial industry, particularly in areas like algorithmic trading, risk management, portfolio optimization, and fraud detection, relies heavily on sophisticated algorithms.

- Logistics and Supply Chain Management: Companies involved in transportation, shipping, warehousing, and supply chain optimization use algorithms for route planning (like variations of the Traveling Salesman Problem), facility location, inventory management, and scheduling.

- Telecommunications: Network design, capacity planning, call routing, and spectrum allocation are all areas where algorithmic optimization is crucial.

- Manufacturing: Production scheduling, resource allocation, process optimization, and robotics often involve solving complex combinatorial problems.

- Healthcare: While still an emerging area for deep algorithmic application, there are opportunities in medical imaging analysis, drug discovery (e.g., molecular modeling), optimizing treatment plans, and managing hospital resources.

- Energy Sector: Optimizing power grids, planning energy distribution, and managing renewable energy sources involve complex algorithmic challenges.

- Research and Academia: Universities and research institutions employ researchers who specifically advance the theory and application of approximation algorithms.

Essentially, any industry facing large-scale optimization problems where exact solutions are intractable is a potential employer.

How do salaries compare to other CS specializations?

Salaries for roles requiring strong algorithmic skills, such as Algorithm Engineer or Research Scientist in optimization, are generally competitive and often above average within the broader computer science field. According to Salary.com, the average salary for an Algorithm Engineer in the US is around $128,622, though this can vary significantly based on location, experience, education, and the specific company. ZipRecruiter reports a somewhat lower average annual pay for an Algorithm Engineer in the United States, around $111,632, with a typical range between $80,500 and $132,500. Some sources, such as 6figr.com, indicate that highly experienced algorithm engineers at top companies or in lucrative niches can earn significantly more, with averages potentially skewed by stock options and bonuses.

Compared with some other CS specializations, roles heavily focused on advanced algorithm design and optimization (which often imply a deeper theoretical understanding, potentially a Master's or PhD) can command higher salaries, particularly in R&D divisions of major tech companies or in quantitative finance. Job titles can be misleading, however. ZipRecruiter shows an average of around $55,794 for "Optimization Specialist," but this title is broad and its salary range varies widely with the industry and the complexity of the optimization tasks. "Process Optimization Specialist" salaries average higher, around $90,650 according to ZipRecruiter. It's important to look at specific job descriptions and required qualifications rather than titles alone.

Factors that positively influence salary include advanced degrees (MS or PhD) in a relevant field, a strong portfolio of projects or publications, expertise in high-demand programming languages, and experience in lucrative industries like finance or big tech.

Is a PhD required for advanced roles?

For many advanced roles, particularly those with a significant research component (e.g., "Research Scientist") or those focused on developing novel algorithms for cutting-edge problems, a PhD in Computer Science, Mathematics, Operations Research, or a closely related field is often preferred or even required. A PhD program provides deep theoretical training, research experience, and the ability to tackle open-ended problems independently, which are highly valued for these positions.

However, a PhD is not universally mandatory for all advanced algorithm-related roles. Exceptional individuals with a Master's degree and a strong track record of innovation and problem-solving in industry can also reach senior technical positions. For roles like "Senior Algorithm Engineer" or "Principal Engineer," extensive practical experience, a portfolio of impactful projects, and demonstrated expertise can sometimes be as valuable as a PhD. The key is a demonstrable ability to handle complex algorithmic challenges and deliver results.

For roles that are more focused on the implementation and adaptation of existing algorithmic techniques rather than groundbreaking research, a Master's degree or even a Bachelor's with significant relevant experience might be sufficient, even at a senior level.

Which programming languages are most relevant?

The choice of programming language often depends on the specific application area and performance requirements. However, some languages are commonly used in algorithm development:

- Python: Increasingly popular due to its readability, extensive libraries for scientific computing and data analysis (NumPy, SciPy, Pandas), and rapid prototyping capabilities. It's widely used in machine learning, data science, and for implementing many types of algorithms.

- C++: Valued for its performance and control over system resources, making it a good choice for applications where speed is critical, such as high-frequency trading, game development, operating systems, and embedded systems. The Standard Template Library (STL) provides efficient implementations of common data structures and algorithms.

- Java: Known for its platform independence, strong object-oriented features, and large ecosystem of libraries. It's widely used in enterprise applications, large-scale systems, and Android development.

- R: Primarily used for statistical computing and data analysis, R is popular in academic research and fields requiring specialized statistical modeling.

- MATLAB: Often used in engineering, numerical analysis, and academic research for algorithm development and simulation, particularly those involving matrix computations.

For roles specifically in approximation algorithms, a strong command of at least one of Python or C++/Java is generally expected, along with the ability to learn new languages or tools as needed. Understanding the trade-offs between these languages in terms of development speed, runtime performance, and available libraries is also important.

How to showcase approximation skills in interviews?

Showcasing skills in approximation algorithms during interviews, especially when the problems given are standard coding challenges, requires a nuanced approach:

- Problem Identification: If you're presented with a problem that you recognize as NP-hard (or suspect it might be), briefly mentioning this (and why you think so) can be a good starting point. This shows theoretical awareness.

- Discussing Exact vs. Approximate: You can then discuss that finding an exact solution might be too slow for large inputs. This opens the door to discussing approximation.

- Proposing Heuristics/Greedy Approaches: Even if not explicitly asked for an approximation algorithm, you can suggest a simpler heuristic or a greedy approach as a first pass or as a practical solution if optimality isn't strictly required or if time is constrained. Explain the logic behind your heuristic.

- Analyzing the Approximation (if possible): If you can sketch an argument for why your heuristic might provide a reasonable approximation, or even better, if you know a standard approximation algorithm for a similar problem, you can discuss its approximation ratio. This demonstrates deeper knowledge. For example, if faced with a vertex-cover-like problem, you might mention the simple 2-approximation algorithm.

- Complexity Analysis: Always analyze the time and space complexity of any solution you propose, whether exact or approximate. An approximation algorithm is only useful if it runs efficiently, typically in polynomial time.

- Trade-offs: Be prepared to discuss the trade-offs between the quality of the solution and the runtime of your proposed algorithm.

- Examples: If relevant, you can briefly mention real-world scenarios where approximation algorithms are used for similar types of problems.

In interviews for research-oriented roles, you might be directly asked to design or analyze an approximation algorithm. For general software engineering roles, demonstrating that you understand when and why approximation might be necessary, and that you can think in terms of efficient heuristics, is often more important than recalling specific complex approximation algorithms.
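As an illustration of the vertex cover example mentioned above, here is a minimal Python sketch of the classic 2-approximation alongside a brute-force exact solver for comparison. The function names and the sample graph are hypothetical, chosen only for this example, and the sketch assumes a simple undirected graph given as a list of edges with no self-loops.

```python
from itertools import combinations


def vertex_cover_2approx(edges):
    """Greedy 2-approximation: take both endpoints of each uncovered edge.

    The edges that trigger an addition share no endpoints (they form a
    matching), and any vertex cover must contain at least one endpoint of
    each of them, so the result is at most twice the minimum size.
    Runs in time linear in the number of edges.
    """
    cover = set()
    for u, v in edges:
        if u not in cover and v not in cover:
            cover.add(u)
            cover.add(v)
    return cover


def vertex_cover_exact(edges):
    """Brute-force minimum vertex cover; exponential time, small inputs only."""
    vertices = sorted({v for edge in edges for v in edge})
    for k in range(len(vertices) + 1):
        for subset in combinations(vertices, k):
            chosen = set(subset)
            if all(u in chosen or v in chosen for u, v in edges):
                return chosen
    return set()


# Hypothetical sample graph used only for illustration.
edges = [(0, 1), (0, 2), (1, 3), (3, 4)]
approx_cover = vertex_cover_2approx(edges)
exact_cover = vertex_cover_exact(edges)
print(len(approx_cover), len(exact_cover))
```

On this small graph the greedy cover has four vertices while the optimum has two, so the factor-2 bound is met exactly. In an interview, a pair like this is a compact way to discuss the trade-off: the exact search tries every subset of vertices, while the approximation makes a single pass over the edges.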

Impact of AI tools on algorithm design careers?

AI tools, including large language models (LLMs) and automated machine learning (AutoML) platforms, are beginning to impact various aspects of software development, and algorithm design is no exception. However, rather than replacing algorithm designers, these tools are more likely to augment their capabilities and shift the focus of their work.

- Code Generation and Prototyping: AI tools can assist in generating boilerplate code or helping to quickly prototype algorithmic ideas, potentially speeding up the development cycle.

- Learning Heuristics: As discussed under future trends, AI can be used to learn effective heuristics or to tune parameters within algorithmic frameworks, potentially leading to better-performing hybrid algorithms.

- Problem Formulation: AI might help in exploring different ways to formulate an optimization problem or in identifying relevant features from data.

- Focus on Harder Problems: By automating some of the more routine aspects of algorithm implementation or heuristic design, AI tools may free up human experts to focus on more complex, novel, or theoretically challenging problems where human ingenuity and deep insight are still paramount.

- Verification and Analysis: There's a potential for AI to assist in verifying the correctness of algorithms or in analyzing their performance, though this is a complex research area.

However, the core skills of understanding problem structures, designing novel algorithmic approaches, proving performance guarantees (crucial for approximation algorithms), and deep theoretical reasoning are unlikely to be fully automated soon. Careers in algorithm design will likely evolve to incorporate these AI tools as powerful assistants, requiring designers to be adept at leveraging them while still possessing strong fundamental algorithmic and mathematical skills. The ability to critically evaluate the outputs of AI tools and to understand their limitations will also become increasingly important.

For those considering a career in this field, continuous learning and adaptability will be key. Staying updated with both foundational algorithmic principles and emerging AI technologies will be essential for long-term success. OpenCourser's Artificial Intelligence section can be a good place to explore relevant AI topics.

The journey into the world of approximation algorithms is one of continuous learning and intellectual discovery. It's a field that combines rigorous mathematical thinking with practical problem-solving, offering the chance to make a tangible impact on how we approach some of the most computationally challenging tasks. Whether you are a student exploring future paths, a professional considering a career shift, or a lifelong learner fascinated by the power of algorithms, the resources and insights provided here aim to help you navigate this exciting domain. Remember that OpenCourser is here to support your learning journey, offering a vast catalog of courses and a wealth of information to help you achieve your goals. The path may be demanding, but for those with a passion for solving complex puzzles, the rewards are well worth the effort.