Microprocessors

Introduction to Microprocessors

A microprocessor is the central processing unit (CPU) of a computer system, fabricated on a small chip of semiconductor material. It's the "brain" that executes instructions and performs calculations, enabling everything from your smartphone to complex supercomputers to function. Understanding microprocessors means delving into the core of modern technology, a field that is constantly evolving and pushing the boundaries of what's possible. For those intrigued by the intricate workings of electronics and the power of computation, exploring microprocessors can be a fascinating and rewarding journey.

Working with microprocessors can be incredibly engaging. Imagine designing the next generation of chips that will power revolutionary technologies, from artificial intelligence and machine learning applications to breakthroughs in medical devices and autonomous vehicles. There's also the excitement of problem-solving at a fundamental level, optimizing performance, power efficiency, and cost for a myriad of applications. The field offers a chance to be at the forefront of innovation, contributing to advancements that shape our daily lives and the future of technology.

This section provides a foundational understanding of microprocessors, covering their basic definition, historical development, core components, and how they differ from related technologies like microcontrollers and GPUs. It's designed to be accessible for those new to the topic while still providing a solid base for further exploration. Whether you're simply curious about the technology that powers your devices or considering a career in this dynamic field, this introduction will equip you with the essential knowledge to get started.

Definition and Core Function of Microprocessors

A microprocessor is an integrated circuit (IC) that contains the arithmetic, logic, and control circuitry required to perform the functions of a computer's central processing unit (CPU). Think of it as the engine of a computer, responsible for executing sequences of instructions, called a program, to perform specific tasks. These tasks can range from simple calculations to complex data processing and system control.

The core function of a microprocessor is a continuous cycle: fetch an instruction from memory, decode it to determine the required operation, execute that operation, and write any result back to a register or to memory. This fetch-decode-execute cycle is fundamental to how all microprocessors operate, regardless of their complexity or application. How quickly and efficiently a microprocessor can perform this cycle largely determines the overall performance of the device it powers.
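
To make the cycle concrete, here is a minimal sketch of a toy accumulator machine. The instruction format and the opcodes LOAD, ADD, STORE, and HALT are hypothetical, invented for illustration; real processors encode instructions as binary words and have far richer instruction sets.

```python
# Toy accumulator machine illustrating the fetch-decode-execute cycle.
# Opcodes and the (opcode, address) instruction format are hypothetical.

def run(memory, max_steps=1000):
    """Execute the program stored in `memory` (a list) and return the memory."""
    pc = 0    # program counter: address of the next instruction
    acc = 0   # accumulator register
    for _ in range(max_steps):
        # Fetch: copy the instruction at address PC into the instruction register.
        ir = memory[pc]
        pc += 1
        # Decode: split the instruction into an opcode and an operand address.
        opcode, addr = ir
        # Execute, writing the result back to a register (acc) or to memory.
        if opcode == "LOAD":
            acc = memory[addr]
        elif opcode == "ADD":
            acc = acc + memory[addr]
        elif opcode == "STORE":
            memory[addr] = acc
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode!r}")
    raise RuntimeError("step limit reached without HALT")


# Instructions occupy addresses 0-3; data occupies addresses 4-6.
program = [("LOAD", 4), ("ADD", 5), ("STORE", 6), ("HALT", 0), 2, 3, 0]
print(run(program)[6])  # prints 5
```

In this sketch, instructions and data sit in the same list, so the loop repeats fetch, decode, and execute until it reaches HALT.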

Modern microprocessors are incredibly complex, containing billions of transistors packed onto a tiny piece of silicon. They are designed to handle a vast array of tasks, making them versatile components in everything from personal computers and servers to embedded systems and mobile devices. Their ability to be programmed to perform different functions is what makes them so powerful and ubiquitous in today's technology-driven world.

Historical Milestones in Microprocessor Development

The journey of the microprocessor began in the late 1960s, with several companies and researchers working on ways to integrate the functions of a CPU onto a single chip. The first commercially available microprocessor, the Intel 4004, was introduced in 1971. This 4-bit processor, initially designed for a calculator, marked a revolutionary step in electronics, paving the way for the personal computer revolution and the proliferation of digital devices.

Throughout the 1970s and 1980s, microprocessor technology advanced rapidly. Companies like Intel, Motorola, and Zilog developed increasingly powerful chips, beginning with 8-bit processors such as the Intel 8080, Motorola 6800, and Zilog Z80 and moving on to 16-bit designs such as the Intel 8086. These chips and their contemporaries fueled the growth of the personal computer market: the Apple II was built around the MOS Technology 6502, and the original IBM PC used the Intel 8088, a variant of the 8086 with an 8-bit external data bus. The introduction of 32-bit architectures in the mid-1980s, such as the Motorola 68020 and Intel 80386, brought even greater processing power and capabilities.

The 1990s and 2000s saw continued innovation, with a focus on increasing clock speeds, improving instruction-level parallelism, and integrating more features onto the chip, such as caches and floating-point units. This era was largely characterized by the dominance of x86 architecture in personal computers and servers, while other architectures like ARM gained traction in mobile and embedded devices. The relentless pursuit of Moore's Law, which predicted the doubling of transistors on a chip roughly every two years, drove much of this progress.

Basic Components: ALU, Control Unit, Registers

At the heart of every microprocessor are several key components that work together to execute instructions. The Arithmetic Logic Unit (ALU) is responsible for performing arithmetic operations (like addition and subtraction) and logical operations (like AND, OR, and NOT). It's the computational core of the microprocessor, carrying out the calculations dictated by the program instructions.
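
A minimal sketch of an 8-bit ALU follows. The operation names, the 8-bit width, and the choice to report only zero and carry flags are assumptions for illustration. Real ALUs set additional status flags, and architectures differ in how they define the carry flag after subtraction.

```python
# Minimal 8-bit ALU sketch; the flag conventions are illustrative assumptions.

WIDTH = 8
MASK = (1 << WIDTH) - 1  # 0xFF: keeps results within 8 bits


def alu(op, a, b=0):
    """Return (result, zero_flag, carry_flag) for an 8-bit operation."""
    carry = False
    if op == "ADD":
        full = a + b
        carry = full > MASK        # carry out of the most significant bit
        result = full & MASK
    elif op == "SUB":
        full = a - b
        carry = full < 0           # a borrow, reported here as the carry flag
        result = full & MASK       # two's-complement wraparound
    elif op == "AND":
        result = a & b
    elif op == "OR":
        result = a | b
    elif op == "NOT":
        result = ~a & MASK         # invert only the low 8 bits
    else:
        raise ValueError(f"unsupported operation {op!r}")
    return result, result == 0, carry


print(alu("ADD", 200, 100))  # (44, False, True): 300 wraps to 44 with a carry
```

The flags let later instructions, such as conditional branches, react to the outcome of an ALU operation.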

The Control Unit (CU) directs the flow of operations within the microprocessor. It fetches instructions from memory, decodes them, and then generates control signals to coordinate the activities of the ALU, registers, and other parts of the processor. The control unit ensures that instructions are executed in the correct sequence and that data moves to the right places at the right time. It's like the conductor of an orchestra, ensuring all parts work in harmony.

Registers are small, high-speed storage locations within the microprocessor. They are used to temporarily hold data and instructions that are actively being processed. Different types of registers serve specific purposes, such as holding the address of the next instruction to be fetched (program counter), storing the current instruction being executed (instruction register), or holding data that the ALU is working on (general-purpose registers). The speed and number of registers significantly impact the processor's performance, as they provide much faster access to data than main memory.

Comparison with Microcontrollers and GPUs

While microprocessors are powerful general-purpose processing units, it's helpful to distinguish them from other types of processors like microcontrollers and Graphics Processing Units (GPUs). A microprocessor typically requires external components like memory, input/output (I/O) peripherals, and timers to function as a complete computing system. This modularity allows for flexibility and scalability, making them suitable for a wide range of applications, from personal computers to powerful servers.

A microcontroller, on the other hand, is essentially a computer on a single chip. It integrates a microprocessor core with memory (RAM and ROM), I/O ports, timers, and other peripherals onto a single integrated circuit. This makes microcontrollers ideal for embedded systems where space, cost, and power consumption are critical constraints. You'll find microcontrollers in everyday devices like washing machines, remote controls, and automotive engine control units. They are designed for specific control tasks rather than general-purpose computing.

Graphics Processing Units (GPUs) are specialized processors designed to accelerate the creation of images, videos, and animations for display on a screen. Although they were originally built for graphics rendering in video games and professional visualization, their highly parallel architecture has made them increasingly useful for computationally intensive tasks beyond graphics, such as machine learning, scientific simulations, and cryptocurrency mining. Unlike general-purpose microprocessors, GPUs are optimized for applying the same operation to large datasets in parallel, a data-parallel style closely related to SIMD (Single Instruction, Multiple Data).

Understanding these distinctions is important for choosing the right processing solution for a given application.

Microprocessor Architecture and Design

This section delves into the more technical aspects of microprocessor architecture and design. It's geared towards individuals with some existing technical background or a strong interest in the engineering principles behind how microprocessors are built and function. We will explore different architectural approaches, instruction sets, performance enhancement techniques, and critical design considerations like power efficiency and thermal management. A solid grasp of these concepts is crucial for anyone looking to pursue a career in hardware engineering or microprocessor design.

Von Neumann vs Harvard Architectures

Two fundamental architectural models define how microprocessors access memory: the Von Neumann architecture and the Harvard architecture. The Von Neumann architecture, named after the mathematician John von Neumann, uses a single address space for both instructions and data. This means that the CPU fetches both program instructions and the data those instructions operate on from the same memory unit, using the same bus (a set of electrical pathways).

The primary advantage of the Von Neumann architecture is its simplicity and efficient use of memory. Since instructions and data share the same memory, the system can flexibly allocate memory between them. However, this shared bus can also create a bottleneck, known as the Von Neumann bottleneck, because instructions and data cannot be fetched simultaneously. This can limit the processor's overall throughput, as it must wait for one type of transfer to complete before initiating the other.

In contrast, the Harvard architecture uses separate address spaces and dedicated buses for instructions and data. This allows the CPU to fetch an instruction and access data at the same time, potentially leading to higher performance, especially in applications that require frequent memory access. Many modern digital signal processors (DSPs) and microcontrollers utilize Harvard or modified Harvard architectures to achieve high throughput for real-time signal processing and control tasks. While pure Harvard architectures are less common in general-purpose CPUs, many modern processors incorporate aspects of it, such as separate instruction and data caches, to mitigate the Von Neumann bottleneck.
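
The bottleneck can be illustrated with a deliberately idealized bus-cycle model. Assume that every memory transfer takes one bus cycle and that, on a Harvard machine, an instruction fetch can always overlap with a data transfer because the two use separate buses. Real processors add caches, prefetching, and stalls, so this sketch shows only the direction of the effect.

```python
# Idealized bus-cycle model (an assumption for illustration only):
# each memory transfer costs one bus cycle.

def bus_cycles(data_accesses_per_instr, harvard):
    """Count bus cycles for a sequence of instructions.

    data_accesses_per_instr: for each instruction, the number of data
    reads/writes it performs (0 for register-only instructions).
    """
    total = 0
    for d in data_accesses_per_instr:
        if harvard:
            # Instruction bus and data bus work in parallel, so the
            # longer of the two transfer streams sets the pace.
            total += max(1, d)
        else:
            # Von Neumann: one shared bus, so the fetch and every data
            # transfer must take turns.
            total += 1 + d
    return total


# Hypothetical sequence: load, register add, store, load.
seq = [1, 0, 1, 1]
print(bus_cycles(seq, harvard=False), bus_cycles(seq, harvard=True))  # 7 4
```

Under these assumptions, the shared bus needs 7 cycles where separate buses need 4, which is the throughput gap that the Von Neumann bottleneck describes.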

Instruction Set Architectures (CISC/RISC)

The Instruction Set Architecture (ISA) is a critical aspect of microprocessor design, defining the set of instructions that a processor can understand and execute. ISAs can be broadly categorized into two main types: Complex Instruction Set Computing (CISC) and Reduced Instruction Set Computing (RISC). These represent different philosophies in how to design the interface between software and hardware.

CISC architectures, like the widely used x86 ISA found in most personal computers and servers, feature a large and diverse set of instructions. Some of these instructions can perform complex operations, such as a single instruction that loads data from memory, performs an arithmetic operation, and then stores the result back to memory. The goal of CISC was to make assembly language programming easier and to reduce the number of instructions needed for a given task, thereby potentially saving memory, which was a scarce resource in earlier computing days.

RISC architectures, on the other hand, emphasize a smaller, simpler, and highly regular set of instructions. Each instruction performs a single, simple operation, and the instruction set is designed so that a pipelined implementation can complete roughly one instruction per clock cycle. Arithmetic instructions typically operate only on registers, while separate load and store instructions move data between registers and memory. The compiler breaks complex operations down into sequences of these simpler instructions. The simplicity of RISC instructions makes pipelining easier and can lead to more efficient and faster processors. Architectures like ARM, MIPS, and the open RISC-V standard are prominent examples of RISC ISAs, widely used in mobile devices, embedded systems, and increasingly in other areas.
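
The trade-off can be sketched with two hypothetical toy instruction sets. The CISC-style `ADDM` reads two memory operands, adds them, and writes the sum back to memory in one instruction. The RISC-style version uses a load/store design: arithmetic works only on registers, so the same task takes four simpler instructions. Neither opcode set corresponds to a real ISA.

```python
# Hypothetical toy ISAs contrasting CISC and RISC styles.

def run_cisc(program, mem):
    for op, *args in program:
        if op == "ADDM":              # mem[dst] <- mem[dst] + mem[src]
            dst, src = args
            mem[dst] = mem[dst] + mem[src]
        else:
            raise ValueError(f"unknown opcode {op!r}")
    return mem


def run_risc(program, mem):
    regs = [0] * 4                    # small register file
    for op, *args in program:
        if op == "LW":                # load: register <- memory
            rd, addr = args
            regs[rd] = mem[addr]
        elif op == "ADD":             # arithmetic on registers only
            rd, rs1, rs2 = args
            regs[rd] = regs[rs1] + regs[rs2]
        elif op == "SW":              # store: memory <- register
            rs, addr = args
            mem[addr] = regs[rs]
        else:
            raise ValueError(f"unknown opcode {op!r}")
    return mem


cisc_program = [("ADDM", 0, 1)]
risc_program = [("LW", 1, 0), ("LW", 2, 1), ("ADD", 1, 1, 2), ("SW", 1, 0)]

print(run_cisc(cisc_program, [7, 5]), len(cisc_program))  # [12, 5] 1
print(run_risc(risc_program, [7, 5]), len(risc_program))  # [12, 5] 4
```

Both programs produce the same result. The CISC version is denser in memory. Each RISC instruction is simpler to decode and pipeline.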

Pipelining and Parallel Processing Techniques

To enhance performance, modern microprocessors employ sophisticated techniques like pipelining and parallel processing. Pipelining is a method where the execution of an instruction is broken down into several stages (e.g., fetch, decode, execute, memory access, write-back). Multiple instructions can be in different stages of execution simultaneously, much like an assembly line in a factory. This allows the processor to achieve a higher throughput of instructions, significantly improving overall performance without necessarily increasing the clock speed.
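
The throughput gain can be estimated with the standard ideal-pipeline calculation. The sketch assumes a classic five-stage pipeline (IF, ID, EX, MEM, WB, matching the fetch, decode, execute, memory-access, and write-back stages above) with no stalls or hazards. Real pipelines lose some of this gain to dependencies and branches.

```python
# Ideal pipeline timing: every stage takes one cycle, with no stalls or hazards.

STAGES = ["IF", "ID", "EX", "MEM", "WB"]


def unpipelined_cycles(n, k):
    # Each instruction passes through all k stages before the next one starts.
    return n * k


def pipelined_cycles(n, k):
    # The first instruction takes k cycles to fill the pipeline; after that,
    # one instruction completes per cycle.
    return 0 if n == 0 else k + (n - 1)


def pipeline_diagram(n, stages=STAGES):
    """One row per instruction; column c shows its stage in cycle c + 1."""
    total = pipelined_cycles(n, len(stages))
    rows = []
    for i in range(n):
        cells = ["."] * total
        for s, name in enumerate(stages):
            cells[i + s] = name    # instruction i enters stage s in cycle i + s
        rows.append(" ".join(f"{c:>3}" for c in cells))
    return rows


for row in pipeline_diagram(3):
    print(row)
print(unpipelined_cycles(100, 5), pipelined_cycles(100, 5))  # 500 104
```

For 100 instructions, the ideal speedup is 500 / 104, or about 4.8, which approaches the number of stages as the instruction count grows.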

Parallel processing takes this concept further by executing multiple instructions or tasks truly at the same time. This can be achieved in several ways. Superscalar architectures, for example, have multiple execution units (like multiple ALUs) within a single processor core, allowing them to issue and execute more than one instruction per clock cycle. Multi-core processors take this even further by integrating two or more independent processor cores onto a single chip. Each core can execute a separate instruction stream (or thread) concurrently, leading to substantial performance gains for software that is designed to take advantage of multiple cores.
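
Amdahl's law is a standard model, not named in the text above, that shows why software design matters for multi-core gains. If a fraction p of a program's work can run in parallel on n cores and the rest remains serial, the overall speedup is 1 / ((1 - p) + p / n). The sketch below simply evaluates that formula and ignores communication and synchronization overheads.

```python
# Amdahl's law: upper bound on speedup when only part of the work is parallel.

def amdahl_speedup(parallel_fraction, cores):
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


for p in (0.5, 0.9, 0.99):
    print(p, round(amdahl_speedup(p, 8), 2))
```

Even with 8 cores, a program that is only 50% parallel speeds up by about 1.78x, while one that is 99% parallel gets close to 7.5x.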

Other parallel processing techniques include Single Instruction, Multiple Data (SIMD) extensions, which allow a single instruction to operate on multiple data elements simultaneously, beneficial for multimedia processing and scientific computing. The effective use of pipelining and various parallel processing strategies is crucial for achieving the high performance levels expected from contemporary microprocessors. As the limits of increasing clock speeds are reached, these techniques become even more vital for continued performance scaling.
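
The SIMD idea can be imitated in software with a technique called SWAR (SIMD within a register). The sketch packs four 8-bit values into one 32-bit word and adds all four lanes with a single sequence of integer operations. Hardware SIMD extensions, such as x86 SSE/AVX and ARM NEON, provide wider registers and dedicated lane-wise instructions, so this is only an analogy for how one operation can act on several data elements.

```python
# SWAR sketch: add four 8-bit lanes packed into a 32-bit word in one step.

LOW7 = 0x7F7F7F7F  # low 7 bits of every byte lane
HIGH = 0x80808080  # top bit of every byte lane


def pack(lanes):
    """Pack four values (0-255) into one word; lane 0 is the lowest byte."""
    word = 0
    for i, v in enumerate(lanes):
        word |= (v & 0xFF) << (8 * i)
    return word


def unpack(word):
    return [(word >> (8 * i)) & 0xFF for i in range(4)]


def add_lanes(a, b):
    """Lane-wise addition, each lane wrapping modulo 256."""
    # Adding only the low 7 bits of each lane keeps carries from spilling
    # into the neighbouring lane; XOR then fixes up each lane's top bit.
    low = (a & LOW7) + (b & LOW7)
    return low ^ ((a ^ b) & HIGH)


a = pack([1, 200, 255, 10])
b = pack([2, 100, 1, 20])
print(unpack(add_lanes(a, b)))  # [3, 44, 0, 30]
```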

Power Efficiency and Thermal Design Challenges

As microprocessors become more powerful and pack more transistors into smaller spaces, power efficiency and thermal design have emerged as critical challenges. Higher power consumption not only leads to increased energy costs and shorter battery life in portable devices but also generates more heat. Excessive heat can degrade performance, cause system instability, and even damage the microprocessor itself. Therefore, managing power and heat is a paramount concern for chip designers.

Designers employ various strategies to improve power efficiency. This includes using lower operating voltages, implementing power-gating techniques (turning off unused parts of the chip), dynamic voltage and frequency scaling (DVFS) which adjusts the processor's speed and power based on the workload, and designing more power-efficient microarchitectures. Architectural choices, such as the move towards multi-core designs with simpler, more efficient cores rather than extremely complex single cores, are also influenced by power considerations.
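
DVFS pays off because dynamic (switching) power in CMOS logic is commonly approximated as P = α·C·V²·f, where α is the activity factor, C the switched capacitance, V the supply voltage, and f the clock frequency. The sketch evaluates that approximation. It ignores static leakage power, and the example scaling factors are hypothetical.

```python
# Dynamic CMOS power approximation, P = alpha * C * V^2 * f (leakage ignored).

def dynamic_power_w(alpha, capacitance_f, voltage_v, freq_hz):
    """Approximate switching power in watts."""
    return alpha * capacitance_f * voltage_v ** 2 * freq_hz


def relative_power(v_scale, f_scale):
    """Power relative to a baseline after scaling voltage and frequency."""
    return v_scale ** 2 * f_scale


# Hypothetical DVFS step: lower both voltage and frequency to 80%.
print(relative_power(0.8, 0.8))  # about 0.512
```

Because voltage enters squared, lowering V together with f cuts dynamic power roughly in half in this example, while performance drops by only 20%.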

Thermal design involves developing effective ways to dissipate the heat generated by the microprocessor. This can range from simple heat sinks and fans in personal computers to more complex liquid cooling systems in high-performance servers and data centers. The physical design of the chip package and its interface with the cooling solution are also crucial. The ongoing challenge is to balance the demand for higher performance with the need for manageable power consumption and effective thermal solutions, especially as transistors continue to shrink and component densities increase.
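
A common first-order way to reason about cooling is a single thermal resistance from the chip junction to ambient air, θ_JA, in °C per watt: T_junction = T_ambient + P × θ_JA. A better heat sink, fan, or liquid cooler effectively lowers θ_JA. The sketch below uses that simplified steady-state model. Real designs split θ_JA into junction-to-case, case-to-sink, and sink-to-ambient components, and all numbers here are hypothetical.

```python
# Steady-state thermal model with one junction-to-ambient resistance.

def junction_temp_c(ambient_c, power_w, theta_ja_c_per_w):
    """Estimated chip junction temperature in degrees Celsius."""
    return ambient_c + power_w * theta_ja_c_per_w


def max_power_w(t_junction_max_c, ambient_c, theta_ja_c_per_w):
    """Largest power the cooling solution can handle without exceeding the limit."""
    return (t_junction_max_c - ambient_c) / theta_ja_c_per_w


# Hypothetical: 65 W chip, 0.5 C/W cooling, 25 C room.
print(junction_temp_c(25, 65, 0.5))  # 57.5
print(max_power_w(100, 35, 0.5))     # 130.0
```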

Applications of Modern Microprocessors

Modern microprocessors are the unseen engines driving a vast array of technologies that shape our daily lives and various industries. From the smartphones in our pockets to the complex systems managing industrial automation and the massive data centers powering the internet, microprocessors are indispensable. This section will highlight some key application areas, demonstrating the versatility and impact of these powerful chips. Understanding these applications can provide context for those considering careers in fields that utilize or develop microprocessor technology, including those looking to transition from other areas.

Consumer Electronics (Smartphones, IoT Devices)

Consumer electronics represent one of the largest and most dynamic markets for microprocessors. Smartphones, tablets, smartwatches, and a plethora of Internet of Things (IoT) devices rely heavily on sophisticated, power-efficient microprocessors. In smartphones, for instance, System-on-a-Chip (SoC) designs integrate CPU cores, GPU cores, memory controllers, wireless communication modules (like Wi-Fi and cellular), and other specialized processing units onto a single chip. These SoCs need to deliver high performance for demanding applications like gaming and video streaming while consuming minimal power to ensure long battery life.

The proliferation of IoT devices, ranging from smart home appliances (like thermostats, lighting, and security systems) to wearable fitness trackers and smart city infrastructure (like traffic management and environmental sensors), is another significant driver for microprocessor innovation. These devices often require small, low-cost, and ultra-low-power microprocessors or microcontrollers capable of performing specific tasks, collecting sensor data, and communicating wirelessly. The design challenges in this space often revolve around balancing connectivity, security, processing capability, and extreme power constraints.
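
One common way such devices meet tight power budgets is duty cycling: they sleep most of the time and wake briefly to sample sensors or transmit. The sketch estimates battery life from a time-weighted average current. All currents, the duty cycle, and the battery capacity are hypothetical. The estimate ignores battery self-discharge, temperature effects, and wake-up transients.

```python
# Duty-cycled battery-life estimate (hypothetical figures, simplified model).

def average_current_ma(active_ma, sleep_ma, duty_cycle):
    """Time-weighted average current for a device active `duty_cycle` of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma


def battery_life_hours(capacity_mah, avg_current_ma):
    return capacity_mah / avg_current_ma


# Hypothetical sensor node: 10 mA awake, 5 uA asleep, awake 1% of the time.
avg = average_current_ma(10.0, 0.005, 0.01)
hours = battery_life_hours(220.0, avg)
print(round(avg, 5), round(hours / 24))  # about 0.10495 mA, about 87 days
```

In this example the sleep current contributes only a few percent of the average, so shortening the active time matters more than small improvements in sleep current.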

The rapid evolution of consumer electronics continually pushes the boundaries of microprocessor design, demanding smaller form factors, greater integration, improved performance per watt, and enhanced security features. The growth in this sector is expected to continue, driven by consumer demand for smarter, more connected, and more capable devices. According to Fortune Business Insights, the global microprocessor market is projected to grow from USD 123.82 billion in 2025 to USD 181.35 billion by 2032. This growth is significantly fueled by the increasing demand for advanced processors in consumer electronics, particularly mobile devices and tablets.

Industrial Automation and Embedded Systems

Microprocessors and microcontrollers are fundamental to industrial automation and a wide array of embedded systems. In manufacturing, they control robotic arms, manage assembly lines, monitor production processes, and ensure quality control. Programmable Logic Controllers (PLCs), which are ruggedized computers widely used in industrial environments, rely on microprocessors to execute control logic and interface with sensors and actuators. The trend towards "Industry 4.0" or the "smart factory" further increases the demand for sophisticated processing capabilities for real-time data analysis, predictive maintenance, and networked control systems.
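
PLCs typically run their control logic in a repeating scan cycle: read a snapshot of all inputs, evaluate the control program, then update all outputs. The sketch below models a few scans of a classic start/stop "seal-in" latch for a motor. The signal names and the logic are hypothetical examples, not taken from any particular PLC or programming standard.

```python
# PLC-style scan cycle sketch: read inputs -> execute logic -> write outputs.

def scan(inputs, state):
    """One scan: compute outputs and new state from an input snapshot."""
    # Start/stop latch: the motor runs once START is pressed and keeps
    # running until STOP is pressed.
    running = (inputs["start"] or state["running"]) and not inputs["stop"]
    return {"motor": running}, {"running": running}


def run_scans(input_snapshots):
    state = {"running": False}
    motor_log = []
    for snapshot in input_snapshots:          # 1. read inputs
        outputs, state = scan(snapshot, state)  # 2. execute control logic
        motor_log.append(outputs["motor"])    # 3. write outputs
    return motor_log


snapshots = [
    {"start": False, "stop": False},
    {"start": True, "stop": False},   # operator presses START
    {"start": False, "stop": False},  # START released; motor stays on
    {"start": False, "stop": True},   # operator presses STOP
    {"start": False, "stop": False},
]
print(run_scans(snapshots))  # [False, True, True, False, False]
```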

Beyond manufacturing, embedded systems powered by microprocessors are ubiquitous. They are found in automotive systems (engine control, anti-lock braking, infotainment, and advanced driver-assistance systems, or ADAS), medical devices (patient monitoring systems, infusion pumps, diagnostic equipment), aerospace and defense applications (avionics, guidance systems, communication systems), and critical infrastructure (power grid management, transportation systems). These applications often have stringent requirements for reliability, real-time performance, and, in many cases, safety and security.

The design of microprocessors for industrial and embedded applications often prioritizes determinism (predictable timing), robustness in harsh environments (temperature extremes, vibration), long operational lifecycles, and specific I/O capabilities tailored to interfacing with industrial sensors and machinery. As industrial processes become more complex and interconnected, the need for powerful and specialized embedded processing solutions continues to grow.

High-Performance Computing and Data Centers

High-Performance Computing (HPC) and data centers represent another major domain where microprocessors play a critical role. HPC systems, including supercomputers, are used for tackling some of the most computationally demanding problems in science, engineering, and finance. This includes tasks like climate modeling, drug discovery, materials science research, financial risk analysis, and complex simulations. These systems rely on massively parallel architectures, often employing thousands or even millions of interconnected microprocessor cores to achieve petaflop and exaflop levels of performance.

Data centers, the backbone of the internet and cloud computing, house vast numbers of servers, each powered by one or more microprocessors. These servers run a wide range of applications, from web hosting and e-commerce platforms to large-scale data analytics, artificial intelligence model training, and content delivery networks. The performance, power efficiency, and reliability of the microprocessors used in data centers are crucial for the overall efficiency and cost-effectiveness of these massive facilities. According to Infosys Knowledge Institute, the data center market, particularly for GPUs and other high-performance chips, is expected to be a primary growth driver for the semiconductor industry.

In both HPC and data center environments, there is a continuous drive for higher computational density, greater energy efficiency (to reduce operational costs and environmental impact), and improved interconnect technologies to facilitate rapid data movement between processors and memory. Specialized processors, such as GPUs and custom AI accelerators, are increasingly being deployed alongside traditional CPUs to handle specific workloads more efficiently. The evolution of microprocessors for these demanding applications is a key area of ongoing research and development. The semiconductor industry is projected to reach approximately $697 billion in sales in 2025, largely driven by strong demand in data centers and AI technologies.

Emerging Applications in AI/ML Edge Computing

A significant and rapidly growing area for modern microprocessors is Artificial Intelligence (AI) and Machine Learning (ML) at the "edge." Edge computing refers to processing data closer to where it is generated, rather than sending it to a centralized cloud or data center. This is crucial for applications that require low latency (fast response times), operate in environments with limited or unreliable network connectivity, or have strict data privacy and security requirements.

Microprocessors designed for AI/ML edge computing are optimized to efficiently run inference tasks (using trained AI models to make predictions or decisions) on devices like smart cameras, autonomous drones, industrial robots, medical diagnostic tools, and even advanced automotive systems. These chips often incorporate specialized hardware accelerators, such as Neural Processing Units (NPUs) or Tensor Processing Units (TPUs), designed to speed up the mathematical operations common in AI algorithms. The trend is towards integrating these AI capabilities directly into the microprocessors that power edge devices.
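
Much of neural-network inference comes down to multiply-accumulate (MAC) operations, and edge accelerators commonly run them on low-precision integers such as 8-bit values to save power and memory. The sketch shows symmetric int8 quantization followed by an integer dot product. The function names and scale values are illustrative assumptions. Real NPUs differ in quantization schemes, accumulator widths, and rounding behavior.

```python
# Quantized int8 dot product, the core MAC pattern accelerated by NPUs.

def quantize(values, scale):
    """Map floats to int8 codes with a symmetric scale, clamped to [-127, 127]."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def int8_dot(qa, qb):
    """Integer multiply-accumulate; hardware typically uses a wide (e.g. 32-bit) accumulator."""
    acc = 0
    for x, y in zip(qa, qb):
        acc += x * y  # one MAC per element pair
    return acc


def approx_dot(a, b, scale_a, scale_b):
    """Dequantize the integer result back to a real-valued estimate."""
    return int8_dot(quantize(a, scale_a), quantize(b, scale_b)) * scale_a * scale_b


a = [0.5, -1.0, 0.25]
b = [1.0, 0.5, -2.0]
print(approx_dot(a, b, 0.25, 0.5))  # -0.5, matching the exact float result here
```

With these power-of-two scales the values quantize exactly. In general, quantization introduces a small error that models are trained or calibrated to tolerate.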

The demand for AI at the edge is driven by the desire for more intelligent, responsive, and autonomous systems. For example, a smart security camera with on-board AI processing can analyze video locally to detect specific events, reducing the need to stream large amounts of video data to the cloud. In autonomous vehicles, edge AI processors are essential for real-time perception, decision-making, and control. As AI models become more sophisticated and the range of edge applications expands, power-efficient, high-performance microprocessors with integrated AI acceleration will be a key enabler. Demand for AI-focused semiconductors is growing rapidly, with a strong push toward specialized processors for AI applications.

Formal Education Pathways

For those aspiring to a career in microprocessor design, research, or related fields, a strong formal education is typically the starting point. This section outlines the common academic routes, from high school preparation to undergraduate and graduate studies. Understanding these pathways can help students and career explorers plan their educational journey effectively. While the path can be rigorous, a solid academic foundation opens doors to some of the most innovative and impactful roles in technology.

High School Prerequisites (Math/Physics/CS)

A strong foundation in mathematics, physics, and computer science during high school is highly beneficial for anyone considering a future in microprocessor engineering or related fields. Advanced math courses, including algebra, trigonometry, calculus, and discrete mathematics, develop the analytical and problem-solving skills essential for understanding complex engineering concepts. Physics, particularly topics related to electricity, magnetism, and semiconductor physics, provides a fundamental understanding of the physical principles underlying electronic devices.

Introductory computer science courses, especially those that cover programming fundamentals, data structures, and algorithms, are also crucial. Familiarity with programming helps in understanding how software interacts with hardware and is a prerequisite for more advanced topics like computer architecture and digital logic design. Exposure to basic electronics or robotics clubs and competitions can also provide valuable hands-on experience and spark further interest in the field.

While these subjects provide a strong base, cultivating curiosity, a persistent attitude towards problem-solving, and good study habits are equally important. The journey into microprocessor engineering is challenging, but a solid high school preparation can make the transition to university-level studies smoother and more successful. Exploring resources like K-12 subject guides on OpenCourser can also supplement high school learning.

Undergraduate Electrical/Computer Engineering Curricula

A bachelor's degree in Electrical Engineering (EE) or Computer Engineering (CE) is the most common educational pathway into the field of microprocessors. Both disciplines provide the core knowledge required, though they may have slightly different emphases. EE programs often focus more on the physical aspects of electronics, semiconductor devices, circuit design (both analog and digital), and signal processing. CE programs typically bridge the gap between hardware and software, covering topics like digital logic design, computer architecture, operating systems, and embedded systems, in addition to many core EE subjects.

Typical undergraduate curricula will include foundational courses in calculus, differential equations, linear algebra, physics, and chemistry. Core engineering courses will cover circuit theory, digital logic design, electronics, electromagnetics, signals and systems, and computer programming. Specialized courses relevant to microprocessors often include computer organization and architecture, microprocessor systems, embedded systems design, VLSI (Very Large Scale Integration) design, and semiconductor device physics. Many programs also include laboratory components where students gain hands-on experience designing, building, and testing circuits and systems.

Students should look for programs accredited by recognized engineering accreditation bodies. Furthermore, seeking internships or co-op opportunities during undergraduate studies can provide invaluable practical experience and networking opportunities within the industry. Browsing engineering courses on OpenCourser can give a good overview of the topics covered in these degree programs.

Graduate Research Specializations

For those interested in pushing the boundaries of microprocessor technology through research and development, or in pursuing highly specialized design roles, a graduate degree (Master's or Ph.D.) is often necessary. Graduate programs allow for in-depth specialization in specific areas of microprocessor design and computer architecture. These specializations can include advanced computer architecture, VLSI design and testing, low-power design, high-performance computing architectures, reconfigurable computing, embedded systems, hardware security, and emerging computing paradigms like neuromorphic or quantum computing.

Master's programs typically involve advanced coursework and often a research project or thesis, providing a deeper understanding and specialized skills. Ph.D. programs are heavily research-focused, culminating in a dissertation that contributes original knowledge to the field. Graduate research often involves working closely with faculty members on cutting-edge projects, publishing research papers, and presenting at academic conferences. This environment fosters critical thinking, advanced problem-solving skills, and the ability to conduct independent research.

Choosing a graduate program often involves identifying universities and faculty members whose research aligns with one's interests. Areas of active research in microprocessors include developing novel architectures to overcome the limitations of Moore's Law, designing specialized hardware for AI/ML, improving energy efficiency, enhancing security at the hardware level, and exploring new materials and device technologies. Advanced degrees are particularly crucial for R&D positions in industry research labs and for academic careers.

Laboratory and Prototyping Skill Development

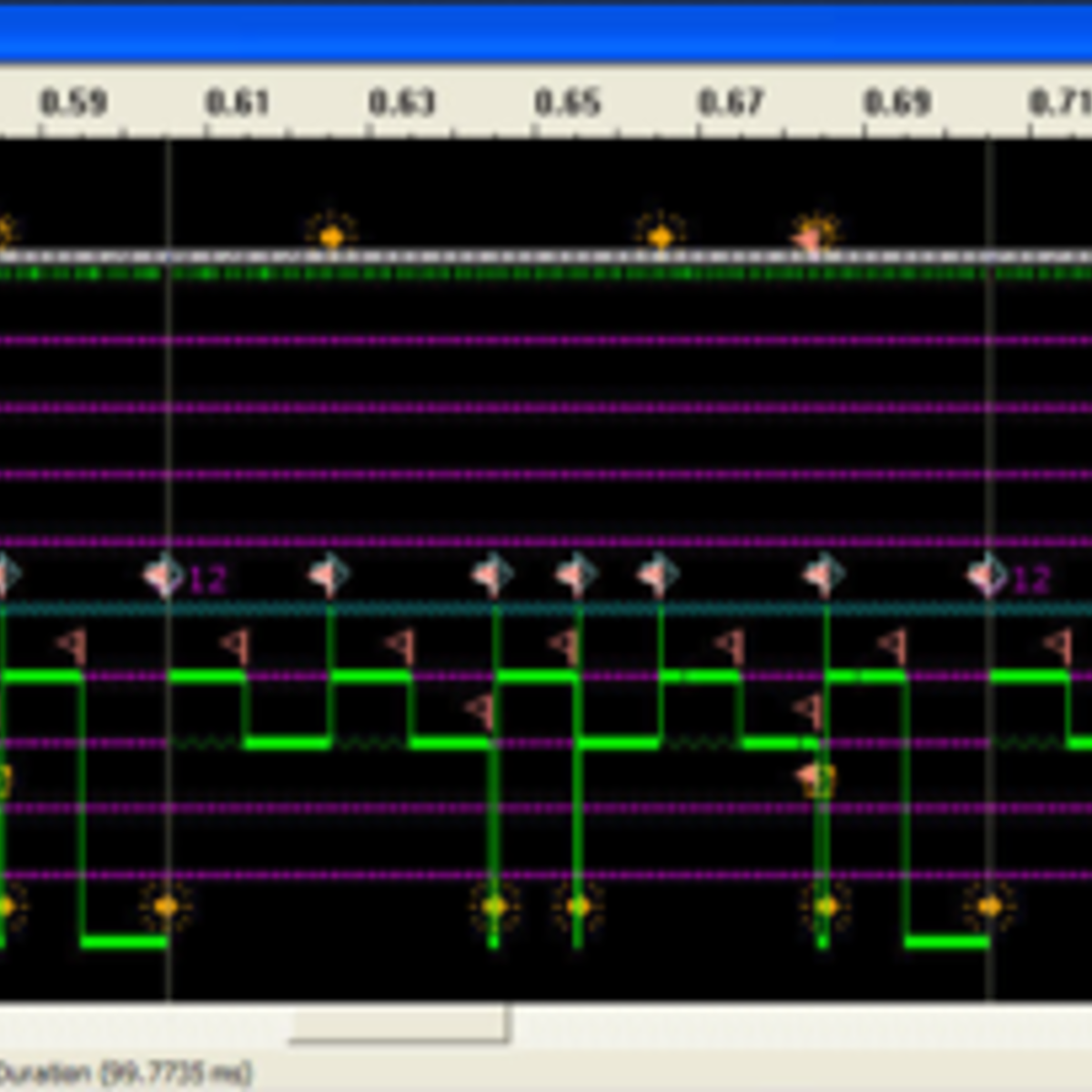

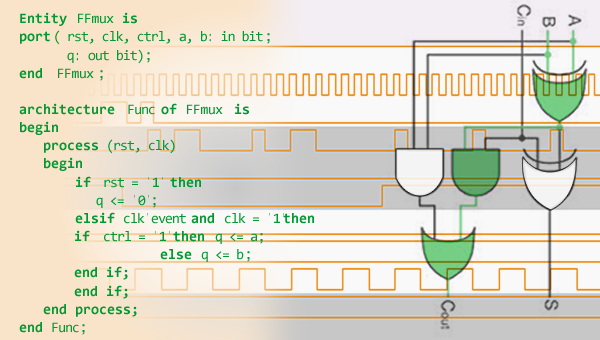

Beyond theoretical knowledge, practical laboratory and prototyping skills are essential for anyone working with microprocessors, whether in design, testing, or application development. Formal education pathways typically include significant hands-on lab work where students learn to use electronic test and measurement equipment, such as oscilloscopes, logic analyzers, and signal generators. They also gain experience with circuit simulation software, PCB (Printed Circuit Board) design tools, and hardware description languages (HDLs) like Verilog or VHDL, which are used to describe and design digital circuits.

Prototyping skills involve taking a design from concept to a working physical implementation. This can involve breadboarding circuits, soldering components, programming microcontrollers or FPGAs (Field-Programmable Gate Arrays), and debugging both hardware and software. Experience with development kits, evaluation boards, and rapid prototyping platforms (like Arduino or Raspberry Pi for introductory purposes, and more advanced FPGA boards for serious digital design) is highly valuable. These tools allow engineers to quickly test ideas, verify designs, and iterate on their solutions.

Many university courses, particularly in computer engineering and electrical engineering, include design projects that require students to apply their knowledge to build and test a microprocessor-based system. These projects provide crucial experience in the entire design cycle, from specification and component selection to implementation, testing, and documentation. Developing strong lab and prototyping skills not only reinforces theoretical concepts but also prepares students for the practical challenges of real-world engineering.

Self-Directed Learning Strategies

While formal education provides a structured path, self-directed learning is increasingly viable and valuable for those looking to understand microprocessors, whether for career change, skill enhancement, or personal interest. The availability of online resources, open-source hardware, and powerful simulation tools has democratized access to knowledge and hands-on experience in this field. This section explores effective strategies for independent learners, emphasizing a practical, project-based approach.

Online courses offer a flexible and accessible way to build a strong foundation in microprocessors and related topics. Platforms like OpenCourser provide a vast catalog of courses from various institutions, covering everything from basic electronics and digital logic to advanced computer architecture and embedded systems programming. Learners can find courses tailored to different skill levels, allowing them to start with fundamentals and progressively tackle more complex subjects. OpenCourser's features, such as course syllabi, reviews, and the "Save to List" function, can help learners curate a personalized learning path. For those looking to make the most of these resources, the OpenCourser Learner's Guide offers valuable tips on creating a structured curriculum and staying disciplined with self-study.

Open-Source Hardware Platforms for Experimentation

Open-source hardware platforms have revolutionized the way individuals can learn about and experiment with microprocessors and electronics. Platforms like Arduino and Raspberry Pi, while often associated with hobbyists, provide excellent entry points for understanding basic microcontroller programming, interfacing with sensors and actuators, and building simple embedded systems. Their large communities and abundant online tutorials make them accessible even for beginners.

For those interested in delving deeper into digital logic design and processor architecture, FPGA (Field-Programmable Gate Array) development boards are powerful tools. FPGAs are integrated circuits that can be configured by the user after manufacturing. This means you can design your own digital circuits, including simple microprocessors, using Hardware Description Languages (HDLs) like Verilog or VHDL, and then implement them on the FPGA. Many affordable FPGA boards are available, often accompanied by open-source toolchains and educational resources. Working with FPGAs provides invaluable hands-on experience in designing and testing digital hardware.
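
To make "configured after manufacturing" concrete: the basic logic element in most FPGAs is a small look-up table (LUT), and the configuration loads that LUT's truth table. The Python sketch below models a hypothetical 4-input LUT. The `lut_init` and `lut_eval` names and the bit ordering (input `a` as the least significant index bit) are illustrative assumptions, not any vendor's format.

```python
from typing import Callable

def lut_init(func: Callable[[int, int, int, int], int]) -> int:
    """Build the 16-bit truth table ("INIT" value) for a 4-input LUT.

    Bit i of the result holds func(a, b, c, d), where a is bit 0 of i,
    b is bit 1, c is bit 2 and d is bit 3 (an assumed ordering).
    """
    init = 0
    for i in range(16):
        a, b, c, d = i & 1, (i >> 1) & 1, (i >> 2) & 1, (i >> 3) & 1
        if func(a, b, c, d) & 1:
            init |= 1 << i
    return init

def lut_eval(init: int, a: int, b: int, c: int, d: int) -> int:
    """Evaluate the LUT: the four inputs simply select one stored bit."""
    index = a | (b << 1) | (c << 2) | (d << 3)
    return (init >> index) & 1

# "Reconfiguring" the same hardware means loading a different INIT value.
and4 = lut_init(lambda a, b, c, d: a & b & c & d)
xor4 = lut_init(lambda a, b, c, d: a ^ b ^ c ^ d)

print(hex(and4))                   # prints 0x8000: only index 15 is set
print(lut_eval(xor4, 1, 0, 1, 1))  # prints 1: three high inputs give odd parity
```

The same circuit computes AND or XOR depending only on the stored bits. An FPGA scales this idea up to thousands of LUTs plus programmable interconnect between them.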

The open-source hardware movement also extends to microprocessor ISAs themselves, with RISC-V being a prominent example. RISC-V is an open-standard instruction set architecture. Its specification is freely available, so anyone can design, manufacture, and sell RISC-V chips and software without paying ISA licensing fees, although individual RISC-V core designs may be either open-source or proprietary. This openness has fostered a vibrant ecosystem of open-source processor cores, development tools, and educational materials, making it an exciting area for self-learners to explore the frontiers of processor design.
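
Because the RISC-V specification is public, self-learners can write tools directly against it. As a small illustration, the sketch below decodes the fields of a 32-bit RISC-V I-type instruction, the format used by `addi`. It follows the base-ISA layout: imm[11:0] in bits 31-20, rs1 in bits 19-15, funct3 in bits 14-12, rd in bits 11-7, and the opcode in bits 6-0. The function names are hypothetical, and only `addi` is recognized by name.

```python
def sign_extend(value: int, bits: int) -> int:
    """Interpret the low `bits` bits of value as a two's-complement number."""
    sign_bit = 1 << (bits - 1)
    return (value & (sign_bit - 1)) - (value & sign_bit)

def decode_itype(word: int) -> dict:
    """Split a 32-bit RISC-V I-type instruction into its fields."""
    fields = {
        "opcode": word & 0x7F,                # bits 6..0
        "rd": (word >> 7) & 0x1F,             # bits 11..7
        "funct3": (word >> 12) & 0x7,         # bits 14..12
        "rs1": (word >> 15) & 0x1F,           # bits 19..15
        "imm": sign_extend(word >> 20, 12),   # bits 31..20, sign-extended
    }
    # In RV32I, opcode 0b0010011 (OP-IMM) with funct3 0b000 is ADDI.
    is_addi = fields["opcode"] == 0x13 and fields["funct3"] == 0
    fields["mnemonic"] = "addi" if is_addi else "unknown"
    return fields

print(decode_itype(0x00500093))          # encodes addi x1, x0, 5
print(decode_itype(0xFFF00093)["imm"])   # prints -1: immediate 0xFFF sign-extends
```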

Simulation Tools and FPGA Development Boards

Simulation tools are indispensable for both learning and professional microprocessor design. Software simulators allow you to model and test digital circuits and even entire processor architectures before committing them to physical hardware. This is crucial for debugging designs, verifying functionality, and exploring different architectural trade-offs without the cost and time involved in hardware fabrication. Many universities and online courses utilize circuit simulators (like Logisim or SPICE-based tools for analog/mixed-signal aspects) and HDL simulators (like ModelSim, Verilator, or GHDL for digital designs).
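
The core idea of simulation can be shown without any EDA tool. The sketch below models a 4-bit ripple-carry adder at the gate level in Python. It builds the adder from full adders made of XOR, AND, and OR operations, then checks it exhaustively against ordinary integer addition. This is the same "design versus reference" comparison that an HDL simulator run automates at much larger scale. The width and function names are illustrative choices.

```python
def full_adder(a: int, b: int, cin: int) -> tuple[int, int]:
    """One-bit full adder expressed with basic gates; returns (sum, carry_out)."""
    s1 = a ^ b                     # first XOR gate
    total = s1 ^ cin               # second XOR gate produces the sum bit
    cout = (a & b) | (s1 & cin)    # two AND gates feeding an OR gate
    return total, cout

def ripple_carry_add(x: int, y: int, width: int = 4) -> tuple[int, int]:
    """Chain `width` full adders; the carry ripples from bit 0 upward."""
    carry = 0
    result = 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result, carry

# Exhaustive check: two 4-bit inputs give only 16 x 16 = 256 cases.
mismatches = []
for x in range(16):
    for y in range(16):
        s, c = ripple_carry_add(x, y)
        if (c << 4) | s != x + y:
            mismatches.append((x, y))
print("mismatches:", mismatches)  # prints "mismatches: []"
```

Exhaustive checking stops being practical as widths grow (two 64-bit operands give 2^128 cases), which is why real verification flows rely on random stimulus and formal methods.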

As mentioned earlier, FPGA development boards provide a physical platform for implementing and testing digital designs. Learning to use the development environments provided by FPGA vendors (such as AMD's Vivado, originally from Xilinx, or Intel's Quartus) is a valuable skill. These environments typically include tools for synthesis (translating your HDL code into a netlist of the FPGA's logic elements), place and route (mapping that netlist onto the FPGA's internal resources and connecting them), bitstream generation (producing the configuration file loaded onto the device), and debugging. Many FPGA boards also come with various peripherals like LEDs, switches, memory, and I/O connectors, allowing you to build and test complete systems.

Combining simulation with FPGA prototyping creates a powerful learning loop. You can design and simulate a circuit or processor, then implement it on an FPGA to see it run in real hardware, interact with physical inputs and outputs, and identify any discrepancies between simulation and reality. This hands-on experience is invaluable for developing a deep understanding of digital design principles and microprocessor operation.

Project-Based Learning Approaches

A project-based learning approach is highly effective for self-directed study in microprocessors. Instead of passively consuming information, you actively apply what you learn by building tangible projects. This could start with simple projects like programming a microcontroller to blink an LED or read sensor data, and gradually progress to more complex endeavors such as designing a custom peripheral, implementing a simple ALU on an FPGA, or even attempting to design and simulate a basic microprocessor core.
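
Before touching an HDL, it can help to write a behavioral model as a first step toward an ALU project like the one mentioned above. The following Python sketch models a hypothetical 8-bit ALU with five operations and zero (Z), negative (N), and carry (C) flags. The opcode names and flag conventions are assumptions for illustration. In particular, this model sets C on subtraction when a borrow occurs, and real architectures differ on that convention.

```python
from enum import Enum

class Op(Enum):
    ADD = 0
    SUB = 1
    AND = 2
    OR = 3
    XOR = 4

MASK = 0xFF  # 8-bit datapath

def alu(op: Op, a: int, b: int) -> tuple[int, dict[str, int]]:
    """Return the 8-bit result and the Z (zero), N (negative), C (carry/borrow) flags."""
    carry = 0
    if op is Op.ADD:
        raw = a + b
        carry = 1 if raw > MASK else 0   # unsigned carry out of bit 7
    elif op is Op.SUB:
        raw = a - b
        carry = 1 if a < b else 0        # borrow (convention varies by architecture)
    elif op is Op.AND:
        raw = a & b
    elif op is Op.OR:
        raw = a | b
    elif op is Op.XOR:
        raw = a ^ b
    else:
        raise ValueError(f"unsupported op {op}")
    result = raw & MASK                  # wrap to 8 bits (two's complement)
    flags = {
        "Z": 1 if result == 0 else 0,
        "N": (result >> 7) & 1,          # sign bit of the 8-bit result
        "C": carry,
    }
    return result, flags

print(alu(Op.ADD, 0xF0, 0x20))  # prints (16, {'Z': 0, 'N': 0, 'C': 1})
print(alu(Op.SUB, 0x05, 0x05))  # prints (0, {'Z': 1, 'N': 0, 'C': 0})
```

A model like this can later serve as the reference against which an HDL implementation of the same ALU is checked in simulation.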

Setting clear project goals, breaking down complex tasks into manageable steps, and iteratively developing and testing your design are key aspects of this approach. Online communities, forums, and project-sharing websites (like GitHub for code, and sites dedicated to electronics projects) can be great sources of inspiration, guidance, and collaboration. Documenting your projects, even if they are for personal learning, is also a good practice, as it helps solidify your understanding and creates a portfolio of your work.

Project-based learning not only reinforces theoretical knowledge but also helps develop critical problem-solving skills, debugging techniques, and an understanding of the practical challenges involved in hardware and software development. It's a rewarding way to learn that keeps you motivated and provides a sense of accomplishment as you see your creations come to life.

Combining Software Skills with Hardware Knowledge

For those looking to work with microprocessors, particularly in areas like embedded systems, firmware development, or even hardware verification, a strong combination of software skills and hardware knowledge is essential. Understanding how software interacts with the underlying hardware allows for more efficient and effective system design and debugging. This includes knowing assembly language for the target microprocessor, as well as higher-level languages commonly used in embedded development, such as C or C++.

Familiarity with concepts like memory-mapped I/O, interrupt handling, device drivers, and real-time operating systems (RTOS) is crucial when developing software that runs directly on microprocessors or microcontrollers. Conversely, hardware designers benefit from understanding software development principles, as it helps them create processors that are easier to program and better suited to the needs of software developers.
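
Memory-mapped I/O means that device registers occupy ordinary addresses. A load or store to one of those addresses therefore reaches a peripheral instead of RAM. The Python sketch below models that address decoding with a hypothetical UART-like transmitter. The base address, register offsets, and status bit are invented for illustration and do not correspond to any real chip. On actual hardware this would typically be C code accessing the registers through a `volatile` pointer.

```python
from typing import Optional

class UartModel:
    """Hypothetical transmit-only peripheral with two registers."""
    DATA = 0x0    # write: byte to transmit (assumed offset)
    STATUS = 0x4  # read: bit 0 = ready to accept a byte (assumed layout)

    def __init__(self) -> None:
        self.sent = bytearray()

    def read(self, offset: int) -> int:
        # This simple model is always ready; DATA reads back as 0.
        return 0x1 if offset == self.STATUS else 0

    def write(self, offset: int, value: int) -> None:
        if offset == self.DATA:
            self.sent.append(value & 0xFF)

class Bus:
    """Routes each load/store to RAM or to a memory-mapped device by address."""
    def __init__(self, ram_size: int) -> None:
        self.ram = bytearray(ram_size)
        self.devices: list[tuple[int, int, UartModel]] = []

    def map_device(self, base: int, size: int, dev: UartModel) -> None:
        self.devices.append((base, size, dev))

    def _decode(self, addr: int) -> tuple[Optional[UartModel], int]:
        for base, size, dev in self.devices:
            if base <= addr < base + size:
                return dev, addr - base   # device hit: pass the register offset
        return None, addr                 # no device claims it: plain RAM

    def load(self, addr: int) -> int:
        dev, off = self._decode(addr)
        return dev.read(off) if dev is not None else self.ram[off]

    def store(self, addr: int, value: int) -> None:
        dev, off = self._decode(addr)
        if dev is not None:
            dev.write(off, value)
        else:
            self.ram[off] = value & 0xFF

UART_BASE = 0x1000_0000  # hypothetical base address
bus = Bus(ram_size=256)
uart = UartModel()
bus.map_device(UART_BASE, 0x100, uart)

# Driver-style code: poll STATUS, then write each byte to DATA.
for ch in b"hi":
    while not (bus.load(UART_BASE + UartModel.STATUS) & 0x1):
        pass
    bus.store(UART_BASE + UartModel.DATA, ch)

bus.store(0x10, 42)                       # an ordinary RAM access
print(bytes(uart.sent), bus.load(0x10))   # prints b'hi' 42
```

The driver loop above uses polling. With interrupt handling, the device would instead signal the processor when it is ready, and an interrupt handler would perform the write.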

Self-learners can focus on projects that bridge the gap between hardware and software. For example, writing firmware to control a custom peripheral you've designed on an FPGA, or developing a device driver for a sensor connected to a microcontroller. This interdisciplinary approach not only broadens your skillset but also makes you a more versatile and valuable engineer in a field where hardware and software are increasingly intertwined.

Career Progression in Microprocessor Engineering

A career in microprocessor engineering offers a dynamic and intellectually stimulating path with opportunities for growth and specialization. The field is constantly evolving, driven by technological advancements and the insatiable demand for faster, more efficient, and more capable processors. This section explores typical career trajectories, from entry-level positions to senior roles and leadership opportunities, providing insights for university students and early-career professionals looking to navigate this exciting domain. It's a field that demands continuous learning, but the rewards can be significant, both professionally and in terms of contributing to cutting-edge technology.

The semiconductor industry, which encompasses microprocessor engineering, is experiencing robust growth. According to a report by Deloitte, the semiconductor industry had a strong 2024, with expected sales of US$627 billion, and 2025 is projected to be even better, with sales potentially reaching US$697 billion. This growth is a positive indicator for job prospects in the field. However, the industry also faces talent challenges, with a need to add a significant number of skilled workers by 2030.

Entry-Level Roles: Verification Engineer, Layout Designer

Graduates with a bachelor's or master's degree in electrical or computer engineering often start their careers in roles such as Design Verification Engineer or Physical Design/Layout Engineer. Design Verification (DV) Engineers are responsible for ensuring that a microprocessor design is functionally correct and meets its specifications before it is manufactured. This involves creating complex test environments, writing test cases, running simulations, and debugging any issues found in the design. Strong skills in hardware description languages (like Verilog or SystemVerilog), scripting languages (like Python or Perl), and an understanding of computer architecture are crucial for DV roles.
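
The DV workflow described above has three parts: stimulus, checking, and a measure of what was exercised. It is usually built in SystemVerilog, often with UVM, but its structure can be sketched in a few lines of Python. In this hypothetical example, the "design" is a circular-buffer FIFO model and `collections.deque` serves as the reference model. A scoreboard-style assertion compares the two, and two coverage bins record whether the full and empty corner cases were reached. The class names, FIFO depth, and operation mix are assumptions.

```python
import random
from collections import deque
from typing import Optional

class FifoDut:
    """Design under test: a fixed-depth FIFO built from a circular buffer."""
    def __init__(self, depth: int) -> None:
        self.depth = depth
        self.mem = [0] * depth
        self.rd = 0
        self.wr = 0
        self.count = 0

    def push(self, value: int) -> bool:
        if self.count == self.depth:
            return False                    # full: write is rejected
        self.mem[self.wr] = value
        self.wr = (self.wr + 1) % self.depth
        self.count += 1
        return True

    def pop(self) -> Optional[int]:
        if self.count == 0:
            return None                     # empty: nothing to read
        value = self.mem[self.rd]
        self.rd = (self.rd + 1) % self.depth
        self.count -= 1
        return value

def run_test(seed: int, n_ops: int = 2000, depth: int = 4) -> dict[str, bool]:
    """Drive random pushes/pops, check against a reference model, report coverage."""
    rng = random.Random(seed)               # seeded so a failure can be replayed
    dut = FifoDut(depth)
    ref: deque = deque()
    coverage = {"hit_full": False, "hit_empty": False}
    for _ in range(n_ops):
        if rng.random() < 0.5:              # stimulus: push a random byte
            value = rng.randrange(256)
            expected_ok = len(ref) < depth
            assert dut.push(value) == expected_ok, "push acceptance mismatch"
            if expected_ok:
                ref.append(value)
        else:                               # stimulus: pop
            expected = ref.popleft() if ref else None
            got = dut.pop()
            assert got == expected, f"pop mismatch: got {got}, expected {expected}"
        # Functional coverage: did the test reach the corner cases?
        coverage["hit_full"] |= len(ref) == depth
        coverage["hit_empty"] |= len(ref) == 0
    return coverage

print(run_test(seed=1))
```

Suppose the full check in `push` were off by one. The push-acceptance assertion would then fire, and the fixed seed would let the failing sequence be replayed while debugging.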

Physical Design or Layout Engineers focus on translating the logical design of a microprocessor into a physical layout of transistors and interconnects on the silicon chip. This involves tasks like floorplanning (arranging major blocks on the chip), placement (positioning individual cells), routing (connecting the cells with wires), and ensuring that the design meets timing, power, and manufacturability requirements. Expertise in EDA (Electronic Design Automation) tools, semiconductor device physics, and an understanding of fabrication processes are important for these roles.
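
Placement tools need a fast way to estimate how much wire a candidate placement will require. A widely used estimate is half-perimeter wirelength (HPWL): for each net, take the bounding box of the pins it connects and add the box's width and height. The sketch below computes HPWL for a toy placement. The cell names, coordinates, and nets are invented for illustration.

```python
Point = tuple[float, float]

def net_hpwl(pins: list[Point]) -> float:
    """Half-perimeter of the bounding box around one net's pins."""
    xs = [x for x, _ in pins]
    ys = [y for _, y in pins]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))

def total_hpwl(placement: dict[str, Point], nets: list[list[str]]) -> float:
    """Sum HPWL over all nets, looking up each cell's placed location."""
    return sum(net_hpwl([placement[cell] for cell in net]) for net in nets)

# Hypothetical cells placed on a grid (arbitrary units).
placement = {"alu": (0, 0), "regfile": (4, 0), "decoder": (4, 3), "lsu": (10, 3)}
nets = [
    ["alu", "regfile"],             # 2-pin net: 4 + 0 = 4
    ["regfile", "decoder", "lsu"],  # 3-pin net: (10 - 4) + (3 - 0) = 9
]
print(total_hpwl(placement, nets))  # prints 13

# Moving the load/store unit closer shrinks the estimate.
placement["lsu"] = (6, 3)
print(total_hpwl(placement, nets))  # prints 9
```

HPWL is only an estimate. It equals the minimum rectilinear wirelength for nets with two or three pins and underestimates larger nets, but it is cheap enough to evaluate millions of times during placement.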

These entry-level positions provide a fantastic opportunity to learn the intricacies of the microprocessor development lifecycle, work with advanced tools and methodologies, and contribute to complex chip projects. They serve as a solid foundation for future specialization and career advancement. The average annual pay for a Microprocessor Engineer in the United States is around $167,438, though this varies with experience, location, and specific role. Salaries for CPU Design Engineers are similarly competitive, although reported averages differ from one data source to another.

Mid-Career Paths: Architecture Specialist, Power Optimization

With several years of experience, engineers in the microprocessor field can move into more specialized and senior roles. One common path is to become a Microprocessor Architect or a Computer Architecture Specialist. Architects are involved in the high-level design of new processors or significant enhancements to existing ones. They define (or extend) the instruction set, the microarchitecture (how the instructions are implemented), memory hierarchy, interconnect strategies, and overall system performance targets. This role requires a deep understanding of computer architecture principles, performance analysis, and emerging technologies.
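
Architects often reason about performance targets with the classic "iron law" of processor performance: execution time = instruction count × cycles per instruction (CPI) × clock period. The short sketch below compares two hypothetical design points. All numbers are invented to show the trade-off and are not measurements of real chips.

```python
def exec_time_s(instructions: float, cpi: float, clock_hz: float) -> float:
    """Iron law: time = instruction count * CPI / clock frequency."""
    return instructions * cpi / clock_hz

N = 1e9  # instructions in a hypothetical workload

# Design A: higher clock, but a deeper pipeline with more stall cycles.
t_a = exec_time_s(N, cpi=1.5, clock_hz=4.0e9)
# Design B: lower clock, but a wider core achieving lower CPI.
t_b = exec_time_s(N, cpi=1.0, clock_hz=3.0e9)

print(f"A: {t_a:.3f} s, B: {t_b:.3f} s")  # prints A: 0.375 s, B: 0.333 s
```

Despite its lower clock frequency, design B finishes first. This is why architects weigh all three factors together rather than optimizing clock speed alone.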

Another critical mid-career specialization is Power Optimization Engineer. As power consumption and thermal management have become major constraints in processor design, engineers who specialize in developing low-power architectures, power management techniques, and energy-efficient circuits are in high demand. This involves analyzing power usage, identifying hotspots, and implementing design changes to reduce power consumption without unduly sacrificing performance. This specialization is vital across all segments, from mobile devices to high-performance data centers.
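
Much of the leverage in power optimization follows from the standard first-order model of dynamic (switching) power in CMOS logic: P = α·C·V²·f. Here α is the activity factor, C the switched capacitance, V the supply voltage, and f the clock frequency. The sketch below evaluates the model for made-up parameter values to show why lowering voltage is so effective. Static (leakage) power is ignored here.

```python
def dynamic_power_w(alpha: float, cap_f: float, vdd_v: float, freq_hz: float) -> float:
    """First-order CMOS switching power: alpha * C * V^2 * f, in watts."""
    return alpha * cap_f * vdd_v ** 2 * freq_hz

# Hypothetical block: 10% activity, 1 nF switched capacitance, 1.0 V, 3 GHz.
base = dynamic_power_w(0.1, 1e-9, 1.0, 3e9)
# Dynamic voltage and frequency scaling: lower both V and f by 20%.
scaled = dynamic_power_w(0.1, 1e-9, 0.8, 2.4e9)

print(f"{base:.3f} W -> {scaled:.3f} W ({scaled / base:.1%} of original)")
```

Voltage enters squared, and frequency usually has to fall along with it. Lowering both by 20% therefore cuts dynamic power to 0.8³ ≈ 51% of the original, while a compute-bound workload slows by only about 20%.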

Other mid-career paths include becoming a lead verification engineer, a senior physical design engineer focusing on complex blocks or methodologies, or specializing in areas like performance modeling and analysis, security hardware design, or I/O subsystem design. These roles typically require a proven track record of technical expertise, problem-solving skills, and often the ability to mentor junior engineers.

Research and Development Leadership Positions

For individuals with advanced degrees (often Ph.D.s) and extensive experience, leadership positions in research and development (R&D) become attainable. These roles involve guiding research teams, setting strategic R&D directions, and spearheading the development of next-generation microprocessor technologies. R&D leaders might work in large semiconductor companies, research institutions, or start-ups focused on novel computing paradigms.

Responsibilities can include identifying future technological trends and challenges, proposing innovative architectural solutions, managing complex research projects, securing funding (in academic or institutional settings), and overseeing the transition of research concepts into product development. These positions require not only deep technical expertise but also strong leadership, communication, and strategic thinking skills. They are at the forefront of innovation, exploring areas like new device technologies, advanced packaging, AI-specific hardware, and post-Moore's Law computing.

The path to R&D leadership often involves a significant period of contribution as a senior researcher or principal engineer, a strong publication record (in academia or industry research labs), and a reputation for technical vision and innovation. These roles are highly competitive but offer the opportunity to shape the future of computing technology. A KPMG report indicates that 72% of semiconductor industry respondents predict an increase in R&D spending, highlighting the ongoing commitment to innovation.

Cross-Industry Mobility Opportunities

The skills and knowledge gained in microprocessor engineering are highly transferable and can open doors to opportunities in a variety of related industries. Engineers with expertise in computer architecture, digital design, embedded systems, and low-power design are sought after in sectors beyond traditional semiconductor companies.

For example, automotive companies are increasingly hiring hardware engineers to design the complex electronic control units (ECUs) and SoCs for advanced driver-assistance systems (ADAS), infotainment, and autonomous driving. The aerospace and defense industries require engineers for avionics, communication systems, and secure computing platforms. Consumer electronics companies that design their own chips (like Apple or Google) also offer many opportunities. Furthermore, the burgeoning field of IoT and the increasing integration of AI into various devices create demand for engineers who can design efficient hardware for these applications.

Additionally, skills in verification, testing, and familiarity with complex EDA tools can be valuable in software companies that develop these tools, or in system companies that integrate microprocessors into larger products. The ability to understand the hardware-software interface is a key asset that can facilitate mobility across different segments of the technology industry. This cross-industry demand contributes to a generally positive job outlook for those with microprocessor-related expertise.

Industry Trends Shaping Microprocessor Development

The microprocessor industry is in a constant state of flux, driven by relentless technological innovation, evolving market demands, and global economic factors. Understanding these trends is crucial for anyone involved in the field, from engineers and researchers to investors and policymakers. This section will explore some of the key trends shaping the future of microprocessor development, including the challenges to Moore's Law, architectural competition, geopolitical influences, and the growing importance of sustainability. These trends are not just shaping chips; they are shaping the future of technology itself.

The overall semiconductor market is projected for significant growth, with some forecasts expecting it to become a trillion-dollar industry by 2030. This growth is fueled by various applications, especially AI and data centers.

Moore's Law Challenges and Heterogeneous Computing

For decades, Moore's Law, the observation that the number of transistors on a microchip doubles approximately every two years, has been a guiding principle for the semiconductor industry. This relentless scaling has driven exponential increases in computing power and reductions in cost. However, the industry is now facing significant challenges in maintaining this pace. As transistors approach atomic scales, physical limitations, rising fabrication costs, and power dissipation issues are making it increasingly difficult and expensive to continue traditional scaling.
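
Stated as a formula, doubling every two years means N(t) = N0 × 2^(t/2), with t in years. The sketch below applies that idealized formula starting from the Intel 4004, which had about 2,300 transistors in 1971. It is a rule-of-thumb projection, not a record of actual chips.

```python
def projected_transistors(n0: float, years: float, doubling_period: float = 2.0) -> float:
    """Idealized Moore's Law: n0 * 2 ** (years / doubling_period)."""
    return n0 * 2 ** (years / doubling_period)

N0, START = 2300, 1971  # Intel 4004, approximately
for year in (1971, 1991, 2011, 2021):
    n = projected_transistors(N0, year - START)
    print(f"{year}: ~{n:,.0f} transistors")
```

Twenty years at this pace is ten doublings, a factor of 2^10 = 1,024. The projection therefore reaches roughly 2.4 million transistors by 1991 and roughly 2.4 billion by 2011. Sustaining each further doubling is what has become so difficult and expensive.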

In response to these challenges, the industry is increasingly turning towards heterogeneous computing and advanced packaging techniques. Heterogeneous computing involves integrating different types of specialized processing cores (e.g., CPUs, GPUs, NPUs, DSPs) onto a single chip or within a single package. Each type of core is optimized for specific tasks, allowing the overall system to achieve better performance and power efficiency than a system relying solely on general-purpose CPUs. Chiplets, which are small, specialized dies that can be combined in a mix-and-match fashion within a package, represent a key trend in enabling more flexible and cost-effective heterogeneous integration.
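
Amdahl's law gives a rough estimate of why specialized cores help and where the benefit runs out. If a fraction f of the runtime is offloaded to an accelerator that runs it s times faster, the overall speedup is 1 / ((1 - f) + f / s). The sketch below evaluates that formula for hypothetical fractions and accelerator speedups. It ignores data-transfer and synchronization overhead, which real heterogeneous systems must pay.

```python
def amdahl_speedup(fraction: float, accel_speedup: float) -> float:
    """Overall speedup when `fraction` of runtime is accelerated by `accel_speedup`."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    return 1.0 / ((1.0 - fraction) + fraction / accel_speedup)

for f in (0.5, 0.9, 0.99):
    for s in (10, 100):
        print(f"offload {f:.0%} at {s}x -> {amdahl_speedup(f, s):.2f}x overall")
```

Consider an accelerator 100 times faster that can handle only half the runtime: it yields less than 2x overall. This is why the remaining general-purpose code, and the choice of which work to offload, still matter in heterogeneous designs.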

The future of performance gains is likely to come less from simply shrinking transistors and more from innovations in architecture, materials, and how different processing elements are combined and communicate. This shift presents both significant challenges and exciting opportunities for microprocessor designers.

Arm vs x86 Architecture Market Competition

The microprocessor market has long seen competition between different instruction set architectures (ISAs), with Intel's x86 architecture dominating the PC and server markets for many years, while Arm-based processors have been prevalent in mobile and embedded devices due to their power efficiency. However, this landscape is becoming more dynamic. Arm is making significant inroads into new markets, including laptops, data centers, and even high-performance computing, challenging x86's traditional strongholds.

The appeal of Arm-based processors often lies in their licensing model. It allows companies such as Qualcomm, Apple, and Nvidia to build custom SoCs around licensed Arm cores or, under an architecture license, to design their own Arm-compatible cores. They can then tailor these chips for specific applications and balance performance against power efficiency. This flexibility has fostered innovation and competition. Major cloud providers are also developing and deploying Arm-based servers in their data centers, attracted by potential cost savings and power advantages for certain workloads.

In response, x86 proponents, primarily Intel and AMD, are continuing to innovate, focusing on improving performance and power efficiency and adding new features to their processors. The rise of the open-standard RISC-V ISA adds another factor to the competitive landscape, offering an alternative that is free from licensing fees and allows even greater customization. This ongoing competition between architectures is a key driver of innovation in the microprocessor industry, giving consumers and businesses a wider range of processing solutions.

Geopolitical Factors in Semiconductor Manufacturing

The semiconductor industry has become a focal point of geopolitical attention due to its critical role in the global economy and national security. The manufacturing of advanced microprocessors is highly complex and concentrated in a few regions, particularly Taiwan and South Korea. This geographic concentration has raised concerns about supply chain vulnerabilities, as demonstrated by chip shortages experienced in recent years, which impacted numerous industries, from automotive to consumer electronics.

In response, major economic powers, including the United States, the European Union, and China, are implementing policies and investing billions of dollars to bolster their domestic semiconductor manufacturing capabilities and reduce reliance on foreign suppliers. Initiatives like the CHIPS and Science Act in the U.S. aim to incentivize the construction of new fabrication plants ("fabs") and promote research and development in semiconductor technology. Similarly, the European Chips Act seeks to strengthen Europe's semiconductor ecosystem.

These efforts are leading to a reshaping of global semiconductor supply chains, with a trend towards greater regionalization and diversification. However, building a self-sufficient semiconductor industry is a massive undertaking, requiring substantial long-term investment, a skilled workforce, and access to specialized equipment and materials. Geopolitical tensions and trade restrictions related to semiconductor technology are likely to continue to influence the industry's landscape.

Sustainability Demands in Chip Production

The semiconductor industry is facing increasing pressure to address its environmental footprint and adopt more sustainable practices. Chip manufacturing is a resource-intensive process, consuming significant amounts of energy and water, and utilizing various chemicals, some of which can be hazardous if not managed properly. The generation of greenhouse gases during manufacturing and the growing problem of electronic waste (e-waste) at the end of a product's life are also major concerns.

In response, semiconductor companies are exploring various strategies to improve sustainability. This includes investing in renewable energy sources to power their fabrication plants, implementing water conservation and recycling programs, developing greener manufacturing processes that use fewer harmful chemicals, and designing chips for greater energy efficiency during their operational life. There is also a growing focus on the "circular economy" for electronics, which involves designing products for durability and recyclability, and developing better methods for recovering valuable materials from e-waste.

Stakeholder expectations, including from consumers, investors, and regulatory bodies, are driving this push for greater sustainability. While the technical challenges are significant, the industry recognizes that long-term growth and social license to operate will increasingly depend on its ability to minimize its environmental impact and contribute to a more sustainable technological future.

Ethical Considerations in Microprocessor Technology

The profound impact of microprocessor technology on society brings with it a range of ethical considerations that warrant careful attention. As these tiny powerhouses become ever more integrated into every facet of our lives, it is crucial to consider the broader implications of their design, manufacture, and application. This section will touch upon some key ethical challenges, including labor practices in the supply chain, e-waste management, military applications, and the equitable allocation of these critical resources. Addressing these issues is vital for ensuring that the benefits of microprocessor technology are realized responsibly and equitably.

Supply Chain Labor Practices

The global semiconductor supply chain is incredibly complex, involving numerous stages from raw material extraction and processing to component manufacturing, assembly, and testing. Concerns have been raised about labor practices at various points in this chain, particularly in regions where worker protections may be less stringent. Issues can include long working hours, low wages, unsafe working conditions, and in some cases forced labor or child labor. These problems are especially prevalent in the mining of raw materials such as cobalt and the so-called conflict minerals (tin, tantalum, tungsten, and gold).

Many technology companies and industry consortia are increasingly focused on improving transparency and accountability within their supply chains. This involves establishing codes of conduct for suppliers, conducting audits to monitor compliance, and investing in programs to improve working conditions and protect worker rights. Ethical sourcing initiatives aim to ensure that raw materials are obtained from conflict-free zones and that suppliers adhere to fair labor standards.

However, ensuring ethical labor practices throughout a vast and often opaque global supply chain remains a significant challenge. Consumers, advocacy groups, and investors are putting greater pressure on companies to demonstrate due diligence and take meaningful action to address these issues. The integrity of the semiconductor industry relies not only on technological innovation but also on its commitment to upholding human rights and fair labor standards throughout its operations.

E-waste Management Challenges

The rapid pace of technological advancement and the relatively short lifespan of many electronic devices contribute to a growing global problem: electronic waste, or e-waste. Microprocessors and the devices they power eventually reach the end of their useful lives, and their improper disposal can lead to significant environmental and health problems. E-waste often contains hazardous materials, including heavy metals like lead, mercury, and cadmium, as well as flame retardants, which can leach into soil and water if not managed correctly.

Recycling e-waste is complex and challenging. While some materials can be recovered and reused, the intricate design of modern electronics, especially the increasing integration of components, makes disassembly and material separation difficult and costly. A significant portion of e-waste is still shipped to developing countries, where informal recycling practices often involve unsafe methods that expose workers and communities to toxic substances.

Addressing the e-waste challenge requires a multi-faceted approach. This includes designing products for easier disassembly and recycling ("design for recycling"), promoting extended producer responsibility schemes where manufacturers are responsible for the end-of-life management of their products, investing in advanced recycling technologies, and raising consumer awareness about proper e-waste disposal. The goal is to move towards a more circular economy where materials are reused and recycled, minimizing waste and reducing the demand for virgin resources.

Military Applications and Dual-Use Technologies

Microprocessors are fundamental components in modern military systems, powering everything from communication and surveillance equipment to guidance systems for smart weapons, drones, and autonomous military vehicles. The advanced computational capabilities provided by state-of-the-art microprocessors are critical for maintaining a technological edge in defense and national security.

This gives rise to ethical considerations related to "dual-use" technologies – technologies that have both civilian and military applications. The same microprocessors that enable life-saving medical devices or enhance communication can also be used in weapons systems with lethal capabilities. This duality raises questions about the responsibilities of designers, manufacturers, and governments in controlling the proliferation of advanced microprocessor technology and preventing its misuse.

Export controls and international agreements attempt to regulate the transfer of sensitive technologies, but the global nature of the semiconductor industry and the rapid pace of innovation make enforcement challenging. There are ongoing debates about the ethical implications of developing autonomous weapons systems that rely on AI-powered microprocessors, and the potential for an AI arms race. These are complex issues that require careful consideration by policymakers, industry leaders, and society as a whole to ensure that technological advancements are used responsibly and in ways that promote peace and security.

Global Semiconductor Resource Allocation

The global distribution and allocation of semiconductor resources, particularly access to the most advanced chip manufacturing capabilities, have significant economic and geopolitical implications. As highlighted by recent chip shortages, disruptions in the supply of microprocessors can have cascading effects across numerous industries, impacting everything from car manufacturing to the availability of consumer electronics.

The high cost and complexity of building and operating state-of-the-art semiconductor fabrication plants (fabs) mean that this capability is concentrated in the hands of a few companies and countries. This concentration creates dependencies and can lead to competition for access to limited manufacturing capacity, especially for cutting-edge chips. Decisions about where to invest in new fab capacity, and who gets priority access to that capacity, can have profound impacts on national competitiveness and technological leadership.

Ethical considerations arise around issues of fair access and equitable distribution. Should access to advanced semiconductor technology be considered a strategic national asset, or a global public good? How can the benefits of this technology be shared more broadly, particularly with developing nations? As governments increasingly intervene to support their domestic semiconductor industries through subsidies and other industrial policies (like the US CHIPS Act and the European Chips Act), questions about fair competition and the potential for trade distortions also emerge. Balancing national interests with the need for a stable and equitable global semiconductor ecosystem is a key challenge for the international community.

Frequently Asked Questions (Career Focus)

Embarking on or transitioning into a career related to microprocessors can bring up many questions. This section aims to address some of the common queries that career-oriented individuals might have, from essential skills and job market competitiveness to the importance of advanced degrees and global opportunities. The answers provided are intended to offer concise, actionable advice to help you navigate your career path in this exciting and challenging field.

What programming languages are essential for microprocessor work?

For microprocessor-related work, proficiency in certain programming languages is crucial, though the specific languages vary with the role. For hardware design and verification, hardware description languages (HDLs) such as Verilog and VHDL are essential. They are used to describe, simulate, and synthesize digital circuits, including microprocessors themselves. You can explore courses on Verilog and VHDL on OpenCourser.

For firmware development, embedded software engineering, and writing low-level drivers that interact directly with microprocessor hardware, C is the dominant language because of its efficiency, direct control over hardware, and widespread compiler support. C++ is increasingly used in embedded systems as well, for its object-oriented features and its ability to manage complexity in larger projects. Understanding the assembly language of the specific architecture you are working with (e.g., ARM, x86, RISC-V) is also highly valuable for debugging, performance optimization, and understanding the hardware-software interface at the deepest level. Scripting languages such as Python or Perl are widely used in the industry for automation, test scripting, data analysis, and tool-flow management in design and verification environments.

While these are the core languages, familiarity with other languages or specialized tools might be beneficial depending on the niche. For example, MATLAB/Simulink can be used for system-level modeling and simulation, especially in areas like signal processing and control systems. Ultimately, a strong foundation in programming fundamentals and the ability to learn new languages and tools as needed are key attributes for success. Many universities offer courses covering these languages as part of their electrical and computer engineering curricula.

How competitive are entry-level chip design roles?

Entry-level chip design roles, which include positions like physical design engineer, verification engineer, or junior ASIC/FPGA designer, are generally competitive. The semiconductor industry seeks highly skilled individuals with a strong foundation in digital logic, computer architecture, semiconductor physics, and relevant EDA (Electronic Design Automation) tools. Companies often look for candidates with a Bachelor's or Master's degree in Electrical Engineering, Computer Engineering, or a closely related field. Internships or co-op experiences in the semiconductor industry can significantly enhance a candidate's competitiveness.

The level of competition also varies with geographic location (tech hubs such as Silicon Valley, Austin, and parts of Asia are particularly competitive), the specific company, and current demand for particular skill sets. The overall semiconductor industry is projected to grow, creating new opportunities, though the number of graduates specializing in hardware engineering may be rising as well. According to Deloitte, the semiconductor industry needs to add a million skilled workers by 2030, which points to strong demand for qualified talent.

To stand out, candidates should focus on building a strong academic record, gaining practical experience through projects (university or personal), developing proficiency in relevant HDLs and programming languages, and demonstrating a genuine passion for hardware design. Networking through industry events, university career fairs, and online platforms can also be beneficial. While competitive, the field offers rewarding careers for those who are well-prepared and persistent.

Can software engineers transition into hardware roles?

Yes, software engineers can transition into hardware roles, particularly in areas that bridge the hardware-software interface, such as firmware development, embedded systems engineering, and design verification. However, it typically requires a dedicated effort to acquire the necessary hardware-specific knowledge and skills. Software engineers often have strong programming skills, which are valuable, but they will need to learn about digital logic design, computer architecture, hardware description languages (HDLs like Verilog or VHDL), and potentially circuit theory and semiconductor fundamentals.

One common transition path is into design verification, where software development skills (especially in object-oriented programming with languages like SystemVerilog or C++, and scripting with Python/Perl) are highly relevant for creating complex testbenches and verification environments. Another area is embedded software, where C and C++ are extensively used to program microcontrollers and processors at a low level. Firmware development also sits at this intersection.

For those aiming for roles deeper in hardware design (like RTL design or physical design), the learning curve will be steeper. Pursuing additional education, such as a Master's degree in Computer Engineering with a hardware focus, or taking specialized online courses and gaining hands-on experience with FPGAs and EDA tools, can facilitate this transition. Highlighting transferable skills like problem-solving, debugging, and system-level thinking during job applications is also important. While challenging, a transition is certainly possible for motivated software engineers willing to invest in new learning.

What industries hire the most microprocessor specialists?

Microprocessor specialists are in demand across a wide range of industries because the technology is ubiquitous. The most prominent employers are, of course, semiconductor companies themselves: those that design and/or manufacture microprocessors, microcontrollers, SoCs, and other integrated circuits. This includes large players like Intel, AMD, Nvidia, Qualcomm, Arm, Samsung, and TSMC, as well as many smaller and specialized chip design firms.

Beyond chip manufacturers, consumer electronics companies are major employers. Companies that design smartphones, computers, gaming consoles, wearables, and IoT devices often have in-house teams working on system architecture, chip selection, and sometimes custom chip design (e.g., Apple, Google, Amazon). The automotive industry is another rapidly growing sector for microprocessor specialists, driven by the increasing electronic content in vehicles, particularly for advanced driver-assistance systems (ADAS), infotainment, and electric vehicle systems.

Other significant industries include telecommunications (for network equipment and base stations), aerospace and defense (for avionics, guidance systems, and secure communications), industrial automation (for programmable logic controllers (PLCs), robotics, and control systems), medical devices (for diagnostic equipment, patient monitoring, and implantable devices), and data centers and cloud computing providers (for server design, network infrastructure, and specialized AI hardware). The breadth of these industries underscores the diverse opportunities available to those with expertise in microprocessor technology. The U.S. Bureau of Labor Statistics publishes occupational outlooks that offer broader context; it does not track "microprocessor specialist" as a separate occupation, so the relevant data fall under categories such as "Electrical and Electronics Engineers" and "Computer Hardware Engineers."

How important are advanced degrees for R&D positions?

For research and development (R&D) positions in the microprocessor field, advanced degrees, particularly a Master's degree or a Ph.D., are often highly important and sometimes a formal requirement. R&D roles involve pushing the boundaries of current technology, exploring novel architectures, developing new design methodologies, and tackling fundamental challenges in areas like performance, power efficiency, and security. The in-depth specialized knowledge, research skills, and ability to conduct independent investigation gained through graduate studies are directly applicable to these tasks.

A Master's degree can provide the specialized knowledge needed for advanced design or development roles within R&D teams. A Ph.D. is typically expected for positions that involve leading original research, defining long-term research agendas, and contributing to the academic or scientific understanding of the field. Many principal investigators, senior research scientists, and university faculty members in computer architecture and VLSI design hold Ph.D.s. According to a report by KPMG, a high percentage of semiconductor companies predict an increase in R&D spending, which could sustain the demand for highly qualified individuals with advanced degrees.

While exceptional individuals with a Bachelor's degree and significant relevant experience occasionally move into R&D-focused roles, an advanced degree is generally the more direct and common pathway. It signals a commitment to deep technical expertise and the ability to contribute at the forefront of innovation. Challenges such as sustaining performance gains as Moore's Law slows and developing next-generation AI hardware often require the advanced training that graduate programs offer.

What global regions offer strong job markets for this field?

The job market for microprocessor specialists is global, with several key regions offering strong opportunities. North America, particularly the United States (Silicon Valley in California, Austin in Texas, and other tech hubs), remains a major center for microprocessor design, research, and innovation. Many leading semiconductor companies, research institutions, and tech giants have a significant presence there.

East Asia is another critical region. Taiwan is a powerhouse in semiconductor manufacturing, home to TSMC, the world's largest dedicated independent semiconductor foundry. South Korea is also a leader, with major companies like Samsung heavily involved in both memory and logic chip design and manufacturing. China has been investing heavily to build up its domestic semiconductor industry, creating demand for skilled professionals, although geopolitical factors can influence this market. Japan also has a long-standing semiconductor industry with opportunities in specialized areas.

In Europe, countries like Germany, France, the Netherlands, and the UK have significant semiconductor research and manufacturing activities, often focused on automotive, industrial, and telecommunications applications. The European Chips Act aims to further boost the region's capabilities. India has a growing presence in chip design services and is also aspiring to increase its role in semiconductor manufacturing. The global nature of the industry means that opportunities can be found in various locations, often clustered around established technology centers and research universities. The Semiconductor Industry Association (SIA) reports on global sales, which can indirectly indicate regions with strong industry activity and, consequently, job markets.

This article has aimed to provide a comprehensive overview of the world of microprocessors, from fundamental concepts to career pathways and industry trends. The field is undeniably complex and demanding, requiring a strong educational foundation and a commitment to continuous learning. However, it is also a field at the very heart of technological progress, offering the chance to contribute to innovations that shape our world. For those with a passion for technology and a drive to solve challenging problems, exploring microprocessors can be an immensely rewarding endeavor. Whether you are just starting your educational journey, considering a career change, or looking to deepen your existing knowledge, resources like OpenCourser can be invaluable in navigating the vast landscape of learning opportunities available. We encourage you to explore the courses and materials linked throughout this article and to continue your exploration into this fascinating and vital field.