Big Data Analytics

Navigating the World of Big Data Analytics

Big Data Analytics is the process of examining large and complex datasets, often referred to as "big data," to uncover hidden patterns, unknown correlations, market trends, and customer preferences. The primary aim is to extract actionable insights that provide tangible value, enabling organizations to make more informed strategic decisions, identify new opportunities, and foster innovation. This field has become increasingly vital as the amount of data generated from diverse sources like social media, Internet of Things (IoT) sensors, financial transactions, and smart devices continues to grow exponentially.

Working in Big Data Analytics can be incredibly engaging and exciting. Imagine being at the forefront of discovering groundbreaking insights that can reshape how businesses operate or how societal challenges are addressed. You might find yourself developing algorithms that predict customer behavior with uncanny accuracy, or perhaps building systems that detect fraudulent activities in real-time, saving organizations millions. The thrill of transforming raw, seemingly chaotic data into clear, actionable intelligence is a significant draw for many in this field. Furthermore, the interdisciplinary nature of Big Data Analytics means you'll often collaborate with diverse teams, constantly learning and applying new techniques to solve complex problems.

Introduction to Big Data Analytics

This section provides a foundational overview, setting the stage for a deeper exploration of Big Data Analytics. We will define its scope, trace its historical evolution and the technological drivers behind it, identify key industries that leverage its power, and look at global adoption trends. This context is crucial for understanding the 'why' behind the growing importance of Big Data Analytics for a wide range of audiences.

Defining the Realm of Big Data Analytics

At its core, Big Data Analytics involves the systematic processing and analysis of large and complex datasets to extract valuable information. This isn't just about handling more data; it's about dealing with data characterized by high volume, velocity (the speed at which it's generated), and variety (different forms of data, including structured, semi-structured, and unstructured). Think of the sheer volume of data created daily from social media interactions, online transactions, sensor networks, and more. Big Data Analytics provides the tools and techniques to make sense of this deluge.

The scope of Big Data Analytics is vast and continually expanding. It encompasses various methodologies, including data mining (discovering patterns in large datasets), statistical analysis (to explore data and uncover hidden patterns), predictive modeling (using historical data to forecast future outcomes), and machine learning (enabling systems to learn from data without being explicitly programmed). These techniques are applied to diverse datasets to help organizations better understand market dynamics, customer preferences, operational efficiencies, and other critical business metrics. Ultimately, the goal is to transform raw data into strategic assets that drive better decision-making and innovation.

The insights derived from Big Data Analytics can lead to significant benefits, such as cost savings by identifying operational inefficiencies, enhanced product development by understanding customer needs more deeply, and improved risk management by addressing threats in near real-time.

The Journey of Big Data: Evolution and Technological Impetus

The concept of analyzing data to gain insights is not new, but the scale and complexity we associate with "big data" today began to take shape in the early 2000s. This era saw significant advancements in both software and hardware capabilities, making it feasible for organizations to collect, store, and process massive amounts of unstructured data. Prior to this, traditional data analytics primarily dealt with structured data residing in relational databases, which, while organized, couldn't handle the sheer volume and variety of modern data sources.

Several technological drivers fueled this evolution. The explosion of the internet and digital technologies, including the proliferation of social media, smartphones, and IoT devices, led to an unprecedented generation of data. Concurrently, the development of open-source frameworks like Apache Hadoop and, later, Apache Spark, provided the necessary tools for distributed storage and processing of these large datasets across clusters of computers. These frameworks, along with a growing ecosystem of analytical tools and libraries, empowered organizations to tackle complex analytical tasks that were previously unimaginable. The continuous decrease in storage costs and the rise of cloud computing further democratized access to the infrastructure needed for Big Data Analytics.

Key Industries Harnessing the Power of Big Data

Big Data Analytics is not confined to a single sector; its transformative power is being realized across a multitude of industries. In healthcare, it's used for predictive analytics to improve patient care, forecast disease outbreaks, and personalize treatments. By analyzing patient records, medical imaging, and even data from wearable devices, healthcare providers can make more informed decisions and enhance clinical outcomes.

The finance industry leverages Big Data Analytics extensively for fraud detection, algorithmic trading, risk assessment, and customer relationship management. Analyzing transactional data and market trends in real-time allows financial institutions to identify suspicious activities and make more accurate predictions. In retail, understanding customer behavior is paramount. Big Data Analytics helps retailers analyze purchasing patterns, personalize marketing campaigns, optimize supply chains, and improve customer experiences.

Furthermore, Big Data Analytics is instrumental in the development of smart cities, where data from sensors and IoT devices is used to optimize traffic flow, manage public utilities, and enhance public safety. The manufacturing sector uses it for predictive maintenance, quality control, and supply chain optimization. These are just a few examples, and the applications of Big Data Analytics continue to expand as more industries recognize its potential to drive innovation and efficiency.

Global Adoption and Market Growth

The adoption of Big Data Analytics is a global phenomenon, driven by the increasing recognition of data as a critical organizational asset. Businesses across the world are investing in Big Data technologies and talent to gain a competitive edge, improve operational efficiency, and create new revenue streams. Market forecasts indicate substantial and continued growth in the Big Data Analytics market, although the estimates vary widely between sources. For instance, some reports projected the global big data analytics market to reach approximately $84 billion in 2024 and grow to $103 billion by 2027. Another forecast suggests the market, valued at $307.51 billion in 2023, could reach $924.39 billion by 2032.

This growth is fueled by several factors, including the falling costs of data storage and processing, the increasing availability of sophisticated analytics tools, and a growing pool of skilled data professionals. Furthermore, the rise of cloud computing has made Big Data Analytics more accessible to organizations of all sizes, not just large enterprises. Developing economies are also increasingly participating in this trend, recognizing the potential of data to leapfrog developmental challenges. The widespread adoption is evident in the increasing demand for data professionals across various sectors and geographies. As organizations become more data-driven, the reliance on Big Data Analytics for strategic decision-making is set to intensify globally.

Core Concepts of Big Data Analytics

To truly understand Big Data Analytics, it's essential to grasp its fundamental concepts. This section will demystify some of the technical jargon and explain the foundational methodologies that underpin this field. We'll explore data mining and machine learning techniques, the tools and frameworks that make big data processing possible, various data storage solutions, and the distinction between real-time and batch processing. Equipping yourself with this vocabulary and technical understanding will pave the way for engaging with more advanced topics.

Unearthing Insights: Data Mining and Machine Learning

Data mining is the process of discovering patterns, anomalies, and correlations within large datasets to predict outcomes. It's like sifting through a mountain of sand to find valuable gems. Techniques used in data mining include clustering (grouping similar data points), classification (assigning data points to predefined categories), regression (predicting continuous values), and association rule mining (discovering relationships between variables). These methods help businesses understand customer behavior, detect fraud, optimize marketing campaigns, and much more.

Machine learning (ML), a subset of artificial intelligence, is a critical component of Big Data Analytics. ML algorithms enable computer systems to learn from data and improve their performance over time without being explicitly programmed for each specific task. In the context of big data, ML models can be trained on vast datasets to make predictions, classify information, or uncover complex patterns that would be impossible for humans to discern. Common ML techniques include supervised learning (learning from labeled data), unsupervised learning (finding patterns in unlabeled data), and reinforcement learning (learning through trial and error). The synergy between data mining and machine learning allows organizations to extract deep, predictive insights from their data.
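
Of the data mining techniques listed above, association rule mining is one of the easiest to illustrate in a few lines. The sketch below is only an illustration: the shopping baskets, the thresholds, and the function names are all made up for this example, and it checks item pairs by brute force rather than using a production algorithm such as Apriori.

```python
from itertools import combinations

# Hypothetical shopping baskets, invented purely for illustration.
baskets = [
    {"bread", "milk"},
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"milk", "eggs"},
    {"bread", "milk", "eggs"},
]


def support(itemset, transactions):
    """Fraction of transactions that contain every item in itemset."""
    hits = sum(1 for t in transactions if itemset <= t)
    return hits / len(transactions)


def confidence(antecedent, consequent, transactions):
    """Estimate of P(consequent | antecedent) from the transactions."""
    joint = support(antecedent | consequent, transactions)
    return joint / support(antecedent, transactions)


def pair_rules(transactions, min_support=0.4, min_confidence=0.7):
    """Return (lhs, rhs, support, confidence) for single-item rules lhs -> rhs."""
    items = sorted(set().union(*transactions))
    rules = []
    for a, b in combinations(items, 2):
        for lhs, rhs in ((a, b), (b, a)):
            s = support({lhs, rhs}, transactions)
            if s < min_support:
                continue  # the pair is too rare to be interesting
            c = confidence({lhs}, {rhs}, transactions)
            if c >= min_confidence:
                rules.append((lhs, rhs, s, c))
    return rules


rules = pair_rules(baskets)
for lhs, rhs, s, c in rules:
    print(f"{lhs} -> {rhs}: support={s:.2f}, confidence={c:.2f}")
```

Support measures how often the items appear together, while confidence measures how often the right-hand item appears given the left-hand one. Real datasets need smarter candidate pruning than this exhaustive pair scan.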

Essential Tools and Frameworks: Hadoop and Spark

Handling the sheer volume and complexity of big data requires specialized tools and frameworks. Two of the most prominent open-source frameworks in the Big Data ecosystem are Apache Hadoop and Apache Spark.

Apache Hadoop was one of the pioneering frameworks that made large-scale distributed data processing accessible. It is built around two main components: the Hadoop Distributed File System (HDFS), which allows for storing vast amounts of data across clusters of commodity hardware, and MapReduce, a programming model for processing these large datasets in parallel. Since Hadoop 2, a third component, YARN, has handled cluster resource management. While MapReduce can be complex to work with directly, Hadoop laid the groundwork for many subsequent big data technologies.

Apache Spark emerged as a faster and more versatile alternative to Hadoop's MapReduce. Spark can perform in-memory processing, which significantly speeds up data analysis tasks. It supports various workloads, including batch processing, real-time stream processing, machine learning, and graph processing. Spark also offers user-friendly APIs in languages like Scala, Python, Java, and R, making it more accessible to a broader range of developers and data scientists. Many organizations now use Spark in conjunction with or as a replacement for Hadoop's processing capabilities, often still relying on HDFS for storage.

Beyond these foundational frameworks, a rich ecosystem of tools supports various aspects of Big Data Analytics, including data ingestion tools (like Apache Kafka and Flume), data warehousing solutions, and business intelligence platforms.
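
The MapReduce programming model mentioned above can be illustrated on a single machine. The following is a minimal sketch in plain Python, not Hadoop's actual API: the function names `map_phase`, `shuffle`, and `reduce_phase` are chosen for this example, and a real cluster would run the map and reduce steps in parallel on different nodes.

```python
from collections import defaultdict


def map_phase(document):
    """Emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Group emitted values by key, as the framework does between phases."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Combine all values for one key into a single result."""
    return key, sum(values)


# Two hypothetical input splits; on a cluster each could live on a different node.
splits = ["big data big insights", "data drives insights"]

mapped = [pair for split in splits for pair in map_phase(split)]
word_counts = dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())
print(word_counts)
```

Because each map call only sees its own split and each reduce call only sees one key, both phases can be distributed without the workers needing to coordinate with each other.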

Storing the Unstorable: NoSQL and Cloud Databases

Traditional relational databases, while excellent for structured data, often struggle with the scale, variety, and velocity of big data. This led to the emergence of NoSQL databases (which stands for "Not Only SQL"). NoSQL databases are designed to handle large volumes of unstructured and semi-structured data and offer flexible schemas, horizontal scalability (ability to add more servers to the cluster), and high availability.

There are several types of NoSQL databases, each suited for different use cases:

- Document databases (e.g., MongoDB) store data in document-like structures such as JSON or BSON.

- Key-value stores (e.g., Redis, Amazon DynamoDB) store data as simple key-value pairs, offering very fast read and write operations.

- Column-family stores (e.g., Apache Cassandra, HBase) store data in columns rather than rows, which is efficient for queries over large datasets involving a subset of columns.

- Graph databases (e.g., Neo4j) are designed to store and navigate relationships between data points.
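
To make the four data models above more concrete, the sketch below represents one hypothetical customer order in each style using plain Python structures. It does not use any real database client; the keys, field names, and the `neighbors` helper are assumptions made for this illustration.

```python
import json

# Document model: one nested, self-contained record (JSON-like).
document = {
    "_id": "order-1001",
    "customer": {"id": "c42", "name": "Ada"},
    "items": [{"sku": "A1", "qty": 2}, {"sku": "B7", "qty": 1}],
}

# Key-value model: an opaque value fetched by a single key.
key_value = {"order:1001": json.dumps(document)}

# Column-family model: row key -> column family -> column -> value.
column_family = {
    "order-1001": {
        "customer": {"id": "c42", "name": "Ada"},
        "items": {"A1": 2, "B7": 1},
    }
}

# Graph model: nodes plus typed, directed edges between them.
nodes = {
    "c42": {"label": "Customer", "name": "Ada"},
    "order-1001": {"label": "Order"},
    "A1": {"label": "Product"},
    "B7": {"label": "Product"},
}
edges = [
    ("c42", "PLACED", "order-1001"),
    ("order-1001", "CONTAINS", "A1"),
    ("order-1001", "CONTAINS", "B7"),
]


def neighbors(node, relation):
    """Follow edges of one relation type outward from a node."""
    return [dst for src, rel, dst in edges if src == node and rel == relation]


print(json.loads(key_value["order:1001"])["customer"]["name"])
print(column_family["order-1001"]["items"])
print(neighbors("order-1001", "CONTAINS"))
```

The same information is present in every form; what changes is which access pattern is cheap, such as a single-key lookup, reading a few columns, or traversing relationships.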

Real-Time vs. Batch Processing: An ELI5 Guide

Imagine you're getting mail. There are two main ways the post office could deliver it:

Batch Processing: Like Getting All Your Mail Once a Day

In batch processing, data is collected over a period (a "batch") and then processed all at once. Think of the postman collecting all the letters for your street throughout the day and then delivering them to everyone in one go.

- Example in Big Data: A company might collect all its sales data from the entire day. Then, overnight, a system processes this "batch" of data to generate a sales report for the managers to see in the morning.

- Good for: Tasks that don't need immediate results, like generating weekly reports, archiving data, or complex calculations that take a lot of time on large datasets. It's often more efficient for large volumes of data where speed isn't the absolute top priority for each individual piece of data.

Real-Time Processing: Like Getting a Text Message Instantly

In real-time processing (or stream processing), data is processed almost immediately as it arrives. Think of getting a text message: it pops up on your phone right after it's sent.

- Example in Big Data: A credit card company analyzing transactions as they happen to detect potential fraud. If a suspicious transaction occurs, the system can flag it or block it instantly. Another example is social media platforms analyzing trending topics as they emerge.

- Good for: Situations where you need immediate insights or actions, like fraud detection, live monitoring of systems (e.g., factory equipment, website traffic), or personalized recommendations that update as you browse.

Why the Difference Matters

Choosing between batch and real-time processing depends on what you need to do with the data. If you need to make quick decisions based on the very latest information, real-time processing is key. If you're looking at historical trends or doing large-scale analysis where a bit of a delay is okay, batch processing might be more suitable and cost-effective. Many modern systems actually use a hybrid approach, combining both batch and real-time processing to get the best of both worlds.
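
The contrast between the two styles can be sketched with a toy sales feed. This is a simplified illustration under assumed data: the amounts and the function names are invented, and real systems would read from files or message queues rather than a Python list.

```python
def batch_total(sales):
    """Batch: wait for the full day's records, then process them in one pass."""
    return sum(sales)


def streaming_totals(sales):
    """Stream: update the result as each record arrives."""
    running = 0
    for amount in sales:
        running += amount
        yield running  # an up-to-date answer is available after every event


day_of_sales = [20, 35, 15, 30]  # hypothetical sale amounts

nightly_report = batch_total(day_of_sales)
live_dashboard = list(streaming_totals(day_of_sales))

print(nightly_report)
print(live_dashboard)
```

Both approaches end at the same total. The streaming version simply exposes every intermediate value along the way, at the cost of doing work on each event instead of once at the end.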

Applications Across Industries

Big Data Analytics is not just a theoretical concept; it has tangible, transformative impacts across a wide array of sectors. This section will highlight specific case studies and applications to demonstrate the practical utility of Big Data Analytics. From revolutionizing patient care in healthcare to detecting fraud in finance and understanding customer desires in retail, the versatility of these techniques is reshaping industries. We will also explore its role in building smarter cities and integrating with the Internet of Things (IoT).

Healthcare: Predictive Analytics for Enhanced Patient Care

In the healthcare sector, Big Data Analytics is driving a paradigm shift towards more personalized and predictive patient care. By analyzing vast datasets comprising electronic health records (EHRs), medical imaging, genomic sequences, real-time data from wearable devices, and even social determinants of health, healthcare providers can uncover insights that were previously unattainable. One of the most significant applications is predictive analytics, which uses historical and real-time data to forecast future health events and trends.

For example, predictive models can identify patients at high risk of developing certain conditions (like diabetes or heart disease) or those likely to be readmitted to the hospital shortly after discharge. This allows clinicians to intervene proactively with preventative measures or tailored care plans, ultimately improving patient outcomes and reducing healthcare costs. Furthermore, Big Data Analytics aids in identifying the most effective treatments for specific patient subgroups, advancing the field of precision medicine. It also plays a crucial role in public health by monitoring disease outbreaks, understanding epidemiological trends, and optimizing resource allocation.

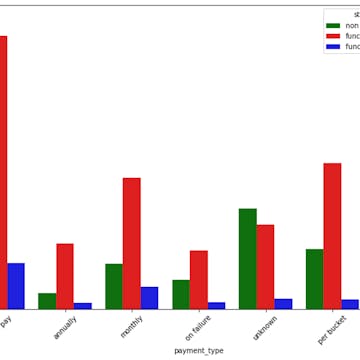

Finance: Safeguarding Assets and Optimizing Trades

The financial services industry, characterized by high transaction volumes and the critical need for security and accuracy, has been an early and enthusiastic adopter of Big Data Analytics. Two prominent applications are fraud detection and algorithmic trading. Financial institutions analyze enormous streams of transactional data in real-time to identify patterns indicative of fraudulent activity, such as unauthorized credit card usage or suspicious account transfers. Machine learning algorithms can learn normal transaction patterns and flag deviations, enabling rapid response to potential threats.
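
The idea of learning normal transaction patterns and flagging deviations can be sketched with a basic statistical baseline. This is a deliberately simple stand-in for the machine learning models described above: it assumes a z-score rule, and the transaction history and the threshold of 3 standard deviations are made up for illustration.

```python
from statistics import mean, stdev


def fit_baseline(history):
    """Learn what 'normal' looks like from past transaction amounts."""
    return mean(history), stdev(history)


def is_suspicious(amount, baseline, threshold=3.0):
    """Flag amounts that sit more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        return amount != mu
    return abs(amount - mu) / sigma > threshold


# Hypothetical past card transactions for one customer.
history = [42.0, 38.5, 51.0, 45.25, 40.0, 47.5, 44.0, 39.75]
baseline = fit_baseline(history)

flags = [is_suspicious(amount, baseline) for amount in (46.0, 950.0)]
print(flags)
```

Production fraud systems typically combine many more signals, such as merchant, location, and timing, and retrain their models as behavior changes. The principle of comparing each new event against a learned baseline is the same.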

In the realm of algorithmic trading, Big Data Analytics powers high-frequency trading strategies. Sophisticated algorithms analyze vast quantities of market data, news feeds, and even social media sentiment to make split-second trading decisions. This allows firms to capitalize on fleeting market opportunities. Beyond these, Big Data Analytics is also used in finance for credit risk assessment, customer segmentation for personalized financial products, regulatory compliance (RegTech), and optimizing customer service. The ability to process and interpret massive datasets quickly is crucial for maintaining a competitive edge and managing risk in the dynamic financial landscape.

Retail: Deciphering Customer Behavior for Enhanced Experiences

In the competitive retail sector, understanding and anticipating customer behavior is key to success. Big Data Analytics provides retailers with powerful tools to gain deep insights into their customers' preferences, purchasing patterns, and overall journey. By analyzing data from various touchpoints – including online browsing history, point-of-sale transactions, loyalty program activity, social media interactions, and even in-store sensor data – retailers can build comprehensive customer profiles.

This rich understanding enables a host of applications. Personalized marketing campaigns can be targeted with greater precision, offering relevant promotions and product recommendations to individual customers. Customer segmentation allows retailers to tailor their offerings and messaging to different customer groups. Supply chain optimization benefits from more accurate demand forecasting, reducing instances of overstocking or stockouts. Furthermore, insights from Big Data Analytics can inform product development, store layout design, and pricing strategies, all aimed at enhancing the customer experience and driving sales.
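
As a rough illustration of the demand forecasting mentioned above, the sketch below predicts next week's sales as the average of the most recent weeks. A moving average is only one of the simplest possible baselines, chosen here as an assumption; the sales figures are invented, and real retail forecasts usually account for seasonality, promotions, and trends.

```python
def moving_average_forecast(history, window=3):
    """Forecast the next value as the mean of the last `window` observations."""
    if len(history) < window:
        raise ValueError("not enough history for the chosen window")
    recent = history[-window:]
    return sum(recent) / window


# Hypothetical weekly unit sales for one product.
weekly_units = [120, 135, 128, 140, 150, 145]

forecast = moving_average_forecast(weekly_units)
print(forecast)
```

A forecast like this would feed into reorder decisions: ordering close to the predicted demand is what reduces both overstocking and stockouts.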

Smart Cities and IoT Integration: Building Connected Communities

The vision of a "smart city" relies heavily on the ability to collect, process, and analyze vast amounts of data generated by a network of sensors and interconnected devices – the Internet of Things (IoT). Big Data Analytics is the engine that powers these smart city initiatives, transforming urban living by improving efficiency, sustainability, and quality of life. Applications are diverse and impactful.

For instance, analyzing real-time traffic data from GPS devices, road sensors, and public transport systems can optimize traffic signal timing, alleviate congestion, and improve public transportation scheduling. Smart grids use data from smart meters and sensors to optimize energy distribution, reduce waste, and integrate renewable energy sources more effectively. Public safety can be enhanced through the analysis of data from surveillance cameras and emergency response systems. Environmental monitoring, waste management, and water resource management are other areas where Big Data and IoT integration are making significant contributions to creating more livable and sustainable urban environments.

Technical Challenges and Solutions

While Big Data Analytics offers immense opportunities, implementing and managing big data systems comes with its own set of technical hurdles. This section will address some of the common pain points and discuss emerging solutions in data management. We will delve into the challenges of scaling data infrastructure, ensuring robust data security and encryption, effectively handling unstructured data, and leveraging edge computing to reduce latency. Understanding these challenges is crucial for data engineers and IT professionals tasked with building and maintaining these complex systems.

Scaling the Unscalable: Data Infrastructure Growth

One of the foremost challenges in Big Data Analytics is scaling the underlying data infrastructure. As data volumes continue to explode, traditional data storage and processing systems often buckle under the pressure. Organizations need architectures that can grow seamlessly with their data needs without significant performance degradation or prohibitive costs. This involves designing systems that can scale horizontally, meaning adding more machines to a cluster to distribute the load, rather than vertically (upgrading to a more powerful single server), which has inherent limitations.

Solutions to the scalability challenge often involve leveraging distributed computing frameworks like Apache Hadoop and Spark, which are designed to process massive datasets across clusters of commodity hardware. Cloud-based infrastructure-as-a-service (IaaS) and platform-as-a-service (PaaS) offerings also provide elastic scalability, allowing organizations to dynamically adjust their compute and storage resources based on demand. Effective data partitioning, load balancing, and resource management are critical components of building scalable big data systems. Furthermore, designing efficient data pipelines and optimizing queries are essential to ensure that performance keeps pace with data growth.
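
Data partitioning, one of the building blocks mentioned above, can be sketched as follows. This example assumes simple hash partitioning with a fixed number of partitions; the key format and the function names are hypothetical, and real systems often use more elaborate schemes.

```python
import hashlib


def partition_for(key, num_partitions):
    """Map a record key to a partition using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def partition_records(records, num_partitions):
    """Distribute (key, value) records across partitions by key."""
    partitions = [[] for _ in range(num_partitions)]
    for key, value in records:
        partitions[partition_for(key, num_partitions)].append((key, value))
    return partitions


# Hypothetical records keyed by user id.
records = [(f"user-{i}", i) for i in range(20)]
partitions = partition_records(records, num_partitions=4)

for index, part in enumerate(partitions):
    print(index, len(part))
```

A stable hash is used instead of Python's built-in `hash()` because the built-in is randomized per process for strings, so different machines would disagree on where a key lives. One known limitation of plain modulo hashing is that changing the partition count moves most keys, which is the problem consistent hashing is designed to reduce.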

Fortifying the Floodgates: Data Security and Encryption

With great data comes great responsibility, and ensuring the security of big data is paramount. The vast and diverse datasets often contain sensitive information, including personal data, financial records, and proprietary business intelligence. A data breach can have severe financial, legal, and reputational consequences. Securing big data environments involves a multi-layered approach, addressing vulnerabilities across the data lifecycle, from collection and storage to processing and transmission.

Encryption is a fundamental security measure. Data should be encrypted both at rest (while stored) and in transit (while moving across networks). Techniques like Transparent Data Encryption (TDE) can encrypt entire databases, while column-level encryption allows for more granular control. For data in motion, protocols like TLS (Transport Layer Security) are essential. Beyond encryption, robust access control mechanisms, identity management, network security, intrusion detection systems, and regular security audits are crucial components of a comprehensive big data security strategy. Emerging technologies like homomorphic encryption, which allows computations to be performed on encrypted data, show promise for enhancing privacy in big data analytics.
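
For data in transit, the sketch below shows one way a Python client might configure TLS using only the standard library's `ssl` module. The helper name `make_client_tls_context` and the choice of TLS 1.2 as a minimum version are assumptions for this example, not a universal recommendation. Encryption at rest is not shown because it depends on the storage system in use.

```python
import ssl


def make_client_tls_context():
    """Build a client-side TLS context with certificate checks enabled."""
    # create_default_context() turns on certificate and hostname verification.
    context = ssl.create_default_context()
    # Assumed policy for this sketch: refuse protocol versions older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


tls_context = make_client_tls_context()
print(tls_context.verify_mode, tls_context.check_hostname, tls_context.minimum_version)
```

A context like this would be passed to a network client, for example through `context.wrap_socket(...)` or the `context` argument of `urllib.request.urlopen`, so that every connection is both encrypted and authenticated.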

Taming the Chaos: Handling Unstructured Data

A significant portion of big data is unstructured or semi-structured, including text documents, social media posts, images, videos, audio files, and sensor data. Unlike structured data that fits neatly into relational database tables, unstructured data lacks a predefined format, making it more challenging to process and analyze using traditional methods. Extracting meaningful insights from this "chaos" requires specialized tools and techniques.

Natural Language Processing (NLP) techniques are used to analyze textual data, enabling tasks like sentiment analysis, topic modeling, and information extraction. Computer vision algorithms process images and videos to identify objects, faces, and scenes. Audio processing techniques can transcribe speech and analyze sound patterns. Storing and querying unstructured data often involves NoSQL databases like document stores or key-value stores, which offer more flexible schemas. Data lakes, which can store vast amounts of raw data in its native format, have also become popular for managing diverse data types before they are processed and refined for specific analytical purposes.
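
As a small taste of the sentiment analysis mentioned above, the sketch below scores text with a hand-written word list. This is a toy lexicon approach assumed for illustration: the word lists and example posts are invented, and practical NLP systems rely on trained models that handle negation, sarcasm, and context.

```python
import re

# Tiny hypothetical sentiment lexicon.
POSITIVE = {"great", "love", "fast", "helpful"}
NEGATIVE = {"slow", "broken", "terrible", "refund"}


def tokenize(text):
    """Turn free-form text into lowercase word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment_score(text):
    """Count positive words minus negative words."""
    tokens = tokenize(text)
    positives = sum(token in POSITIVE for token in tokens)
    negatives = sum(token in NEGATIVE for token in tokens)
    return positives - negatives


posts = [
    "Love the new app, support was fast and helpful!",
    "Checkout is broken and the site is slow.",
]
scores = [sentiment_score(post) for post in posts]
print(scores)
```

The important step is the first one: tokenization turns unstructured text into something countable, after which ordinary analytical techniques can be applied.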

The Edge Advantage: Reducing Latency with Edge Computing

In many Big Data applications, particularly those involving IoT devices and real-time decision-making, latency (the delay in data processing and response) can be a critical issue. Sending massive amounts of data from distributed sensors or devices to a centralized cloud for processing and then back to the device for action can introduce unacceptable delays. Edge computing offers a solution by bringing computation and data storage closer to the source of data generation – at the "edge" of the network.

By processing data locally on or near the device, edge computing can significantly reduce latency, conserve network bandwidth, and improve the responsiveness of applications. For example, in autonomous vehicles, critical decisions based on sensor data need to be made almost instantaneously, making edge processing essential. In industrial IoT, analyzing sensor data at the edge can enable rapid detection of equipment anomalies and trigger immediate alerts or shutdowns. While not all data processing needs to happen at the edge, it provides a powerful way to handle time-sensitive tasks and filter or aggregate data before sending it to a central system for more in-depth analysis. This decentralized approach complements centralized cloud architectures, creating a more efficient and responsive big data ecosystem.
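
The filter-and-aggregate pattern described above can be sketched as a function that might run on an edge device. The sensor readings, the alert threshold, and the function name are all hypothetical. The point is only that a compact summary and any urgent alerts leave the device instead of every raw reading.

```python
def summarize_at_edge(readings, alert_threshold):
    """Reduce a window of raw readings to a small summary plus urgent alerts."""
    alerts = [value for value in readings if value > alert_threshold]
    summary = {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": sum(readings) / len(readings),
    }
    return summary, alerts


# Hypothetical temperature readings from one machine over a short window.
window = [70.0, 71.0, 69.0, 98.0, 72.0]

summary, alerts = summarize_at_edge(window, alert_threshold=90.0)
print(summary)
print(alerts)
```

Here the alert can be acted on locally and immediately, while only the four-field summary needs to travel to the central system for longer-term analysis.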

Ethical and Privacy Considerations

The power of Big Data Analytics brings with it significant ethical responsibilities and privacy concerns. As we collect, analyze, and utilize vast amounts of personal and sensitive information, it is crucial to navigate this landscape responsibly. This section explores the intersection of technology and societal impact, delving into data protection regulations like GDPR, strategies to mitigate algorithmic bias, the importance of transparency in automated decision-making, and the development of ethical AI frameworks. These considerations are paramount for policymakers, ethicists, corporate leaders, and indeed anyone involved in the Big Data ecosystem.

Navigating the Regulatory Maze: GDPR and Data Sovereignty

The proliferation of data has led to increased scrutiny and regulation regarding its collection, storage, and use. The General Data Protection Regulation (GDPR), implemented by the European Union, is a landmark piece of legislation that has set a global standard for data privacy. GDPR grants individuals significant rights over their personal data, including the right to access, rectify, and erase their data, as well as the right to data portability and the right to object to certain types of processing. Organizations handling the data of EU residents, regardless of where the organization is based, must comply with these stringent requirements or face substantial penalties.

Data sovereignty is another critical concept, referring to the idea that data is subject to the laws and governance structures within the nation or region where it is collected or processed. This means that data stored in a particular country may be subject to that country's laws regarding access, privacy, and security. Navigating the complex web of international data protection laws and data sovereignty requirements is a significant challenge for global organizations. It requires a thorough understanding of legal obligations, robust data governance practices, and often, investments in technology to manage data residency and cross-border data transfers in a compliant manner.

Confronting Bias: Strategies for Fairer Algorithms

One of the most significant ethical challenges in Big Data Analytics and Artificial Intelligence is algorithmic bias. Machine learning models are trained on data, and if that data reflects historical biases present in society (e.g., biases related to race, gender, age, or socioeconomic status), the models can inadvertently learn and perpetuate, or even amplify, these biases in their predictions and decisions. This can lead to unfair or discriminatory outcomes in critical areas like loan applications, hiring processes, criminal justice, and healthcare.

Mitigating algorithmic bias requires a multi-faceted approach. It starts with ensuring that training datasets are as diverse and representative as possible and carefully examining them for potential biases. During model development, techniques can be employed to detect and reduce bias, such as re-weighting data, modifying learning algorithms, or adjusting decision thresholds. Post-deployment, continuous monitoring and auditing of algorithmic outputs are necessary to identify and address any emergent biases. Furthermore, promoting diversity within data science teams can bring different perspectives to the table and help in identifying and challenging biased assumptions. Transparency in how algorithms make decisions is also crucial for building trust and enabling scrutiny.
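
Two of the steps above, examining data for bias and re-weighting it, can be sketched with a toy dataset. The example assumes a single protected attribute with groups "A" and "B" and a binary favorable outcome, all invented for illustration. The weighting formula follows the commonly described reweighing idea of making group and label statistically independent, and it is only one of several possible mitigation techniques.

```python
from collections import Counter

# Hypothetical (group, label) pairs; label 1 is the favorable outcome.
records = [
    ("A", 1), ("A", 1), ("A", 1), ("A", 0),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]


def selection_rates(rows):
    """Share of favorable outcomes within each group."""
    totals = Counter(group for group, _ in rows)
    positives = Counter(group for group, label in rows if label == 1)
    return {group: positives[group] / totals[group] for group in totals}


def reweighing_weights(rows):
    """Weight each (group, label) cell by expected / observed frequency."""
    n = len(rows)
    group_counts = Counter(group for group, _ in rows)
    label_counts = Counter(label for _, label in rows)
    joint_counts = Counter(rows)
    return {
        (group, label): (group_counts[group] * label_counts[label]) / (n * count)
        for (group, label), count in joint_counts.items()
    }


def weighted_selection_rates(rows, weights):
    """Selection rates after applying per-record weights."""
    totals, positives = Counter(), Counter()
    for group, label in rows:
        totals[group] += weights[(group, label)]
        if label == 1:
            positives[group] += weights[(group, label)]
    return {group: positives[group] / totals[group] for group in totals}


rates = selection_rates(records)
weights = reweighing_weights(records)
balanced = weighted_selection_rates(records, weights)
print(rates)
print(balanced)
```

In this toy data the raw selection rates differ sharply between the two groups, and the weights equalize them. Whether equal selection rates is the right fairness goal depends on the application, which is why the measurement step matters as much as the correction.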

The Glass Box Imperative: Transparency in Automated Decisions

As algorithms increasingly drive automated decision-making in various aspects of our lives, the demand for transparency and explainability has grown significantly. It's no longer sufficient for a system to simply produce an output; users, regulators, and the public want to understand *how* a decision was reached. This is particularly important when automated decisions have significant consequences for individuals, such as in credit scoring, medical diagnosis, or legal judgments.
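
For a simple linear scoring model, how a decision was reached can be shown directly by listing each input's contribution to the final score. The sketch below assumes such a model; the feature names, weights, and applicant values are hypothetical, and more complex models need dedicated explanation techniques rather than this direct breakdown.

```python
def explain_linear(weights, bias, features):
    """Return the score and each feature's contribution, largest effect first."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical credit-scoring weights and one applicant's (scaled) inputs.
weights = {"income": 0.5, "debt_ratio": -2.0, "late_payments": -1.0}
applicant = {"income": 4.0, "debt_ratio": 0.5, "late_payments": 3.0}

score, ranked = explain_linear(weights, bias=1.0, features=applicant)
print(score)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.1f}")
```

An explanation in this form tells the applicant which factor weighed most heavily on the outcome, which is the kind of insight regulators and users are asking for.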

"Black box" models, where the internal workings are opaque even to their creators, can erode trust and make it difficult to identify errors or biases. Therefore, there's a push towards developing "glass box" or explainable AI (XAI) models. XAI techniques aim to provide insights into how a model arrived at a particular prediction or decision, making the process more interpretable. This transparency is vital for accountability, allowing for the auditing of algorithmic decisions, identifying potential flaws, and ensuring that systems operate fairly and ethically. While achieving full transparency can be challenging, especially with highly complex models, it is a critical area of research and development in responsible AI.

Building Trust: The Rise of Ethical AI Frameworks

In response to the growing awareness of the societal impact of AI and Big Data Analytics, numerous organizations, research institutions, and governmental bodies are developing ethical AI frameworks. These frameworks aim to provide principles, guidelines, and best practices for the responsible design, development, deployment, and governance of AI systems. While specific frameworks may vary, common principles often include fairness, accountability, transparency, privacy, security, non-maleficence (do no harm), and human oversight.

The goal of these frameworks is to ensure that AI technologies are developed and used in a way that aligns with human values, respects fundamental rights, and promotes societal well-being. They encourage a proactive approach to ethics, embedding ethical considerations throughout the entire AI lifecycle, from initial conception to ongoing operation. Ethical AI frameworks are not just about compliance with laws and regulations; they are about fostering a culture of responsibility and building trust with users and the public. As AI continues to evolve, these frameworks will play an increasingly important role in shaping its trajectory and ensuring that its benefits are realized equitably and responsibly. UNESCO, for example, has put forth a Recommendation on the Ethics of Artificial Intelligence, emphasizing human rights and dignity. Hong Kong's Digital Policy Office has also developed an Ethical Artificial Intelligence Framework to guide the incorporation of ethical elements in AI projects.

Career Paths and Skill Development

The field of Big Data Analytics offers a multitude of exciting career opportunities for those with the right skills and passion for data. This section will map out common career trajectories, outline essential technical and soft skills, and discuss strategies for building a strong portfolio and acquiring relevant certifications. Whether you're an aspiring data professional, a student exploring options, or a career changer looking to enter this dynamic field, this guidance aims to provide actionable steps to help you navigate your journey. It's a field that demands continuous learning, but the rewards, both intellectual and professional, can be substantial.

Charting Your Course: Key Roles in Big Data

The Big Data ecosystem encompasses a variety of specialized roles, each contributing to the process of turning raw data into actionable insights. Some of the most prominent roles include:

* Data Scientist: Often considered a multidisciplinary role, Data Scientists use a combination of statistics, machine learning, and domain expertise to analyze complex data, build predictive models, and extract insights. They are typically involved in formulating data-driven questions and communicating findings to stakeholders.
* Data Analyst: Data Analysts focus on collecting, cleaning, processing, and performing statistical analysis of data. They create visualizations and reports to help businesses understand trends, make better decisions, and track performance. While they use analytical tools, their work might be less focused on advanced predictive modeling compared to Data Scientists.
* Machine Learning Engineer: ML Engineers are responsible for designing, building, and deploying machine learning models at scale. They work closely with Data Scientists to productionize models and ensure they are robust, scalable, and efficient. This role requires strong software engineering skills in addition to ML expertise.
* Data Engineer: Data Engineers build and maintain the infrastructure and architecture that allows for the collection, storage, and processing of large datasets. They are responsible for creating data pipelines, ensuring data quality, and making data accessible for analysts and scientists.
* Business Analyst (with a data focus): Business Analysts bridge the gap between business needs and technical solutions. In a data context, they help define business problems that can be solved with data analytics, interpret analytical results from a business perspective, and help translate insights into actionable strategies.

It's important to remember that these roles can overlap, and the specific responsibilities may vary depending on the organization's size and structure. Many professionals find themselves growing and evolving between these roles as their careers progress.

The Analyst's Toolkit: Essential Technical Proficiencies

A strong foundation in several technical areas is crucial for success in Big Data Analytics. While the specific tools and technologies may evolve, the underlying concepts and skills remain vital. Key technical proficiencies include:

* Programming Languages: Python has become the de facto language for data science and machine learning due to its extensive libraries (like Pandas, NumPy, Scikit-learn, TensorFlow, and PyTorch) and relative ease of use. R is another popular language, particularly favored for statistical analysis and data visualization. For data engineering and working with frameworks like Spark, Scala and Java are also important.
* Database Management and SQL: Understanding database concepts (both relational and NoSQL) and proficiency in SQL (Structured Query Language) are fundamental for data retrieval, manipulation, and management.
* Big Data Technologies: Familiarity with frameworks like Apache Hadoop and Apache Spark, as well as cloud-based big data platforms (e.g., AWS, Azure, GCP services), is increasingly essential.
* Statistical Analysis and Mathematics: A solid grasp of statistical concepts (hypothesis testing, regression, probability, etc.) and relevant mathematics (linear algebra, calculus) is necessary for understanding and applying analytical techniques effectively.
* Data Visualization: The ability to create clear and compelling visualizations using tools like Tableau, Power BI, or Python libraries (e.g., Matplotlib, Seaborn) is crucial for communicating insights effectively.
* Machine Learning Techniques: Understanding various machine learning algorithms, how they work, and when to apply them is key, especially for roles like Data Scientist and ML Engineer.

Continuous learning is a hallmark of this field, as new tools and techniques emerge regularly.

Beyond the Code: Crucial Soft Skills for Collaboration

While technical skills are the bedrock of a Big Data Analytics career, soft skills are equally important for effective collaboration and impact. Professionals in this field often work in multidisciplinary teams and need to communicate complex findings to non-technical audiences. Key soft skills include:

* Communication: The ability to clearly explain complex analytical concepts and results to diverse audiences, both verbally and in writing, is paramount. This includes storytelling with data to make insights engaging and actionable.
* Problem-Solving: Big Data projects often involve tackling ambiguous and complex problems. Strong analytical and critical thinking skills are needed to break down problems, identify appropriate solutions, and interpret results.
* Curiosity and Continuous Learning: The field of Big Data is constantly evolving. A natural curiosity and a commitment to lifelong learning are essential for staying up-to-date with new technologies, techniques, and industry trends.
* Business Acumen: Understanding the business context and how data insights can drive value is crucial. This involves being able to translate business questions into data problems and data solutions into business actions.
* Collaboration and Teamwork: Big Data projects are rarely solo endeavors. The ability to work effectively with colleagues from different backgrounds (e.g., engineers, domain experts, business stakeholders) is vital.
* Attention to Detail: Accuracy is critical in data analysis. Meticulous attention to detail in data cleaning, analysis, and interpretation helps ensure the reliability of findings.

Developing these soft skills alongside technical expertise will significantly enhance your effectiveness and career progression in Big Data Analytics.

Paving Your Path: Certifications and Portfolio Power

For those looking to enter or advance in the Big Data Analytics field, certifications and a strong portfolio can be valuable assets. Certifications from reputable organizations or technology providers (e.g., AWS, Google Cloud, Microsoft, Cloudera) can validate your skills in specific tools, platforms, or methodologies. They can demonstrate to potential employers that you have a certain level of proficiency and commitment to the field. While certifications alone may not guarantee a job, they can help your resume stand out and provide a structured learning path.

Perhaps even more impactful is a well-curated portfolio of projects. A portfolio showcases your practical skills and ability to apply your knowledge to real-world (or realistic) problems. These projects can stem from online courses, personal initiatives, contributions to open-source projects, or even work done in previous roles. When building your portfolio:

- Choose projects that align with your career interests and the types of roles you're targeting.

- Clearly document your process, including the problem statement, data sources, methodologies used, code (e.g., on GitHub), and the insights or results achieved.

- Focus on demonstrating a range of skills, from data cleaning and exploration to modeling and visualization.

- If possible, try to quantify the impact of your work or the insights derived.

A strong portfolio provides tangible evidence of your capabilities and can be a powerful talking point during job interviews. Many online courses, including those listed on OpenCourser, include capstone projects that can form the basis of your portfolio.

Formal Education Pathways

For individuals considering an academic route into Big Data Analytics, formal education programs offer structured learning environments, access to experienced faculty, and opportunities for in-depth research. This section explores various academic pathways, including undergraduate programs, graduate research opportunities, interdisciplinary PhD programs, and the role of capstone projects and theses. Aligning your educational choices with your long-term career goals, whether in academia or industry, is a key consideration.

Laying the Groundwork: Undergraduate Data Science Programs

The growing demand for data-savvy professionals has led to a surge in undergraduate programs specifically focused on Data Science, Big Data, or Analytics. These programs typically provide a multidisciplinary curriculum that integrates computer science, statistics, and mathematics, along with domain-specific knowledge in areas like business or health sciences. Students in these programs can expect to learn foundational programming skills (often in Python and R), database management, statistical modeling, machine learning principles, and data visualization techniques.

An undergraduate degree in Data Science or a related field like Computer Science, Statistics, or Mathematics with a data-focused specialization can provide a strong launching pad for a career in Big Data Analytics. These programs often emphasize hands-on projects, internships, and sometimes even research opportunities, allowing students to apply their learning to real-world problems. When choosing an undergraduate program, consider factors like the curriculum's breadth and depth, the faculty's expertise, industry connections, and opportunities for practical experience. Exploring options on platforms like OpenCourser's Data Science category can reveal related online courses that might complement formal studies or offer foundational knowledge.

Deep Dives: Graduate Research Opportunities

For those seeking to delve deeper into the theoretical and applied aspects of Big Data Analytics, graduate programs (Master's or PhD) offer significant research opportunities. Master's programs in Data Science, Business Analytics, Computer Science (with a data specialization), or Statistics often include a substantial research component, such as a thesis or a capstone research project. These programs equip students with advanced analytical techniques, machine learning methodologies, and experience in handling complex, large-scale datasets.

PhD programs provide the most intensive research experience, preparing individuals for careers in academia or high-level research roles in industry. Doctoral research in Big Data Analytics can span a wide range of topics, from developing novel machine learning algorithms and scalable data processing architectures to exploring the ethical implications of big data and creating innovative applications in specific domains. Graduate research often involves collaboration with faculty experts, access to specialized computational resources, and opportunities to publish findings in academic journals and conferences. This rigorous training hones critical thinking, problem-solving, and advanced technical skills.

Bridging Disciplines: Interdisciplinary PhD Programs

The inherently interdisciplinary nature of Big Data Analytics has spurred the growth of interdisciplinary PhD programs. These programs recognize that solving complex real-world problems often requires integrating knowledge and methodologies from multiple fields. For example, a PhD program might combine elements of computer science, statistics, and a specific application domain like bioinformatics, computational social science, urban analytics, or environmental science.

Students in such programs benefit from exposure to diverse perspectives and research approaches. They learn to communicate and collaborate effectively with experts from different backgrounds, a skill highly valued in both academic and industrial settings. Interdisciplinary research often tackles cutting-edge problems at the intersection of fields, leading to innovative solutions and a broader understanding of the impact of data. When considering an interdisciplinary PhD, look for programs with strong faculty representation from the relevant disciplines and a clear framework for integrating different areas of study.

Showcasing Mastery: Capstone Projects and Thesis Requirements

A common and highly valuable component of many formal education programs in Data Science and Big Data Analytics, at both undergraduate and graduate levels, is the capstone project or thesis. These culminating experiences provide students with an opportunity to apply the knowledge and skills they've acquired throughout their studies to a substantial, often real-world or research-oriented, problem.

A capstone project typically involves working on a complex data analysis task, from defining the problem and collecting/cleaning data to applying analytical techniques, interpreting results, and presenting findings. It might be done individually or in a team and often involves an external partner or a dataset from a real organization. A thesis, more common at the Master's and PhD levels, involves a more in-depth, original research contribution to the field. Both capstone projects and theses serve as excellent portfolio pieces, demonstrating a student's ability to manage a significant project, solve complex problems, and communicate results effectively. They are often a key differentiator for graduates entering the job market.

Many online specializations and degree programs available through platforms searchable on OpenCourser also culminate in capstone projects, providing flexible pathways to gain this valuable experience.

Online Learning and Self-Education

In the rapidly evolving landscape of Big Data Analytics, online learning and self-education have become indispensable pathways for skill acquisition and career advancement. This section emphasizes the flexibility and project-based learning opportunities offered through online platforms. We'll explore micro-credentials, participation in open-source projects, the use of virtual labs for hands-on practice, and hybrid learning models. These avenues are particularly valuable for self-directed learners and career switchers seeking accessible, non-traditional educational routes into this dynamic field.

Flexible Learning: Micro-Credentials and Nano-Degrees

The rise of online learning platforms has democratized access to education in Big Data Analytics, offering flexible and often more affordable alternatives or complements to traditional degree programs. Micro-credentials, such as certificates from specialized courses or a series of related courses (often called Specializations or Professional Certificates), allow learners to gain targeted skills in specific areas like data visualization, machine learning with Python, or big data processing with Spark. These can be particularly useful for professionals looking to upskill in a particular domain or for individuals seeking to build foundational knowledge before committing to a longer program.

Nano-degrees or similar intensive, often project-based online programs, offer a more comprehensive curriculum designed to equip learners with job-ready skills in a relatively short period. These programs typically focus on practical application and often include mentorship and career support services. Platforms like Coursera, edX, Udacity, and others, which are searchable on OpenCourser, host a vast array of such programs from universities and industry leaders. The flexibility to learn at one's own pace makes these options attractive for those balancing studies with work or other commitments. When choosing micro-credentials or nano-degrees, it's important to research the provider's reputation, the curriculum's relevance to your goals, and the hands-on project opportunities included.

Many learners find success by exploring the diverse catalog of Data Science courses available through OpenCourser to build a customized learning path.

Learning by Doing: Open-Source Project Participation

Contributing to open-source projects is an excellent way for self-directed learners and aspiring Big Data professionals to gain practical experience, build a portfolio, and network with others in the field. The Big Data ecosystem is rich with open-source tools and frameworks like Apache Spark, Hadoop, Kafka, and various machine learning libraries (e.g., TensorFlow, PyTorch, scikit-learn). Many of these projects welcome contributions from the community, ranging from documentation improvements and bug fixes to developing new features.

Participating in open-source projects allows you to:

- Learn from experienced developers: By reviewing existing code and receiving feedback on your contributions, you can learn best practices in software development and data engineering.

- Gain hands-on experience: You'll work with real-world codebases and tackle genuine technical challenges.

- Build your portfolio: Contributions to well-known open-source projects can be a significant asset on your resume and GitHub profile.

- Network: You'll interact with a global community of developers and data professionals, which can lead to mentorship opportunities or even job prospects.

Getting started can be as simple as finding a project that interests you, exploring its codebase, and looking for ways to contribute, often starting with smaller tasks. Many projects have contributor guidelines and communities to help new members get involved.

Simulated Environments: Virtual Labs for Hands-On Practice

One of the challenges in learning Big Data Analytics is gaining access to the necessary infrastructure and datasets for hands-on practice. Setting up a local Hadoop or Spark cluster can be complex and resource-intensive. Virtual labs and cloud-based sandbox environments provide a solution by offering pre-configured environments where learners can experiment with big data tools and techniques without the setup overhead.

Many online courses and platforms now integrate virtual labs directly into their curriculum. These labs allow students to run code, execute queries on large datasets, and work with tools like Jupyter notebooks, Spark, and SQL databases in a simulated but realistic environment. Cloud providers also offer free tiers or trial credits that can be used to explore their big data services (e.g., Amazon S3, Google BigQuery, Azure Data Lake Storage). These hands-on experiences are invaluable for reinforcing theoretical concepts and developing practical skills. They allow learners to experiment, make mistakes, and learn in a safe and accessible setting, which is crucial for mastering the practical aspects of Big Data Analytics.

For learners looking for structured environments to practice, many courses on OpenCourser feature guided projects and virtual lab components.

The Best of Both Worlds: Hybrid Learning Models

Hybrid learning models, which combine elements of online learning with in-person components or mentorship, are gaining traction in Big Data education. These models aim to offer the flexibility and accessibility of online courses while providing the benefits of direct interaction and personalized support. For example, a program might consist primarily of online modules and assignments but also include periodic workshops, bootcamps, or one-on-one sessions with instructors or mentors.

Mentorship, whether formal or informal, can be particularly valuable in a complex field like Big Data Analytics. A mentor can provide guidance on learning paths, offer career advice, help troubleshoot challenging problems, and provide encouragement. Some online programs explicitly build mentorship into their structure. Additionally, learners can seek out mentors through professional networking, online communities, or by participating in local tech meetups. The combination of structured online content with personalized human interaction can create a powerful and effective learning experience, catering to different learning styles and providing support throughout the educational journey. For those on a budget, OpenCourser's deals page can be a great resource for finding discounts on courses and learning materials, potentially making hybrid models more accessible.

Industry Trends and Future Directions

The field of Big Data Analytics is anything but static; it is a dynamic and rapidly evolving domain. This section will identify emerging technologies and trends that are set to reshape the analytics landscape. We'll explore the potential convergence of Big Data with quantum computing, the rise of AutoML and the democratization of analytics, the growing importance of sustainability analytics for ESG reporting, and the development of federated learning for enhanced privacy preservation. Staying abreast of these future directions is crucial for innovators, technology strategists, and anyone looking to remain at the forefront of this exciting field.

The Quantum Leap: Convergence with Quantum Computing

While still in its nascent stages, quantum computing holds the potential to reshape Big Data Analytics by speeding up certain classes of computation. Classical computers, based on bits that represent either 0 or 1, can struggle with the sheer scale and complexity of certain big data problems, especially those involving optimization, simulation, or searching vast solution spaces. Quantum computers use qubits, which can exist in a superposition of 0 and 1; quantum algorithms exploit superposition and interference to solve some of these problems in far fewer steps than the best known classical methods.
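In standard textbook notation (not taken from the original text), the state of a single qubit is written as a weighted combination of the two basis states, with complex amplitudes whose squared magnitudes sum to one:

```latex
|\psi\rangle = \alpha\,|0\rangle + \beta\,|1\rangle,
\qquad |\alpha|^2 + |\beta|^2 = 1
```

Measuring the qubit yields 0 with probability $|\alpha|^2$ and 1 with probability $|\beta|^2$. A register of $n$ qubits is described by $2^n$ such amplitudes, which is where the potential for large speedups comes from. A measurement still returns only one outcome, so any practical advantage depends on algorithms designed to make the useful answer likely.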

This capability could dramatically accelerate tasks like complex data modeling, drug discovery (by simulating molecular interactions), and financial modeling (for risk analysis and portfolio optimization); it could also break some widely used encryption schemes. For Big Data Analytics, quantum machine learning algorithms could lead to breakthroughs in pattern recognition and predictive accuracy. While widespread commercial application is still some years away, significant research and investment are being poured into quantum computing, and its eventual integration with big data frameworks could unlock solutions to problems currently considered intractable. According to a report on Gartner's website, exploring emerging technologies like quantum computing is becoming increasingly important for future planning.

Analytics for All: AutoML and the Democratization of Analytics

Automated Machine Learning (AutoML) is a rapidly advancing trend aimed at automating the end-to-end process of applying machine learning to real-world problems. Traditional machine learning model development can be a time-consuming and expertise-intensive process, involving tasks like data preprocessing, feature engineering, model selection, hyperparameter tuning, and model deployment. AutoML tools aim to automate many of these steps, making machine learning more accessible to a broader range of users, including business analysts and domain experts who may not have deep data science expertise.

This trend is contributing to the democratization of analytics, empowering more people within an organization to leverage data-driven insights without relying solely on a specialized team of data scientists. By simplifying the ML workflow, AutoML can accelerate the development and deployment of AI solutions, enabling organizations to tackle more analytical projects and integrate AI more broadly into their operations. While AutoML doesn't replace the need for skilled data professionals entirely (human oversight and domain expertise remain crucial), it serves as a powerful enabler, allowing data science teams to focus on more complex and strategic problems.
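The core loop that AutoML tools automate can be sketched in a few lines: try several candidate configurations, score each on held-out data, and keep the best. The example below is a deliberately tiny illustration in plain Python, using a hypothetical one-dimensional k-nearest-neighbours regressor and synthetic data. Real AutoML systems search far larger spaces of models, features, and hyperparameters.

```python
def knn_predict(train, x, k):
    """Predict y at x as the mean target of the k nearest training points."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    return sum(y for _, y in nearest) / k


def validation_error(train, valid, k):
    """Mean squared error of the k-NN model on the validation set."""
    return sum((knn_predict(train, x, k) - y) ** 2 for x, y in valid) / len(valid)


def auto_select_k(train, valid, candidates):
    """Automated hyperparameter selection: keep the k with the lowest validation error."""
    return min(candidates, key=lambda k: validation_error(train, valid, k))


# Synthetic data following y = 2x, split into training and validation halves.
data = [(float(x), 2.0 * x) for x in range(20)]
train, valid = data[::2], data[1::2]

best_k = auto_select_k(train, valid, candidates=[1, 2, 8])
print("selected k:", best_k)
```

The person running this never chooses `k` by hand, which is the sense in which such tooling lowers the expertise barrier. Deciding whether the validation setup and the candidate list are sensible still requires human judgment.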

Data for Good: Sustainability Analytics and ESG Reporting

There is a growing global emphasis on Environmental, Social, and Governance (ESG) factors in business and investment. Organizations are increasingly expected to measure, report on, and improve their performance in these areas. Big Data Analytics is playing a crucial role in enabling sustainability analytics and supporting robust ESG reporting.

By collecting and analyzing data from diverse sources – such as energy consumption metrics, supply chain information, employee diversity statistics, carbon emissions data, and community impact assessments – organizations can gain insights into their ESG performance. Analytics can help identify areas for improvement, track progress towards sustainability goals, optimize resource usage, and ensure compliance with evolving ESG regulations and standards. For example, analyzing sensor data from industrial equipment can help optimize energy efficiency, while analyzing supply chain data can help identify and mitigate environmental or social risks. The ability to provide transparent, data-backed ESG reporting is becoming increasingly important for investor relations, brand reputation, and long-term value creation.

Privacy-Preserving Power: Federated Learning

As concerns about data privacy intensify, and regulations like GDPR become more stringent, there's a growing need for analytical techniques that can extract insights without compromising individual privacy. Federated Learning (FL) is an emerging machine learning approach that addresses this challenge by training a shared global model across multiple decentralized edge devices or servers holding local data samples, without exchanging the raw data itself.

In a federated learning setup, the model is sent to the local devices where the data resides. Each device trains the model on its local data, and then only the updated model parameters (or summaries of the learning) are sent back to a central server to be aggregated into an improved global model. This means the sensitive raw data never leaves the local device, significantly enhancing privacy. Federated learning is particularly relevant for applications involving sensitive data, such as healthcare (training models on patient data from multiple hospitals without sharing patient records), mobile devices (improving predictive keyboards without uploading user text), and finance. While FL presents its own set of challenges (e.g., communication efficiency, statistical heterogeneity of data across devices), it offers a promising path towards collaborative machine learning with enhanced privacy preservation.
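The round trip described above (send the model out, train locally, send back only parameters, aggregate) can be sketched with a toy example. The code below assumes a one-dimensional linear model and two hypothetical clients, and aggregates with a size-weighted average in the style of federated averaging. It is a simplified illustration with no networking, encryption, or privacy accounting.

```python
def local_update(weights, data, lr=0.02, epochs=5):
    """Run a few gradient-descent steps for y = w*x + b on one client's private data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return (w, b)


def federated_average(client_weights, client_sizes):
    """Aggregate client parameters, weighting each client by its number of samples."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)


# Hypothetical private datasets; both follow y = 2x + 1 but never leave their client.
clients = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
]

global_weights = (0.0, 0.0)
for _ in range(500):
    # Each client starts from the current global model and trains locally.
    updates = [local_update(global_weights, data) for data in clients]
    # Only the updated parameters reach the server.
    global_weights = federated_average(updates, [len(d) for d in clients])

print(global_weights)
```

Only the `(w, b)` pairs cross the client boundary in this sketch. In practice, model updates can still leak information about the underlying data, which is why federated learning is often combined with additional protections.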

Impact on Business Strategy and Economy

Big Data Analytics is more than just a technological advancement; it is a fundamental driver of change in business strategy and the broader economy. This section will link technical capabilities to strategic business outcomes, exploring how organizations are leveraging data-driven decision-making frameworks, analyzing the return on investment (ROI) of analytics implementations, gaining competitive advantage through predictive insights, and navigating the workforce transformation and upskilling costs associated with this data revolution. Understanding these impacts is crucial for C-suite executives, investors, and anyone involved in strategic planning.

The Data-Driven Organization: Decision-Making Frameworks

The ultimate goal of Big Data Analytics in a business context is to foster a data-driven decision-making culture. This means moving away from decisions based on gut feelings or anecdotal evidence and towards strategies grounded in empirical data and analytical insights. Organizations that successfully embed data into their decision-making processes often establish clear frameworks that guide how data is collected, analyzed, interpreted, and acted upon.

These frameworks typically involve several key components:

- Identifying Key Business Questions: Clearly defining the strategic questions that data needs to answer.

- Data Collection and Management: Ensuring access to relevant, high-quality data from various sources.

- Analytical Capabilities: Having the right tools, technologies, and talent to perform robust analysis.

- Insight Generation: Translating analytical findings into understandable and actionable insights for business users.

- Integration into Workflows: Embedding data-driven insights directly into operational processes and strategic planning cycles.

- Continuous Improvement: Regularly evaluating the effectiveness of data-driven decisions and refining the framework.

Adopting such frameworks can lead to more consistent, effective, and agile decision-making across all levels of an organization, ultimately driving better business outcomes.

Measuring the Value: ROI Analysis of Analytics Implementations

Investing in Big Data Analytics capabilities – including technology, talent, and process changes – can be a significant undertaking. Therefore, organizations are keen to understand and measure the Return on Investment (ROI) of these implementations. Calculating the ROI of analytics projects involves quantifying both the costs and the benefits.

Costs can include software and hardware procurement, data storage, salaries for data professionals, training, and consulting fees. Benefits, while sometimes harder to quantify directly, can be substantial. These can manifest as increased revenue (e.g., through better customer targeting or new product development), cost savings (e.g., through operational efficiencies or fraud reduction), improved customer satisfaction and retention, enhanced risk management, and better strategic positioning.

A clear ROI analysis helps justify ongoing investment in Big Data Analytics, prioritize projects that offer the highest value, and demonstrate the tangible impact of data-driven initiatives to stakeholders. It often requires a combination of direct financial metrics and indirect measures of business improvement.
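As a worked illustration of the arithmetic, the basic ROI formula is (total benefits minus total costs) divided by total costs. The figures below are entirely hypothetical and exist only to show the calculation. Real analyses usually also account for time horizons and benefits that are hard to quantify.

```python
def roi(total_benefits, total_costs):
    """Return ROI as a fraction: (benefits - costs) / costs."""
    if total_costs <= 0:
        raise ValueError("total_costs must be positive")
    return (total_benefits - total_costs) / total_costs


# Hypothetical annual figures for one analytics initiative.
costs = {
    "software_and_hardware": 120_000,
    "data_storage": 30_000,
    "salaries": 300_000,
    "training_and_consulting": 50_000,
}
benefits = {
    "added_revenue": 400_000,
    "cost_savings": 250_000,
}

project_roi = roi(sum(benefits.values()), sum(costs.values()))
print(f"ROI: {project_roi:.0%}")
```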

Seeing the Future: Competitive Advantage Through Predictive Insights

In today's highly competitive business environment, the ability to anticipate future trends and customer needs can provide a significant competitive advantage. Big Data Analytics, particularly through the use of predictive insights, empowers organizations to do just that. By analyzing historical data and identifying patterns, predictive models can forecast future outcomes, such as customer churn, demand for products, equipment failures, or market shifts.

This foresight allows businesses to be proactive rather than reactive. For example, if a model predicts that a certain segment of customers is likely to churn, the company can implement targeted retention strategies. If demand for a product is predicted to surge, supply chains can be adjusted accordingly. This ability to make data-informed predictions and take pre-emptive action enables companies to optimize resource allocation, personalize customer experiences, mitigate risks, and seize opportunities faster than their competitors. Organizations that effectively harness predictive insights can differentiate themselves in the marketplace and achieve superior performance.
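To make the demand-forecasting case concrete, here is a minimal sketch that fits a straight-line trend to past sales with ordinary least squares and flags when the forecast exceeds planned stock. It is a deliberately simple stand-in for a real predictive model. The sales history, the stock level, and the function names are all hypothetical.

```python
def fit_linear_trend(history):
    """Fit y = intercept + slope * t by least squares, with t = 0, 1, 2, ..."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two observations to fit a trend")
    mean_t = (n - 1) / 2
    mean_y = sum(history) / n
    s_tt = sum((t - mean_t) ** 2 for t in range(n))
    s_ty = sum((t - mean_t) * (y - mean_y) for t, y in enumerate(history))
    slope = s_ty / s_tt
    intercept = mean_y - slope * mean_t
    return slope, intercept


def forecast_demand(history, periods_ahead=1):
    """Extrapolate the fitted trend periods_ahead steps past the last observation."""
    slope, intercept = fit_linear_trend(history)
    return intercept + slope * (len(history) - 1 + periods_ahead)


def needs_restock(history, planned_stock, periods_ahead=1):
    """Return True when forecast demand exceeds the stock already planned."""
    return forecast_demand(history, periods_ahead) > planned_stock


# Hypothetical monthly unit sales and planned stock for next month.
monthly_units_sold = [100, 110, 120, 130, 140]
planned_stock = 145

forecast = forecast_demand(monthly_units_sold)
print(f"Forecast for next month: {forecast:.0f} units")
if needs_restock(monthly_units_sold, planned_stock):
    print("Forecast exceeds planned stock: adjust the supply plan early.")
```

Production forecasts typically account for seasonality, promotions, and uncertainty, which a single trend line ignores. The point here is only the workflow: learn a pattern from history, project it forward, and act before the event occurs.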

The Human Element: Workforce Transformation and Upskilling Costs

The rise of Big Data Analytics is driving a profound workforce transformation. As organizations become more data-centric, there is a growing demand for employees with data literacy and analytical skills across all departments, not just within specialized data teams. This necessitates significant investment in upskilling and reskilling the existing workforce to adapt to new tools, technologies, and data-driven ways of working.

The costs associated with this transformation include formal training programs, online courses, workshops, and the time employees spend learning new skills. Furthermore, organizations need to foster a culture that embraces data and encourages continuous learning. While these upskilling costs can be considerable, they are often viewed as a critical investment in building a future-ready workforce capable of leveraging data for innovation and growth. The alternative, failing to adapt, can lead to a skills gap that hinders an organization's ability to compete in the digital economy. According to the U.S. Bureau of Labor Statistics, employment of data scientists is projected to grow much faster than the average for all occupations, highlighting the increasing need for these skills.

For those looking to navigate this transformation, OpenCourser offers a wide array of courses to help build relevant skills. Exploring the Professional Development section can be a good starting point.

Frequently Asked Questions (Career Focus)

This section aims to provide concise, data-backed answers to common career concerns for those aspiring to work in Big Data Analytics, as well as for hiring managers looking to understand the talent landscape. We'll address entry requirements, in-demand skills, salary expectations, work arrangements, and the long-term outlook for careers in this field.

Breaking In: Can I Enter Analytics Without a Computer Science Degree?

Yes, it is certainly possible to enter the field of Big Data Analytics without a traditional Computer Science (CS) degree. While a CS background can be advantageous, especially for more technical roles like Data Engineering or ML Engineering, many successful professionals come from diverse educational backgrounds, including statistics, mathematics, economics, business, physics, social sciences, and other quantitative fields.

What matters most are the relevant skills and a demonstrated ability to work with data. Many individuals successfully transition by:

- Acquiring foundational knowledge: Through online courses, bootcamps, or self-study in areas like programming (Python/R), SQL, statistics, and machine learning.

- Building a strong portfolio: Showcasing practical skills through personal projects, contributions to open-source projects, or data analysis competitions.

- Gaining domain expertise: Leveraging knowledge from a previous field can be a significant asset, as understanding the context of the data is crucial.

- Networking: Connecting with professionals in the field can provide insights, mentorship, and potential job opportunities.

Employers are increasingly focusing on demonstrable skills and experience rather than solely on degree titles. Highlighting your analytical abilities, problem-solving skills, and any relevant project work is key.

The Lingua Franca of Data: Most In-Demand Programming Languages for 2025?

Predicting the exact landscape for 2025 means extrapolating from current trends. Python remains a dominant and highly in-demand programming language for Big Data Analytics and Data Science, and that is likely to continue in the near future. Its extensive libraries (pandas for data manipulation, NumPy for numerical computation, scikit-learn for machine learning, TensorFlow and PyTorch for deep learning) and its relative ease of learning make it a versatile choice for a wide range of tasks, from data cleaning and analysis to building complex machine learning models.

SQL (Structured Query Language) is another indispensable skill. Despite the rise of NoSQL databases, SQL is fundamental for querying and managing data in relational databases and is also used in many big data query engines (like Apache Hive, Spark SQL, and Presto).

While Python and SQL are often considered core, other languages also have their place:

- R: Still widely used, especially for statistical computing, data visualization, and academic research.

- Scala: Often preferred for its performance in conjunction with Apache Spark, particularly for large-scale data engineering tasks.

- Java: Remains relevant in many enterprise big data ecosystems, especially for tools built on the Hadoop platform.

Staying proficient in Python and SQL will likely provide a strong foundation, with knowledge of R, Scala, or Java being beneficial depending on the specific role and industry.
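For readers new to either language, the short sketch below shows how Python and SQL are often used together: Python handles the setup and the results, while SQL expresses the aggregation. It uses Python's built-in `sqlite3` module with an in-memory database. The `orders` table, its columns, and the sample rows are hypothetical and exist only for this example.

```python
import sqlite3


def revenue_by_region(rows):
    """Load (customer, region, amount) rows into SQLite and aggregate by region."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE orders (customer TEXT, region TEXT, amount REAL)"
        )
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
        query = """
            SELECT region, COUNT(*) AS n_orders, SUM(amount) AS revenue
            FROM orders
            GROUP BY region
            ORDER BY revenue DESC
        """
        return conn.execute(query).fetchall()
    finally:
        conn.close()


# Hypothetical sample data.
sample_orders = [
    ("alice", "north", 120.0),
    ("bob", "south", 80.0),
    ("carol", "north", 200.0),
    ("dave", "west", 50.0),
    ("erin", "south", 40.0),
]

for region, n_orders, revenue in revenue_by_region(sample_orders):
    print(f"{region}: {n_orders} orders, revenue {revenue:.2f}")
```

The same `GROUP BY` pattern carries over, with dialect differences, to the big data query engines mentioned above, which is part of why SQL remains worth learning.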

Show Me the Numbers: Salary Benchmarks Across Experience Levels?

Salaries in Big Data Analytics can be quite competitive and vary significantly based on several factors, including:

- Role: Data Scientists and Machine Learning Engineers often command higher salaries than entry-level Data Analyst positions due to the specialized skills required.

- Experience Level: As with any field, salaries generally increase with years of relevant experience and a proven track record. Entry-level positions will have lower starting salaries compared to senior, lead, or managerial roles.

- Location: Salaries can differ considerably based on the cost of living and demand for talent in specific geographic regions. Major tech hubs often offer higher salaries but also have a higher cost of living.

- Industry: Some industries, like finance and technology, may offer higher compensation packages than others.

- Skills and Education: Specialized skills (e.g., deep learning, NLP, specific cloud platforms) and advanced degrees can also influence salary levels.

While it's difficult to provide exact universal figures, you can find more specific salary information through resources like Glassdoor, Salary.com, LinkedIn Salary, and reports from recruitment firms like Robert Half. The U.S. Bureau of Labor Statistics Occupational Outlook Handbook also provides median salary data for related professions like "Data Scientists" and "Database Administrators." Generally, the field is known for offering attractive compensation due to the high demand for skilled professionals.

The Office or The Couch: Remote Work Prevalence in Analytics Roles?

The COVID-19 pandemic significantly accelerated the adoption of remote work across many industries, and Big Data Analytics was no exception. Many analytics roles, particularly those focused on data analysis, model development, and software engineering, can be performed effectively from remote locations, provided there is access to the necessary data, tools, and secure communication channels.

The prevalence of remote work in Big Data Analytics today is quite varied:

- Fully Remote: Many companies, especially in the tech sector or those with a distributed workforce model, offer fully remote positions.

- Hybrid Models: A common approach involves a mix of remote and in-office work, allowing for flexibility while still fostering in-person collaboration.

- Primarily In-Office: Some organizations, particularly in more traditional industries or those with specific security requirements, may still require employees to be predominantly on-site.

The trend towards greater flexibility seems likely to continue. When searching for roles, you'll find that many job postings now specify the work arrangement. The ability to work remotely can broaden your job search geographically and offer a better work-life balance, but it also requires strong self-discipline and effective communication skills.

Future-Proofing Your Career: Longevity Given AI Automation?

The rise of AI and automation, particularly with tools like AutoML, naturally raises questions about career longevity in Big Data Analytics. While AI will automate certain routine and repetitive tasks currently performed by data professionals, it is unlikely to replace the need for human expertise entirely. Instead, the nature of the roles is likely to evolve.

AI and automation can be seen as powerful tools that augment human capabilities, allowing professionals to:

- Focus on higher-value tasks: By automating routine data preparation or model building, professionals can spend more time on strategic problem-solving, interpreting complex results, communicating insights, and addressing ethical considerations.

- Tackle more complex problems: AI can help analyze even larger and more intricate datasets, enabling the exploration of new frontiers.

- Develop and manage AI systems: There will be an ongoing need for skilled individuals to design, build, train, deploy, monitor, and maintain these AI systems.

The skills that will remain highly valuable are critical thinking, domain expertise, creativity, communication, and the ability to ask the right questions. Continuous learning and adapting to new tools and methodologies will be crucial for long-term success. Rather than making roles obsolete, AI is more likely to transform them, creating new opportunities for those who can work alongside intelligent systems.

Jumping Ship: Cross-Industry Transition Strategies?

The skills developed in Big Data Analytics are highly transferable across different industries. If you're looking to transition from one industry to another (e.g., from finance to healthcare, or from retail to tech), a background in data analytics can be a strong asset.

Here are some strategies for making a successful cross-industry transition:

- Identify Transferable Skills: Focus on the core analytical skills you possess – problem-solving, statistical analysis, programming, data visualization, machine learning – which are valuable in any data-driven role.

- Learn the New Domain: Invest time in understanding the specific challenges, terminology, data types, and regulatory environment of the target industry. Online courses, industry publications, and networking can be helpful here.

- Tailor Your Resume and Portfolio: Highlight projects and experiences that are most relevant to the new industry. If possible, undertake a personal project using data from your target sector to demonstrate your interest and capability.

- Network in the Target Industry: Attend industry-specific conferences or meetups (online or in-person), and connect with professionals working in that field on platforms like LinkedIn.

- Be Prepared to Start at a Slightly Lower Level if Needed: Depending on the degree of change and your existing experience, you might need to be open to roles that allow you to gain specific domain experience before moving into more senior positions.

- Emphasize Adaptability and Learning Agility: Highlight your ability to quickly learn new concepts and adapt to different environments.

Many companies value the fresh perspectives that individuals from different industry backgrounds can bring.

Useful Links and Resources

To further aid your exploration into Big Data Analytics, here is a collection of useful resources. These include links to general information, learning platforms, and professional organizations.

- Bureau of Labor Statistics Occupational Outlook Handbook: Provides detailed information on various careers, including data scientists and related roles, covering job duties, education, pay, and outlook.

- IBM Big Data Analytics: Offers insights and resources from a leading technology provider in the big data space.

- Tableau on Big Data Analytics: Features articles and learning materials on big data concepts and visualization.

- TechTarget - Big Data Analytics Definition: Provides a concise definition and overview of the topic.

- OpenCourser - Data Science Courses: A comprehensive directory to find online courses in Data Science and Big Data Analytics from various providers.

- OpenCourser Learner's Guide: Contains articles and tips on how to make the most of online learning for career development.

Embarking on a journey into Big Data Analytics is a commitment to continuous learning and adaptation in a field that is constantly pushing the boundaries of what's possible with data. The challenges are significant, but the opportunities to make a meaningful impact are even greater. Whether you are just starting to explore this domain or are looking to deepen your expertise, the resources and pathways discussed in this article aim to provide a comprehensive guide to help you navigate your path. Remember that grounding your aspirations in a realistic understanding of the demands, coupled with a proactive approach to skill development, will be key to achieving your goals in the exciting world of Big Data Analytics.