Database Management

Navigating the World of Database Management

Database management is the practice of organizing, storing, and retrieving an organization's data. At its core, it involves using specialized software, known as a Database Management System (DBMS), to control the creation, maintenance, and use of a database. Think of it as the librarian for a vast collection of information, ensuring everything is in its right place and easily accessible to those who need it while keeping it secure from those who don't.

Working in database management can be quite engaging. Imagine designing the very structure that holds critical information for a hospital, ensuring doctors can quickly access patient records, or for a financial institution, safeguarding sensitive transaction data. The field also offers the excitement of problem-solving, whether it's optimizing a database for faster performance or troubleshooting an unexpected issue. Furthermore, as data becomes increasingly central to how organizations operate, database professionals often find themselves at the forefront of innovation, working with cutting-edge technologies and shaping how businesses leverage their most valuable asset: information.

Introduction to Database Management

This section will lay the groundwork for understanding what database management entails, its fundamental components, its wide-ranging applications, and how it has evolved over time. Whether you're just curious about the field or considering it as a career path, these foundational concepts will provide a solid starting point.

Definition and Purpose of Database Management Systems (DBMS)

A Database Management System (DBMS) is a software package designed to define, manipulate, retrieve, and manage data in a database. Essentially, a DBMS acts as an intermediary between the users or applications and the database itself. Its primary purpose is to let users create and manage databases and to store and retrieve data conveniently and efficiently. Without a DBMS, managing large volumes of data would be an incredibly complex and error-prone task.

The DBMS ensures data integrity and security, meaning it enforces rules to maintain the accuracy and consistency of data and controls access to the database. It also provides a way to back up and recover data in case of system failures. Furthermore, a DBMS allows multiple users and programs to access and manipulate the database concurrently without interfering with each other, a crucial feature in today's multi-user environments.

Consider a large online retailer. Their DBMS manages product information, customer details, orders, and inventory levels. When you browse their website, the DBMS retrieves product information to display. When you place an order, the DBMS updates inventory and records your purchase. All of this happens seamlessly and quickly, thanks to the underlying database management system.

Core Components: Data, Schemas, Query Languages, Storage Engines

Understanding the core components of a database system is crucial. First, there's the data itself – the raw facts and figures that are stored. This could be anything from customer names and addresses to product specifications or scientific research findings. The way this data is organized and structured is defined by the schema. A schema is like a blueprint for the database; it outlines the tables, the fields within those tables, the relationships between tables, and any constraints on the data.

To interact with the data, we use query languages. The most common query language for relational databases is SQL (Structured Query Language). SQL allows users to insert, update, delete, and retrieve data. For example, a simple SQL query might be "SELECT customer_name FROM customers WHERE city = 'New York'". This would retrieve the names of all customers located in New York.
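
To make the query above concrete, here is a minimal sketch that runs it with Python's built-in sqlite3 module. The two-column table layout and the sample rows are assumptions made for illustration (only customer_name, customers, and city come from the query itself), and an ORDER BY clause is added so the result order is predictable.

```python
import sqlite3

# In-memory database so the sketch leaves no files behind.
conn = sqlite3.connect(":memory:")

# Hypothetical schema: only the table and column names come from the query in the text.
conn.execute("CREATE TABLE customers (customer_name TEXT, city TEXT)")
conn.executemany(
    "INSERT INTO customers (customer_name, city) VALUES (?, ?)",
    [("Ada", "New York"), ("Grace", "Boston"), ("Linus", "New York")],
)

# The query from the text, with the city passed as a bound parameter
# and an ORDER BY added so the output order is stable.
rows = conn.execute(
    "SELECT customer_name FROM customers WHERE city = ? ORDER BY customer_name",
    ("New York",),
).fetchall()
new_york_customers = [name for (name,) in rows]
print(new_york_customers)  # ['Ada', 'Linus']

conn.close()
```

Passing the city as a parameter rather than pasting it into the SQL string is the usual way to avoid quoting mistakes and SQL injection.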

Finally, storage engines are components within the DBMS responsible for actually storing, retrieving, and managing data on physical storage devices like hard drives or solid-state drives. Different storage engines can offer different performance characteristics, features like transaction support, and varying levels of reliability. The choice of storage engine can significantly impact the overall performance and capabilities of the database.

Applications Across Industries (e.g., Healthcare, Finance)

Database management is not confined to a single industry; its applications are vast and varied. In healthcare, databases are critical for managing patient records, tracking medical histories, scheduling appointments, and managing billing information. Secure and efficient access to this data can literally be a matter of life and death, enabling doctors to make informed decisions quickly.

In the finance industry, databases underpin almost every operation. They store customer account information, track financial transactions, manage stock market data, and support fraud detection systems. The accuracy, security, and availability of these databases are paramount for maintaining trust and regulatory compliance. For example, when you use an ATM, a complex series of database interactions verifies your identity, checks your balance, dispenses cash, and records the transaction, all within seconds.

Beyond these, databases are essential in retail (managing inventory, sales, customer loyalty programs), education (student records, course information, library catalogs), manufacturing (supply chain management, production tracking), telecommunications (customer data, call records, network management), and government (citizen records, tax information, public services), to name just a few. Essentially, any organization that deals with a significant amount of information relies on database management.

Historical Evolution (Pre-Digital to Modern Systems)

The concept of organizing information is not new. Before digital computers, manual systems like card catalogs in libraries or filing cabinets in offices served a similar purpose. These "databases" were physical, and retrieving information was often a laborious, time-consuming process. The advent of computers in the mid-20th century revolutionized data storage and retrieval.

Early computerized database systems emerged in the 1960s, with hierarchical and network models being the dominant paradigms. These systems, while an improvement over manual methods, were often complex to design and query. The 1970s saw a pivotal development with Edgar F. Codd's introduction of the relational model at IBM. This model, based on set theory and predicate logic, provided a more structured and mathematically rigorous way to organize and query data, leading to the development of SQL. Relational Database Management Systems (RDBMS) became the industry standard for decades.

The late 1990s and 2000s brought the rise of the internet and "Big Data," characterized by massive volumes, high velocity, and diverse types of data. This spurred the development of NoSQL (Not Only SQL) databases, designed to handle the scalability and flexibility demands of web-scale applications and unstructured data. Today, the database landscape is diverse, with relational databases still widely used for structured data and transactional integrity, while NoSQL databases cater to a variety of specialized needs. Cloud databases, offering scalability and managed services, have also become increasingly prevalent, further transforming how organizations manage their data.

Key Concepts and Terminology

To truly understand database management, one must become familiar with its core concepts and terminology. This section delves into some of the essential distinctions and principles that form the bedrock of database theory and practice. These concepts are fundamental for anyone looking to design, manage, or even just interact intelligently with databases.

Relational vs. Non-Relational Databases

Databases can be broadly categorized into two main types: relational and non-relational (often referred to as NoSQL). Relational databases, as the name suggests, are based on the relational model. They organize data into tables, which consist of rows (records) and columns (attributes). Each table has a primary key, a unique identifier for each row, and relationships between tables are established using foreign keys. SQL is the standard language for querying and managing relational databases. Examples include MySQL, PostgreSQL, Oracle Database, and Microsoft SQL Server. They are known for their data integrity, consistency, and support for complex transactions, making them suitable for applications requiring strong transactional guarantees, like financial systems or inventory management.
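
The roles of primary and foreign keys can be sketched with SQLite through Python's sqlite3 module. The customers and orders tables and their columns are hypothetical, and the foreign_keys pragma is specific to SQLite, which does not enforce foreign keys unless it is switched on.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite only enforces foreign keys when this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")

# Hypothetical tables: each row is identified by its primary key.
conn.execute(
    "CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, customer_name TEXT NOT NULL)"
)
conn.execute(
    """CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers (customer_id),
        total_cents INTEGER NOT NULL
    )"""
)

conn.execute("INSERT INTO customers (customer_id, customer_name) VALUES (1, 'Ada')")
conn.execute("INSERT INTO orders (order_id, customer_id, total_cents) VALUES (10, 1, 2500)")

# An order that points at a customer that does not exist violates the foreign key.
try:
    conn.execute("INSERT INTO orders (order_id, customer_id, total_cents) VALUES (11, 99, 900)")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True

# A join follows the foreign key from each order back to its customer row.
joined = conn.execute(
    "SELECT c.customer_name, o.total_cents "
    "FROM orders AS o JOIN customers AS c ON c.customer_id = o.customer_id"
).fetchall()

print(orphan_rejected, joined)
conn.close()
```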

Non-relational databases, or NoSQL databases, encompass a variety of database models that do not adhere to the strict tabular structure of relational databases. They emerged to address the challenges of large-scale data processing, high availability, and flexible data models often encountered in web applications and big data scenarios. There are several types of NoSQL databases, including:

- Document databases (e.g., MongoDB, Couchbase): Store data in document-like structures, typically JSON or BSON. They offer flexibility as each document can have its own unique structure.

- Key-value stores (e.g., Redis, Amazon DynamoDB): Store data as a collection of key-value pairs. They are highly scalable and excellent for caching and session management.

- Column-family stores (e.g., Apache Cassandra, HBase): Group related columns into column families stored under a row key. Rows can be sparse, and a query can read only the column families it needs from a very wide table.

- Graph databases (e.g., Neo4j, Amazon Neptune): Designed to store and navigate relationships between data points. They excel at handling complex relationships and are used in social networks, recommendation engines, and fraud detection.

The choice between relational and non-relational databases depends heavily on the specific needs of the application, including the nature of the data, scalability requirements, consistency needs, and the types of queries that will be performed.
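
As a rough illustration of how the four NoSQL models listed above shape data, the following sketch uses plain Python structures. It imitates the data shapes only, not the API of any of the products named, and every key and value in it is made up.

```python
# Document model: self-contained records whose fields may differ from one to the next.
documents = [
    {"_id": 1, "name": "Ada", "emails": ["ada@example.com"]},
    {"_id": 2, "name": "Grace", "city": "Boston"},  # no "emails" field at all
]

# Key-value model: the store sees only a key and an opaque value.
key_value = {"session:42": "user=1;cart=3", "session:43": "user=2;cart=0"}

# Column-family model: row key -> column family -> columns (rows can be sparse).
column_family = {
    "user:1": {"profile": {"name": "Ada"}, "activity": {"last_login": "2024-01-01"}},
    "user:2": {"profile": {"name": "Grace"}},
}

# Graph model: nodes plus edges, stored here as adjacency sets ("who follows whom").
follows = {"Ada": {"Grace"}, "Grace": {"Linus"}, "Linus": set()}


def reachable(graph, start):
    """Return every node that can be reached from start by following edges."""
    seen, stack = set(), [start]
    while stack:
        node = stack.pop()
        for neighbour in graph[node]:
            if neighbour not in seen:
                seen.add(neighbour)
                stack.append(neighbour)
    return seen


print(reachable(follows, "Ada"))
```

The traversal in reachable is the kind of relationship-following query that graph databases are built to answer directly.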

ACID Properties and Normalization

ACID properties are a set of characteristics that guarantee database transactions are processed reliably. The acronym ACID stands for Atomicity, Consistency, Isolation, and Durability.

- Atomicity ensures that a transaction is treated as a single, indivisible unit of work. Either all of its operations are completed successfully, or none of them are. If any part of the transaction fails, the entire transaction is rolled back, leaving the database in its original state.

- Consistency guarantees that a transaction brings the database from one valid state to another. It ensures that all data integrity constraints (like primary keys, foreign keys, and data type checks) are maintained.

- Isolation ensures that concurrent transactions operate independently of each other. The intermediate state of one transaction is not visible to other transactions until it is committed. This prevents interference; at the strictest isolation level (serializable), concurrent transactions produce the same result as if they had been executed one after another.

- Durability ensures that once a transaction has been committed, its changes are permanent and will survive any subsequent system failures, such as power outages or crashes. This is typically achieved by writing changes to non-volatile storage.

ACID properties are particularly important for relational databases that handle critical financial or business data where reliability is paramount.
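
The isolation property can be observed with a small experiment. The sketch below assumes SQLite in its default journal mode, accessed through Python's sqlite3 module, and uses a hypothetical payments table. Two connections share one database file, and the reader does not see the writer's row until the writer commits.

```python
import os
import sqlite3
import tempfile

# A file-backed database lets two connections share the same data.
with tempfile.TemporaryDirectory() as tmp_dir:
    db_path = os.path.join(tmp_dir, "acid_demo.db")
    writer = sqlite3.connect(db_path)
    reader = sqlite3.connect(db_path)

    # Hypothetical table used only for this demonstration.
    writer.execute("CREATE TABLE payments (id INTEGER PRIMARY KEY, amount_cents INTEGER)")
    writer.commit()

    def count_payments(conn):
        # fetchall() exhausts the cursor so the read lock is released promptly.
        return conn.execute("SELECT COUNT(*) FROM payments").fetchall()[0][0]

    # The sqlite3 module opens a transaction implicitly before this INSERT.
    writer.execute("INSERT INTO payments (amount_cents) VALUES (1500)")
    seen_before_commit = count_payments(reader)  # the uncommitted row is invisible

    writer.commit()
    seen_after_commit = count_payments(reader)  # the committed row is now visible

    writer.close()
    reader.close()

print(seen_before_commit, seen_after_commit)
```

Other database systems offer several isolation levels with different visibility rules, so this shows one engine's behavior rather than a universal guarantee.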

Normalization is the process of organizing the columns and tables of a relational database to minimize data redundancy and improve data integrity. It involves decomposing larger tables into smaller, well-structured tables and defining relationships between them. The goals of normalization are to eliminate redundant data (storing the same piece of information in multiple places) and to ensure that data dependencies make sense (only storing related data in a table). Normalization is typically achieved by following a series of guidelines called normal forms (e.g., First Normal Form (1NF), Second Normal Form (2NF), Third Normal Form (3NF), Boyce-Codd Normal Form (BCNF)). While normalization reduces redundancy and improves integrity, it can sometimes lead to more complex queries involving multiple table joins. Therefore, a balance often needs to be struck between the level of normalization and performance requirements, sometimes leading to deliberate denormalization for specific use cases.
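
A small worked example may help. The sketch below starts from a flat list in which a department's phone number is repeated for every employee, then decomposes it into two related tables. The table names, column names, and sample values are all hypothetical.

```python
import sqlite3

# Denormalized input: the department phone number is repeated on every employee row.
flat_rows = [
    ("Ada", "Research", "555-0100"),
    ("Grace", "Research", "555-0100"),
    ("Linus", "Support", "555-0199"),
]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE departments (dept_name TEXT PRIMARY KEY, phone TEXT NOT NULL)")
conn.execute(
    "CREATE TABLE employees ("
    "emp_name TEXT PRIMARY KEY, "
    "dept_name TEXT NOT NULL REFERENCES departments (dept_name))"
)

# Decompose: each department fact is stored once, and employees refer to it by key.
for emp_name, dept_name, phone in flat_rows:
    conn.execute(
        "INSERT OR IGNORE INTO departments (dept_name, phone) VALUES (?, ?)",
        (dept_name, phone),
    )
    conn.execute(
        "INSERT INTO employees (emp_name, dept_name) VALUES (?, ?)",
        (emp_name, dept_name),
    )

department_count = conn.execute("SELECT COUNT(*) FROM departments").fetchone()[0]

# One UPDATE now changes the phone number for everyone in the department.
conn.execute("UPDATE departments SET phone = '555-0111' WHERE dept_name = 'Research'")

# The original flat view is recovered with a join, which is the query-time cost of normalizing.
rejoined = conn.execute(
    "SELECT e.emp_name, d.dept_name, d.phone "
    "FROM employees AS e JOIN departments AS d ON d.dept_name = e.dept_name "
    "ORDER BY e.emp_name"
).fetchall()

print(department_count, rejoined)
conn.close()
```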

SQL vs. NoSQL Paradigms

The distinction between SQL and NoSQL paradigms goes beyond just the query language; it reflects fundamental differences in how data is structured, stored, and managed. As mentioned earlier, SQL (Structured Query Language) is the standard language for relational databases. These databases enforce a predefined schema, meaning the structure of the data (tables, columns, data types) must be defined before data can be inserted. This rigid schema ensures data consistency and integrity and is well-suited for structured data where relationships are clearly defined and transactional consistency (ACID properties) is critical. SQL databases excel at complex queries, joins across multiple tables, and enforcing data integrity rules.

NoSQL (Not Only SQL) databases, on the other hand, offer more flexibility in terms of data models and schema. Many NoSQL databases are schema-less or have a dynamic schema, allowing the structure of the data to evolve more easily. This makes them suitable for handling unstructured or semi-structured data, such as social media posts, sensor data, or log files. NoSQL databases are often designed for horizontal scalability (scaling out by adding more servers) and high availability, making them popular for large-scale web applications and real-time data processing. They often prioritize performance and availability over strict consistency (sometimes following the BASE model – Basically Available, Soft state, Eventually consistent – as an alternative to ACID). The query languages for NoSQL databases vary depending on the specific database type (e.g., MongoDB uses a document-based query language, while graph databases have their own graph traversal languages).
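
The difference between a predefined schema and a dynamic one can be sketched as follows. The relational half uses SQLite through Python's sqlite3 module. The document half uses plain JSON strings as a stand-in for a document store, not the API of any particular NoSQL product. The posts table and its fields are hypothetical.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE posts (post_id INTEGER PRIMARY KEY, body TEXT NOT NULL)")

# Predefined schema: writing to a column that was never declared is an error.
try:
    conn.execute("INSERT INTO posts (body, tags) VALUES (?, ?)", ("hello", "intro"))
    unknown_column_rejected = False
except sqlite3.OperationalError:
    unknown_column_rejected = True

# The schema has to be changed before the new field can be stored.
conn.execute("ALTER TABLE posts ADD COLUMN tags TEXT")
conn.execute("INSERT INTO posts (body, tags) VALUES (?, ?)", ("hello", "intro"))
stored_rows = conn.execute("SELECT body, tags FROM posts").fetchall()

# Dynamic schema: each document carries its own structure, so fields can vary freely.
documents = [
    json.dumps({"body": "hello"}),
    json.dumps({"body": "hi", "tags": ["intro"], "likes": 3}),
]
fields_per_document = [sorted(json.loads(doc)) for doc in documents]

print(unknown_column_rejected, stored_rows, fields_per_document)
conn.close()
```

The flexibility on the document side comes with a trade-off: the application, not the database, becomes responsible for coping with records that lack a field.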

Choosing between SQL and NoSQL depends on factors like data structure, scalability needs, consistency requirements, and the types of queries anticipated. It's also increasingly common to see hybrid approaches where organizations use both SQL and NoSQL databases for different parts of their applications.

Indexing, Transactions, and Concurrency Control

Indexing is a technique used to speed up the retrieval of data from a database. An index is a data structure (often a B-tree or hash table) that stores the values of one or more columns in a form that is fast to search, along with pointers to the actual location of the full records. When you query data based on an indexed column, the database can use the index to quickly locate the relevant records without having to scan the entire table. This is analogous to using the index in the back of a book to find information on a specific topic quickly. While indexes improve query performance, they also consume storage space and can slow down data modification operations (inserts, updates, deletes) because the indexes themselves also need to be updated. Therefore, careful consideration is needed when deciding which columns to index.
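
One way to see an index at work is to ask the database how it plans to run a query before and after the index exists. The sketch below assumes SQLite, whose EXPLAIN QUERY PLAN statement reports this. The customers table, the generated rows, and the index name idx_customers_city are hypothetical, and the exact wording of the plan text varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, customer_name TEXT, city TEXT)"
)
# Generated sample data: every tenth customer is in New York.
conn.executemany(
    "INSERT INTO customers (customer_name, city) VALUES (?, ?)",
    [(f"customer-{i}", "New York" if i % 10 == 0 else "Boston") for i in range(1000)],
)

query = "SELECT customer_name FROM customers WHERE city = 'New York'"


def plan(connection, sql):
    """Return SQLite's human-readable plan steps for a statement."""
    return [row[3] for row in connection.execute("EXPLAIN QUERY PLAN " + sql)]


plan_without_index = plan(conn, query)  # expected to report a full-table scan

conn.execute("CREATE INDEX idx_customers_city ON customers (city)")
plan_with_index = plan(conn, query)  # expected to report a search using the index

print(plan_without_index)
print(plan_with_index)
conn.close()
```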

Transactions, as touched upon with ACID properties, are sequences of one or more database operations that are executed as a single logical unit of work. The key idea is that all operations within a transaction must complete successfully for the transaction to be considered successful (committed). If any operation fails, the entire transaction is rolled back, and the database remains unchanged. Transactions are crucial for maintaining data integrity, especially in multi-step processes where partial completion could lead to inconsistent or incorrect data. For example, transferring money from one bank account to another involves two operations: debiting one account and crediting another. Both must succeed; if one fails, the entire transfer should be aborted.
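
The bank transfer described above can be sketched with SQLite and Python's sqlite3 module. The accounts table, the CHECK constraint that forbids negative balances, and the transfer helper are assumptions made for the example. The credit is deliberately applied before the debit so that a failed debit shows the earlier credit being undone.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical schema: the CHECK constraint makes an overdraft fail.
conn.execute(
    "CREATE TABLE accounts ("
    "name TEXT PRIMARY KEY, "
    "balance_cents INTEGER NOT NULL CHECK (balance_cents >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 10_000), ("bob", 2_000)])
conn.commit()


def transfer(connection, source, target, amount_cents):
    """Move money between accounts; return True if committed, False if rolled back."""
    try:
        # `with connection` commits on success and rolls back if an exception escapes.
        with connection:
            connection.execute(
                "UPDATE accounts SET balance_cents = balance_cents + ? WHERE name = ?",
                (amount_cents, target),
            )
            connection.execute(
                "UPDATE accounts SET balance_cents = balance_cents - ? WHERE name = ?",
                (amount_cents, source),
            )
        return True
    except sqlite3.IntegrityError:
        return False


def balances(connection):
    return dict(connection.execute("SELECT name, balance_cents FROM accounts"))


first_ok = transfer(conn, "alice", "bob", 3_000)
after_first = balances(conn)

second_ok = transfer(conn, "alice", "bob", 50_000)  # alice cannot cover this amount
after_second = balances(conn)  # unchanged: the credit to bob was rolled back

print(first_ok, after_first)
print(second_ok, after_second)
conn.close()
```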

Concurrency control refers to the mechanisms used by a DBMS to manage simultaneous access to the database by multiple users or processes. Without proper concurrency control, concurrent operations could interfere with each other, leading to data inconsistencies or incorrect results. Common concurrency control techniques include locking (where a transaction acquires a lock on a data item, preventing other transactions from modifying it until the lock is released) and timestamping (where each transaction is assigned a unique timestamp, and operations are ordered based on these timestamps). The goal of concurrency control is to ensure that concurrent transactions are executed in a way that maintains data integrity and isolation, while also maximizing throughput and minimizing delays.
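
Locking can be observed directly in SQLite, which allows only one writer at a time. In this sketch two connections share a database file. The counters table is hypothetical, and timeout=0 is chosen so that the second connection fails immediately instead of waiting for the lock. Other systems lock at finer granularity, such as individual rows, so this is an illustration of the idea rather than of every DBMS.

```python
import os
import sqlite3
import tempfile

with tempfile.TemporaryDirectory() as tmp_dir:
    db_path = os.path.join(tmp_dir, "locking_demo.db")

    # isolation_level=None: transactions are started and ended explicitly.
    # timeout=0: raise an error at once instead of waiting for a lock.
    first = sqlite3.connect(db_path, isolation_level=None, timeout=0)
    second = sqlite3.connect(db_path, isolation_level=None, timeout=0)

    first.execute("CREATE TABLE counters (name TEXT PRIMARY KEY, value INTEGER)")
    first.execute("INSERT INTO counters VALUES ('visits', 0)")

    # BEGIN IMMEDIATE takes the write lock as soon as the transaction starts.
    first.execute("BEGIN IMMEDIATE")
    first.execute("UPDATE counters SET value = value + 1 WHERE name = 'visits'")

    # A second writer cannot start while the first still holds the lock.
    try:
        second.execute("BEGIN IMMEDIATE")
        second_blocked = False
    except sqlite3.OperationalError:
        second_blocked = True

    first.execute("COMMIT")  # releases the lock

    # Now the second writer can proceed and sees the first writer's update.
    second.execute("BEGIN IMMEDIATE")
    second.execute("UPDATE counters SET value = value + 1 WHERE name = 'visits'")
    second.execute("COMMIT")
    final_value = second.execute(
        "SELECT value FROM counters WHERE name = 'visits'"
    ).fetchall()[0][0]

    first.close()
    second.close()

print(second_blocked, final_value)
```

In practice an application would usually set a non-zero timeout so that a blocked writer waits briefly and retries rather than failing at once.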

Getting Started in Database Management

Embarking on a journey into database management can be an exciting prospect. This section is designed for those at the very beginning of this path, whether you are a student exploring career options or someone considering a career change. We will outline some basic prerequisites, suggest introductory projects for hands-on experience, and point towards common tools and opportunities to get your foot in the door.

Basic Technical Prerequisites (e.g., Logic, Data Structures)

While you don't need to be a seasoned programmer to start learning about databases, a few foundational technical concepts can be very helpful. A good grasp of basic logic is essential. Databases operate on logical principles, and understanding concepts like AND, OR, NOT, and conditional statements will make learning query languages like SQL much easier. Problem-solving skills, which are closely related to logical thinking, are also highly valuable.

Familiarity with fundamental data structures can also provide a significant advantage. Understanding how data can be organized (e.g., arrays, lists, trees, hash tables) will help you appreciate how databases store and retrieve information efficiently. While you won't necessarily be implementing these structures from scratch when you start, knowing the concepts will aid in understanding database design principles and performance considerations like indexing.

Lastly, while not strictly a prerequisite for understanding database concepts, some basic computer literacy, including familiarity with operating systems and file management, will be beneficial. As you progress, you might also find that a foundational understanding of networking concepts becomes useful, especially when dealing with distributed databases or remote access.

Introductory Projects for Hands-on Learning

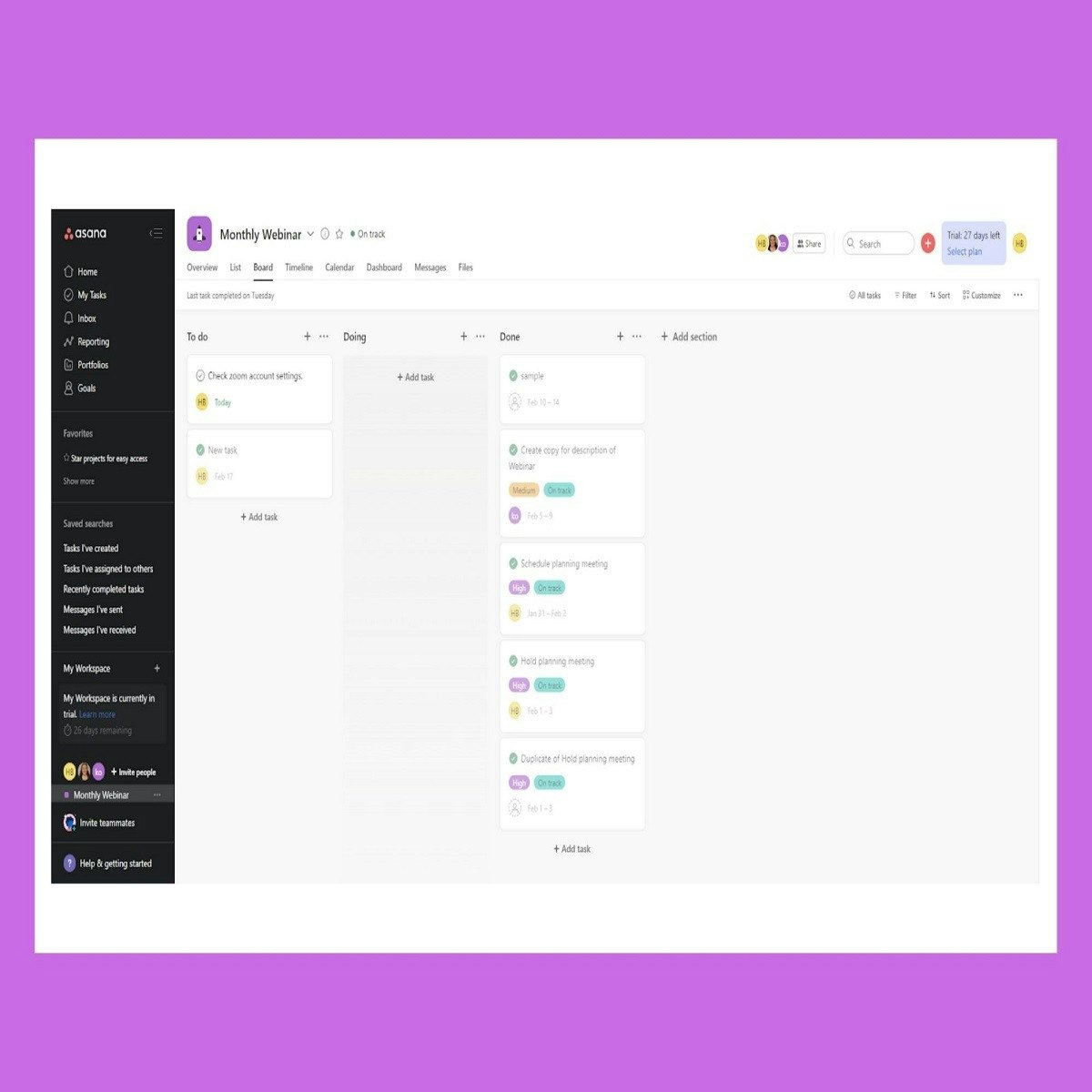

One of the best ways to learn database management is by doing. Starting with small, manageable projects can solidify your understanding and build practical skills. For instance, you could design a database for a personal library. This would involve identifying the necessary information (book title, author, ISBN, publication year, genre), creating tables, defining relationships (e.g., an author can write multiple books), and then populating it with data. You could then practice writing SQL queries to find books by a specific author, genre, or publication year.
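
As a starting point, the personal library project might look like the sketch below, which uses SQLite through Python's sqlite3 module. The table layout is one possible design rather than the only reasonable one, and the authors, titles, and ISBN strings are placeholders, not real catalog data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")

# One author can write many books, so books carry a foreign key to authors.
conn.executescript(
    """
    CREATE TABLE authors (
        author_id INTEGER PRIMARY KEY,
        name      TEXT NOT NULL
    );
    CREATE TABLE books (
        isbn             TEXT PRIMARY KEY,
        title            TEXT NOT NULL,
        publication_year INTEGER,
        genre            TEXT,
        author_id        INTEGER NOT NULL REFERENCES authors (author_id)
    );
    """
)

# Placeholder data for illustration only.
conn.executemany(
    "INSERT INTO authors (author_id, name) VALUES (?, ?)",
    [(1, "Author One"), (2, "Author Two")],
)
conn.executemany(
    "INSERT INTO books (isbn, title, publication_year, genre, author_id) VALUES (?, ?, ?, ?, ?)",
    [
        ("isbn-0001", "First Sample Title", 1995, "Fantasy", 1),
        ("isbn-0002", "Second Sample Title", 2010, "Fantasy", 1),
        ("isbn-0003", "Third Sample Title", 2018, "History", 2),
    ],
)

# Find every book by a given author.
titles_by_author_one = [
    title
    for (title,) in conn.execute(
        "SELECT b.title FROM books AS b "
        "JOIN authors AS a ON a.author_id = b.author_id "
        "WHERE a.name = ? ORDER BY b.publication_year",
        ("Author One",),
    )
]

# Find books in a genre published from a given year onward.
recent_fantasy = [
    title
    for (title,) in conn.execute(
        "SELECT title FROM books WHERE genre = ? AND publication_year >= ? ORDER BY title",
        ("Fantasy", 2000),
    )
]

print(titles_by_author_one, recent_fantasy)
conn.close()
```

A natural next step is a many-to-many design with a linking table, since real books can have several authors.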

Another simple project could be creating a database to track your personal contacts. This might include tables for contacts, addresses, and phone numbers, with relationships between them. You could then try to query for contacts in a specific city or those with a particular job title. As you gain confidence, you could expand these projects, perhaps adding a table for borrowed books in your library database or tracking communication history in your contacts database.

Many online tutorials and introductory courses include guided projects. For example, setting up a simple blog database with tables for posts, users, and comments is a common and instructive exercise. The key is to start simple, focus on understanding the core concepts of data modeling and querying, and gradually increase complexity.

Common Tools and Software for Beginners

To get started with hands-on database work, you'll need some tools. For relational databases, many excellent free and open-source options are available. MySQL is a very popular choice, known for its ease of use and wide community support. PostgreSQL is another powerful open-source RDBMS, often favored for its advanced features and extensibility. SQLite is a lightweight, file-based database that's incredibly easy to set up and use, making it great for learning and small projects as it doesn't require a separate server process.

Most of these databases come with command-line interfaces for executing SQL queries. However, graphical user interface (GUI) tools can make interacting with databases much more intuitive for beginners. Popular GUI tools include MySQL Workbench (for MySQL), pgAdmin (for PostgreSQL), DBeaver (a universal database tool that supports many different databases), and Microsoft SQL Server Management Studio (SSMS) if you're working with Microsoft SQL Server (which also has a free Express edition for learning).

For those interested in NoSQL, MongoDB offers a Community Server that is free to use, and its GUI tool, MongoDB Compass, helps visualize and manage data. Airtable is a cloud-based platform that combines the simplicity of a spreadsheet with the power of a database, offering a very gentle introduction to database concepts without requiring SQL knowledge initially.

Many introductory online courses provide guidance on installing and using these tools.

Volunteer/Internship Opportunities

Gaining real-world experience, even in a limited capacity, can be invaluable when starting out. Volunteer opportunities with non-profit organizations can sometimes provide a chance to work with their data. Many smaller non-profits may need help organizing donor lists, volunteer records, or program data, and they might be open to someone willing to learn and assist with basic database tasks.

Internships are another excellent route, particularly for students or recent graduates. Many companies, especially larger ones, offer internships in IT or data-related roles that may involve exposure to database management. These can provide structured learning, mentorship, and a glimpse into how databases are used in a professional setting. Look for internships that mention data analysis, database administration, or software development with a database component.

Even contributing to open-source database projects or related tools can be a way to gain experience and build a portfolio. While this might be more challenging for absolute beginners, it's an avenue to consider as your skills develop. Networking through online communities, forums, and local tech meetups can also uncover informal learning opportunities or mentorship that might lead to practical experience.

Formal Education Pathways

For those seeking a structured and comprehensive approach to mastering database management, formal education offers a well-defined path. This section explores relevant academic degrees, core coursework, research avenues, and industry-recognized certifications that can pave the way for a specialized career in this field. This is particularly relevant for university students and those considering advanced research.

Relevant Undergraduate/Graduate Degrees (e.g., CS, Information Systems)

A bachelor's degree is the typical entry point into the field of database management. Degrees in Computer Science (CS) are highly relevant, as they provide a strong foundation in programming, algorithms, data structures, and software engineering principles, all of which are applicable to database work. CS programs often include specific courses on database systems.

Another common pathway is a degree in Information Systems (IS) or Management Information Systems (MIS). These programs tend to bridge the gap between business and technology, focusing on how information technology can be used to solve business problems. IS curricula often have a strong emphasis on database design, management, and application. Related degrees in Information Technology (IT), Software Engineering, or Data Science can also provide a solid educational background.

For those aspiring to more advanced roles, such as database architect or research positions, a master's degree or even a Ph.D. in a related field can be beneficial. Graduate programs allow for deeper specialization in areas like database theory, distributed databases, data mining, or big data technologies. According to Zippia, 61 percent of database administrators have a bachelor's degree, while 13 percent hold a master's degree.

Core Coursework in Database Design and Optimization

Regardless of the specific degree program, certain core coursework is fundamental for aspiring database professionals. A foundational course in Database Design is essential. This typically covers topics like the relational model, entity-relationship (ER) modeling, normalization (1NF, 2NF, 3NF, BCNF), and SQL for data definition (DDL) and data manipulation (DML). Students learn how to translate real-world requirements into well-structured database schemas.

Beyond basic design, courses in Database Implementation and Management delve into the practical aspects of using a DBMS, including creating databases, managing users and permissions, and performing backup and recovery operations. Advanced topics might include transaction management, concurrency control, and database security.

Database Optimization or Performance Tuning is another critical area. This coursework focuses on techniques to make databases run faster and more efficiently. Topics often include query optimization (understanding how databases process queries and how to write efficient SQL), indexing strategies, understanding execution plans, and physical database design considerations. For those interested in handling very large datasets, courses on Big Data Technologies or NoSQL Databases are becoming increasingly important, covering systems like Hadoop, Spark, and various NoSQL database models.

Research Opportunities in Emerging Areas (e.g., Distributed Databases)

For those pursuing graduate studies, particularly at the Ph.D. level, the field of database management offers rich opportunities for research in emerging areas. Distributed databases remain a significant area of research, focusing on challenges related to managing data across multiple interconnected computers. This includes topics like distributed query processing, distributed transaction management, data replication, and consistency models in distributed environments.

The rise of Big Data continues to fuel research into scalable data processing frameworks, novel NoSQL database architectures, and techniques for analyzing massive datasets. Related to this is research in cloud databases, exploring issues like multi-tenancy, elasticity, data security in the cloud, and serverless database architectures. Data stream processing, which involves analyzing data in real-time as it arrives, is another active research area, driven by applications like IoT, financial trading, and real-time analytics.

Other emerging research frontiers include graph databases and their application to complex network analysis, database systems optimized for machine learning workloads (e.g., supporting in-database machine learning), blockchain databases for secure and transparent data management, and the implications of new hardware (like persistent memory or quantum computing) on database design and performance. Academic research often involves theoretical work, system building, and experimental evaluation.

Industry-Recognized Certifications (e.g., Oracle, Microsoft)

In addition to formal degrees, industry-recognized certifications can significantly enhance a database professional's credentials and demonstrate proficiency with specific database technologies. Many database vendors offer certification programs for their products. For example, Oracle offers a range of certifications for its database products, such as Oracle Certified Associate (OCA), Oracle Certified Professional (OCP), and Oracle Certified Master (OCM). These certifications validate skills in Oracle database administration, SQL, and development.

Microsoft also provides a suite of certifications related to its SQL Server database platform and Azure data services. Certifications like Microsoft Certified: Azure Database Administrator Associate or those focusing on specific SQL Server versions can demonstrate expertise in Microsoft's data technologies. Other vendors like IBM (for Db2), SAP (for HANA), and cloud providers like Amazon Web Services (AWS Certified Database - Specialty) and Google Cloud (Professional Data Engineer) also offer valuable certifications.

While certifications are not a substitute for hands-on experience and a solid understanding of fundamental concepts, they can be a valuable asset in the job market, especially for entry-level positions or when looking to specialize in a particular vendor's ecosystem. They show a commitment to learning and a validated level of skill with specific tools. Many companies list specific certifications as desirable or even required for certain database roles.

Self-Directed Learning Strategies

For individuals who prefer a more flexible approach, are looking to switch careers, or wish to supplement their existing knowledge, self-directed learning offers a powerful pathway into database management. This section outlines various strategies for learning independently, emphasizing practical application and community engagement. The key to successful self-learning is structure, discipline, and a focus on building a tangible portfolio of skills.

Structured vs. Modular Learning Approaches

When embarking on self-directed learning, you can generally choose between a structured or a modular approach. A structured approach involves following a predefined curriculum, often provided by comprehensive online courses, specialization programs, or even textbook sequences. This path offers a clear roadmap, ensuring that foundational concepts are covered before moving on to more advanced topics. It can be particularly beneficial for beginners who appreciate guidance and a logical progression of material.

A modular approach, on the other hand, allows for more flexibility. You might pick and choose specific topics or skills you want to learn based on your interests or immediate needs. For example, if you're already familiar with basic SQL, you might jump directly into learning about a specific NoSQL database or advanced query optimization techniques. This approach can be effective for those who have some existing knowledge or very specific learning goals. However, it requires more self-discipline to ensure that you build a well-rounded skill set and don't inadvertently skip crucial foundational concepts.

Many learners find a hybrid approach to be effective, perhaps starting with a structured foundational course and then transitioning to a more modular approach to delve into specialized areas. OpenCourser offers a vast library of courses that can support both structured learning paths and modular exploration, allowing you to create personalized learning lists.

Portfolio-Building Through Personal/Open-Source Projects

Regardless of your learning approach, building a portfolio of projects is crucial for self-directed learners. Theoretical knowledge is important, but employers want to see that you can apply that knowledge to solve real problems. Personal projects, as discussed earlier (like a library database or a contact manager), are excellent starting points. Document your design process, the schema you created, and some of the interesting queries you wrote. As your skills grow, you can tackle more complex projects, perhaps involving data from public APIs or building a simple web application with a database backend.

Contributing to open-source projects is another fantastic way to gain experience and build your portfolio. Many database systems, tools, and related applications are open source. You could start by fixing small bugs, improving documentation, or adding minor features. This not only hones your technical skills but also demonstrates your ability to collaborate and work within existing codebases. Platforms like GitHub host countless open-source projects where you can find opportunities to contribute.

Your portfolio, whether it's a collection of personal projects showcased on GitHub or contributions to open-source initiatives, serves as tangible proof of your skills and initiative, which can be particularly compelling for career changers or those without formal degrees in the field.

Mentorship and Community Engagement

Self-directed learning doesn't mean learning in isolation. Engaging with communities and seeking mentorship can significantly accelerate your progress and provide valuable support. Online forums like Stack Overflow, Reddit communities (e.g., r/Database, r/SQL), and specialized Discord servers or Slack channels are great places to ask questions, learn from others, and stay updated on new developments.

Finding a mentor – someone experienced in the field who is willing to offer guidance and advice – can be incredibly beneficial. A mentor can help you navigate learning resources, provide feedback on your projects, offer career advice, and help you avoid common pitfalls. Mentors can be found through professional networking, online communities, or formal mentorship programs. Don't be afraid to reach out to people whose work you admire, but always be respectful of their time.

Attending local tech meetups (if available) or virtual conferences and webinars can also be valuable for learning and networking. These events often feature talks on various database topics and provide opportunities to connect with other professionals and learners. The OpenCourser Notes blog also provides insights and tips for online learners.

Balancing Theory with Practical Implementation

A common challenge in self-directed learning is finding the right balance between understanding the theoretical underpinnings of database management and gaining practical, hands-on skills. It's tempting to jump straight into coding and building things, but without a grasp of concepts like normalization, ACID properties, or indexing, you might build inefficient or unreliable systems.

Conversely, spending too much time solely on theory without applying it can make it difficult to internalize the concepts and develop real-world problem-solving abilities. The most effective approach usually involves an iterative cycle of learning a concept, then immediately applying it through exercises or small projects. For example, after learning about database normalization, try to normalize a sample dataset. After learning about SQL JOINs, write several queries that use different types of joins on a practice database.
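As a minimal sketch of that learn-then-apply cycle, the snippet below uses Python's standard-library `sqlite3` module to normalize a small flat dataset into two tables and then query it with two kinds of joins. The table names, column names, and sample rows are hypothetical and chosen only for illustration.

```python
import sqlite3

# Hypothetical denormalized rows: customer details repeat on every order.
flat_orders = [
    (1, "Ada", "ada@example.com", "Keyboard"),
    (2, "Ada", "ada@example.com", "Monitor"),
    (3, "Grace", "grace@example.com", "Mouse"),
]

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (
        id    INTEGER PRIMARY KEY,
        name  TEXT NOT NULL,
        email TEXT NOT NULL UNIQUE
    );
    CREATE TABLE orders (
        id          INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        item        TEXT NOT NULL
    );
""")

# Normalize: store each customer once, then point each order at that row.
for order_id, name, email, item in flat_orders:
    conn.execute(
        "INSERT OR IGNORE INTO customers (name, email) VALUES (?, ?)",
        (name, email),
    )
    (customer_id,) = conn.execute(
        "SELECT id FROM customers WHERE email = ?", (email,)
    ).fetchone()
    conn.execute(
        "INSERT INTO orders (id, customer_id, item) VALUES (?, ?, ?)",
        (order_id, customer_id, item),
    )

# An INNER JOIN reassembles the original view from the two tables.
joined = conn.execute("""
    SELECT o.id, c.name, o.item
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    ORDER BY o.id
""").fetchall()

# A LEFT JOIN would also keep customers with no orders (none in this sample).
order_counts = conn.execute("""
    SELECT c.name, COUNT(o.id)
    FROM customers AS c
    LEFT JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.id
    ORDER BY c.name
""").fetchall()

print(joined)
print(order_counts)
```

After normalization each customer's email lives in exactly one row, so a change of address is a single update rather than one per order.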

Aim to understand the "why" behind database principles, not just the "how" of using a particular command or tool. This deeper understanding will make you a more effective database professional and allow you to adapt to new technologies and challenges more easily. Resources like the OpenCourser Learner's Guide can offer strategies for effective self-study and balancing theory with practice.

Career Progression and Roles

The field of database management offers a variety of roles and a clear progression path for those with the right skills and experience. From entry-level positions focused on day-to-day operations to senior roles involving strategic design and leadership, there are numerous opportunities. This section will explore common career trajectories, from initial entry into the field to advanced leadership positions, along with an overview of salary expectations and demand.

Entry-Level Roles (e.g., Database Administrator, Data Analyst)

For individuals starting their careers in database management, several entry-level roles provide a gateway into the field. A common starting point is the role of a Junior Database Administrator (DBA). In this capacity, responsibilities often include assisting senior DBAs with routine tasks such as database monitoring, performing backups and restores, managing user access, and troubleshooting basic performance issues. This role provides invaluable hands-on experience with database software and operational procedures.

Another frequent entry point is the role of a Data Analyst. While not exclusively a database management role, data analysts rely heavily on databases to extract, clean, and analyze data to provide insights for business decision-making. Entry-level data analysts often write SQL queries, generate reports, and work closely with database systems. This role can be an excellent stepping stone towards more specialized database roles, as it builds strong SQL skills and an understanding of data utilization.

Other related entry-level positions might include IT support roles with database responsibilities or junior developer positions that involve database interaction. The key at this stage is to gain practical experience with database technologies, develop strong SQL skills, and understand the fundamentals of database design and maintenance.

Mid-Career Specialization Paths (e.g., Database Architect)

As professionals gain experience in database management, opportunities for specialization arise. One prominent mid-career path is that of a Data Architect. Data architects are responsible for designing and building the overall database structure for an organization. This involves understanding business requirements, translating them into logical and physical database designs, selecting appropriate database technologies, and establishing data standards and governance policies. This role requires deep technical knowledge, strong analytical skills, and the ability to see the bigger picture of how data supports business objectives.

Other specialization paths include:

- Database Performance Tuning Specialist: Focuses on optimizing database performance, analyzing query execution plans, designing efficient indexing strategies, and ensuring databases can handle high loads.

- Database Security Specialist: Specializes in protecting databases from unauthorized access, data breaches, and other security threats. This involves implementing security policies, managing user permissions, conducting security audits, and staying updated on security best practices.

- Data Warehouse Engineer/Architect: Designs and manages data warehouses, which are large, centralized repositories of data used for business intelligence and reporting. This often involves ETL (Extract, Transform, Load) processes and working with BI tools.

- Cloud Database Administrator/Architect: Specializes in managing and designing database solutions on cloud platforms like AWS, Azure, or Google Cloud. This involves understanding cloud-specific database services, migration strategies, and cost optimization.

These roles typically require several years of experience as a DBA or in a related data-focused position, along with a proven track record of expertise in specific areas.
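To give a flavor of the performance-tuning work described above, here is a small sketch using Python's built-in `sqlite3` module. It inspects a query's execution plan before and after adding an index. The `events` table, its columns, and the index name are hypothetical, and the exact plan wording varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER, kind TEXT)"
)
conn.executemany(
    "INSERT INTO events (user_id, kind) VALUES (?, ?)",
    [(i % 100, "click") for i in range(10_000)],
)

QUERY = "SELECT COUNT(*) FROM events WHERE user_id = 42"


def plan(sql):
    """Return the human-readable detail column of SQLite's query plan."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[3] for row in rows)


# Without an index, SQLite has to scan every row of the table.
plan_before = plan(QUERY)

# With an index on the filtered column, it can search the index instead.
conn.execute("CREATE INDEX idx_events_user ON events (user_id)")
plan_after = plan(QUERY)

print("before:", plan_before)
print("after: ", plan_after)
```

Other database systems expose the same idea through their own `EXPLAIN` variants, so the habit of reading the plan before and after a change carries over.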

Leadership Opportunities (e.g., CTO, Data Governance Roles)

With significant experience and a strong strategic vision, database professionals can advance into leadership roles. Positions such as Database Manager or IT Manager with a focus on data services involve overseeing teams of DBAs and data architects, managing database projects, and aligning database strategy with overall IT and business goals. These roles require not only technical expertise but also strong leadership, communication, and project management skills.

At higher executive levels, individuals with a deep understanding of data's strategic importance can move into roles like Chief Technology Officer (CTO) or Chief Data Officer (CDO). While a CTO has a broader technology focus, a strong background in data management is invaluable. A CDO is specifically responsible for an organization's enterprise-wide data and information strategy, governance, control, policy development, and effective exploitation. Data governance roles are also becoming increasingly critical, focusing on ensuring data quality, security, privacy, and compliance across the organization.

These leadership positions often require a blend of deep technical understanding, business acumen, strategic thinking, and the ability to lead and inspire teams. An advanced degree, such as an MBA or a master's in a relevant technical field, can sometimes be beneficial for these roles.

Consider exploring broader topics in IT management and data strategy if leadership is your goal.

Salary Benchmarks and Geographic Demand Variations

Salaries in database management can be quite competitive and vary based on factors such as role, experience level, skills, industry, and geographic location. According to the U.S. Bureau of Labor Statistics (BLS), the median annual wage for database administrators was $101,510 in May 2023. The BLS also projects employment for database administrators and architects to grow 9 percent from 2023 to 2033, which is much faster than the average for all occupations, indicating strong demand. This growth is expected to result in about 9,500 openings each year over the decade.

Staffing firm Robert Half's 2025 salary guide indicates that a Database Administrator can expect a salary range from $89,500 (25th percentile, for candidates new to the role) to $135,000 (75th percentile, for highly experienced individuals). For a Database Manager, the range is $126,000 to $175,000. ZipRecruiter, as of April 2025, reports an average hourly pay of $48.02 for a Database Administrator in Charlotte, NC, with the majority of salaries ranging between $37.55 and $57.74 per hour. Different sources and locations will show variations. For instance, data from 2021 indicated that some of the best-paying states for DBAs included New Jersey, Massachusetts, and Washington.
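Because some sources quote hourly pay and others annual salaries, a quick conversion helps when comparing them. The sketch below assumes a full-time schedule of 40 hours a week for 52 weeks (2,080 paid hours). That figure is an assumption for illustration, not something the sources above state.

```python
HOURS_PER_YEAR = 40 * 52  # assumption: full-time, 2,080 paid hours per year


def annualize(hourly_rate: float) -> float:
    """Convert an hourly rate to an approximate annual salary."""
    return round(hourly_rate * HOURS_PER_YEAR, 2)


# Hourly figures quoted above for Charlotte, NC.
for label, rate in [("low", 37.55), ("average", 48.02), ("high", 57.74)]:
    print(f"{label:>7}: ${annualize(rate):,.2f}")
```

Under this assumption the average works out to roughly $99,900 a year, which makes the hourly figure easier to set against the annual ranges quoted above.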

Geographic demand also plays a significant role. Metropolitan areas with strong tech sectors or large corporate presences typically have more opportunities and potentially higher salaries, though the cost of living in these areas also tends to be higher. The increasing prevalence of remote work may also influence salary benchmarks and demand distribution over time. Researching salary data specific to your region and desired role using resources like the BLS Occupational Outlook Handbook and reputable salary websites can provide more tailored insights.

Technical and Organizational Challenges

While database management is a field rich with opportunity, it is not without its hurdles. Professionals in this domain regularly grapple with a range of technical and organizational challenges. Addressing these effectively is key to ensuring data integrity, availability, and security, and to leveraging data as a strategic asset. This section will highlight some of the common obstacles faced in real-world database implementations.

Scalability Limitations in Legacy Systems

Many organizations rely on legacy database systems that were designed and implemented years, or even decades, ago. While these systems may have served their purpose well in the past, they often struggle to cope with the ever-increasing volume, velocity, and variety of data generated today. Scalability – the ability of a system to handle a growing amount of work – becomes a significant challenge. Legacy systems might have architectural limitations that make it difficult or expensive to scale up (adding more resources to an existing server) or scale out (distributing the load across multiple servers).

Attempting to push these systems beyond their designed capacity can lead to performance degradation, increased response times, and even system outages. Migrating from a legacy system to a modern, more scalable database platform can be a complex, costly, and time-consuming undertaking, fraught with risks of data loss or business disruption during the transition. However, failing to address scalability limitations can hinder an organization's ability to innovate, respond to market changes, and effectively utilize its data.

Understanding modern database architectures is key to overcoming these challenges.

Data Security and Breach Prevention

Data security is a paramount concern in database management. Databases often store sensitive and valuable information, including customer data, financial records, intellectual property, and personal health information. Protecting this data from unauthorized access, use, disclosure, alteration, or destruction is a critical responsibility. The threats are numerous, ranging from external attacks by hackers and cybercriminals to internal threats from malicious insiders or accidental data exposure due to human error.

Implementing robust security measures is essential. This includes strong authentication and authorization mechanisms to control who can access the database and what they can do, encryption of data both at rest (while stored in the database) and in transit (while being transmitted over a network), regular security audits and vulnerability assessments, and intrusion detection and prevention systems. Staying ahead of evolving security threats requires continuous vigilance, updating security patches promptly, and adhering to industry best practices and regulatory requirements like GDPR or HIPAA. A data breach can have severe consequences, including financial losses, reputational damage, legal liabilities, and loss of customer trust.

Courses focusing on IT security and specific database security features are highly relevant.

This topic is also central to the broader field of Information Security.

Cross-Departmental Collaboration Hurdles

Effective database management often requires collaboration across different departments within an organization. For example, database administrators may need to work with software developers to design database schemas for new applications, with business analysts to understand data requirements for reporting, with network administrators to ensure database connectivity and security, and with business users to provide support and training. However, achieving smooth cross-departmental collaboration can be challenging.

Different departments may have conflicting priorities, different levels of technical understanding, or "siloed" ways of working. Communication breakdowns can lead to misunderstandings, delays, and suboptimal database solutions. For instance, if business requirements are not clearly communicated to the database team, the resulting database design may not effectively support business processes. Similarly, if developers are not aware of database performance best practices, they may write inefficient queries that strain the database.

Overcoming these hurdles requires establishing clear communication channels, fostering a culture of collaboration, and ensuring that all stakeholders have a shared understanding of data-related goals and responsibilities. Data governance initiatives can also play a role in defining roles, responsibilities, and processes for managing data across the organization.

Migration Challenges to Cloud-Native Systems

Many organizations are migrating their database workloads to cloud-native systems to take advantage of benefits like scalability, flexibility, cost-efficiency, and managed services. However, migrating databases to the cloud, especially from on-premises legacy systems, presents significant challenges. One major hurdle is choosing the right cloud database service from the myriad options available (e.g., IaaS-hosted databases, PaaS database services like Amazon RDS or Azure SQL Database, or cloud-native NoSQL databases like DynamoDB or Cosmos DB). The choice depends on factors like performance requirements, scalability needs, consistency guarantees, cost, and existing application compatibility.

The migration process itself can be complex and risky. It involves planning the migration strategy (e.g., lift-and-shift, re-platforming, or re-architecting), ensuring data integrity during transfer, minimizing downtime, and thoroughly testing the new cloud-based system. Data security and compliance in the cloud also require careful consideration, as data is being entrusted to a third-party provider. Furthermore, integrating the new cloud database with existing on-premises systems or other cloud services can introduce additional complexity. Successfully navigating these challenges requires careful planning, skilled personnel, and often, a phased approach to migration.
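One common way to check data integrity after a transfer is to compare row counts and a checksum of each table on both sides. The sketch below illustrates the idea with two in-memory SQLite databases standing in for the source and the migrated target. The `accounts` table, the helper names, and the sample rows are hypothetical, and a real migration tool would need to handle type differences between systems.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table):
    """Return (row_count, SHA-256 hex digest) over a table's rows in key order."""
    digest = hashlib.sha256()
    count = 0
    # The table name is interpolated directly, so only pass trusted names.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY 1"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def make_db(rows):
    """Build a throwaway database holding the given account rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE accounts (id INTEGER PRIMARY KEY, owner TEXT, balance INTEGER)"
    )
    conn.executemany("INSERT INTO accounts VALUES (?, ?, ?)", rows)
    return conn


rows = [(1, "Ada", 1200), (2, "Grace", 560), (3, "Edsger", 75)]
source = make_db(rows)
target = make_db(rows)                              # stands in for the migrated copy
damaged = make_db(rows[:2] + [(3, "Edsger", 57)])   # simulated transfer error

clean_copy_matches = (
    table_fingerprint(source, "accounts") == table_fingerprint(target, "accounts")
)
damaged_copy_matches = (
    table_fingerprint(source, "accounts") == table_fingerprint(damaged, "accounts")
)
print(clean_copy_matches, damaged_copy_matches)
```

A row count alone would not catch the damaged copy here, since both tables hold three rows. The checksum is what exposes the altered balance.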

Courses on cloud databases and migration strategies are essential for tackling these modern challenges.

Ethical Considerations in Database Management

The power to collect, store, and analyze vast amounts of data comes with significant ethical responsibilities. Database professionals are often stewards of sensitive information, and their actions can have profound societal impacts. This section explores some of the key ethical considerations that anyone working in database management must be aware of and navigate thoughtfully.

Data Privacy Regulations (GDPR, CCPA)

In recent years, growing concerns about how personal data is collected, used, and shared have led to the enactment of stringent data privacy regulations around the world. Two prominent examples are the General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA) in the United States. These regulations grant individuals greater control over their personal data, including the right to access their data, the right to have it corrected, and the right to have it deleted (the "right to be forgotten").

For database professionals, these regulations have significant implications. Databases must be designed and managed in ways that support compliance. This includes implementing mechanisms for obtaining user consent, ensuring data accuracy, providing data portability, and securely deleting data when requested or required. It also means understanding what constitutes personal data, where it is stored, and how it is processed. Non-compliance can result in hefty fines and reputational damage. Therefore, a strong understanding of applicable data privacy laws and best practices for data protection is essential.
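As a rough sketch of what supporting access and deletion requests can look like at the schema level, the example below uses SQLite with a cascading foreign key so that erasing a user also removes dependent rows. The tables and the function names `export_user` and `erase_user` are hypothetical. A real compliance process would also have to cover backups, logs, replicas, and downstream systems.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript("""
    CREATE TABLE users (
        id    INTEGER PRIMARY KEY,
        email TEXT NOT NULL
    );
    CREATE TABLE addresses (
        id      INTEGER PRIMARY KEY,
        user_id INTEGER NOT NULL REFERENCES users(id) ON DELETE CASCADE,
        city    TEXT NOT NULL
    );
    INSERT INTO users VALUES (1, 'ada@example.com'), (2, 'grace@example.com');
    INSERT INTO addresses VALUES (1, 1, 'London'), (2, 2, 'New York');
""")


def export_user(user_id):
    """Gather everything stored about one user as JSON (access and portability)."""
    user = conn.execute(
        "SELECT id, email FROM users WHERE id = ?", (user_id,)
    ).fetchone()
    if user is None:
        return None
    cities = [
        city
        for (city,) in conn.execute(
            "SELECT city FROM addresses WHERE user_id = ? ORDER BY id", (user_id,)
        )
    ]
    return json.dumps({"id": user[0], "email": user[1], "cities": cities})


def erase_user(user_id):
    """Delete the user row; ON DELETE CASCADE removes dependent rows too."""
    with conn:  # commit on success, roll back on error
        deleted = conn.execute(
            "DELETE FROM users WHERE id = ?", (user_id,)
        ).rowcount
    return deleted == 1


exported = export_user(1)
erased = erase_user(1)
remaining = conn.execute(
    "SELECT COUNT(*) FROM addresses WHERE user_id = 1"
).fetchone()[0]
print(exported, erased, remaining)
```

Knowing where personal data lives, as the paragraph above stresses, is what makes a function like `export_user` possible to write at all.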

Bias in Training Datasets and Algorithmic Outputs

Databases often provide the raw material – the training datasets – for machine learning models and artificial intelligence algorithms. If these datasets contain biases (e.g., reflecting historical societal biases related to race, gender, or socioeconomic status), the algorithms trained on them can perpetuate and even amplify these biases in their outputs. This can lead to unfair or discriminatory outcomes in areas like loan applications, hiring decisions, criminal justice, and medical diagnoses.

Database professionals have a role to play in mitigating these risks. This can involve working to ensure that datasets are as representative and unbiased as possible, documenting the provenance and characteristics of data, and collaborating with data scientists to identify and address potential biases. It also involves being aware of how the data they manage might be used in algorithmic decision-making and considering the potential ethical implications. The pursuit of fairness and equity in AI starts with the data itself.

This topic connects closely with the broader fields of Data Science and Machine Learning.

Environmental Impact of Large-Scale Data Centers

The digital world runs on physical infrastructure, and large-scale data centers, which house the servers and storage systems for databases and cloud services, have a significant environmental footprint. These facilities consume vast amounts of electricity, primarily for powering servers and for cooling systems to prevent overheating. The source of this electricity is a key factor; if it comes from fossil fuels, data centers contribute to greenhouse gas emissions and climate change. Research indicates that data centers account for a notable percentage of global electricity consumption and carbon emissions, comparable to the aviation industry.

Water consumption is another environmental concern, as many data centers use water-based cooling systems. The production and disposal of electronic hardware (e-waste) also contribute to the environmental impact. Database professionals, while not directly managing data center infrastructure in most cases, should be aware of these impacts. Choosing energy-efficient database software, optimizing queries to reduce processing load, and supporting data lifecycle management practices (e.g., archiving or deleting unnecessary data) can contribute, albeit modestly, to reducing the overall environmental burden.

There is a growing movement towards "green IT" and sustainable data center practices, including the use of renewable energy sources and more efficient cooling technologies. A report by Frontier Group highlights that the number of U.S. data centers roughly doubled between 2021 and 2024, and this growth is projected to continue, increasing electricity demand. This report also notes concerns about increased reliance on fossil fuels and even proposals to revive nuclear plants to power data centers.

Policy makers are being urged to ensure data centers are powered by renewable energy, improve transparency on energy and water use, and consider the societal value of energy-intensive computing.

Whistleblowing and Corporate Accountability

Database professionals may sometimes find themselves in situations where they become aware of unethical or illegal practices within their organization related to data. This could involve a company misusing customer data, deliberately concealing a data breach, or violating data privacy regulations. In such circumstances, individuals may face a difficult ethical dilemma: whether to remain silent or to become a whistleblower.

Whistleblowing – reporting misconduct to internal authorities, regulatory bodies, or the public – can be a courageous act aimed at promoting corporate accountability and protecting the public interest. However, it can also carry significant personal and professional risks for the whistleblower. Many organizations have internal policies and channels for reporting concerns, and some legal frameworks offer protections for whistleblowers. Understanding these policies and legal rights, and seeking legal counsel if necessary, is crucial for anyone contemplating such action.

The ethical responsibility to protect data and ensure its proper use may sometimes conflict with loyalty to an employer. Navigating these situations requires a strong ethical compass, courage, and a clear understanding of professional responsibilities and potential consequences.

Future Trends in Database Management

The field of database management is continuously evolving, driven by technological advancements and changing business needs. Staying abreast of emerging trends is crucial for professionals who want to remain relevant and for organizations aiming to leverage data effectively. This section explores some of the key developments shaping the future of databases, with an eye toward their market implications and the need for skill adaptation.

Growth of Vector Databases for AI/ML Applications

One of the most significant recent trends is the rapid growth of vector databases. These specialized databases are designed to store, manage, and query data in the form of vector embeddings – numerical representations of data points (like text, images, or audio) in a multi-dimensional space. Vector databases are becoming increasingly critical for artificial intelligence (AI) and machine learning (ML) applications, particularly those involving similarity search and retrieval-augmented generation (RAG) for large language models (LLMs).

As AI/ML models become more sophisticated, the ability to efficiently search and retrieve similar items from vast datasets of embeddings is paramount. Vector databases use specialized indexing and search algorithms (like HNSW or IVFADC) to perform these similarity searches quickly. The market for vector databases has seen a surge in new entrants, with both native vector DBs and existing multi-model databases adding vector capabilities. Forrester notes that core functions are being augmented with enhanced security, optimized processing, and integration with embedding transformers and data streaming engines. This trend underscores a shift towards databases that can natively support the unique data types and query patterns of modern AI workloads.
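The core operation behind similarity search can be sketched in a few lines of plain Python. The example below performs an exact, brute-force search by cosine similarity over a handful of made-up three-dimensional embeddings. The vectors and labels are hypothetical. Indexes such as HNSW exist to approximate this result without scoring every stored vector.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical 3-dimensional embeddings; real ones have hundreds of dimensions.
embeddings = {
    "cat":    [0.9, 0.1, 0.0],
    "kitten": [0.8, 0.2, 0.1],
    "car":    [0.1, 0.9, 0.2],
    "truck":  [0.0, 0.8, 0.3],
}


def nearest(query, k=2):
    """Exact top-k search: score every stored vector, then sort by score."""
    scored = [
        (cosine_similarity(query, vector), key)
        for key, vector in embeddings.items()
    ]
    scored.sort(reverse=True)
    return [key for _, key in scored[:k]]


print(nearest([1.0, 0.0, 0.0]))
```

This scan costs time proportional to the number of stored vectors for every query, which is why dedicated vector indexes trade a little accuracy for much faster lookups at scale.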

This area is rapidly evolving, and skills in understanding vector embeddings, similarity search, and the specific features of different vector database platforms will be increasingly in demand.

Serverless Database Architectures

Serverless database architectures represent another significant trend, offering a way to use database services without managing the underlying infrastructure. In a serverless model, the cloud provider automatically provisions, scales, and manages the database resources based on demand. Users typically pay only for the resources consumed (e.g., storage used and queries executed), rather than for pre-provisioned capacity. Examples include Amazon Aurora Serverless, Azure SQL Database serverless, and Google Cloud's Firestore. A 2022 report by Forrester Research highlighted that serverless databases are gaining popularity due to their cost-efficiency and operational simplicity.

This approach can lead to significant cost savings, especially for applications with variable or unpredictable workloads, as it eliminates the need for over-provisioning resources. It also reduces the operational burden on database administrators, allowing them to focus more on database design, optimization, and application development rather than infrastructure management. However, serverless databases may also introduce new considerations, such as potential "cold start" latencies and different pricing models that require careful monitoring and optimization. The shift towards serverless is part of a broader trend in cloud computing aimed at abstracting away infrastructure management.

Understanding cloud platforms and serverless concepts is becoming essential.

Blockchain-Based Data Verification

Blockchain technology, originally known for its application in cryptocurrencies, is increasingly being explored for its potential in general data management, particularly for data verification and integrity. A blockchain is a distributed, immutable ledger where transactions (or data records) are grouped into blocks and cryptographically linked together. This structure makes it extremely difficult to alter or delete data once it has been recorded, providing a high degree of data tamper-resistance and transparency.

In the context of database management, blockchain can be used to create auditable and verifiable records of data transactions, ensuring data provenance and integrity. This can be particularly valuable in scenarios requiring high levels of trust and transparency, such as supply chain management, healthcare records, or intellectual property rights management. While blockchain is not a replacement for traditional databases for all use cases (as it can have performance and scalability limitations), its unique properties offer new possibilities for enhancing data security and trustworthiness. Hybrid approaches, where traditional databases are augmented with blockchain for specific verification purposes, are also emerging.
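The tamper-evidence property comes from the cryptographic linking of records, which can be illustrated without any distributed network. The following sketch builds a tiny hash chain with Python's `hashlib` and shows that editing an earlier record invalidates the chain. It deliberately omits consensus, replication, and signatures, and the record contents and function names are hypothetical.

```python
import hashlib
import json


def block_hash(index, data, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "prev": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain, data):
    """Add a record that is linked to the current tail of the chain."""
    prev_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    chain.append({
        "index": index,
        "data": data,
        "prev": prev_hash,
        "hash": block_hash(index, data, prev_hash),
    })


def is_valid(chain):
    """Recompute every hash and check each link back to the first block."""
    expected_prev = "0" * 64
    for block in chain:
        if block["prev"] != expected_prev:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["prev"]):
            return False
        expected_prev = block["hash"]
    return True


ledger = []
for record in ["shipment created", "shipment dispatched", "shipment delivered"]:
    append_block(ledger, record)

valid_before = is_valid(ledger)
ledger[1]["data"] = "shipment lost"  # tamper with a middle record
valid_after = is_valid(ledger)
print(valid_before, valid_after)
```

A hybrid design of the kind mentioned above might keep the records themselves in a conventional database and store only hashes like these in a ledger used for verification.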

Impact of Quantum Computing on Encryption

While still in its nascent stages, quantum computing has the potential to profoundly impact various fields, including database security. Current encryption algorithms, which protect sensitive data in databases and during transmission, rely on the computational difficulty of certain mathematical problems for classical computers (e.g., factoring large numbers). However, quantum computers, with their ability to perform certain types of calculations much faster than classical computers, could potentially break many of these widely used encryption algorithms (like RSA and ECC) in the future.
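To see why factoring matters, consider a toy RSA key built from two tiny primes. In the sketch below, anyone who can factor the public modulus can rebuild the private key and decrypt. Trial division manages this instantly here only because the numbers are tiny. Real keys use primes hundreds of digits long, which is the scale at which Shor's algorithm on a sufficiently large quantum computer would change the picture. The specific values are the usual textbook ones and are for illustration only.

```python
# Toy RSA with tiny primes; real keys use primes hundreds of digits long.
p, q = 61, 53
n = p * q                  # public modulus
e = 17                     # public exponent
phi = (p - 1) * (q - 1)
d = pow(e, -1, phi)        # private exponent (modular inverse, Python 3.8+)

message = 65
ciphertext = pow(message, e, n)


def factor(modulus):
    """Trial division: feasible only because the modulus is tiny."""
    candidate = 2
    while candidate * candidate <= modulus:
        if modulus % candidate == 0:
            return candidate, modulus // candidate
        candidate += 1
    raise ValueError("no factor found")


# An attacker who knows only (n, e) factors n and rebuilds the private key.
fp, fq = factor(n)
recovered_d = pow(e, -1, (fp - 1) * (fq - 1))
recovered_message = pow(ciphertext, recovered_d, n)
print(recovered_message)
```

The security of the scheme rests entirely on `factor` being impractical for large moduli, which is the assumption quantum computing calls into question.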

This poses a long-term threat to data security. As quantum computing technology matures, there will be a need to transition to quantum-resistant encryption algorithms (also known as post-quantum cryptography) to protect data from being decrypted by future quantum computers. The database community and cybersecurity researchers are actively working on developing and standardizing these new cryptographic methods. While the widespread availability of powerful quantum computers capable of breaking current encryption is likely still some years away, organizations, especially those dealing with highly sensitive data with long-term confidentiality requirements, need to start planning for this transition. This will require database systems and security protocols to be updated to support new, quantum-safe encryption standards.

Frequently Asked Questions (Career-Focused)

Choosing a career path involves many questions and uncertainties. This section addresses some common queries related to a career in database management, aiming to provide clarity on job sustainability, skill requirements, and career progression in this dynamic field. If you're considering this path, or are already on it, these insights may help ground your expectations and inform your decisions.

Is database management becoming obsolete due to AI?

This is a common concern, but the short answer is no: database management is not becoming obsolete due to AI. In fact, AI and machine learning are heavily reliant on well-managed, high-quality data. AI systems need vast amounts of data for training, and databases are the primary systems for storing and providing access to this data. As AI adoption grows, the need for skilled professionals who can manage, organize, secure, and optimize these underlying data sources is likely to increase, not decrease.

While AI tools might automate some routine database administration tasks (like performance monitoring or basic anomaly detection), the more complex aspects of database design, architecture, security, strategic data governance, and ensuring data quality for AI models will still require human expertise. Furthermore, new database technologies, like vector databases tailored for AI applications, are emerging, creating new specializations within the field. Instead of making database management obsolete, AI is more likely to transform the role, requiring DBAs to adapt and develop new skills related to supporting AI/ML workloads and managing data in AI-driven environments.

What soft skills complement technical database expertise?

While technical skills are crucial in database management, soft skills are equally important for career success. Problem-solving is paramount; DBAs constantly troubleshoot issues, optimize performance, and find solutions to data challenges. Strong analytical skills are needed to understand data requirements, design efficient database structures, and interpret performance metrics.

Communication skills are also vital. DBAs need to communicate effectively with technical colleagues (like developers and network administrators) and non-technical stakeholders (like business users or management). This includes explaining complex technical concepts in simple terms, writing clear documentation, and actively listening to understand user needs. Attention to detail is critical, as even small errors in database configuration or SQL queries can have significant consequences. Lastly, adaptability and a willingness to learn are essential, as the database landscape is constantly evolving with new technologies and techniques.

How transferable are database skills between industries?

Database skills are generally highly transferable across different industries. The core principles of database design, SQL querying, data modeling, performance tuning, and security are applicable whether you're working in finance, healthcare, retail, manufacturing, or any other sector that relies on data. For example, the ability to write efficient SQL queries to retrieve specific information is valuable regardless of whether that information pertains to financial transactions, patient records, or product inventory.

While specific domain knowledge related to a particular industry can be beneficial (e.g., understanding healthcare privacy regulations like HIPAA if working in healthcare), the fundamental database management skills provide a strong foundation that can be adapted to new contexts. The specific database software used might vary (e.g., Oracle might be more prevalent in large enterprises, while MySQL or PostgreSQL are common in web startups), but the underlying concepts are largely consistent. This transferability makes database management a versatile career choice with opportunities in a wide array of sectors.
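To make the transferability point concrete, here is a minimal sketch using Python's built-in sqlite3 module. The table names, column names, and sample rows are hypothetical and exist only for illustration. The point is that one query shape (group, sum, sort, limit) answers a finance question and a retail question without modification.

```python
import sqlite3


def top_totals(conn, table, group_col, value_col, limit=2):
    """Return the largest per-group sums using the same SQL shape for any table."""
    # Identifiers cannot be bound as query parameters, so they are interpolated.
    # In this sketch they come only from the hard-coded calls below, never from user input.
    sql = (
        f"SELECT {group_col}, SUM({value_col}) AS total "
        f"FROM {table} GROUP BY {group_col} "
        f"ORDER BY total DESC, {group_col} LIMIT ?"
    )
    return conn.execute(sql, (limit,)).fetchall()


# Hypothetical sample data for two unrelated industries.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE transactions (account TEXT, amount REAL);
    INSERT INTO transactions VALUES ('A-1', 120.0), ('A-2', 75.5), ('A-1', 30.0);
    CREATE TABLE inventory (product TEXT, quantity INTEGER);
    INSERT INTO inventory VALUES ('widget', 40), ('gadget', 15), ('widget', 10);
""")

finance = top_totals(conn, "transactions", "account", "amount")
retail = top_totals(conn, "inventory", "product", "quantity")
print(finance)  # [('A-1', 150.0), ('A-2', 75.5)]
print(retail)   # [('widget', 50), ('gadget', 15)]
```

Only the table and column names change between the two calls. The grouping, aggregation, and ordering logic is the part of the skill that carries over from one sector to another.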

Entry-level salary expectations in different regions

Entry-level salary expectations for database roles can vary significantly based on geographic location, the specific role (e.g., junior DBA vs. data analyst with database skills), the size and type of the employing company, and local market demand. As of May 2024, the U.S. Bureau of Labor Statistics (BLS) reported a median annual wage of $49,500 for all workers, while the median for database administrators and architects was considerably higher. Entry-level salaries generally fall below the occupation's median, since that figure reflects workers at every experience level.

According to Robert Half's 2018 salary data, entry-level DBA salaries in the US were estimated by Indeed at an average of $73,293, Glassdoor at $80,371, and Salary.com at $64,236. In 2017, the BLS reported that DBAs at the 10th percentile (often indicative of entry-level pay) earned $48,480 and those at the 25th percentile earned $63,850. More recent data from Robert Half's 2025 guide suggests a starting salary (25th percentile) of around $89,500 for a Database Administrator.

It's important to research salary data for your specific region and the type of entry-level role you are targeting. Websites like the BLS, Glassdoor, Salary.com, Indeed, and Robert Half provide salary information that can be filtered by location and job title. Remember that cost of living also varies by region, so a higher salary in an expensive city might not necessarily translate to greater purchasing power than a somewhat lower salary in a more affordable area.
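The cost-of-living point can be checked with simple arithmetic. The sketch below is illustrative only: the two offers and their cost-of-living index values are hypothetical numbers, not real salary or price data, and the helper name adjusted_salary is made up for this example. It divides each salary by a relative cost index (100 means baseline) to compare purchasing power.

```python
def adjusted_salary(nominal, cost_index, baseline=100.0):
    """Scale a nominal salary to baseline cost-of-living terms."""
    if cost_index <= 0:
        raise ValueError("cost_index must be positive")
    return nominal * baseline / cost_index


# Hypothetical offers: (annual salary in USD, cost-of-living index).
offers = {
    "expensive_city": (90_000, 150.0),
    "affordable_city": (75_000, 100.0),
}

adjusted = {city: adjusted_salary(pay, idx) for city, (pay, idx) in offers.items()}
for city, value in adjusted.items():
    print(f"{city}: {value:,.0f}")
# expensive_city: 60,000
# affordable_city: 75,000
```

With these made-up numbers, the nominally larger offer is worth less in baseline terms. Real comparisons should use a published cost-of-living index for the specific locations involved.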

Freelance/remote work viability in this field

Freelance and remote work opportunities are increasingly viable in the field of database management. Many database administration and development tasks can be performed remotely, especially with the prevalence of cloud-based database services and secure remote access tools. Companies of all sizes, from startups to large enterprises, may hire freelance DBAs for specific projects, such as database migration, performance tuning, security audits, or short-term operational support.

For freelancers, building a strong portfolio, developing a network of contacts, and marketing one's skills effectively are crucial. Platforms that connect freelancers with clients can be useful resources. Remote work, even in full-time employment, has also become more common, offering flexibility and access to a broader range of job opportunities without geographical constraints. However, remote roles often require strong self-discipline, excellent communication skills, and the ability to work independently. The U.S. Bureau of Labor Statistics has noted that advances in computer networking technology enable many database administrators to work remotely.

Typical career advancement timeline

The timeline for career advancement in database management can vary greatly depending on individual performance, learning agility, opportunities within an organization, and the acquisition of new skills and specializations. Generally, one might start in an entry-level role like a Junior DBA or Data Analyst for 1-3 years, gaining foundational experience.

After several years of solid performance and skill development (typically 3-7 years in total), individuals may advance to a standard Database Administrator role, taking on more responsibility for managing critical database systems. With further experience (perhaps 5-10+ years), specialization paths open up, such as becoming a Senior DBA, Database Architect, Performance Tuning Specialist, or Database Security Specialist. Moving into management roles, like Database Manager, often requires significant experience, leadership capabilities, and typically 7-15 years in the field. Reaching executive levels such as Chief Data Officer (CDO) or Chief Technology Officer (CTO) would usually involve a longer career trajectory with a proven track record of strategic impact.

Continuous learning, obtaining relevant certifications, and proactively seeking challenging projects can help accelerate career progression. Networking and mentorship can also play a significant role in identifying and preparing for advancement opportunities. The U.S. Bureau of Labor Statistics indicates that career progression is possible, with many database administrators becoming database architects.

Useful Links and Resources

To further your exploration of database management, here are some helpful resources:

- The Bureau of Labor Statistics Occupational Outlook Handbook for Database Administrators and Architects provides detailed information on job duties, education, pay, and job outlook.

- OpenCourser's Computer Science category offers a wide range of courses relevant to database management and related technologies.

- For those on a budget, exploring OpenCourser Deals can help find savings on online courses and learning materials.

- The OpenCourser Learner's Guide provides valuable tips on how to make the most of online learning, which is essential for keeping skills up-to-date in a rapidly evolving field like database management.

The journey into database management is one of continuous learning and adaptation. Whether you are just starting or looking to advance your career, the resources and information available today make it more accessible than ever to build a rewarding career in this vital field. Remember that persistence, curiosity, and a commitment to practical application are key to success. The world of data is vast and ever-expanding, and skilled database professionals will always be in demand to help navigate and harness its power.