TensorFlow

Introduction to TensorFlow

TensorFlow is an open-source software library extensively used for machine learning and artificial intelligence. While it can be applied to a wide array of tasks, its primary use lies in the training and inference of neural networks, making it a popular choice for deep learning applications. Developed by the Google Brain team, TensorFlow was initially created for Google's internal research and production purposes before being released to the public under the Apache License 2.0 in 2015. Its design facilitates the development of machine learning models, especially deep learning models, by offering a comprehensive suite of tools to build, train, and deploy them across various platforms.

Working with TensorFlow is rewarding in part because it handles complex computations robustly and scales across different hardware. Tools like TensorBoard let you visualize model architectures and training progress, offering insight into how a model behaves as it learns. A large, active open-source community also contributes to its continued development.

For those new to the field, TensorFlow provides a structured yet flexible environment to learn and experiment with machine learning concepts. More experienced developers and researchers appreciate its power in building sophisticated models and deploying them in real-world applications, from image recognition to natural language processing.

What is TensorFlow?

TensorFlow is a comprehensive ecosystem of tools, libraries, and community resources designed to empower researchers and developers in the field of machine learning (ML) and artificial intelligence (AI). At its heart, TensorFlow utilizes a system of data flow graphs to represent computations, shared states, and the operations that modify those states. This structure allows for the efficient execution of complex mathematical operations, particularly those involved in neural networks. It's designed to be accessible, flexible, and powerful, catering to a wide range of users from beginners to experts.

One of the key aspects that makes TensorFlow exciting is its ability to simplify the process of building and training sophisticated ML models. Imagine trying to teach a computer to recognize pictures of cats. With TensorFlow, you can define a model (a series of computational steps), feed it thousands of labeled pictures, some showing cats and some not (the data), and let TensorFlow figure out the patterns that distinguish a cat. This process, known as training, allows the model to "learn" and then make predictions on new, unseen images. The same principles apply to a multitude of other tasks, such as understanding human language, predicting stock prices, or even helping to diagnose diseases.

Furthermore, TensorFlow's versatility extends to how and where these models can be deployed. Whether you're aiming to run your AI on powerful servers, mobile phones, or even directly in a web browser, TensorFlow provides the tools to make that happen. This opens up a vast landscape of possibilities for creating intelligent applications that can impact various industries and aspects of daily life.

Diving Deeper: A Gentle Introduction

At its core, TensorFlow works with multi-dimensional arrays of data called "tensors." Think of a tensor as a generalization of vectors and matrices to potentially higher dimensions. For instance, a single number (a scalar) is a 0-dimensional tensor, a list of numbers (a vector) is a 1-dimensional tensor, and a grid of numbers (a matrix) is a 2-dimensional tensor. Images, for example, can be represented as 3-dimensional tensors (height, width, color channels).
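
To make the idea of rank concrete, here is a minimal sketch in Python (the language most TensorFlow users work in), assuming TensorFlow 2.x is installed. It builds tensors of rank 0 through 4; the 32x32 image size and the batch of 8 are arbitrary illustrative values.

```python
import tensorflow as tf

scalar = tf.constant(3.0)                       # rank 0
vector = tf.constant([1.0, 2.0, 3.0])           # rank 1
matrix = tf.constant([[1.0, 2.0], [3.0, 4.0]])  # rank 2
image = tf.zeros([32, 32, 3])                   # rank 3: height, width, color channels
batch = tf.zeros([8, 32, 32, 3])                # rank 4: a batch of 8 such images

for name, t in [("scalar", scalar), ("vector", vector), ("matrix", matrix),
                ("image", image), ("batch", batch)]:
    # ndim is the tensor's rank; shape lists the size of each dimension.
    print(f"{name}: rank={t.ndim}, shape={t.shape.as_list()}")
```

Adding a leading batch dimension, as in the last tensor, is how a collection of images is usually fed to a model.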

TensorFlow then defines computations as a "graph." This isn't a graph in the sense of a bar chart, but rather a network of nodes and edges. Each node in this graph represents a mathematical operation (like addition, multiplication, or more complex functions specific to machine learning), and each edge represents a tensor flowing between these operations. So, building a TensorFlow model is like drawing a blueprint of calculations. You define the operations and how data (tensors) flows through them.

Once this computational graph is defined, TensorFlow can execute it. In TensorFlow 1.x, execution took place inside an explicit tf.Session; TensorFlow 2.x dropped sessions from its main API in favor of eager execution, using tf.function to build graphs when they are needed. The advantage of this graph-based approach is that TensorFlow can optimize the computations, distribute them across different hardware (like CPUs, GPUs, or specialized TPUs), and efficiently calculate the adjustments needed to improve the model during training (a process called automatic differentiation). This makes it possible to train very large and complex models on massive datasets.

Historical Context and Evolution

TensorFlow's journey began within Google as a successor to DistBelief, a proprietary machine learning system based on deep learning neural networks that Google Brain started developing in 2011. As DistBelief's use grew across various Alphabet companies, Google assigned a team of computer scientists, including Jeff Dean, to simplify and refactor its codebase into a faster, more robust, application-grade library. This effort culminated in the open-source release of TensorFlow in November 2015 under the Apache 2.0 license.

The name "TensorFlow" itself is derived from the way neural networks perform operations on multi-dimensional data arrays, known as tensors. The initial release quickly gained traction in the research and developer communities. Google continued to invest heavily in its development, leading to the release of TensorFlow 2.0 in September 2019. This major update brought significant changes, including eager execution by default (making it more Pythonic and easier to debug), a more intuitive Keras integration as the high-level API, and improved performance.

Over the years, the TensorFlow ecosystem has expanded considerably, with additions like TensorFlow Lite for deploying models on mobile and embedded devices, TensorFlow.js for running models in JavaScript environments, and TensorFlow Extended (TFX) for end-to-end production machine learning pipelines. This evolution reflects TensorFlow's commitment to supporting the entire lifecycle of machine learning projects, from initial experimentation to large-scale deployment.

Core Architecture and Components of TensorFlow

Understanding the fundamental building blocks of TensorFlow is crucial for anyone looking to harness its power. The architecture is designed for flexibility and efficiency, allowing developers to build and deploy a wide variety of machine learning models. At its heart, TensorFlow operates on the concept of a computational graph, which defines the sequence of operations performed on data.

The Computational Graph: TensorFlow's Blueprint

A computational graph is the central abstraction in TensorFlow. It's a directed graph where nodes represent mathematical operations, and the edges represent the multidimensional data arrays (tensors) that flow between them. Think of it as a detailed recipe for your machine learning model: each step (operation) is clearly defined, and the ingredients (tensors) pass from one step to the next.

Initially, in older versions of TensorFlow, developers would explicitly define this static graph first, and then execute it within a session. While powerful, this could sometimes feel less intuitive. With TensorFlow 2.x, "eager execution" became the default. This means operations are evaluated immediately, as they are defined, much like standard Python code. However, the concept of the graph still underlies TensorFlow's operations, and for performance optimization and deployment, TensorFlow can convert Python functions into high-performance, portable graphs using the tf.function decorator.
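
The sketch below, assuming TensorFlow 2.x, shows tf.function at work: a plain Python function (the hypothetical `affine`) is traced into a graph, and the graph's nodes can then be listed. The exact operation names in that list are an implementation detail and may differ between TensorFlow versions.

```python
import tensorflow as tf

@tf.function
def affine(x, w, b):
    # Ordinary Python arithmetic on tensors; tf.function traces it into a graph.
    return x * w + b

x = tf.constant([1.0, 2.0])
w = tf.constant([3.0, 4.0])
b = tf.constant([0.5, 0.5])

# Calling the function runs the traced graph and returns an eager result.
print(affine(x, w, b).numpy())  # [3.5 8.5]

# Inspect the traced graph: each node is an operation, e.g. a multiply and an add.
graph = affine.get_concrete_function(x, w, b).graph
op_types = [op.type for op in graph.get_operations()]
print(op_types)
```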

This graph-based system allows TensorFlow to perform several optimizations. It can, for example, automatically compute gradients (a crucial step in training neural networks through a process called backpropagation), distribute computations across multiple devices, and deploy models on various platforms. The graph clearly defines dependencies between operations, enabling efficient scheduling and execution.
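
Automatic differentiation can be seen directly with tf.GradientTape, which records operations as they run and then computes gradients from that recording. A minimal sketch, assuming TensorFlow 2.x, for the function y = x^2 + 2x:

```python
import tensorflow as tf

x = tf.Variable(3.0)

with tf.GradientTape() as tape:
    # Operations executed inside the tape are recorded for differentiation.
    y = x ** 2 + 2.0 * x

# dy/dx = 2x + 2, which is 8.0 at x = 3.0
dy_dx = tape.gradient(y, x)
print(dy_dx.numpy())  # 8.0
```

Training a neural network applies the same mechanism to every weight at once, with the loss in place of y.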

Key Components: Tensors, Variables, and APIs

Several key components form the backbone of TensorFlow operations:

Tensors: As the name TensorFlow suggests, tensors are the fundamental unit of data. They are multi-dimensional arrays, similar to NumPy arrays, that can hold data of various types (like numbers or strings). A scalar (a single number) is a 0-dimensional tensor, a vector is a 1-D tensor, a matrix is a 2-D tensor, and so on. All data – from input features to model parameters and outputs – is represented as tensors in TensorFlow.
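
A short sketch of the NumPy relationship and the data types mentioned above, assuming TensorFlow 2.x and NumPy are installed. It also shows that ordinary tensors are immutable, which is why mutable state needs Variables.

```python
import numpy as np
import tensorflow as tf

# Tensors convert to and from NumPy arrays.
arr = np.array([[1, 2], [3, 4]], dtype=np.int32)
t = tf.convert_to_tensor(arr)
back = t.numpy()  # a NumPy ndarray again

# Tensors can also hold non-numeric data such as strings.
words = tf.constant(["cat", "dog"])

# Regular tensors are immutable, so item assignment is rejected.
try:
    t[0, 0] = 10
    assignment_allowed = True
except TypeError:
    assignment_allowed = False

print(t.dtype, words.dtype, assignment_allowed)
```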

Variables: While tensors typically represent the data flowing through the computational graph, TensorFlow Variables are a special kind of tensor used to hold and update mutable state, such as the weights and biases in a neural network during training. Unlike regular tensors whose values might be recomputed each time they are evaluated in a graph, Variables maintain their state across multiple runs of the graph. They are essential for the learning process, as their values are adjusted iteratively to minimize the model's error.
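
The loop below is a minimal sketch, assuming TensorFlow 2.x, of Variables being adjusted iteratively to reduce error: plain gradient descent fits a line to hypothetical toy data generated from y = 2x + 1. The learning rate and step count are arbitrary choices that converge for this data; real models normally use a Keras optimizer rather than manual updates.

```python
import tensorflow as tf

# Hypothetical toy data generated from y = 2x + 1.
xs = tf.constant([0.0, 1.0, 2.0, 3.0, 4.0])
ys = 2.0 * xs + 1.0

# Model parameters are Variables: their values persist and change across steps.
w = tf.Variable(0.0)
b = tf.Variable(0.0)
learning_rate = 0.05

for step in range(500):
    with tf.GradientTape() as tape:
        predictions = w * xs + b
        loss = tf.reduce_mean(tf.square(predictions - ys))  # mean squared error
    dw, db = tape.gradient(loss, [w, b])
    # assign_sub updates each Variable in place: one step of gradient descent.
    w.assign_sub(learning_rate * dw)
    b.assign_sub(learning_rate * db)

print(w.numpy(), b.numpy())  # close to 2.0 and 1.0
```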

APIs (Application Programming Interfaces): TensorFlow offers various APIs to cater to different needs and levels of abstraction. The most prominent high-level API is Keras, which is now tightly integrated into TensorFlow. Keras provides a user-friendly and modular way to define and train neural networks, making it easier for developers to get started and build complex models quickly. For more fine-grained control, TensorFlow also provides lower-level APIs that allow direct manipulation of tensors and operations. Additionally, TensorFlow supports multiple programming languages, with Python being the most common, but also offering APIs for C++, Java, and JavaScript, broadening its accessibility.
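
The Keras high-level API takes care of layers, the training loop, and batching. A minimal sketch, assuming TensorFlow 2.x; the synthetic data (the target is simply the sum of four random features) and the layer sizes are illustrative assumptions.

```python
import numpy as np
import tensorflow as tf

# Hypothetical synthetic regression data: 4 input features, 1 target.
rng = np.random.default_rng(0)
x_train = rng.normal(size=(256, 4)).astype("float32")
y_train = x_train.sum(axis=1, keepdims=True)

# A small fully connected network defined with the Keras Sequential API.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(1),
])

# compile() chooses the optimizer and loss; fit() runs the training loop.
model.compile(optimizer="adam", loss="mse")
history = model.fit(x_train, y_train, epochs=5, batch_size=32, verbose=0)

predictions = model.predict(x_train[:3], verbose=0)
print(predictions.shape)  # (3, 1)
```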

Together, these core components (computational graphs, tensors, variables, and TensorFlow's APIs) provide a powerful and flexible platform for machine learning development.

Accelerating Computation: GPUs and TPUs

Training complex machine learning models, especially deep neural networks, can be incredibly computationally intensive, often requiring days or even weeks on standard CPUs (Central Processing Units). To address this challenge, TensorFlow is designed to leverage specialized hardware accelerators like GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units).

GPUs, originally designed for rendering graphics in video games, possess a highly parallel architecture with thousands of smaller cores. This makes them exceptionally good at performing the same operation on large batches of data simultaneously, a common requirement in neural network training (like matrix multiplications). TensorFlow has built-in support for GPU acceleration, primarily through NVIDIA's CUDA platform, allowing it to offload these intensive computations to the GPU, leading to significant speedups in training time.

TPUs are custom-designed ASICs (Application-Specific Integrated Circuits) that Google developed to accelerate machine learning workloads, originally those written in TensorFlow. They are optimized for the large matrix and tensor operations that dominate neural network computation and can deliver higher performance and efficiency than GPUs on many of these tasks. TensorFlow's tf.distribute.Strategy API lets developers distribute training across multiple GPUs or TPUs with relatively few code changes, further scaling the training process for very large models and datasets. This integration makes it feasible to tackle problems that would be impractical with CPUs alone.
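
The snippet below, assuming TensorFlow 2.x, checks which GPUs TensorFlow can see and builds a Keras model under tf.distribute.MirroredStrategy, which replicates the model across the local GPUs of one machine. On a CPU-only machine it still runs, with a single replica. On Cloud TPUs, tf.distribute.TPUStrategy plays the equivalent role but needs a TPU to connect to, so it is not shown. The model itself is a hypothetical placeholder.

```python
import tensorflow as tf

# Accelerators TensorFlow can see; the list is empty on a CPU-only machine.
gpus = tf.config.list_physical_devices("GPU")
print("GPUs visible:", len(gpus))

# MirroredStrategy keeps a copy of the model on each local GPU (falling back to the
# CPU when none is found) and combines gradients across the copies each step.
strategy = tf.distribute.MirroredStrategy()
print("Replicas in sync:", strategy.num_replicas_in_sync)

# Model and optimizer variables must be created inside the strategy's scope.
with strategy.scope():
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(8,)),   # hypothetical 8-feature input
        tf.keras.layers.Dense(1),
    ])
    model.compile(optimizer="sgd", loss="mse")
```

Calling model.fit afterwards works as usual; each global batch is split across the replicas.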

The ability to harness these accelerators is a key reason for TensorFlow's prominence in both research and production environments where performance and speed are critical.

Real-World Impact: TensorFlow Applications Across Industries

TensorFlow's versatility and power have led to its adoption across a multitude of industries, driving innovation and solving complex problems. Its ability to handle large datasets, build sophisticated models, and deploy them across various platforms makes it an invaluable tool for businesses and researchers alike.

Transforming Healthcare: Medical Imaging and Diagnostics

In the healthcare sector, TensorFlow is making significant strides, particularly in medical image analysis and diagnostics. Machine learning models built with TensorFlow can analyze medical scans like X-rays, CT scans, and MRIs to assist in the early detection and diagnosis of diseases such as cancer, diabetic retinopathy, and cardiovascular conditions. For example, algorithms can be trained to identify tumors, measure organ dimensions, or highlight anomalies that might be missed by the human eye.

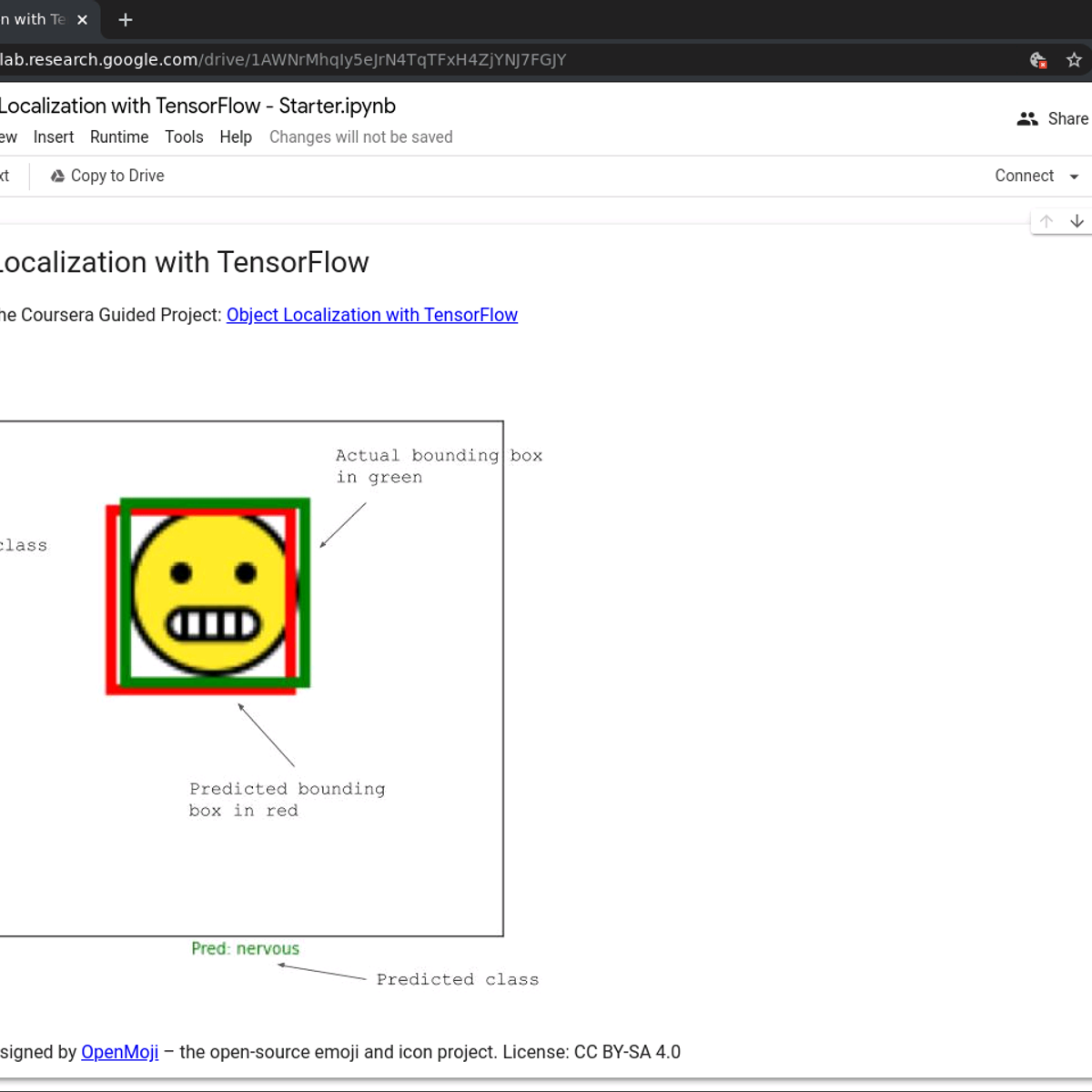

Image segmentation, the process of partitioning an image into meaningful regions, is a critical task where TensorFlow excels. This allows for precise localization of affected areas, aiding in surgical planning and treatment monitoring. The ability to process and learn from vast amounts of imaging data enables the development of more accurate and efficient diagnostic tools, ultimately leading to better patient outcomes. TensorFlow's capacity to integrate with various imaging modalities and support the development of complex neural network architectures is key to its success in this field.
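
To make per-pixel prediction concrete, here is a deliberately tiny fully convolutional model in Keras, assuming TensorFlow 2.x. It is not a clinical model: the 64x64 single-channel input, two classes, and layer sizes are hypothetical, and production medical segmentation networks (for example, U-Net variants) are much deeper. What it shows is the core idea: the output assigns a class score to every pixel, so the prediction is a mask with the same height and width as the input.

```python
import tensorflow as tf

NUM_CLASSES = 2  # hypothetical: background vs. region of interest
IMG_SIZE = 64    # hypothetical input resolution (single-channel scan)

inputs = tf.keras.Input(shape=(IMG_SIZE, IMG_SIZE, 1))
x = tf.keras.layers.Conv2D(16, 3, padding="same", activation="relu")(inputs)
x = tf.keras.layers.MaxPooling2D(2)(x)  # downsample to 32x32
x = tf.keras.layers.Conv2D(32, 3, padding="same", activation="relu")(x)
x = tf.keras.layers.UpSampling2D(2)(x)  # back to 64x64
# A 1x1 convolution produces one logit per class for every pixel.
outputs = tf.keras.layers.Conv2D(NUM_CLASSES, 1)(x)
model = tf.keras.Model(inputs, outputs)

# Per-pixel integer labels pair with a sparse categorical loss.
model.compile(
    optimizer="adam",
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
)

# Predicted mask: the highest-scoring class at each pixel location.
scans = tf.random.uniform([2, IMG_SIZE, IMG_SIZE, 1])
mask = tf.argmax(model(scans), axis=-1)
print(mask.shape)  # (2, 64, 64)
```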

Securing Finance: Fraud Detection and Algorithmic Trading

The finance industry leverages TensorFlow for a range of critical applications, including enhancing security through fraud detection and optimizing trading strategies via algorithmic trading. Financial institutions face the constant challenge of identifying and preventing fraudulent transactions. TensorFlow enables the development of sophisticated anomaly detection models that can analyze vast streams of transactional data in real-time, flagging suspicious activities with high accuracy and helping to reduce financial losses.
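
One common way to build such an anomaly detector, sketched here under stated assumptions, is an autoencoder trained only on normal transactions: rows it reconstructs poorly are flagged as suspicious. The data is synthetic (standard-normal features stand in for preprocessed transaction attributes), and the bottleneck size, epoch count, and 99th-percentile threshold are illustrative choices, not recommendations. This assumes TensorFlow 2.x and NumPy.

```python
import numpy as np
import tensorflow as tf

tf.keras.utils.set_random_seed(0)

# Hypothetical "normal" transactions: 1,000 rows of 8 standardized features.
rng = np.random.default_rng(0)
normal = rng.normal(size=(1000, 8)).astype("float32")

# The autoencoder squeezes each row through a 4-unit bottleneck and rebuilds it.
autoencoder = tf.keras.Sequential([
    tf.keras.Input(shape=(8,)),
    tf.keras.layers.Dense(4, activation="relu"),
    tf.keras.layers.Dense(8),
])
autoencoder.compile(optimizer="adam", loss="mse")
autoencoder.fit(normal, normal, epochs=10, batch_size=64, verbose=0)

def reconstruction_error(rows):
    # Mean squared difference between each row and its reconstruction.
    reconstructed = autoencoder.predict(rows, verbose=0)
    return np.mean(np.square(rows - reconstructed), axis=1)

# Assumed threshold: the 99th percentile of errors on normal data.
threshold = np.percentile(reconstruction_error(normal), 99)

# Score new rows; the last one is a deliberately extreme, hypothetical outlier.
new_rows = np.vstack([normal[:5], np.full((1, 8), 8.0, dtype="float32")])
flags = reconstruction_error(new_rows) > threshold
print(flags)
```

In practice the threshold would be tuned against labeled fraud cases to balance missed fraud against false alarms.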

In the realm of algorithmic trading, TensorFlow is used to build predictive models that forecast market movements, asset prices, and volatility. These models can process historical market data, news sentiment, and other relevant factors to inform trading decisions. Hedge funds and investment firms use these AI-driven strategies to execute trades at high speeds, seeking to capitalize on fleeting market opportunities. Furthermore, TensorFlow aids in credit risk assessment by building models that evaluate borrower creditworthiness based on diverse data sources, leading to more informed lending decisions. The ability to process large datasets and build complex predictive models makes TensorFlow a powerful asset in the data-driven financial sector.
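
Forecasting models usually learn from sliding windows of past values. The sketch below, assuming TensorFlow 2.x, uses tf.data to turn a series into (window, next value) pairs and fits a small dense model on them. The series is a toy stand-in for price history and `make_windows` is a hypothetical helper name; nothing here reflects an actual trading strategy.

```python
import tensorflow as tf

def make_windows(series, window_size, batch_size):
    """Turn a 1-D series into (past window, next value) training pairs."""
    ds = tf.data.Dataset.from_tensor_slices(series)
    # Sliding windows of window_size + 1 consecutive values, stepping by 1.
    ds = ds.window(window_size + 1, shift=1, drop_remainder=True)
    ds = ds.flat_map(lambda w: w.batch(window_size + 1))
    # Inputs are the first window_size values; the target is the value that follows.
    ds = ds.map(lambda w: (w[:-1], w[-1:]))
    return ds.batch(batch_size)

# Hypothetical toy series standing in for historical prices: 0, 1, ..., 9.
series = tf.range(10, dtype=tf.float32)
windows = make_windows(series, window_size=3, batch_size=4)

# A small dense model that maps 3 past values to the next one.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(3,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(1),
])
model.compile(optimizer="adam", loss="mse")
model.fit(windows, epochs=2, verbose=0)
```

With this series, the first pair is the window [0, 1, 2] with target 3, and the ten values yield seven windows in total.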

Powering Autonomous Systems: Robotics and Self-Driving Cars

TensorFlow plays a pivotal role in the advancement of autonomous systems, including robotics and self-driving vehicles. In robotics, TensorFlow is used to develop models that enable robots to perceive their environment, understand and interact with objects, and make intelligent decisions. This includes tasks like object recognition, navigation, and manipulation. For instance, TensorFlow can help a robot identify an obstacle, plan a path around it, or grasp an object with precision.

For self-driving cars, TensorFlow is integral to processing data from various sensors (cameras, LiDAR, radar) to understand the vehicle's surroundings. This includes detecting other vehicles, pedestrians, traffic lights, and lane markings. The complex decision-making required for autonomous navigation, such as when to accelerate, brake, or steer, is often handled by deep learning models trained using TensorFlow. Reinforcement learning, a technique where models learn through trial and error by interacting with an environment, is also frequently implemented with TensorFlow to train autonomous agents for complex control tasks. The framework's ability to deploy models on specialized hardware (such as NVIDIA Jetson modules or Google's Edge TPU for on-device inference) is crucial for the real-time processing demands of autonomous systems.
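
Trial-and-error learning can be illustrated with the simplest possible reinforcement learning problem, a two-armed bandit, trained with the REINFORCE policy-gradient rule. This is a minimal sketch assuming TensorFlow 2.x; the environment (`reward_for`), learning rate, and episode count are hypothetical, and real robotics or driving agents involve far richer states, actions, and algorithms.

```python
import tensorflow as tf

tf.random.set_seed(0)

def reward_for(action):
    # Hypothetical environment: action 1 is always rewarded, action 0 never is.
    return 1.0 if action == 1 else 0.0

# The policy is a softmax distribution over two trainable action logits.
logits = tf.Variable([0.0, 0.0])
learning_rate = 0.1

for episode in range(200):
    # Trial: sample an action from the current policy.
    action = int(tf.random.categorical(tf.expand_dims(logits, 0), num_samples=1)[0, 0])
    reward = reward_for(action)
    with tf.GradientTape() as tape:
        log_prob = tf.nn.log_softmax(logits)[action]
        # REINFORCE loss: minimizing it raises the log-probability of rewarded actions.
        loss = -reward * log_prob
    grad = tape.gradient(loss, logits)
    logits.assign_sub(learning_rate * grad)

probs = tf.nn.softmax(logits).numpy()
print("action probabilities:", probs)  # mass shifts toward action 1
```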

Learning TensorFlow: Academic Pathways and Research Frontiers

TensorFlow is not just an industry tool; it's also a significant platform for academic learning and cutting-edge research in artificial intelligence and machine learning. Universities worldwide are integrating TensorFlow into their curricula, and researchers are leveraging its capabilities to push the boundaries of AI.

TensorFlow in Academia: From University Courses to Online Learning

Many universities now offer courses in machine learning, deep learning, and artificial intelligence that either explicitly teach TensorFlow or use it as a primary tool for assignments and projects. These courses range from undergraduate introductions to advanced graduate-level seminars. Students gain hands-on experience in building neural networks, processing data, and understanding the theoretical concepts underpinning modern AI techniques through practical application with TensorFlow.

Beyond traditional university settings, the rise of online learning platforms has made TensorFlow education incredibly accessible. OpenCourser, for example, catalogs a vast array of online courses that can help learners at all levels get started with TensorFlow and advance their skills. These courses often provide structured learning paths, practical exercises, and even capstone projects to solidify understanding. Whether you are a student looking to supplement your formal education, a professional aiming to upskill, or an enthusiast eager to learn, online resources provide a flexible and comprehensive way to master TensorFlow.

Online courses are highly suitable for building a foundational understanding of TensorFlow. They allow learners to proceed at their own pace, revisit complex topics, and often provide interactive coding environments. For students already enrolled in academic programs, these online courses can serve as excellent supplementary material, offering different perspectives or deeper dives into specific TensorFlow applications. Professionals can use them to stay abreast of the latest developments in TensorFlow and apply new techniques to their work, potentially leading to improved efficiency or new product innovations.

Fueling Research: AI/ML Innovations and Neural Networks

TensorFlow is a powerhouse for academic research in AI and ML, enabling scientists and engineers to experiment with novel neural network architectures, develop new learning algorithms, and explore uncharted territories in artificial intelligence. Its flexibility allows researchers to implement complex models and test hypotheses efficiently. Many groundbreaking research papers in areas like computer vision, natural language processing, reinforcement learning, and generative models have utilized TensorFlow for their implementations.

The framework's support for distributed training across multiple GPUs and TPUs is particularly valuable for research projects that involve massive datasets and computationally intensive models. Furthermore, TensorFlow's open-source nature encourages collaboration and reproducibility of research findings, as code and models can be easily shared within the scientific community. The availability of pre-trained models through platforms like TensorFlow Hub also allows researchers to build upon existing work, accelerating the pace of innovation. From developing more efficient learning algorithms to creating AI systems that can reason and understand the world in more human-like ways, TensorFlow provides the tools for the next generation of AI breakthroughs.
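
Building on existing work often means transfer learning: reuse a pretrained feature extractor and train only a small new head. TensorFlow Hub is one source of such models; this sketch instead uses tf.keras.applications, another source bundled with Keras, assuming TensorFlow 2.x. To run offline it builds MobileNetV2 with `weights=None` (random weights); in real use you would pass `weights="imagenet"` to download pretrained weights. The 96x96 input size and five output classes are hypothetical.

```python
import tensorflow as tf

# weights=None keeps this runnable offline; use weights="imagenet" to download
# the pretrained weights that transfer learning actually relies on.
base = tf.keras.applications.MobileNetV2(
    input_shape=(96, 96, 3), include_top=False, weights=None)
base.trainable = False  # freeze the feature extractor

NUM_CLASSES = 5  # hypothetical number of classes in the new task
inputs = tf.keras.Input(shape=(96, 96, 3))
features = base(inputs, training=False)  # keep BatchNorm layers in inference mode
pooled = tf.keras.layers.GlobalAveragePooling2D()(features)
outputs = tf.keras.layers.Dense(NUM_CLASSES)(pooled)
model = tf.keras.Model(inputs, outputs)

# Only the new head is trainable: one Dense kernel and one bias.
print(len(model.trainable_weights))  # 2
```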

Advanced Studies: PhD-Level Projects and Publications

At the PhD level and in postdoctoral research, TensorFlow serves as a critical tool for conducting advanced investigations into the frontiers of machine learning. Doctoral candidates often use TensorFlow to design, implement, and validate the novel algorithms and models that form the core of their dissertation research. The ability to quickly prototype ideas, scale experiments, and rigorously evaluate results is essential for producing publishable work in top-tier AI conferences and journals.

PhD projects leveraging TensorFlow span a wide spectrum, from theoretical explorations of deep learning principles to applied research addressing specific real-world challenges in domains like healthcare, robotics, climate science, and more. The framework's comprehensive nature, coupled with its extensive documentation and active community support, empowers researchers to tackle highly ambitious projects. Many significant contributions to the AI field, published in leading venues such as NeurIPS, ICML, and CVPR, feature experiments conducted using TensorFlow, underscoring its importance as a research platform. The skills developed in using TensorFlow for complex research are also highly valued in academia and industry research labs.

For individuals pursuing or considering PhD-level research involving TensorFlow, a deep understanding of its capabilities and the underlying machine learning concepts is paramount. Advanced courses and specialized texts become essential resources.

Career Opportunities and Growth in TensorFlow

The demand for professionals skilled in TensorFlow has grown significantly as industries increasingly adopt artificial intelligence and machine learning. Understanding TensorFlow can open doors to a variety of exciting and well-compensated career paths, from entry-level positions to advanced research roles. The skills you develop are applicable across diverse sectors, making it a valuable asset in today's tech-driven job market.

If you are considering a career change or are new to the job market, the field of machine learning, with TensorFlow as a core skill, can seem daunting. It's true that it requires dedication and continuous learning. However, the journey, while challenging, can be incredibly rewarding. Many have successfully transitioned into AI roles from different backgrounds. The key is a structured approach to learning, consistent practice, and building a portfolio of projects. Don't be discouraged by the complexity; every expert was once a beginner. Focus on incremental progress, celebrate small victories, and leverage the wealth of online resources and communities available for support. Your ambition, coupled with persistent effort, can lead to a fulfilling career in this dynamic field.

Starting Your Journey: Entry-Level Roles

For those beginning their careers or transitioning into the AI/ML space, several entry-level roles frequently require TensorFlow proficiency. One common starting point is the role of a Machine Learning Engineer. In this capacity, you might be responsible for designing, building, training, and deploying machine learning models using TensorFlow. This could involve tasks like data preprocessing, feature engineering, model selection, and performance evaluation. You'll likely work as part of a team, collaborating with data scientists, software engineers, and product managers.

Another accessible role is that of a Data Analyst with machine learning skills. While traditional data analysis focuses on interpreting past data, a data analyst proficient in TensorFlow might also be involved in building predictive models for forecasting or classification tasks. This could involve using TensorFlow to analyze trends, segment customers, or predict outcomes based on historical data.

Other entry points could include roles like AI Developer, Junior Data Scientist, or Research Assistant in an academic or corporate lab. These positions typically require a solid understanding of machine learning fundamentals, programming skills (especially in Python), and hands-on experience with libraries like TensorFlow.

A strong portfolio of projects is often crucial for landing an entry-level role. Supplementing online coursework with personal projects, such as participating in Kaggle competitions or developing a unique application using TensorFlow, can significantly enhance your resume and demonstrate practical abilities to potential employers.

Advancing Your Career: Senior and Architect Roles

With experience and continued learning, professionals skilled in TensorFlow can advance to more senior and strategic roles. An AI Architect is responsible for designing the overall architecture of AI systems. This involves making high-level design choices, selecting appropriate technologies (including TensorFlow and its ecosystem components like TFX), and ensuring that the AI solutions are scalable, robust, and aligned with business objectives. AI Architects need a deep understanding of various machine learning paradigms, software engineering principles, and cloud platforms.

A Research Scientist in AI/ML focuses on developing new algorithms, techniques, and models, often pushing the boundaries of what's possible. These roles are common in academic institutions, corporate research labs (like Google AI, Meta AI, Microsoft Research), and R&D departments of tech companies. A PhD or extensive research experience, along with a strong publication record and deep expertise in TensorFlow for implementing and testing novel ideas, is typically required for such positions.

Other advanced roles include Senior Machine Learning Engineer, Lead Data Scientist, or ML Ops Engineer (specializing in the operationalization and lifecycle management of machine learning models). These positions often involve leading teams, mentoring junior members, and taking ownership of complex, mission-critical AI projects. Continuous learning is vital, as the field is rapidly evolving.

For those aiming for these advanced positions, specialized knowledge in areas like distributed training, model optimization, and specific application domains becomes increasingly important.

Emerging Specializations: The Future of TensorFlow Expertise

The field of AI and machine learning is constantly evolving, leading to new and exciting specializations for TensorFlow experts. One such area is Edge AI or TinyML. This involves deploying machine learning models directly on resource-constrained devices like microcontrollers, sensors, and mobile phones, rather than in the cloud. TensorFlow Lite is a key tool in this domain, enabling the optimization and deployment of models for on-device inference. Professionals specializing in Edge AI work on making models smaller, faster, and more power-efficient without sacrificing too much accuracy. This is crucial for applications requiring real-time processing, low latency, or offline capabilities, such as in smart appliances, industrial IoT, and wearable health monitors.
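
Converting a model for on-device inference typically goes through the TensorFlow Lite converter. The sketch below, assuming a TensorFlow 2.x release that still ships the `tf.lite` module, converts a hypothetical one-layer model with the default post-training optimizations and runs it with the TFLite interpreter. Google has since renamed TensorFlow Lite to LiteRT, and recent releases steer users toward the standalone LiteRT package for the interpreter, so check the version you have installed.

```python
import numpy as np
import tensorflow as tf

class TinyModel(tf.Module):
    """Hypothetical stand-in for a trained model: a single dense layer."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(tf.random.normal([4, 2], seed=0))
        self.b = tf.Variable(tf.zeros([2]))

    @tf.function(input_signature=[tf.TensorSpec(shape=[1, 4], dtype=tf.float32)])
    def __call__(self, x):
        return tf.matmul(x, self.w) + self.b

model = TinyModel()
concrete_fn = model.__call__.get_concrete_function()

# Convert to the TFLite flatbuffer format; Optimize.DEFAULT enables
# post-training quantization where the converter considers it applicable.
converter = tf.lite.TFLiteConverter.from_concrete_functions([concrete_fn], model)
converter.optimizations = [tf.lite.Optimize.DEFAULT]
tflite_bytes = converter.convert()

# Run the converted model with the TFLite interpreter, as an on-device app would.
interpreter = tf.lite.Interpreter(model_content=tflite_bytes)
interpreter.allocate_tensors()
input_index = interpreter.get_input_details()[0]["index"]
output_index = interpreter.get_output_details()[0]["index"]

x = np.ones((1, 4), dtype=np.float32)
interpreter.set_tensor(input_index, x)
interpreter.invoke()
tflite_out = interpreter.get_tensor(output_index)
print(tflite_out, model(tf.constant(x)).numpy())
```

For a model this small the converter may leave the weights in floating point, since quantization mainly pays off on larger tensors; on a real model, the size of `tflite_bytes` is a quick way to see the effect of the optimization.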

Another burgeoning area is MLOps (Machine Learning Operations). As more organizations deploy ML models into production, the need for robust processes and tools to manage the entire lifecycle of these models (from data ingestion and training to deployment, monitoring, and retraining) has become critical. TensorFlow Extended (TFX) is a platform specifically designed for building and managing production ML pipelines. MLOps engineers with TensorFlow and TFX skills are in high demand to ensure that AI systems are reliable, scalable, and maintainable.

Other emerging specializations include areas like Responsible AI (focusing on fairness, interpretability, and privacy in AI systems), Quantum Machine Learning (exploring the intersection of quantum computing and ML), and AI for specific scientific domains (e.g., drug discovery, climate modeling). As TensorFlow continues to adapt and expand its capabilities, new career avenues will undoubtedly emerge, requiring continuous learning and adaptation.

Gaining expertise in these cutting-edge specializations can provide a significant competitive advantage in the job market.

Navigating Challenges: Ethical and Technical Considerations

While TensorFlow offers immense power and potential, its application also comes with significant challenges, both ethical and technical. Addressing these thoughtfully is crucial for responsible AI development and successful deployment. Developers, researchers, and organizations must be mindful of the societal impact of their work and the complexities involved in building and maintaining robust AI systems.

The Specter of Bias: Ensuring Fairness in Models

One of the most critical ethical challenges in machine learning, including models built with TensorFlow, is the issue of bias. AI models learn from the data they are trained on. If this data reflects existing societal biases (e.g., regarding race, gender, age, or socioeconomic status), the model will likely learn and even amplify these biases. This can lead to unfair or discriminatory outcomes when the AI system is deployed in the real world, for instance, in loan applications, hiring processes, or even criminal justice.

Addressing bias requires a multi-faceted approach. It starts with careful examination and preprocessing of training data to identify and mitigate potential sources of bias. Techniques might include augmenting datasets to ensure better representation of underrepresented groups or using specialized algorithms designed to promote fairness. The TensorFlow ecosystem includes tools, such as Fairness Indicators and the What-If Tool, that help analyze model behavior and evaluate fairness metrics across groups. However, technology alone is not a complete solution. It also requires diverse development teams, ethical oversight, and ongoing monitoring of deployed systems to ensure they are performing equitably across different demographic groups. The pursuit of fairness in AI is an ongoing research area and a critical responsibility for anyone working with TensorFlow.
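
Fairness evaluation often starts by computing standard metrics separately for each group and comparing them. The sketch below does this by hand with TensorFlow ops rather than with the Fairness Indicators library, assuming TensorFlow 2.x; the labels, predictions, and two-group split are hypothetical toy values. It reports per-group accuracy and false positive rate.

```python
import tensorflow as tf

# Hypothetical binary labels, model predictions, and a group id for each example.
labels = tf.constant([1, 0, 1, 0, 1, 0, 1, 0])
preds = tf.constant([1, 0, 1, 1, 0, 1, 1, 1])
group = tf.constant([0, 0, 0, 0, 1, 1, 1, 1])
NUM_GROUPS = 2

def per_group_sum(values):
    # Sum a per-example quantity within each group id.
    return tf.math.unsorted_segment_sum(values, group, NUM_GROUPS)

correct = tf.cast(tf.equal(labels, preds), tf.float32)
is_negative = tf.cast(tf.equal(labels, 0), tf.float32)
false_positive = tf.cast(
    tf.logical_and(tf.equal(labels, 0), tf.equal(preds, 1)), tf.float32)

examples_per_group = per_group_sum(tf.ones_like(correct))
accuracy = per_group_sum(correct) / examples_per_group
# False positive rate: false positives divided by actual negatives in each group.
fpr = per_group_sum(false_positive) / per_group_sum(is_negative)

print("accuracy per group:", accuracy.numpy())
print("false positive rate per group:", fpr.numpy())
```

Here group 1 has both lower accuracy (0.25 vs. 0.75) and a higher false positive rate (1.0 vs. 0.5), the kind of gap that would warrant a closer look at the training data and the model.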

Understanding the societal implications of AI is paramount. While specific courses on AI ethics with TensorFlow might be niche, a general understanding of ethical AI practices is essential for all practitioners.

From Lab to Real World: Scalability and Deployment Hurdles

Moving a TensorFlow model from a research environment or a local machine to a production system that serves real users at scale presents numerous technical challenges. Scalability is a major concern: a model that performs well on a small, curated dataset might struggle when faced with the volume, velocity, and variety of real-world data. Ensuring that the model can handle increasing loads and maintain performance requires careful engineering of the entire pipeline, from data ingestion and preprocessing to model serving and monitoring. TensorFlow Extended (TFX) provides a suite of tools to help manage these production pipelines.

Deployment itself can be complex. Models need to be optimized for the target environment, whether it's a cloud server, a mobile device (using TensorFlow Lite), or a web browser (using TensorFlow.js). This might involve model compression techniques to reduce size and latency, selecting the right serving infrastructure, and setting up robust monitoring to detect issues like model drift (where the model's performance degrades over time as the underlying data distribution changes). Versioning of models and data, ensuring reproducibility, and managing the computational resources efficiently are also key aspects of successful deployment. Overcoming these hurdles requires a combination of machine learning expertise, software engineering skills, and often, knowledge of cloud computing and MLOps practices.
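
Drift monitoring often compares a feature's distribution at serving time with its distribution in the training data. One widely used score for this is the population stability index (PSI), defined as the sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the training and serving shares in bin i. The sketch below is a generic pure-Python illustration, not a TensorFlow or TFX API; TFX's data validation tooling provides its own drift and skew checks. The bin edges, the toy data, and the small `eps` floor for empty bins are assumptions chosen for the example.

```python
import math


def histogram(values, edges):
    """Share of values in each bin defined by ascending edges.

    Values below the first edge fall in the first bin; values at or above
    the last edge fall in the last bin, giving len(edges) + 1 bins.
    """
    counts = [0] * (len(edges) + 1)
    for v in values:
        i = 0
        while i < len(edges) and v >= edges[i]:
            i += 1
        counts[i] += 1
    return [c / len(values) for c in counts]


def psi(expected, actual, edges, eps=1e-6):
    """Population stability index between training (expected) and serving (actual) samples."""
    total = 0.0
    for e_i, a_i in zip(histogram(expected, edges), histogram(actual, edges)):
        # eps keeps empty bins from producing log(0) or division by zero
        e_i, a_i = max(e_i, eps), max(a_i, eps)
        total += (a_i - e_i) * math.log(a_i / e_i)
    return total


train = list(range(100))           # hypothetical training-time feature values
shifted = [v + 30 for v in train]  # hypothetical serving values after a shift
edges = [25, 50, 75]               # bin boundaries fixed from the training data
print(psi(train, train, edges))    # 0.0: identical distributions
print(psi(train, shifted, edges))
```

A commonly quoted rule of thumb treats a PSI below about 0.1 as stable and above about 0.25 as a significant shift. In practice, thresholds should be calibrated per feature, and an alert is a prompt to investigate or retrain rather than proof that accuracy has dropped.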

The Environmental Footprint: Energy Consumption in Large-Scale Training

Training large-scale deep learning models, especially those with billions of parameters like many state-of-the-art language models or computer vision systems, can be incredibly energy-intensive. The computations involved, often run on powerful GPUs or TPUs for extended periods, consume significant amounts of electricity. This has led to growing concerns about the environmental impact and carbon footprint associated with AI research and development.
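
The scale of the problem can be estimated with a simple back-of-the-envelope calculation. Energy is roughly the number of accelerators times their average power draw times training time, multiplied by the data center's power usage effectiveness (PUE) to account for cooling and other overhead. Emissions are that energy times the carbon intensity of the local grid. Every number below is a hypothetical placeholder, not a measurement of any real model.

```python
def training_footprint(num_accelerators, avg_power_kw, hours, pue, grid_kg_co2e_per_kwh):
    """Rough energy use (kWh) and emissions (kg CO2e) of one training run.

    pue: power usage effectiveness, total facility power divided by IT power (>= 1.0).
    grid_kg_co2e_per_kwh: carbon intensity of the electricity supply.
    """
    energy_kwh = num_accelerators * avg_power_kw * hours * pue
    return energy_kwh, energy_kwh * grid_kg_co2e_per_kwh


# Hypothetical run: 64 accelerators at 0.4 kW average for 10 days, facility PUE 1.1.
energy, emissions = training_footprint(64, 0.4, 24 * 10, 1.1, grid_kg_co2e_per_kwh=0.4)
print(f"{energy:.1f} kWh, {emissions:.1f} kg CO2e")

# The same run on a lower-carbon grid (again a hypothetical intensity).
_, greener = training_footprint(64, 0.4, 24 * 10, 1.1, grid_kg_co2e_per_kwh=0.05)
print(f"{greener:.1f} kg CO2e")
```

Because the terms multiply, reducing any one of them (accelerator count, power draw, training time, facility overhead, or grid carbon intensity) reduces the estimate proportionally.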

Researchers and practitioners are increasingly exploring ways to mitigate this impact. This includes developing more energy-efficient hardware, designing more computationally efficient model architectures, and exploring techniques like model pruning, quantization (reducing the precision of model weights), and knowledge distillation (training smaller models to mimic larger ones). Algorithmic improvements that reduce the amount of data or computation needed to achieve a certain level of performance also play a crucial role. Furthermore, there's a growing awareness of the need to consider the source of energy used for training, with a push towards using renewable energy sources for data centers. While TensorFlow itself provides tools for efficient computation, the broader responsibility lies with the AI community to develop and adopt practices that balance innovation with environmental sustainability. Choosing appropriate model sizes, optimizing training procedures, and being mindful of computational resources are important considerations for TensorFlow users concerned about this issue.
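
Knowledge distillation trains a small student model to match a larger teacher's softened output distribution. The sketch below computes the soft-target part of a common distillation loss: the KL divergence between teacher and student softmax outputs at a temperature T, scaled by T² so that gradient magnitudes stay comparable as T changes. It operates on single logit vectors in plain Python. The temperature and logits are illustrative assumptions. In a real TensorFlow training loop, this term would typically be combined with an ordinary cross-entropy loss on the true labels; Keras provides `tf.keras.losses.KLDivergence` for the divergence itself.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax with temperature; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(p_i * math.log(p_i / q_i) for p_i, q_i in zip(p, q))
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]        # hypothetical teacher logits for one example
student_close = [3.5, 1.2, 0.4]  # student that roughly agrees with the teacher
student_far = [0.5, 1.0, 4.0]    # student that prefers a different class
print(distillation_loss(teacher, student_close))
print(distillation_loss(teacher, student_far))
```

The softened targets carry information about how the teacher ranks the wrong classes. This extra signal is what lets a much smaller student recover a useful share of the teacher's accuracy.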

While specific courses on the environmental impact of TensorFlow are rare, awareness of this issue is growing within the AI community. Staying informed through research papers, industry reports, and publications from organizations such as the World Economic Forum or McKinsey & Company can provide valuable context.

The TensorFlow Ecosystem: Open Source, Community, and Alternatives

TensorFlow is more than just a library; it's a vast ecosystem supported by a vibrant open-source community and complemented by a suite of tools designed to cover the entire machine learning lifecycle. Understanding this ecosystem, including its extensions and its relationship with other frameworks, is key to effectively leveraging TensorFlow.

Expanding Capabilities: TensorFlow Extended (TFX) and TensorFlow Lite

To address the diverse needs of machine learning practitioners, the TensorFlow ecosystem includes specialized tools. TensorFlow Extended (TFX) is an end-to-end platform for deploying production ML pipelines. It provides a set of components and libraries that help automate and manage the entire ML lifecycle, from data ingestion, validation, and preprocessing, to model training, evaluation, analysis, and deployment. TFX is designed for scalability and reliability, enabling teams to build robust and reproducible machine learning systems suitable for real-world applications. Key components include ExampleGen (for data ingestion), StatisticsGen (for data analysis), SchemaGen (for defining data expectations), Transform (for feature engineering), Trainer (for model training), Evaluator (for model analysis), and Pusher (for deploying validated models).
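
A TFX pipeline is a directed acyclic graph. Each component consumes artifacts produced by upstream components, and the orchestrator runs a component only after its inputs are available. The sketch below models that wiring with Python's standard `graphlib` module rather than the TFX API. The dependency map reflects how these components are typically connected; for example, Pusher consumes both the trained model and the Evaluator's validation result. The `is_blessed` threshold check is a hypothetical simplification of Evaluator's validation step.

```python
from graphlib import TopologicalSorter

# Component -> upstream components whose output artifacts it consumes.
# Illustrative model of a typical TFX pipeline's wiring, not TFX code.
PIPELINE = {
    "ExampleGen": set(),
    "StatisticsGen": {"ExampleGen"},           # statistics over ingested examples
    "SchemaGen": {"StatisticsGen"},            # schema inferred from the statistics
    "Transform": {"ExampleGen", "SchemaGen"},  # feature engineering
    "Trainer": {"Transform", "SchemaGen"},     # trains on transformed examples
    "Evaluator": {"ExampleGen", "Trainer"},    # evaluates the trained model
    "Pusher": {"Trainer", "Evaluator"},        # deploys only validated models
}


def execution_order(pipeline):
    """Return an order in which every component runs after its dependencies."""
    return list(TopologicalSorter(pipeline).static_order())


def is_blessed(candidate_metric, baseline_metric, min_improvement=0.0):
    """Hypothetical validation gate: approve the candidate only if it beats the baseline."""
    return candidate_metric >= baseline_metric + min_improvement


print(execution_order(PIPELINE))
# Pusher would skip deployment whenever the gate returns False.
print(is_blessed(candidate_metric=0.91, baseline_metric=0.89))  # True
print(is_blessed(candidate_metric=0.87, baseline_metric=0.89))  # False
```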

TensorFlow Lite is a set of tools that enables on-device machine learning. It allows developers to convert TensorFlow models into a highly optimized format that can run efficiently on mobile phones (Android and iOS), embedded systems, and microcontrollers. This is crucial for applications that require low latency, operate without internet connectivity, or need to preserve user privacy by keeping data on the device. TensorFlow Lite provides a converter to optimize models, an interpreter to run them on various hardware (including CPU, GPU, and specialized accelerators like DSPs and Edge TPUs), and pre-trained models for common tasks.
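
Much of the size reduction TensorFlow Lite achieves comes from storing weights as 8-bit integers plus a scale factor. In TensorFlow Lite's 8-bit scheme, a real value is represented as scale * (q - zero_point). Weights use a symmetric range with the zero point fixed at 0. The pure-Python sketch below applies that idea to one hypothetical weight tensor with a single per-tensor scale. The actual converter is enabled, for example, by setting `converter.optimizations = [tf.lite.Optimize.DEFAULT]` on a `tf.lite.TFLiteConverter`. It can use per-channel scales and handles activations differently, so treat this only as an illustration of the arithmetic.

```python
def quantize_symmetric_int8(weights):
    """Quantize floats to int8 codes in [-127, 127] with one scale and zero point 0."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # real_value ~= scale * (q - zero_point), with zero_point = 0 here
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the int8 codes."""
    return [v * scale for v in q]


weights = [0.52, -1.27, 0.003, 0.9, -0.25]  # hypothetical weight tensor
q, scale = quantize_symmetric_int8(weights)
restored = dequantize(q, scale)
print(q)  # [52, -127, 0, 90, -25]
print(max(abs(w - r) for w, r in zip(weights, restored)))
```

Each weight now needs one byte instead of the four used by float32, roughly a 4x reduction in storage. The cost is a rounding error of at most half the scale per weight.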

These extensions significantly broaden TensorFlow's applicability, from large-scale cloud-based training and serving with TFX to efficient on-device inference with TensorFlow Lite.

The Power of Community: Contributions and Governance

TensorFlow's success and rapid evolution are significantly driven by its large and active open-source community. Developers, researchers, and enthusiasts from around the world contribute to the TensorFlow codebase, report issues, write documentation, create tutorials, and share pre-trained models and best practices. This collaborative effort ensures that TensorFlow remains at the cutting edge of machine learning research and addresses the practical needs of its diverse user base.

Google continues to be the primary steward of the TensorFlow project, guiding its overall direction and development. However, the governance model encourages community participation. Contributions are typically made through platforms like GitHub, where code changes are reviewed, and discussions about new features and improvements take place. Special Interest Groups (SIGs) focus on specific areas within the TensorFlow ecosystem, such as TFX, networking, or I/O, allowing for more focused community engagement. This open and collaborative model has been instrumental in TensorFlow's widespread adoption and its ability to adapt to the rapidly changing landscape of AI.

The extensive documentation, numerous online forums (like Stack Overflow and the official TensorFlow forum), and a wealth of community-created content make it easier for users to learn TensorFlow, troubleshoot problems, and find solutions to complex challenges.

The Broader Landscape: Competing Frameworks like PyTorch

While TensorFlow is a dominant force in the machine learning world, it's not the only framework available. Its most prominent contemporary is PyTorch, another open-source machine learning library, originally developed by Meta AI (then Facebook AI Research) and now governed by the PyTorch Foundation under the Linux Foundation. Both TensorFlow and PyTorch offer powerful capabilities for building and training neural networks, and each has its own strengths and dedicated user base.

Historically, one of the main distinctions was their approach to graph definition: TensorFlow traditionally used static computation graphs (defined once, then executed), while PyTorch favored dynamic graphs (defined and executed on the fly, offering more flexibility during development and debugging). However, TensorFlow 2.x adopted eager execution by default while still compiling graphs on demand through tf.function, and PyTorch added capabilities for graph capture and export (e.g., via TorchScript), so this distinction has become less pronounced.
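
In TensorFlow 2.x the two styles coexist. Operations run eagerly by default, and wrapping a Python function in `tf.function` traces it into a graph that TensorFlow can optimize and export. The minimal example below assumes a TensorFlow 2.x installation. It runs the same function both ways and then lists the operation types recorded in the traced graph.

```python
import tensorflow as tf


def scaled_dot(x, y):
    # An ordinary Python function built from TensorFlow ops.
    return tf.reduce_sum(x * y) * 2.0


x = tf.constant([1.0, 2.0, 3.0])
y = tf.constant([4.0, 5.0, 6.0])

# Eager execution (the TF 2.x default): ops run immediately and return concrete values.
print(tf.executing_eagerly())  # True
eager_result = scaled_dot(x, y)
print(eager_result.numpy())    # 64.0

# tf.function traces the Python code into a graph for each new input signature;
# the graph can then be optimized, reused, and exported (e.g. in a SavedModel).
graph_fn = tf.function(scaled_dot)
graph_result = graph_fn(x, y)
print(graph_result.numpy())    # 64.0

# Inspect the operations captured in the traced graph.
concrete = graph_fn.get_concrete_function(
    tf.TensorSpec(shape=[3], dtype=tf.float32),
    tf.TensorSpec(shape=[3], dtype=tf.float32),
)
print([op.type for op in concrete.graph.get_operations()])
```

Both calls produce the same value. The difference is that the traced version exists as a graph object, which is what deployment tools such as TensorFlow Serving and TensorFlow Lite consume.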

PyTorch is often lauded for its "Pythonic" feel and ease of use, particularly in research settings where rapid prototyping and experimentation are common. TensorFlow, on the other hand, has historically been recognized for its robust deployment tools (like TensorFlow Serving and TFX) and its scalability for large-scale production environments. TensorFlow also has strong support for mobile and edge deployment through TensorFlow Lite and web deployment via TensorFlow.js. The choice between TensorFlow and PyTorch often comes down to specific project requirements, team familiarity, ecosystem support for particular tasks, or even personal preference. Many organizations and researchers even use both frameworks for different purposes. The competition and cross-pollination of ideas between these major frameworks ultimately benefit the entire machine learning community by driving innovation and improvement.

Future Trends and Innovations in TensorFlow

The field of artificial intelligence is characterized by rapid innovation, and TensorFlow is continually evolving to incorporate and drive these advancements. Several exciting trends are shaping the future of TensorFlow, promising to expand its capabilities and make AI more powerful and accessible.

The Quantum Leap: Integrating Quantum Machine Learning

One of the most forward-looking areas is the integration of quantum computing with machine learning, often referred to as Quantum Machine Learning (QML). While still in its nascent stages, QML holds the potential to solve certain types of problems that are currently intractable for classical computers. TensorFlow Quantum (TFQ) is an open-source library for prototyping quantum ML models. It provides tools to build and train quantum models by integrating TensorFlow with quantum computing frameworks like Cirq.

TFQ allows researchers to construct hybrid quantum-classical models, where parameterized quantum circuits can be used as Keras layers within a larger TensorFlow computation. This enables the exploration of how quantum processors might enhance machine learning tasks such as classification, generation, and reinforcement learning. While practical, large-scale quantum computers are still some way off, TensorFlow's early investment in this area positions it to be a key player as quantum hardware matures. This research could lead to breakthroughs in drug discovery, materials science, financial modeling, and optimization problems.
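
A quantum circuit's place inside a gradient-based training loop can be sketched without quantum hardware. The example below simulates a single qubit in plain Python. The qubit starts in |0⟩, a rotation RY(θ) is applied, and the measured expectation is ⟨Z⟩ = cos θ. Gradients come from the parameter-shift rule, ∂⟨Z⟩/∂θ = [f(θ + π/2) - f(θ - π/2)] / 2, which needs only two extra circuit evaluations; TFQ offers a parameter-shift differentiator among its options. This is an illustrative simulation, not TFQ or Cirq code. The learning rate, the starting angle, and the goal of driving ⟨Z⟩ toward -1 are assumptions.

```python
import math


def expectation_z(theta):
    """<Z> after applying RY(theta) to |0>, computed from the simulated state vector."""
    amp0, amp1 = math.cos(theta / 2), math.sin(theta / 2)  # RY(theta)|0>
    return amp0 ** 2 - amp1 ** 2  # P(0) - P(1), which equals cos(theta)


def parameter_shift_grad(theta):
    """Exact gradient of <Z> from two shifted circuit evaluations."""
    shift = math.pi / 2
    return (expectation_z(theta + shift) - expectation_z(theta - shift)) / 2


# Gradient descent on the single circuit parameter to minimize <Z> (minimum -1 at theta = pi).
theta, learning_rate = 0.1, 0.5
for _ in range(50):
    theta -= learning_rate * parameter_shift_grad(theta)

print(round(theta, 4), round(expectation_z(theta), 4))
```

On real hardware, each call to `expectation_z` would be an estimate from repeated measurements rather than an exact value. That noise is one reason hybrid models are still mostly prototyped in simulation.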

Making AI Accessible: AutoML and the Democratization of AI

Automated Machine Learning (AutoML) aims to automate the end-to-end process of applying machine learning to real-world problems. This includes tasks like data preprocessing, feature engineering, model selection, hyperparameter tuning, and even model architecture design. The TensorFlow ecosystem offers AutoML-oriented tools, such as KerasTuner for automated hyperparameter search, that make it easier for individuals and organizations without deep machine learning expertise to build high-quality custom models.

Tools like Google's Vertex AI AutoML, which leverages TensorFlow, allow users to train models on their own data with minimal coding, often through a graphical user interface. The goal of AutoML is to democratize AI, making its power accessible to a broader audience and reducing the time and expertise required to develop effective ML solutions. As AutoML techniques become more sophisticated, TensorFlow will likely play a crucial role in providing the underlying engine for these automated systems, enabling faster iteration and deployment of AI applications across various industries.
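
At its simplest, the hyperparameter-tuning part of AutoML is a search loop: propose a configuration, train and score a model, and keep the best. The sketch below shows random search, one of the simplest strategies; libraries such as KerasTuner implement it alongside more sophisticated ones for Keras models. To keep the example self-contained, `validation_score` is a hypothetical stand-in for training a TensorFlow model and measuring its validation accuracy. The search ranges are also assumptions.

```python
import math
import random


def validation_score(learning_rate, num_layers):
    """Hypothetical stand-in for 'train a model, return validation accuracy'.

    Peaks (score 1.0) at learning_rate=1e-3 and num_layers=3.
    """
    lr_penalty = (math.log10(learning_rate) + 3) ** 2
    layer_penalty = (num_layers - 3) ** 2
    return 1.0 / (1.0 + lr_penalty + 0.5 * layer_penalty)


def random_search(num_trials, seed=0):
    """Sample configurations at random and keep the best-scoring one."""
    rng = random.Random(seed)
    best_score, best_params = float("-inf"), None
    for _ in range(num_trials):
        params = {
            "learning_rate": 10 ** rng.uniform(-5, -1),  # log-uniform in [1e-5, 1e-1]
            "num_layers": rng.randint(1, 6),
        }
        score = validation_score(**params)
        if score > best_score:
            best_score, best_params = score, params
    return best_score, best_params


score, params = random_search(num_trials=30)
print(round(score, 3), params)
```

The learning rate is sampled on a log scale, a common choice because useful values span several orders of magnitude. Production AutoML systems replace this loop with smarter strategies such as Bayesian optimization or neural architecture search, but the propose-evaluate-keep structure stays the same.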

Intelligence at the Fringes: Edge Computing and IoT Applications

Edge computing, which involves processing data closer to where it is generated rather than in a centralized cloud, is a rapidly growing trend, particularly with the proliferation of Internet of Things (IoT) devices. TensorFlow Lite is specifically designed to enable machine learning inference on these edge devices, which often have limited computational power, memory, and energy.

The ability to run AI models directly on devices like smartphones, wearables, industrial sensors, and autonomous vehicles offers several advantages: lower latency (as data doesn't need to travel to the cloud and back), reduced bandwidth consumption, enhanced privacy (as sensitive data can be processed locally), and offline functionality. Future innovations in TensorFlow will likely focus on further optimizing models for edge deployment, improving tools for on-device training such as federated learning (in which models are trained across many devices without centralizing the raw data), and expanding support for a wider range of edge hardware. This will unlock a new wave of intelligent applications in areas like smart homes, precision agriculture, predictive maintenance, and personalized healthcare, where real-time, on-device intelligence is critical.
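
In federated learning, devices train locally and send only model updates to a server, which aggregates them; the raw data never leaves the device. The sketch below shows the aggregation step of federated averaging (FedAvg), in which each client's weights count in proportion to the number of local examples it trained on. Weights are flattened to plain Python lists, and the client values are hypothetical. Within the TensorFlow ecosystem, TensorFlow Federated provides building blocks for this kind of computation.

```python
def federated_average(client_updates):
    """Example-weighted average of client model weights (FedAvg aggregation step).

    client_updates: list of (weights, num_examples) pairs, one per device, where
    weights is a flat list of floats produced by that device's local training.
    """
    total_examples = sum(n for _, n in client_updates)
    num_params = len(client_updates[0][0])
    global_weights = [0.0] * num_params
    for weights, n in client_updates:
        if len(weights) != num_params:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            global_weights[i] += w * n / total_examples
    return global_weights


# Hypothetical round: two phones with different amounts of local data.
updates = [([1.0, 2.0], 10), ([3.0, 4.0], 30)]
print(federated_average(updates))  # [2.5, 3.5]
```

The second client holds three times as much data, so the aggregated model lands three quarters of the way toward its weights. The server then sends the new global weights back to the devices for the next round of local training.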

Frequently Asked Questions (Career Focus)

Navigating a career in TensorFlow and machine learning can bring up many questions, especially for those new to the field or considering a transition. Here are some common queries with a career-oriented focus.

What are the essential skills for TensorFlow roles in 2025?

Beyond core TensorFlow proficiency, several skills are essential for success. Strong Python programming skills are fundamental, as Python is the primary language for TensorFlow. A solid understanding of machine learning concepts (e.g., supervised and unsupervised learning, model evaluation, overfitting) and deep learning architectures (e.g., CNNs for image data, RNNs/LSTMs for sequential data, Transformers for NLP) is crucial. Experience with data preprocessing and manipulation libraries like Pandas and NumPy is also expected.

Increasingly, employers look for skills in MLOps – the practices for deploying and maintaining ML models in production. This includes familiarity with tools like Docker, Kubernetes, and CI/CD pipelines, as well as TensorFlow-specific tools like TFX. Cloud computing skills (AWS, Google Cloud, Azure) are highly valuable, as many ML workloads are deployed in the cloud. Soft skills, such as problem-solving, communication, and the ability to work in a team, are also critical. Finally, a continuous learning mindset is vital, as the field evolves rapidly.

Freelancing vs. Corporate: Which path is better for a TensorFlow developer?

Both freelancing and corporate employment offer distinct advantages and disadvantages for TensorFlow developers, and the "better" path depends on individual preferences, career goals, and risk tolerance. A corporate role typically offers more stability, a regular salary, benefits, structured career progression, and the opportunity to work on large-scale, long-term projects within a team. You might gain deep expertise in a specific industry or application area and have access to significant resources and mentorship.

Freelancing, on the other hand, offers greater flexibility in terms of work hours, location, and project choice. Freelancers often work on a variety of projects for different clients, which can lead to broader experience. There's also the potential for higher earning rates, although income can be less predictable. However, freelancing requires strong self-discipline, business development skills (finding clients, negotiating contracts), and managing your own finances and benefits. For TensorFlow developers, freelance opportunities might involve building custom models for small businesses, consulting on AI strategy, or developing specific components of larger projects. Some experienced developers may transition to freelancing after gaining substantial experience in a corporate setting.

Certifications vs. Project Portfolios: What holds more weight with employers?

While TensorFlow certifications (like the TensorFlow Developer Certificate, a program Google has since closed to new candidates) can demonstrate a foundational level of knowledge and commitment to learning, a strong portfolio of practical projects generally holds more weight with employers. Certifications can be a good way to structure your learning and validate your understanding of core concepts, potentially helping your resume stand out, especially for entry-level positions.

However, a project portfolio showcases your ability to apply your TensorFlow skills to solve real-world or near-real-world problems. This is what employers are ultimately looking for. Projects can include personal initiatives, contributions to open-source projects, solutions to Kaggle competitions, or capstone projects from online courses. A well-documented portfolio that demonstrates your problem-solving process, coding skills, and ability to achieve tangible results is often the most compelling evidence of your capabilities. Ideally, a combination of relevant certifications and a robust project portfolio can provide the strongest impression.

How can I transition into TensorFlow roles from a traditional software engineering background?

Transitioning from traditional software engineering to a TensorFlow-focused role is a common and achievable path. Your existing software engineering skills (e.g., coding proficiency, understanding of data structures and algorithms, version control, testing, and system design) provide a strong foundation. The key is to supplement this with specialized machine learning knowledge.

Start by learning the fundamentals of machine learning and deep learning. Online courses, like those available through OpenCourser's Artificial Intelligence category, can be invaluable. Focus on understanding core concepts before diving deep into TensorFlow's syntax. Then, learn TensorFlow itself, starting with basic tutorials and gradually moving to more complex examples. Practice by implementing classic machine learning models and then deep learning architectures. Build a portfolio of ML projects, perhaps by reframing some of your software engineering projects with an ML component or by tackling new problems that interest you. Leverage your software engineering background by focusing on MLOps aspects, such as model deployment, scalability, and maintainability, where your existing skills are highly transferable. Networking with professionals in the ML field and highlighting your transferable skills on your resume and during interviews will also be beneficial.

What are the global job market trends for TensorFlow skills in 2025?

The demand for TensorFlow skills is expected to remain strong globally in 2025 and beyond, driven by the increasing adoption of AI and machine learning across virtually all industries. Sectors like technology, healthcare, finance, automotive, retail, and manufacturing are actively hiring professionals with TensorFlow expertise. According to data from the U.S. Bureau of Labor Statistics on computer and information technology occupations, the overall field is projected to grow much faster than the average for all occupations, and roles involving AI and machine learning are significant contributors to this growth.

Specific roles in demand include Machine Learning Engineers, Data Scientists, AI Researchers, AI Architects, and MLOps Engineers. There's also a growing need for specialists in areas like Natural Language Processing (NLP), Computer Vision, and Reinforcement Learning, where TensorFlow is a key tool. Geographically, tech hubs in North America, Europe, and Asia continue to be major centers for TensorFlow-related jobs, but remote work opportunities are also becoming more prevalent, expanding the geographic reach for skilled professionals. Staying updated with the latest versions of TensorFlow and its ecosystem components (like TFX and TensorFlow Lite) will be important for maintaining a competitive edge in the job market.

How might AI regulations impact careers in TensorFlow and machine learning?

The evolving landscape of AI regulation is likely to have a significant impact on careers in TensorFlow and machine learning. Governments and international bodies worldwide are increasingly looking to establish frameworks for responsible AI development and deployment to address concerns related to bias, privacy, transparency, and accountability. This could lead to new roles and responsibilities for ML professionals.

For TensorFlow developers, this means a greater emphasis on building models that are not only accurate but also fair, interpretable, and robust. There might be increased demand for skills in areas like AI ethics, bias detection and mitigation, explainable AI (XAI), and privacy-preserving machine learning techniques. Professionals may need to become adept at documenting their models and data thoroughly to comply with regulatory requirements. Roles such as AI Ethicist, AI Auditor, or Regulatory Compliance Specialist for AI systems could become more common. While regulations might introduce new complexities, they are also likely to foster greater trust in AI systems, potentially leading to wider adoption and, consequently, more job opportunities for those equipped to navigate this regulated environment. Staying informed about emerging AI policies and ethical best practices will be crucial for career longevity and relevance in the field.

TensorFlow is a powerful and versatile tool that sits at the heart of many advancements in artificial intelligence and machine learning. Whether you are a student embarking on your learning journey, a professional seeking to pivot or advance your career, or a researcher pushing the boundaries of innovation, mastering TensorFlow can open up a world of opportunities. The journey requires dedication, continuous learning, and a willingness to tackle complex challenges. However, with the wealth of resources available, including the extensive catalog of courses and books on OpenCourser, and a supportive global community, the path to proficiency and a rewarding career in this exciting field is more accessible than ever. Embrace the learning process, build practical skills, and you can contribute to the transformative power of TensorFlow.