Random Variables

Understanding Random Variables: A Foundation for Data-Driven Careers

A random variable is a fundamental concept in probability and statistics. Informally, it is a variable whose value depends on the outcome of a random experiment; more precisely, it is a function that assigns a numerical value to each of the experiment's outcomes. Think of it as a way to quantify uncertainty. For instance, if you flip a coin, the outcome is either heads or tails. A random variable could assign 1 to heads and 0 to tails, transforming these qualitative outcomes into numbers we can analyze. This ability to convert unpredictable results into measurable data is what makes random variables so powerful in numerous fields.

Working with random variables can be intellectually stimulating. It involves unraveling patterns in data, building models to predict future events, and understanding the likelihood of different scenarios. This field is at the heart of data analysis, enabling us to make sense of the complex, data-rich world around us. From forecasting stock market fluctuations to assessing the effectiveness of new medical treatments, random variables provide the mathematical language to describe and manage uncertainty.

Introduction to Random Variables

Random variables are a cornerstone of probability theory and statistics. They serve as a bridge between the abstract world of probability and the concrete analysis of real-world data. Essentially, a random variable is a rule that assigns a numerical value to each possible outcome of a random phenomenon. This allows us to use mathematical tools to study and understand events that involve chance.

Definition and Basic Properties of Random Variables

Formally, a random variable is a function that maps the outcomes of a random experiment to numerical values. For example, if we roll a standard six-sided die, the sample space (the set of all possible outcomes) is {1, 2, 3, 4, 5, 6}. A random variable, often denoted by a capital letter like X, can represent the outcome of this roll. So, X could take on any of the values from 1 to 6. Each value of the random variable has an associated probability.

It's important to distinguish a random variable from a variable in an algebraic equation. An algebraic variable typically has a single, fixed unknown value that we can solve for. In contrast, a random variable can take on a set of possible values, each with a certain probability. The behavior of a random variable is described by its probability distribution, which specifies the probability of each possible value (or range of values) that the random variable can take.

Random variables must be measurable, meaning we can determine the probability that they fall within a certain range of values. They are typically real-valued. The key idea is that random variables allow us to move from describing events in words to analyzing them using numbers and mathematical functions.
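The die example above can be sketched in code. This is a minimal illustration rather than a formal construction: the names `sample_space`, `X`, `is_even`, and `distribution` are hypothetical, and the die is assumed to be fair.

```python
from fractions import Fraction

# Sample space of one roll of a six-sided die (assumed fair for illustration).
sample_space = [1, 2, 3, 4, 5, 6]

def X(outcome):
    """Random variable: the face value of the roll."""
    return outcome

def is_even(outcome):
    """A second random variable on the same sample space: 1 if even, else 0."""
    return 1 if outcome % 2 == 0 else 0

def distribution(rv, outcomes):
    """Probability of each value of rv when all outcomes are equally likely."""
    p = Fraction(1, len(outcomes))
    dist = {}
    for outcome in outcomes:
        value = rv(outcome)
        dist[value] = dist.get(value, 0) + p
    return dist

print(distribution(X, sample_space))
print(distribution(is_even, sample_space))
```

Both `X` and `is_even` are functions on the same outcomes; they simply attach different numbers to them, which is why they have different distributions.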

Discrete vs. Continuous Random Variables

Random variables are broadly classified into two types: discrete and continuous. Understanding this distinction is crucial because it dictates the types of analyses and probability functions we use.

A discrete random variable is one that can take on a countable number of distinct values. The values are often integers, though they need not be. Think of things you can count. For example:

- The number of heads when flipping a coin three times (possible values: 0, 1, 2, 3).

- The number of cars sold at a dealership in a day (possible values: 0, 1, 2, ...).

- The number of defective items in a batch from a factory.

A continuous random variable, on the other hand, can take on any value within a given range or interval. This means there are uncountably many possible values, too many to list one by one. Think of things you measure. For example:

- The height of a person (e.g., could be 1.75 meters, 1.751 meters, 1.7512 meters, and so on, within a certain range).

- The temperature of a room.

- The time it takes for a computer to complete a task.

- The return on a stock.

The type of random variable (discrete or continuous) determines how we describe its probability distribution. For discrete random variables, we use a probability mass function (PMF), and for continuous random variables, we use a probability density function (PDF).
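As a small worked example of a PMF, the sketch below enumerates the coin-flip case from the discrete list above (number of heads in three flips). It assumes a fair coin; the variable names are illustrative only.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# All 8 equally likely sequences of three fair coin flips.
outcomes = list(product("HT", repeat=3))

# Count how many sequences produce each number of heads.
counts = Counter(seq.count("H") for seq in outcomes)

# PMF: probability of each possible number of heads (0, 1, 2, 3).
pmf_heads = {k: Fraction(c, len(outcomes)) for k, c in sorted(counts.items())}
print(pmf_heads)
```

The resulting probabilities are 1/8, 3/8, 3/8, and 1/8 for 0, 1, 2, and 3 heads respectively.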

Role in Probability Theory and Statistics

Random variables are the building blocks of probability theory and statistical inference. They allow us to quantify outcomes of random events and provide a framework for analyzing data and making predictions. By assigning numerical values to outcomes, we can apply mathematical operations and statistical methods to understand patterns, test hypotheses, and model real-world phenomena.

In probability theory, random variables are used to define probability distributions. These distributions describe the likelihood of each possible outcome of a random event. For instance, the probability distribution of a fair die roll assigns a probability of 1/6 to each of the numbers 1 through 6.

In statistics, random variables are essential for making inferences about populations based on samples. For example, if we want to know the average height of all adults in a country, we might take a sample of adults, measure their heights (a continuous random variable), and then use statistical techniques to estimate the average height of the entire population. Random variables are also fundamental to hypothesis testing, where we assess the evidence for or against a particular claim about a population.

Moreover, random variables are crucial in fields like econometrics and regression analysis, where they help determine statistical relationships between different factors. They enable risk analysts to estimate the probability of adverse events and inform decision-making in various domains, from finance to engineering. Essentially, random variables provide the tools to understand and navigate a world full of uncertainty.

Probability Distributions of Random Variables

Once we've defined a random variable, the next step is to understand its behavior. This is where probability distributions come in. A probability distribution describes how the probabilities are distributed over the possible values of the random variable. It tells us the likelihood of observing each specific outcome or a value within a certain range.

Common Distributions (Normal, Binomial, Poisson)

Several probability distributions appear frequently in practice due to their ability to model a wide variety of real-world phenomena. Among the most common are the Normal, Binomial, and Poisson distributions.

The Normal Distribution, often called the "bell curve," is perhaps the most well-known continuous distribution. It's characterized by its symmetric, bell-like shape. Many natural phenomena, such as human height, measurement errors, and standardized test scores, tend to follow an approximately normal distribution. Its importance also stems from the Central Limit Theorem, which states that the suitably standardized sum (or average) of a large number of independent and identically distributed random variables with finite variance is approximately normally distributed, regardless of the shape of the original distribution.
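The Central Limit Theorem can be explored with a short simulation. The sketch below is only an illustration under stated assumptions: it averages draws from a Uniform(0, 1) distribution (a choice made here, not taken from the text), uses a fixed seed for repeatability, and the function name `sample_means` is hypothetical.

```python
import random
import statistics

def sample_means(n, trials, seed=0):
    """Return `trials` averages, each taken over n draws from Uniform(0, 1)."""
    rng = random.Random(seed)
    return [statistics.fmean(rng.random() for _ in range(n)) for _ in range(trials)]

means = sample_means(n=30, trials=5000)

# Theory: the averages have mean 0.5 and standard deviation
# sqrt(1/12) / sqrt(30), which is about 0.0527.
print(statistics.fmean(means), statistics.stdev(means))

# For a normal distribution, roughly 68% of values lie within one
# standard deviation of the mean; the averages should come close to that.
center, spread = statistics.fmean(means), statistics.stdev(means)
share_within_one_sd = sum(abs(m - center) <= spread for m in means) / len(means)
print(share_within_one_sd)
```

Although each individual draw is uniform rather than bell-shaped, a histogram of `means` would look close to a normal curve.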

The Binomial Distribution is a discrete distribution that models the number of "successes" in a fixed number of independent trials, where each trial has only two possible outcomes (e.g., success/failure, heads/tails). For example, it can be used to calculate the probability of getting exactly 7 heads in 10 coin flips. Key parameters for the binomial distribution are the number of trials (n) and the probability of success on a single trial (p).
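The coin-flip probability mentioned above can be computed directly from the standard binomial formula, P(X = k) = C(n, k) p^k (1 - p)^(n - k). The sketch below assumes a fair coin (p = 0.5); the function name `binomial_pmf` is illustrative.

```python
from math import comb

def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

# Exactly 7 heads in 10 flips of a fair coin: 120 / 1024.
prob_seven_heads = binomial_pmf(7, 10, 0.5)
print(prob_seven_heads)
```

The result is about 0.117, so exactly 7 heads occurs in a little under 12% of such experiments.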

The Poisson Distribution is another discrete distribution. It models the number of times an event occurs in a fixed interval of time or space, given the average rate of occurrence and assuming that events occur independently. For example, it can describe the number of customer arrivals at a service desk per hour, or the number of typos on a page of a book. The key parameter is the average rate (lambda, λ).
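The Poisson probabilities follow the standard formula P(X = k) = λ^k e^(-λ) / k!. As a sketch, suppose (purely as an assumed figure) that a service desk sees an average of 4 arrivals per hour; the function name `poisson_pmf` is illustrative.

```python
from math import exp, factorial

def poisson_pmf(k, lam):
    """Probability of exactly k events when the average rate is lam."""
    return lam**k * exp(-lam) / factorial(k)

# Assumed average rate of 4 arrivals per hour (illustrative value only).
rate = 4.0
prob_two_arrivals = poisson_pmf(2, rate)
prob_at_most_two = sum(poisson_pmf(k, rate) for k in range(3))
print(prob_two_arrivals, prob_at_most_two)
```

Summing the PMF over a range of counts, as in `prob_at_most_two`, gives the probability of observing any count in that range.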

Understanding these and other common distributions is essential for anyone working with data, as they provide the foundation for statistical modeling and inference.

Probability Density/Mass Functions

The way we define the probability distribution mathematically depends on whether the random variable is discrete or continuous.

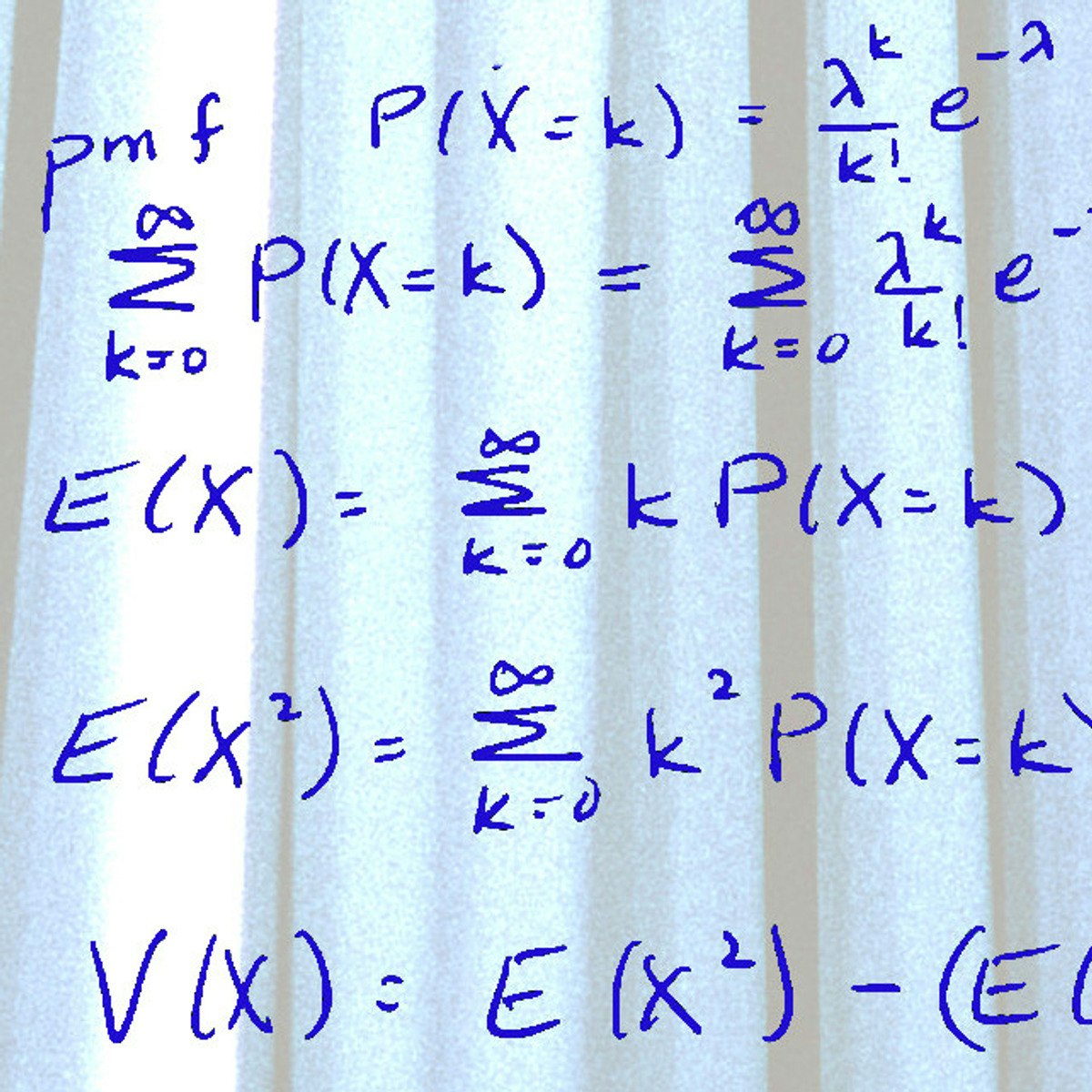

For a discrete random variable, its probability distribution is described by a probability mass function (PMF). The PMF, often denoted as P(X=x) or f(x), gives the probability that the random variable X takes on a specific value x. There are two main conditions for a valid PMF:

- The probability of each value must be non-negative (i.e., f(x) ≥ 0 for all x).

- The sum of the probabilities for all possible values of the random variable must equal one (i.e., Σ f(x) = 1 over all possible x).

For example, for a fair six-sided die, the PMF would assign a probability of 1/6 to each integer from 1 to 6, and 0 to any other value.

For a continuous random variable, its probability distribution is described by a probability density function (PDF). Unlike a PMF, the PDF, also often denoted as f(x), does not directly give the probability of the random variable taking on a specific value. In fact, for a continuous random variable, the probability of it taking on any single exact value is zero, because probability corresponds to area under the PDF and a single point has zero width. Instead, the PDF describes the relative likelihood of the random variable falling near a given value. The probability that a continuous random variable lies within a given interval is found by calculating the area under the curve of the PDF over that interval, which is done using integration. Similar to the PMF, a valid PDF must satisfy two conditions:

- The function must be non-negative for all values of x (i.e., f(x) ≥ 0 for all x).

- The total area under the curve of the PDF must equal one (i.e., ∫ f(x)dx = 1 over all possible x).
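The validity conditions for PMFs and PDFs can be checked numerically. The sketch below is an illustration under assumptions: it uses the fair-die PMF from the text, an exponential density with rate 1 as an example PDF (chosen here for convenience), and a simple midpoint rule in place of exact integration. All function names are hypothetical.

```python
import math

def is_valid_pmf(pmf, tol=1e-9):
    """Check non-negativity and that the probabilities sum to one."""
    return all(p >= 0 for p in pmf.values()) and abs(sum(pmf.values()) - 1) < tol

def integrate(f, a, b, steps=100_000):
    """Approximate the integral of f over [a, b] with the midpoint rule."""
    width = (b - a) / steps
    return sum(f(a + (i + 0.5) * width) for i in range(steps)) * width

def exponential_pdf(x, rate=1.0):
    """Density of an exponential distribution (example PDF, rate assumed)."""
    return rate * math.exp(-rate * x) if x >= 0 else 0.0

die_pmf = {face: 1 / 6 for face in range(1, 7)}
print(is_valid_pmf(die_pmf))

# Total area under the PDF; the upper limit 50 stands in for infinity.
total_area = integrate(exponential_pdf, 0.0, 50.0)

# P(1 <= X <= 2) as the area over that interval; exact value is e^-1 - e^-2.
prob_between = integrate(exponential_pdf, 1.0, 2.0)
print(total_area, prob_between)
```

Note that `exponential_pdf(0.0)` equals 1.0, which is a density value and not a probability; only areas under the curve are probabilities.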

Understanding PMFs and PDFs is fundamental to working with random variables and their distributions.

Expectation, Variance, and Moments

Beyond describing the entire distribution, we often want to summarize key characteristics of a random variable. The most common summary measures are its expectation (or mean) and variance.

The expectation (or expected value or mean) of a random variable, denoted as E(X) or μ, represents its average value in the long run. If you were to repeat an experiment many times and observe the values of the random variable, the average of these observed values would approach the expectation. For a discrete random variable, the expectation is calculated as a weighted average of its possible values, where the weights are the probabilities of each value: E(X) = Σ [x * P(X=x)]. For a continuous random variable, it's calculated by integrating the product of each possible value and its probability density function: E(X) = ∫ [x * f(x)]dx.

The variance of a random variable, denoted as Var(X) or σ², measures the spread or dispersion of its values around the mean. A small variance indicates that the values tend to be clustered closely around the mean, while a large variance indicates that the values are more spread out. The variance is defined as the expected value of the squared difference between the random variable and its mean: Var(X) = E[(X - μ)²]. An equivalent and often easier formula for calculation is Var(X) = E(X²) - [E(X)]². The standard deviation, denoted as σ, is simply the square root of the variance and provides a measure of spread in the same units as the random variable itself.
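To make the formulas for expectation and variance concrete, the sketch below applies them to a fair six-sided die using exact fractions. The helper name `expectation` is illustrative; it computes E[g(X)] for a discrete PMF.

```python
from fractions import Fraction

# PMF of a fair six-sided die.
die_pmf = {face: Fraction(1, 6) for face in range(1, 7)}

def expectation(pmf, g=lambda x: x):
    """E[g(X)]: the sum of g(x) * P(X = x) over all possible x."""
    return sum(g(x) * p for x, p in pmf.items())

mean = expectation(die_pmf)  # E(X) = 7/2

# Variance from the definition E[(X - mu)^2] ...
var_definition = expectation(die_pmf, lambda x: (x - mean) ** 2)

# ... and from the shortcut E(X^2) - [E(X)]^2.
var_shortcut = expectation(die_pmf, lambda x: x**2) - mean**2

std_dev = float(var_definition) ** 0.5
print(mean, var_definition, var_shortcut, std_dev)
```

Both variance formulas give 35/12 (about 2.92), and the standard deviation is about 1.71, expressed in the same units as the die faces.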

Moments are a more general set of summary statistics. The k-th moment of a random variable X about a point c is defined as E[(X - c)^k]. When c = 0, these are called moments about the origin (e.g., E(X) is the first moment about the origin, E(X²) is the second moment about the origin). When c = μ (the mean), these are called central moments (e.g., variance is the second central moment). Higher moments provide more detailed information about the shape of the distribution, such as its skewness (asymmetry, based on the third central moment) and kurtosis (tail heaviness, often loosely described as peakedness, based on the fourth central moment).
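The definition of a moment about a point c translates almost directly into code. The sketch below uses a fair die as an assumed example and the common standardized definitions of skewness and kurtosis (third and fourth central moments divided by σ³ and σ⁴); the function name `moment` is illustrative.

```python
from fractions import Fraction

def moment(pmf, k, c=0):
    """k-th moment of a discrete random variable about the point c."""
    return sum((x - c) ** k * p for x, p in pmf.items())

die_pmf = {face: Fraction(1, 6) for face in range(1, 7)}

mu = moment(die_pmf, 1)            # first moment about the origin
variance = moment(die_pmf, 2, c=mu)  # second central moment
third = moment(die_pmf, 3, c=mu)     # zero here: the distribution is symmetric
fourth = moment(die_pmf, 4, c=mu)

skewness = float(third) / float(variance) ** 1.5
kurtosis = float(fourth) / float(variance) ** 2
print(mu, variance, skewness, kurtosis)
```

The zero skewness reflects the symmetry of the die's distribution around 3.5, and the kurtosis of about 1.73 is well below the normal distribution's value of 3, consistent with a flat distribution that has no tails.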

Applications in Finance and Economics

Random variables are indispensable tools in the fields of finance and economics, providing the mathematical framework to model and understand uncertainty, risk, and value. From predicting market movements to assessing investment opportunities, the principles of probability and random variables underpin many critical financial and economic analyses.

Modeling Market Volatility and Asset Prices

Financial markets are inherently uncertain, with asset prices and market conditions fluctuating in ways that are difficult to predict with certainty. Random variables are used extensively to model this volatility and the behavior of asset prices. For example, the future price of a stock can be treated as a random variable, with a probability distribution representing the likelihood of different future prices.

Stochastic models, which incorporate randomness, are used to simulate market conditions and forecast asset prices. These models often treat changes in asset prices over time as random processes. For instance, concepts like random walks are sometimes used as a basis for modeling how stock prices evolve, where future price movements are assumed to be independent of past movements. While simplified, these models provide a starting point for more complex analyses.
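A simple random walk of the kind described above can be sketched in a few lines. This is a deliberately simplified toy model, not a realistic price model: each step moves up or down by a fixed amount with equal probability, independently of earlier steps. The starting value, step size, and function name are assumptions made for illustration.

```python
import random

def random_walk(start, steps, step_size=1.0, seed=None):
    """Simulate a symmetric random walk: each step is +step_size or -step_size."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(steps):
        # Each move is independent of all previous moves.
        path.append(path[-1] + rng.choice((-step_size, step_size)))
    return path

# Roughly one trading year of daily steps from an assumed starting level of 100.
path = random_walk(start=100.0, steps=250, seed=42)
print(path[0], path[-1], min(path), max(path))
```

Running the function with different seeds produces different paths, and each path is one realization of the same underlying random process.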

Market volatility itself, which measures the degree of variation in a trading price series over time, is often modeled using random variables. Understanding and forecasting volatility is crucial for pricing options and other derivatives, as well as for risk management. Various sophisticated models, like GARCH (Generalized Autoregressive Conditional Heteroskedasticity) models, use random variables to capture the changing nature of volatility over time.

Risk Assessment in Portfolios

One of the most critical applications of random variables in finance is in risk assessment, particularly for investment portfolios. Investors and portfolio managers use random variables to quantify the potential risks and returns associated with different assets and combinations of assets.

The return of an individual asset (like a stock or bond) is treated as a random variable. By analyzing historical data or making assumptions about future market conditions, analysts can estimate the expected return (average outcome) and the variance or standard deviation (a measure of risk or volatility) of these returns. A higher standard deviation generally indicates greater risk.

When combining multiple assets into a portfolio, the overall portfolio return is also a random variable. Its characteristics depend not only on the expected returns and risks of the individual assets but also on how the returns of these assets move in relation to each other (their covariance or correlation). The principles of diversification, a cornerstone of modern portfolio theory, are mathematically grounded in how combining assets with less-than-perfect positive correlation can reduce overall portfolio variance (risk) for a given level of expected return. Random variables and their associated probability distributions are fundamental to these calculations and to optimizing portfolio allocations.
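The diversification effect can be illustrated with the standard two-asset formula, Var(R_p) = w₁²σ₁² + w₂²σ₂² + 2w₁w₂ρσ₁σ₂, where ρ is the correlation between the two returns. The volatilities, weights, and correlations below are made-up values for illustration, and the function name is hypothetical.

```python
import math

def portfolio_variance(w1, sigma1, sigma2, rho):
    """Variance of a two-asset portfolio with weights w1 and 1 - w1."""
    w2 = 1 - w1
    return (w1**2 * sigma1**2
            + w2**2 * sigma2**2
            + 2 * w1 * w2 * rho * sigma1 * sigma2)

# Assumed inputs: equal weights, volatilities of 20% and 30%.
sd_low_corr = math.sqrt(portfolio_variance(0.5, 0.20, 0.30, rho=0.2))
sd_perfect_corr = math.sqrt(portfolio_variance(0.5, 0.20, 0.30, rho=1.0))
print(sd_low_corr, sd_perfect_corr)
```

With perfect positive correlation the portfolio's standard deviation is just the weighted average of the two volatilities (25%), while a correlation of 0.2 brings it down to roughly 19.6%, below that weighted average.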

Algorithmic Trading Strategies

Algorithmic trading, which involves using computer programs to execute trades at high speeds, heavily relies on statistical models and, by extension, random variables. These algorithms often aim to predict short-term price movements, exploit arbitrage opportunities, or provide liquidity to the market.

Many algorithmic trading strategies are based on identifying statistical patterns or anomalies in market data. Random variables are used to model various aspects of the market, such as order arrival times, trade sizes, and price changes. For example, the intensity of market orders (buy or sell orders to be executed immediately at market prices) might be modeled as a random process, potentially influenced by "influential orders" that can excite the market.

Some algorithms try to predict the probability of price movements in a certain direction based on a multitude of factors, treating these predictions as outcomes of random variables. Techniques like Monte Carlo simulations, which involve generating many random samples to model a range of possible outcomes, are also used in developing and testing trading strategies and in financial forecasting more broadly. The success of these strategies often depends on the accuracy of the underlying probabilistic models and the ability to quickly react to new information, which itself can be thought of as the outcome of a random process.
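The Monte Carlo idea mentioned above can be sketched as follows. This is a toy setup under strong assumptions, not a trading model: daily returns are drawn independently from a normal distribution with an assumed mean and volatility, and the cumulative return is approximated by their sum. The function name and all parameter values are hypothetical.

```python
import random

def prob_positive_return(days, daily_mean, daily_vol, trials, seed=0):
    """Estimate P(cumulative return > 0) by simulating many return paths."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        # Simplification: treat the cumulative return as a sum of daily returns.
        total = sum(rng.gauss(daily_mean, daily_vol) for _ in range(days))
        if total > 0:
            hits += 1
    return hits / trials

# With a zero mean return, the true probability is 0.5 by symmetry.
estimate = prob_positive_return(days=20, daily_mean=0.0, daily_vol=0.01,
                                trials=10_000)
print(estimate)
```

The estimate is itself a random quantity: with 10,000 trials it typically lands within about one percentage point of the true value, and more trials narrow that range.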

Understanding the probabilistic nature of market behavior is key to developing effective algorithmic trading systems.

Formal Education Pathways

A strong educational foundation is typically essential for those wishing to work extensively with random variables, especially in research or advanced application roles. The level and type of education can vary depending on career goals, but a solid understanding of mathematics and statistics is a common requirement.

Relevant Undergraduate Courses (Probability, Statistics)

For anyone interested in fields that heavily utilize random variables, a bachelor's degree in mathematics, statistics, data science, computer science, economics, or a related quantitative field is a common starting point. Core undergraduate coursework that builds a foundation for understanding random variables includes:

- Calculus: Essential for understanding continuous random variables, probability density functions, expectation, and variance, which often involve integration and differentiation.

- Linear Algebra: Important for handling multiple random variables (random vectors) and for many statistical techniques, especially in machine learning and data analysis.

- Introduction to Probability: This is a cornerstone course, directly covering the definition of random variables, discrete and continuous distributions, PMFs, PDFs, expectation, variance, and fundamental theorems like the Law of Large Numbers and the Central Limit Theorem.

- Mathematical Statistics or Statistical Theory: These courses build upon probability theory, introducing concepts of estimation, hypothesis testing, confidence intervals, and regression analysis, all of which rely heavily on the properties of random variables and their distributions.

- Discrete Mathematics: Often useful for understanding concepts related to discrete random variables and combinatorial probability.

Many universities offer specialized tracks or minors in statistics or data analysis that would provide a strong grounding. Look for courses that offer both theoretical understanding and practical application, perhaps involving statistical software like R or Python.

Graduate Research in Stochastic Processes

For those interested in more advanced theoretical work or specialized applications, graduate studies become crucial. A Master's or Ph.D. in statistics, mathematics, data science, financial engineering, or a similar field can open doors to research positions or highly specialized roles. A key area of graduate research related to random variables is stochastic processes.

A stochastic process (or random process) is essentially a collection of random variables, often indexed by time or space. It provides a way to model systems that evolve randomly over time. Examples include the movement of a stock price, the flow of customers arriving at a queue, or the spread of an epidemic. Courses and research in stochastic processes delve into topics like:

- Markov Chains: Processes where the future state depends only on the current state, not on the sequence of events that preceded it.

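- Poisson Processes: Used to model events that occur randomly over time or space at a constant average rate, where the numbers of events in non-overlapping intervals are independent and the count in any fixed interval follows a Poisson distribution.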

- Brownian Motion: A continuous-time stochastic process often used to model random fluctuations in finance and physics.

- Martingales: Sequences of random variables where, given all prior values, the conditional expected value of the next value is equal to the present value.
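As a small illustration of the Markov chain idea from the list above, the sketch below evolves a probability distribution over two states using a transition matrix. The two-state setup and the transition probabilities are invented for the example, and the function name `step` is hypothetical.

```python
def step(dist, P):
    """Advance a distribution over states by one step of the chain."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]

# P[i][j] is the probability of moving from state i to state j
# (assumed values; each row sums to one).
P = [[0.9, 0.1],
     [0.5, 0.5]]

# Start in state 0 with certainty and apply the chain repeatedly.
dist = [1.0, 0.0]
for _ in range(100):
    dist = step(dist, P)

print(dist)
```

Because the next distribution depends only on the current one, repeated application settles toward a long-run distribution, here 5/6 for state 0 and 1/6 for state 1, whatever the starting state.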

Graduate research in this area involves developing new theory, creating more sophisticated models for complex systems, and applying these models to solve real-world problems in fields like finance, engineering, biology, and operations research. Such research requires a very strong mathematical background, particularly in probability theory and real analysis.

PhD-Level Applications in Machine Learning

Machine learning (ML) is another field where a deep understanding of random variables is critical, especially at the Ph.D. level. Many machine learning algorithms are fundamentally based on probabilistic models and statistical inference.

Ph.D. research in machine learning often involves:

- Developing new algorithms: This can involve creating models that better capture uncertainty, handle complex data structures, or learn from different types of data. Probabilistic graphical models, Bayesian methods, and reinforcement learning are areas where random variables are central.

- Theoretical understanding of ML methods: Researchers investigate the properties of existing algorithms, such as their convergence rates, generalization ability (how well they perform on unseen data), and robustness. This often requires a deep understanding of probability theory, high-dimensional statistics, and optimization, all of which involve random variables.

- Causal inference in ML: An emerging area is the intersection of causality and machine learning, which aims to build models that can not only make predictions but also understand cause-and-effect relationships. This often involves modeling interventions and counterfactuals using probabilistic frameworks built upon random variables.

- Applications in specific domains: Ph.D. work can also focus on applying and adapting machine learning techniques to solve complex problems in areas like natural language processing, computer vision, robotics, bioinformatics, and climate science, where data is often noisy, high-dimensional, and inherently random.

A strong foundation in probability theory, statistics, linear algebra, calculus, and optimization is essential for Ph.D. work in machine learning. Many programs also expect strong programming skills.

Online Learning and Certifications

For those looking to learn about random variables outside traditional academic settings, or to supplement their formal education, online courses and certifications offer flexible and accessible pathways. These resources can be invaluable for self-learners, career changers, and professionals seeking to upskill.

Key Platforms for Probability Courses

Several online platforms host a wide array of courses on probability, statistics, and related data science topics, often taught by instructors from leading universities and industry experts. Platforms like Coursera, edX, and Udacity offer courses ranging from introductory probability to more specialized topics involving random variables.

When choosing an online course, consider factors such as the depth of material covered, the prerequisites, the instructor's reputation, student reviews, and whether the course offers hands-on exercises or projects. Many courses provide a syllabus that outlines the topics, including specific types of random variables and distributions covered. OpenCourser is an excellent resource for finding and comparing such courses, allowing you to browse through mathematics courses or search for specific topics related to probability and statistics. You can also save courses to a list to organize your learning path.

Online courses are suitable for building a foundational understanding of random variables, learning specific applications, or preparing for more advanced study. They can also help learners develop practical skills by incorporating programming languages like R or Python, which are commonly used in statistical analysis.

Many reputable institutions offer their probability courses online, providing high-quality learning experiences.

Certifications for Data Science Roles

While a deep understanding of random variables is often gained through coursework, certifications can help demonstrate practical skills and knowledge in broader data science roles where this understanding is applied. Many professional certifications in data science, machine learning, or business analytics will implicitly or explicitly require a grasp of probability and statistics, including the use of random variables.

Certifications offered by tech companies (like Google, IBM, Microsoft) or industry organizations often focus on the application of statistical concepts using specific tools and platforms. For example, a certification might cover how to perform statistical modeling, hypothesis testing, or data analysis, all of which rely on understanding random variables. These certifications can be particularly useful for career changers or those looking to enter roles like Data Analyst, Business Intelligence Specialist, or entry-level Data Scientist.

When considering certifications, evaluate their relevance to your career goals and the specific skills they validate. Some certifications are part of larger specializations or professional certificate programs that offer a more comprehensive learning experience, often including hands-on projects. You can explore various Data Science programs and certifications on OpenCourser to find options that align with your aspirations. Don't forget to check the deals page for potential savings on courses and certifications.

Project-Based Learning Strategies

Theoretical knowledge of random variables becomes much more powerful when combined with practical application. Project-based learning is an excellent strategy to solidify understanding and develop real-world skills. This approach involves working on projects that require you to apply concepts of probability, statistics, and random variables to analyze data and solve problems.

For learners studying random variables, projects could involve:

- Simulating random processes: For example, simulating coin flips, dice rolls, or more complex systems like customer arrivals or stock price movements to understand their distributions and long-term behavior.

- Analyzing real-world datasets: Identifying types of random variables within a dataset (e.g., discrete counts, continuous measurements), visualizing their distributions, calculating summary statistics (mean, variance), and fitting appropriate probability models.

- Hypothesis testing: Formulating hypotheses about a population based on sample data and using statistical tests (which rely on the distributions of random variables) to draw conclusions.

- Building predictive models: Developing simple regression models or machine learning classifiers where the target variable is treated as a random variable.
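A first simulation project along the lines of the list above might look like the following sketch. It simulates rolls of a fair die with a fixed seed and compares the sample mean and variance with the theoretical values of 3.5 and 35/12; the function name and sample size are arbitrary choices made for illustration.

```python
import random
import statistics

def simulate_rolls(n, seed=0):
    """Simulate n rolls of a fair six-sided die."""
    rng = random.Random(seed)
    return [rng.randint(1, 6) for _ in range(n)]

rolls = simulate_rolls(50_000)

sample_mean = statistics.fmean(rolls)
sample_var = statistics.pvariance(rolls)

# Theoretical values for a fair die: mean 3.5, variance 35/12.
print(sample_mean, sample_var)
```

Repeating the experiment with smaller and larger sample sizes is a simple way to see how the sample statistics settle toward the theoretical values as more data is collected.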

Many online courses now incorporate hands-on projects. Additionally, platforms like Kaggle offer datasets and competitions that provide excellent opportunities for project-based learning. Building a portfolio of projects can be particularly beneficial for career explorers, as it demonstrates practical skills to potential employers. The "Activities" section often found on OpenCourser course pages can also suggest projects to supplement your learning. Furthermore, the OpenCourser Learner's Guide offers tips on structuring your learning and making the most of online courses, which can be helpful when undertaking project-based work.

Career Opportunities in Data Science

A strong understanding of random variables is fundamental to many roles within the burgeoning field of data science. As organizations increasingly rely on data to make decisions, professionals who can model uncertainty, analyze statistical patterns, and draw meaningful insights are in high demand.

Entry-Level Roles (Data Analyst, BI Specialist)

For those starting their careers, an understanding of random variables can open doors to several entry-level positions. These roles often involve collecting, cleaning, analyzing, and visualizing data to help businesses make better decisions.

A Data Analyst typically examines large datasets to identify trends, develop charts, and create visual presentations to help businesses make more strategic decisions. They might use statistical methods that rely on understanding the distributions of random variables to, for example, assess the significance of changes in key performance indicators or to segment customers based on their behavior. According to some sources, entry-level data scientists (a role closely related to and sometimes overlapping with data analyst) can earn between $95,000 and $130,000 in 2025 in the US. In India, entry-level data scientists might expect a monthly salary between ₹50,000 and ₹1,00,000.

A Business Intelligence (BI) Specialist focuses on designing and developing BI solutions. They work with BI tools and technologies to provide organizations with actionable insights from their data. This can involve creating dashboards and reports that track key metrics, which often represent outcomes of random variables (e.g., daily sales, website traffic).

While these roles may not always require deep theoretical research into random variables, a solid conceptual understanding is crucial for correctly applying analytical techniques and interpreting results. Many online courses focused on data analysis or business intelligence will cover the necessary statistical foundations. You can explore careers like these further on OpenCourser.

Advanced Positions (Machine Learning Engineer)

With more experience and often advanced education, professionals can move into more specialized and senior roles, such as a Machine Learning Engineer. These positions require a deeper understanding of the mathematical and statistical underpinnings of algorithms, where random variables play a central role.

A Machine Learning Engineer designs and implements machine learning models to solve complex problems. This involves understanding various algorithms (many of which are probabilistic in nature), feature engineering, model training, and deployment. They need to grasp concepts like probability distributions, Bayesian inference, and statistical learning theory to build effective and reliable models. For instance, understanding conditional probability and random variables is vital for working with models like Naive Bayes or Hidden Markov Models. The career path often involves starting in roles like data scientist or software engineer and then specializing. Salaries for machine learning engineers are typically high, reflecting the demand for these specialized skills; for example, some data indicates average salaries in the US can be over $130,000, with senior roles commanding significantly more.

Other advanced roles that heavily rely on a sophisticated understanding of random variables and probability include:

- Research Scientist (AI/ML): Focuses on creating new algorithms and advancing the theoretical foundations of machine learning and artificial intelligence.

- Quantitative Analyst ("Quant"): Often found in finance, these professionals develop and implement complex mathematical and statistical models for pricing financial instruments, risk management, and algorithmic trading.

- Statistician: Applies statistical theories and methods to collect, analyze, and interpret numerical data to inform decisions in various fields like healthcare, government, and industry.

These advanced positions often require a Master's degree or Ph.D. in a quantitative field.

Industry Demand and Salary Trends

The demand for professionals with skills in data science, including a strong understanding of statistics and probability (and therefore random variables), remains robust across various industries. Companies are increasingly recognizing the value of data-driven decision-making, leading to a continued need for individuals who can analyze complex datasets, model uncertainty, and extract actionable insights. Fields like technology, finance, healthcare, retail, and consulting are major employers of data science talent.

Salary trends in data science are generally positive, with compensation reflecting experience, specialization, location, and the complexity of the role. In the US, the average salary for a Data Scientist was reported at around $126,554 in early 2024, with significant variation by experience and other factors. Senior-level data scientists and specialized roles such as Machine Learning Engineer or AI Specialist often command more, in some cases exceeding $175,000 or even $200,000 annually; some 2025 projections put senior-level data scientists between $156,666 and $202,692. The U.S. Bureau of Labor Statistics projects strong growth for data scientists and related professions.

It's important to stay updated on industry trends by consulting resources like salary guides and job market analyses from reputable sources. Factors such as gaining proficiency in in-demand skills (like AI, MLOps, and cloud platforms) and building a strong portfolio of projects can enhance earning potential. While the job market can be competitive, the overall outlook for those with strong data science skills, including a solid grasp of random variables and their applications, appears promising.

Exploring career development resources can provide further insights into navigating the job market.

Ethical and Practical Challenges

While the application of random variables and probabilistic models offers powerful tools for understanding and prediction, it also comes with significant ethical and practical challenges. Professionals in this field must be aware of these issues and strive to address them responsibly.

Bias in Probabilistic Models

One of the most critical challenges is the potential for bias in probabilistic models. These models are often trained on historical data, and if that data reflects existing societal biases (e.g., related to race, gender, or socioeconomic status), the models can perpetuate or even amplify these biases in their predictions and decisions. This can lead to unfair or discriminatory outcomes in areas such as loan applications, hiring processes, criminal justice, and healthcare.

For example, if a model used to predict loan default risk is trained on data where certain demographic groups were historically disadvantaged and had higher default rates due to systemic factors rather than individual creditworthiness, the model might unfairly assign higher risk scores to applicants from those groups. Addressing bias requires careful attention to data collection processes, feature selection, model design, and ongoing auditing of model performance across different subgroups. Researchers are actively developing techniques for fairness-aware machine learning to mitigate these issues.
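The idea of auditing model performance across subgroups can be sketched in a few lines. The example below is a minimal illustration, not a complete fairness audit: the records, the group labels "A" and "B", and the helper name audit_by_group are all hypothetical, and the two metrics shown (flag rate and false positive rate) are only two of many that a real audit might track.

```python
from collections import defaultdict

# Hypothetical audit log: (group, flagged_high_risk, actually_defaulted).
# Group labels and values are invented purely for illustration.
RECORDS = [
    ("A", True, True), ("A", True, False), ("A", False, False), ("A", False, False),
    ("B", True, True), ("B", True, False), ("B", True, False), ("B", False, False),
]


def audit_by_group(records):
    """Compute the flag rate and false positive rate for each group."""
    counts = defaultdict(lambda: {"n": 0, "flagged": 0, "negatives": 0, "false_pos": 0})
    for group, flagged, defaulted in records:
        c = counts[group]
        c["n"] += 1
        c["flagged"] += int(flagged)
        if not defaulted:
            # Applicants who did not default: any flag here is a false positive.
            c["negatives"] += 1
            c["false_pos"] += int(flagged)
    report = {}
    for group, c in counts.items():
        report[group] = {
            "flag_rate": c["flagged"] / c["n"],
            "false_positive_rate": (
                c["false_pos"] / c["negatives"] if c["negatives"] else float("nan")
            ),
        }
    return report


report = audit_by_group(RECORDS)
for group, metrics in sorted(report.items()):
    print(group, metrics)

# Gap in false positive rates between the two groups. A large gap is a signal
# to investigate further, not proof of unfairness on its own.
fpr_gap = abs(report["A"]["false_positive_rate"] - report["B"]["false_positive_rate"])
print("FPR gap:", round(fpr_gap, 3))
```

In this invented data both groups have the same number of actual defaults, yet applicants in group B who repaid were flagged twice as often as those in group A. Disparities of this kind are what subgroup audits are meant to surface.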

It is crucial for practitioners to critically examine their data sources and model assumptions and to consider the potential societal impact of their work. This includes being transparent about how models are built and used.

Overfitting and Model Validation

A common practical challenge in statistical modeling, including models involving random variables, is overfitting. Overfitting occurs when a model learns the training data too well, including its noise and random fluctuations, to the point where it performs poorly on new, unseen data. An overfit model might appear to have high accuracy on the data it was trained on, but it will not generalize well to real-world scenarios.

Proper model validation is essential to combat overfitting and to ensure that a model is genuinely capturing underlying patterns rather than just memorizing the training set. Common techniques for detecting and limiting overfitting include:

- Train-test split: Dividing the available data into a training set (used to build the model) and a test set (used to evaluate its performance on unseen data).

- Cross-validation: A more robust technique where the data is divided into k folds. The model is trained on k-1 of the folds and tested on the one held out, rotating until every fold has served once as the test set. Averaging the k scores gives a more reliable estimate of performance than a single split.

- Regularization: Techniques that add a penalty term to the model's objective function to discourage overly complex models, thereby reducing the risk of overfitting.

Careful model selection, feature engineering, and rigorous validation are crucial steps in developing reliable and useful probabilistic models. Failing to address overfitting can lead to models that are not only inaccurate but potentially harmful if deployed in critical applications.
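The train-test split and cross-validation ideas above can be sketched with the Python standard library alone. This is an illustrative sketch under stated assumptions: the data are synthetic (y = 2x plus Gaussian noise), and the two models, a least-squares line and a 1-nearest-neighbour "memorizer", as well as all function names, are hypothetical choices made to contrast a model that generalizes with one that overfits.

```python
import random


def make_data(n, rng):
    """Hypothetical data: y = 2x + Gaussian noise with standard deviation 1."""
    return [(x, 2.0 * x + rng.gauss(0.0, 1.0))
            for x in (rng.uniform(0.0, 10.0) for _ in range(n))]


def fit_line(points):
    """Ordinary least squares fit of y = a*x + b; returns a prediction function."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    a = sxy / sxx
    b = mean_y - a * mean_x
    return lambda x: a * x + b


def fit_memorizer(points):
    """1-nearest-neighbour: predicts the y of the closest training x, noise included."""
    return lambda x: min(points, key=lambda p: abs(p[0] - x))[1]


def mse(model, points):
    """Mean squared error of a prediction function on a list of (x, y) pairs."""
    return sum((model(x) - y) ** 2 for x, y in points) / len(points)


def k_fold_mse(fit, points, k=5):
    """Average held-out MSE over k folds; each fold is the test set exactly once."""
    folds = [points[i::k] for i in range(k)]
    scores = []
    for i, held_out in enumerate(folds):
        train_part = [p for j, fold in enumerate(folds) if j != i for p in fold]
        scores.append(mse(fit(train_part), held_out))
    return sum(scores) / k


rng = random.Random(0)
data = make_data(300, rng)
train, test = data[:200], data[200:]  # simple train-test split

results = {}
for name, fit in (("line", fit_line), ("memorizer", fit_memorizer)):
    model = fit(train)
    results[name] = {
        "train": mse(model, train),
        "test": mse(model, test),
        "cv": k_fold_mse(fit, train),
    }
    print(name, {key: round(value, 3) for key, value in results[name].items()})
```

The memorizer reproduces every training point exactly, so its training error is zero, yet its held-out and cross-validated errors are worse than those of the simple line. That gap between training and held-out error is the practical signature of overfitting.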


Data Privacy Concerns

The use of random variables and statistical modeling often involves working with large datasets, which can contain sensitive personal information. This raises significant data privacy concerns. As models become more sophisticated and data becomes more granular, the risk of inadvertently revealing private information about individuals increases.

For example, even if a dataset is "anonymized" by removing direct identifiers like names and addresses, it might still be possible to re-identify individuals by linking different pieces of information. Furthermore, the outputs of models themselves, if not carefully managed, could leak information about the data they were trained on.

Addressing data privacy requires a combination of legal, ethical, and technical approaches. This includes:

- Data minimization: Collecting only the data that is strictly necessary for the intended purpose.

- Anonymization and pseudonymization techniques: Methods to de-identify data, although their effectiveness can be limited.

- Differential privacy: A formal mathematical framework for adding noise to data or model outputs in a way that provides provable privacy guarantees while still allowing for useful statistical analysis.

- Secure multi-party computation: Cryptographic techniques that allow multiple parties to jointly compute a function over their private data without revealing the data itself.

- Compliance with data protection regulations: Adhering to laws like GDPR (General Data Protection Regulation) in the European Union or CCPA (California Consumer Privacy Act) in California.
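To make the differential privacy item above more concrete, here is a minimal sketch of the Laplace mechanism applied to a counting query. The dataset, the function names, and the choice of epsilon = 0.5 are assumptions made for illustration; production systems should rely on vetted libraries rather than hand-rolled noise.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(records, predicate, epsilon, rng):
    """Return a noisy count of the records that satisfy predicate.

    A counting query has sensitivity 1: adding or removing one person changes
    the count by at most 1, so the Laplace scale is 1 / epsilon.
    """
    true_value = sum(1 for r in records if predicate(r))
    return true_value + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(42)
ages = [rng.randint(18, 90) for _ in range(1000)]  # hypothetical dataset
true_count = sum(1 for age in ages if age >= 65)

noisy = private_count(ages, lambda age: age >= 65, epsilon=0.5, rng=rng)
print("true count:", true_count, "noisy count:", round(noisy, 1))

# Sanity check only: the noise is zero-mean, so many noisy answers average out
# near the true count. In a real deployment every released answer consumes
# privacy budget, so repeating a query like this would weaken the guarantee.
repeated = [private_count(ages, lambda age: age >= 65, 0.5, rng) for _ in range(2000)]
average = sum(repeated) / len(repeated)
print("average of repeated noisy counts:", round(average, 2))
```

Smaller values of epsilon mean a larger noise scale and stronger privacy at the cost of less accurate answers, which is the utility versus privacy trade-off in miniature.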

Professionals working with data have a responsibility to handle it ethically and to implement appropriate measures to protect individual privacy. This is an ongoing area of research and development, as the need to balance data utility with privacy protection becomes ever more critical.

Emerging Trends and Research

The study of random variables and their applications is continuously evolving, driven by advances in computational power, new theoretical insights, and the increasing availability of complex data. Several emerging trends and research areas are shaping the future of how we understand and utilize randomness.

AI-Driven Stochastic Modeling

Artificial intelligence (AI), particularly machine learning, is profoundly impacting stochastic modeling. Traditional stochastic models often rely on specific mathematical assumptions about underlying random processes. AI, however, can learn complex patterns and dependencies directly from data, leading to more flexible and potentially more accurate stochastic models.

For example, deep learning techniques, such as recurrent neural networks (RNNs) and generative adversarial networks (GANs), are being used to model time series data and generate synthetic data that mimics the characteristics of real-world stochastic processes. This has applications in areas like financial forecasting, natural language processing (where sequences of words can be seen as a stochastic process), and simulating complex physical systems. AI can also help in automatically discovering the structure of stochastic models from data, a task that was previously reliant on domain expertise and manual effort. The synergy between AI and traditional stochastic modeling is opening up new avenues for understanding and predicting random phenomena in increasingly complex environments.

Quantum Computing Applications

Quantum computing, though still in its relatively early stages of development, holds the potential to revolutionize fields that rely on probability and random variables. Quantum mechanics itself is inherently probabilistic. Quantum computers leverage quantum phenomena like superposition and entanglement to perform certain computations that are believed to be intractable for classical computers.

In the context of random variables and stochastic modeling, quantum computing could offer significant speedups for certain types of problems. For example:

- Monte Carlo simulations: Quantum algorithms may be able to accelerate Monte Carlo methods, which are widely used for estimating expected values, pricing financial derivatives, and risk analysis.

- Optimization: Many problems in statistics and machine learning involve optimization, and quantum algorithms could potentially solve certain optimization problems more efficiently.

- Sampling from complex probability distributions: Generating samples from very high-dimensional or complex probability distributions, a task that can be challenging for classical computers, might become more feasible with quantum computers.
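For context on the Monte Carlo item above, the classical baseline that quantum algorithms aim to accelerate can be sketched as follows. This is an ordinary (non-quantum) Monte Carlo estimate of an expected value; the random variable chosen here, the larger of two fair dice, and the function names are illustrative assumptions. The exact answer, 161/36, is included only so the estimate can be checked.

```python
import random


def monte_carlo_mean(sample, n, rng):
    """Estimate E[sample(rng)] from n independent draws; return (mean, standard error)."""
    draws = [sample(rng) for _ in range(n)]
    mean = sum(draws) / n
    variance = sum((d - mean) ** 2 for d in draws) / (n - 1)  # sample variance
    return mean, (variance / n) ** 0.5


def max_of_two_dice(rng):
    """One realization of the random variable: the larger of two fair dice."""
    return max(rng.randint(1, 6), rng.randint(1, 6))


rng = random.Random(7)
exact = 161 / 36  # sum over k of k * (2k - 1) / 36 for k = 1..6

for n in (100, 10_000, 100_000):
    estimate, std_err = monte_carlo_mean(max_of_two_dice, n, rng)
    print(f"n={n:>7}  estimate={estimate:.4f}  std_err={std_err:.4f}  exact={exact:.4f}")
```

The standard error shrinks in proportion to one over the square root of n, so each extra digit of accuracy costs roughly a hundred times more samples. That slow convergence is why speedups for Monte Carlo estimation are an attractive research target.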

While widespread practical application is still some way off, research into quantum algorithms for probabilistic and statistical tasks is an active and exciting area. As quantum hardware improves, we may see these theoretical possibilities translate into real-world advantages.

Interdisciplinary Research Opportunities

The study and application of random variables are inherently interdisciplinary. The mathematical foundations are rooted in probability theory and statistics, but the applications span a vast range of fields. This creates numerous opportunities for interdisciplinary research where concepts related to randomness are applied in novel ways or where insights from different fields lead to new theoretical developments.

Examples of interdisciplinary research areas involving random variables include:

- Computational Biology and Bioinformatics: Modeling genetic mutations, protein folding, or the spread of diseases using stochastic processes and statistical inference.

- Climate Science: Developing probabilistic models to forecast weather patterns, predict the impact of climate change, and assess the risk of extreme events.

- Neuroscience: Using random variables to model neural firing patterns, understand brain connectivity, and analyze neuroimaging data.

- Social Sciences: Applying statistical models to understand social networks, predict human behavior, or analyze economic trends.

- Materials Science: Using stochastic models to simulate the behavior of materials at the molecular level or to design new materials with desired properties.

Collaboration between mathematicians, statisticians, computer scientists, and domain experts in these and other fields is crucial for advancing both the theory and application of random variables. Such collaborations often lead to innovative solutions for complex real-world problems.

If you are interested in exploring the intersection of data science with other fields, consider browsing through various categories on OpenCourser, such as Biology or Environmental Sciences.

Frequently Asked Questions (Career Focus)

For those considering a career path that involves a deep understanding of random variables, several practical questions often arise. Addressing these can help you make informed decisions about your educational and professional journey.

Essential skills for roles involving random variables

Roles that heavily involve random variables, such as Data Scientist, Statistician, Machine Learning Engineer, or Quantitative Analyst, require a blend of technical and soft skills. Key technical skills include:

- Strong mathematical foundation: Proficiency in probability theory, calculus, linear algebra, and statistics is fundamental.

- Statistical modeling and inference: The ability to choose appropriate statistical models, estimate parameters, test hypotheses, and interpret results.

- Programming skills: Proficiency in languages like Python or R, and familiarity with relevant libraries for data analysis, statistical modeling, and machine learning (e.g., NumPy, Pandas, Scikit-learn, TensorFlow, PyTorch).

- Data manipulation and analysis: Skills in cleaning, transforming, analyzing, and visualizing large datasets.

- Machine learning knowledge: For many roles, an understanding of various machine learning algorithms and their applications is crucial.

In addition to technical skills, strong analytical and problem-solving abilities, effective communication skills (to explain complex findings to non-technical audiences), and domain knowledge in the specific industry of application are also highly valued.

Industries with high demand for this expertise

Expertise in random variables and their applications in probability and statistics is in high demand across a wide array of industries. Some of the most prominent include:

- Technology: For roles in data science, machine learning, AI research, software development, and data engineering.

- Finance and Insurance: For quantitative analysis, risk management, algorithmic trading, fraud detection, and actuarial science.

- Healthcare and Pharmaceuticals: For biostatistics, clinical trial analysis, epidemiological modeling, drug discovery, and personalized medicine.

- E-commerce and Retail: For customer analytics, recommendation systems, supply chain optimization, and demand forecasting.

- Consulting: Providing data-driven insights and solutions to clients across various sectors.

- Government and Public Sector: For policy analysis, economic forecasting, public health surveillance, and defense/intelligence applications.

- Research and Academia: Conducting fundamental and applied research in statistics, data science, machine learning, and other quantitative fields.

The versatility of these skills means that opportunities can be found in almost any sector that deals with data and uncertainty.

Impact of AI on traditional statistical roles

Artificial intelligence, particularly machine learning, is transforming many fields, including traditional statistical roles. Rather than making statisticians obsolete, AI is often augmenting their capabilities and creating new areas of specialization. AI can automate certain repetitive tasks, handle much larger and more complex datasets than traditional methods, and uncover patterns that might be difficult for humans to detect.

However, the core principles of statistics—sound experimental design, rigorous inference, understanding bias and variance, and interpreting results in context—remain crucial. Statisticians are increasingly needed to:

- Develop and validate the statistical underpinnings of AI and ML algorithms.

- Ensure the ethical and responsible use of AI, including addressing issues of bias and fairness in models.

- Interpret the outputs of complex AI models and communicate their implications effectively.

- Integrate AI tools with traditional statistical methods to solve problems more effectively.

The rise of AI is creating a greater need for professionals who understand both statistical theory and modern computational tools. This often means that traditional statistical roles are evolving to incorporate more data science and machine learning skills.

Certifications vs. academic degrees

The choice between pursuing certifications and academic degrees depends on your career goals, current educational background, and the specific roles you are targeting.

- Academic Degrees (Bachelor's, Master's, Ph.D.): Degrees typically provide a more comprehensive and theoretical understanding of the subject matter, including the mathematical foundations of probability, statistics, and random variables. They are often prerequisites for research positions, highly specialized roles (like some Machine Learning Engineer positions), and academic careers. A degree from a reputable institution can also carry significant weight with employers.

- Certifications: Certifications tend to be more focused on specific skills, tools, or platforms. They can be a quicker way to gain practical, job-relevant competencies and demonstrate proficiency in particular areas (e.g., a specific programming language, cloud platform, or data analysis software). Certifications can be valuable for career changers, those looking to upskill quickly, or individuals seeking to complement an existing degree with more applied knowledge.

In many cases, a combination of both can be beneficial. For example, someone with a relevant academic degree might pursue certifications to gain expertise in new tools or specializations. Conversely, someone starting with certifications might later decide to pursue a degree for deeper theoretical knowledge or career advancement. Ultimately, employers value demonstrated skills and the ability to apply knowledge effectively, whether gained through degrees, certifications, or hands-on experience. According to the Bureau of Labor Statistics, data scientists typically need a bachelor's degree, though master's or doctoral degrees are common, especially for more advanced positions.

Freelancing opportunities in statistical modeling

There are freelancing and consulting opportunities for individuals with strong skills in statistical modeling, which inherently involves working with random variables. Many businesses, especially small and medium-sized enterprises (SMEs) or startups, may not have the resources to hire full-time statisticians or data scientists but still require expertise for specific projects.

Freelancers in this space might offer services such as:

- Data analysis and interpretation for specific business problems.

- Building predictive models for sales forecasting, customer churn, etc.

- Designing and analyzing surveys or experiments.

- Providing statistical consulting on research projects.

- Developing custom data visualizations and dashboards.

- Offering training in statistical software or methods.

Success in freelancing requires not only strong technical skills but also good business acumen, including marketing yourself, managing client relationships, and project management. Online platforms that connect freelancers with clients can be a good starting point. Building a strong portfolio and network is also crucial.

Long-term career growth trajectories

The long-term career growth trajectories for professionals working with random variables are generally very promising, given the increasing importance of data across all industries. Starting in roles like Data Analyst or Junior Data Scientist, individuals can progress to more senior and specialized positions.

Potential career paths include:

- Technical Leadership: Advancing to roles like Senior Data Scientist, Principal Data Scientist, Staff Machine Learning Engineer, or Data Science Manager, leading teams and complex projects.

- Specialization: Focusing on niche areas such as Deep Learning, Natural Language Processing, Computer Vision, Causal Inference, or specific industry applications (e.g., quantitative finance, bioinformatics).

- Management and Strategy: Moving into broader management roles like Director of Data Science, Chief Data Officer (CDO), or other executive positions that shape the data strategy of an organization.

- Entrepreneurship: Founding a startup that leverages data science and statistical modeling to offer innovative products or services.

- Academia and Research: Pursuing a Ph.D. and a career in academic research and teaching, contributing to the theoretical advancements in statistics, machine learning, or related fields.

Continuous learning is key to long-term growth, as the field is constantly evolving with new tools, techniques, and theoretical developments. Staying updated through online courses, attending conferences, reading research papers, and engaging with the professional community will be essential. You can always find new learning opportunities by exploring OpenCourser for the latest courses and materials.

Understanding random variables is more than just an academic exercise; it's a foundational skill for anyone looking to navigate and shape our increasingly data-driven world. Whether you are just starting to explore this topic or are looking to deepen your expertise, the journey of learning about random variables offers a pathway to intellectually stimulating and impactful career opportunities. We encourage you to embrace the challenge, explore the resources available, and set your sights on making a meaningful contribution in this exciting field.