Distributed Systems

Understanding Distributed Systems: A Comprehensive Guide

A distributed system is a collection of independent computer components, often spread across a network, that appear to users as a single, cohesive system. These components work together by communicating and coordinating their actions to achieve a common goal, such as processing large amounts of data or handling high volumes of user requests. The internet itself is a vast example of a distributed system, and chances are, you interact with numerous distributed systems daily, from using search engines and social media to online shopping and banking.

Working with distributed systems can be incredibly engaging. Imagine building software that can handle millions of users simultaneously or designing systems that remain operational even if some of their parts fail. This field offers the thrill of tackling complex technical challenges and the satisfaction of creating robust, scalable, and resilient applications that power modern technology. Furthermore, the constant evolution of distributed systems, with new technologies and approaches emerging regularly, ensures a dynamic and intellectually stimulating career path.

Introduction to Distributed Systems

This section will provide a foundational understanding of distributed systems, exploring what they are, how they came to be, and why they are so crucial in today's technological landscape. This will set the stage for a deeper dive into the core concepts and practical aspects of working with these complex yet fascinating systems.

Definition and core characteristics of distributed systems

At its core, a distributed system consists of multiple autonomous computing entities, known as nodes, that are interconnected by a network. These nodes could be separate physical hardware devices, virtual machines, or even individual software processes. The defining characteristic is that these components communicate and coordinate their actions by passing messages to achieve a shared objective. To the end-user, this intricate collection of interconnected components ideally appears as a single, unified system. This transparency means that users interact with an application or website without needing to know about the underlying complexity of the distributed architecture that powers it.

Several core characteristics define distributed systems. Scalability is a key feature, referring to the system's ability to handle an increasing workload by adding more resources, such as additional nodes. Fault tolerance is another critical aspect; a well-designed distributed system can continue to operate correctly even if some of its individual components fail. This is achieved through redundancy and mechanisms that allow the system to detect and recover from failures. Concurrency is inherent, as multiple components operate simultaneously. Other important characteristics include resource sharing, where hardware, software, or data can be shared among nodes, and openness, which allows for the system to be extended and modified.

The interaction between these characteristics often involves trade-offs. For example, ensuring strong consistency (where all nodes have the same view of data at all times) can sometimes impact availability (the system's ability to respond to requests). Understanding these fundamental properties and their interplay is crucial for anyone looking to delve into the world of distributed systems.

Historical evolution and key milestones

The roots of distributed systems can be traced back to the 1960s with research into operating system architectures that involved concurrent processes communicating via message passing. The 1970s saw the invention of local area networks (LANs) like Ethernet, which were among the first widespread distributed systems. Around the same time, the ARPANET, a precursor to the internet, was developed, and email emerged as its most successful application, arguably the earliest large-scale distributed application.

The 1980s brought a shift towards client-server architectures, where a central server provided services to multiple client computers. As hardware became more affordable and virtualization technologies matured, the development of more complex distributed systems accelerated. The late 1990s saw the rise of peer-to-peer (P2P) computing, exemplified by platforms like Napster, which allowed direct resource sharing between computers without a central server.

More recently, the ubiquity of cloud computing, pioneered by vendors like Amazon and Microsoft, has profoundly impacted distributed systems. Cloud platforms provide the infrastructure for building and deploying highly scalable and resilient distributed applications. The development of containerization technologies like Docker and orchestration tools like Kubernetes has further simplified the deployment and management of distributed applications, allowing for "infrastructure as code" and enhancing service resiliency. Today, distributed systems underpin most modern web applications, big data processing, and cloud-based services.

Importance in modern computing and technology ecosystems

Distributed systems are not just a niche area of computer science; they are the bedrock of modern computing and technology ecosystems. Think about the applications and services you use daily: search engines processing billions of queries, e-commerce platforms handling massive transaction volumes, social media networks connecting billions of users, and cloud services providing on-demand computing power. All of these rely heavily on distributed systems to function effectively.

The importance of distributed systems stems from their ability to address several critical needs of modern applications. Scalability allows businesses to handle growing numbers of users and data without a proportional decrease in performance. High availability and fault tolerance ensure that services remain operational even in the face of hardware failures or network issues, which is crucial for business continuity. Performance can be significantly improved by distributing workloads and processing data in parallel across multiple machines. Furthermore, distributed systems enable geographical distribution, allowing services to be deployed closer to users, reducing latency and improving user experience.

In essence, distributed systems provide the architectural foundation for building applications that are robust, scalable, efficient, and capable of meeting the demands of a global, interconnected world. As technology continues to evolve, with trends like edge computing, the Internet of Things (IoT), and artificial intelligence generating and processing vast amounts of data, the role and importance of distributed systems will only continue to grow.

Core Concepts in Distributed Systems

Understanding the fundamental principles that govern the design and behavior of distributed systems is essential. This section delves into some of the most critical concepts: scalability, fault tolerance, and consistency models. These concepts are interconnected and often involve making careful trade-offs to meet specific application requirements.

Scalability and horizontal vs. vertical scaling

Scalability is a crucial attribute of distributed systems, representing the system's capacity to handle a growing amount of work or to expand in response to increased demand without degrading performance or reliability. As user bases expand, data volumes grow, and transaction rates climb, a scalable system can adapt by efficiently incorporating additional resources or nodes. Maintaining performance and responsiveness under increasing load is paramount for user satisfaction and business success.

There are two primary ways to scale a system: vertical scaling (scaling up) and horizontal scaling (scaling out). Vertical scaling involves increasing the resources of a single server, such as adding more CPU, memory, or storage. While this can be simpler to implement initially, it has limitations. There's an upper bound to how much a single server can be upgraded, and it can become very expensive. Moreover, it still represents a single point of failure.

Horizontal scaling, on the other hand, involves adding more machines or nodes to the system. The workload is then distributed across these nodes, often with the help of a load balancer. This approach is common in cloud environments and is generally more flexible and resilient. Distributed systems are inherently designed for horizontal scalability, allowing them to grow incrementally as needed. Linear scalability, where multiplying the number of nodes by n multiplies throughput by n, is the ideal, but coordination overhead and shared bottlenecks usually keep real systems below it.
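To make the load-balancer idea concrete, here is a minimal Python sketch of horizontal scaling, assuming a simple round-robin policy (real load balancers offer several policies, and the class and node names here are hypothetical). Adding a node immediately spreads subsequent requests over more machines:

```python
from collections import Counter
from itertools import cycle


class RoundRobinBalancer:
    """Hands each incoming request to the next node in a fixed rotation."""

    def __init__(self, nodes):
        if not nodes:
            raise ValueError("need at least one node")
        self.nodes = list(nodes)
        self._rotation = cycle(self.nodes)

    def add_node(self, node):
        # Scaling out: the new node joins and the rotation restarts over the full set.
        self.nodes.append(node)
        self._rotation = cycle(self.nodes)

    def route(self, request):
        # Returns (chosen node, request); a real balancer would forward the request.
        return next(self._rotation), request


def assign(balancer, requests):
    """Count how many requests each node receives."""
    return Counter(balancer.route(r)[0] for r in requests)


lb = RoundRobinBalancer(["node-a", "node-b", "node-c"])
print(assign(lb, range(6)))   # 2 requests per node
lb.add_node("node-d")
print(assign(lb, range(8)))   # 2 requests per node, now across 4 nodes
```

Round-robin assumes roughly equal nodes and requests; when they differ, weighted or least-connections policies are common alternatives.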

Effective scalability isn't an afterthought; it must be designed into the system from the outset. Poorly designed systems may find that adding more resources doesn't proportionally improve performance or may even introduce new bottlenecks.

Fault tolerance and redundancy mechanisms

Fault tolerance is the ability of a distributed system to continue operating correctly even when one or more of its components fail. In a complex system with many interconnected parts, failures are inevitable, whether due to hardware malfunctions, software bugs, or network disruptions. The goal of fault tolerance is to ensure that these individual failures do not cascade into a system-wide outage, thereby maintaining high availability and reliability.

Redundancy is a cornerstone of fault tolerance. This involves duplicating critical components, data, or services. If one component fails, a redundant copy can take over its function. For example, data might be replicated across multiple storage nodes. If one node becomes unavailable, the data can still be accessed from other nodes. Similarly, critical services might have multiple instances running, with a mechanism to switch to a healthy instance if one fails (failover).

Achieving fault tolerance requires careful design. Systems must be able to detect failures, isolate the faulty components, and seamlessly transition to backup resources. This often involves complex protocols and algorithms. While redundancy enhances reliability, it also introduces its own set of challenges, such as keeping redundant copies of data consistent and managing the increased complexity of the system. However, the ability to withstand failures without significant impact on performance or availability is a hallmark of a well-engineered distributed system.
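A minimal sketch of failover over replicated data, assuming each replica holds a full copy and raises an error when it cannot serve; `Replica`, `ReplicaUnavailable`, and `read_with_failover` are hypothetical names, and failure detection is reduced to a health flag:

```python
class ReplicaUnavailable(Exception):
    """Raised by a replica that cannot serve the request."""


class Replica:
    def __init__(self, name, data, healthy=True):
        self.name = name
        self.data = dict(data)   # redundancy: each replica keeps its own copy
        self.healthy = healthy   # stand-in for real failure detection

    def read(self, key):
        if not self.healthy:
            raise ReplicaUnavailable(self.name)
        return self.data[key]


def read_with_failover(replicas, key):
    """Try replicas in order, failing over to the next one when a replica fails."""
    errors = []
    for replica in replicas:
        try:
            return replica.name, replica.read(key)
        except ReplicaUnavailable as exc:
            errors.append(str(exc))   # record and isolate the faulty replica, move on
    raise RuntimeError(f"all replicas failed: {errors}")


data = {"user:42": "Ada"}
replicas = [
    Replica("primary", data, healthy=False),
    Replica("secondary", data),
    Replica("tertiary", data),
]
print(read_with_failover(replicas, "user:42"))   # ('secondary', 'Ada')
```

Real systems usually detect failures with timeouts and heartbeats rather than a flag, and must also keep the copies consistent, which is the harder half of the problem.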

Consistency models (e.g., CAP theorem)

Consistency in distributed systems refers to the property that all nodes in the system see the same data at the same time. When data is written to one node, ensuring that this change is propagated and visible across all other nodes in a timely and orderly manner is a significant challenge, especially in the presence of network delays and concurrent updates. Consistency models provide a contract between the system and its programmers, defining the guarantees the system offers regarding how and when updates will be visible.

The CAP Theorem, also known as Brewer's Theorem, is a fundamental principle in distributed system design. It states that a distributed data store can guarantee at most two of the following three properties at the same time:

- Consistency (C): Every read receives the most recent write or an error. All nodes see the same data at the same time.

- Availability (A): Every request receives a (non-error) response, without the guarantee that it contains the most recent write. All working nodes return a valid response.

- Partition Tolerance (P): The system continues to operate despite an arbitrary number of messages being dropped (or delayed) by the network between nodes (i.e., a network partition).

In reality, network partitions are a fact of life in distributed systems, so partition tolerance (P) is usually a requirement. Therefore, designers are often forced to choose between strong consistency (C) and high availability (A) when a partition occurs. For instance, a system might choose to sacrifice availability to ensure consistency by refusing to process requests if it cannot guarantee that all nodes are up-to-date. Conversely, it might sacrifice strong consistency to maintain availability by allowing nodes to serve potentially stale data during a partition.
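The toy model below illustrates the choice a partition forces, assuming just two replicas and a boolean flag standing in for the network link (all names are hypothetical). In "CP" mode the store refuses requests it cannot make consistent; in "AP" mode it keeps answering and lets the replicas diverge:

```python
class Node:
    def __init__(self, name):
        self.name = name
        self.value = None


class TwoReplicaStore:
    """Two replicas of one value; `partitioned` simulates a cut network link."""

    def __init__(self, mode):
        if mode not in ("CP", "AP"):
            raise ValueError("mode must be 'CP' or 'AP'")
        self.mode = mode
        self.a, self.b = Node("a"), Node("b")
        self.partitioned = False

    def _peer(self, node):
        return self.b if node is self.a else self.a

    def write(self, node, value):
        if self.partitioned and self.mode == "CP":
            # CP: refuse a write that cannot reach the other replica (give up A).
            raise RuntimeError("unavailable: cannot replicate write")
        node.value = value
        if not self.partitioned:
            self._peer(node).value = value   # synchronous replication while the link is up
        # AP during a partition: the write is accepted locally and the peer goes stale.

    def read(self, node):
        if self.partitioned and self.mode == "CP":
            # CP: refuse a read that might return stale data (give up A).
            raise RuntimeError("unavailable: cannot confirm latest value")
        return node.value   # AP: always answer, possibly with stale data (give up C)


ap = TwoReplicaStore("AP")
ap.write(ap.a, "v1")
ap.partitioned = True
ap.write(ap.a, "v2")
print(ap.read(ap.a), ap.read(ap.b))   # v2 v1  (both answer, but they disagree)

cp = TwoReplicaStore("CP")
cp.write(cp.a, "v1")
cp.partitioned = True
try:
    cp.read(cp.b)
except RuntimeError as err:
    print(err)                         # unavailable: cannot confirm latest value
```

With only two replicas neither side of the partition holds a majority, so the CP store rejects everything; practical CP systems typically keep serving on whichever side holds a majority of replicas.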

Beyond the CAP theorem, various consistency models exist, each offering different trade-offs. Strong consistency (or linearizability) ensures that every operation appears to take effect instantaneously, and all clients see the same order of operations. This is the strictest model but can lead to higher latency. Eventual consistency, on the other hand, guarantees that if no new updates are made to a given data item, eventually all accesses to that item will return the last updated value. This model allows for temporary inconsistencies between nodes but offers higher availability and lower latency. Many modern systems, particularly those operating at a large scale, opt for eventual consistency or weaker consistency models to achieve better performance and availability. Understanding these models and their implications is crucial for choosing the right approach for a given application.
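Eventual consistency needs some rule for converging after replicas diverge. One common rule, last-writer-wins, is sketched below under the assumption that every write carries a timestamp; the replica class and its `sync_from` merge step are hypothetical:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Versioned:
    value: str
    timestamp: int   # assumption: the writer attaches a timestamp to every write
    node_id: str     # tie-breaker so every replica picks the same winner

    def newer_than(self, other):
        return (self.timestamp, self.node_id) > (other.timestamp, other.node_id)


class LWWReplica:
    def __init__(self, name):
        self.name = name
        self.store = {}

    def put(self, key, value, timestamp):
        self._apply(key, Versioned(value, timestamp, self.name))

    def _apply(self, key, incoming):
        current = self.store.get(key)
        if current is None or incoming.newer_than(current):
            self.store[key] = incoming   # last writer wins; the older value is dropped

    def get(self, key):
        entry = self.store.get(key)
        return None if entry is None else entry.value

    def sync_from(self, peer):
        """Background anti-entropy: merge every entry the peer holds."""
        for key, entry in peer.store.items():
            self._apply(key, entry)


r1, r2 = LWWReplica("r1"), LWWReplica("r2")
r1.put("cart", "book", timestamp=1)
r2.put("cart", "book+pen", timestamp=2)
print(r1.get("cart"), r2.get("cart"))   # book book+pen  (temporarily inconsistent)
r1.sync_from(r2)
r2.sync_from(r1)
print(r1.get("cart"), r2.get("cart"))   # book+pen book+pen  (converged)
```

Because the merge always picks the same winner regardless of the order in which replicas exchange data, all replicas converge. The cost is that the losing concurrent write (here, `"book"`) is silently discarded, which is why some applications need richer conflict resolution.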

Key Technologies and Tools

Building and managing distributed systems relies on a diverse set of powerful technologies and tools. These tools help automate deployment, manage communication between services, and store and retrieve vast amounts of data across multiple nodes. This section highlights some of the most prominent categories of tools used in modern distributed systems development.

Orchestration tools (e.g., Kubernetes)

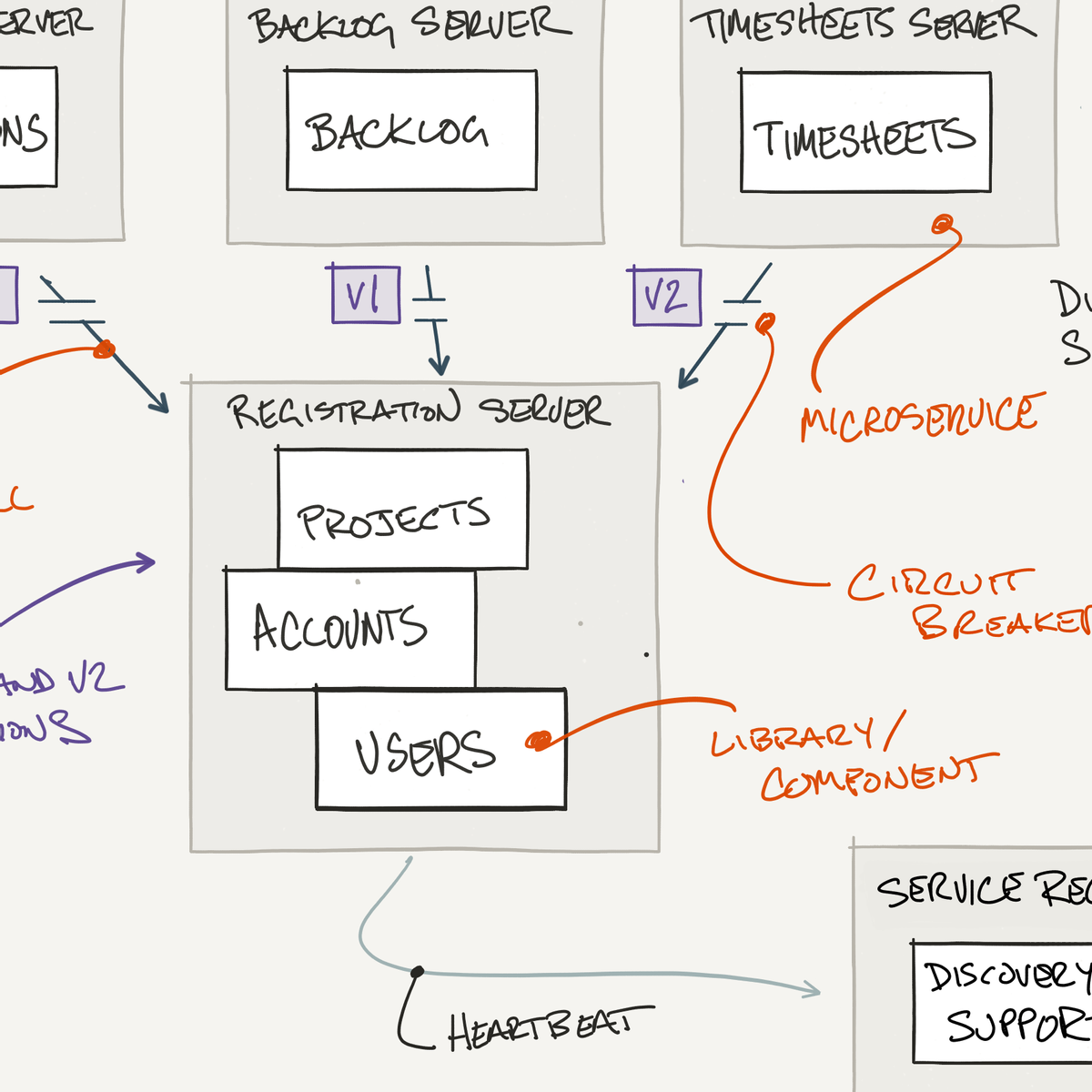

Container orchestration tools are essential for managing the lifecycle of containerized applications in a distributed environment. As applications are broken down into smaller, independent microservices running in containers, managing their deployment, scaling, networking, and health becomes a complex task. Orchestration platforms automate these processes, making it feasible to run complex distributed systems at scale.

Kubernetes has emerged as the de facto standard for container orchestration. It provides a powerful framework for deploying, scaling, and managing containerized applications across clusters of machines. Kubernetes handles tasks such as service discovery, load balancing, automated rollouts and rollbacks, self-healing (restarting failed containers), and configuration management. Its declarative approach allows developers to define the desired state of their application, and Kubernetes works to maintain that state. This significantly simplifies the operational complexity of running distributed applications.
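The declarative model can be pictured as a control loop that repeatedly compares desired state with observed state and acts on the difference. The sketch below is a toy illustration of that idea, not the Kubernetes API; `reconcile` and the `web-N` container IDs are hypothetical:

```python
def reconcile(desired_replicas, running):
    """One pass of a control loop: compare desired state with observed state and act.

    `running` lists the (hypothetical) IDs of containers currently alive.
    Returns the actions taken and the updated list of running containers.
    """
    actions = []
    running = list(running)
    # Pick fresh IDs above the highest one in use ("web-3" -> 3).
    next_id = max((int(cid.split("-")[1]) for cid in running), default=0) + 1
    while len(running) < desired_replicas:      # too few: start containers
        cid = f"web-{next_id}"
        next_id += 1
        running.append(cid)
        actions.append(("start", cid))
    while len(running) > desired_replicas:      # too many: stop containers
        cid = running.pop()
        actions.append(("stop", cid))
    return actions, running


state = ["web-1", "web-2", "web-3"]
state.remove("web-2")                 # simulate a crashed container
actions, state = reconcile(3, state)
print(actions)                        # [('start', 'web-4')]
actions, state = reconcile(2, state)  # the desired state changes to 2 replicas
print(actions)                        # [('stop', 'web-4')]
```

Restarting a crashed container and scaling down are the same operation here: the loop only ever closes the gap between what was declared and what it observes.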

Other orchestration tools exist, and many cloud providers offer managed Kubernetes services, further reducing the operational burden. Understanding the principles of containerization and orchestration is becoming increasingly vital for anyone working with modern distributed systems, as these tools provide the foundation for deploying and managing applications in a scalable and resilient manner.

Message brokers (e.g., Apache Kafka)

Message brokers play a crucial role in facilitating communication between different components of a distributed system, particularly in asynchronous, loosely coupled architectures like microservices and event-driven systems. They act as intermediaries, receiving messages from producer applications and delivering them to consumer applications. This decoupling means that producers don't need to know about the consumers, and vice-versa, leading to more flexible and resilient systems.

Apache Kafka is a widely adopted distributed streaming platform often used as a high-throughput, fault-tolerant message broker. It is designed to handle large volumes of real-time data streams. Kafka allows applications to publish and subscribe to streams of records, similar to a message queue or enterprise messaging system. Key features include its ability to store streams of records in a durable, fault-tolerant way, process streams as they occur, and scale horizontally. Kafka is commonly used for building real-time data pipelines and streaming applications, enabling use cases like activity tracking, log aggregation, and event sourcing.
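To show what "publish and subscribe to streams of records" means in log-based brokers, here is a toy single-partition log in which records are retained after being read and each consumer group tracks its own offset. This illustrates the model only and is not the Kafka client API; partitions, replication, and separate offset commits are omitted:

```python
from collections import defaultdict


class Topic:
    """A toy, single-partition, append-only log (not the Kafka client API)."""

    def __init__(self):
        self.records = []                  # retained after being read
        self.offsets = defaultdict(int)    # next offset to read, per consumer group

    def publish(self, record):
        self.records.append(record)
        return len(self.records) - 1       # offset of the new record

    def poll(self, group, max_records=10):
        start = self.offsets[group]
        batch = self.records[start:start + max_records]
        self.offsets[group] = start + len(batch)   # advance this group's position
        return batch

    def seek(self, group, offset):
        self.offsets[group] = offset       # replay from an earlier point


t = Topic()
for event in ["login", "click", "logout"]:
    t.publish(event)
print(t.poll("analytics"))      # ['login', 'click', 'logout']
print(t.poll("audit", 2))       # ['login', 'click']  (independent offset)
print(t.poll("analytics"))      # []
t.seek("analytics", 0)
print(t.poll("analytics", 1))   # ['login']
```

Because reading does not delete records, a new consumer group can start from offset 0, and an existing one can seek backwards to replay history.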

Other message queuing technologies include RabbitMQ and Amazon SQS. The choice of message broker often depends on specific requirements such as message ordering guarantees, throughput needs, and persistence requirements. Understanding how message brokers work and how to integrate them is essential for building robust and scalable distributed applications that rely on asynchronous communication.

Distributed databases (e.g., Cassandra)

Distributed databases are designed to store and manage data across multiple physical locations or computer nodes. Unlike traditional centralized databases, they can handle much larger volumes of data and higher transaction rates by distributing the data and the workload. They are a critical component of many large-scale applications that require high availability, scalability, and fault tolerance for their data storage needs.

Apache Cassandra is a popular open-source, distributed NoSQL database designed for handling large amounts of data across many commodity servers, providing high availability with no single point of failure. Cassandra's architecture is decentralized, meaning all nodes are peers, which contributes to its fault tolerance and scalability. It offers tunable consistency, allowing developers to choose the level of consistency required for their application, often trading strong consistency for higher availability and lower latency, especially during network partitions. It's well-suited for applications with high write throughput requirements and those that need to scale globally.
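Tunable consistency is often reasoned about with a quorum rule of thumb: if the number of replicas a read consults (R) plus the number a write must reach (W) exceeds the replication factor (N), every read overlaps at least one replica holding the latest acknowledged write. The level names below mirror Cassandra's `ONE`, `QUORUM`, and `ALL`, but the functions are hypothetical helpers and assume a single datacenter:

```python
def replicas_required(level, replication_factor):
    """Replicas that must respond for an operation at a given consistency level."""
    if level == "ONE":
        return 1
    if level == "QUORUM":
        return replication_factor // 2 + 1   # strict majority of the replicas
    if level == "ALL":
        return replication_factor
    raise ValueError(f"unknown level: {level}")


def reads_see_latest_write(read_level, write_level, replication_factor):
    """True when read and write replica sets must overlap (R + W > N)."""
    r = replicas_required(read_level, replication_factor)
    w = replicas_required(write_level, replication_factor)
    return r + w > replication_factor


for levels in [("QUORUM", "QUORUM"), ("ONE", "ONE"), ("ONE", "ALL")]:
    print(levels, reads_see_latest_write(*levels, replication_factor=3))
# ('QUORUM', 'QUORUM') True
# ('ONE', 'ONE') False
# ('ONE', 'ALL') True
```

With a replication factor of 3, `QUORUM` reads and writes still overlap while tolerating one unavailable replica, whereas `ONE`/`ONE` favors latency and availability at the cost of possibly stale reads.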

Other examples of distributed databases include MongoDB (a document database that can be configured for distributed deployments), Google Cloud Spanner (a globally distributed, strongly consistent database), and Amazon DynamoDB (a key-value and document database). The choice of a distributed database depends on factors like the data model (e.g., key-value, document, columnar), consistency requirements, scalability needs, and the specific workload characteristics of the application. Understanding the principles behind different distributed database technologies is crucial for effective data management in distributed systems.

Design Principles for Distributed Systems

Effective design is paramount when building robust, scalable, and maintainable distributed systems. Adhering to sound design principles helps manage complexity and achieve desired system characteristics. This section explores key architectural approaches and design philosophies that guide the development of modern distributed systems.

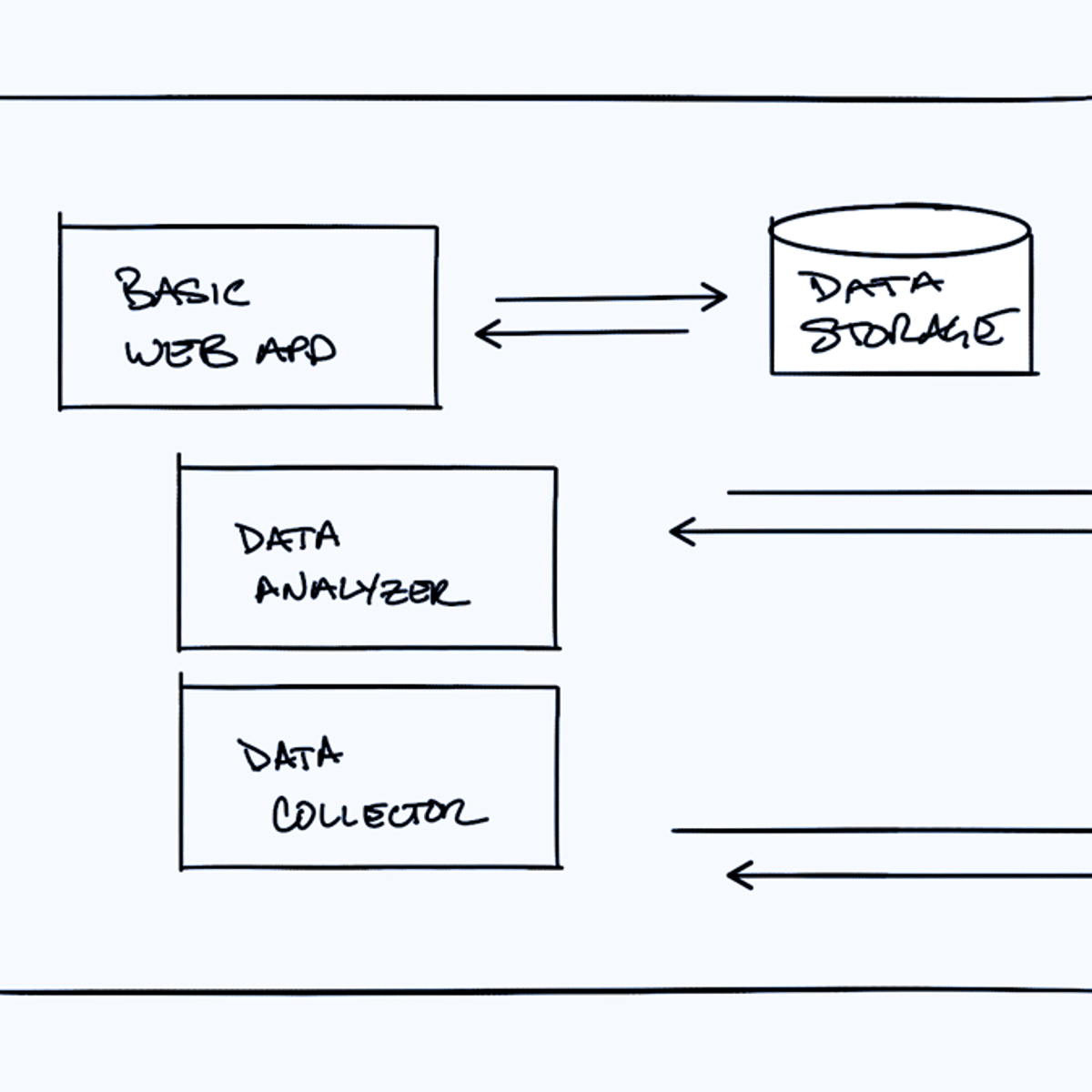

Microservices architecture

Microservices architecture is an architectural style that structures an application as a collection of small, autonomous services, modeled around a business domain. Each microservice is self-contained and can be developed, deployed, and scaled independently. This is a significant departure from traditional monolithic architectures, where the entire application is built as a single, large unit.

The primary advantage of microservices is improved agility and scalability. Because services are independent, teams can develop and deploy them separately, leading to faster release cycles. Scaling can also be more granular; only the services that require more resources need to be scaled, rather than scaling the entire application. Fault isolation is another benefit: if one microservice fails, it doesn't necessarily bring down the entire application, assuming proper fault tolerance mechanisms are in place.

However, microservices also introduce their own challenges. Managing a larger number of services increases operational complexity. Inter-service communication adds network latency and requires robust handling of timeouts, retries, and partial failures. Testing and debugging a distributed system composed of many services can be more difficult than with a monolith. Despite these challenges, the microservices approach has become a popular choice for building complex, scalable applications, particularly in cloud environments.

Event-driven design

Event-driven architecture (EDA) is a design paradigm that promotes the production, detection, consumption of, and reaction to events. An "event" can be defined as a significant change in state. For example, when a customer places an order on an e-commerce website, an "order placed" event is generated. Other services can then subscribe to this event and react accordingly, such as an inventory service updating stock levels or a notification service sending a confirmation email.

In an event-driven system, services communicate asynchronously through events. This loose coupling between services enhances scalability and resilience. Producers of events don't need to know which services consume them, and consumers don't need to know where the events originated. This allows services to be added, removed, or modified independently without affecting other parts of the system. Message brokers, like Apache Kafka, often play a central role in EDA, acting as the backbone for event transmission and storage.
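The order example above can be sketched with an in-process event bus standing in for a broker. This is a simplification: delivery here is synchronous and in memory, whereas a broker such as Kafka would persist events and deliver them asynchronously. `EventBus` and the handler names are hypothetical:

```python
from collections import defaultdict


class EventBus:
    """In-process stand-in for a broker: producers publish, subscribers react."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._handlers[event_type].append(handler)

    def publish(self, event_type, payload):
        # The producer does not know which services (if any) consume the event.
        for handler in self._handlers[event_type]:
            handler(payload)


stock = {"sku-1": 5}
outbox = []


def update_inventory(order):       # inventory service reacts to the event
    stock[order["sku"]] -= order["qty"]


def send_confirmation(order):      # notification service reacts independently
    outbox.append(f"Order {order['id']} confirmed")


bus = EventBus()
bus.subscribe("order_placed", update_inventory)
bus.subscribe("order_placed", send_confirmation)
bus.publish("order_placed", {"id": 1001, "sku": "sku-1", "qty": 2})
print(stock, outbox)   # {'sku-1': 3} ['Order 1001 confirmed']
```

Adding, say, an analytics service would mean one more `subscribe` call; the code that publishes `order_placed` would not change.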

Event-driven design is well-suited for applications that need to react to real-time changes, handle high volumes of asynchronous operations, or integrate disparate systems. It can lead to more responsive and adaptable applications. However, it also introduces challenges such as ensuring event-ordering guarantees if required, managing event schemas, and debugging complex event flows.

Decentralized vs. centralized systems

The distinction between decentralized and centralized systems is fundamental to understanding distributed computing. A centralized system relies on a single, central component or authority to manage and coordinate operations. For example, a traditional client-server application where a single server handles all requests and data storage is a centralized system. While simpler to design and manage initially, centralized systems can suffer from performance bottlenecks, a single point of failure (if the central component goes down, the entire system fails), and scalability limitations.

In contrast, a decentralized system distributes control and decision-making away from a central point. Components in a decentralized system operate more autonomously and make local decisions. Many distributed systems inherently incorporate decentralized elements. For instance, in a peer-to-peer network, there is no central server; nodes communicate directly with each other. Blockchain technology is a prominent example of a decentralized system, where a distributed ledger is maintained by a network of computers without a central authority.

Decentralized systems can offer greater fault tolerance (no single point of failure), improved scalability (as workload can be distributed more effectively), and potentially enhanced resilience against censorship or control by a single entity. However, they also introduce challenges in areas like achieving consensus among distributed components, ensuring data consistency across the network, and managing security in an environment without a central authority. The choice between a centralized, decentralized, or hybrid approach depends on the specific goals and constraints of the system being built.

Challenges and Solutions

While distributed systems offer significant advantages, they also present unique and complex challenges. Successfully building and operating these systems requires a deep understanding of these potential pitfalls and the strategies to mitigate them. This section explores some of the common hurdles encountered in distributed environments and approaches to overcome them.

Network latency and partition handling

Network communication is fundamental to distributed systems, but it's also a primary source of challenges. Network latency, the delay in data transmission between nodes, is unavoidable. Even on high-speed networks, the physical distance between nodes and the overhead of network protocols contribute to latency. High latency can degrade application performance and responsiveness, especially for operations that require communication across multiple nodes. Designing systems to minimize the impact of latency, for example, by reducing the number of cross-node calls or by using asynchronous communication patterns, is crucial.
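The benefit of asynchronous communication can be seen with simulated latency. In the sketch below, `asyncio.sleep` stands in for three independent remote calls of about 50 ms each (the service names and latencies are made up); issuing them concurrently makes total latency track the slowest call rather than the sum:

```python
import asyncio
import time


async def call_service(name, latency_s):
    """Stand-in for a remote call; the sleep simulates network round-trip time."""
    await asyncio.sleep(latency_s)
    return f"{name}: ok"


SERVICES = [("inventory", 0.05), ("pricing", 0.05), ("reviews", 0.05)]


async def sequential():
    # Each call waits for the previous one: latencies add up.
    return [await call_service(name, lat) for name, lat in SERVICES]


async def concurrent():
    # Independent calls in flight together: latency is roughly the slowest call.
    return await asyncio.gather(*(call_service(name, lat) for name, lat in SERVICES))


def timed(coro_fn):
    start = time.perf_counter()
    results = asyncio.run(coro_fn())
    return results, time.perf_counter() - start


seq_results, seq_time = timed(sequential)
con_results, con_time = timed(concurrent)
print(f"sequential: {seq_time:.2f}s, concurrent: {con_time:.2f}s")
```

Concurrency only hides latency for calls that do not depend on each other; a chain of dependent calls still pays the full sum, which is why reducing the number of cross-node calls matters too.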

Network partitions occur when communication between different parts of the distributed system is disrupted, effectively splitting the system into two or more isolated sub-networks. Nodes within a partition can communicate with each other, but not with nodes in other partitions. Handling network partitions gracefully is a critical aspect of fault tolerance. As discussed with the CAP theorem, during a partition, a system often has to choose between maintaining consistency (potentially by becoming unavailable to some users) or maintaining availability (potentially by allowing operations that could lead to data inconsistencies once the partition heals). Strategies for dealing with partitions include using consensus algorithms to ensure agreement, designing systems to detect and recover from partitions, and implementing mechanisms to resolve conflicting updates that may have occurred during a partition.

Both latency and partitions are inherent properties of networked environments. Robust distributed systems must be designed with the explicit acknowledgment that the network is unreliable and that delays and disruptions will occur.

Data consistency trade-offs

Maintaining data consistency across multiple nodes in a distributed system is a persistent challenge. When data is replicated or distributed, ensuring that all reads access the most up-to-date version of the data, and that concurrent updates are handled correctly, becomes complex. As previously discussed in the context of the CAP theorem and consistency models, there's often a trade-off between the strength of consistency guarantees and other desirable system properties like availability, performance (latency), and partition tolerance.

Strong consistency models, such as linearizability, provide the simplest programming model because they ensure that all operations appear to occur atomically and in a single, well-defined order. However, achieving strong consistency in a distributed system often requires significant coordination between nodes, which can increase latency and reduce availability, especially in the face of network partitions. For applications like financial transactions, where correctness is paramount, strong consistency is often a requirement.

Many large-scale systems, particularly those prioritizing high availability and low latency (like social media feeds or product catalogs), opt for weaker consistency models, such as eventual consistency. With eventual consistency, if no new updates are made, all replicas will eventually converge to the same state. However, during the convergence period, different nodes might return different versions of the data. This approach can improve performance and availability but requires careful application design to handle potential data staleness and resolve conflicts that may arise from concurrent updates. Developers must carefully consider the specific requirements of their application to choose the appropriate consistency model and understand the implications of that choice.

Synchronization complexities

Synchronization in distributed systems refers to the coordination of actions or agreement on data values among multiple processes or nodes. Achieving synchronization is challenging due to the lack of a global clock, unpredictable network delays, and the possibility of concurrent operations and component failures.

One common synchronization problem is clock synchronization. Each computer in a distributed system has its own physical clock, and these clocks tend to drift over time. For many distributed algorithms and applications, having a consistent notion of time across nodes is important. Protocols such as the Network Time Protocol (NTP) are used to synchronize clocks, but perfect synchronization is impossible. The remaining difference between clocks, known as clock skew, can lead to issues in ordering events or coordinating time-sensitive tasks.
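Each NTP exchange records four timestamps and estimates the clock offset and round-trip delay from them, as sketched below (the function name is hypothetical; the full protocol also filters samples and combines several servers). The estimate assumes the outbound and return network paths take equal time, which is one reason perfect synchronization is impossible:

```python
def ntp_offset_and_delay(t1, t2, t3, t4):
    """Estimate clock offset and round-trip delay from one request/response exchange.

    t1: client sends the request   (client clock)
    t2: server receives it         (server clock)
    t3: server sends the response  (server clock)
    t4: client receives it         (client clock)
    """
    offset = ((t2 - t1) + (t3 - t4)) / 2   # how far the server clock is ahead of the client
    delay = (t4 - t1) - (t3 - t2)          # network round trip, excluding server processing
    return offset, delay


# Server clock 5 s ahead, 0.1 s each way, 0.02 s of server processing.
offset, delay = ntp_offset_and_delay(100.00, 105.10, 105.12, 100.22)
print(round(offset, 3), round(delay, 3))   # 5.0 0.2

# Same true offset, but 0.15 s out and 0.05 s back (asymmetric paths).
offset, delay = ntp_offset_and_delay(100.00, 105.15, 105.17, 100.22)
print(round(offset, 3), round(delay, 3))   # 5.05 0.2
```

In the second exchange the true offset is still 5 seconds, but the 0.1-second path asymmetry shows up as a 0.05-second error that the client cannot detect from these four timestamps alone.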

Another major challenge is achieving consensus, where a group of processes must agree on a single value or coordinate an action. This is fundamental to many distributed operations, such as electing a leader, committing a transaction across multiple nodes, or maintaining a consistent replicated state. Consensus algorithms like Paxos and Raft are designed to solve this problem in a fault-tolerant manner, but they can be complex to implement and understand. Furthermore, managing access to shared resources and preventing issues like deadlocks (where processes are stuck waiting for each other) requires careful synchronization mechanisms, adding another layer of complexity to distributed system design.
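Paxos and Raft both build on majority quorums: a Raft leader needs votes from a majority of the cluster, for example, and a value counts as decided once a majority has accepted it. The sketch below does not implement either algorithm; it only checks, with hypothetical helper names, the arithmetic that makes majorities work:

```python
from itertools import combinations


def majority(n):
    """Smallest number of nodes forming a strict majority of an n-node cluster."""
    return n // 2 + 1


def majorities_always_overlap(n):
    """Exhaustively check that any two minimum-size majorities share a node.

    Larger majorities are supersets of these, so they overlap as well.
    """
    quorums = [set(c) for c in combinations(range(n), majority(n))]
    return all(a & b for a in quorums for b in quorums)


def can_make_progress(n, failed):
    """A majority-based protocol can still decide while a majority is alive."""
    return n - failed >= majority(n)


print(majority(5))                     # 3
print(majorities_always_overlap(5))    # True
print(can_make_progress(5, failed=2))  # True
print(can_make_progress(5, failed=3))  # False
```

Because any two majorities share a node, two conflicting decisions cannot both gather a quorum, and a five-node cluster can keep deciding with up to two nodes down.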

Distributed Systems in Modern Industries

The principles and technologies of distributed systems are not just theoretical constructs; they are the engines powering critical operations across a multitude of industries. From managing global financial transactions to enabling real-time e-commerce and advancing healthcare, distributed systems provide the scalability, reliability, and performance necessary for modern business. This section highlights how these systems are applied in various key sectors.

Financial systems and blockchain

The financial industry relies heavily on distributed systems to manage the immense volume and complexity of global transactions, ensure data integrity, and provide high availability. Core banking systems, payment processing networks, stock exchanges, and fraud detection platforms are all built upon distributed architectures. These systems must handle high throughput, provide low latency for trading operations, and guarantee strong consistency for financial records to prevent errors like double-spending or incorrect balances.

Blockchain technology, which underpins cryptocurrencies like Bitcoin and Ethereum, is a particularly innovative application of distributed systems in finance. A blockchain is essentially a decentralized, distributed ledger that records transactions across many computers. This decentralization, combined with cryptographic principles, aims to provide security, transparency, and immutability of records without relying on a central authority. While blockchain offers exciting possibilities for areas like cross-border payments, smart contracts, and trade finance, it also brings its own set of distributed systems challenges, particularly around scalability, consensus mechanisms, and energy consumption. The ongoing development in this space seeks to address these challenges to unlock the full potential of distributed ledger technologies in the financial sector and beyond.
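The immutability claim rests on hash linking: each block stores the hash of the previous block, so altering any past record breaks every later link. A minimal sketch of just that property follows, using SHA-256 from Python's standard library; it deliberately omits the consensus mechanism, signatures, and network distribution that a real blockchain needs, and the function names are hypothetical:

```python
import hashlib
import json


def block_hash(block):
    # Hash a canonical JSON encoding so identical content always hashes identically.
    encoded = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_block(chain, transactions):
    prev = block_hash(chain[-1]) if chain else "0" * 64   # genesis block has no parent
    chain.append({"index": len(chain), "prev_hash": prev, "transactions": transactions})


def verify(chain):
    """Every block must reference the hash of the block before it."""
    return all(chain[i]["prev_hash"] == block_hash(chain[i - 1])
               for i in range(1, len(chain)))


ledger = []
append_block(ledger, [{"from": "alice", "to": "bob", "amount": 10}])
append_block(ledger, [{"from": "bob", "to": "carol", "amount": 4}])
append_block(ledger, [{"from": "carol", "to": "dave", "amount": 1}])
print(verify(ledger))                           # True
ledger[0]["transactions"][0]["amount"] = 1000   # tamper with history
print(verify(ledger))                           # False
```

On its own, a hash chain only makes tampering detectable, since someone could recompute every later hash. It is consensus among many independent nodes that makes rewriting accepted history impractical.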

Healthcare data interoperability

Distributed systems are playing an increasingly vital role in the healthcare sector, particularly in addressing the challenge of data interoperability. Healthcare data, including patient records, medical imaging, and research data, is often stored in disparate systems across different hospitals, clinics, and research institutions. Making this data securely accessible and shareable among authorized providers and researchers is crucial for improving patient care, advancing medical research, and enhancing public health initiatives.

Distributed architectures can facilitate the secure exchange and integration of healthcare information. For example, health information exchange (HIE) platforms often leverage distributed system principles to allow different electronic health record (EHR) systems to communicate and share patient data. Cloud-based distributed systems offer scalable storage and processing capabilities for large medical datasets, enabling advanced analytics and machine learning applications for diagnostics and treatment planning. Furthermore, emerging technologies like federated learning, a distributed machine learning approach, allow models to be trained on data from multiple institutions without the need to centralize sensitive patient data, thereby addressing privacy concerns.
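Federated averaging is one common way to realize federated learning. The toy sketch below assumes a one-parameter linear model and three hypothetical institutions. Each site runs a few gradient steps on its own records and sends back only the updated parameter. The coordinator then averages those parameters, weighted by each site's sample count. Production systems typically add secure aggregation, further privacy protections, and far larger models.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs held privately by one institution


def local_update(w: float, data: Dataset, lr: float = 0.01, epochs: int = 5) -> float:
    """Run a few epochs of gradient descent on a 1-D linear model y ~ w * x, locally."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(w: float, clients: List[Dataset]) -> float:
    """One FedAvg-style round: clients train locally, the server averages by sample count."""
    total = sum(len(d) for d in clients)
    # Only model parameters leave each site; the raw (x, y) records never do.
    return sum(local_update(w, d) * len(d) for d in clients) / total


# Hypothetical data: three sites whose records all follow y = 3x.
sites = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
]
w = 0.0
for _ in range(50):
    w = federated_round(w, sites)
print(round(w, 3))  # 3.0
```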

However, the healthcare industry also faces significant challenges in implementing distributed systems, including strict regulatory compliance (e.g., HIPAA in the United States), ensuring data security and patient privacy, and overcoming the inertia of legacy systems. Despite these hurdles, the potential of distributed systems to transform healthcare through better data accessibility and collaboration is immense.

E-commerce and real-time inventory management

The e-commerce industry is a prime example of a sector built on and thriving because of distributed systems. Online retail platforms must handle massive, fluctuating user traffic (especially during peak shopping seasons), manage vast product catalogs, process millions of transactions securely, and provide personalized user experiences—all in real-time. Distributed architectures, including microservices, cloud computing, and distributed databases, are essential for achieving the required scalability, availability, and performance.

Real-time inventory management is a critical function in e-commerce that heavily relies on distributed systems. Accurately tracking inventory levels across potentially multiple warehouses and distribution centers, and reflecting this information instantly to online shoppers, is vital to prevent overselling and ensure customer satisfaction. Distributed databases and event-driven architectures enable inventory updates to be processed and propagated quickly. When a customer adds an item to their cart or completes a purchase, events are generated that trigger updates in the inventory system, ensuring that stock levels are consistently and accurately reflected across the platform.
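The following is a minimal sketch of this event-driven pattern. It assumes a single consumer that applies the events for a given product in order, as it might if the message broker were partitioned by product ID. The `Event` type, the `"purchase"` and `"restock"` event kinds, and the in-memory `deque` standing in for the broker are all hypothetical. Two details matter in practice. First, each event carries an id, so redelivered duplicates are ignored. Second, the stock check and the decrement happen together, so a purchase that would oversell is rejected.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    event_id: str  # unique id so redelivered events can be ignored (idempotency)
    kind: str      # "purchase" or "restock" (hypothetical event types)
    sku: str
    qty: int


class InventoryService:
    def __init__(self, stock: dict) -> None:
        self.stock = dict(stock)
        self.seen = set()      # ids of events already applied
        self.rejected = []     # purchases that would have oversold

    def handle(self, event: Event) -> None:
        if event.event_id in self.seen:
            return  # duplicate delivery: already applied
        self.seen.add(event.event_id)
        available = self.stock.get(event.sku, 0)
        if event.kind == "restock":
            self.stock[event.sku] = available + event.qty
        elif event.kind == "purchase":
            if event.qty > available:
                self.rejected.append(event.event_id)  # refuse instead of overselling
            else:
                self.stock[event.sku] = available - event.qty


# Events arrive on a queue (standing in for a message broker) and are applied in order.
queue = deque([
    Event("e1", "purchase", "mug", 2),
    Event("e2", "purchase", "mug", 2),
    Event("e1", "purchase", "mug", 2),  # redelivered duplicate
    Event("e3", "restock", "mug", 5),
])
service = InventoryService({"mug": 3})
while queue:
    service.handle(queue.popleft())
print(service.stock, service.rejected)  # {'mug': 6} ['e2']
```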

Content delivery networks (CDNs), which are themselves large-scale distributed systems, are used to cache product images and other static content closer to users, reducing latency and improving page load times. Recommendation engines, fraud detection systems, and payment gateways within e-commerce platforms are also often implemented as distributed services. The ability to scale these components independently and ensure high availability is fundamental to the success of modern online retail.
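The core behavior of a CDN edge node can be approximated by a cache with a time-to-live (TTL). The node serves a stored copy while it is fresh and goes back to the origin server once it expires. The Python sketch below is a toy, single-node illustration under that assumption. `EdgeCache` and its parameters are hypothetical. Real CDNs also honor HTTP caching headers, handle invalidation, and route users to a nearby node. The injectable `clock` makes expiry easy to demonstrate without waiting.

```python
import time
from typing import Callable, Dict, Tuple


class EdgeCache:
    """Toy TTL cache standing in for one CDN edge node (hypothetical, not a real CDN API)."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.fetch_from_origin = fetch_from_origin
        self.ttl = ttl_seconds
        self.clock = clock
        self.entries: Dict[str, Tuple[bytes, float]] = {}  # path -> (body, expires_at)
        self.origin_hits = 0

    def get(self, path: str) -> bytes:
        now = self.clock()
        cached = self.entries.get(path)
        if cached is not None and cached[1] > now:
            return cached[0]  # fresh copy served from the edge
        self.origin_hits += 1  # miss or expired: fetch from the origin
        body = self.fetch_from_origin(path)
        self.entries[path] = (body, now + self.ttl)
        return body


fake_now = [0.0]
cache = EdgeCache(lambda p: f"image bytes for {p}".encode(), ttl_seconds=60,
                  clock=lambda: fake_now[0])
cache.get("/img/mug.jpg")  # miss: fetched from origin
cache.get("/img/mug.jpg")  # hit: served from the edge
fake_now[0] = 61.0
cache.get("/img/mug.jpg")  # expired: fetched again
print(cache.origin_hits)  # 2
```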

Security in Distributed Systems

Security is a paramount concern in any computing system, but it takes on unique complexities in distributed environments. With components spread across a network, potentially spanning different geographical locations and administrative domains, the attack surface increases, and traditional security perimeters become less effective. Ensuring the confidentiality, integrity, and availability of data and services in distributed systems requires a multi-layered approach.

Encryption and secure communication

Protecting data both in transit and at rest is fundamental to distributed system security, and encryption is the primary mechanism for achieving it. Data transmitted over the network between nodes in a distributed system should always be encrypted to prevent eavesdropping. Encrypted channels also defend against man-in-the-middle attacks, provided each party verifies the other's certificate. Transport Layer Security (TLS), the successor to the now-deprecated Secure Sockets Layer (SSL), is the protocol most commonly used to secure these communication channels.
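The following sketch shows what "secure by default" looks like on the client side. It uses Python's standard `ssl` module to build a context that verifies the server's certificate and hostname and refuses protocol versions older than TLS 1.2. The TLS 1.2 floor is an assumed policy choice, not a universal requirement.

```python
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    # create_default_context() loads the system's trusted CA certificates and, for
    # SERVER_AUTH, enables certificate verification and hostname checking.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Refuse legacy protocol versions (SSL 3.0, TLS 1.0, TLS 1.1).
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = make_client_tls_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)  # True True
```

To use the context, a client wraps an ordinary socket with `ctx.wrap_socket(sock, server_hostname=host)`. A client that must present its own certificate for mutual TLS would also call `ctx.load_cert_chain(certfile, keyfile)`.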

Data stored on disk within the distributed system (data at rest) should also be encrypted. This ensures that even if an attacker gains physical access to storage media or compromises a node, the data remains unreadable without the appropriate decryption keys. Managing encryption keys securely then becomes a critical challenge in itself, often requiring dedicated key management systems.

Beyond basic encryption, ensuring secure communication involves verifying the identity of communicating parties (authentication) and ensuring that messages have not been tampered with during transit (integrity). Digital signatures and message authentication codes (MACs) are cryptographic techniques used to provide these guarantees. The design of secure communication protocols must account for the specific threats present in a distributed environment.
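A message authentication code can be sketched with Python's standard `hmac` module. The example below assumes that sender and receiver share a secret key; the key value and the `sign`/`verify` helper names are hypothetical. Any change to the message produces a different tag, so the receiver detects the tampering.

```python
import hashlib
import hmac

SHARED_KEY = b"example-key-distributed-out-of-band"  # hypothetical; keep real keys in a key manager


def sign(message: bytes, key: bytes = SHARED_KEY) -> str:
    """Return a hex HMAC-SHA256 tag over the message."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(message: bytes, tag: str, key: bytes = SHARED_KEY) -> bool:
    # compare_digest is designed to resist timing attacks on the comparison.
    return hmac.compare_digest(sign(message, key), tag)


msg = b'{"op": "transfer", "amount": 100}'
tag = sign(msg)
print(verify(msg, tag))                                   # True
print(verify(b'{"op": "transfer", "amount": 900}', tag))  # False: message was altered
```

Because both parties hold the same key, an HMAC proves that the message came from a key holder and was not altered, but not which key holder sent it. Digital signatures, which use a private/public key pair, provide that stronger guarantee.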

Authentication/authorization frameworks

Authentication is the process of verifying the identity of a user, service, or device attempting to access the distributed system. In a distributed environment, where components are constantly interacting, robust authentication mechanisms are crucial to prevent unauthorized access. This can involve various techniques, from traditional username/password combinations (though often insufficient on their own for system components) to more secure methods like digital certificates, API keys, and token-based authentication (e.g., OAuth 2.0, OpenID Connect).

Once a user or service is authenticated, authorization determines what actions they are permitted to perform and what resources they are allowed to access. Authorization frameworks implement policies that define these permissions. Role-Based Access Control (RBAC) is a common model where permissions are assigned to roles, and users or services are assigned to roles. Attribute-Based Access Control (ABAC) offers more fine-grained control by considering various attributes of the user, resource, and environment.
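The difference between the two models is easiest to see side by side. In this sketch, RBAC is a lookup from roles to permissions, while ABAC evaluates a predicate over attributes of the subject, resource, and environment. The role names, permission strings, and refund policy are invented for illustration.

```python
from dataclasses import dataclass, field

# RBAC: permissions attach to roles; principals are assigned roles.
ROLE_PERMISSIONS = {
    "reader": {"orders:read"},
    "operator": {"orders:read", "orders:refund"},
}


def rbac_allows(roles: set, permission: str) -> bool:
    return any(permission in ROLE_PERMISSIONS.get(r, set()) for r in roles)


# ABAC: a policy is a predicate over attributes of subject, resource, and environment.
@dataclass
class Request:
    subject: dict
    resource: dict
    environment: dict = field(default_factory=dict)


def abac_allows(req: Request) -> bool:
    # Hypothetical policy: refunds only by operators in the same region as the order,
    # and only between 09:00 and 17:00.
    return (
        "operator" in req.subject.get("roles", set())
        and req.subject.get("region") == req.resource.get("region")
        and 9 <= req.environment.get("hour", -1) < 17
    )


print(rbac_allows({"operator"}, "orders:refund"))  # True
print(abac_allows(Request({"roles": {"operator"}, "region": "eu"},
                          {"region": "us"}, {"hour": 10})))  # False: region mismatch
```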

Implementing authentication and authorization in a distributed system requires careful consideration of how identities and policies are managed and enforced across multiple, potentially heterogeneous components. Centralized identity providers and policy decision points can simplify management but may also become bottlenecks or single points of failure if not designed for high availability.

Threats specific to distributed architectures

Distributed architectures, while offering benefits like scalability and fault tolerance, also introduce specific security vulnerabilities. The increased number of interconnected components and network communication pathways expands the potential attack surface. Attackers can target individual nodes, network links, or the communication protocols themselves.

One common threat is the Denial of Service (DoS) or Distributed Denial of Service (DDoS) attack, in which attackers attempt to overwhelm the system with a flood of traffic or requests, rendering it unavailable to legitimate users. Because a DDoS attack originates from many machines at once, its traffic is harder to filter out than traffic from a single source. Another concern is ensuring the integrity and consistency of data when it is replicated or sharded across multiple nodes; an attacker who compromises one node might attempt to corrupt data or introduce inconsistencies.

Insider threats, where a legitimate user or component with some level of access abuses their privileges, can also be more complex to detect and mitigate in a distributed environment. The complexity of managing and monitoring a distributed system can sometimes lead to misconfigurations or overlooked vulnerabilities that attackers can exploit. Therefore, a defense-in-depth strategy, incorporating network security, host-based security, secure coding practices, and continuous monitoring, is essential. Adopting a "zero trust" security model, where no user or component is trusted by default, regardless of whether they are inside or outside the network perimeter, is increasingly important for securing distributed systems.

Formal Education Pathways

For individuals seeking a structured and in-depth understanding of distributed systems, formal education offers a robust foundation. Universities and academic institutions provide comprehensive programs that cover the theoretical underpinnings, design principles, and practical implementation aspects of these complex systems. This section outlines typical academic routes for aspiring distributed systems experts.

Relevant undergraduate/graduate degrees

A bachelor's degree in Computer Science or Software Engineering typically provides the foundational knowledge required to specialize in distributed systems. Core coursework in these programs often includes data structures, algorithms, operating systems, computer networks, and database systems, all of which are highly relevant. Some universities may offer elective courses specifically focused on distributed computing or cloud computing at the undergraduate level.

For those seeking deeper expertise and to engage in cutting-edge research or advanced development roles, a graduate degree (Master's or Ph.D.) is often beneficial. Master's programs in Computer Science frequently offer specializations or advanced courses in distributed systems, cloud computing, big data technologies, and related areas. These programs often involve more project-based learning and a deeper dive into theoretical concepts. A Ph.D. is typically pursued by those interested in academic research, pushing the boundaries of knowledge in distributed systems, or taking on highly specialized research and development roles in industry.

When considering formal education, look for programs that not only cover theoretical aspects but also provide opportunities for hands-on experience with relevant technologies and tools. Many universities have strong industry connections, offering internships or collaborative projects that can provide valuable real-world exposure. Exploring Computer Science programs on OpenCourser can help identify institutions with strong curricula in areas related to distributed systems.

PhD research areas (e.g., consensus algorithms)

A Ph.D. in Computer Science with a research focus on distributed systems opens doors to contributing to the fundamental advancements in the field. Research in this area is vibrant and covers a wide array of challenging problems. One significant area of research is consensus algorithms. These algorithms, such as Paxos and Raft, enable a set of distributed processes to agree on a value, which is crucial for tasks like leader election, distributed transactions, and maintaining replicated state machines. Research continues to explore more efficient, scalable, and understandable consensus protocols.
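To give a flavor of how such protocols reach agreement, here is a simplified sketch of one rule from Raft: how a node decides whether to grant its vote during leader election. A node votes at most once per term. It votes only for a candidate whose log is at least as up-to-date as its own, comparing the term of the last entry first and then log length. A candidate becomes leader only with votes from a strict majority. Persistence of state, election timeouts, and log replication are omitted, and the class and field names are illustrative.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VoteRequest:
    term: int
    candidate_id: str
    last_log_index: int
    last_log_term: int


@dataclass
class RaftNode:
    node_id: str
    current_term: int = 0
    voted_for: Optional[str] = None
    last_log_index: int = 0
    last_log_term: int = 0

    def handle_request_vote(self, req: VoteRequest) -> bool:
        if req.term < self.current_term:
            return False  # candidate is from a stale term
        if req.term > self.current_term:
            # A newer term clears this node's vote (a real node also steps down to follower).
            self.current_term = req.term
            self.voted_for = None
        # Up-to-date check: compare last log term first, then log length (tuple ordering).
        log_ok = (req.last_log_term, req.last_log_index) >= (self.last_log_term, self.last_log_index)
        if self.voted_for in (None, req.candidate_id) and log_ok:
            self.voted_for = req.candidate_id
            return True
        return False


def wins_election(votes_granted: int, cluster_size: int) -> bool:
    # Strict majority: any two majorities share at least one node, so two leaders cannot win the same term.
    return votes_granted > cluster_size // 2


voter = RaftNode("n2", current_term=3, last_log_index=10, last_log_term=3)
print(voter.handle_request_vote(VoteRequest(4, "n1", 10, 3)))  # True
print(voter.handle_request_vote(VoteRequest(4, "n3", 12, 3)))  # False: already voted in term 4
print(wins_election(3, 5), wins_election(2, 5))               # True False
```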

Other active research areas include:

- Scalability and Performance: Developing new architectures and techniques to build systems that can handle ever-increasing loads and data volumes efficiently.

- Fault Tolerance and Reliability: Designing systems that can gracefully handle various types of failures with minimal disruption.

- Distributed Data Management: Researching novel distributed database designs, data consistency models, and techniques for managing large-scale, geographically distributed data.

- Security and Privacy in Distributed Systems: Addressing the unique security challenges of distributed environments, including secure multi-party computation, privacy-preserving data analysis, and resilient architectures.

- Edge Computing: Exploring architectures and algorithms for processing data closer to where it is generated, reducing latency and bandwidth consumption.

- Serverless Computing: Investigating new models and platforms for event-driven, function-as-a-service architectures.

Ph.D. candidates often work closely with faculty advisors on specific research projects, publish their findings in academic conferences and journals, and contribute to the broader scientific community.

University labs and research groups

Many universities around the world host specialized research labs and groups dedicated to distributed systems and related fields like cloud computing, networking, and data science. These labs are at the forefront of innovation, conducting groundbreaking research and training the next generation of experts. Examples include research groups at institutions renowned for their computer science programs, such as MIT, Stanford University, University of California, Berkeley, and Carnegie Mellon University, among many others globally.

These research labs often collaborate with industry partners, providing students and researchers with opportunities to work on real-world problems and cutting-edge technologies. They typically focus on a range of topics, from theoretical foundations to practical system building. Being part of such a lab as a graduate student offers access to state-of-the-art resources, mentorship from leading academics, and a vibrant intellectual community.

Prospective Ph.D. students interested in distributed systems should research the faculty and ongoing projects at various university labs to find a good match for their interests. The work coming out of these labs often shapes the future direction of the field, influencing both academia and industry practices. Information about specific labs and their research can usually be found on university department websites.

Online Learning and Self-Study

For those looking to enter the field of distributed systems or upskill without pursuing a formal degree, online learning and self-study offer flexible and accessible pathways. A wealth of resources is available, from comprehensive courses on MOOC platforms to open-source projects that provide invaluable hands-on experience. This approach allows learners to tailor their education to their specific interests and career goals at their own pace.

Online courses are highly suitable for building a strong foundation in distributed systems. They can introduce core concepts, common architectural patterns, and key technologies in a structured manner. Many platforms like Coursera, edX, and Udemy offer courses taught by university professors or industry experts, covering topics from the basics of distributed computing to specialized areas like cloud architecture, big data, and microservices. OpenCourser makes it easy to search through thousands of such courses, compare syllabi, and read reviews to find the perfect fit for your learning journey.

MOOC platforms and certifications

Massive Open Online Course (MOOC) platforms have democratized access to high-quality education in distributed systems. Platforms like Coursera, edX, and Udacity host a wide array of courses and specializations related to distributed computing, cloud technologies, and big data, along with longer credentials such as edX's MicroMasters programs. These courses often include video lectures, readings, quizzes, and programming assignments to reinforce learning. Many also offer forums where learners can interact with peers and teaching assistants.

Many MOOCs offer the option to earn a certificate upon completion, which can be a valuable addition to a resume or LinkedIn profile. Some platforms also partner with industry leaders like Google, Amazon Web Services (AWS), and Microsoft to offer professional certifications that validate skills in specific cloud platforms or technologies. These certifications can be particularly beneficial for career changers or professionals looking to demonstrate proficiency in in-demand skills. While the value of certifications versus hands-on experience is often debated, a relevant certification can certainly help in getting noticed by recruiters, especially for entry-level roles.

When choosing online courses, consider factors like the reputation of the institution or instructor, the course content and its relevance to your goals, learner reviews, and the availability of hands-on labs or projects. OpenCourser's Learner's Guide offers articles on topics like how to earn a certificate from an online course and how to add it to your professional profiles, which can be very helpful in navigating the online learning landscape.

Open-source projects for hands-on experience

Contributing to or experimenting with open-source projects is an excellent way to gain practical, hands-on experience in distributed systems. Many of the foundational technologies used in distributed computing, such as Apache Kafka, Kubernetes, Apache Cassandra, and the Hadoop ecosystem, are open-source. This means their codebase is publicly available, and there are often active communities around them.

Getting involved can range from studying the source code to understand how these systems are built, to fixing bugs, adding new features, or improving documentation. This not only deepens your technical understanding but also allows you to collaborate with experienced developers and build a portfolio of work. Platforms like GitHub host countless open-source projects related to distributed systems. You can start by looking for projects that align with your interests and skill level, and often there are "good first issue" tags to help newcomers get started.

Even if you don't contribute directly, you can download, install, and experiment with these systems on your own. Setting up a small Kubernetes cluster, deploying a Kafka instance, or building an application that uses a distributed database like Cassandra can provide invaluable learning experiences. This hands-on work complements theoretical knowledge gained from courses and books, solidifying your understanding and building practical problem-solving skills.

Hybrid learning for skill validation

A hybrid learning approach, combining the structured learning of online courses with the practical application of hands-on projects and potentially formal certifications, can be a very effective way to build and validate skills in distributed systems. This blended model allows learners to gain theoretical knowledge and then immediately apply it in a practical context, which reinforces understanding and builds confidence.

For example, one might take an online course on microservices architecture, then undertake a personal project to build a small application using microservices principles, perhaps deploying it on a cloud platform using Kubernetes. Following this, pursuing a certification in a relevant cloud technology or Kubernetes could further validate the acquired skills and make a candidate more attractive to employers.

Skill validation in a field as complex as distributed systems often comes from a combination of demonstrating conceptual understanding (e.g., through discussions or interviews) and showcasing practical abilities (e.g., through coding assignments, project portfolios, or contributions to open-source projects). Online platforms sometimes offer "capstone projects" or "nanodegrees" that aim to provide this kind of integrated learning and validation experience. Ultimately, the ability to articulate how you've solved real or simulated distributed systems problems is a powerful way to demonstrate your capabilities.

Many professionals find that supplementing their existing education or work experience with targeted online courses helps them stay current or pivot into new specializations. OpenCourser's "Activities" section on course pages often suggests projects or further learning that can help learners go beyond the course material and apply their knowledge.

Career Progression and Roles

A career in distributed systems offers a challenging and rewarding path with significant growth potential. Professionals in this field are responsible for designing, building, and maintaining the complex, large-scale systems that power much of the digital world. The demand for these skills is high across various industries, and the roles can evolve from entry-level engineering positions to specialized architectural roles and leadership positions.

If you are considering a career transition or are early in your career journey, know that the path to becoming a distributed systems expert requires dedication and continuous learning. The concepts can be demanding, but the impact you can make is substantial. Ground yourself in the fundamentals, be persistent in your learning, and seek out opportunities to apply your knowledge. Even if a top-tier distributed systems architect role seems distant now, every complex system built or challenging problem solved is a milestone achieved.

Entry-level roles (e.g., distributed systems engineer)

Entry-level roles in distributed systems often carry titles like Software Engineer (with a focus on backend or systems), Systems Development Engineer, or more specifically, Distributed Systems Engineer. In these roles, individuals typically work as part of a team to develop, test, deploy, and maintain components of larger distributed systems. Responsibilities might include writing code in languages like Java, Python, Go, or C++, working with distributed databases, implementing messaging systems, or contributing to services running on cloud platforms.

A strong foundation in computer science fundamentals, including data structures, algorithms, operating systems, and networking, is usually expected. Familiarity with core distributed systems concepts such as scalability, fault tolerance, and consistency is also important. Employers often look for candidates who have some practical experience, perhaps through internships, personal projects, or contributions to open-source software. Problem-solving skills and the ability to learn quickly are highly valued, as the field is constantly evolving.

For those starting out, focusing on building a solid understanding of the basics and gaining hands-on experience with common tools and technologies is key. Don't be discouraged if the learning curve seems steep; persistence and a passion for tackling complex problems will serve you well. Many companies offer mentorship programs and on-the-job training for entry-level engineers.

Mid-career specializations (e.g., cloud architect)

As professionals gain experience in distributed systems, they often develop specializations. One common mid-career path is that of a Cloud Architect. Cloud architects are responsible for designing and overseeing an organization's cloud computing strategy, including cloud adoption plans, cloud application design, and cloud management and monitoring. They need a deep understanding of various cloud services (e.g., from AWS, Azure, GCP), distributed system principles, networking, security, and cost optimization.

Other mid-career specializations can include:

- Big Data Engineer: Focusing on designing and building systems for collecting, storing, processing, and analyzing large volumes of data using technologies like Hadoop, Spark, and distributed NoSQL databases.

- Site Reliability Engineer (SRE): Blending software engineering and systems administration to ensure that large-scale systems are reliable, scalable, and efficient. SREs often focus on automation, monitoring, and incident response.

- Distributed Database Administrator/Specialist: Specializing in the design, deployment, and optimization of distributed database systems like Cassandra or MongoDB.

- Senior Distributed Systems Engineer: Taking on more complex design and development tasks, often leading projects and mentoring junior engineers.

These roles typically require several years of hands-on experience, a proven track record of working on complex distributed systems, and often a deeper expertise in specific technologies or problem domains. Continuous learning and staying abreast of new technologies are crucial for success in these evolving roles.

Leadership roles in tech strategy

With extensive experience and a deep understanding of distributed systems and their business implications, professionals can advance into leadership roles that shape technology strategy. These roles often involve looking beyond individual systems to define the broader architectural vision and technological direction for an organization. Titles might include Principal Engineer, Staff Engineer, Engineering Manager, Director of Engineering, or Chief Technology Officer (CTO) in smaller organizations.

In these positions, individuals are often responsible for making high-level design choices, evaluating emerging technologies, setting technical standards, and guiding teams of engineers. They need strong technical expertise combined with excellent communication, leadership, and strategic thinking skills. They must be able to articulate complex technical concepts to both technical and non-technical audiences and align technology decisions with business goals.

Leadership roles in tech strategy often involve mentoring other engineers, fostering a culture of innovation, and ensuring that the organization's technology investments are sound and future-proof. They may also be involved in recruiting and building high-performing engineering teams. The path to such roles typically involves a proven track record of technical excellence, successful project delivery, and the ability to influence and inspire others.

While specific courses for these advanced leadership roles are less common, continuous learning in areas like technology management, strategy, and emerging technologies remains crucial.

Future Trends and Innovations

The field of distributed systems is constantly evolving, driven by new technological advancements and changing application demands. Staying aware of emerging trends is crucial for professionals and researchers alike, as these innovations will shape the next generation of distributed architectures and capabilities. This section looks at some of the key future directions in distributed systems.

Edge computing and IoT integration

Edge computing is a distributed computing paradigm that brings computation and data storage closer to the sources of data generation – typically devices at the "edge" of the network, such as IoT sensors, smartphones, or local servers. This contrasts with traditional cloud computing, where data is often sent to centralized data centers for processing. The primary drivers for edge computing are the need for lower latency, reduced bandwidth consumption, improved privacy, and autonomous operation in environments with intermittent connectivity.

The proliferation of Internet of Things (IoT) devices is a major catalyst for edge computing. Billions of connected devices are generating vast amounts of data, and processing all of this data in a centralized cloud can be inefficient or impractical. Edge computing allows for initial data processing, filtering, and analytics to happen locally, with only relevant summaries or insights sent to the cloud. This is particularly important for applications requiring real-time responses, such as autonomous vehicles, industrial automation, and remote healthcare monitoring. The integration of AI with edge computing (Edge AI) is another significant trend, enabling intelligent decision-making directly on edge devices.
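The "process locally, send summaries" pattern can be sketched in a few lines. Here a hypothetical edge device reduces a window of raw sensor readings to a single summary record and keeps raw values only for readings above an alert threshold. The field names, the threshold, and the sample data are assumptions for illustration.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional


@dataclass
class Summary:
    device_id: str
    count: int
    minimum: float
    maximum: float
    average: float
    alerts: List[float]


def summarize_window(device_id: str, readings: List[float],
                     alert_threshold: float) -> Optional[Summary]:
    """Reduce a window of raw readings to one small record for upload to the cloud."""
    if not readings:
        return None
    return Summary(
        device_id=device_id,
        count=len(readings),
        minimum=min(readings),
        maximum=max(readings),
        average=mean(readings),
        # Keep raw values only for readings that need attention.
        alerts=[r for r in readings if r > alert_threshold],
    )


window = [21.0, 21.5, 22.0, 35.2, 21.8]  # hypothetical temperature samples (°C)
s = summarize_window("sensor-17", window, alert_threshold=30.0)
print(s.count, s.maximum, s.alerts)  # 5 35.2 [35.2]
```

In this example, five raw readings become one small record, and only the single outlier travels upstream in raw form. That reduction is the source of the bandwidth savings.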

Distributed systems principles are fundamental to designing and managing edge computing infrastructures, which can involve a large number of geographically dispersed and potentially resource-constrained nodes. Challenges include managing distributed data, ensuring security across the edge-to-cloud continuum, and orchestrating applications across a heterogeneous environment.

Quantum computing implications

Quantum computing, while still in its relatively early stages of development, holds the potential to revolutionize certain types of computation by harnessing the principles of quantum mechanics. Quantum computers are not intended to replace classical computers for all tasks, but they promise to solve specific classes of problems that are currently intractable for even the most powerful supercomputers. These include problems in areas like drug discovery, materials science, financial modeling, cryptography, and optimization.

The implications of quantum computing for distributed systems are multifaceted. On one hand, a sufficiently large quantum computer could eventually break widely used public-key algorithms, such as RSA and elliptic-curve cryptography. Many distributed systems rely on these algorithms for key exchange and digital signatures, so this threat necessitates a transition to quantum-resistant cryptography. On the other hand, quantum computing could enhance certain aspects of distributed systems, for example, by enabling more powerful optimization algorithms for resource allocation or by facilitating new forms of secure communication through quantum networks. Some cloud providers are beginning to offer access to quantum computing resources via the cloud, allowing researchers and developers to experiment with this emerging technology. As quantum technology matures, its integration with and impact on distributed architectures will become an increasingly important area of research and development.

AI-driven system optimization

Artificial Intelligence (AI) and Machine Learning (ML) are increasingly being applied to optimize the design, management, and operation of distributed systems themselves. The complexity of modern distributed systems, with their numerous interacting components, dynamic workloads, and potential failure modes, makes manual optimization challenging. AI/ML techniques can help automate and improve various aspects of system management.

For example, AI can be used for:

- Automated resource provisioning and scaling: ML models can predict workload patterns and automatically adjust resource allocation to meet demand while minimizing costs.

- Anomaly detection and predictive maintenance: AI can analyze telemetry data (logs, metrics, traces) from distributed systems to detect unusual behavior that might indicate an impending failure or security breach, allowing for proactive intervention.

- Intelligent load balancing: ML algorithms can make more sophisticated decisions about how to distribute traffic across servers based on real-time conditions and predicted performance.

- Network traffic optimization: AI can be used to optimize routing and manage network congestion in distributed environments.

- Automated performance tuning: ML models can learn the optimal configuration parameters for different components of a distributed system to maximize performance or efficiency.
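The anomaly-detection idea can be illustrated with a deliberately simple statistical baseline rather than a learned model: a rolling z-score over a latency metric. This is a minimal sketch, assuming samples arrive one at a time; the class name `RollingZScoreDetector` and its `window` and `threshold` parameters are hypothetical and not part of any AIOps product. The ML-based approaches described above would replace the fixed mean-and-deviation baseline with a learned model of normal behavior.

```python
from collections import deque
from statistics import mean, pstdev


class RollingZScoreDetector:
    """Flag a metric sample as anomalous when it lies more than `threshold`
    population standard deviations from the mean of the previous `window`
    samples. Hypothetical helper for illustration only."""

    def __init__(self, window: int = 30, threshold: float = 3.0) -> None:
        if window < 2:
            raise ValueError("window must hold at least two samples")
        self.window = window
        self.threshold = threshold
        # Bounded buffer: appending beyond maxlen drops the oldest sample.
        self.history = deque(maxlen=window)

    def observe(self, value: float) -> bool:
        """Record one sample and return True if it looks anomalous."""
        is_anomaly = False
        # Only judge once a full window of baseline data has been collected.
        if len(self.history) == self.window:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            # A perfectly flat baseline (sigma == 0) is treated as "no signal".
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        # Every sample joins the baseline, so a sustained shift in the metric
        # gradually becomes the new normal instead of alarming forever.
        self.history.append(value)
        return is_anomaly


# Usage: request latencies in milliseconds, with one sudden spike at the end.
detector = RollingZScoreDetector(window=5, threshold=3.0)
latencies_ms = [100, 102, 98, 101, 99, 100, 250]
flags = [detector.observe(x) for x in latencies_ms]
print(flags)  # [False, False, False, False, False, False, True]
```

Letting every sample join the baseline is a design choice: it avoids permanent alarms after a legitimate change in load, at the cost of slowly "learning" a degraded state as normal.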

The integration of AI into the operational fabric of distributed systems (often referred to as AIOps, short for "AI for IT operations") promises more resilient, efficient, and self-managing systems. This synergy between AI and distributed systems is a rapidly advancing field with significant potential.
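The automated provisioning and scaling idea from the list above can be sketched as a single step of a predictive control loop: forecast the next interval's load, then size the fleet ahead of demand. This is a minimal illustration, assuming the capacity of one replica is known, and it uses a plain least-squares linear trend as a stand-in for the ML forecasting models mentioned in the text. The functions `forecast_next` and `desired_replicas` and the `headroom`, `min_replicas`, and `max_replicas` parameters are hypothetical names, not the API of any real autoscaler.

```python
import math


def forecast_next(history: list[float]) -> float:
    """Predict the next value by fitting a least-squares line to the
    history (x = 0, 1, ..., n-1) and extrapolating one step ahead."""
    n = len(history)
    if n == 0:
        raise ValueError("history must not be empty")
    if n == 1:
        return float(history[0])
    x_mean = (n - 1) / 2
    y_mean = sum(history) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(history))
    den = sum((x - x_mean) ** 2 for x in range(n))
    slope = num / den
    intercept = y_mean - slope * x_mean
    return intercept + slope * n


def desired_replicas(
    history: list[float],
    capacity_per_replica: float,
    headroom: float = 0.2,
    min_replicas: int = 1,
    max_replicas: int = 50,
) -> int:
    """Replica count needed to serve the forecast load plus a safety margin,
    clamped to [min_replicas, max_replicas]."""
    # A falling trend can extrapolate below zero; load cannot be negative.
    predicted = max(forecast_next(history), 0.0)
    needed = math.ceil(predicted * (1 + headroom) / capacity_per_replica)
    return max(min_replicas, min(max_replicas, needed))


# Usage: requests per second over the last five intervals, rising steadily.
rps = [100, 120, 140, 160, 180]
print(forecast_next(rps))         # 200.0
print(desired_replicas(rps, 50))  # 5
```

With a per-replica capacity of 50 requests/s and 20% headroom, the rising history forecasts 200 requests/s and yields 5 replicas before that load arrives. Damping repeated scale-up and scale-down decisions (for example, with a cooldown period) is left out for brevity.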

Frequently Asked Questions (Career Focus)

Embarking on or advancing a career in distributed systems can bring up many questions. This section aims to address some common queries, particularly for those focused on job seeking and career development in this dynamic field.

Essential skills for entry-level roles?

For entry-level roles in distributed systems, a strong foundation in core computer science principles is paramount. This includes a good understanding of data structures and algorithms, as these are fundamental to solving complex problems efficiently. Proficiency in at least one mainstream programming language such as Java, Python, Go, or C++ is crucial, as you'll be writing, testing, and debugging code.

Knowledge of operating systems concepts (e.g., processes, threads, memory management, concurrency) and computer networking fundamentals (e.g., TCP/IP, HTTP, DNS) is also essential, as distributed systems inherently involve multiple computers communicating over a network. Basic familiarity with database concepts (both SQL and NoSQL) will be beneficial.

Beyond technical skills, problem-solving abilities are highly valued. You should be able to analyze complex problems, break them down into smaller parts, and devise effective solutions. Good communication skills and the ability to work effectively in a team are also important, as software development is often a collaborative effort. Finally, a demonstrated willingness to learn and adapt is key, as the technologies and techniques in distributed systems are constantly evolving.

Industries with high demand for distributed systems expertise?

Expertise in distributed systems is in high demand across a wide array of industries. The technology sector itself is a major employer, with companies ranging from large technology companies (like Google, Amazon, Microsoft, and Meta) to innovative startups constantly seeking engineers to build and scale their platforms and services. These companies rely on distributed systems for everything from search engines and social media to cloud computing infrastructure and e-commerce.

Beyond pure tech, the finance industry heavily utilizes distributed systems for trading platforms, payment processing, risk management, and increasingly, blockchain applications. The e-commerce and retail sectors depend on distributed systems for online storefronts, inventory management, recommendation engines, and supply chain logistics. In healthcare, distributed systems are used for electronic health records, medical imaging, telemedicine, and data analytics.

Other industries with significant demand include telecommunications (for network management and service delivery), entertainment (for streaming services and online gaming), automotive (especially with the rise of connected cars and autonomous driving), and manufacturing (for IoT and smart factory initiatives). Essentially, any industry that deals with large amounts of data, requires high availability and scalability, or leverages cloud computing will have a need for professionals skilled in distributed systems.

Impact of cloud computing on career opportunities?

Cloud computing has had a profound and largely positive impact on career opportunities in distributed systems. In many ways, cloud platforms (like AWS, Microsoft Azure, and Google Cloud Platform) are massive distributed systems themselves, and they provide the building blocks for creating other distributed applications. This has led to a surge in demand for professionals who understand how to design, build, deploy, and manage applications in the cloud.

The rise of the cloud has created new roles, such as Cloud Engineer, Cloud Architect, DevOps Engineer (with a cloud focus), and Cloud Security Specialist. It has also transformed existing roles; for example, software engineers now often need to be proficient in deploying and managing their applications on cloud infrastructure. Familiarity with cloud services related to compute, storage, databases, networking, and messaging is becoming a standard expectation for many distributed systems roles.

Furthermore, cloud computing has lowered the barrier to entry for building distributed systems. Startups and smaller companies can now access powerful infrastructure and services on a pay-as-you-go basis, without the need for large upfront investments in hardware. This has democratized the development of distributed applications and created more opportunities for engineers to gain experience in this area. Overall, cloud computing has significantly expanded the landscape and demand for distributed systems expertise.

Certifications vs. experience: Which matters more?

This is a common question, and the general consensus in the tech industry is that hands-on experience and demonstrable skills ultimately matter more than certifications alone. However, certifications can still play a valuable role, especially for certain career stages or goals.

Experience showcases your ability to apply knowledge in real-world scenarios, solve complex problems, work in a team, and deliver results. A portfolio of projects, contributions to open-source software, or a track record of successful roles will often carry more weight with employers than a list of certifications. Practical experience demonstrates that you can not only understand concepts but also implement them effectively.

Certifications, particularly those from reputable providers like AWS, Google Cloud, Microsoft Azure, or organizations like the Cloud Native Computing Foundation (CNCF) for Kubernetes, can be beneficial in several ways:

- For entry-level candidates or career changers: Certifications can help validate foundational knowledge and demonstrate a commitment to learning a new field, potentially helping your resume stand out.

- For specializing in a specific technology: If you want to become an expert in a particular cloud platform or tool, a certification can demonstrate that focused expertise.

- For meeting employer requirements: Some companies, particularly those that are partners with cloud providers, may prefer or even require certain certifications for specific roles.

In summary, certifications can complement experience but rarely replace it. The ideal approach is often to gain practical experience while potentially pursuing certifications that align with your career path and the technologies you are working with or aspire to work with. Focus on building real skills and projects, and view certifications as a way to validate and signal that knowledge. You can explore various certification paths and related courses on OpenCourser to find options that fit your career aspirations.

Remote work trends in distributed systems roles?

The nature of work in distributed systems, which often involves interacting with systems and colleagues spread across different locations, lends itself well to remote work. Many software engineering roles, including those focused on distributed systems, have seen a significant increase in remote opportunities, a trend accelerated by recent global events but already underway due to the tools and practices common in the tech industry.

Companies that build and operate distributed systems often have a culture that supports remote collaboration, utilizing tools like video conferencing, instant messaging, shared code repositories (like GitHub), and project management software. The ability to effectively design, build, and troubleshoot systems that are themselves distributed often aligns with the skills needed to work effectively as part of a distributed team.

While some companies are returning to office-based or hybrid models, many others continue to offer fully remote or remote-flexible positions for distributed systems engineers, cloud architects, and related roles. This can provide greater flexibility for employees and allows companies to tap into a broader talent pool. When searching for roles, you'll often find remote options available, though the prevalence can vary by company, specific role requirements, and geographical location. Job boards and company career pages will typically specify if a role is open to remote candidates.

Long-term career growth potential in the field?

The long-term career growth potential in the field of distributed systems is generally considered to be excellent. As technology becomes increasingly integral to all aspects of business and society, the need for robust, scalable, and reliable systems will only continue to grow. Distributed systems are at the heart of this technological infrastructure, powering everything from cloud computing and big data analytics to artificial intelligence and the Internet of Things.

Professionals with expertise in distributed systems can follow various career paths. They can deepen their technical expertise to become principal engineers or distinguished engineers, recognized as leading experts in their domain. They can move into architectural roles, designing complex systems and defining technology strategy. There are also opportunities to transition into engineering management, leading teams and shaping the direction of projects and products.

The skills developed in distributed systems – such as complex problem-solving, systems thinking, and understanding trade-offs in scalability, reliability, and performance – are highly transferable and valued across the tech industry. According to the U.S. Bureau of Labor Statistics, the employment of software developers, quality assurance analysts, and testers is projected to grow much faster than the average for all occupations. While this is a broad category, the specialized skills in distributed systems are likely to be in particularly high demand within this growing field. Continuous learning and adaptation will be key to maximizing long-term career growth, as the technologies and paradigms in distributed systems continue to evolve rapidly.

Further Resources and Useful Links

To continue your journey in understanding and mastering distributed systems, a variety of resources can provide further learning and insights. Below are some suggestions for further exploration.

Relevant OpenCourser Browse Pages

OpenCourser offers a vast library of courses and learning materials. These browse pages can help you find relevant content quickly:

- Computer Science: For foundational knowledge.

- Cloud Computing: Essential for modern distributed systems.

- IT & Networking: Understanding the backbone of distributed communication.

- Software Architecture: For designing robust systems.

- Big Data: Many distributed systems are built to handle large datasets.

External Authoritative Resources

For those looking to delve deeper into industry trends and research, these resources can be valuable:

- The USENIX Association often publishes proceedings and papers related to advanced computing systems, including distributed systems.

- The Association for Computing Machinery (ACM) Digital Library is a comprehensive database of articles and conference proceedings covering all aspects of computing.

- Major cloud providers like AWS, Google Cloud, and Microsoft Azure have official blogs that frequently discuss distributed systems concepts, best practices, and case studies related to their platforms.

- All Things Distributed (www.allthingsdistributed.com), the blog of Werner Vogels, CTO of Amazon, offers insightful articles on distributed systems principles and cloud computing.

The journey into distributed systems is one of continuous learning and exploration. The field is vast and ever-evolving, offering endless opportunities to tackle challenging problems and build impactful technologies. Whether you are just starting or looking to deepen your expertise, the resources available today make it more accessible than ever to engage with this fascinating domain. We encourage you to leverage platforms like OpenCourser to find courses and materials that align with your learning goals and to actively participate in the broader community of distributed systems practitioners and researchers. The ability to design, build, and manage these complex systems is a highly valuable skill in our increasingly interconnected world, and the path to mastering it, while demanding, is filled with intellectual rewards.