Anomaly Detection

Navigating the World of Anomaly Detection

Anomaly detection, at its core, is the process of identifying data points, events, or observations that deviate significantly from the expected or normal behavior of a dataset. Think of it as finding the "odd one out" in a large collection of items. This could be an unusually high transaction on a credit card, a sudden spike in network traffic, or a subtle change in a manufacturing process. While the concept might seem straightforward, its applications are vast and increasingly critical in our data-driven world. The field has a rich history, initially rooted in statistical analysis where experts would manually scrutinize charts and data for abnormalities. Today, it heavily leverages the power of artificial intelligence (AI) and machine learning (ML) to automate this process, enabling the analysis of massive and complex datasets.

Working in anomaly detection can be quite engaging. Imagine being the detective who uncovers hidden threats in cybersecurity by spotting unusual network activity, or the financial guardian who prevents fraud by identifying suspicious transactions in real-time. There's also the satisfaction of optimizing complex systems, such as in manufacturing, by detecting subtle deviations that could lead to equipment failure or defects. The ability to unearth these critical insights from vast seas of data is a powerful and rewarding aspect of this field.

Introduction to Anomaly Detection

For those new to the concept, anomaly detection might sound like a highly technical and inaccessible field. However, the fundamental idea is something we encounter in everyday life. If you're driving and suddenly hear an unfamiliar noise from your car, you've just performed a basic form of anomaly detection. You've identified something that deviates from the normal sounds your car makes. Similarly, if you notice an item on your grocery bill that you didn't purchase, that's another instance of spotting an anomaly. Anomaly detection in the context of data science and technology simply applies this same principle to datasets, often on a much larger and more complex scale.

This field is becoming increasingly important because the amount of data being generated globally is exploding. Manually sifting through this data to find irregularities is often impossible. Anomaly detection systems provide an automated way to monitor data and flag potential issues, which could range from critical system failures and security breaches to opportunities for improvement and optimization. It’s a cornerstone of modern data analysis, helping organizations make sense of their data and react to important events quickly and efficiently.

Definition and Basic Examples

Anomaly detection, also known as outlier detection, is the technique of identifying rare items, events, or observations that raise suspicions by differing significantly from the majority of the data. These "anomalies" or "outliers" don't conform to a well-defined notion of normal behavior. They might be errors in the data, or they could represent genuine, significant events that require attention.

Consider a simple example: monitoring daily website traffic. If your website typically receives around 1,000 visitors per day, and one day it suddenly drops to 50 visitors, or skyrockets to 50,000, these would be considered anomalies. Another common example is credit card fraud detection. If a credit card that is typically used for small, local purchases suddenly shows a large international transaction, this would be flagged as an anomaly. In manufacturing, an anomaly could be a slight variation in the temperature or pressure of a machine that, if undetected, could lead to a product defect or equipment failure.

These examples highlight that anomalies are context-dependent. What is considered anomalous in one dataset or situation might be perfectly normal in another. The key is to establish a baseline of "normal" behavior and then identify deviations from that baseline.

Historical Context and Evolution

The roots of anomaly detection can be traced back to early statistical methods. Analysts would manually inspect data, often visually using charts and graphs, to identify points that seemed out of place. Basic statistical measures like mean and standard deviation were used to define "normal" ranges, and anything falling too far outside these ranges was considered an outlier. For instance, in the 1930s, early quality control processes in manufacturing would classify data points that were three standard deviations away from the mean as anomalies.

A significant step in the evolution of anomaly detection came in the 1980s with the work of Dorothy E. Denning on intrusion detection systems. Her research laid the groundwork for many modern anomaly detection techniques, particularly in the realm of cybersecurity. This marked a shift towards more automated methods capable of analyzing larger datasets and detecting more complex patterns.

The advent of machine learning and artificial intelligence has dramatically transformed the field. Modern anomaly detection systems can learn complex patterns from data and identify subtle deviations that would be impossible for humans to detect. Techniques like clustering, classification, and neural networks are now commonly employed. The explosion of data in recent years has made these advanced, automated techniques not just useful, but essential.

Key Industries and Applications

Anomaly detection is a versatile tool with applications across a multitude of industries. Its ability to identify critical deviations makes it invaluable for maintaining security, quality, and efficiency.

In the financial sector, anomaly detection is a cornerstone of fraud prevention. It's used to identify suspicious transactions, detect money laundering activities, and spot unusual trading patterns that might indicate market manipulation. Banks and financial institutions rely heavily on these systems to protect assets and comply with regulations.

Cybersecurity is another major area where anomaly detection plays a critical role. Intrusion detection systems use anomaly detection to identify unusual network traffic or user behavior that could signal a cyberattack, malware infection, or unauthorized access. Given the increasing sophistication of cyber threats, proactive anomaly detection is essential for protecting sensitive data and systems.

The manufacturing industry utilizes anomaly detection for quality control and predictive maintenance. By monitoring sensor data from machinery, manufacturers can detect subtle deviations that might indicate an impending equipment failure or a flaw in the production process, thereby preventing costly downtime and ensuring product quality.

In healthcare, anomaly detection can be used to identify abnormal patient conditions from monitoring data, detect irregularities in medical imaging, or even flag fraudulent insurance claims. This can lead to earlier diagnosis and more effective treatments.

Other industries leveraging anomaly detection include retail (for identifying unusual sales trends or predicting customer churn), telecommunications (for network monitoring and identifying service disruptions), and transportation and logistics (for optimizing routes and predicting maintenance needs for vehicles). The ability to detect anomalies in Internet of Things (IoT) sensor data is also becoming increasingly important across many sectors.

Why It Matters in Modern Data-Driven Environments

In today's world, data is often described as the new oil – a valuable resource that can drive innovation, efficiency, and competitive advantage. However, the sheer volume, velocity, and variety of data being generated can be overwhelming. Anomaly detection provides a crucial mechanism for navigating this complex data landscape and extracting meaningful insights. Without effective anomaly detection, organizations risk missing critical events, making flawed decisions based on erroneous data, or failing to identify opportunities for improvement.

The importance of anomaly detection is amplified by several factors. Firstly, the increasing interconnectedness of systems means that a small anomaly in one area can have cascading effects elsewhere. Secondly, the speed at which business operates today demands real-time insights and rapid responses. Anomaly detection systems can provide early warnings, enabling organizations to act proactively rather than reactively. Thirdly, the consequences of undetected anomalies can be severe, ranging from significant financial losses and reputational damage to safety risks and regulatory penalties.

Moreover, as organizations increasingly rely on automated decision-making driven by AI and ML, ensuring the quality and integrity of the input data is paramount. Anomalies can significantly skew the performance of these models, leading to incorrect predictions or biased outcomes. Therefore, anomaly detection is not just a standalone tool but an integral part of the broader data science and MLOps (Machine Learning Operations) lifecycle. It helps ensure that data-driven decisions are based on sound, reliable information.

Key Concepts in Anomaly Detection

To truly understand anomaly detection, it's helpful to become familiar with some of its core concepts. These concepts provide the vocabulary and framework for discussing and implementing different anomaly detection techniques. They also help in understanding the nuances and challenges involved in identifying those elusive "odd ones out."

From the different forms an anomaly can take to the various ways we can teach a machine to find them, these foundational ideas are crucial for anyone looking to delve deeper into this fascinating field. Understanding these concepts will also be beneficial when evaluating the suitability of different approaches for specific problems.

Types of Anomalies: Point, Contextual, Collective

Anomalies are not all created equal; they can manifest in different ways. Understanding these distinctions is important for selecting the appropriate detection methods. The three main types of anomalies are point, contextual, and collective anomalies.

A point anomaly is an individual data instance that is anomalous with respect to the rest of the data. This is the simplest and most common type of anomaly. For example, a single, unusually large credit card transaction for a user who typically makes small purchases would be a point anomaly. In a dataset of human heights, an individual recorded as being 10 feet tall would clearly be a point anomaly.

A contextual anomaly (also known as a conditional anomaly) is a data instance that is anomalous in a specific context, but not otherwise. The "context" is determined by contextual attributes in the data (e.g., time, location). For instance, a spike in retail sales in December is normal due to holiday shopping, but the same spike in August might be a contextual anomaly. Similarly, a temperature of 90°F is normal in the summer but would be a contextual anomaly in the middle of winter in a typically cold region.

A collective anomaly occurs when a collection of related data instances is anomalous with respect to the entire dataset, even though the individual instances within the collection may not be anomalous by themselves. Imagine a human electrocardiogram (ECG). A single beat might look normal, but a sustained period of an unusually low heart rate, even if each individual beat is within a plausible range, could represent a collective anomaly indicating a potential health issue. Detecting collective anomalies often requires analyzing sequences or spatial relationships within the data.

These distinctions help in framing the problem and choosing the right algorithms, as different techniques are better suited for different types of anomalies.
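
To make the contextual case concrete, the sketch below scores each reading against the baseline of its own context instead of the whole dataset. The readings, the season labels, the function name `contextual_outliers`, and the threshold of 2.5 are illustrative assumptions, not values taken from a real system.

```python
from collections import defaultdict
from statistics import mean, stdev


def contextual_outliers(records, threshold=2.5):
    """Flag (context, value) pairs whose value is far from the mean of its own context."""
    by_context = defaultdict(list)
    for context, value in records:
        by_context[context].append(value)

    flagged = []
    for context, value in records:
        values = by_context[context]
        if len(values) < 3:
            continue  # too little history to define "normal" for this context
        mu, sigma = mean(values), stdev(values)
        if sigma > 0 and abs(value - mu) / sigma > threshold:
            flagged.append((context, value))
    return flagged


# Hypothetical daily high temperatures in °F for a region with cold winters.
readings = (
    [("summer", t) for t in (88, 91, 90, 87, 92, 89, 90, 91)]
    + [("winter", t) for t in (28, 31, 25, 30, 27, 29, 26, 32, 30, 90)]
)

print(contextual_outliers(readings))
```

A reading of 90°F appears in both seasons here, but only the winter one is flagged, because each value is compared with the other readings that share its context.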

Supervised vs. Unsupervised Approaches

When it comes to training machine learning models for anomaly detection, a key distinction is whether you have labeled data – that is, data where past anomalies have already been identified. This leads to two primary approaches: supervised and unsupervised anomaly detection.

Supervised anomaly detection techniques are used when you have a dataset that contains labeled instances of both normal and anomalous data. The model learns to distinguish between these two classes. Essentially, it's a classification problem where one class (anomalies) is typically much rarer than the other (normal data). While potentially very accurate if good labeled data is available, supervised methods are less common in practice for anomaly detection. This is because obtaining accurately labeled anomaly data can be difficult and expensive, as anomalies are, by definition, rare events.

Unsupervised anomaly detection techniques are far more common because they do not require labeled data. These methods assume that anomalies are rare and different from the normal instances. They work by trying to find a model of "normal" behavior from the unlabeled data and then identifying instances that deviate significantly from this model. Common unsupervised approaches include clustering-based methods (anomalies are points that don't belong to any cluster or belong to very small clusters), density-based methods (anomalies are in low-density regions), and distance-based methods (anomalies are far from their nearest neighbors). While more flexible due to the lack of reliance on labels, unsupervised methods might require more careful tuning and can sometimes have higher false positive rates if the assumptions about normal behavior are not accurate.

There is also a third category, semi-supervised anomaly detection. In this approach, the model is trained on a dataset that contains only normal instances. The goal is to learn a model that accurately represents normal behavior. Any new data instance that does not conform well to this learned model is then flagged as an anomaly. This can be useful when it's easier to obtain a "pure" dataset of normal examples than to label all anomalies.

Common Evaluation Metrics (Precision, Recall, F1-Score)

Evaluating the performance of an anomaly detection system is crucial, but it can be tricky, especially because anomalies are often rare. Standard accuracy (the proportion of correctly classified instances) can be misleading. For example, if anomalies make up only 0.1% of your data, a model that simply classifies everything as "normal" would have 99.9% accuracy, yet it would be useless for detecting anomalies.

Therefore, other metrics are commonly used, particularly those derived from a confusion matrix. A confusion matrix shows the counts of:

- True Positives (TP): Anomalies correctly identified as anomalies.

- False Positives (FP): Normal instances incorrectly identified as anomalies (also known as Type I error or false alarms).

- True Negatives (TN): Normal instances correctly identified as normal.

- False Negatives (FN): Anomalies incorrectly identified as normal (also known as Type II error or missed detections).

From these, we can calculate:

- Precision: TP / (TP + FP). This measures the proportion of instances flagged as anomalous that were actually anomalies. High precision means fewer false alarms.

- Recall (or Sensitivity): TP / (TP + FN). This measures the proportion of actual anomalies that were correctly identified. High recall means fewer missed anomalies.

- F1-Score: 2 * (Precision * Recall) / (Precision + Recall). This is the harmonic mean of precision and recall, providing a single score that balances both. It's particularly useful when the classes are imbalanced, as is often the case in anomaly detection.

The choice of which metric to prioritize often depends on the specific application. For instance, in medical diagnosis, high recall (minimizing false negatives) might be more critical, even if it means lower precision (more false positives that require further investigation). In contrast, for a system that automatically blocks network traffic based on anomaly detection, high precision might be preferred to avoid blocking legitimate traffic. Understanding these metrics is essential for effectively comparing and tuning anomaly detection models.
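
The formulas above can be sketched in a few lines of plain Python. The label vectors are made up for illustration (1,000 instances with a 1% anomaly rate), and the helper names are assumptions for this example only.

```python
def confusion_counts(y_true, y_pred):
    """Return (TP, FP, TN, FN), treating label 1 as 'anomaly' and 0 as 'normal'."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, fp, tn, fn


def accuracy(y_true, y_pred):
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    return (tp + tn) / (tp + fp + tn + fn)


def precision_recall_f1(y_true, y_pred):
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical ground truth: 10 anomalies among 1,000 instances.
y_true = [1] * 10 + [0] * 990

# A "detector" that labels everything as normal.
all_normal = [0] * 1000

# A detector that catches 8 of the 10 anomalies and raises 4 false alarms.
detector = [1] * 8 + [0] * 2 + [1] * 4 + [0] * 986

print("all-normal accuracy:", accuracy(y_true, all_normal))
print("all-normal P/R/F1:  ", precision_recall_f1(y_true, all_normal))
print("detector accuracy:  ", accuracy(y_true, detector))
print("detector P/R/F1:    ", precision_recall_f1(y_true, detector))
```

Both predictors score about 99% on accuracy, yet the all-normal one has zero recall. Precision, recall, and F1 are what separate the two.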

Data Preprocessing Requirements

The quality of data fed into an anomaly detection algorithm significantly impacts its performance. Raw data is often messy, incomplete, and inconsistent, making preprocessing a critical step. Without proper preprocessing, even the most sophisticated algorithms can produce misleading or inaccurate results.

Common data preprocessing steps include:

- Handling Missing Values: Data points might have missing attributes. Depending on the extent and nature of the missing data, these can be imputed (filled in with estimated values), or the entire data instance might be removed.

- Data Cleaning/Noise Reduction: Data can contain errors or random fluctuations (noise) that can obscure true anomalies or be mistaken for them. Smoothing techniques or outlier removal (ironically, sometimes a preliminary step before the main anomaly detection) can be applied. Differentiating between noise and true anomalies is a key challenge.

- Feature Scaling/Normalization: Many algorithms are sensitive to the scale of input features. If features have vastly different ranges (e.g., age in years vs. income in dollars), features with larger values might dominate the distance calculations. Scaling features to a common range (e.g., 0 to 1 or with a mean of 0 and standard deviation of 1) is often necessary.

- Feature Engineering: This involves creating new features from existing ones that might be more informative for the anomaly detection task. For example, in time-series data, features like the rate of change or moving averages could be engineered.

- Dimensionality Reduction: High-dimensional data (data with many features) can pose challenges for some anomaly detection algorithms due to the "curse of dimensionality." Techniques like Principal Component Analysis (PCA) can be used to reduce the number of features while retaining most of the important information.

The specific preprocessing steps required will depend on the nature of the data and the chosen anomaly detection technique. Neglecting this stage can lead to suboptimal performance and unreliable results, making it a crucial part of the anomaly detection pipeline.
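
As one illustration of the scaling step, the sketch below applies the two rescalings mentioned above (a 0-to-1 range, and mean 0 with standard deviation 1) to two features on very different scales. The feature values and function names are hypothetical.

```python
from statistics import mean, pstdev


def min_max_scale(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant feature carries no information
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Rescale values to mean 0 and (population) standard deviation 1."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Hypothetical features: age in years and income in dollars.
ages = [25, 32, 47, 51, 62]
incomes = [30_000, 45_000, 80_000, 62_000, 120_000]

print(min_max_scale(ages))
print(min_max_scale(incomes))
print(standardize(incomes))
```

After either transformation the two features contribute on a comparable scale to any distance calculation. Note that min-max scaling is itself sensitive to extreme values, since a single very large value compresses everything else toward 0.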

Anomaly Detection Techniques and Algorithms

The heart of anomaly detection lies in the diverse array of techniques and algorithms developed to identify unusual patterns. These methods range from relatively simple statistical approaches to highly complex machine learning models. The choice of technique often depends on the nature of the data, the types of anomalies being sought, and the computational resources available.

Understanding these different approaches allows practitioners to select the most appropriate tools for their specific problem. It also provides insight into the ongoing evolution of the field, as researchers continually develop new and improved methods for tackling the challenge of finding the "odd one out" in increasingly complex datasets.

Statistical Methods (Z-score, Grubbs' Test)

Statistical methods were among the earliest techniques used for anomaly detection and remain relevant for certain types of data and problems. These methods typically assume that the normal data instances follow a certain statistical distribution (e.g., a Gaussian or normal distribution), and instances that have a low probability of being generated by this distribution are flagged as anomalies.

One of the simplest and most widely known statistical methods is the Z-score (or standard score). For a given data point, the Z-score measures how many standard deviations it is away from the mean of the dataset. A common rule of thumb is to consider data points with a Z-score greater than 3 or less than -3 as anomalies. This method is effective when the data is approximately normally distributed and the anomalies are far from the mean.
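
A minimal sketch of the Z-score rule, using only the Python standard library. The visitor counts are invented for illustration, and the threshold of 3 is the rule of thumb mentioned above.

```python
from statistics import mean, stdev


def z_scores(values):
    """Return the Z-score of each value relative to the sample mean and standard deviation."""
    mu, sigma = mean(values), stdev(values)
    return [(v - mu) / sigma for v in values]


def z_score_outliers(values, threshold=3.0):
    """Return the values whose absolute Z-score exceeds the threshold."""
    return [v for v, z in zip(values, z_scores(values)) if abs(z) > threshold]


# Hypothetical daily visitor counts around 1,000 with a single spike.
visitors = [980, 1010, 995, 1023, 1002, 987, 1015, 990,
            1008, 999, 1012, 985, 1001, 996, 1019, 50000]

print(z_score_outliers(visitors))
```

One caveat: the outlier itself inflates the mean and standard deviation used to judge it. With the sample standard deviation, no point can have an absolute Z-score above (n - 1) / sqrt(n), so a threshold of 3 can never trigger when there are 10 or fewer observations.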

Grubbs' Test (also known as the maximum normalized residual test) is another statistical test used to detect a single outlier in a univariate dataset that follows an approximately normal distribution. It tests the null hypothesis that there are no outliers in the dataset against the alternative that exactly one is present. If an outlier is found, it can be removed and the test applied again to look for further outliers, but this iterative use calls for caution: each removal shrinks the sample and changes the estimated mean and standard deviation, so later results become less reliable.
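
For reference, the two-sided form of the test is usually written as follows. Here N is the sample size, \bar{x} the sample mean, s the sample standard deviation, \alpha the chosen significance level, and t_{\alpha/(2N),\,N-2} the upper critical value of the t-distribution with N - 2 degrees of freedom. The notation is introduced here for illustration.

```latex
G = \frac{\max_{i=1,\dots,N} \lvert x_i - \bar{x} \rvert}{s},
\qquad
\text{reject } H_0 \text{ if }\;
G > \frac{N-1}{\sqrt{N}}
\sqrt{\frac{t^{2}_{\alpha/(2N),\,N-2}}{N - 2 + t^{2}_{\alpha/(2N),\,N-2}}}
```

The statistic G is simply the largest absolute Z-score in the sample. What the test adds is a critical value that accounts for the sample size.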

Other statistical approaches include those based on regression models (where points far from the regression line are considered anomalies) and methods using interquartile range (IQR) to identify outliers (e.g., points below Q1 - 1.5*IQR or above Q3 + 1.5*IQR). While these methods are often easy to understand and implement, their primary limitation is the assumption of a specific underlying data distribution, which may not hold true for complex, real-world datasets. They are also generally more suited to univariate (single variable) data, although multivariate extensions exist.
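
The IQR rule can be sketched with the standard library's `statistics.quantiles`. The data are made up, and the quartile values depend on the interpolation method (this example uses the function's default), so other tools may give slightly different fences.

```python
from statistics import quantiles


def iqr_outliers(values, k=1.5):
    """Return values below Q1 - k*IQR or above Q3 + k*IQR."""
    q1, _median, q3 = quantiles(values, n=4)  # three cut points: Q1, Q2, Q3
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]


# Hypothetical measurements with one unusually large value.
data = [12, 14, 14, 15, 16, 17, 18, 19, 21, 58]

print(iqr_outliers(data))
```

Because quartiles are barely affected by a few extreme values, this rule tends to be more robust than a mean-and-standard-deviation rule on small samples.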

Machine Learning Approaches (Isolation Forest, Autoencoders)

Machine learning (ML) has brought a new level of sophistication and power to anomaly detection, enabling the analysis of large, high-dimensional, and complex datasets where traditional statistical assumptions may not apply. ML-based approaches can learn patterns from data and identify deviations without requiring explicit programming of rules.

Isolation Forest is a popular and effective unsupervised anomaly detection algorithm. It works by randomly partitioning the data and isolating instances. The underlying idea is that anomalies are "few and different," so they should be easier to isolate (i.e., require fewer partitions) than normal instances. The algorithm builds an ensemble of "isolation trees" and averages the path lengths required to isolate each instance. Shorter average path lengths indicate a higher likelihood of being an anomaly.
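
The isolation idea can be sketched in pure Python. This is a simplified teaching version, not a production implementation. The function names, the sample size of 64, and the synthetic data are assumptions for illustration. The score follows the commonly used form 2^(-E[h(x)] / c(n)), where c(n) normalizes the average path length.

```python
import math
import random

EULER_GAMMA = 0.5772156649


def c_factor(n):
    """Average path length of an unsuccessful binary-search-tree lookup among n points."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2.0 * (math.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n


def build_tree(points, depth, max_depth, rng):
    """Recursively split on a random feature at a random value until points are isolated."""
    if depth >= max_depth or len(points) <= 1:
        return {"size": len(points)}  # leaf node
    dim = rng.randrange(len(points[0]))
    lo = min(p[dim] for p in points)
    hi = max(p[dim] for p in points)
    if lo == hi:
        return {"size": len(points)}  # cannot split further on this feature
    split = rng.uniform(lo, hi)
    left = [p for p in points if p[dim] < split]
    right = [p for p in points if p[dim] >= split]
    return {
        "dim": dim,
        "split": split,
        "left": build_tree(left, depth + 1, max_depth, rng),
        "right": build_tree(right, depth + 1, max_depth, rng),
    }


def path_length(tree, point, depth=0):
    """Number of splits needed to reach a leaf, plus an adjustment for unsplit leaves."""
    if "size" in tree:
        return depth + c_factor(tree["size"])
    branch = "left" if point[tree["dim"]] < tree["split"] else "right"
    return path_length(tree[branch], point, depth + 1)


def isolation_scores(points, n_trees=100, sample_size=64, seed=0):
    """Return one score per point in (0, 1); higher means easier to isolate."""
    if len(points) < 2:
        raise ValueError("need at least two points")
    rng = random.Random(seed)
    sample_size = min(sample_size, len(points))
    max_depth = math.ceil(math.log2(sample_size))
    trees = [
        build_tree(rng.sample(points, sample_size), 0, max_depth, rng)
        for _ in range(n_trees)
    ]
    norm = c_factor(sample_size)
    scores = []
    for p in points:
        avg_path = sum(path_length(t, p) for t in trees) / n_trees
        scores.append(2.0 ** (-avg_path / norm))
    return scores


# Synthetic data: a Gaussian cluster around the origin plus one distant point.
data_rng = random.Random(42)
cluster = [(data_rng.gauss(0.0, 1.0), data_rng.gauss(0.0, 1.0)) for _ in range(100)]
data = cluster + [(8.0, 8.0)]

scores = isolation_scores(data)
top = max(range(len(data)), key=scores.__getitem__)
print("highest-scoring index:", top, "point:", data[top])
```

Each tree is built on a random subsample, so the distant point is absent from some trees. It still falls through those trees along a short path, which is why averaging over many trees gives a stable score.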

Autoencoders are a type of neural network often used for unsupervised anomaly detection, particularly in the realm of deep learning. An autoencoder is trained to reconstruct its input. It consists of an encoder that compresses the input into a lower-dimensional representation (the "bottleneck" or latent space) and a decoder that attempts to reconstruct the original input from this compressed representation. The autoencoder is trained on data that is entirely or overwhelmingly normal. The idea is that it will learn to reconstruct normal instances well but will struggle to reconstruct anomalous instances, resulting in a higher reconstruction error for anomalies. This reconstruction error can then be used as an anomaly score.

Other ML approaches include:

- One-Class SVM (Support Vector Machine): This algorithm learns a boundary that encloses the normal data instances. New instances that fall outside this boundary are considered anomalies.

- Clustering-based methods (e.g., k-means, DBSCAN): These methods group similar data instances together. Anomalies can be identified as instances that do not belong to any cluster, belong to very small clusters, or are far from the centroids of their clusters.

- Nearest-neighbor based methods (e.g., k-Nearest Neighbors, Local Outlier Factor - LOF): These methods assess the local density or distance of data points. Anomalies are typically instances that are far from their neighbors or reside in regions of very low density.

The choice of ML algorithm often depends on the dataset characteristics, the computational resources, and whether labeled data is available. Many of these techniques are available in popular Python libraries like Scikit-learn, making them accessible for practical implementation.
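
As a small illustration of the nearest-neighbor idea, the sketch below scores each point by the distance to its k-th nearest neighbor. This is the plain distance-based variant, not Local Outlier Factor, which additionally compares local densities. The points and the choice of k = 3 are hypothetical.

```python
import math


def knn_distance_scores(points, k=3):
    """Score each point by the Euclidean distance to its k-th nearest neighbor."""
    if k < 1 or k >= len(points):
        raise ValueError("k must be between 1 and len(points) - 1")
    scores = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(dists[k - 1])
    return scores


# Two tight groups of points and one isolated point at the end.
points = [
    (1.0, 1.1), (0.9, 1.0), (1.1, 0.9), (1.0, 0.8), (1.2, 1.0),
    (5.0, 5.1), (5.1, 4.9), (4.9, 5.0), (5.2, 5.2),
    (9.0, 0.5),
]

scores = knn_distance_scores(points, k=3)
most_anomalous = max(range(len(points)), key=scores.__getitem__)
print("most anomalous point:", points[most_anomalous])
```

This brute-force version compares every pair of points, so its cost grows quadratically with the dataset size. Library implementations typically rely on spatial indexes to avoid that.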

Time-Series Analysis Techniques

Time-series data, where observations are recorded sequentially over time, is prevalent in many domains where anomaly detection is crucial, such as finance (stock prices, transaction logs), IoT sensor readings, system monitoring (CPU usage, network traffic), and healthcare (ECG, patient vital signs). Detecting anomalies in time-series data presents unique challenges and opportunities.

Techniques for time-series anomaly detection often need to account for temporal dependencies, seasonality, and trends present in the data. Some common approaches include:

- Moving Averages and Exponential Smoothing: These methods smooth out short-term fluctuations and highlight longer-term trends. Anomalies can be detected as points that deviate significantly from the smoothed values.

- ARIMA (Autoregressive Integrated Moving Average) models: ARIMA models are a class of statistical models used for analyzing and forecasting time-series data. Once an ARIMA model is fitted to the normal behavior of a time series, future observations that fall outside the model's prediction intervals can be flagged as anomalies.

- Decomposition Methods: These methods decompose a time series into its constituent components: trend, seasonality, and residuals (the remaining part after removing trend and seasonality). Anomalies are often found in the residual component.

- Machine Learning Approaches: Many general ML techniques, such as autoencoders (especially recurrent variants such as LSTM autoencoders), Isolation Forests, and One-Class SVMs, can be adapted for time-series data, often by using lagged values of the time series as input features (creating a "sliding window").

- Change Point Detection: While not strictly anomaly detection, change point detection algorithms identify times when the statistical properties of a time series change. Such change points can sometimes correspond to the onset of anomalous behavior.

A key consideration in time-series anomaly detection is distinguishing between true anomalies and natural, albeit infrequent, variations or shifts in the underlying process. Contextual information often plays a more significant role here.
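
The moving-average idea from the list above can be sketched as a trailing-window check: each new observation is compared with the mean and standard deviation of the preceding window. The CPU readings, window length, and threshold are illustrative assumptions.

```python
from statistics import mean, stdev


def rolling_anomalies(series, window=10, threshold=3.0):
    """Return indices whose value lies more than `threshold` standard deviations
    from the mean of the preceding `window` observations."""
    flagged = []
    for i in range(window, len(series)):
        history = series[i - window:i]  # trailing window, excludes the current point
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(series[i] - mu) > threshold * sigma:
            flagged.append(i)
    return flagged


# Hypothetical CPU-usage percentages with one spike at index 15.
cpu = [41, 43, 42, 40, 44, 42, 41, 43, 42, 44,
       43, 41, 42, 40, 43, 95, 42, 41, 43, 42]

print(rolling_anomalies(cpu))
```

A limitation of this simple form is that, once a spike enters the trailing window, it inflates the window's standard deviation and can mask later anomalies until it drops out. Decomposition or model-based approaches handle trend and seasonality, which this sketch ignores.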

Hybrid and Ensemble Methods

No single anomaly detection algorithm is universally superior for all types of data and anomalies. Different algorithms have different strengths and weaknesses. Hybrid and ensemble methods aim to overcome the limitations of individual algorithms by combining multiple techniques to achieve more robust and accurate anomaly detection.

Hybrid methods typically involve a multi-stage approach where different types of algorithms are used sequentially or in parallel. For example, a clustering algorithm might first be used to segment the data, and then a statistical or distance-based method could be applied within each cluster to find local anomalies. Another hybrid approach might combine a statistical model for global anomalies with a machine learning model for more complex, local anomalies.

Ensemble methods combine the outputs of multiple anomaly detection algorithms (or multiple instances of the same algorithm with different parameters or trained on different subsets of data). The idea is that by aggregating the "opinions" of several diverse detectors, the overall system can achieve better performance and be less sensitive to the weaknesses of any single detector. Common ensemble techniques include:

- Averaging/Weighted Averaging: The anomaly scores from multiple detectors are averaged (possibly with weights reflecting the perceived reliability of each detector) to produce a final anomaly score.

- Voting: Each detector "votes" on whether an instance is an anomaly, and the instance is flagged if it receives a sufficient number of votes.

- Feature Bagging: Multiple detectors are trained on different random subsets of features.

- Stacking: A meta-learner is trained to combine the outputs (anomaly scores or labels) of several base-level anomaly detectors.

Ensemble methods, particularly those based on diverse base learners, often lead to improved detection rates and a reduction in false positives. However, they can also be more computationally expensive and complex to implement and interpret than single algorithms.
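
Two of the combination rules above, averaging and voting, can be sketched as follows. The detector outputs and thresholds are invented, and rank normalization is one possible way (an assumption here, not the only option) to make scores on different scales comparable before averaging.

```python
def rank_normalize(scores):
    """Map raw scores to [0, 1] by rank, so detectors on different scales are comparable."""
    n = len(scores)
    order = sorted(range(n), key=scores.__getitem__)
    ranks = [0.0] * n
    for rank, idx in enumerate(order):
        ranks[idx] = rank / (n - 1) if n > 1 else 0.0
    return ranks


def average_ensemble(score_lists, weights=None):
    """Weighted average of rank-normalized scores from several detectors."""
    weights = weights or [1.0] * len(score_lists)
    normalized = [rank_normalize(s) for s in score_lists]
    total = sum(weights)
    n = len(score_lists[0])
    return [sum(w * norm[i] for w, norm in zip(weights, normalized)) / total
            for i in range(n)]


def majority_vote(score_lists, thresholds):
    """Flag an instance when more than half of the detectors exceed their own threshold."""
    needed = len(score_lists) // 2 + 1
    n = len(score_lists[0])
    return [
        sum(scores[i] > t for scores, t in zip(score_lists, thresholds)) >= needed
        for i in range(n)
    ]


# Hypothetical scores from three detectors for the same five instances.
detector_a = [0.10, 0.20, 0.15, 0.90, 0.30]  # probability-like score
detector_b = [1.2, 0.8, 1.0, 7.5, 1.1]       # distance-like score
detector_c = [3, 5, 2, 40, 45]               # count-like score, disagrees on the last instance

combined = average_ensemble([detector_a, detector_b, detector_c])
votes = majority_vote([detector_a, detector_b, detector_c], thresholds=[0.5, 3.0, 20])

print(combined)
print(votes)
```

In this example one detector ranks the last instance highest, but the other two do not, so the ensemble still singles out the fourth instance. That is the sense in which aggregation reduces sensitivity to any single detector's weaknesses.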

The book "Outlier Ensembles" specifically focuses on this advanced area of anomaly detection.

Real-World Applications of Anomaly Detection

The theoretical concepts and sophisticated algorithms of anomaly detection come to life in a wide array of real-world applications. Across diverse industries, these techniques are actively working to identify critical deviations, prevent losses, enhance security, and improve operational efficiency. Seeing how anomaly detection is applied in practice can provide a clearer understanding of its impact and importance.

From safeguarding financial systems to ensuring the reliability of industrial machinery and protecting digital networks, the practical uses of anomaly detection are both numerous and vital. These examples illustrate the tangible benefits that organizations derive from effectively identifying the "odd one out" in their data streams.

Fraud Detection in Banking

The banking and financial services industry is a prime example of where anomaly detection provides immense value, particularly in the ongoing battle against fraud. Financial institutions process vast numbers of transactions daily, and manually scrutinizing each one for fraudulent activity is impossible. Anomaly detection systems automate this process, identifying patterns and deviations that may indicate various types of fraud.

Common applications include:

- Credit Card Fraud: Identifying transactions that are unusual for a cardholder based on their typical spending habits, location, transaction amount, or merchant type. For example, a large purchase made in a foreign country when the cardholder has no history of international travel would be flagged.

- Insurance Fraud: Detecting suspicious claims by looking for anomalies in claim amounts, frequency, or patterns that deviate from typical claim behavior or known fraud schemes.

- Anti-Money Laundering (AML): Monitoring transactions for patterns indicative of money laundering, such as unusually large cash deposits, rapid movement of funds between accounts, or transactions involving high-risk jurisdictions.

- Loan Application Fraud: Identifying applications with inconsistent information, unusually high income claims for a given profession, or other indicators that deviate from legitimate application patterns.

Machine learning models are extensively used in this domain, learning from historical transaction data to identify subtle and evolving fraud tactics. The ability to detect fraud in real-time is crucial for minimizing financial losses and maintaining customer trust.

Individuals specializing in this area often pursue careers such as Fraud Analyst.

Predictive Maintenance in Manufacturing

In the manufacturing sector, unexpected equipment failures can lead to costly downtime, production delays, and safety hazards. Predictive maintenance, powered by anomaly detection, aims to prevent these failures by identifying early warning signs in machinery data.

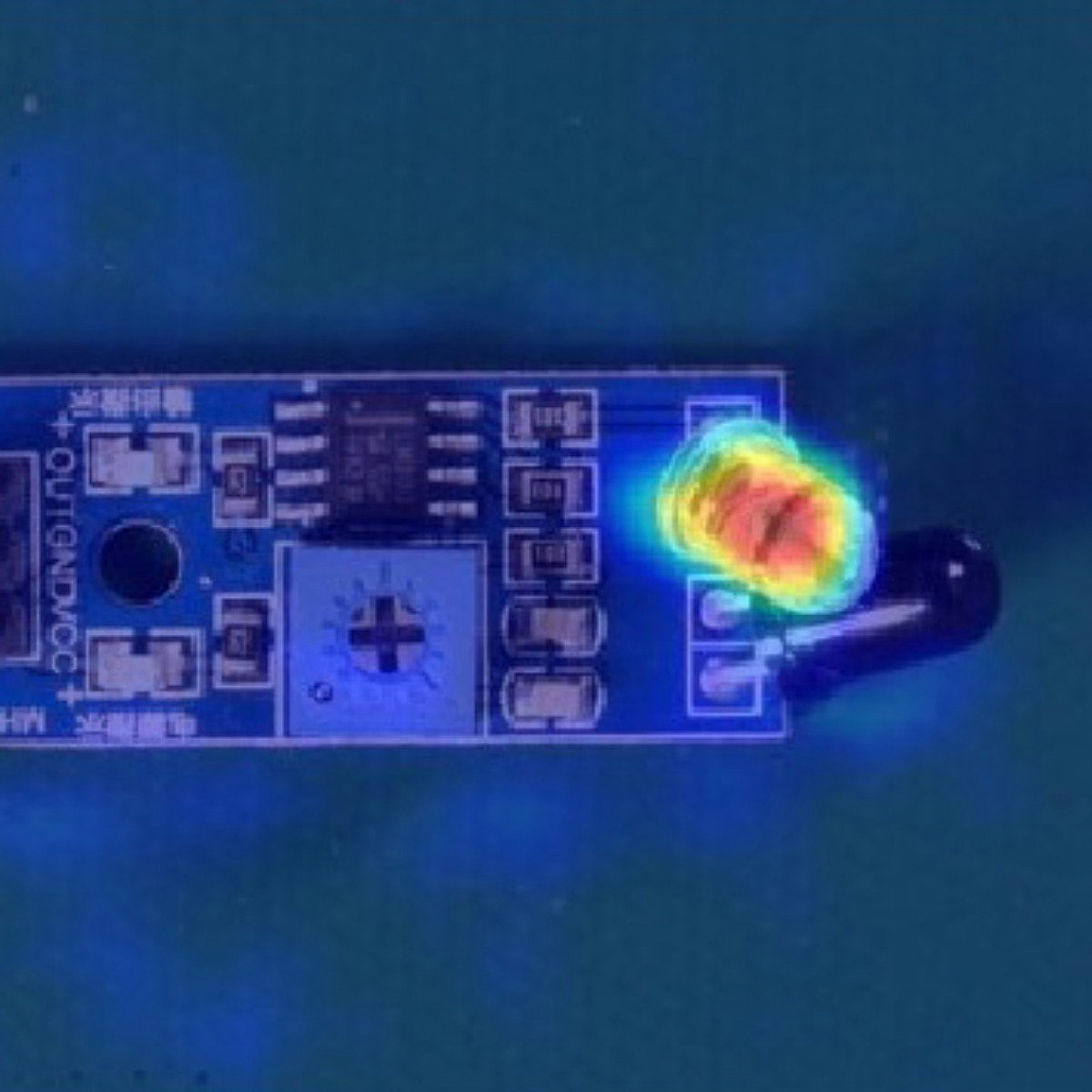

Modern manufacturing equipment is often equipped with numerous sensors that generate continuous streams of data on parameters like temperature, pressure, vibration, speed, and energy consumption. Anomaly detection algorithms analyze this sensor data to identify subtle deviations from normal operating patterns that might indicate wear and tear, impending component failure, or suboptimal performance.

For example:

- An unusual increase in the vibration levels of a motor might indicate a bearing is about to fail.

- A gradual rise in the operating temperature of a machine could signal a cooling system malfunction.

- Changes in energy consumption patterns might point to inefficiencies or developing faults.

By detecting these anomalies early, manufacturers can schedule maintenance proactively, before a catastrophic failure occurs. This not only reduces unplanned downtime but also extends the lifespan of equipment, optimizes maintenance schedules (avoiding unnecessary servicing), and improves overall operational efficiency. The Industrial Internet of Things (IIoT) plays a significant role here, providing the connectivity and data streams necessary for effective anomaly detection.
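One simple way to picture "deviation from normal operating patterns" is a trailing-window check on a single sensor. The sketch below compares each reading with the mean and spread of the previous few readings. The window size, the multiplier `k`, and the vibration values are illustrative assumptions, not recommended settings.

```python
from collections import deque
from statistics import mean, pstdev

def rolling_alerts(readings, window=5, k=3.0):
    """Return indices of readings that differ from the trailing-window mean
    by more than k times the trailing-window standard deviation."""
    recent = deque(maxlen=window)
    alerts = []
    for i, x in enumerate(readings):
        if len(recent) == window:
            mu = mean(recent)
            # Guard against a zero spread in a perfectly flat window.
            sigma = max(pstdev(recent), 1e-9)
            if abs(x - mu) > k * sigma:
                alerts.append(i)
        recent.append(x)
    return alerts

# Hypothetical motor vibration amplitudes; index 7 is a sudden jump.
vibration = [0.50, 0.52, 0.49, 0.51, 0.50, 0.51, 0.50, 0.95, 0.52]
alert_indices = rolling_alerts(vibration)
print(alert_indices)
```

Note that once a spike enters the window it inflates the spread, so the readings right after it are judged against a looser baseline. Slow drifts, such as a gradual temperature rise, are also hard to catch with a short trailing window and usually need a longer-term reference.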

A relevant career in this domain is that of a Quality Control Engineer.

Network Intrusion Detection

Cybersecurity is a domain where anomaly detection is indispensable for protecting networks and systems from a constantly evolving landscape of threats. Network Intrusion Detection Systems (NIDS) employ anomaly detection techniques to monitor network traffic and system logs for suspicious activities that deviate from established normal patterns.

Anomalous network behavior can manifest in various ways:

- Unusual Traffic Volumes: Sudden spikes or drops in network traffic, or unusually high data transfers to or from specific IP addresses.

- Atypical Protocols or Ports: Use of unexpected network protocols or communication over non-standard ports.

- Suspicious Connection Patterns: Attempts to connect to multiple systems in rapid succession (port scanning), connections from known malicious IP addresses, or unusual login patterns (e.g., logins from geographically distant locations in a short time frame).

- Novel or Zero-Day Attacks: Signature-based intrusion detection matches traffic against known malware patterns, so it misses attacks for which no signature exists yet. Anomaly detection complements it by focusing on behavioral deviations, which can reveal novel or zero-day attacks.

Machine learning algorithms, particularly unsupervised methods, are crucial here as they can adapt to new and unseen attack vectors. By establishing a baseline of normal network activity, these systems can flag deviations that might indicate reconnaissance efforts, malware propagation, data exfiltration attempts, or denial-of-service attacks. The goal is to provide early warnings to security analysts so they can investigate and mitigate potential threats before significant damage occurs.
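To make the port-scanning pattern concrete, here is a minimal sketch that counts how many distinct destination ports each source address touches within a short time window. It is a fixed-threshold heuristic, not a learned baseline like the systems described above. The event format, the addresses, the 10-second window, and the limit of 20 ports are all assumptions for illustration.

```python
from collections import defaultdict

def find_port_scanners(events, window_seconds=10, max_distinct_ports=20):
    """Return the set of source IPs that contact more than `max_distinct_ports`
    distinct ports within any `window_seconds` span.

    `events` is an iterable of (timestamp_seconds, source_ip, dest_port),
    assumed to be sorted by timestamp.
    """
    recent = defaultdict(list)  # source_ip -> [(timestamp, port), ...]
    flagged = set()
    for ts, src, port in events:
        hits = recent[src]
        hits.append((ts, port))
        # Drop connection attempts that fell out of the time window.
        cutoff = ts - window_seconds
        while hits and hits[0][0] < cutoff:
            hits.pop(0)
        if len({p for _, p in hits}) > max_distinct_ports:
            flagged.add(src)
    return flagged

# Hypothetical traffic: one host using HTTPS steadily, one probing 30 ports in 3 seconds.
normal = [(t, "10.0.0.5", 443) for t in range(30)]
scan = [(5 + i * 0.1, "10.0.0.99", 1 + i) for i in range(30)]
events = sorted(normal + scan)

scanners = find_port_scanners(events)
print(scanners)
```

A slow scan spread over hours would slip under this threshold, which is the kind of gap that baseline-driven and unsupervised approaches aim to close.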

A career path closely related to this application is that of a Security Analyst.

Exploring topics like Information Security and Cybersecurity can provide broader context.

Medical Diagnosis Systems

In the healthcare industry, anomaly detection is increasingly being explored and applied to aid in medical diagnosis and patient monitoring. The human body and its physiological signals are complex, and deviations from normal patterns can indicate the presence of disease or adverse health events.

Applications in this area include:

- Medical Imaging: Identifying anomalous regions in medical images like X-rays, MRIs, or CT scans that could indicate tumors, lesions, or other abnormalities. Deep learning models, particularly convolutional neural networks, have shown promise in this area.

- Patient Monitoring: Analyzing real-time physiological data from wearable sensors or bedside monitors (e.g., heart rate, blood pressure, oxygen saturation, ECG signals) to detect sudden changes or patterns that deviate from a patient's baseline or established normal ranges, potentially signaling a critical event.

- Disease Outbreak Detection: Monitoring public health data (e.g., hospital admission rates, symptom reporting) to identify unusual clusters or spikes in illnesses that could indicate the beginning of an epidemic.

- Genomic Anomaly Detection: Identifying unusual patterns or mutations in genetic sequences that might be associated with diseases.

Anomaly detection in healthcare can help clinicians make more informed decisions, enable earlier detection of diseases when they are more treatable, and improve patient outcomes. However, this field also comes with significant ethical considerations regarding data privacy and the potential impact of false positives or false negatives on patient care.
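For the outbreak-detection case, one classical way to ask "is this week's count unusual?" is to compare it with a historical average under a Poisson assumption. The sketch below computes the probability of seeing a count at least as high as the observed one. The Poisson model, the expected rate of 12 admissions per week, and the example counts are assumptions made for illustration. Real surveillance data often shows seasonality and overdispersion that this ignores.

```python
from math import exp

def poisson_tail(observed, expected):
    """Return P(X >= observed) for X ~ Poisson(expected)."""
    term = exp(-expected)  # P(X = 0)
    cdf = 0.0
    for k in range(observed):
        cdf += term                # add P(X = k)
        term *= expected / (k + 1) # advance to P(X = k + 1)
    return max(0.0, 1.0 - cdf)

# Hypothetical weekly admission counts against a historical mean of 12.
p_mild = poisson_tail(14, 12.0)   # slightly above average
p_spike = poisson_tail(30, 12.0)  # far above average
print(p_mild, p_spike)
```

A small tail probability suggests the count is hard to explain by chance alone, though the cut-off for raising an alert is a judgment call that trades false alarms against missed outbreaks.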

A relevant career is Healthcare Analyst, which may involve using anomaly detection for various health-related data analyses.

The broader field of Health & Medicine offers many opportunities for applying data science techniques.

Formal Education Pathways

For individuals aspiring to work in the field of anomaly detection, a strong educational foundation is typically essential. While self-directed learning can play a significant role, formal education often provides the structured knowledge in mathematics, statistics, computer science, and domain-specific areas that is crucial for understanding and developing sophisticated anomaly detection systems.

Universities and academic institutions offer various programs and courses that can equip students with the necessary theoretical understanding and practical skills. From undergraduate degrees that lay the groundwork to graduate studies that delve into specialized research, formal education pathways can pave the way for a successful career in this data-centric field.

Relevant Undergraduate Majors (CS, Statistics)

A bachelor's degree in a quantitative or computational field is generally the starting point for a career involving anomaly detection. Several undergraduate majors provide a strong foundation:

Computer Science (CS): This is perhaps the most common and direct pathway. A CS curriculum typically covers fundamental programming skills, data structures, algorithms, database management, and often an introduction to machine learning and artificial intelligence. These are all core competencies for developing and implementing anomaly detection systems. Courses in software engineering are also valuable for building robust and scalable solutions.

Statistics: A degree in statistics provides a deep understanding of probability theory, statistical modeling, hypothesis testing, and data analysis techniques. This is crucial because many anomaly detection methods are rooted in statistical principles. Statisticians are well-equipped to understand the assumptions behind different models, evaluate their performance rigorously, and interpret the results correctly.

Other relevant majors include:

- Mathematics: Provides a strong theoretical foundation in areas like linear algebra, calculus, and discrete mathematics, which are essential for understanding many machine learning algorithms.

- Data Science: An increasingly popular interdisciplinary major that combines elements of computer science, statistics, and domain expertise specifically geared towards extracting insights from data.

- Engineering (various disciplines): Fields like Electrical Engineering, Industrial Engineering, or Software Engineering can also be relevant, especially if they include a strong focus on data analysis, signal processing, or systems control.

Regardless of the specific major, it's beneficial to take courses in programming (Python and R are widely used), databases, machine learning, and statistical analysis. Building a solid quantitative and computational skillset is key.

You can explore courses in Computer Science and Mathematics on OpenCourser to build these foundational skills.

Graduate Research Opportunities

For those looking to delve deeper into the theoretical aspects of anomaly detection, contribute to cutting-edge research, or pursue more advanced roles, graduate studies (Master's or Ph.D.) offer significant opportunities. Graduate programs allow for specialization and in-depth exploration of specific areas within anomaly detection and related fields like machine learning, data mining, and artificial intelligence.

Research opportunities at the graduate level can focus on:

- Developing Novel Algorithms: Creating new anomaly detection techniques that are more accurate, efficient, scalable, or robust to different types of data and anomalies. This could involve work on deep learning architectures, ensemble methods, or algorithms for specific data types like time-series or graph data.

- Theoretical Foundations: Exploring the mathematical and statistical underpinnings of anomaly detection, aiming to better understand the properties and limitations of existing methods or to develop new theoretical frameworks.

- Domain-Specific Applications: Applying and adapting anomaly detection techniques to solve specific problems in areas like cybersecurity, finance, healthcare, climate science, or manufacturing. This often involves interdisciplinary collaboration.

- Explainability and Interpretability: Addressing the "black box" nature of some complex models by developing methods to explain why a particular instance was flagged as an anomaly. This is crucial for building trust and enabling actionable insights.

- Handling Data Challenges: Researching methods for dealing with issues like high-dimensional data, streaming data, concept drift (where the definition of "normal" changes over time), and adversarial attacks on anomaly detection systems.

Graduate research often involves working closely with faculty members who are experts in the field, publishing research papers, and presenting at academic conferences. This pathway is ideal for individuals passionate about pushing the boundaries of knowledge and developing innovative solutions.

Exploring topics like Artificial Intelligence and Data Science can lead to discovering specific graduate programs and research areas.

Key Coursework: Data Mining, Statistical Modeling

Within a formal education pathway, certain courses are particularly crucial for building the expertise needed in anomaly detection. Beyond foundational programming and mathematics, coursework that focuses on data analysis and modeling is key.

Data Mining: This course typically covers techniques for discovering patterns and knowledge from large datasets. Topics often include classification, clustering, association rule mining, and, importantly, outlier or anomaly detection itself. Students learn about various algorithms, their applications, and how to evaluate their performance. Understanding data mining principles is essential for effectively applying anomaly detection in practice. You can explore data mining courses to gain these skills.

Statistical Modeling: This area of study focuses on building mathematical models to represent relationships in data and to make inferences or predictions. Courses in statistical modeling cover topics like regression analysis, time series analysis, Bayesian methods, and generalized linear models. A strong grasp of statistical modeling helps in understanding the assumptions behind many anomaly detection techniques, particularly statistical ones, and in developing more nuanced approaches to defining "normal" behavior.

Other highly relevant coursework includes:

- Machine Learning: This is a cornerstone, covering supervised and unsupervised learning algorithms, model evaluation, feature engineering, and often deep learning fundamentals.

- Database Systems: Understanding how data is stored, managed, and queried is essential for working with the large datasets typically involved in anomaly detection.

- Probability Theory: Provides the mathematical foundation for understanding uncertainty and randomness, which is central to many statistical and machine learning models.

- Linear Algebra: Essential for understanding the mechanics of many machine learning algorithms, particularly those involving matrix operations (e.g., PCA, neural networks).

- Algorithm Design and Analysis: Helps in understanding the computational complexity and efficiency of different anomaly detection methods.

A curriculum that balances theoretical understanding with practical application, often through projects and lab work, is ideal for preparing for a career in anomaly detection.

Capstone/Thesis Project Ideas

A capstone project or thesis is a common requirement in many undergraduate and graduate programs, providing an opportunity for students to apply their knowledge to a substantial problem. For those interested in anomaly detection, such a project can be a valuable way to gain practical experience and develop a portfolio piece.

Potential project ideas could involve:

- Developing an Anomaly Detection System for a Specific Domain: For example, building a system to detect anomalies in IoT sensor data from a smart home, identifying unusual trading patterns in cryptocurrency markets, or detecting anomalies in public health datasets to spot potential disease outbreaks.

- Comparing the Performance of Different Anomaly Detection Algorithms: Selecting a specific dataset (or generating synthetic data with known anomalies) and systematically evaluating the performance of various statistical and machine learning techniques (e.g., Isolation Forest, One-Class SVM, Autoencoders) based on metrics like precision, recall, and F1-score.

- Investigating the Impact of Data Preprocessing on Anomaly Detection: Exploring how different preprocessing steps (e.g., normalization techniques, handling of missing values, feature engineering) affect the performance of anomaly detection algorithms on a chosen dataset.

- Exploring Anomaly Detection in Time-Series Data: Implementing and evaluating techniques for detecting anomalies in time-series data, perhaps focusing on a specific application like detecting anomalies in website traffic logs or financial time series.

- Building an Explainable Anomaly Detection System: Focusing not just on detecting anomalies but also on providing explanations for why a particular data point was flagged as anomalous. This could involve implementing or extending techniques for model interpretability.

- Anomaly Detection in High-Dimensional Data: Tackling the challenges of detecting anomalies in datasets with a very large number of features, potentially exploring dimensionality reduction techniques or algorithms specifically designed for high-dimensional spaces.
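For the algorithm-comparison idea, the evaluation metrics can be computed directly from true and predicted labels. The sketch below is a minimal version that treats 1 as "anomaly" and 0 as "normal". The label vectors are made up for the example.

```python
def precision_recall_f1(y_true, y_pred):
    """Compute precision, recall, and F1 for the anomaly class (label 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # anomalies correctly flagged
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # normal points wrongly flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # anomalies missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1

# Hypothetical ground truth and detector output for ten points.
y_true = [0, 0, 0, 1, 0, 1, 0, 0, 1, 0]
y_pred = [0, 0, 1, 1, 0, 0, 0, 0, 1, 0]

precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(precision, recall, f1)
```

Because anomalies are rare, plain accuracy would look high even for a detector that flags nothing, which is why these three metrics are the usual choice.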

The key to a successful capstone or thesis project is to choose a well-defined problem, select appropriate methods, conduct a thorough analysis, and clearly present the findings. Such projects can significantly enhance a student's resume and demonstrate practical skills to potential employers.

Self-Directed Learning Strategies

While formal education provides a strong foundation, the journey to mastering anomaly detection often involves significant self-directed learning. This is especially true given the rapidly evolving nature of machine learning and data science. For career pivoters or those looking to supplement their existing knowledge, proactive and structured self-study can be incredibly effective.

The abundance of online resources, open-source tools, and vibrant online communities has made it more accessible than ever to learn new technical skills independently. However, it requires discipline, a clear plan, and a commitment to hands-on practice. If you're charting your own course into anomaly detection, remember that the path can be challenging but also deeply rewarding. Set realistic goals, celebrate small victories, and don't be afraid to seek help or connect with fellow learners. Your dedication can open up exciting new possibilities.

Building Foundational Math/Stats Skills

A solid understanding of certain mathematical and statistical concepts is fundamental to grasping how anomaly detection algorithms work and how to apply them effectively. Even if you have a non-technical background, or if it's been a while since you've engaged with these topics, dedicating time to (re)build these foundations is a crucial first step.

Key areas to focus on include:

- Linear Algebra: Concepts like vectors, matrices, dot products, and eigenvalues/eigenvectors are central to many machine learning algorithms, including those used for dimensionality reduction (PCA) and neural networks (autoencoders).

- Calculus: Understanding derivatives and gradients is important for grasping how many machine learning models are optimized (e.g., through gradient descent).

- Probability Theory: Concepts like probability distributions (especially the normal distribution), conditional probability, and Bayes' theorem are foundational for statistical anomaly detection methods and for understanding uncertainty in models.

- Descriptive Statistics: Understanding measures like mean, median, mode, variance, standard deviation, and quartiles is essential for basic data exploration and for some simpler anomaly detection techniques (e.g., Z-score, IQR method).

- Inferential Statistics: Concepts like hypothesis testing and confidence intervals are important for evaluating the significance of findings and for understanding the assumptions behind statistical tests for outliers (e.g., Grubbs' test).
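The IQR method mentioned above fits in a few lines. This sketch uses the conventional multiplier of 1.5 and Python's built-in `statistics.quantiles` with its default quartile convention. The sample data is invented, and other quartile conventions would give slightly different fences.

```python
from statistics import quantiles

def iqr_outliers(values, k=1.5):
    """Return values lying more than k * IQR below Q1 or above Q3."""
    q1, _, q3 = quantiles(values, n=4)  # first, second, third quartile
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]

# Hypothetical measurements with one obvious outlier.
data = [10, 12, 11, 13, 12, 11, 10, 14, 12, 95]
outliers = iqr_outliers(data)
print(outliers)
```

Unlike the z-score, the quartiles are barely affected by the extreme value itself, so the method stays usable when the data contains a few large outliers.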

Numerous online courses, textbooks, and free resources are available to learn or refresh these concepts. Platforms like Khan Academy, Coursera, edX, and many university websites offer excellent introductory materials. Don't feel pressured to become an expert mathematician overnight. Focus on understanding the concepts intuitively and how they apply to data analysis and machine learning. Even a working knowledge can significantly enhance your ability to learn and apply anomaly detection techniques.

OpenCourser offers a wide range of courses in Mathematics, including foundational topics relevant to data science. You can also explore resources under the Data Science category for more applied learning.

Open-Source Tools (Python Libraries)

One of the most empowering aspects of learning anomaly detection today is the availability of powerful open-source tools, particularly within the Python ecosystem. These libraries provide ready-to-use implementations of many common algorithms, allowing learners and practitioners to experiment, build, and deploy anomaly detection solutions without having to code everything from scratch.

Some key Python libraries for anomaly detection include:

- Scikit-learn: This is a comprehensive machine learning library in Python that offers a wide array of algorithms for classification, regression, clustering, dimensionality reduction, model selection, and preprocessing. For anomaly detection, it includes implementations of One-Class SVM, Isolation Forest, Local Outlier Factor (LOF), and tools for robust covariance estimation. Its consistent API makes it relatively easy to switch between different models.

- PyOD (Python Outlier Detection): As the name suggests, PyOD is a dedicated library specifically focused on outlier detection. It provides access to a vast collection of anomaly detection algorithms, including many not found in Scikit-learn, covering statistical methods, nearest-neighbor based methods, clustering-based methods, ensemble techniques, and neural network-based approaches.

- TensorFlow and PyTorch: These are leading deep learning frameworks. While not exclusively for anomaly detection, they are essential for building more complex neural network models, such as autoencoders (including LSTM- or GRU-based variants for time-series data) or Generative Adversarial Networks (GANs), that can be used for anomaly detection tasks.

- Statsmodels: This library provides classes and functions for the estimation of many different statistical models, as well as for conducting statistical tests and statistical data exploration. It's useful for implementing more traditional statistical anomaly detection methods and for time-series analysis (e.g., ARIMA models).

- Pandas and NumPy: While not anomaly detection libraries themselves, Pandas (for data manipulation and analysis, especially with tabular data) and NumPy (for numerical operations, especially with arrays and matrices) are fundamental prerequisites for almost any data science work in Python, including preparing data for anomaly detection algorithms.

Getting comfortable with these tools involves not just learning the syntax but also understanding how to apply them to real datasets, preprocess data effectively, tune model parameters, and interpret the results. Many online tutorials, documentation pages, and community forums offer guidance and examples.

PyCaret, a library that simplifies many machine learning workflows, including anomaly detection, is another option for hands-on, project-based practice.

Learning Programming, particularly Python, is a crucial step. OpenCourser lists many introductory and advanced Python courses.

Kaggle Competitions/Personal Projects

Theoretical knowledge and familiarity with tools are essential, but true mastery in anomaly detection, as in any data science skill, comes from hands-on practice with real-world (or realistic) data. Engaging in Kaggle competitions and undertaking personal projects are excellent ways to develop practical skills, build a portfolio, and deepen your understanding.

Kaggle Competitions: Kaggle is a popular platform that hosts data science competitions. While not all competitions are specifically about anomaly detection, many datasets used in these competitions contain outliers or require careful data cleaning, which can provide relevant practice. Some competitions might explicitly focus on fraud detection, intrusion detection, or defect detection, all of which are anomaly detection tasks. Participating in competitions allows you to:

- Work with diverse and often messy datasets.

- See how others approach similar problems by studying public notebooks and discussion forums.

- Benchmark your solutions against others.

- Learn about new techniques and tools.

Personal Projects: Undertaking personal projects allows you to explore areas of anomaly detection that particularly interest you and to tailor the complexity to your current skill level. Consider projects like:

- Analyzing publicly available datasets (e.g., sensor data, financial data, network logs) to find anomalies.

- Implementing an anomaly detection algorithm from scratch (for deeper understanding) and then comparing it to library implementations.

- Building a simple web application that uses an anomaly detection model (e.g., a tool to upload a dataset and get potential outliers).

- Replicating the results of an interesting research paper in anomaly detection.

- Applying anomaly detection to a hobby or personal interest (e.g., detecting unusual patterns in sports statistics, analyzing your own fitness tracker data for anomalies).
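As a starting point for the "from scratch" project idea, the sketch below implements one of the simplest nearest-neighbor approaches: score each point by the distance to its k-th nearest neighbor, so isolated points get high scores. The choice of `k=2`, the brute-force search, and the sample points are assumptions for illustration. Library implementations use faster neighbor searches and more refined scores.

```python
from math import dist

def knn_scores(points, k=2):
    """Return, for each point, the Euclidean distance to its k-th nearest neighbor."""
    if not 1 <= k <= len(points) - 1:
        raise ValueError("k must be between 1 and len(points) - 1")
    scores = []
    for i, p in enumerate(points):
        # Distances to every other point, smallest first (brute force, O(n^2)).
        dists = sorted(dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(dists[k - 1])
    return scores

# Hypothetical 2-D points: a tight cluster plus one isolated point.
points = [(1.0, 1.0), (1.2, 0.9), (0.9, 1.1), (1.1, 1.0), (8.0, 8.0)]
scores = knn_scores(points, k=2)
most_anomalous = max(range(len(points)), key=scores.__getitem__)
print(most_anomalous, scores)
```

Comparing these scores with those from a library detector on the same data is a natural next step for the project.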

For both Kaggle competitions and personal projects, the process of defining the problem, acquiring and preprocessing data, selecting and tuning models, evaluating results, and iterating is invaluable for learning. Documenting your projects, perhaps in a GitHub repository or a blog post, can also showcase your skills to potential employers or collaborators.

OpenCourser's Learner's Guide offers tips on structuring your learning and making the most of online resources, which can be helpful when undertaking self-directed projects.

Mentorship and Community Engagement

Learning in isolation can be challenging, especially when tackling complex topics like anomaly detection. Engaging with communities and seeking mentorship can provide invaluable support, guidance, and motivation along your self-directed learning journey.

Mentorship: A mentor can be someone more experienced in the field who can offer advice, review your work, help you navigate challenges, and provide insights into career pathways. Mentors can be found through:

- Professional Networks: Platforms like LinkedIn can help you connect with professionals in data science and anomaly detection.

- University Alumni Networks: If you're a graduate, your university's alumni network might have experienced professionals willing to mentor.

- Online Communities: Some online forums or groups focused on data science or specific technologies might have informal mentorship opportunities.

- Formal Mentorship Programs: Some organizations or industry groups offer structured mentorship programs.

Even if you don't have a formal mentor, seeking advice from multiple experienced individuals can be very beneficial.

Community Engagement: Participating in data science and machine learning communities can enhance your learning experience significantly. This can take many forms:

- Online Forums: Websites like Stack Overflow, Reddit (e.g., r/datascience, r/MachineLearning), Kaggle forums, and specialized discussion groups are great places to ask questions, share knowledge, and learn from others' experiences.

- Meetups and Conferences: Attending local meetups or larger conferences (many now have virtual options) allows you to network with peers and experts, learn about the latest trends, and get inspired.

- Open Source Contributions: Contributing to open-source projects related to anomaly detection or machine learning libraries can be a great way to learn, collaborate, and build your reputation.

- Study Groups: Forming or joining a study group with fellow learners can provide mutual support, accountability, and opportunities to discuss concepts and solve problems together.

- Social Media: Following experts and organizations in the field on platforms like Twitter or LinkedIn can help you stay updated on new research, tools, and discussions.

Remember, learning is a journey, and being part of a supportive community can make it more enjoyable and effective. Don't hesitate to ask questions (after doing your own initial research) and to share what you've learned with others. This collaborative spirit is a hallmark of the data science community.

Career Progression in Anomaly Detection

A career involving anomaly detection can be both intellectually stimulating and impactful, with opportunities spanning various industries. As organizations increasingly rely on data to drive decisions and manage risks, the demand for professionals skilled in identifying and interpreting anomalies is growing. The career path can evolve from entry-level analytical roles to specialized technical positions and even leadership opportunities within data science and AI teams.

For those starting out, or considering a pivot into this area, understanding the potential career trajectories and the skills required at each stage is important. Building a strong portfolio and continuously developing your expertise are key to advancing in this dynamic field. While the journey requires dedication, the skills gained in anomaly detection are highly transferable and valuable in the broader data science landscape.

Entry-Level Roles (Data Analyst, Junior ML Engineer)

For individuals beginning their careers in or transitioning into fields related to anomaly detection, several entry-level roles can provide valuable experience and a pathway for growth. These roles often involve working with data, applying analytical techniques, and supporting more senior team members in developing and implementing anomaly detection solutions.

Data Analyst: This is a common entry point. Data Analysts typically collect, clean, analyze, and visualize data to identify trends, patterns, and insights. In the context of anomaly detection, a Data Analyst might be responsible for:

- Exploring datasets to understand normal behavior and identify potential outliers.

- Generating reports on detected anomalies and their potential impact.

- Assisting in the evaluation of anomaly detection models.

- Using business intelligence tools to monitor key metrics and flag deviations.

While not always directly building complex anomaly detection algorithms, Data Analysts develop crucial skills in data handling, interpretation, and communication. A Data Analyst role can serve as an excellent stepping stone.

Junior Machine Learning (ML) Engineer: For those with stronger programming and ML fundamentals, a Junior ML Engineer role can be a direct entry into the more technical aspects of anomaly detection. Responsibilities might include:

- Assisting in the development and implementation of ML models for anomaly detection.

- Preprocessing and preparing data for ML algorithms.

- Running experiments and tuning model parameters.

- Supporting the deployment and monitoring of ML models in production.

This role typically requires a good understanding of ML concepts, programming skills (often Python), and familiarity with ML libraries. You can explore the Career Development section on OpenCourser for more insights into such roles.

Other related entry-level positions could include Junior Data Scientist, Business Intelligence Analyst, or roles with a specific domain focus like Junior Fraud Analyst or Junior Security Analyst. The key is to gain hands-on experience with data and analytical tools, and to continuously learn and develop specialized skills in anomaly detection.

Mid-Career Specialization Paths

As professionals gain experience in anomaly detection and related data science fields, they often have opportunities to specialize further, deepening their expertise in specific areas or industries. Mid-career roles typically involve more responsibility, a higher degree of technical skill, and often a focus on solving more complex problems.

Potential specialization paths include:

- Senior Data Scientist / Machine Learning Engineer (focused on Anomaly Detection): These roles involve designing, developing, and deploying sophisticated anomaly detection models. Responsibilities include selecting appropriate algorithms, feature engineering, model tuning, and ensuring the robustness and scalability of solutions. They often lead projects and mentor junior team members.

- Domain-Specific Anomaly Detection Specialist: Focusing on anomaly detection within a particular industry, such as a Fraud Detection Specialist in finance, a Cybersecurity Threat Analyst, a Predictive Maintenance Specialist in manufacturing, or a Clinical Data Analyst in healthcare. These roles require deep domain knowledge in addition to technical skills.

- Research Scientist (Anomaly Detection): For those with advanced degrees (Ph.D.) or a strong research background, this path involves conducting research to develop new anomaly detection methodologies, publishing papers, and contributing to the advancement of the field. This is common in academic institutions or industrial research labs.

- MLOps Engineer (with Anomaly Detection focus): As anomaly detection models are increasingly deployed in production, MLOps Engineers specialize in the operational aspects, including model deployment, monitoring, retraining, and ensuring the reliability and performance of these systems in live environments.

Mid-career professionals often need to stay updated with the latest advancements in machine learning and anomaly detection techniques. Continuous learning, attending conferences, and participating in professional communities are important for career growth. Specialization allows individuals to become recognized experts in their chosen niche, leading to more challenging and rewarding opportunities.

Leadership Opportunities in AI/ML Teams

With significant experience and a proven track record in anomaly detection and broader AI/ML fields, professionals can advance into leadership roles. These positions involve managing teams, setting strategic direction, and overseeing the development and deployment of AI-driven solutions, including those focused on anomaly detection.

Leadership opportunities can include:

- Lead Data Scientist / Lead ML Engineer: These roles involve leading a team of data scientists or ML engineers, providing technical guidance, overseeing project execution, and ensuring the quality and effectiveness of the models developed. They often bridge the gap between technical teams and business stakeholders.

- Manager of Data Science / AI: This role involves managing a larger department or group focused on data science and AI initiatives. Responsibilities include resource allocation, talent development, setting research agendas, and aligning AI strategies with overall business goals.

- Director or VP of AI/Analytics: These senior leadership positions involve defining the organization's vision for AI and analytics, driving innovation, managing budgets, and representing the AI/ML function at the executive level. They play a key role in how the organization leverages data and AI for competitive advantage and risk management.

- Chief Data Officer (CDO) or Chief Analytics Officer (CAO): These executive-level roles are responsible for the organization's overall data strategy, governance, and analytics capabilities. Anomaly detection is one component of that broader data-driven strategy.

Leadership in AI/ML requires not only strong technical understanding but also excellent communication, strategic thinking, project management, and people management skills. Leaders in this space must be able to articulate the value of anomaly detection and other AI initiatives to diverse audiences, foster a culture of innovation, and navigate the ethical and operational challenges associated with deploying AI systems. The ability to translate complex technical concepts into business impact is crucial at this level.

For those aspiring to leadership, developing skills in Management and Professional Development is highly recommended.

Portfolio Development Strategies

For anyone pursuing a career in anomaly detection, especially those who are early in their career or making a career transition, a strong portfolio is essential. A portfolio showcases your practical skills, your ability to solve real-world problems, and your passion for the field. It provides tangible evidence of your capabilities beyond what's listed on a resume.

Effective strategies for portfolio development include:

- Personal Projects: As mentioned in the discussion of self-directed learning, undertake projects that apply anomaly detection techniques to interesting datasets. Choose projects that let you demonstrate a range of skills, from data acquisition and preprocessing to model selection, implementation, evaluation, and interpretation of results.

- Kaggle Competitions and Other Challenges: Participate in online data science competitions. Even if you don't win, the process of working on the problem and the code you produce can be valuable additions to your portfolio. Document your approach and learnings.

- Open Source Contributions: Contributing to open-source libraries related to anomaly detection, machine learning, or data science can demonstrate your coding skills, your ability to collaborate, and your commitment to the community.

- Blog Posts or Technical Articles: Write about your projects, explain complex anomaly detection concepts in an accessible way, or share your learnings from a particular course or research paper. This demonstrates your communication skills and your ability to articulate technical ideas. Platforms like Medium, DEV.to, or a personal blog are good options.

- GitHub Repository: Host your project code, analyses, and documentation on GitHub. This allows potential employers to see your work directly. Ensure your code is clean, well-commented, and that your projects have clear README files explaining the problem, your approach, and the results.

- Visualizations and Dashboards: If your project involves identifying anomalies, create compelling visualizations or interactive dashboards (e.g., using tools like Matplotlib, Seaborn, Plotly, Tableau, or Power BI) to present your findings. This showcases your ability to communicate insights effectively. You can explore data visualization skills.

When building your portfolio, focus on quality over quantity. A few well-executed projects that demonstrate depth of understanding are more valuable than many superficial ones. Tailor your portfolio to the types of roles you are targeting, highlighting the skills and experiences most relevant to those positions. Regularly update your portfolio as you complete new projects and learn new skills.

OpenCourser's profile settings allow you to showcase your achievements and link to external portfolios, which can be helpful when you've curated a strong collection of your work.

Ethical Challenges in Anomaly Detection

While anomaly detection offers powerful capabilities for identifying critical deviations and driving insights, its application is not without ethical challenges. As these systems become more integrated into decision-making processes across various domains, it's crucial to consider their potential societal impacts and to develop and deploy them responsibly.

Addressing these ethical considerations is not just a matter of compliance but is fundamental to building trust in AI systems and ensuring they are used in a fair, transparent, and accountable manner. Ignoring these challenges can lead to biased outcomes, privacy violations, and a loss of public confidence in the technology.

Bias in Training Data

One of the most significant ethical challenges in anomaly detection, as with many machine learning applications, is the potential for bias in training data. Anomaly detection models learn what constitutes "normal" behavior from the data they are trained on. If this training data reflects existing societal biases or historical prejudices, the model can inadvertently learn and perpetuate these biases, leading to unfair or discriminatory outcomes.

For example:

- In fraud detection, if historical data shows that certain demographic groups have been unfairly scrutinized or flagged more often (perhaps due to biased human practices in the past), an anomaly detection model trained on this data might learn to flag individuals from these groups as "anomalous" at a higher rate, even for legitimate behavior.

- In predictive policing, if arrest data (which can be influenced by biased policing practices) is used to detect "anomalous" crime patterns, the system might disproportionately target certain neighborhoods or communities, reinforcing existing inequalities.

- In hiring, if a system is trained to detect "anomalous" resumes based on past successful hires, and if those past hires were predominantly from a certain demographic, the system might unfairly flag candidates from underrepresented groups as anomalous.

Addressing data bias requires careful attention throughout the machine learning lifecycle. This includes:

- Data Auditing: Examining training datasets for potential biases and representational gaps.

- Bias Mitigation Techniques: Employing algorithmic techniques designed to detect and reduce bias in models, such as re-weighting data, adversarial debiasing, or fairness-aware learning algorithms.

- Diverse and Representative Data: Striving to collect and use training data that is as diverse and representative as possible of the population the system will affect.

- Human Oversight: Ensuring that human reviewers are involved in scrutinizing the outputs of anomaly detection systems, especially in sensitive applications, to catch and correct biased outcomes.

Recognizing and actively working to mitigate bias in training data is crucial for ensuring that anomaly detection systems are fair and equitable.
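The data auditing step described above can be sketched in a few lines. This is a minimal illustration, not a complete fairness audit: the function names, the group labels "A" and "B", and the sample records are all hypothetical, and comparing flag rates across groups is only one of several possible checks.

```python
from collections import defaultdict


def flag_rates_by_group(records):
    """Return the fraction of records flagged as anomalous within each group.

    `records` is an iterable of (group_label, was_flagged) pairs.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, was_flagged in records:
        totals[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Ratio of the lowest to the highest group flag rate (1.0 means equal rates)."""
    highest = max(rates.values())
    lowest = min(rates.values())
    return lowest / highest if highest > 0 else 1.0


# Hypothetical audit sample: (group label, did the detector flag this record?)
sample = [
    ("A", True), ("A", False), ("A", False), ("A", False),
    ("B", True), ("B", True), ("B", False), ("B", False),
]

rates = flag_rates_by_group(sample)
ratio = disparity_ratio(rates)
print(rates, ratio)
```

A ratio well below 1.0 does not prove the model is unfair, since base rates can legitimately differ between groups, but it is a signal that the flagged cases deserve the human review described above.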

Privacy Concerns with Monitoring Systems

Anomaly detection systems often rely on the collection and analysis of large volumes of data, which can include sensitive personal information. This raises significant privacy concerns, particularly when these systems are used for monitoring individuals' behavior, communications, or activities.

For example:

- Employee Monitoring: Systems that detect anomalous employee behavior by analyzing email content, web browsing history, or keystroke patterns can be perceived as invasive and erode trust if not implemented transparently and with clear justification.

- Surveillance Systems: Anomaly detection in video surveillance or public sensor networks, while potentially useful for security, can lead to concerns about mass surveillance and the chilling effect on public life if not carefully regulated.

- Healthcare Monitoring: While detecting anomalies in patient data can be life-saving, it also involves handling highly sensitive medical information, requiring stringent safeguards to prevent unauthorized access or breaches.

- Financial Transaction Monitoring: Tracking financial activities to detect fraud or money laundering involves analyzing personal financial data, which necessitates robust security and adherence to privacy regulations.

To address these privacy concerns, several principles and practices are important:

- Data Minimization: Collecting only the data that is strictly necessary for the anomaly detection task.

- Anonymization and Pseudonymization: Removing or obscuring personally identifiable information from datasets where possible, though re-identification can still be a risk.

- Encryption: Protecting data both in transit and at rest using strong encryption methods.

- Transparency: Being clear with individuals about what data is being collected, how it is being used for anomaly detection, and what their rights are.

- Consent: Obtaining informed consent for data collection and processing where appropriate.

- Access Controls: Implementing strict access controls to ensure that only authorized personnel can access sensitive data.
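Two of these practices, data minimization and pseudonymization, lend themselves to a short sketch. The example below is an assumption-laden illustration rather than a recommended production design: the field names, the sample record, and the hard-coded key are hypothetical, and in practice the key would be kept in a secrets manager. It uses a keyed hash (HMAC-SHA256) from the Python standard library so that identifiers cannot be recomputed without the key.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash so records can still be linked."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, needed_fields: set) -> dict:
    """Keep only the fields the anomaly detection task actually needs."""
    return {key: value for key, value in record.items() if key in needed_fields}


# Hypothetical key and record, for illustration only.
key = b"example-key-kept-outside-source-control"
raw = {
    "customer_email": "alice@example.com",
    "home_address": "1 Example Street",
    "amount": 1250.0,
    "merchant": "m-42",
}

safe = minimize(raw, {"amount", "merchant"})
safe["customer_id"] = pseudonymize(raw["customer_email"], key)
print(safe)
```

The same email always maps to the same pseudonym under a given key, so a detector can still model per-customer behavior. As noted above, this reduces but does not eliminate re-identification risk, because the remaining fields may still single out an individual.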

Balancing the benefits of anomaly detection against the fundamental right to privacy is a delicate ethical task. Adherence to applicable data protection regulations, such as the GDPR (General Data Protection Regulation) in the European Union or the CCPA (California Consumer Privacy Act) in California, is essential. You can learn more about data protection and privacy regulations through resources such as the official GDPR website.

False Positive/Negative Tradeoffs

Anomaly detection systems are not perfect; they will inevitably make errors. These errors come in two main forms: false positives (normal instances incorrectly flagged as anomalies) and false negatives (actual anomalies that are missed). The tradeoff between these two types of errors has significant ethical and practical implications, and how this tradeoff is managed depends heavily on the context of the application.

False Positives (Type I Error):

- Consequences: Can lead to wasted resources (e.g., investigating a benign event), unnecessary alarms causing "alert fatigue" for analysts, disruption of normal operations (e.g., blocking legitimate transactions or network traffic), or unfair treatment of individuals (e.g., wrongly accusing someone of fraud or suspicious activity).

- When to Minimize: In systems where the cost of a false alarm is very high, or where false alarms can cause significant inconvenience or harm.

False Negatives (Type II Error):

- Consequences: Can lead to missed opportunities to prevent harm (e.g., failing to detect a critical system failure before it occurs, missing a genuine fraud attempt, or not identifying a serious security breach). The impact can be severe, including financial loss, safety risks, or compromised security.

- When to Minimize: In systems where failing to detect an anomaly has critical consequences, such as in medical diagnosis of life-threatening conditions or in detecting safety-critical equipment failures.

The decision of whether to tune a system for higher precision (fewer false positives) or higher recall (fewer false negatives) is often a difficult one and involves weighing the potential costs and benefits of each type of error. This decision should involve not only technical experts but also domain experts and stakeholders who understand the real-world impact of these errors. Transparency about the system's error rates and the rationale behind the chosen tradeoff is also important for building trust and accountability.
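To make the tradeoff concrete, the sketch below computes precision and recall at different alert thresholds. The anomaly scores and ground-truth labels are made-up illustrative values, and the convention that a score at or above the threshold raises an alert is an assumption of this example.

```python
def precision_recall(scores, labels, threshold):
    """Compute precision and recall when scores >= threshold are flagged."""
    tp = fp = fn = 0
    for score, is_anomaly in zip(scores, labels):
        flagged = score >= threshold
        if flagged and is_anomaly:
            tp += 1  # true positive: real anomaly caught
        elif flagged and not is_anomaly:
            fp += 1  # false positive: normal instance flagged
        elif not flagged and is_anomaly:
            fn += 1  # false negative: real anomaly missed
    precision = tp / (tp + fp) if (tp + fp) else 1.0
    recall = tp / (tp + fn) if (tp + fn) else 1.0
    return precision, recall


# Hypothetical anomaly scores and ground-truth labels (True = real anomaly).
scores = [0.95, 0.90, 0.70, 0.60, 0.40, 0.30, 0.20, 0.10]
labels = [True, True, False, True, False, False, True, False]

for threshold in (0.8, 0.5, 0.15):
    precision, recall = precision_recall(scores, labels, threshold)
    print(f"threshold={threshold}: precision={precision:.2f}, recall={recall:.2f}")
```

On this toy data, the strict threshold of 0.8 raises no false alarms but misses half of the real anomalies, while the lenient threshold of 0.15 catches every anomaly at the cost of three false alarms. Which end of that range is acceptable is exactly the context-dependent decision described above.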

Regulatory Compliance (GDPR, HIPAA)

The use of anomaly detection systems, especially those processing personal or sensitive data, is increasingly subject to legal and regulatory frameworks. Compliance with these regulations is not just a legal obligation but also an ethical imperative, ensuring that data is handled responsibly and individuals' rights are protected.

Key regulations that often impact anomaly detection applications include: