Text Processing

Comprehensive Guide to Text Processing

Text processing is a fundamental component of how we interact with and harness the vast amounts of textual data generated every day. At its core, text processing involves the automated manipulation, analysis, and understanding of human language by computers. This can range from simple tasks like finding and replacing words in a document to complex operations such as understanding the sentiment expressed in a news article or translating text from one language to another. Given the exponential growth of digital text data, from social media posts to academic papers and business reports, the ability to effectively process this information is becoming increasingly crucial across numerous fields.

Working in text processing can be an engaging and exciting endeavor for several reasons. Firstly, it's a field at the forefront of artificial intelligence and data science, offering opportunities to work on cutting-edge problems and develop innovative solutions. Imagine building systems that can automatically summarize lengthy legal documents, power intelligent chatbots that provide instant customer support, or analyze patient records to identify potential health risks. Secondly, the interdisciplinary nature of text processing means you'll often collaborate with experts from diverse backgrounds, including linguists, computer scientists, and domain specialists, fostering a rich learning environment. Finally, the societal impact of text processing is profound, with applications spanning healthcare, finance, education, and beyond, allowing you to contribute to meaningful advancements that can improve lives and transform industries.

Introduction to Text Processing

This section will introduce you to the foundational concepts of text processing, its historical development, and its relationship with other key technological domains.

Definition and Scope of Text Processing

Text processing, in its broadest sense, refers to the theory and practice of automating the creation, manipulation, and analysis of electronic text. It encompasses a wide array of techniques and methodologies aimed at transforming unstructured text data into a structured and understandable format that computers can efficiently work with. The scope of text processing is vast, ranging from basic operations like editing and formatting text to more sophisticated tasks such as information retrieval, text mining, and natural language understanding. It forms the bedrock for many applications we use daily, from search engines that help us find information online to spam filters that protect our inboxes.

The initial stages of text processing often involve cleaning and preparing the text. This might include removing irrelevant characters or formatting, correcting spelling errors, and handling different character encodings. Once the text is in a usable state, various analytical techniques can be applied. For instance, one might want to count the frequency of words, identify common phrases, or categorize documents based on their topics. More advanced text processing delves into understanding the meaning and context of the text, enabling applications like machine translation, sentiment analysis, and question answering.

Ultimately, the goal of text processing is to extract valuable insights and knowledge from textual data. As the volume of digital text continues to explode, the importance of efficient and effective text processing techniques becomes ever more apparent. It is a dynamic field that continually evolves to meet the challenges posed by the complexity and nuances of human language.

Historical Evolution and Key Milestones

The journey of text processing began long before the advent of modern computers, with early efforts focused on mechanical aids for writing and printing. However, the digital era truly revolutionized the field. Early digital text processing in the mid-20th century centered on basic tasks like sorting and searching, driven by the needs of information retrieval and library science. The development of programming languages and operating systems with built-in text manipulation capabilities, such as those found in Unix-based systems, provided powerful tools for developers and researchers.

A significant milestone was the emergence of Natural Language Processing (NLP) as a distinct field of study. NLP aimed to enable computers to understand and generate human language in a way that is both meaningful and useful. Early NLP systems focused on rule-based approaches, relying on hand-crafted grammars and lexicons. While these systems achieved some success in limited domains, they struggled with the ambiguity and variability inherent in human language.

The statistical revolution in the late 20th and early 21st centuries marked another major turning point. Machine learning algorithms, trained on large collections of text data (corpora), began to outperform rule-based systems in many NLP tasks. Techniques like n-grams, probabilistic models, and later, support vector machines, became standard tools. More recently, the rise of deep learning and neural networks, particularly transformer architectures, has led to rapid advances, with models like BERT and GPT reaching or approaching human-level scores on a number of benchmark language tasks. The increasing availability of computational power and vast datasets continues to fuel innovation in text processing.

Core Objectives: Data Extraction, Transformation, Analysis

The core objectives of text processing can be broadly categorized into three interconnected stages: data extraction, data transformation, and data analysis.

Data Extraction is the initial step, focused on identifying and retrieving relevant textual information from various sources. This could involve scraping text from websites, pulling data from databases, reading text from files in different formats (like PDFs or Word documents), or even converting spoken language into text through speech recognition. The challenge here often lies in dealing with diverse and sometimes messy data sources, requiring robust methods to handle inconsistencies and errors.

Data Transformation follows extraction and involves converting the raw text into a more structured and usable format. This is a critical phase where the text is cleaned, normalized, and prepared for analysis. Common transformation tasks include removing irrelevant characters or HTML tags, converting all text to lowercase, correcting spelling mistakes, and segmenting the text into meaningful units like sentences or words (a process known as tokenization). More advanced transformations might involve stemming (reducing words to their root form) or lemmatization (reducing words to their dictionary form), and part-of-speech tagging (identifying the grammatical role of each word).

Data Analysis is the final stage, where the processed text is examined to uncover insights, patterns, and knowledge. This can range from simple descriptive analytics, like calculating word frequencies or identifying common topics, to more sophisticated predictive and prescriptive analytics. Examples include sentiment analysis to determine the emotional tone of a text, topic modeling to discover underlying themes in a collection of documents, named entity recognition to identify key entities like people, organizations, and locations, and machine translation to convert text from one language to another. The specific analytical techniques employed will depend heavily on the goals of the text processing task.

Relationship to Fields Like NLP, Data Science, and Linguistics

Text processing is not an isolated discipline; it is deeply intertwined with several other fields, most notably Natural Language Processing (NLP), Data Science, and Linguistics. Understanding these relationships provides a richer context for the role and significance of text processing.

Natural Language Processing (NLP) is perhaps the most closely related field. In fact, text processing techniques form the foundational toolkit for most NLP applications. While text processing focuses on the manipulation and preparation of text data, NLP aims to imbue computers with the ability to understand, interpret, and generate human language. Tasks like machine translation, sentiment analysis, and chatbot development are all core NLP problems that rely heavily on underlying text processing methodologies for cleaning, tokenizing, and structuring textual input.

Data Science is a broader, interdisciplinary field that uses scientific methods, processes, algorithms, and systems to extract knowledge and insights from data in various forms, both structured and unstructured. Text is a significant source of unstructured data, and text processing skills are therefore essential for data scientists. Many data science projects involve analyzing textual data to understand customer feedback, identify market trends, or build predictive models. Techniques from text processing are used to convert raw text into features that can be fed into machine learning models, a crucial step in the data science workflow.

Linguistics, the scientific study of language, provides the theoretical underpinnings for much of text processing and NLP. Concepts from linguistics, such as phonology (sound systems), morphology (word formation), syntax (sentence structure), and semantics (meaning), inform the design of algorithms and models used to process and understand text. For example, understanding sentence structure (syntax) is crucial for tasks like information extraction, while knowledge of word meanings (semantics) is vital for machine translation and question answering. While text processing often takes a computational approach, the insights from linguistic theory are invaluable for developing more sophisticated and accurate language technologies.

Key Concepts in Text Processing

To effectively work with text, a solid understanding of several key concepts is necessary. These concepts form the building blocks for more advanced text processing techniques and applications.

Tokenization, Stemming, and Lemmatization

At the heart of processing text is the need to break it down into manageable units and normalize these units for consistent analysis. Three fundamental techniques for this are tokenization, stemming, and lemmatization.

Tokenization is the process of segmenting a stream of text into smaller pieces, known as tokens. These tokens are often words, but they can also be phrases, symbols, or other meaningful elements depending on the specific task. For example, the sentence "Text processing is fascinating!" might be tokenized into "Text", "processing", "is", "fascinating", and "!". Tokenization is a crucial first step in many text processing pipelines as it converts a raw string of characters into a list of items that can be more easily analyzed or fed into subsequent processing stages.
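As a minimal sketch of the idea, the example sentence above can be tokenized with a single regular expression from Python's standard `re` module. The function name `tokenize` and the pattern are illustrative assumptions; production tokenizers in libraries such as NLTK or spaCy handle many more cases.

```python
import re

def tokenize(text):
    """Split text into word tokens and single punctuation tokens."""
    # \w+ matches a run of letters, digits, or underscores;
    # [^\w\s] matches one character that is neither a word character nor whitespace.
    return re.findall(r"\w+|[^\w\s]", text)

tokens = tokenize("Text processing is fascinating!")
print(tokens)  # ['Text', 'processing', 'is', 'fascinating', '!']
```

A pattern this simple splits contractions awkwardly ("don't" becomes three tokens), which is one reason real pipelines usually rely on a dedicated tokenizer.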

Stemming is the process of reducing inflected (or sometimes derived) words to a common stem or base form. The stem itself may not be a valid word in the language; a typical rule-based stemmer reduces "studies" to "studi", for example. Words such as "running" and "runs" would both be stemmed to "run", whereas an irregular form like "ran" is usually left unchanged, because stemming algorithms apply heuristic rules that chop off the ends of words rather than consulting a dictionary. The goal is to group variations of a word together for analysis, treating them as a single concept. This can be useful in information retrieval, where a search for "boats" should also find documents containing "boat".
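To make the "heuristic rules" concrete, here is a deliberately tiny suffix-stripping stemmer. It is a toy written for this guide, not the Porter algorithm or any library's implementation; the suffix list, the minimum stem length, and the name `toy_stem` are all assumptions chosen for illustration.

```python
SUFFIXES = ("ing", "ed", "es", "s")  # tried in this order

def toy_stem(word):
    """Strip one common English suffix using crude heuristics."""
    word = word.lower()
    for suffix in SUFFIXES:
        # Only strip if at least three characters of stem would remain.
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            stem = word[: -len(suffix)]
            # Undo a doubled final consonant: "runn" -> "run".
            # Doubled l and s are left alone ("pass", "fall").
            if len(stem) >= 2 and stem[-1] == stem[-2] and stem[-1] not in "aeiouls":
                stem = stem[:-1]
            return stem
    return word

for w in ["running", "runs", "boats", "studies", "ran"]:
    print(w, "->", toy_stem(w))
```

Note that "studies" comes out as "studi", which is not a word, and that the irregular form "ran" is left untouched: rules that only look at word endings cannot relate it to "run".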

Lemmatization is similar to stemming in that it reduces words to a base form, but the result is always a dictionary form, known as the lemma. Rather than chopping off endings heuristically, a lemmatizer uses a vocabulary and morphological analysis, normally aiming to remove inflectional endings only. For instance, the word "better" has "good" as its lemma, a mapping that no suffix rule could produce. This is a more sophisticated process than stemming because it often requires knowing the part of speech and context of a word to determine its correct lemma. While computationally more intensive, lemmatization often leads to more accurate results in tasks requiring a deeper understanding of language.
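The dependence on vocabulary and part of speech can be illustrated with a lookup-table sketch. The table below is hypothetical and covers only a handful of words; real lemmatizers (for example those shipped with NLTK or spaCy) draw on full lexicons and morphological rules.

```python
# Hypothetical mini-lexicon: (word, part of speech) -> lemma.
LEMMAS = {
    ("better", "ADJ"): "good",
    ("ran", "VERB"): "run",
    ("running", "VERB"): "run",
    ("mice", "NOUN"): "mouse",
    ("saw", "VERB"): "see",   # "I saw the film"
    ("saw", "NOUN"): "saw",   # "a rusty saw"
}

def toy_lemmatize(word, pos):
    """Return the lemma for (word, pos), or the lowercased word if unknown."""
    return LEMMAS.get((word.lower(), pos), word.lower())

print(toy_lemmatize("better", "ADJ"))  # good
print(toy_lemmatize("saw", "VERB"))    # see
print(toy_lemmatize("saw", "NOUN"))    # saw
```

The two entries for "saw" show why the part of speech matters: the same surface form maps to different lemmas depending on its grammatical role.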

Understanding these fundamental techniques is crucial for anyone venturing into text processing.

Regular Expressions and Pattern Matching

Regular expressions, often shortened to "regex" or "regexp," are a powerful tool for text processing, providing a concise and flexible means for matching strings of text, such as particular characters, words, or patterns of characters. They are a fundamental concept for anyone working with textual data, enabling complex search-and-replace operations and sophisticated data validation.

At its core, a regular expression is a sequence of characters that defines a search pattern. These patterns are used by string searching algorithms for "find" or "find and replace" operations on strings, or for input validation. For example, a simple regular expression like `^\d{5}$` could be used to check if a string consists of exactly five digits, a common validation for a US ZIP code. More complex patterns can identify email addresses, URLs, phone numbers, or specific grammatical structures within text.

Mastering regular expressions involves understanding the special syntax used to define these patterns. This includes metacharacters (special characters with meanings beyond their literal interpretation, like `*`, `+`, `?`, `[]`, `{}`), character classes (sets of characters like `\d` for digits or `\w` for word characters), quantifiers (specifying how many times a character or group should appear), and anchors (specifying positions like the beginning or end of a line). While the syntax can appear daunting at first, the power and versatility of regular expressions make them an indispensable skill for text processing tasks ranging from data cleaning and extraction to parsing and transformation.
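The following sketch shows anchors, character classes, and quantifiers at work using Python's standard `re` module. The helper name `is_zip` and the email pattern are assumptions made for illustration; in particular, the email pattern is intentionally simple and does not cover every valid address.

```python
import re

# Anchors (^, $), a character class (\d), and a quantifier ({5}).
ZIP_RE = re.compile(r"^\d{5}$")

# One or more word characters, dots, plus signs, or hyphens, then "@",
# then a domain made of dot-separated labels.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

def is_zip(s):
    """True if s consists of exactly five digits."""
    # fullmatch also rejects a trailing newline, which "$" alone would allow.
    return ZIP_RE.fullmatch(s) is not None

print(is_zip("12345"))   # True
print(is_zip("1234a"))   # False

text = "Contact alice@example.com or bob.smith@mail.example.org."
print(EMAIL_RE.findall(text))
# ['alice@example.com', 'bob.smith@mail.example.org']
```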

Text Normalization Techniques

Text normalization is a crucial preprocessing step in text processing that aims to put all text on a level playing field, ensuring that variations in form do not hinder effective analysis. It involves transforming text into a canonical, or standard, form. This process helps in reducing noise and redundancy in the data, making subsequent analysis more accurate and efficient.

Several techniques fall under the umbrella of text normalization. Case folding, for instance, involves converting all characters to a single case, typically lowercase. This ensures that words like "Text", "text", and "TEXT" are treated as the same token. Another common technique is the removal of punctuation, although the decision to do so depends on the specific task; sometimes punctuation carries important semantic information (e.g., in sentiment analysis). Stop word removal involves eliminating common words that carry little semantic meaning, such as "the", "is", "in", "and". These words occur frequently but often don't help in distinguishing between documents or understanding the core topics.

Other normalization techniques can include correcting spelling errors, expanding contractions (e.g., "don't" to "do not"), handling special characters and symbols, and even converting numbers to their word equivalents or a standardized numerical format. The choice of which normalization techniques to apply depends heavily on the nature of the text data and the goals of the text processing task. For example, in information retrieval, aggressive normalization might be beneficial to ensure comprehensive matching, while in tasks like authorship attribution, preserving subtle stylistic variations might be more important.
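Several of the techniques above can be chained into one small function. This is a sketch under stated assumptions: the contraction table and stop word list are tiny hand-picked samples, and the name `normalize` is hypothetical. Real projects typically use the larger lists shipped with an NLP library.

```python
import re

# Small illustrative samples, not complete lists.
CONTRACTIONS = {"don't": "do not", "it's": "it is", "can't": "cannot"}
STOP_WORDS = {"the", "is", "in", "and", "a", "of", "it"}

def normalize(text, remove_stop_words=True):
    """Case-fold, expand contractions, strip punctuation, drop stop words."""
    text = text.lower()                        # case folding
    for short, full in CONTRACTIONS.items():   # contraction expansion
        text = text.replace(short, full)
    text = re.sub(r"[^\w\s]", " ", text)       # punctuation becomes a space
    tokens = text.split()
    if remove_stop_words:
        tokens = [t for t in tokens if t not in STOP_WORDS]
    return tokens

print(normalize("The TEXT is in the box, and I don't mind!"))
# ['text', 'box', 'i', 'do', 'not', 'mind']
```

Stop word removal is exposed as a flag because, as noted above, whether a given step helps depends on the task.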

Vectorization (TF-IDF, Word Embeddings)

Many machine learning algorithms and analytical techniques require numerical input. Because raw text is a sequence of symbols rather than numbers, it needs to be converted into a numerical representation. This process is known as vectorization, where text is transformed into numerical vectors. Two prominent methods for text vectorization are Term Frequency-Inverse Document Frequency (TF-IDF) and word embeddings.

TF-IDF is a statistical measure of how important a word is to a document within a collection, or corpus. The importance increases proportionally to the number of times a word appears in the document (Term Frequency, TF) but is offset by how many documents in the corpus contain the word (Inverse Document Frequency, IDF). The IDF part diminishes the weight of terms that occur in many documents and increases the weight of terms that occur in few. This approach helps to highlight words that are characteristic of a particular document.
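One common textbook formulation multiplies the relative frequency of a term in a document by the logarithm of (number of documents / number of documents containing the term). The sketch below implements that unsmoothed variant from scratch; the function name `tf_idf` and the three toy documents are assumptions for illustration. Library implementations often use smoothed or otherwise adjusted formulas, so their numbers will generally differ.

```python
import math
from collections import Counter

def tf_idf(docs):
    """docs: list of token lists. Returns one {term: weight} dict per document."""
    n_docs = len(docs)
    # Document frequency: in how many documents does each term appear?
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            # tf = share of the document's tokens; idf = log(N / df)
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights

docs = [
    ["the", "cat", "sat"],
    ["the", "dog", "barked"],
    ["the", "cat", "purred"],
]
for term, weight in tf_idf(docs)[0].items():
    print(f"{term}: {weight:.3f}")
```

In the first document, "the" receives a weight of zero because it appears in every document, "cat" gets a small weight because it appears in two of three, and "sat" gets the largest weight because it is unique to that document.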

Word Embeddings are a more modern and often more powerful approach to text vectorization. Instead of representing words as sparse vectors based on counts, word embeddings represent words as dense, low-dimensional vectors in a continuous vector space. These embeddings are learned from large amounts of text data, and they capture semantic relationships between words. Words with similar meanings tend to have similar vector representations. Popular word embedding techniques include Word2Vec, GloVe, and FastText. More advanced contextual embeddings, like those produced by models such as ELMo, BERT, and GPT, generate different vectors for a word depending on its context, capturing even richer semantic nuances.
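"Similar vector representations" is usually measured with cosine similarity. The sketch below uses three made-up 3-dimensional vectors purely for illustration; they are not taken from Word2Vec, GloVe, or any trained model, and real embeddings typically have hundreds of dimensions.

```python
import math

# Hypothetical toy vectors, invented for this example.
EMBEDDINGS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}

def cosine(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

print(round(cosine(EMBEDDINGS["king"], EMBEDDINGS["queen"]), 2))  # 0.99
print(round(cosine(EMBEDDINGS["king"], EMBEDDINGS["apple"]), 2))  # 0.3
```

With trained embeddings, the same comparison is what lets a system treat related words as close neighbors even when they share no characters.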

Vectorization is a critical bridge between raw text and the quantitative methods used in data analysis and machine learning, enabling powerful computational approaches to understanding language.

Formal Education Pathways

For those seeking a structured approach to mastering text processing, formal education offers comprehensive learning paths. Universities and academic institutions provide programs that lay a strong theoretical and practical foundation in the concepts and technologies underpinning this field.

Relevant Undergraduate Majors (Computer Science, Linguistics)

Several undergraduate majors can provide a strong foundation for a career in text processing. The most direct routes often come from Computer Science and Linguistics, or a combination of both.

A Computer Science degree equips students with essential programming skills, data structures and algorithms knowledge, and an understanding of software development principles. Courses in artificial intelligence, machine learning, database management, and algorithm design are particularly relevant. Many computer science programs also offer specializations or elective tracks in areas like data science or AI, which often include specific courses on natural language processing or text mining.

A Linguistics degree, on the other hand, provides a deep understanding of the structure and meaning of human language. Students learn about phonetics, phonology, morphology, syntax, semantics, and pragmatics. This knowledge is invaluable for understanding the complexities of language that text processing systems aim to handle. Computational linguistics, a subfield that bridges linguistics and computer science, is especially pertinent, focusing on the computational modeling of linguistic phenomena.

Increasingly, interdisciplinary programs that combine elements of computer science and linguistics are emerging. These programs are specifically designed to train students for careers in language technology. Other related majors that can offer a good pathway include mathematics (for the theoretical underpinnings of machine learning algorithms), statistics (for data analysis and model evaluation), and cognitive science (for understanding human language processing).

Graduate Programs Focusing on NLP or Computational Linguistics

For individuals aiming for more specialized roles or research positions in text processing, pursuing a graduate degree (Master's or Ph.D.) is often a beneficial step. Many universities offer graduate programs specifically focused on Natural Language Processing (NLP) or Computational Linguistics. These programs delve much deeper into the advanced theories, algorithms, and applications within the field.

Master's programs in NLP or computational linguistics typically build upon undergraduate foundations in computer science and/or linguistics. They offer advanced coursework in areas such as machine learning for NLP, statistical NLP, deep learning for text, information retrieval, machine translation, sentiment analysis, and dialogue systems. These programs often include a significant research component, such as a thesis or capstone project, allowing students to apply their knowledge to solve real-world problems or explore novel research questions.

Ph.D. programs are research-intensive and are suited for those who wish to contribute to the cutting edge of the field, often leading to careers in academia or industrial research labs. Doctoral candidates conduct original research, publish scholarly papers, and develop a deep expertise in a specific area of NLP or computational linguistics. These programs require a strong commitment and a passion for discovery and innovation in language technology.

When considering graduate programs, it's advisable to look at the research interests of the faculty, the resources available (such as computing facilities and datasets), and the career outcomes of past graduates. Many strong programs are housed within Computer Science departments, while others might be in Linguistics departments or interdisciplinary institutes focused on AI or data science.

Research Opportunities in Academia

Academia is a vibrant hub for research in text processing and Natural Language Processing (NLP). Universities and research institutions around the world are actively pushing the boundaries of what's possible in understanding and generating human language. For students and aspiring researchers, these environments offer numerous opportunities to get involved in cutting-edge projects.

Research opportunities in academia can take many forms. Undergraduate students might participate in research projects under the guidance of faculty members, often as part of honors programs or summer research initiatives. This can provide valuable early exposure to the research process. Master's students typically engage in more substantial research, often culminating in a thesis that demonstrates their ability to conduct independent research. Ph.D. students are at the forefront of academic research, dedicating several years to investigating a specific problem, developing novel solutions, and contributing new knowledge to the field.

Academic research in text processing covers a vast spectrum of topics. These include fundamental challenges like improving the accuracy and efficiency of parsing algorithms, developing more robust machine translation systems, creating models that can understand nuanced sentiment and emotion, and exploring the ethical implications of language technologies. Researchers also work on applying text processing techniques to diverse domains, such as analyzing historical texts, understanding social media trends, improving healthcare through clinical text analysis, and developing educational tools. Collaboration is common, with researchers often working in teams and partnering with industry or other academic disciplines.

Integration with Data Science Curricula

Text processing is increasingly becoming an integral part of Data Science curricula at both undergraduate and graduate levels. This integration reflects the growing importance of unstructured text data in the broader field of data analytics and machine learning. As organizations collect vast amounts of textual information from sources like customer reviews, social media, emails, and internal documents, the ability to extract insights from this data is a critical skill for data scientists.

Data science programs often introduce text processing as a specialized area within machine learning or data mining courses. Students learn the fundamental techniques for preparing text data, such as tokenization, stemming, and stop word removal. They are then introduced to methods for converting text into numerical representations suitable for machine learning models, including TF-IDF and word embeddings. Core NLP tasks relevant to data science, such as text classification (e.g., spam detection, sentiment analysis), topic modeling (e.g., discovering themes in customer feedback), and information extraction (e.g., identifying key entities in reports), are commonly covered.

The emphasis in data science curricula is often on the practical application of these techniques to solve real-world problems. Students work with popular programming languages like Python and R, utilizing libraries such as NLTK, spaCy, scikit-learn, and Gensim for text processing tasks. Project-based learning is common, where students might analyze product reviews to understand customer sentiment, categorize news articles by topic, or build a system to recommend articles based on text similarity. This hands-on experience prepares data scientists to effectively handle and derive value from the abundance of textual data they are likely to encounter in their careers.

Online and Self-Directed Learning

Beyond formal education, a wealth of resources is available for those who prefer to learn text processing at their own pace or supplement their existing knowledge. Online courses, tutorials, and open-source projects offer flexible and accessible pathways to acquiring these valuable skills.

Skill-Building Priorities for Self-Study

Embarking on a self-study journey in text processing requires a clear set of priorities to build a strong foundation and progressively tackle more complex topics. A good starting point is to master a programming language commonly used in the field, with Python being the most popular choice due to its extensive libraries and supportive community. Understanding basic programming concepts, data structures (like strings, lists, and dictionaries), and control flow is essential.

Once comfortable with a programming language, focus on fundamental text processing techniques. This includes learning about string manipulation, regular expressions for pattern matching, tokenization, stemming, and lemmatization. Familiarize yourself with core libraries like NLTK, spaCy, or scikit-learn in Python, which provide tools for these tasks. Next, delve into methods for representing text numerically, starting with simpler approaches like bag-of-words and TF-IDF, and then moving towards more advanced techniques like word embeddings (Word2Vec, GloVe, FastText).

As your foundational skills solidify, begin exploring core Natural Language Processing (NLP) tasks such as text classification (e.g., sentiment analysis, spam detection), topic modeling, and named entity recognition. Understanding the basics of machine learning will be crucial here, so concurrently learning about supervised and unsupervised learning algorithms, model evaluation, and feature engineering is highly recommended. Finally, stay updated with recent advancements, particularly in deep learning for NLP (e.g., recurrent neural networks, transformers), as this is a rapidly evolving area. Prioritizing hands-on practice through projects and coding exercises throughout this journey is key to reinforcing concepts and building practical expertise.

Project-Based Learning Strategies

Project-based learning is an exceptionally effective strategy for mastering text processing, as it allows you to apply theoretical knowledge to tangible problems and build a portfolio of work. Start with small, well-defined projects and gradually increase the complexity as your skills grow. For example, a beginner project could be to build a simple word frequency counter for a text file or a program that identifies all email addresses in a document using regular expressions.
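The word frequency counter mentioned above fits in a few lines using only the standard library. This is one possible starting point rather than a reference solution; the function name `word_frequencies` and the sample sentence are assumptions, and for a real file you could pass in the result of `pathlib.Path(...).read_text()`.

```python
import re
from collections import Counter

def word_frequencies(text, top_n=2):
    """Return the top_n most frequent lowercase words with their counts."""
    # Treat runs of ASCII letters and apostrophes as words.
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(words).most_common(top_n)

sample = "The cat saw the dog. The dog ran."
print(word_frequencies(sample))  # [('the', 3), ('dog', 2)]
```

Natural extensions include filtering stop words, handling non-English alphabets, and comparing frequencies across several files.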

As you advance, consider projects like building a spam filter using text classification techniques. This would involve collecting a dataset of spam and non-spam emails, preprocessing the text, vectorizing it, training a machine learning model (e.g., Naive Bayes or Logistic Regression), and evaluating its performance. Another engaging project could be sentiment analysis of product reviews or tweets, where you classify text as positive, negative, or neutral. This will introduce you to challenges like handling sarcasm, emojis, and informal language.
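To give a feel for the spam filter project, here is a from-scratch sketch of a multinomial Naive Bayes classifier with add-one smoothing. The class name, the four training messages, and the choice to ignore unseen words are all assumptions made to keep the example short; a real project would use a proper dataset, a held-out test set, and most likely a library such as scikit-learn.

```python
import math
from collections import Counter, defaultdict

class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, docs, labels):
        self.class_counts = Counter(labels)       # documents per class
        self.word_counts = defaultdict(Counter)   # word counts per class
        for tokens, label in zip(docs, labels):
            self.word_counts[label].update(tokens)
        self.vocab = {w for c in self.word_counts.values() for w in c}
        return self

    def predict(self, tokens):
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            score = math.log(n_docs / total_docs)  # log prior
            total_words = sum(self.word_counts[label].values())
            for t in tokens:
                if t not in self.vocab:
                    continue  # skip words never seen in training
                count = self.word_counts[label][t]
                # Smoothed log likelihood of the word given the class.
                score += math.log((count + 1) / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label

# Made-up training data for illustration only.
train = [
    ("win free money now".split(), "spam"),
    ("free prize claim now".split(), "spam"),
    ("meeting agenda for monday".split(), "ham"),
    ("lunch on monday".split(), "ham"),
]
docs, labels = zip(*train)
model = NaiveBayes().fit(docs, labels)

print(model.predict("claim your free money".split()))  # spam
print(model.predict("monday meeting".split()))         # ham
```

Working in log space avoids numerical underflow when many small probabilities are multiplied, and the smoothing term keeps a single unseen word from driving a class probability to zero.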

For more advanced learners, projects could involve developing a basic chatbot, a system for summarizing articles, or exploring topic modeling on a collection of news articles to identify recurring themes. Don't be afraid to tackle problems that genuinely interest you, as this will keep you motivated. Utilize publicly available datasets from platforms like Kaggle, UCI Machine Learning Repository, or specific NLP dataset collections. Document your projects well, perhaps on GitHub, explaining your approach, the challenges you faced, and the results you achieved. This not only solidifies your learning but also creates valuable assets for showcasing your skills to potential employers or collaborators.

Open-Source Tools and Datasets

The field of text processing thrives on a rich ecosystem of open-source tools and datasets, which are invaluable resources for both learning and practical application. These resources lower the barrier to entry and foster a collaborative environment for innovation.

For programming, Python stands out as the dominant language, largely due to its extensive collection of open-source libraries tailored for text processing and NLP. Key libraries include:

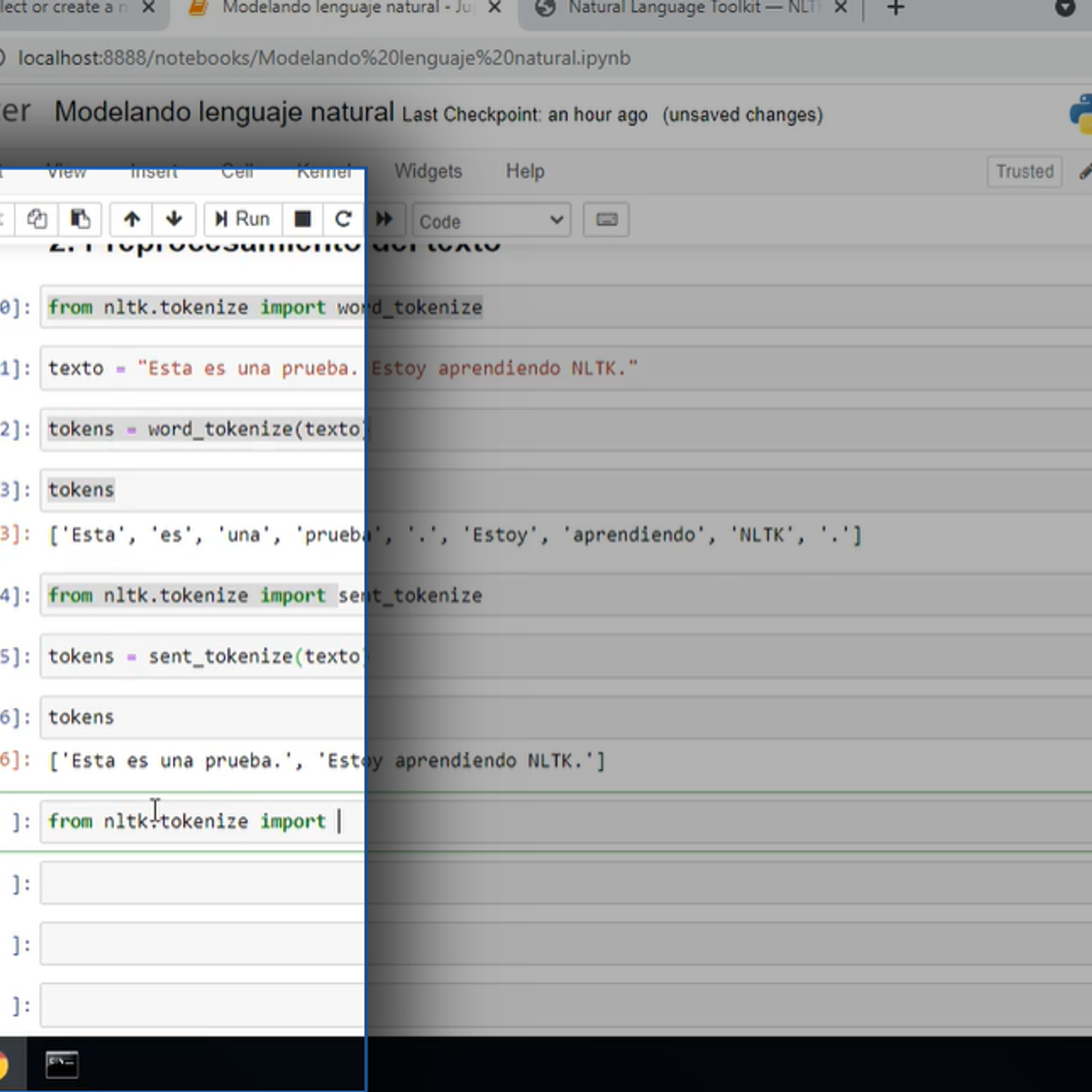

- NLTK (Natural Language Toolkit): A comprehensive library for a wide range of symbolic and statistical NLP tasks, excellent for learning fundamental concepts.

- spaCy: Known for its speed and efficiency, spaCy is designed for production-level NLP and offers pre-trained models for various languages and tasks.

- scikit-learn: A versatile machine learning library that includes tools for text preprocessing, feature extraction (like TF-IDF), and various classification and clustering algorithms applicable to text data.

- Gensim: Specializes in topic modeling and document similarity analysis, implementing algorithms like Latent Semantic Analysis (LSA) and Latent Dirichlet Allocation (LDA), as well as Word2Vec.

- Transformers by Hugging Face: Provides access to thousands of pre-trained transformer models (like BERT, GPT-2, RoBERTa) for state-of-the-art NLP tasks, along with tools for fine-tuning them.

Beyond Python, other languages like R also have packages for text mining (e.g., `tm`, `tidytext`).

Access to open datasets is equally crucial. Many universities, research institutions, and companies release datasets for public use. Some popular sources include:

- UCI Machine Learning Repository: A vast collection of datasets for various machine learning tasks, including many text-based datasets.

- Kaggle Datasets: Hosts a wide array of datasets, often associated with data science competitions, covering diverse topics and text types.

- Papers With Code: A great resource for finding datasets associated with research papers, particularly in NLP and machine learning.

- Specific NLP datasets: Collections like the Penn Treebank (for syntactic parsing), SQuAD (for question answering), IMDB movie reviews (for sentiment analysis), and various corpora for different languages.

Leveraging these open-source tools and datasets allows learners and practitioners to experiment, build sophisticated applications, and contribute to the ongoing advancements in text processing without the need for substantial upfront investment in proprietary software or data collection efforts.

Exploring courses that introduce tools for handling text data, even in specialized contexts, can broaden your understanding of available open-source capabilities.

Balancing Theoretical and Applied Knowledge

A successful journey in learning text processing, especially through self-direction, hinges on finding the right balance between theoretical understanding and practical application. Both aspects are crucial and reinforce each other. Simply learning to use libraries and tools without understanding the underlying principles can limit your ability to troubleshoot problems, adapt to new challenges, or develop innovative solutions. Conversely, focusing solely on theory without hands-on experience can make it difficult to translate knowledge into real-world impact.

Start by grasping the foundational theories. For example, when learning about TF-IDF, don't just learn how to call the function in a library; understand the mathematical intuition behind term frequency and inverse document frequency and why this weighting scheme is effective. Similarly, when studying word embeddings, try to understand the basic concepts of distributional semantics and how models like Word2Vec learn vector representations from context. Reading introductory textbooks, research papers, and well-written blog posts can help build this theoretical foundation.
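To make the intuition behind TF-IDF concrete, here is a minimal sketch in plain Python. It assumes the simplest textbook weighting, term frequency times log(N / document frequency), and whitespace tokenization; libraries such as scikit-learn add smoothing and normalization on top, so their numbers will differ. The three example sentences are made up for illustration.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Return one {term: weight} dict per document.

    Assumption: unsmoothed weighting tf * log(N / df), where tf is the
    term's share of the document and df is the number of documents
    that contain the term.
    """
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: count each term once per document it appears in.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens)
        weights.append({
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights


# Hypothetical toy corpus.
docs = [
    "the cat sat on the mat",
    "the dog sat on the log",
    "the cat chased the dog",
]
scores = tf_idf(docs)
for term, weight in sorted(scores[0].items(), key=lambda kv: -kv[1]):
    print(f"{term:>4}  {weight:.3f}")
```

In the first document, "the" is the most frequent word but appears in every document, so its inverse document frequency is log(3/3) = 0 and its weight vanishes. "mat" appears only once but in no other document, so it receives the highest weight. That is the effect the weighting scheme is designed to produce.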

Simultaneously, engage in applied learning. Implement the concepts you're studying by writing code, working through tutorials, and tackling small projects. For instance, after learning about regular expressions, practice writing patterns to extract different types of information from sample texts. When studying classification algorithms, apply them to a sentiment analysis task using a public dataset. This hands-on work will solidify your understanding of the theory and expose you to the practical challenges and nuances of working with real text data. As you progress, aim to contribute to open-source projects or develop your own more complex applications. This iterative process of learning theory, applying it in practice, reflecting on the results, and then revisiting the theory will lead to a much deeper and more robust understanding of text processing.
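As one example of the regular-expression practice described above, the following sketch pulls email addresses and ISO-style dates out of a sample sentence using Python's standard `re` module. The patterns are deliberately simplified assumptions (real email and date formats are far more varied), and the sample text is invented.

```python
import re

# Simplified patterns for illustration; they do not cover every valid form.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
DATE_RE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")  # e.g. 2024-03-15


def extract(text):
    """Return all email addresses and YYYY-MM-DD dates found in text."""
    return {
        "emails": EMAIL_RE.findall(text),
        "dates": DATE_RE.findall(text),
    }


sample = "Contact ana@example.com or bob.lee@example.org before 2024-03-15."
found = extract(sample)
print(found["emails"])  # ['ana@example.com', 'bob.lee@example.org']
print(found["dates"])   # ['2024-03-15']
```

A useful exercise is to extend the patterns until they break: try dates written as "15 March 2024" or phone numbers in several national formats, and note where a regular expression stops being the right tool.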

Career Opportunities in Text Processing

The demand for professionals skilled in text processing and Natural Language Processing (NLP) is robust and growing across various industries. As organizations increasingly recognize the value locked within their textual data, they are seeking individuals who can help them extract, analyze, and leverage this information.

Aspiring professionals should focus on building a strong portfolio of projects and staying updated with the latest advancements in the field, particularly in areas like deep learning and large language models. Networking with others in the field, attending conferences, and contributing to open-source projects can also open doors to exciting career opportunities. OpenCourser offers a Career Development section that can further guide you in navigating these paths.

Roles: NLP Engineer, Data Analyst, Research Scientist

Several distinct roles cater to individuals with text processing skills, each with a different focus and set of responsibilities. Some of the most common include NLP Engineer, Data Analyst (with a text specialization), and Research Scientist.

An NLP Engineer is primarily focused on designing, developing, and deploying software systems that can understand, interpret, and generate human language. They build applications such as chatbots, machine translation systems, sentiment analyzers, and information extraction tools. This role requires strong programming skills (often in Python), a deep understanding of NLP algorithms and machine learning models, and experience with NLP libraries and frameworks. NLP Engineers often work closely with software development teams to integrate NLP capabilities into larger products or platforms.

A Data Analyst with a specialization in text processing uses their skills to extract insights and trends from textual data. They might analyze customer feedback to identify areas for product improvement, track brand sentiment on social media, or examine survey responses to understand public opinion. While they also need programming and analytical skills, their focus is more on interpreting data and communicating findings to business stakeholders. They might use tools like SQL for data retrieval, Python or R for analysis, and visualization tools to present their results.

A Research Scientist in NLP or computational linguistics works on advancing the fundamental understanding and capabilities of language technologies. They often work in academic institutions or corporate research labs, conducting experiments, developing new algorithms and models, and publishing their findings in scholarly venues. This role typically requires an advanced degree (Ph.D. or Master's) and a strong background in research methodology, machine learning theory, and a specific area of NLP. They are at the forefront of innovation, pushing the boundaries of what's possible in the field.

Other related roles include Machine Learning Engineer (who may specialize in NLP models), Data Scientist (for whom text data is one of many data types they work with), and Computational Linguist (who often focuses on the intersection of linguistic theory and computational methods).

Industry Demand Trends (Tech, Healthcare, Finance)

The demand for text processing skills is surging across a multitude of industries, driven by the explosion of digital text data and the increasing recognition of its value. The tech industry is a major employer, with companies developing search engines, social media platforms, virtual assistants, and AI-powered applications all relying heavily on text processing and NLP expertise.

Healthcare is another sector experiencing significant growth in the application of text processing. Clinical notes, medical research papers, electronic medical and health records (EMRs/EHRs), and biomedical literature represent vast sources of unstructured text. NLP is being used to extract critical information from these sources to improve patient care, accelerate medical research, enhance pharmacovigilance, and streamline administrative processes. For example, analyzing patient records can help identify individuals at risk for certain diseases, while processing research papers can speed up drug discovery.

The finance industry also shows strong demand. Financial institutions use text processing for tasks like sentiment analysis of news articles to predict market movements, fraud detection by analyzing textual communication, automated processing of legal documents and contracts, and enhancing customer service through chatbots and automated email responses. Regulatory compliance often involves sifting through large volumes of textual data, a task where text processing can offer significant efficiencies.

Other industries actively hiring text processing specialists include retail and e-commerce (for analyzing customer reviews and personalizing recommendations), legal services (for e-discovery and contract analysis), marketing and advertising (for understanding consumer sentiment and campaign effectiveness), and government and intelligence (for analyzing reports and open-source intelligence). As more businesses embrace data-driven decision-making, the need for professionals who can unlock insights from text will only continue to grow.

Entry-Level vs. Senior Positions

Career progression in text processing, like in many tech fields, typically moves from entry-level positions to more senior and specialized roles. The expectations, responsibilities, and required skill sets evolve with this progression.

Entry-level positions often focus on more defined tasks and may involve supporting senior team members. An entry-level NLP Engineer or Data Analyst working with text might be responsible for data collection and cleaning, implementing existing algorithms under supervision, running experiments, and generating reports. These roles typically require a bachelor's degree in a relevant field (like Computer Science, Linguistics, or Data Science) and foundational knowledge of programming, basic text processing techniques, and some machine learning concepts. A strong portfolio of projects, internships, or contributions to open-source initiatives can be highly beneficial for securing an entry-level role. The average salary for an entry-level NLP engineer can range significantly based on location and other factors, but reports suggest figures around $116,000-$117,000 annually in the US.

Senior positions, such as Senior NLP Engineer, Lead Data Scientist (NLP), or Research Scientist, come with greater responsibility, autonomy, and complexity. Professionals in these roles are expected to lead projects, design and architect complex NLP systems, mentor junior team members, and make strategic decisions about technology choices and research directions. They often have a Master's or Ph.D. degree, several years of hands-on experience, a deep understanding of advanced NLP and machine learning techniques (including deep learning), and a track record of successful projects or publications. Senior roles also require strong problem-solving, communication, and leadership skills. Salaries for senior positions are considerably higher, reflecting the advanced expertise and impact on the organization. For instance, NLP engineers with 15+ years of experience can earn over $150,000 annually in the US.

Career growth can also lead to management roles, such as NLP Team Lead or Director of AI, or to highly specialized technical roles focusing on a niche area of research or development.

Freelance and Remote Work Possibilities

The nature of text processing work, which is often computer-based and project-oriented, lends itself well to freelance and remote work arrangements. This offers flexibility for both professionals and companies seeking specialized talent.

Many companies, from startups to large enterprises, engage freelance NLP engineers or text processing consultants for specific projects. This could involve developing a custom sentiment analysis tool, building a specialized chatbot, or performing a one-time analysis of a large text dataset. Freelancers in this field typically need a strong portfolio, excellent self-management skills, and the ability to clearly communicate with clients about project requirements and progress. Online freelancing platforms often list projects related to NLP, machine learning, and data analysis, providing a marketplace for these skills.

Remote work opportunities have also become increasingly common, even for full-time positions. Advances in communication and collaboration technologies have made it easier for teams to work effectively from different locations. For text processing professionals, this means a wider range of job opportunities, not limited by geographical constraints. Companies benefit by being able to access a larger talent pool. Whether freelancing or in a full-time remote role, strong communication skills, discipline, and the ability to work independently are crucial for success.

For those looking to enhance their skills for remote or freelance work, consider courses that offer flexible learning and project-based outcomes.

Ethical Considerations in Text Processing

As text processing technologies become more powerful and pervasive, it is crucial to address the ethical considerations that arise from their development and deployment. These technologies can have significant societal impacts, and a responsible approach is necessary to mitigate potential harms.

Bias in Training Data and Algorithms

One of the most significant ethical challenges in text processing is the issue of bias in training data and algorithms. Machine learning models, including those used for NLP, learn patterns and relationships from the data they are trained on. If this training data reflects existing societal biases related to gender, race, ethnicity, age, or other characteristics, the models are likely to learn and even amplify these biases.

For example, word embeddings trained on large, uncurated text corpora from the internet have been shown to associate certain professions more strongly with one gender than another (e.g., "doctor" with "man" and "nurse" with "woman"). Similarly, sentiment analysis models might disproportionately assign negative sentiment to text associated with certain demographic groups if the training data contains such biases. These biases can lead to unfair or discriminatory outcomes when the models are deployed in real-world applications, such as resume screening, loan applications, or content moderation.

Addressing bias requires a multi-faceted approach. This includes careful curation of training datasets to ensure diversity and representativeness, developing techniques to detect and mitigate bias in models, and promoting fairness-aware machine learning. Researchers and practitioners are actively working on methods for debiasing word embeddings, creating fairer algorithms, and developing metrics to assess model fairness. Transparency in how models are trained and what data they use is also crucial for identifying and addressing potential biases.

Privacy Concerns with Text Data

Text data often contains sensitive and personal information, raising significant privacy concerns. Emails, private messages, medical records, financial documents, and even public social media posts can reveal details about individuals' lives, beliefs, and relationships. When these types of data are used to train or deploy text processing systems, there is a risk that this private information could be inadvertently exposed or misused.

For instance, language models trained on large email datasets might unintentionally memorize and reproduce snippets of personal conversations. Systems designed to analyze customer feedback could potentially link opinions to specific individuals without their explicit consent. The aggregation and analysis of text data, even if anonymized, can sometimes lead to the re-identification of individuals, especially when combined with other datasets.

Protecting privacy in text processing involves several strategies. Data minimization, which means collecting and using only the data that is strictly necessary for a given task, is a fundamental principle. Anonymization and pseudonymization techniques can help to de-identify data, although they are not always foolproof. Differential privacy offers a more formal mathematical framework for limiting how much the output of an analysis can reveal about any single individual in the dataset. Secure multi-party computation and federated learning are emerging techniques that allow models to be trained on decentralized data without requiring the raw data to be shared. Obtaining informed consent from individuals about how their text data will be used is also an important ethical practice and, in many contexts, a legal requirement.
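To illustrate the differential privacy idea mentioned above, here is a minimal sketch of the Laplace mechanism applied to a counting query over text records. It assumes a count has sensitivity 1 (adding or removing one record changes it by at most 1), so noise is drawn with scale 1/epsilon. The function names and the toy records are hypothetical, and a production system would also need to track the privacy budget across queries.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise.

    Uses the fact that the difference of two independent exponential
    variables with mean `scale` follows a Laplace distribution.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng=None):
    """Return a noisy count of records matching predicate.

    Assumption: sensitivity is 1, so the Laplace scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical toy data: short free-text notes.
notes = [
    "patient reports mild headache",
    "follow-up for diabetes management",
    "diabetes screening negative",
    "routine check, no complaints",
]
noisy = private_count(notes, lambda n: "diabetes" in n, epsilon=0.5)
print(f"Noisy count of notes mentioning diabetes: {noisy:.2f}")
```

The released value differs from run to run. Averaged over many releases it centers on the true count, but any single release hides whether one particular note was present.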

Regulatory Compliance (GDPR, CCPA)

In response to growing concerns about data privacy and the ethical implications of AI, governments and regulatory bodies around the world are implementing laws and guidelines that impact text processing. Two prominent examples are the General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA), a state law in the United States. These regulations establish rules for how organizations can collect, process, and store personal data, including textual data.

GDPR, for instance, grants individuals significant rights over their personal data, including the right to access, rectify, and erase their data, as well as the right to data portability and the right to object to certain types of processing. It requires organizations to have a lawful basis for processing personal data (consent is one such basis, alongside others such as contractual necessity and legitimate interests) and to implement appropriate technical and organizational measures to protect data. CCPA provides similar rights to California consumers, including the right to know what personal information is being collected and the right to opt out of the sale of their personal information.

For professionals and organizations involved in text processing, compliance with these and other relevant regulations is crucial. This involves understanding the legal requirements related to data handling, implementing privacy-by-design principles in system development, conducting data protection impact assessments, and ensuring transparency with users about data practices. Failure to comply can result in significant financial penalties and reputational damage. As the regulatory landscape for AI and data privacy continues to evolve, staying informed about new laws and guidelines is an ongoing responsibility.

Responsible AI Practices

Beyond specific issues like bias and privacy, the broader concept of Responsible AI encompasses a commitment to developing and deploying artificial intelligence systems, including text processing technologies, in a way that is ethical, transparent, accountable, and beneficial to society. This involves considering the potential impacts of these technologies on individuals, communities, and the environment throughout their entire lifecycle, from design and development to deployment and decommissioning.

Key principles of Responsible AI often include:

- Fairness and Non-Discrimination: Ensuring that AI systems do not perpetuate or amplify unjust biases and treat all individuals equitably.

- Transparency and Explainability: Making AI systems understandable, so that their decision-making processes can be scrutinized and trusted. This is particularly important for "black box" models where the internal workings are not immediately obvious.

- Accountability: Establishing clear lines of responsibility for the outcomes of AI systems, including mechanisms for redress if things go wrong.

- Privacy and Security: Protecting personal data and ensuring that AI systems are robust against malicious attacks.

- Safety and Reliability: Designing AI systems that are safe, perform reliably as intended, and have mechanisms to prevent unintended harm.

- Human Oversight: Ensuring that humans retain appropriate levels of control and can intervene when necessary.

- Societal and Environmental Well-being: Considering the broader societal consequences and striving to use AI for positive impact, while also being mindful of the environmental footprint of developing and running large-scale AI models.

Adopting Responsible AI practices requires a cultural shift within organizations, involving not just technical teams but also legal, ethical, and business stakeholders. It often involves developing internal ethical guidelines, establishing oversight committees, conducting regular audits, and engaging in ongoing dialogue about the ethical implications of AI technologies. Many organizations and research consortia are actively developing frameworks and tools to support the implementation of Responsible AI.

Industry Applications of Text Processing

Text processing technologies have moved beyond research labs and are now integral to a wide array of applications across numerous industries. These applications leverage the ability of computers to understand and manipulate text to improve efficiency, gain insights, and create new products and services.

Sentiment Analysis in Market Research

Sentiment analysis, also known as opinion mining, is a powerful application of text processing used extensively in market research. It involves automatically determining whether the emotional tone expressed in a piece of text is positive, negative, or neutral. Businesses use sentiment analysis to gauge public opinion about their products, services, brands, or even specific marketing campaigns.

The raw material for sentiment analysis often comes from social media platforms, online reviews, customer surveys, news articles, and discussion forums. By processing this vast amount of textual data, companies can gain real-time insights into how consumers perceive them and their offerings. For example, a sudden spike in negative sentiment on Twitter regarding a new product launch can alert a company to potential issues that need immediate attention. Conversely, identifying key themes in positive reviews can help businesses understand what they are doing well and reinforce those strengths.

Sentiment analysis tools typically use machine learning algorithms trained on labeled datasets of text. These models learn to associate certain words, phrases, and linguistic patterns with different sentiments. More advanced systems can even detect nuanced emotions like sarcasm or identify sentiment towards specific aspects or features mentioned in the text. The insights derived from sentiment analysis help businesses make more informed decisions regarding product development, marketing strategies, customer service improvements, and overall brand management.
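The idea of a model learning to associate words with sentiments from labeled examples can be sketched with a tiny multinomial Naive Bayes classifier in plain Python. This is only one simple technique among many, chosen here for brevity; the class name and the four training sentences are made up for illustration, and a real system would use far more data and a proper tokenizer.

```python
import math
from collections import Counter, defaultdict


class NaiveBayesSentiment:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self):
        self.word_counts = defaultdict(Counter)  # label -> word -> count
        self.label_counts = Counter()            # label -> number of examples
        self.vocab = set()

    def fit(self, texts, labels):
        for text, label in zip(texts, labels):
            tokens = text.lower().split()
            self.word_counts[label].update(tokens)
            self.label_counts[label] += 1
            self.vocab.update(tokens)
        return self

    def predict(self, text):
        tokens = text.lower().split()
        n_examples = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, count in self.label_counts.items():
            total_words = sum(self.word_counts[label].values())
            # Start from the log prior, then add one log likelihood per token.
            score = math.log(count / n_examples)
            for token in tokens:
                numerator = self.word_counts[label][token] + 1
                denominator = total_words + len(self.vocab)
                score += math.log(numerator / denominator)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical labeled reviews.
train_texts = [
    "great product love it",
    "terrible quality very disappointed",
    "love the fast delivery",
    "disappointed and terrible support",
]
train_labels = ["pos", "neg", "pos", "neg"]

model = NaiveBayesSentiment().fit(train_texts, train_labels)
print(model.predict("love this great phone"))  # pos
print(model.predict("terrible support"))       # neg
```

Words the model has never seen, such as "phone", contribute equally to every label because of the smoothing, so the decision rests on the words it did learn from the labeled data.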

Chatbots and Customer Service Automation

Chatbots and automated customer service systems are among the most visible applications of text processing and Natural Language Processing (NLP). These systems are designed to understand customer queries written in natural language and provide relevant responses, automate routine tasks, and offer 24/7 support.

The core of a chatbot's ability to interact effectively lies in its text processing capabilities. When a user types a question or statement, the chatbot must first process this input. This involves tokenization (breaking the input into words or subword units), intent recognition (understanding what the user wants to achieve), and entity extraction (identifying key pieces of information, like product names or order numbers). Based on this understanding, the chatbot then retrieves an appropriate response from a knowledge base or generates a new response using natural language generation (NLG) techniques.
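The three processing steps described above (tokenization, intent recognition, entity extraction) can be sketched with a small rule-based pipeline. Everything here is an assumption made for illustration: the intent names, the keyword sets, the order-number format (two capital letters followed by at least four digits), and the canned replies. Production chatbots typically replace the keyword matching with trained classifiers.

```python
import re

# Hypothetical intents and the keywords that signal them.
INTENT_KEYWORDS = {
    "track_order": {"track", "order", "shipping", "delivery"},
    "check_balance": {"balance", "account"},
}
# Assumed order-number format, e.g. AB12345.
ORDER_ID_RE = re.compile(r"\b[A-Z]{2}\d{4,}\b")


def tokenize(text):
    """Lowercase the input and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def recognize_intent(tokens):
    """Pick the intent whose keyword set overlaps most with the tokens."""
    token_set = set(tokens)
    scores = {
        intent: len(keywords & token_set)
        for intent, keywords in INTENT_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"


def extract_entities(text):
    """Find order numbers in the original (case-preserved) text."""
    return {"order_ids": ORDER_ID_RE.findall(text)}


def respond(text):
    intent = recognize_intent(tokenize(text))
    entities = extract_entities(text)
    if intent == "track_order" and entities["order_ids"]:
        return f"Looking up order {entities['order_ids'][0]}."
    if intent == "track_order":
        return "Which order number should I look up?"
    if intent == "check_balance":
        return "I can help with your balance after you sign in."
    return "Sorry, I did not understand that."


print(respond("Where is my order AB12345?"))  # Looking up order AB12345.
print(respond("What is my account balance?"))
print(respond("Hello there"))
```

Keeping the three steps as separate functions mirrors how larger systems are organized, where each stage can later be swapped for a learned model without changing the others.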

Chatbots are used across various industries, including e-commerce (answering product questions, tracking orders), banking (checking account balances, making transfers), healthcare (providing information, scheduling appointments), and IT support (troubleshooting common issues). By automating responses to frequently asked questions and handling simple tasks, chatbots can significantly reduce the workload on human customer service agents, allowing them to focus on more complex or sensitive issues. This not only improves efficiency and reduces costs but can also enhance customer satisfaction by providing instant support. As NLP technology continues to advance, chatbots are becoming more sophisticated, capable of handling more complex conversations and providing more personalized interactions.

Legal Document Analysis

The legal industry deals with vast quantities of textual data in the form of contracts, case files, statutes, regulations, and legal correspondence. Text processing technologies are increasingly being used to help legal professionals manage, analyze, and understand this information more efficiently and effectively.

One key application is e-discovery, the process of identifying and producing electronically stored information (ESI) relevant to a legal case. Text processing tools can sift through massive volumes of documents to find those containing specific keywords, concepts, or patterns, significantly speeding up the review process and reducing costs. Another important use case is contract analysis. NLP models can be trained to automatically extract key clauses, identify potential risks or obligations, and compare different versions of contracts. This can help lawyers review agreements more quickly and accurately.
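Comparing contract versions, the simplest of the tasks mentioned above, can be sketched with Python's standard `difflib` module. This is a line-level comparison under the assumption that each clause sits on its own line; the two contract snippets are invented, and real contract-analysis tools work on clause semantics rather than raw lines.

```python
import difflib


def changed_clauses(old_text, new_text):
    """Return (change_type, old_lines, new_lines) for every differing region.

    change_type is one of difflib's opcode tags: 'replace', 'delete',
    or 'insert'. Unchanged regions are skipped.
    """
    old_lines = old_text.splitlines()
    new_lines = new_text.splitlines()
    matcher = difflib.SequenceMatcher(a=old_lines, b=new_lines, autojunk=False)
    changes = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            changes.append((tag, old_lines[i1:i2], new_lines[j1:j2]))
    return changes


# Hypothetical contract versions, one clause per line.
version_1 = (
    "1. Term: 12 months\n"
    "2. Fee: $100 per month\n"
    "3. Governing law: New York"
)
version_2 = (
    "1. Term: 12 months\n"
    "2. Fee: $120 per month\n"
    "3. Governing law: New York"
)

for tag, old, new in changed_clauses(version_1, version_2):
    print(tag, old, "->", new)
# replace ['2. Fee: $100 per month'] -> ['2. Fee: $120 per month']
```

A reviewer can then focus on the clauses that actually changed instead of rereading the whole agreement.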

Text processing is also used for legal research, helping lawyers and paralegals find relevant case law and statutes more effectively than traditional keyword searches. Furthermore, predictive analytics based on textual data from past cases can offer insights into potential case outcomes or litigation trends. While these tools are not intended to replace human legal expertise, they serve as powerful assistants, augmenting the capabilities of legal professionals and allowing them to focus on higher-value strategic tasks.

Healthcare Data Mining

Text processing plays a crucial role in healthcare data mining, enabling the extraction of valuable insights from the vast amounts of unstructured text generated in the healthcare domain. This includes clinical notes from physicians and nurses, patient discharge summaries, radiology and pathology reports, medical literature, and patient-reported outcomes.

One significant application is in improving clinical decision support. By analyzing clinical notes, NLP systems can help identify patient cohorts for clinical trials, detect adverse drug events, or flag patients at risk for specific conditions. For example, a system might analyze a patient's history and current symptoms described in free-text notes to suggest potential diagnoses or recommend appropriate tests. This can assist clinicians in making more informed and timely decisions.

Text processing is also vital for biomedical research. Researchers use NLP to mine scientific literature to discover new relationships between genes, diseases, and drugs, accelerating the pace of discovery. Pharmacovigilance, the monitoring of drug safety after drugs have been released to the market, benefits from text processing that analyzes sources like patient forums, social media, and adverse event reports to identify potential safety signals. Furthermore, text processing can help in public health surveillance by analyzing news reports or social media to track the spread of infectious diseases. As electronic health records become more prevalent, the ability to effectively mine the rich textual information they contain is becoming increasingly important for advancing both individual patient care and population health.

Emerging Trends in Text Processing

The field of text processing is dynamic and constantly evolving, driven by advancements in artificial intelligence, increasing computational power, and the ever-growing volume of textual data. Several emerging trends are shaping the future of how we interact with and understand language.

Large Language Models (LLMs) and Their Limitations

Large Language Models (LLMs) represent a paradigm shift in text processing and Natural Language Processing. Models like GPT (Generative Pre-trained Transformer), BERT (Bidirectional Encoder Representations from Transformers), LLaMA, and PaLM have demonstrated remarkable capabilities in understanding, generating, and manipulating human language. They are trained on vast amounts of text data, enabling them to perform a wide range of tasks, often with minimal task-specific fine-tuning. These tasks include text generation, summarization, translation, question answering, and even code generation.

The impact of LLMs is already being felt across various industries, powering more sophisticated chatbots, enhancing search engines, and enabling new forms of content creation. However, despite their impressive abilities, LLMs also have significant limitations. One major challenge is the issue of "hallucinations," where models generate text that is plausible-sounding but factually incorrect or nonsensical. They can also perpetuate biases present in their training data, leading to unfair or discriminatory outputs. Furthermore, the reasoning capabilities of LLMs are still under development, and they can struggle with tasks requiring deep common-sense understanding or multi-step logical inference. The sheer size and computational cost of training and running these models also pose challenges related to accessibility and environmental impact. Researchers are actively working to address these limitations, focusing on improving factuality, reducing bias, enhancing reasoning, and developing more efficient model architectures.

Multilingual Processing Challenges

As globalization and digital communication connect people across linguistic boundaries, the demand for effective multilingual text processing is rapidly increasing. While significant progress has been made, particularly for high-resource languages like English, Spanish, and Chinese, many challenges remain in developing systems that can accurately and robustly process a wide range of the world's languages.

One major hurdle is data scarcity. Many NLP models, especially large language models, require vast amounts of text data for training. For the majority of the world's thousands of languages (often referred to as low-resource languages), such large digital corpora simply do not exist. This makes it difficult to train high-performing models specifically for these languages.

Linguistic diversity itself presents challenges. Languages differ significantly in their morphology (word structure), syntax (sentence structure), and writing systems. Techniques that work well for one language family may not be easily transferable to another. For example, highly inflectional languages or languages with complex character systems require specialized tokenization and processing approaches. Furthermore, cultural nuances and context are deeply embedded in language, and accurately capturing these in a multilingual setting is a complex task. Cross-lingual transfer learning, where knowledge gained from high-resource languages is applied to low-resource languages, and the development of universal language representations are active areas of research aimed at addressing these challenges.
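Two of the practical consequences of differing writing systems can be seen with Python's standard library alone. The sketch below shows, first, that the same visible word can have more than one Unicode encoding until it is normalized, and second, that whitespace tokenization, which works tolerably for English, returns a single token for a Chinese sentence written without spaces. The example strings are chosen for illustration.

```python
import unicodedata


def normalize(text):
    """Normalize to NFC so visually identical strings compare equal."""
    return unicodedata.normalize("NFC", text)


# The same word, once with a precomposed character and once with
# 'e' followed by a combining acute accent.
composed = "caf\u00e9"
decomposed = "cafe\u0301"
print(composed == decomposed)             # False
print(normalize(decomposed) == composed)  # True

# Whitespace tokenization depends on the writing system.
english = "I like natural language processing"
chinese = "我喜欢自然语言处理"  # roughly the same sentence, no spaces
print(len(english.split()))  # 5
print(len(chinese.split()))  # 1
```

Languages written without word boundaries need a dedicated segmenter or a subword tokenizer, and normalization is usually one of the first preprocessing steps in any multilingual pipeline.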

Low-Resource Language Support

A significant and growing focus within text processing and NLP is the development of technologies that can effectively support low-resource languages. These are languages for which there are limited digital text and speech data, as well as fewer pre-existing linguistic tools like parsers, taggers, and lexicons. The vast majority of the world's approximately 7,000 languages fall into this category, yet most NLP research and development has historically concentrated on a small number of high-resource languages.

The lack of resources poses substantial challenges for building NLP applications for these languages, hindering access to information and digital services for their speakers. Addressing this gap is crucial for digital inclusivity and preserving linguistic diversity. Researchers are exploring various techniques to overcome data scarcity. Transfer learning, where models trained on high-resource languages are adapted for low-resource languages, is a promising approach. This often involves techniques like cross-lingual word embeddings and multilingual pre-trained models (e.g., mBERT, XLM-R) that learn representations shared across multiple languages.

Other strategies include unsupervised and semi-supervised learning methods that can leverage unlabeled data (which may be more readily available than labeled data), active learning to intelligently select the most informative data points for manual annotation, and community-based efforts to create and share linguistic resources. The development of morphological analyzers and other basic linguistic tools for low-resource languages is also a critical foundational step. Initiatives like Masakhane, focused on NLP for African languages, exemplify the collaborative efforts being made to advance low-resource language technology.
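As an illustration of the active learning idea mentioned above, the following Python sketch ranks unlabeled sentences by how unsure a binary classifier is about them. The pool contents, the probabilities, and the function names are all hypothetical; a real setup would obtain the probabilities from a trained model and would likely use a more refined selection criterion.

```python
def uncertainty(prob_positive):
    """Score in [0, 0.5]; higher means the prediction is closer to 0.5."""
    return 0.5 - abs(prob_positive - 0.5)


def select_for_annotation(pool, k):
    """Pick the k items the model is least sure about.

    pool: list of (text, predicted probability of the positive class).
    """
    ranked = sorted(pool, key=lambda item: uncertainty(item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


# Hypothetical unlabeled pool with made-up model probabilities.
pool = [
    ("sentence A", 0.98),
    ("sentence B", 0.52),
    ("sentence C", 0.10),
    ("sentence D", 0.45),
]

chosen = select_for_annotation(pool, 2)
print(chosen)
```

The sentences with probabilities nearest 0.5 are sent to annotators first, on the assumption that labeling them teaches the model more than labeling examples it already classifies confidently.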

Integration with Multimodal AI Systems

A fascinating emerging trend is the integration of text processing with multimodal AI systems. Traditional AI systems often focus on a single type of data, such as text, images, or audio. However, human understanding of the world is inherently multimodal; we seamlessly combine information from various senses. Multimodal AI aims to build systems that can process, understand, and generate information from multiple modalities simultaneously.

In this context, text processing plays a crucial role in enabling AI to understand the linguistic component of multimodal data. For example, a system might analyze an image along with its textual caption to gain a richer understanding of the scene. Another application is video analysis, where text processing can be used to analyze spoken dialogue (transcribed to text), on-screen text, and even textual descriptions of actions or events occurring in the video. This allows for more comprehensive video search, summarization, and content understanding.

The challenges in multimodal AI often involve effectively fusing information from different modalities and learning joint representations that capture the relationships between them. Techniques from text processing, such as attention mechanisms developed in transformer models, are being adapted to help align and integrate textual information with visual or auditory data. Applications of multimodal AI with a strong text component include image captioning (generating textual descriptions of images), visual question answering (answering questions about an image using both visual and textual reasoning), and creating more engaging and context-aware conversational agents that can understand and respond to both text and other sensory inputs. This integration promises to lead to more intelligent and human-like AI systems.
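The alignment role of attention can be sketched in a few lines of Python. This is a minimal scaled dot-product cross-attention using only the standard library, with text vectors acting as queries over image-region vectors. All vectors and names here are invented for illustration; real systems learn these representations and apply additional projection layers.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross_attention(queries, keys, values):
    """Each query attends over all keys and returns a weighted sum of values."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [dot(q, k) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical data: two text-token vectors and three image-region vectors.
text_queries = [[1.0, 0.0], [0.0, 1.0]]
image_keys = [[4.0, 0.0], [0.0, 4.0], [1.0, 1.0]]
image_values = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

attended, weights = cross_attention(text_queries, image_keys, image_values)
for token_weights in weights:
    print([round(w, 3) for w in token_weights])
```

Each row of weights shows how strongly one text token attends to each image region. In this toy setup the first token puts most of its weight on the first region and the second token on the second region, which is the kind of text-to-image alignment the paragraph above describes.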

Frequently Asked Questions

Navigating the world of text processing can bring up many questions, especially for those considering it as a career path or a new area of study. Here are answers to some frequently asked questions.

What programming languages are essential for text processing?

While several programming languages can be used for text processing, Python has emerged as the de facto standard in the field. Its popularity stems from its gentle learning curve, readability, and, most importantly, its extensive ecosystem of libraries specifically designed for text processing, Natural Language Processing (NLP), and machine learning. Libraries such as NLTK, spaCy, scikit-learn, Gensim, and Hugging Face's Transformers provide powerful tools that simplify many complex tasks.

R is another language popular in statistical computing and data analysis, and it also offers robust packages for text mining (e.g., `tm`, `tidytext`, `quanteda`). Java and Scala are sometimes used in enterprise-level applications or big data environments (e.g., with Apache Spark) due to their performance and scalability. Perl, historically strong in text manipulation due to its powerful regular expression capabilities, still finds use, though Python has largely superseded it for newer projects. For foundational tasks or performance-critical components, C++ might be employed. However, for most practitioners, especially those just starting out, a strong command of Python will provide the broadest access to tools, communities, and job opportunities in text processing.

How competitive are entry-level roles in this field?

Entry-level roles in text processing and Natural Language Processing (NLP) can be competitive, largely due to the growing interest in AI and data science fields. Many aspiring data scientists and software engineers are drawn to the exciting challenges and impactful applications of language technology. However, the demand for these skills is also consistently high and projected to grow, which creates numerous opportunities.

To stand out as an entry-level candidate, it's crucial to have more than just theoretical knowledge. A strong portfolio of practical projects is highly valued by employers. This could include personal projects, contributions to open-source NLP libraries, or relevant internship experiences. Proficiency in Python, familiarity with core NLP libraries, and a solid understanding of machine learning concepts are usually expected. Experience with version control systems like Git is also a plus.

Networking can play a significant role. Attending industry meetups (virtual or in-person), participating in online forums, and connecting with professionals in the field can provide valuable insights and potential job leads. While competition exists, candidates who can showcase practical skills, a genuine passion for the field, and a willingness to learn continuously are well-positioned to secure entry-level roles. Starting salaries can be attractive; for instance, reported averages for an NLP engineer with 0-1 years of experience in the US are around $117,000, though this varies by location, company, and data source.

Can text processing skills transition to other AI domains?

Yes, absolutely. Text processing skills are highly transferable and form a strong foundation for transitioning into other domains within Artificial Intelligence (AI). Many of the core concepts and techniques used in text processing have broader applicability across the AI landscape.

For example, machine learning is a fundamental component of modern text processing. The experience gained in training, evaluating, and deploying machine learning models for tasks like text classification or sentiment analysis is directly relevant to other AI applications, such as image recognition, predictive analytics in finance, or recommendation systems. Similarly, deep learning techniques, particularly architectures like transformers, which have revolutionized NLP, are also being successfully applied to other areas like computer vision and speech recognition.

Data preprocessing and feature engineering skills, which are critical in text processing for cleaning data and converting it into a usable format for algorithms, are universally important in any data-driven AI field. Furthermore, the problem-solving mindset, analytical thinking, and programming proficiency developed while working on text processing challenges are valuable assets in any technical AI role. Therefore, a background in text processing can serve as an excellent springboard for exploring and specializing in various other exciting areas of artificial intelligence.

What industries hire the most text processing specialists?

Text processing specialists are in demand across a wide array of industries, as organizations in virtually every sector are grappling with and seeking to derive value from large volumes of textual data. However, some industries stand out for their particularly high hiring rates.

The Technology sector is a primary employer. This includes large tech companies developing search engines, social media platforms, cloud computing services, and AI-powered applications, as well as numerous startups focused on NLP-specific solutions. Finance and Insurance also heavily recruit text processing talent for applications like fraud detection, algorithmic trading (based on news sentiment), customer service automation, and regulatory compliance. The Healthcare and Pharmaceutical industries are rapidly expanding their use of text processing for analyzing clinical notes, medical research, patient feedback, and drug discovery.

Other significant sectors include Retail and E-commerce (for customer review analysis, recommendation systems, and chatbots), Marketing and Advertising (for sentiment analysis, brand monitoring, and content generation), Legal Services (for e-discovery and contract analysis), and Consulting firms that help other businesses implement data science and AI solutions. Government and defense sectors also employ text processing specialists for intelligence analysis and information management. The breadth of industries highlights the versatility and widespread applicability of text processing skills.

Is advanced mathematics required for most roles?

The level of advanced mathematics required for roles in text processing can vary significantly depending on the specific position and its focus. For many applied roles, such as an NLP Engineer or a Data Analyst working with text, a strong intuitive understanding of core mathematical concepts is more critical than the ability to derive complex proofs from scratch.

A solid grasp of linear algebra is very helpful, as text data is often represented as vectors and matrices. Basic probability and statistics are essential for understanding machine learning algorithms, evaluating model performance, and interpreting results. Calculus, particularly concepts related to optimization (like gradient descent), is fundamental to how many machine learning models are trained, especially in deep learning. However, for many practical roles, you will be using libraries and frameworks that implement these mathematical operations, so the emphasis is more on knowing when and how to use them and understanding their implications.
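For readers who want to see what gradient descent looks like in practice, here is a small Python sketch that fits a single weight w so that w * x approximates y under mean squared error. The data, learning rate, and step count are arbitrary choices for illustration; libraries normally handle this loop, along with the derivative, for you.

```python
def gradient_descent(xs, ys, lr=0.05, steps=200):
    """Minimize mean((w * x - y) ** 2) over a single weight w."""
    w = 0.0
    n = len(xs)
    for _ in range(steps):
        # Derivative of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the error.
        w -= lr * grad
    return w


# Toy data generated from y = 2 * x, so the best weight is 2.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

w = gradient_descent(xs, ys)
print(round(w, 4))
```

The calculus appears only in the one line that computes the gradient; everything else is ordinary programming. That split is typical of applied work, where understanding what the gradient means matters more than deriving it by hand.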

For research-oriented roles, such as a Research Scientist in NLP or a Ph.D. student, a deeper and more formal mathematical background is typically required. These positions often involve developing new algorithms or theoretical frameworks, which necessitates a more profound understanding of advanced mathematics. However, for individuals aiming for engineering or analyst roles, a good conceptual understanding combined with strong programming skills and practical experience is often sufficient. Many successful practitioners in the field have come from diverse educational backgrounds, and while a mathematical aptitude is beneficial, it's not always a strict prerequisite for entry if one is willing to learn the necessary concepts as they go.

How does text processing differ from general data analysis?

While text processing is a form of data analysis, it has distinct characteristics that differentiate it from general data analysis, which often deals with structured, numerical data.

The primary distinction lies in the nature of the data. Text is unstructured or semi-structured, meaning it doesn't fit neatly into rows and columns like data in a traditional database. It consists of discrete symbols (characters and words) rather than numeric measurements, so it requires significant preprocessing before machine learning algorithms or statistical methods can work with it. This preprocessing phase, involving tasks like tokenization, stemming, lemmatization, stop-word removal, and vectorization (e.g., TF-IDF or word embeddings), is a core component of text processing and is often more complex and nuanced than the preprocessing required for structured numerical data.
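The preprocessing steps named above can be sketched with the Python standard library alone. The example below tokenizes, removes stop words, and computes TF-IDF weights. The stop-word list is a tiny illustrative one, the function names are hypothetical, and the weighting uses the plain formula tf * log(N / df); library implementations such as scikit-learn's apply smoothing and normalization, so their numbers will differ.

```python
import math
import re
from collections import Counter

STOP_WORDS = {"the", "is", "a", "of", "and"}  # tiny illustrative list


def tokenize(text):
    """Lowercase, keep alphabetic runs, and drop stop words."""
    return [t for t in re.findall(r"[a-z]+", text.lower())
            if t not in STOP_WORDS]


def tf_idf(docs):
    """Return one {term: weight} dict per document."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term appears.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        if not tokens:  # avoid dividing by zero for empty documents
            vectors.append({})
            continue
        counts = Counter(tokens)
        vectors.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return vectors


docs = [
    "The cat sat on the mat",
    "The dog sat on the log",
    "The cat chased the dog",
]

vectors = tf_idf(docs)
for term, weight in sorted(vectors[0].items()):
    print(term, round(weight, 3))
```

In the first document, "mat" receives a higher weight than "cat" because it appears in only one of the three documents, while "cat" appears in two. This is how TF-IDF turns raw text into numbers that emphasize the terms most distinctive of each document.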

Furthermore, text processing deals with the complexities of human language, including ambiguity, context-dependency, sarcasm, and evolving slang. General data analysis might focus on identifying correlations or trends in numerical datasets, while text processing often aims to understand meaning, sentiment, or intent. Specialized techniques from Natural Language Processing (NLP), such as part-of-speech tagging, named entity recognition, syntactic parsing, and semantic analysis, are unique to working with textual data. While both disciplines share foundational statistical and machine learning principles, text processing requires a specialized toolkit and a deeper understanding of linguistic concepts to effectively unlock insights from language data.

Consider exploring OpenCourser's Data Science category page for a broader look at data analysis courses, which can complement your text processing studies.

Embarking on a journey into text processing can be both challenging and immensely rewarding. The field is at the intersection of linguistics, computer science, and artificial intelligence, offering a dynamic landscape for continuous learning and innovation. Whether you are looking to pivot your career, enhance your current skills, or simply explore a fascinating domain, text processing provides a wealth of opportunities to engage with the power of language in the digital age. With dedication and the right resources, you can develop the expertise to unlock valuable insights from the ever-expanding universe of textual data.