Optimization Algorithms

Comprehensive Guide to Optimization Algorithms

Optimization algorithms are the engines that drive decision-making in a vast array of fields, from designing more efficient airplanes to crafting investment strategies and even helping machine learning models learn. At its core, an optimization algorithm is a procedure or method used to find the best possible solution from a set of available alternatives, typically by maximizing or minimizing some function. Imagine trying to find the lowest point in a hilly landscape while blindfolded; optimization algorithms are like the systematic strategies you might use, such as always taking a step in the steepest downward direction. This fundamental concept underpins much of modern technology and scientific inquiry, making it a vibrant and impactful area of study.

Working with optimization algorithms can be intellectually stimulating. It involves a fascinating blend of mathematical theory, computational thinking, and practical problem-solving. The thrill of designing an algorithm that can sift through immense complexity to pinpoint an optimal solution is a significant draw. Furthermore, the applications are incredibly diverse, offering the chance to contribute to breakthroughs in fields as varied as logistics, finance, healthcare, and artificial intelligence. The constant evolution of the field, with new challenges and algorithmic innovations emerging regularly, ensures that it remains a dynamic and engaging career path.

What are Optimization Algorithms?

At a high level, optimization algorithms are systematic methods designed to find the "best" solution to a problem from a set of possible solutions. This "best" solution is typically defined in terms of maximizing or minimizing a specific mathematical function, often called an objective function. Think of it like trying to find the highest peak in a mountain range (maximization) or the lowest valley (minimization). These algorithms provide a structured way to search through the landscape of possible solutions to identify the optimal one, or at least one that is very close to optimal.

The power of optimization algorithms lies in their ability to tackle complex problems that would be impossible for humans to solve through intuition or trial-and-error alone. They are the workhorses behind many of the conveniences and advancements we see today, from the way your GPS finds the fastest route, to how a financial institution might manage its investment portfolio, or how an e-commerce platform recommends products. The core idea is to make choices that lead to the best possible outcome given certain rules or limitations.

Defining the "Best" Solution: Objective Functions and Constraints

The heart of any optimization problem lies in its objective function. This is a mathematical expression that quantifies what you are trying to achieve. For example, if you are a delivery company, your objective function might be to minimize total delivery time or fuel consumption. If you are an investor, your objective function might be to maximize your portfolio's return while minimizing risk.

However, the search for the best solution is rarely without limits. These limits are known as constraints. Constraints define the boundaries of what is possible or acceptable. In the delivery company example, constraints might include the maximum carrying capacity of each truck, the available working hours of drivers, or the requirement that all packages reach their destinations. For the investor, constraints could be the total amount of capital available or limits on how much can be invested in a single asset. The set of all solutions that satisfy all constraints is known as the feasible region. Optimization algorithms search within this feasible region for the solution that yields the best value for the objective function.

Understanding how to formulate a problem in terms of an objective function and constraints is a critical first step in applying optimization algorithms. It requires a clear understanding of the problem's goals and limitations and the ability to translate these into a mathematical framework.
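To make the vocabulary concrete, here is a minimal sketch of a tiny truck-loading problem written out as an objective function, a constraint, and a feasible region. The package weights, values, capacity, and the helper names `objective` and `is_feasible` are all made up for illustration, and the exhaustive search only works because the problem is so small.

```python
from itertools import combinations

# Hypothetical data: four packages, each with a weight and a delivery value.
weights = [4, 3, 2, 5]
values = [10, 7, 4, 12]
capacity = 9  # constraint: the truck can carry at most this much weight


def objective(selection):
    """Total value of the selected packages (to be maximized)."""
    return sum(values[i] for i in selection)


def is_feasible(selection):
    """A selection is feasible if it respects the capacity constraint."""
    return sum(weights[i] for i in selection) <= capacity


# Every possible subset of packages is a candidate solution.
candidates = [
    subset
    for size in range(len(weights) + 1)
    for subset in combinations(range(len(weights)), size)
]

# The feasible region is the set of candidates that satisfy all constraints.
feasible_region = [c for c in candidates if is_feasible(c)]

# The optimum is the feasible candidate with the best objective value.
best = max(feasible_region, key=objective)
print(best, objective(best))  # (0, 3) 22
```

Enumerating every candidate is only practical for toy problems; the algorithms described later exist precisely because real feasible regions are far too large to list.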

The Landscape of Solutions: Local vs. Global Optima

Imagine you are navigating a foggy mountain range, trying to find the absolute highest peak (the global optimum). You might climb a nearby hill, and once you reach its summit, it appears to be the highest point in your immediate vicinity (a local optimum). However, there might be a much taller mountain hidden in the fog elsewhere in the range. This analogy illustrates a common challenge in optimization: distinguishing between local optima and the true global optimum.

Many simpler optimization algorithms, particularly those that rely on making incremental improvements (like always stepping uphill), can get "stuck" on local optima. More sophisticated algorithms are designed to explore the solution space more broadly or to have mechanisms to escape local optima in search of the global one. The nature of the problem itself—specifically, whether it is "convex"—can also determine how difficult it is to find the global optimum. In a convex optimization problem, any local optimum is also a global optimum, which significantly simplifies the search. However, many real-world problems are non-convex, presenting a more complex and challenging landscape to navigate.

ELI5: Finding the Best Parking Spot

Imagine you're driving into a crowded parking lot, and you want to find the best parking spot. What does "best" mean? Maybe it means the spot closest to the entrance (your objective function is to minimize walking distance). But there are rules (constraints): you can only park in marked spots, and you can't park in handicapped spots unless you have a permit.

So, you start driving around. You see an open spot (a potential solution). Is it the best? Maybe there's one closer. You keep looking. If you just take the first spot you see, that might be a "local optimum" – it's good for the aisle you're in, but there might be a much better one just around the corner (the "global optimum").

Optimization algorithms are like different strategies for finding that best spot. One strategy might be to drive up and down every single aisle systematically. Another might be to quickly scan the aisles closest to the entrance first. Some strategies are faster, some are more likely to find the absolute best spot, and some are better suited for different types of parking lots (different types of problems). The goal is always the same: find the best spot according to your definition of "best," while following all the rules.

A Brief History of Optimization Algorithms

The quest to find the "best" way to do things is as old as humanity itself. However, the formal mathematical study of optimization has more recent origins, with significant developments occurring over centuries. Understanding this historical context can provide valuable insights into the evolution of the field and the foundations upon which modern algorithms are built.

The journey of optimization algorithms is a story of mathematical ingenuity coupled with the ever-increasing power of computation. Early theoretical work laid the groundwork, but it was the advent of computers that truly unlocked the potential to solve complex, large-scale optimization problems that were previously intractable.

Early Mathematical Seeds

The conceptual roots of optimization can be traced back to early mathematicians who pondered problems of maxima and minima. For instance, the development of calculus in the 17th century by Isaac Newton and Gottfried Wilhelm Leibniz provided fundamental tools for finding optimal values of functions by examining their derivatives. The "calculus of variations," which deals with finding functions that optimize certain integrals, grew out of problems posed at the end of that century, such as finding the curve of quickest descent (the brachistochrone problem), and was developed systematically in the 18th century by Leonhard Euler and Joseph-Louis Lagrange. It laid the groundwork for many optimization principles.

These early explorations were largely theoretical, focusing on the mathematical properties of optimal solutions. While not "algorithms" in the modern computational sense, they established the core mathematical language and concepts that would later be essential for developing practical optimization methods. This period was crucial for building the intellectual toolkit for future generations of optimizers.

The 20th Century: Breakthroughs and the Dawn of Computation

The 20th century witnessed transformative advancements in optimization, largely driven by the needs of wartime logistics and industrial planning. One of the most significant breakthroughs was the development of linear programming in the 1930s and 1940s by Leonid Kantorovich and Tjalling Koopmans, and later George Dantzig, who developed the simplex method in 1947. The simplex method provided a systematic and, in practice, efficient way to solve problems where the objective function and constraints are all linear. This was a revolutionary development with immediate applications in resource allocation, scheduling, and production planning.

The post-war era saw a flourishing of operations research, a field heavily reliant on optimization techniques. The development of digital computers during this period was a game-changer. Suddenly, the complex calculations required by methods like the simplex algorithm could be performed at speeds previously unimaginable. This synergy between algorithmic development and computational power opened the door to solving increasingly complex optimization problems in diverse fields. Researchers began exploring non-linear programming, integer programming, and network optimization, among other areas.

The Influence of Modern Computing and Machine Learning

The late 20th and early 21st centuries have been characterized by an explosion in computational power and the rise of machine learning, both of which have profoundly impacted the field of optimization. The ability to process vast amounts of data and perform complex calculations has enabled the development and application of more sophisticated optimization algorithms. Many modern algorithms are iterative, meaning they progressively refine a solution through many steps; increased computing speed makes such approaches feasible for larger and more intricate problems.

Machine learning, in particular, has become a major driver and consumer of optimization algorithms. Training a machine learning model inherently involves an optimization problem: finding the model parameters that best fit the training data. This has spurred research into new classes of algorithms, especially those suited for large-scale, non-convex optimization problems, such as variants of stochastic gradient descent. Furthermore, optimization techniques are now being used to design better machine learning architectures and to tune their hyperparameters automatically. The interplay between optimization and machine learning continues to be a fertile ground for innovation.

Key Concepts and Terminology in Optimization

To navigate the world of optimization algorithms effectively, it's essential to understand some fundamental concepts and terminology. These ideas form the building blocks for discussing, designing, and applying optimization methods. While some concepts can be mathematically nuanced, we'll aim for intuitive explanations, often using analogies to make them more accessible, especially for those new to the field or considering a career pivot.

Think of this section as learning the basic vocabulary and grammar of optimization. Once you grasp these core ideas, you'll be better equipped to understand the different types of algorithms and their applications. It's like learning the rules of a game before you can play effectively; understanding these concepts is key to mastering optimization.

Objective Functions, Constraints, and Feasible Regions Revisited

As we touched upon earlier, the objective function is the mathematical expression you aim to maximize or minimize. It's the "score" you're trying to get as high or as low as possible. For example, in designing a bridge, the objective function might be to minimize the total weight of materials used, or to maximize its strength.

Constraints are the rules of the game – the limitations or conditions that must be satisfied. For our bridge, constraints might include a minimum load-bearing capacity, maximum allowable stress on any component, or limitations on the types of materials available. These constraints define the boundaries of what is a permissible solution.

The feasible region encompasses all possible solutions that satisfy every single constraint. It's the "playing field" where you search for the optimal solution. If a solution violates even one constraint, it's considered infeasible and is not a valid answer. The goal of an optimization algorithm is to find the point within this feasible region that gives the best possible value for the objective function.

Local vs. Global Optima: The Hill Climbing Analogy

Imagine you're a hiker trying to find the highest point in a vast, hilly national park (the global optimum). You start at some random location. A simple strategy (akin to a basic optimization algorithm) might be to always walk uphill. Eventually, you'll reach a peak where every direction around you is downhill. This is a local optimum. It's the highest point in its immediate neighborhood, but is it the highest peak in the entire park? Not necessarily. There could be a much taller mountain further away.

Many optimization algorithms, particularly those that make decisions based only on local information (like the slope of the terrain immediately around you), can get "stuck" at local optima. Finding the global optimum – the absolute best solution across the entire feasible region – can be much more challenging, especially in complex, "non-convex" landscapes with many peaks and valleys. Differentiating between these and designing algorithms that can find or approximate global optima is a central theme in optimization research.
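The hiker's "always walk uphill" strategy can be sketched in a few lines. This is a minimal illustration on a made-up one-dimensional terrain; the `heights` list and the `hill_climb` function are hypothetical names, not part of any library.

```python
def hill_climb(heights, start):
    """Greedy hill climbing: move to the higher neighbor until none is higher."""
    pos = start
    while True:
        # Neighbors are the positions immediately left and right, if they exist.
        neighbors = [i for i in (pos - 1, pos + 1) if 0 <= i < len(heights)]
        best = max(neighbors, key=lambda i: heights[i])
        if heights[best] <= heights[pos]:
            return pos  # every direction is flat or downhill: a local optimum
        pos = best


# Made-up terrain with a small hill (index 2) and a taller mountain (index 7).
heights = [1, 3, 5, 4, 2, 6, 9, 12, 8, 3]

print(hill_climb(heights, start=0))  # 2 (stuck on the small hill)
print(hill_climb(heights, start=4))  # 7 (reaches the tallest peak)
```

The same rule produces different answers depending on where the search starts, which is exactly why purely local methods cannot promise a global optimum on a non-convex landscape.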

The Significance of Convexity and Gradient-Based Methods

The concept of convexity is incredibly important in optimization. A problem is convex if its feasible region is a convex set (meaning if you take any two points in the region, the straight line connecting them is also entirely within the region) and its objective function is convex (for minimization) or concave (for maximization). Informally, for a minimization problem, a convex function looks like a "bowl." The key property of convex optimization problems is that any local optimum is automatically a global optimum. This makes them much easier to solve reliably and efficiently than non-convex problems.
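For readers who want the "bowl" picture stated precisely, the standard definitions can be written as follows. The symbols C (the feasible set), f (the objective function), and θ (a mixing weight) are notation chosen here for illustration.

```latex
% C is a convex set: the segment between any two of its points stays in C.
\theta x + (1 - \theta)\, y \in C
\quad \text{for all } x, y \in C,\ \theta \in [0, 1]

% f is a convex function on C: the graph lies on or below every chord.
f\bigl(\theta x + (1 - \theta)\, y\bigr) \le \theta f(x) + (1 - \theta) f(y)
\quad \text{for all } x, y \in C,\ \theta \in [0, 1]
```

A function f is concave when the inequality is reversed, which is equivalent to saying that the negated function is convex.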

Many powerful optimization algorithms leverage the gradient of the objective function. The gradient is a vector that points in the direction of the steepest increase of the function at a particular point. For minimization problems, moving in the opposite direction of the gradient (the direction of steepest decrease) is a natural strategy. This is the core idea behind gradient-based methods, such as the famous gradient descent algorithm. These methods iteratively take steps in the direction indicated by the gradient (or its negative) until the gradient is close to zero, which signals a candidate optimum; for a convex problem, that point is a global optimum. Because they depend on the gradient, these methods require an objective function that is differentiable, or at least one for which a usable gradient estimate is available. Calculus, particularly differential calculus, provides the mathematical tools for computing and using gradients.
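As a rough sketch of the idea, the loop below runs plain gradient descent on a simple bowl-shaped function. The function f(x, y) = (x - 1)^2 + 2(y + 2)^2, the step size, and the number of steps are illustrative assumptions, and the gradient is worked out by hand rather than computed automatically.

```python
def grad_f(x, y):
    """Gradient of f(x, y) = (x - 1)**2 + 2 * (y + 2)**2, derived by hand."""
    return 2 * (x - 1), 4 * (y + 2)


def gradient_descent(x, y, learning_rate=0.1, steps=200):
    """Repeatedly step against the gradient, the direction of steepest decrease."""
    for _ in range(steps):
        gx, gy = grad_f(x, y)
        x -= learning_rate * gx
        y -= learning_rate * gy
    return x, y


x_min, y_min = gradient_descent(x=0.0, y=0.0)
print(x_min, y_min)  # very close to the true minimizer (1, -2)
```

Because this function is convex, the point the loop settles on is the global minimum. On a non-convex function the same loop would stop at whichever local minimum lies downhill from the starting point.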

Deterministic vs. Stochastic Approaches

Optimization algorithms can also be broadly categorized based on whether they are deterministic or stochastic (randomized).

A deterministic algorithm, given the same starting point and problem, will always follow the exact same sequence of steps and arrive at the exact same solution. Many classical optimization methods, like the simplex method for linear programming or standard gradient descent (with a fixed learning rate and initialization), are deterministic. They operate with a predictable, fixed logic.

In contrast, stochastic algorithms incorporate elements of randomness. This randomness can appear in various ways: perhaps the algorithm starts at a random point, or it makes random choices during its search process, or it uses a random subset of data at each step (as in stochastic gradient descent). Examples include genetic algorithms, simulated annealing, and particle swarm optimization. The advantage of stochastic approaches is often their ability to escape local optima and explore a wider range of the solution space, making them particularly useful for complex, non-convex problems. However, they typically don't guarantee finding the absolute global optimum, and running the same stochastic algorithm multiple times might yield slightly different results.
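A small sketch can make the distinction tangible. Below, a pure random search (one of the simplest stochastic methods) is run with an explicit random seed; the objective function, the search interval, and the function names are assumptions chosen for illustration.

```python
import random


def f(x):
    """Objective to minimize; its true minimum is at x = 2."""
    return (x - 2) ** 2


def random_search(seed, samples=50):
    """Stochastic: evaluate randomly drawn points and keep the best one."""
    rng = random.Random(seed)
    draws = [rng.uniform(-10, 10) for _ in range(samples)]
    return min(draws, key=f)


run_a = random_search(seed=1)
run_b = random_search(seed=1)  # same seed, so the same random draws
run_c = random_search(seed=2)  # different seed, so different draws

print(run_a == run_b)  # True: fixing the seed makes the run repeatable
print(run_a, run_c)    # two different approximations of the minimizer
```

Fixing the seed is the usual way to make a stochastic algorithm reproducible for debugging, while varying it across runs shows how much the result depends on chance.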

Types of Optimization Algorithms

The world of optimization algorithms is vast and diverse, with a multitude of techniques tailored to different types of problems and computational environments. Understanding the broad categories of these algorithms can help in selecting the right tool for a specific task and appreciating the rich tapestry of approaches developed by researchers and practitioners. We will explore some of the most prominent families of optimization algorithms, highlighting their core ideas and typical use cases.

Think of these different types of algorithms as various tools in a workshop. Just as a carpenter wouldn't use a hammer for every task, an optimization specialist needs to understand which algorithm is best suited for the problem at hand. Some are designed for speed on specific problem structures, while others offer robustness for more complex, less-defined landscapes.

Gradient Descent and Its Variants

Gradient descent is arguably one of the most fundamental and widely used optimization algorithms, especially in the realm of machine learning. The core idea is simple: to find a local minimum of a function, one takes iterative steps in the direction of the negative gradient (the direction of steepest descent). Imagine rolling a ball down a hill; it naturally follows the steepest path downwards. The size of these steps is determined by a parameter called the "learning rate."

Several important variants of gradient descent have been developed to address its limitations and improve its performance in different scenarios:

- Batch Gradient Descent: Computes the gradient using the entire dataset in each iteration. This can be computationally expensive for large datasets but provides a stable estimate of the gradient.

- Stochastic Gradient Descent (SGD): Computes the gradient using only a single, randomly chosen data point in each iteration. This is much cheaper per iteration, and the noise can help escape shallow local minima, but the path to the optimum is noisy. In practice, the name SGD is often also used for the mini-batch variant described next.

- Mini-Batch Gradient Descent: Strikes a balance between batch gradient descent and SGD by using a small, random subset of the data (a mini-batch) in each iteration. This is often the most practical approach for training large machine learning models.

Adaptive variants such as AdaGrad, RMSProp, and Adam adjust the effective learning rate for each parameter as optimization proceeds, which often leads to faster convergence and better results. Understanding gradient descent and its family is crucial for anyone working with large-scale data and machine learning models.
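The three basic variants differ only in how many data points feed each gradient estimate, which a single sketch can show. The example below fits a straight line to synthetic, noise-free data; the data, the function name `minibatch_sgd`, and all parameter values are illustrative assumptions rather than recommended settings.

```python
import random


def minibatch_sgd(xs, ys, learning_rate=0.1, batch_size=8, epochs=200, seed=0):
    """Fit y = w * x + b by minimizing mean squared error with mini-batches."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the data in a new random order each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            grad_w = grad_b = 0.0
            for i in batch:
                error = (w * xs[i] + b) - ys[i]
                grad_w += 2 * error * xs[i]
                grad_b += 2 * error
            # Average the gradient over the batch, then step against it.
            w -= learning_rate * grad_w / len(batch)
            b -= learning_rate * grad_b / len(batch)
    return w, b


# Synthetic data generated from the line y = 3x + 2.
xs = [-1 + 2 * i / 63 for i in range(64)]
ys = [3 * x + 2 for x in xs]

w, b = minibatch_sgd(xs, ys)
print(w, b)  # close to the true slope 3 and intercept 2
```

Setting `batch_size=1` turns this into single-sample SGD, and setting `batch_size=len(xs)` turns it into batch gradient descent.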

Evolutionary Algorithms

Evolutionary algorithms (EAs) are a fascinating class of optimization techniques inspired by the principles of biological evolution, such as reproduction, mutation, recombination, and selection. These algorithms maintain a "population" of candidate solutions. In each "generation" (iteration), solutions are evaluated based on the objective function (their "fitness"). Fitter solutions are more likely to be selected to "reproduce" and create new solutions for the next generation, often with some random variations (mutations) or by combining features of parent solutions (recombination).

Prominent examples of evolutionary and closely related population-based algorithms include:

- Genetic Algorithms (GAs): Perhaps the most well-known type, GAs typically represent solutions as strings (like chromosomes) and use operations like crossover (mixing parts of two parent strings) and mutation (randomly flipping bits in a string) to generate new solutions.

- Swarm Intelligence Algorithms: Often grouped with evolutionary algorithms because they also work with a population of candidate solutions, these algorithms are inspired by the collective behavior of social animals or insects. Examples include Particle Swarm Optimization (PSO), inspired by bird flocking or fish schooling, and Ant Colony Optimization (ACO), inspired by the foraging behavior of ants. In PSO, for instance, individual "particles" (solutions) fly through the search space, influenced by their own best-found position and the best-found position of the entire swarm.

Evolutionary algorithms are particularly well-suited for complex, non-linear, and non-convex optimization problems where gradient information might be unavailable or unreliable. They are robust and can explore large search spaces effectively, though they may require significant computational resources.
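The population, fitness, selection, crossover, and mutation steps described above can be sketched as a small genetic algorithm. This version solves the toy "OneMax" problem (maximize the number of 1 bits in a string); the tournament selection, elitism, and every parameter value are illustrative assumptions, and real applications use many other operator choices.

```python
import random


def one_max(bits):
    """Fitness: the number of 1 bits in the candidate solution."""
    return sum(bits)


def genetic_algorithm(n_bits=20, pop_size=30, generations=60,
                      mutation_rate=0.05, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(n_bits)]
                  for _ in range(pop_size)]

    def select():
        # Tournament selection: the fittest of three random individuals wins.
        return max(rng.sample(population, 3), key=one_max)

    for _ in range(generations):
        # Elitism: carry the best individual over unchanged.
        children = [max(population, key=one_max)[:]]
        while len(children) < pop_size:
            parent_a, parent_b = select(), select()
            cut = rng.randint(1, n_bits - 1)         # one-point crossover
            child = parent_a[:cut] + parent_b[cut:]
            child = [1 - bit if rng.random() < mutation_rate else bit
                     for bit in child]               # bit-flip mutation
            children.append(child)
        population = children
    return max(population, key=one_max)


best_individual = genetic_algorithm()
print(one_max(best_individual), "of", len(best_individual), "bits set")
```

Note that the algorithm never looks at a gradient: it only needs to evaluate the fitness of each candidate, which is why this family suits problems where derivatives are unavailable.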

Metaheuristics

Metaheuristics are high-level, problem-independent algorithmic frameworks that provide guidelines or strategies for developing heuristic optimization algorithms. The term "meta" implies "beyond" or "at a higher level," and "heuristic" refers to methods that find good (though not necessarily optimal) solutions through practical search rules rather than exact computation. Metaheuristics are often used for problems where finding an exact optimal solution is computationally infeasible, especially for large-scale combinatorial optimization problems.

Key characteristics of metaheuristics include their ability to escape local optima and explore a broad search space. They often incorporate mechanisms to balance "exploration" (searching new, unvisited areas of the solution space) and "exploitation" (refining good solutions already found). Some well-known metaheuristics include:

- Simulated Annealing (SA): Inspired by the annealing process in metallurgy, where a material is heated and then slowly cooled to reduce defects. SA starts with a high "temperature," allowing it to accept worse solutions occasionally (to escape local optima). As the temperature gradually decreases, the algorithm becomes more selective, converging towards a good solution.

- Tabu Search (TS): Uses a "tabu list" to keep track of recently visited solutions or moves, preventing the algorithm from cycling and encouraging it to explore new regions of the search space.
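As an illustration of the temperature idea, here is a minimal simulated annealing sketch on a one-dimensional function with two valleys. The test function, the Gaussian proposal step, the geometric cooling schedule, and all parameter values are assumptions made for this example; practical implementations tune these choices to the problem.

```python
import math
import random


def f(x):
    """Objective with a shallow local minimum near x = 3.8
    and a deeper global minimum near x = -1.3."""
    return x * x + 10 * math.sin(x)


def simulated_annealing(x0, temperature=10.0, cooling=0.995,
                        steps=5000, seed=0):
    rng = random.Random(seed)
    x, fx = x0, f(x0)
    best_x, best_f = x, fx
    for _ in range(steps):
        candidate = x + rng.gauss(0, 1)      # random nearby move
        fc = f(candidate)
        delta = fc - fx
        # Always accept improvements; accept worse moves with a probability
        # that shrinks as the move gets worse or the temperature drops.
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            x, fx = candidate, fc
            if fx < best_f:
                best_x, best_f = x, fx
        temperature *= cooling               # gradual cooling
    return best_x, best_f


# Start inside the shallow valley on purpose.
best_x, best_f = simulated_annealing(x0=4.0)
print(best_x, best_f)
```

A purely greedy search started at x = 4 would settle in the nearby shallow valley; the occasional acceptance of worse moves while the temperature is high is what lets the search cross over to the deeper one.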

Metaheuristics are widely applied in logistics, scheduling, network design, and many other operations research domains.

Linear, Quadratic, and Interior-Point Methods

For certain classes of optimization problems with specific mathematical structures, highly efficient specialized algorithms exist.

- Linear Programming (LP): Deals with problems where both the objective function and all constraints are linear functions of the decision variables. The simplex method, developed by George Dantzig, is a classic and still widely used algorithm for solving LPs. LP has vast applications in resource allocation, production planning, and network flows.

- Quadratic Programming (QP): Involves optimizing a quadratic objective function subject to linear constraints. QP problems arise in areas like portfolio optimization (where risk is often a quadratic function) and control theory.

- Interior-Point Methods: Represent an alternative to the simplex method for linear programming and can also be applied to convex quadratic programming and more general convex optimization problems, such as semidefinite programming and conic optimization. Unlike the simplex method, which moves along the edges of the feasible region, interior-point methods traverse the interior of the feasible region. For very large-scale LPs, interior-point methods can be significantly faster than the simplex method.
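To see why the corners of the feasible region matter in linear programming, the sketch below solves a tiny two-variable LP by brute force: it intersects every pair of constraint boundaries, keeps the feasible corner points, and picks the best one. This is not the simplex method or an interior-point method, only an illustration of the geometry they exploit, and the problem data are a made-up textbook-style example.

```python
from itertools import combinations

# Maximize 3x + 5y subject to constraints of the form a*x + b*y <= rhs.
constraints = [
    (1, 0, 4),    # x <= 4
    (0, 2, 12),   # 2y <= 12
    (3, 2, 18),   # 3x + 2y <= 18
    (-1, 0, 0),   # x >= 0
    (0, -1, 0),   # y >= 0
]


def objective(x, y):
    return 3 * x + 5 * y


def intersect(c1, c2):
    """Point where two constraint boundary lines cross (None if parallel)."""
    a1, b1, r1 = c1
    a2, b2, r2 = c2
    det = a1 * b2 - a2 * b1
    if det == 0:
        return None
    return ((r1 * b2 - r2 * b1) / det, (a1 * r2 - a2 * r1) / det)


def is_feasible(point, tol=1e-9):
    x, y = point
    return all(a * x + b * y <= rhs + tol for a, b, rhs in constraints)


vertices = []
for c1, c2 in combinations(constraints, 2):
    point = intersect(c1, c2)
    if point is not None and is_feasible(point):
        vertices.append(point)

best_vertex = max(vertices, key=lambda p: objective(*p))
print(best_vertex, objective(*best_vertex))  # (2.0, 6.0) 36.0
```

The number of corner points grows explosively with the number of variables and constraints, which is why practical solvers walk between neighboring corners (simplex) or cut through the interior instead of listing them all.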

These methods are foundational in the field of Operations Research and are critical for solving many large-scale industrial problems efficiently.

Applications in Industry and Research

Optimization algorithms are not just theoretical constructs; they are powerful tools that drive efficiency, innovation, and decision-making across a multitude of industries and research domains. From streamlining global supply chains to designing life-saving drugs and powering the artificial intelligence that is reshaping our world, the impact of optimization is profound and pervasive. Understanding these applications can highlight the real-world relevance and career opportunities associated with this field.

The ability to find the "best" way to do something, given a set of constraints and objectives, is a universal need. This is why skills in optimization are highly valued in so many different sectors. Whether it's saving costs, improving performance, managing risk, or enabling new capabilities, optimization algorithms are often at the heart of the solution.

Streamlining Supply Chains and Allocating Resources

One of the most significant and economically impactful applications of optimization algorithms is in supply chain logistics and resource allocation. Companies worldwide use optimization to decide how to source raw materials, manufacture products, manage inventory, and deliver goods to customers in the most efficient and cost-effective manner. This involves solving complex problems like vehicle routing (a family of problems that generalizes the classic "Traveling Salesperson Problem"), facility location (where to build warehouses or factories), production scheduling, and network design.

For example, airlines use optimization to schedule their flights, crews, and maintenance, minimizing costs while meeting passenger demand and regulatory requirements. Shipping companies optimize routes for their vessels to save fuel and time. In manufacturing, algorithms help determine the optimal production levels and product mix to maximize profit given limited resources like machinery, labor, and raw materials. The savings generated by even small improvements in these large-scale systems can amount to millions or even billions of dollars. According to a report by McKinsey, AI-based solutions in the supply chain can reduce logistics costs by 15% and improve service levels by 65%.

Optimizing Portfolios in the World of Finance

The financial industry heavily relies on optimization algorithms for a wide range of decision-making processes, most notably in portfolio optimization. The seminal work of Harry Markowitz in the 1950s introduced the concept of mean-variance optimization, which seeks to find an allocation of assets that maximizes expected return for a given level of risk (or minimizes risk for a given level of expected return). This is a classic quadratic programming problem, because portfolio variance is a quadratic function of the asset weights while the budget and return requirements are linear constraints.
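The simplest instance of this idea has only two assets and ignores expected return entirely: choose the split that minimizes portfolio variance. In that case the quadratic can be minimized by hand, as the sketch below shows. The volatilities and correlation are made-up numbers, the function names are hypothetical, and the formula assumes the weights sum to one with no other restrictions (so it may return a weight outside the range 0 to 1).

```python
def portfolio_variance(w, sigma_1, sigma_2, rho):
    """Variance of a portfolio holding weight w in asset 1 and 1 - w in asset 2."""
    covariance = rho * sigma_1 * sigma_2
    return (w ** 2 * sigma_1 ** 2
            + (1 - w) ** 2 * sigma_2 ** 2
            + 2 * w * (1 - w) * covariance)


def min_variance_weight(sigma_1, sigma_2, rho):
    """Closed-form minimizer, found by setting the derivative in w to zero."""
    covariance = rho * sigma_1 * sigma_2
    return (sigma_2 ** 2 - covariance) / (
        sigma_1 ** 2 + sigma_2 ** 2 - 2 * covariance)


# Hypothetical inputs: asset 1 is twice as volatile as asset 2, uncorrelated.
sigma_1, sigma_2, rho = 0.2, 0.1, 0.0

w_star = min_variance_weight(sigma_1, sigma_2, rho)
print(w_star)  # about 0.2, so most of the money goes to the calmer asset
print(portfolio_variance(w_star, sigma_1, sigma_2, rho))
```

With more assets, a target return, and limits on individual positions, no hand-derived formula is available, and the problem is handed to a quadratic programming solver instead.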

Beyond basic portfolio allocation, optimization is used in risk management (e.g., calculating Value at Risk or Conditional Value at Risk), algorithmic trading (designing strategies that automatically buy and sell assets based on market signals and optimization criteria), derivative pricing, and credit scoring. Financial institutions also use optimization for capital budgeting, determining the most profitable way to invest limited capital across various projects. The speed and complexity of modern financial markets make optimization tools indispensable for staying competitive and managing risk effectively.

Fine-Tuning Machine Learning Models: Hyperparameter Optimization

As mentioned earlier, the training of machine learning models is itself an optimization problem. However, another critical application of optimization within machine learning is hyperparameter tuning (also known as hyperparameter optimization). Machine learning models often have numerous "hyperparameters" – settings that are not learned from the data directly but are set before the training process begins. Examples include the learning rate in gradient descent, the number of trees in a random forest, or the architecture of a neural network.

Finding the optimal combination of hyperparameters can significantly impact a model's performance. Manually tuning these can be a tedious and often suboptimal process. Optimization algorithms, including grid search, random search, Bayesian optimization, and evolutionary algorithms, are used to automate this search, leading to better-performing models with less human effort. As machine learning models become more complex, the importance of efficient hyperparameter optimization continues to grow.
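Grid search, the simplest of these strategies, can be sketched in a few lines. Here the hyperparameter being tuned is the learning rate of gradient descent on the toy function f(x) = x^2; the candidate values, the step budget, and the function name `final_loss` are assumptions chosen for illustration, and a real workflow would score each setting on held-out validation data rather than on a toy loss.

```python
def final_loss(learning_rate, x0=5.0, steps=20):
    """Run gradient descent on f(x) = x**2 and report the loss at the end."""
    x = x0
    for _ in range(steps):
        x -= learning_rate * 2 * x   # the gradient of x**2 is 2x
    return x * x


# Grid search: try every candidate value and keep the one with the lowest loss.
grid = [0.001, 0.01, 0.1, 0.4, 1.1]
results = {lr: final_loss(lr) for lr in grid}
best_lr = min(results, key=results.get)

for lr, loss in results.items():
    print(lr, loss)
print("best learning rate:", best_lr)  # best learning rate: 0.4
```

The printed losses show the typical pattern: very small learning rates barely move within the step budget, while a value that is too large (1.1 here) makes the iterates diverge.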

Powering Energy Grids and Promoting Sustainability

Optimization plays a vital role in the energy sector, contributing to both economic efficiency and environmental sustainability. In energy grid optimization, algorithms are used to manage the generation, transmission, and distribution of electricity. This includes "unit commitment" problems (deciding which power plants to turn on and when, to meet fluctuating demand at minimum cost) and "optimal power flow" problems (determining how electricity should flow through the network to minimize losses and maintain stability).

With the increasing integration of renewable energy sources like solar and wind, which are intermittent by nature, optimization becomes even more critical for balancing supply and demand and ensuring grid reliability. Optimization is also used in designing more energy-efficient buildings, optimizing transportation systems to reduce fuel consumption and emissions, and managing natural resources sustainably. For example, algorithms can help determine optimal harvesting strategies in forestry or fishing to ensure long-term viability.

Formal Education Pathways in Optimization

For those aspiring to delve deep into the world of optimization algorithms, particularly with an eye towards research, advanced development, or specialized industry roles, a formal education pathway often provides the most comprehensive grounding. Universities worldwide offer programs and courses that cover the theoretical underpinnings, algorithmic techniques, and diverse applications of optimization. Understanding these pathways can help students and career pivoters make informed decisions about their educational journey.

A structured academic environment offers rigorous training in the mathematical and computational skills essential for mastering optimization. It also provides opportunities for research and collaboration with leading experts in the field. While self-learning is increasingly viable, a formal education can provide a depth of understanding and credentials that are highly valued in many sectors.

Building the Foundation: Undergraduate Prerequisites

A strong foundation in mathematics is paramount for anyone serious about studying optimization. Key undergraduate courses typically include:

- Calculus: Single and multivariable calculus are essential for understanding concepts like derivatives, gradients, Hessians, and Taylor series expansions, which are fundamental to many optimization algorithms, especially gradient-based methods.

- Linear Algebra: Concepts such as vectors, matrices, eigenvalues, eigenvectors, and matrix factorizations are crucial for representing and solving optimization problems, particularly linear programs and many numerical methods.

- Probability and Statistics: Important for understanding stochastic optimization algorithms, data-driven optimization, and for analyzing the performance of algorithms.

- Introduction to Computer Science/Programming: Proficiency in at least one programming language (Python is very common in the field) and understanding basic data structures and algorithms are necessary for implementing and experimenting with optimization techniques.

These foundational subjects provide the language and tools needed to tackle more advanced optimization coursework. Strong performance in these areas is often a prerequisite for graduate-level study.
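
One small exercise that ties the calculus and programming prerequisites together is checking a hand-derived gradient against a numerical approximation. The sketch below does this for an arbitrary example function chosen for illustration; the step size `h` is an assumption that works for well-scaled problems.

```python
def f(x, y):
    """Example function: f(x, y) = x^2 + 3xy + 2y^2."""
    return x ** 2 + 3 * x * y + 2 * y ** 2

def analytic_gradient(x, y):
    """Gradient derived by hand: (df/dx, df/dy)."""
    return (2 * x + 3 * y, 3 * x + 4 * y)

def numerical_gradient(func, x, y, h=1e-6):
    """Approximate the gradient with central differences."""
    df_dx = (func(x + h, y) - func(x - h, y)) / (2 * h)
    df_dy = (func(x, y + h) - func(x, y - h)) / (2 * h)
    return (df_dx, df_dy)

point = (1.0, -2.0)
print(analytic_gradient(*point))      # (-4.0, -5.0)
print(numerical_gradient(f, *point))  # should agree to several decimal places
```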

Diving Deeper: Graduate-Level Coursework

Graduate programs (Master's or Ph.D.) in fields like Operations Research, Computer Science, Applied Mathematics, Industrial Engineering, Statistics, or specific engineering disciplines often offer specialized coursework in optimization. Common graduate-level topics include:

- Linear Programming: In-depth study of the simplex method, duality theory, interior-point methods, and applications.

- Nonlinear Programming: Covers unconstrained and constrained optimization for non-linear functions, including methods like gradient descent, Newton's method, conjugate gradient methods, and sequential quadratic programming.

- Convex Optimization: Focuses on the theory and algorithms for convex problems, which have the desirable property that any local minimum is also a global minimum. Topics include convex sets and functions, duality, and algorithms for specific classes of convex problems like semidefinite programming.

- Integer Programming: Deals with optimization problems where some or all variables must be integers. This includes techniques like branch and bound, cutting planes, and heuristics.

- Stochastic Optimization and Dynamic Programming: Addresses problems involving uncertainty and sequential decision-making.

- Numerical Methods/Numerical Analysis: Explores the algorithms for solving mathematical problems numerically, which is crucial for implementing optimization techniques efficiently and robustly.
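
To give a flavor of how integer programming and dynamic programming meet in practice, here is a minimal sketch of the classic 0/1 knapsack problem solved with a dynamic programming table. The item values, weights, and capacity are illustrative, and the approach assumes non-negative integer weights.

```python
def knapsack(values, weights, capacity):
    """Return (best_value, chosen_indices) for the 0/1 knapsack problem.

    table[i][c] is the best total value using only the first i items
    with total weight at most c. Weights and capacity must be
    non-negative integers.
    """
    n = len(values)
    table = [[0] * (capacity + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        v, w = values[i - 1], weights[i - 1]
        for c in range(capacity + 1):
            table[i][c] = table[i - 1][c]   # option 1: skip item i-1
            if w <= c:                      # option 2: take it if it fits
                table[i][c] = max(table[i][c], table[i - 1][c - w] + v)
    # Walk back through the table to recover which items were taken.
    chosen, c = [], capacity
    for i in range(n, 0, -1):
        if table[i][c] != table[i - 1][c]:
            chosen.append(i - 1)
            c -= weights[i - 1]
    return table[n][capacity], sorted(chosen)

best_value, items = knapsack(values=[60, 100, 120], weights=[10, 20, 30], capacity=50)
print(best_value, items)   # 220 [1, 2]
```

Each decision variable is binary (take the item or not), which is what makes this an integer program. The table exploits the fact that the best answer for a given capacity can be built from best answers for smaller capacities.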

Institutions like Stanford's Institute for Computational & Mathematical Engineering (ICME) and MIT's Operations Research Center are renowned for their graduate programs and research in these areas. Courses in these programs often involve rigorous mathematical proofs, algorithmic design, and computational implementation.

Pushing the Boundaries: PhD Research Areas

A Ph.D. in an optimization-related field involves conducting original research to advance the state of the art. Current research areas are diverse and often interdisciplinary. Some active areas of PhD research include:

- Large-Scale Optimization: Developing algorithms that can efficiently solve problems with millions or even billions of variables and constraints, often driven by big data applications and machine learning.

- Non-Convex Optimization: Designing algorithms with theoretical guarantees or strong empirical performance for challenging non-convex problems, which are prevalent in modern machine learning (e.g., training deep neural networks).

- Distributed and Decentralized Optimization: Creating algorithms that can run on multiple processors or machines, or where data is distributed and cannot be moved to a central location (e.g., federated learning).

- Optimization under Uncertainty: Developing methods for robust optimization and stochastic programming where problem data is uncertain or noisy.

- Discrete Optimization: Advancing techniques for combinatorial optimization problems, which involve finding optimal discrete structures like graphs or sequences.

- Optimization for Machine Learning: A very active area focusing on new algorithms for training ML models, hyperparameter tuning, and designing ML architectures.

Researchers publish their findings in academic journals such as SIAM Journal on Optimization, Mathematical Programming, Operations Research, and Journal of Machine Learning Research, and present them at conferences such as NeurIPS, ICML, and the INFORMS Annual Meeting. For those deeply passionate about pushing the frontiers of knowledge in optimization, a Ph.D. offers the most intensive and rewarding path.

Certifications and Lifelong Learning

While formal degrees are the most common route for deep specialization, various certifications and online courses can supplement one's knowledge or provide focused training in specific optimization tools or application areas. Organizations like INFORMS (The Institute for Operations Research and the Management Sciences) offer resources and certifications for operations research professionals, which heavily involve optimization.

The field of optimization is constantly evolving, with new algorithms, software tools, and application domains emerging. Therefore, a commitment to lifelong learning is essential, even for those with advanced degrees. Attending conferences, participating in workshops, reading research papers, and engaging with online communities are all ways to stay current. Platforms like OpenCourser provide access to a wide range of courses, including those in mathematics and computer science, which can help individuals at all stages of their careers to learn and grow.

Online and Self-Directed Learning in Optimization

While formal education provides a structured path, the digital age has opened up unprecedented opportunities for online and self-directed learning in optimization algorithms. Whether you are a curious learner looking to understand the basics, a student supplementing your university coursework, or a professional seeking to pivot or upskill, a wealth of resources is available at your fingertips. This path requires discipline and initiative but offers flexibility and accessibility.

Online learning platforms, open-source software, and vibrant online communities have democratized access to knowledge in fields like optimization. With the right approach, individuals can build a strong understanding of optimization principles and practical skills from virtually anywhere in the world. OpenCourser itself is a testament to this, offering a vast catalog to help learners find courses and books tailored to their needs. You can explore general programming or more specific data science topics to complement your optimization studies.

Leveraging Open-Source Tools and Libraries

One of the most powerful enablers for self-directed learning in optimization is the availability of high-quality open-source software. Python, with its rich ecosystem of scientific computing libraries, has become a de facto standard in many data science and optimization circles. Key libraries include:

- SciPy (optimize module): Provides a collection of optimization algorithms for various problem types, including linear programming, non-linear optimization, least squares, and root finding. It's an excellent starting point for implementing and experimenting with classical optimization techniques.

- PuLP, Pyomo, CVXPY: These are Python-based modeling languages that allow users to express optimization problems in a more natural, algebraic way. They then interface with various open-source and commercial solvers to find solutions. CVXPY is particularly powerful for convex optimization problems.

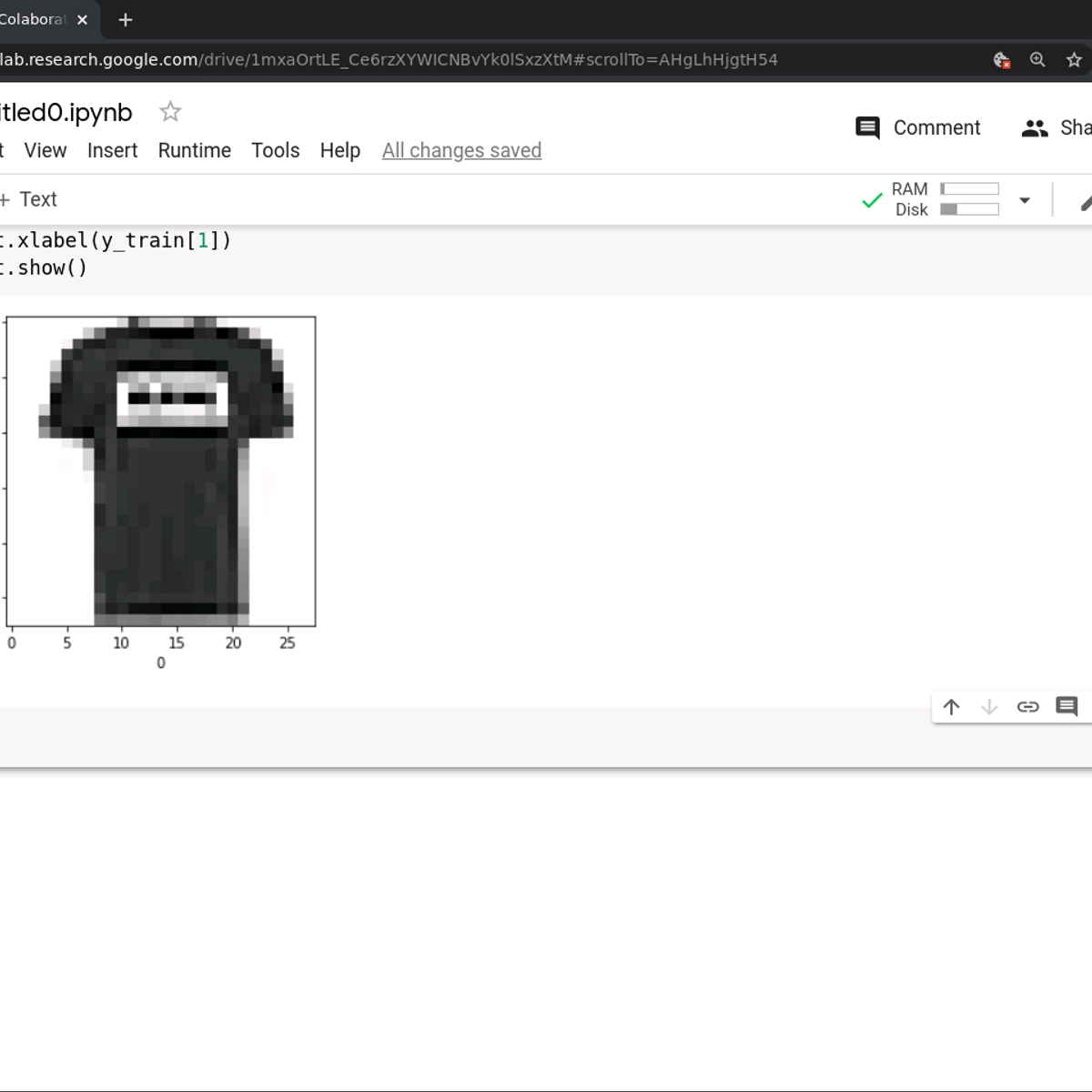

- TensorFlow and PyTorch: While primarily known as deep learning frameworks, these libraries have sophisticated automatic differentiation capabilities and include implementations of many gradient-based optimization algorithms crucial for training neural networks. Understanding their optimizers (like SGD, Adam, RMSProp) is key for machine learning applications.

Working with these tools allows learners to move beyond theory and gain hands-on experience in formulating and solving real optimization problems. Many online tutorials and documentation resources are available to get started.
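
As a first hands-on step, the sketch below uses SciPy's `optimize` module to minimize the Rosenbrock function, a standard test problem that SciPy ships as `rosen` (with its gradient as `rosen_der`). It assumes NumPy and SciPy are installed; the starting point and the choice of the BFGS method are illustrative.

```python
import numpy as np
from scipy.optimize import minimize, rosen, rosen_der

# Starting guess; the Rosenbrock function has its minimum at (1, 1).
x0 = np.array([-1.2, 1.0])

# BFGS is a quasi-Newton method; passing the gradient via `jac` avoids
# finite-difference approximations.
result = minimize(rosen, x0, jac=rosen_der, method="BFGS")

print("converged:", result.success)
print("minimizer:", result.x)
print("objective:", result.fun)
```

Swapping `method` for another supported solver (for example `"Nelder-Mead"`, which does not use the gradient) is an easy way to compare how different algorithms behave on the same problem.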

The Power of Project-Based Learning

For self-directed learners, project-based learning is an incredibly effective strategy. Instead of just passively consuming information, actively working on projects helps solidify understanding and develop practical skills. Start with small, well-defined problems and gradually tackle more complex ones. Some project ideas could include:

- Implementing a classic optimization algorithm (e.g., gradient descent, simplex method for small LPs) from scratch to understand its inner workings.

- Using an optimization library to solve a real-world-inspired problem, such as finding the optimal route for a delivery service (even a simplified version), scheduling tasks, or allocating a budget.

- Participating in online coding challenges or competitions (e.g., on platforms like Kaggle) that involve an optimization component.

- Replicating the results of a research paper or a textbook example.

Documenting your projects, perhaps on a personal blog or a platform like GitHub, can also be a valuable way to showcase your skills to potential employers or collaborators. OpenCourser's "Activities" section, often found on course pages, can also provide inspiration for projects to undertake before, during, or after a course to deepen understanding.

Balancing Theory with Practical Implementation

A successful self-directed learning journey in optimization requires a balance between understanding the underlying mathematical theory and mastering practical implementation. It's tempting to jump straight into coding, but without a grasp of the theoretical concepts (like convexity, convergence properties, or the assumptions behind an algorithm), it's easy to misuse tools or misinterpret results.

Conversely, getting bogged down in too much theory without applying it can make learning abstract and less engaging. A good approach is to alternate between studying a concept and then immediately trying to implement it or use a tool that embodies it. For example, after learning about gradient descent, try coding a simple version, then compare it with one of the gradient-based minimizers in SciPy's optimize module on a sample function. This iterative process of learning theory and then applying it reinforces understanding and builds confidence. Many online courses aim to strike this balance by combining video lectures with coding exercises.
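
A simple version of gradient descent can fit in a few lines. The sketch below is one possible from-scratch implementation in plain Python, applied to an example quadratic chosen for illustration; the learning rate, tolerance, and iteration cap are assumptions that suit this particular function.

```python
def gradient_descent(grad, start, learning_rate=0.1, tolerance=1e-8, max_iters=10_000):
    """Minimize a function given its gradient, using a fixed step size.

    Stops when the length of the update step drops below `tolerance`
    or after `max_iters` iterations. Returns (point, iterations_used).
    """
    x = list(start)
    iterations = 0
    while iterations < max_iters:
        step = [learning_rate * g for g in grad(x)]
        x = [xi - si for xi, si in zip(x, step)]
        iterations += 1
        if sum(s * s for s in step) ** 0.5 < tolerance:
            break
    return x, iterations

def grad_f(p):
    """Gradient of f(x, y) = (x - 3)^2 + 2 * (y + 1)^2, minimized at (3, -1)."""
    x, y = p
    return [2 * (x - 3), 4 * (y + 1)]

minimum, iters = gradient_descent(grad_f, start=[0.0, 0.0])
print(minimum, iters)
```

Experimenting with `learning_rate` is instructive: on this function, values above 0.5 make the iterates diverge, which connects directly to the convergence theory for gradient methods.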

Engaging with Online Communities and Collaborative Platforms

Learning in isolation can be challenging. Fortunately, the internet hosts numerous communities and platforms where learners can connect, ask questions, share knowledge, and collaborate. Stack Overflow and its sister sites on the Stack Exchange network (especially math.stackexchange.com and datascience.stackexchange.com), Reddit (e.g., r/optimization, r/learnmachinelearning), and specialized forums related to operations research or specific software tools can be invaluable resources.

Many MOOCs (Massive Open Online Courses) also have dedicated discussion forums where students can interact with peers and teaching assistants. Contributing to open-source optimization projects on platforms like GitHub is another excellent way to learn by doing and to collaborate with experienced developers. Don't hesitate to ask questions (after doing your due diligence and research, of course) and to share your own learning experiences. The collective wisdom of these communities can significantly accelerate your learning journey. Furthermore, OpenCourser's Learner's Guide offers articles on how to effectively learn from online resources and structure your self-study.

Career Progression and Opportunities in Optimization

A strong foundation in optimization algorithms opens doors to a wide array of career opportunities across diverse industries. As organizations increasingly rely on data-driven decision-making and seek to enhance efficiency, the demand for professionals skilled in optimization is robust and growing. The career path can vary significantly based on one's level of education, specialization, and industry focus, but generally offers intellectual stimulation and the chance to make a tangible impact.

For those considering this field, it's encouraging to know that the skills are transferable and highly valued. Whether you are just starting or looking to specialize further, understanding the typical career progression and the types of roles available can help you chart your course. Remember, even if a full-fledged career as an "Optimization Specialist" seems distant, incorporating optimization skills into your existing role can significantly enhance your capabilities and value.

Entry-Level Roles: Building Practical Experience

For individuals with a bachelor's or master's degree in a relevant field (like mathematics, computer science, engineering, operations research, or data science), several entry-level roles provide a great platform to apply and develop optimization skills. These roles often involve working as part of a larger team on specific components of optimization projects. Examples include:

- Data Analyst / Business Analyst: While broader roles, they often involve using optimization techniques (sometimes embedded in software tools) to analyze data, improve processes, or support decision-making. For instance, an analyst might use Excel Solver or Python scripts to optimize marketing spend or inventory levels.

- Operations Analyst / Operations Research Analyst: These roles are more directly focused on applying mathematical modeling and optimization to improve operational efficiency in areas like logistics, supply chain management, manufacturing, or service delivery. According to the U.S. Bureau of Labor Statistics, employment of operations research analysts is projected to grow 23 percent from 2023 to 2033, much faster than the average for all occupations.

- Junior Software Engineer (with an optimization focus): In some tech companies, entry-level software engineers might work on implementing or integrating optimization algorithms into larger software systems, for example, in routing engines, recommendation systems, or resource schedulers.

These positions provide valuable hands-on experience in formulating problems, selecting appropriate algorithms, using optimization software, and interpreting results in a business context.

Mid-Career Specialization: Deepening Expertise

With a few years of experience and possibly further education (like a specialized Master's degree or Ph.D., or significant on-the-job learning), professionals can move into more specialized and senior roles. These positions often require a deeper understanding of specific classes of optimization algorithms and their application in a particular domain.

- Machine Learning Engineer: Develops and deploys machine learning models, a significant part of which involves selecting and tuning optimization algorithms for model training and hyperparameter optimization. They need a strong grasp of gradient-based methods and techniques for large-scale optimization.

- Quantitative Analyst ("Quant"): Primarily in the finance industry, quants develop and implement mathematical models (often involving sophisticated optimization) for trading, risk management, and investment strategies.

- Senior Operations Research Consultant: Works with clients across various industries to solve complex business problems using advanced operations research and optimization techniques. This role often involves leading projects and communicating complex technical solutions to non-technical stakeholders.

- Algorithm Engineer/Scientist: Focuses specifically on designing, developing, and improving optimization algorithms themselves, or highly specialized applications of them, often in tech companies or research-oriented firms.

These roles typically involve more autonomy, responsibility for complex projects, and often mentoring junior team members. Continuous learning and staying abreast of new algorithmic developments are crucial at this stage.

Research and Advanced Development: Pushing the Frontiers

For individuals with a Ph.D. in optimization or a related field, opportunities exist in academic research and advanced industrial research labs. These roles focus on pushing the boundaries of what is possible with optimization algorithms.

- University Professor/Researcher: Conducts fundamental research in optimization theory and algorithms, teaches courses, mentors graduate students, and publishes scholarly articles. They contribute to the core body of knowledge in the field.

- Research Scientist (Industry Labs): Many large technology companies (e.g., Google, Microsoft, Amazon, IBM) and specialized research firms have dedicated research labs where scientists work on developing novel optimization algorithms for challenging problems relevant to the company's products and services. This can involve areas like AI, logistics, cloud computing, or hardware design.

These positions require a deep passion for research, strong mathematical and analytical skills, and the ability to tackle highly complex, often ill-defined problems. The work can be incredibly rewarding, leading to breakthroughs that can have a broad impact.

Freelancing and Consultancy: Applying Expertise Independently

Experienced optimization professionals may also choose to work as independent freelancers or consultants. This path offers flexibility and the opportunity to work on a diverse range of projects across different industries. Consultants might be brought in to solve specific optimization problems, develop custom solutions, provide training, or offer strategic advice on how a company can leverage optimization to improve its operations or products.

Success in freelancing or consultancy requires not only strong technical skills in optimization but also excellent communication, project management, and business development abilities. Building a strong professional network and a portfolio of successful projects is key. While challenging, this can be a very rewarding path for those with an entrepreneurial spirit and a desire to apply their expertise in varied contexts. For those looking to build a portfolio or gain diverse experience, exploring projects and courses on platforms like OpenCourser's Professional Development section can be beneficial.

Emerging Trends and Future Directions in Optimization

The field of optimization is far from static; it is a dynamic and evolving discipline, constantly adapting to new computational paradigms, tackling increasingly complex problems, and integrating with other rapidly advancing technologies, most notably artificial intelligence. For those immersed in or entering this field, particularly at the research or advanced development level, understanding these emerging trends is crucial for staying at the forefront of innovation.

The future of optimization promises exciting developments, driven by the relentless pursuit of more powerful, efficient, and broadly applicable algorithms. These trends often lie at the intersection of mathematics, computer science, and various application domains, reflecting the interdisciplinary nature of modern research.

The Dawn of Quantum Optimization

Quantum optimization algorithms represent a paradigm shift, leveraging the principles of quantum mechanics to potentially solve certain types of optimization problems much faster than classical computers. While still in its early stages of development and facing significant hardware challenges, quantum computing holds the promise of tackling problems currently intractable for even the most powerful supercomputers. Algorithms like the Quantum Approximate Optimization Algorithm (QAOA) and quantum annealing are being explored for their potential in areas like drug discovery, materials science, financial modeling, and complex logistical challenges.

Researchers are actively working on developing new quantum algorithms, understanding which classes of problems are best suited for quantum speedups, and building more stable and scalable quantum hardware. While widespread practical application is likely still some years away, the theoretical and experimental progress is rapid, making this a vibrant area for PhD students and academic researchers. For those interested in the cutting edge, exploring resources like quantum computing courses can provide foundational knowledge.

Federated Learning and Privacy-Preserving Optimization

In an era of increasing data privacy concerns and regulations, there's a growing need for optimization techniques that can learn from distributed datasets without requiring the raw data to be centralized. Federated learning is a machine learning paradigm where multiple decentralized edge devices or servers collaboratively train a model under the coordination of a central server, but without exchanging their local data samples. This requires specialized optimization algorithms that can work effectively in this distributed, privacy-sensitive setting.

Developing robust and efficient optimization algorithms for federated learning involves addressing challenges like communication bottlenecks, statistical heterogeneity of data across devices, and ensuring privacy guarantees. Techniques from differential privacy are often integrated into these optimization methods to provide formal privacy assurances. This intersection of optimization, machine learning, and privacy-enhancing technologies is a critical research area with significant real-world implications, particularly in healthcare, finance, and personal devices.
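
The coordination pattern described above can be sketched with a toy version of federated averaging. In this illustration each client fits a one-parameter linear model on its own data, and the server only ever sees the resulting weights. The client data, learning rate, and round counts are all made up for the example, and the sketch omits the privacy mechanisms (such as differential privacy) and communication concerns that real systems must handle.

```python
import random

def local_update(weight, data, learning_rate=0.05, epochs=5):
    """Run a few gradient steps on one client's private (x, y) pairs.

    Model: y is approximately weight * x; loss: mean squared error.
    """
    for _ in range(epochs):
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= learning_rate * grad
    return weight

def federated_averaging(clients, rounds=20, initial_weight=0.0):
    """Server loop: broadcast the weight, collect local updates, and
    average them weighted by sample count. Raw data stays on the client."""
    weight = initial_weight
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        updates = [(local_update(weight, data), len(data)) for data in clients]
        weight = sum(w * n for w, n in updates) / total
    return weight

# Hypothetical clients holding noisy samples of y = 3x over different x
# ranges, a simple form of statistical heterogeneity.
rng = random.Random(0)

def make_client(n, x_low, x_high):
    return [(x, 3.0 * x + rng.gauss(0, 0.1))
            for x in (rng.uniform(x_low, x_high) for _ in range(n))]

clients = [make_client(20, 0, 1), make_client(50, 1, 2), make_client(10, 0, 2)]
learned = federated_averaging(clients)
print(round(learned, 3))   # expected to land close to the true slope of 3
```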

Synergy with AI-Driven Automated Decision-Making

Optimization algorithms have long been a cornerstone of automated decision-making systems. The current wave of advancements in Artificial Intelligence (AI), particularly in machine learning and deep learning, is creating new synergies and pushing the capabilities of these systems even further. AI models can learn complex patterns and make predictions from data, while optimization algorithms can use these predictions and learned models to make optimal decisions.

For example, an AI might predict customer demand for various products, and an optimization algorithm would then determine the optimal inventory levels and distribution strategy. Reinforcement learning, a type of machine learning where an agent learns to make decisions by interacting with an environment and receiving rewards or penalties, often relies heavily on optimization techniques to find optimal policies. The integration is becoming tighter, with research exploring end-to-end learning and optimization, where the decision-making process itself is learned or refined through AI. According to a McKinsey report, AI adoption continues to grow, and optimization is a key component of many AI applications.

Navigating Ethical Challenges and Algorithmic Bias

As optimization algorithms become more powerful and integrated into critical decision-making processes (e.g., in loan applications, hiring, criminal justice, and resource allocation), the ethical implications and potential for algorithmic bias are receiving increasing attention. An objective function, no matter how mathematically precise, is a human construct and can inadvertently encode societal biases if not carefully designed and audited.

Future research in optimization will increasingly need to address these challenges. This includes developing techniques for fairness-aware optimization, where algorithms explicitly try to mitigate bias and ensure equitable outcomes across different demographic groups. It also involves creating more transparent and interpretable optimization models, so that the decisions they make can be understood and scrutinized. The development of ethical guidelines and regulatory frameworks for the use of optimization and AI in sensitive applications is an ongoing societal and research endeavor.

Ethical Considerations in Optimization Algorithms

As optimization algorithms become increasingly embedded in the fabric of our society, making decisions that affect lives and livelihoods, it is paramount to consider the ethical implications of their design and deployment. While these algorithms are tools designed to achieve specific objectives efficiently, their application is not neutral. The choices made in defining objective functions, selecting data, and interpreting results can have profound societal consequences, sometimes perpetuating or even amplifying existing biases and inequities.

A responsible approach to optimization requires a keen awareness of these ethical dimensions. For practitioners, researchers, and policymakers alike, understanding these challenges is the first step towards developing and deploying optimization technologies in a way that is fair, transparent, and aligned with human values. This is not just a technical challenge but a socio-technical one, requiring interdisciplinary collaboration and ongoing critical reflection.

Bias in Objective Function Design

The objective function is the mathematical representation of the "goal" the optimization algorithm is trying to achieve. However, translating complex, real-world goals into a precise mathematical function can be fraught with challenges and potential biases. If the objective function inadvertently prioritizes certain outcomes over others in a way that disadvantages specific groups, the algorithm, even if perfectly "optimal" with respect to that function, can lead to unfair or discriminatory results.

For example, an algorithm designed to optimize hiring decisions based purely on maximizing predicted job performance might inadvertently discriminate against candidates from underrepresented backgrounds if the historical data used to train the performance prediction model reflects past biases. Similarly, an algorithm optimizing resource allocation for public services might disproportionately favor wealthier neighborhoods if "efficiency" is defined in a way that doesn't account for existing disparities in access. Carefully scrutinizing the assumptions embedded in objective functions and considering multiple, potentially conflicting objectives (multiobjective optimization) are crucial steps in mitigating such biases.
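
One way to make such trade-offs visible is to treat fairness as a second objective rather than folding everything into a single number up front. The sketch below uses hypothetical allocation plans, each scored by cost and by a made-up "disparity" measure (lower is better for both). It first filters out dominated plans, then shows how a weighted-sum objective picks different plans as the weight on disparity changes. The plan data and the weights are purely illustrative.

```python
def pareto_front(options):
    """Return the options that no other option dominates.

    Each option is (name, cost, disparity); lower is better on both.
    Option a dominates b if a is no worse on both objectives and
    strictly better on at least one.
    """
    def dominates(a, b):
        return (a[1] <= b[1] and a[2] <= b[2]) and (a[1] < b[1] or a[2] < b[2])
    return [o for o in options if not any(dominates(other, o) for other in options)]

def weighted_choice(options, fairness_weight):
    """Pick the option minimizing cost + fairness_weight * disparity."""
    return min(options, key=lambda o: o[1] + fairness_weight * o[2])

# Hypothetical plans: (name, cost, disparity score)
plans = [("A", 100, 0.40), ("B", 110, 0.25), ("C", 130, 0.10), ("D", 135, 0.30)]

print([p[0] for p in pareto_front(plans)])   # ['A', 'B', 'C']
print(weighted_choice(plans, 0)[0])          # A: cost is all that counts
print(weighted_choice(plans, 100)[0])        # B
print(weighted_choice(plans, 200)[0])        # C: disparity weighs heavily
```

The "optimal" plan changes with the weight, and that weight is a human judgment, not a mathematical fact. Presenting the whole set of non-dominated options to decision-makers keeps that judgment explicit.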

Environmental Impact of Computationally Intensive Algorithms

Many modern optimization algorithms, particularly those used in large-scale machine learning and complex simulations, can be extremely computationally intensive. Training large deep learning models or running extensive evolutionary algorithms can require vast amounts of energy, contributing to carbon emissions and environmental concerns. This is especially true when these computations are performed in data centers powered by fossil fuels.

There is a growing awareness and concern within the research community about the environmental footprint of AI and large-scale computation. Ethical considerations in optimization should therefore include the pursuit of more energy-efficient algorithms ("green AI" or "green optimization"). This might involve designing algorithms that converge faster with less computation, developing hardware that is more energy-efficient, or making conscious choices about the scale of models and computations undertaken, balancing performance with environmental impact. Researchers and practitioners have a role to play in promoting sustainable computational practices.

Transparency and Interpretability in Automated Decision Systems

When optimization algorithms are used to make high-stakes decisions that significantly impact individuals (e.g., in healthcare, finance, or the justice system), a lack of transparency and interpretability can be a major ethical concern. If an algorithm denies someone a loan or flags them as high-risk, but it's impossible to understand why the algorithm made that decision (the "black box" problem), it undermines accountability and the ability to contest or rectify errors.

There is a growing field of research focused on developing more interpretable machine learning models and optimization techniques. This includes methods for explaining the decisions of complex models, visualizing how algorithms arrive at their solutions, and designing systems where human oversight and intervention are possible. Ensuring that automated decision systems are transparent and that their reasoning can be scrutinized is essential for building trust and ensuring fairness. For those working in these areas, particularly in roles like finance and economics or public policy, understanding these aspects is increasingly important.

Regulatory Frameworks and Compliance

As the societal impact of AI and optimization algorithms grows, governments and regulatory bodies worldwide are beginning to develop frameworks and regulations to govern their use. These regulations may address issues like data privacy (e.g., GDPR), algorithmic bias, transparency, and accountability, particularly in sensitive sectors. For example, the European Union's AI Act is a significant step towards regulating artificial intelligence systems based on their risk level.

Professionals working with optimization algorithms, especially in industries subject to these emerging regulations, need to be aware of their compliance obligations. This may involve conducting impact assessments, ensuring data governance practices are robust, implementing mechanisms for algorithmic auditing, and being prepared to explain how their systems work and what measures are in place to mitigate risks. Ethical considerations are thus not just a matter of good practice but are increasingly becoming a legal and regulatory requirement.

Frequently Asked Questions (FAQs) about Optimization Algorithms

Embarking on a journey to learn about optimization algorithms, or considering a career in this field, naturally brings up many questions. This section aims to address some of the common queries that aspiring learners, career pivoters, and curious individuals might have. We hope these answers provide clarity and further guidance as you explore this fascinating and impactful domain.

What entry-level jobs use optimization algorithms?

Several entry-level roles can involve the use of optimization algorithms, often as part of a broader set of analytical or technical responsibilities. Positions such as Data Analyst, Business Analyst, Operations Analyst, or Junior Industrial Engineer frequently encounter situations where optimization can improve processes or decision-making. For example, an analyst might use tools with built-in optimization capabilities (like Excel Solver) or write simple scripts (perhaps in Python using libraries like SciPy) to solve specific problems related to resource allocation, scheduling, or basic logistics. While these roles may not require designing new algorithms from scratch, they do involve understanding how to formulate problems for optimization and interpret the results. A solid grasp of fundamental optimization concepts can be a significant asset. Many companies in logistics, manufacturing, finance, and consulting hire for such roles.
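As a rough illustration of the kind of simple script mentioned above, here is a minimal sketch of a resource-allocation problem solved with SciPy's linprog. The products, profit figures, and resource limits are made up for the example and are not taken from any real case: two products A and B earn 20 and 30 per unit, and production is limited by 40 machine hours and 60 labor hours.

```python
from scipy.optimize import linprog


def solve_allocation():
    """Maximize profit 20*x + 30*y subject to two resource limits.

    All numbers are hypothetical and chosen only for illustration.
    """
    # linprog minimizes, so negate the profit coefficients to maximize.
    cost = [-20, -30]
    # Each row is one resource constraint of the form a1*x + a2*y <= limit.
    A_ub = [
        [1, 2],  # machine hours used per unit of A and of B
        [3, 2],  # labor hours used per unit of A and of B
    ]
    b_ub = [40, 60]  # available machine hours, available labor hours
    bounds = [(0, None), (0, None)]  # production cannot be negative

    result = linprog(cost, A_ub=A_ub, b_ub=b_ub, bounds=bounds, method="highs")
    if not result.success:
        raise RuntimeError(result.message)
    x, y = result.x
    return x, y, -result.fun  # flip the sign back to get the profit


if __name__ == "__main__":
    units_a, units_b, profit = solve_allocation()
    print(f"Product A: {units_a:.1f}, Product B: {units_b:.1f}, profit: {profit:.1f}")
```

For these assumed numbers the best plan is 10 units of A and 15 units of B, for a profit of 650. Most of the work is in the formulation: deciding what the variables are, writing the objective, and expressing each limit as a constraint. The solver call itself is a single line.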

Can I transition into this field without a strong formal math/CS degree?

Transitioning into a field that heavily utilizes optimization algorithms without a traditional math or computer science degree is challenging but certainly not impossible, especially with the abundance of online learning resources. The key is to be realistic about the type of roles you're targeting and to be prepared to put in significant effort to build the necessary foundational knowledge. For roles that involve applying existing optimization tools and techniques rather than developing new algorithms, a strong portfolio of projects and practical skills gained through online courses and self-study can be very compelling.

Focus on building a solid understanding of core mathematical concepts like calculus and linear algebra, and gain proficiency in programming (Python is highly recommended). Online platforms like OpenCourser offer numerous courses in these prerequisite areas as well as introductory optimization. Start with practical applications and gradually delve deeper into the theory. If you're aiming for research or highly specialized algorithmic development roles, a formal advanced degree often becomes more critical. However, for many industry positions, demonstrated ability and a passion for problem-solving can open doors. Be prepared to start in a more general analytical role and specialize over time.

How competitive are research positions in optimization (academia and industry labs)?

Research positions in optimization, whether in academia (e.g., professorships, postdoctoral fellowships) or industry research labs (e.g., at companies like Google Research, Microsoft Research, IBM Research), are generally very competitive. These roles typically require a Ph.D. in optimization, operations research, computer science, applied mathematics, or a closely related field from a reputable institution.

Successful candidates usually have a strong publication record in top-tier journals and conferences, demonstrating original contributions to the field. They need deep theoretical knowledge, excellent mathematical and algorithmic skills, and, in many cases, strong programming abilities. For industry research labs, there is often an added emphasis on the potential for research to translate into impactful applications for the company. Networking, presenting at conferences, and securing strong letters of recommendation are also crucial. Although competition is intense, demand for strong optimization researchers remains high due to the increasing complexity of problems and the drive for innovation in areas like AI and large-scale data analysis.

What industries hire the most optimization specialists?

Optimization specialists are sought after in a wide range of industries due to the universal need to improve efficiency, reduce costs, and make better decisions. Some of the industries that most actively hire optimization specialists include:

- Technology: Companies involved in software development, e-commerce, social media, search engines, and cloud computing hire optimization experts for everything from algorithm design for their core products (e.g., recommendation systems, ad serving, logistics for cloud resources) to improving their internal operations.

- Logistics and Supply Chain Management: This sector is a massive employer of optimization talent, with applications in vehicle routing, warehouse location, inventory control, and network design.

- Finance: Banks, investment firms, hedge funds, and financial technology (FinTech) companies use optimization for portfolio management, risk assessment, algorithmic trading, and fraud detection.

- Manufacturing: Optimization is crucial for production planning, scheduling, resource allocation, quality control, and facility layout.

- Energy: The energy sector uses optimization for grid management, resource extraction, renewable energy integration, and energy trading.

- Consulting: Management and technical consulting firms hire optimization specialists to help clients across various industries solve complex operational and strategic problems.

- Aerospace and Automotive: For design optimization, production processes, and autonomous systems.

- Healthcare: For treatment planning, resource scheduling, drug discovery, and healthcare system optimization.

The U.S. Bureau of Labor Statistics highlights that operations research analysts, who heavily use optimization, find employment across professional, scientific, and technical services, finance and insurance, manufacturing, and government.

Are optimization skills transferable to data science?

Yes, optimization skills are highly transferable and, in many ways, foundational to data science. Many core data science tasks, particularly in machine learning, are inherently optimization problems. For example, training a machine learning model involves finding the model parameters that minimize a loss function (an objective function) on the training data. Understanding optimization algorithms like gradient descent and its variants is therefore crucial for machine learning practitioners.
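To make the link between model training and optimization concrete, here is a minimal sketch of gradient descent fitting a straight line by minimizing mean squared error. The function name fit_line, the learning rate, the step count, and the toy data are all assumptions chosen for illustration. Real machine learning libraries use more elaborate variants of the same idea.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y ~ w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Residuals of the current model on the training data.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of the mean squared error with respect to w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Step against the gradient to reduce the loss.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


if __name__ == "__main__":
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # toy data generated from y = 2x + 1
    w, b = fit_line(xs, ys)
    print(f"w = {w:.3f}, b = {b:.3f}")
```

Here the loss function plays the role of the objective function and the parameters w and b are the decision variables. On this toy data the fitted values approach w = 2 and b = 1. The learning rate matters: too large a value makes the updates diverge, and too small a value makes convergence slow.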

Furthermore, data scientists often need to solve problems related to resource allocation, A/B testing design, feature selection, and model interpretation, all of which can benefit from optimization techniques. The analytical and problem-solving mindset developed through studying optimization—breaking down complex problems, formulating them mathematically, and finding systematic solutions—is also directly applicable to data science. While data science is a broader field encompassing statistics, data visualization, and domain expertise, a strong grounding in optimization can be a significant advantage and a key differentiator. Many data scientists have backgrounds in operations research, applied math, or computer science, where optimization is a core component. Browsing courses in Data Science on OpenCourser can show this overlap.

How does AI automation affect career prospects in optimization?

The rise of AI and automation presents both challenges and opportunities for career prospects in optimization. On one hand, AI tools can automate some of the more routine tasks involved in applying optimization, and AutoML (automated machine learning) platforms can automate aspects of model selection and hyperparameter tuning, which are themselves optimization tasks. This might suggest a reduction in demand for certain types of optimization work.

However, AI is also creating new and more complex optimization problems. Designing, training, and deploying sophisticated AI systems often requires advanced optimization expertise. Moreover, the integration of AI with optimization is leading to more powerful decision-making tools across industries, increasing the demand for professionals who can work at this intersection. The focus may shift from routine application to more specialized roles involving the development of novel optimization algorithms for AI, ensuring the ethical and robust deployment of AI-driven optimization, and solving the highly complex optimization challenges that arise in large-scale AI systems. As Erik Brynjolfsson and Andrew McAfee noted in a Harvard Business Review article, "AI won't replace managers, but managers who use AI will replace those who don't." A similar principle applies: AI is unlikely to replace optimization experts, but optimization experts who can leverage AI will be increasingly valuable.

Concluding Thoughts

Optimization algorithms are a cornerstone of modern science, engineering, and business, providing the tools to make informed decisions and find the best possible solutions in a world of complex choices and constraints. From the foundational principles of calculus and linear algebra to the cutting-edge research in quantum computing and AI-driven optimization, this field offers a rich intellectual landscape and a wealth of opportunities for those willing to engage with its challenges.

Whether you are a student embarking on your educational journey, a professional considering a career shift, or simply a curious learner, the path to understanding optimization algorithms is one that rewards diligence and critical thinking. The skills acquired are not only valuable in specialized roles but also enhance problem-solving capabilities across a wide spectrum of disciplines. As technology continues to evolve and the demand for efficiency and intelligence in decision-making grows, the importance of optimization will only continue to expand. We encourage you to explore the resources available, including the vast catalog of courses and books on OpenCourser, and to consider the exciting possibilities that a deeper understanding of optimization algorithms can unlock.