Linear Algebra

Linear Algebra: A Foundational Pillar of Modern Quantitative Fields

Linear algebra is a branch of mathematics concerning vector spaces and linear mappings between such spaces. It includes the study of lines, planes, and subspaces, but is also concerned with properties common to all vector spaces. At its core, linear algebra deals with systems of linear equations, matrices, determinants, vector spaces, and linear transformations. While its origins can be traced back to the study of solving systems of linear equations, its concepts and techniques have become fundamental across numerous scientific and engineering disciplines.

Understanding linear algebra unlocks the ability to model and solve complex problems involving relationships between multiple variables. This mathematical toolkit is essential for anyone working with data, simulations, or optimization problems. The elegance of expressing intricate systems through matrices and vectors, and the power to manipulate these structures to find solutions or gain insights, are compelling aspects for many learners. Furthermore, its foundational role in cutting-edge fields like machine learning and quantum computing makes it an exciting area of study with significant real-world impact.

What is Linear Algebra?

Definition and Historical Context

Linear algebra is fundamentally the study of vectors, vector spaces (also called linear spaces), linear transformations, and systems of linear equations. Vectors, often visualized as arrows possessing both magnitude and direction, are elements of vector spaces. Matrices, rectangular arrays of numbers, provide a powerful way to represent and work with linear transformations – functions that preserve vector addition and scalar multiplication.

The history of linear algebra stretches back centuries, with early work on solving systems of linear equations found in ancient Babylonian and Chinese texts. Key developments occurred in the 17th century with the introduction of determinants by Seki Kōwa and Gottfried Leibniz, used initially to determine whether systems of linear equations had unique solutions. The 19th century saw the formalization of matrices and vector spaces by mathematicians like Arthur Cayley, James Joseph Sylvester, and Hermann Grassmann. The term "linear algebra" itself emerged later, reflecting the focus on linear combinations and transformations.

Over time, the focus shifted from merely solving equations to understanding the underlying structures and properties of vector spaces and transformations. This abstraction proved incredibly powerful, allowing the same mathematical framework to be applied to diverse problems across mathematics, science, and engineering. Today, linear algebra is an indispensable tool built upon centuries of mathematical refinement.

Key Motivations for Studying Linear Algebra

One primary motivation for studying linear algebra is its immense applicability. It provides the mathematical language and computational tools necessary to handle problems involving multiple interacting variables, which are ubiquitous in the real world. From modeling complex physical systems and analyzing large datasets to creating realistic computer graphics and optimizing resource allocation, linear algebra offers a systematic approach.

Beyond specific applications, linear algebra develops crucial abstract reasoning and problem-solving skills. Learning to think in terms of vector spaces and linear transformations cultivates an ability to identify underlying structures and relationships in complex scenarios. This mode of thinking transcends specific mathematical problems and enhances analytical capabilities applicable to many domains.

Furthermore, linear algebra serves as a critical prerequisite for many advanced areas of study, particularly in quantitative fields. Understanding concepts like eigenvalues, eigenvectors, and matrix decompositions is essential for delving into machine learning, data science, advanced statistics, quantum mechanics, control theory, and numerical analysis. Mastering linear algebra opens doors to deeper engagement with these cutting-edge disciplines.

Relationship to Other Mathematical Fields

Linear algebra does not exist in isolation; it is deeply intertwined with other branches of mathematics. Its most immediate connection is perhaps with calculus, particularly multivariable calculus. Concepts like derivatives and integrals of vector-valued functions rely heavily on linear algebraic ideas. The Jacobian matrix, composed of partial derivatives, represents the best linear approximation of a multivariable function near a given point, showcasing a direct link between differential calculus and linear transformations.

Abstract algebra, which studies algebraic structures like groups, rings, and fields, provides the formal framework for vector spaces. While linear algebra focuses specifically on vector spaces and linear maps, abstract algebra explores broader algebraic concepts. However, many techniques and ideas, such as the study of homomorphisms (structure-preserving maps), are shared between the two fields.

Functional analysis extends the methods of linear algebra and calculus to function spaces, which are infinite-dimensional vector spaces. Concepts like norms, inner products, and linear operators are generalized to these infinite-dimensional settings. Numerical analysis heavily relies on linear algebra for developing algorithms to solve large systems of linear equations, find eigenvalues, and perform matrix computations efficiently and accurately, often dealing with issues like stability and conditioning that arise in practical computation.

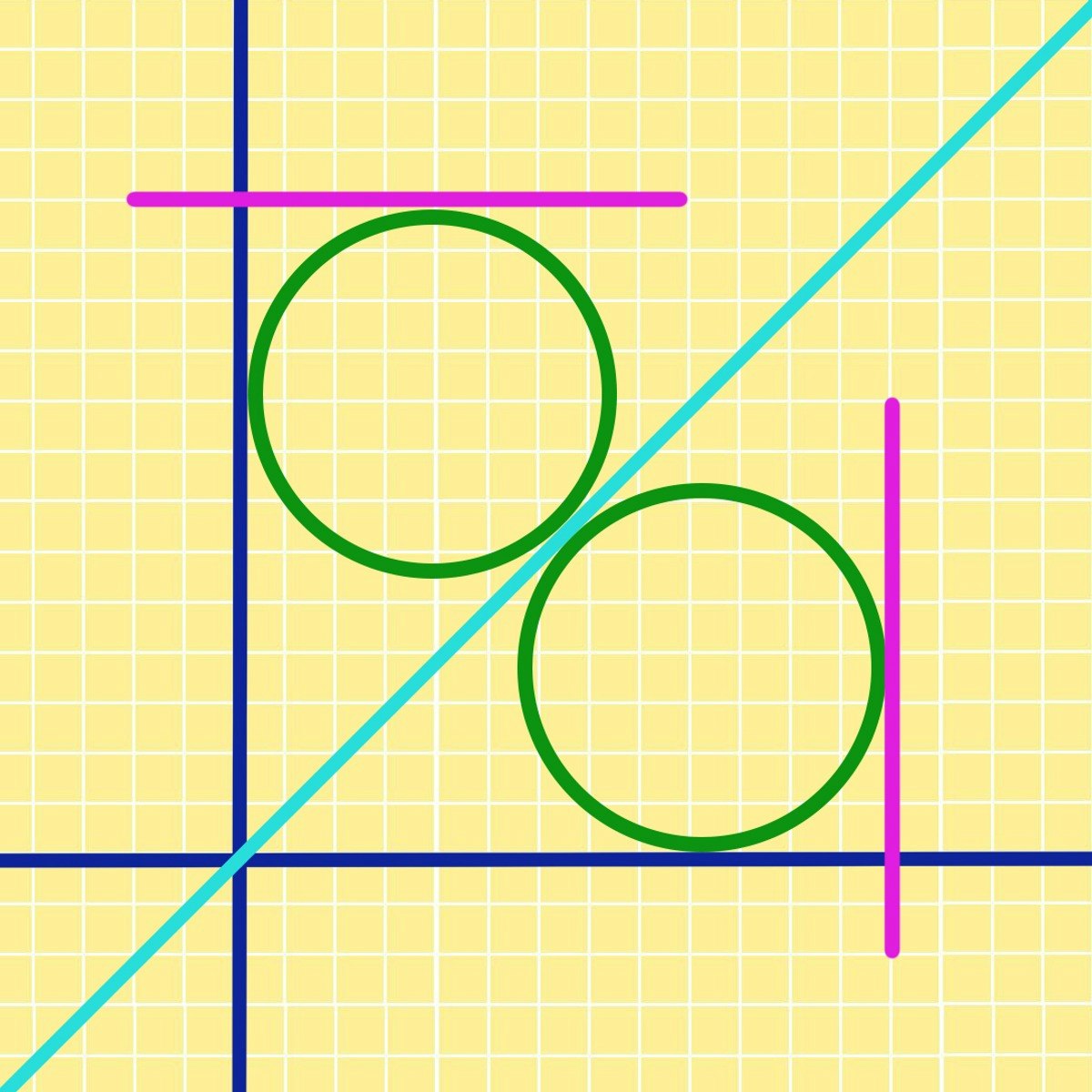

Geometry also has strong ties to linear algebra. Vector spaces provide the setting for Euclidean geometry, and linear transformations correspond to geometric operations like rotations, reflections, and scaling. Projective geometry and differential geometry also utilize linear algebraic tools extensively.

Real-World Relevance Across Disciplines

The principles of linear algebra find application in an astonishingly wide array of fields. In computer science, it's fundamental to computer graphics for transformations (rotation, scaling, translation) of objects in 3D space, image processing, and algorithm design. Search engines use eigenvalues and eigenvectors to rank web pages: Google's PageRank algorithm, for example, scores pages using the principal eigenvector of a matrix built from the web's link structure.
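
PageRank's central idea can be sketched as power iteration on a column-stochastic "Google matrix". This is a minimal illustration, assuming a tiny hypothetical four-page link graph in which every page has at least one outgoing link, and a damping factor of 0.85 (the value commonly quoted for PageRank); it is not a description of how any production search engine works.

```python
import numpy as np

# Hypothetical 4-page web: links[i] lists the pages that page i links to.
links = {0: [1, 2], 1: [2], 2: [0], 3: [0, 2]}
n = len(links)
d = 0.85  # damping factor (assumed)

# Column-stochastic link matrix: M[j, i] = 1 / outdegree(i) when page i links to page j.
M = np.zeros((n, n))
for i, targets in links.items():
    for j in targets:
        M[j, i] = 1.0 / len(targets)

# "Google matrix": follow a link with probability d, jump to a random page otherwise.
G = d * M + (1 - d) / n * np.ones((n, n))

# Power iteration: apply G repeatedly until the score vector stops changing.
r = np.full(n, 1.0 / n)
for _ in range(1000):
    r_next = G @ r
    converged = np.abs(r_next - r).sum() < 1e-12
    r = r_next
    if converged:
        break

print(np.round(r, 4))
print(np.allclose(G @ r, r))  # r is an eigenvector of G with eigenvalue 1
```

Page 3 has no incoming links, so its score settles at the random-jump floor (1 - d)/n.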

In engineering disciplines, linear algebra is used to solve systems of equations that arise in circuit analysis, structural analysis (finite element method), fluid dynamics, control theory (state-space representation), and signal processing (the discrete Fourier transform, for example, is a linear transformation that can be written as a matrix). Optimization problems, common in logistics and operations research, frequently rely on linear programming, a subfield deeply rooted in linear algebra.

Data science and machine learning are heavily dependent on linear algebra. Techniques like Principal Component Analysis (PCA) for dimensionality reduction, regression analysis for modeling relationships, and the formulation of algorithms like Support Vector Machines (SVMs) and neural networks all rely extensively on matrix operations, vector spaces, and eigenvalue problems. Economics and finance use linear algebra for portfolio optimization, econometric modeling, and analyzing systems of economic interactions.

Even fields like physics and biology utilize linear algebra. Quantum mechanics is formulated almost entirely in the language of linear algebra: states are vectors in Hilbert spaces, measurable quantities are linear operators, and the eigenvectors of such an operator are the states in which that quantity has a definite value. In biology, it can be used in population modeling, computational neuroscience, and analyzing genetic data.

Core Concepts in Linear Algebra

Vectors and Vector Spaces

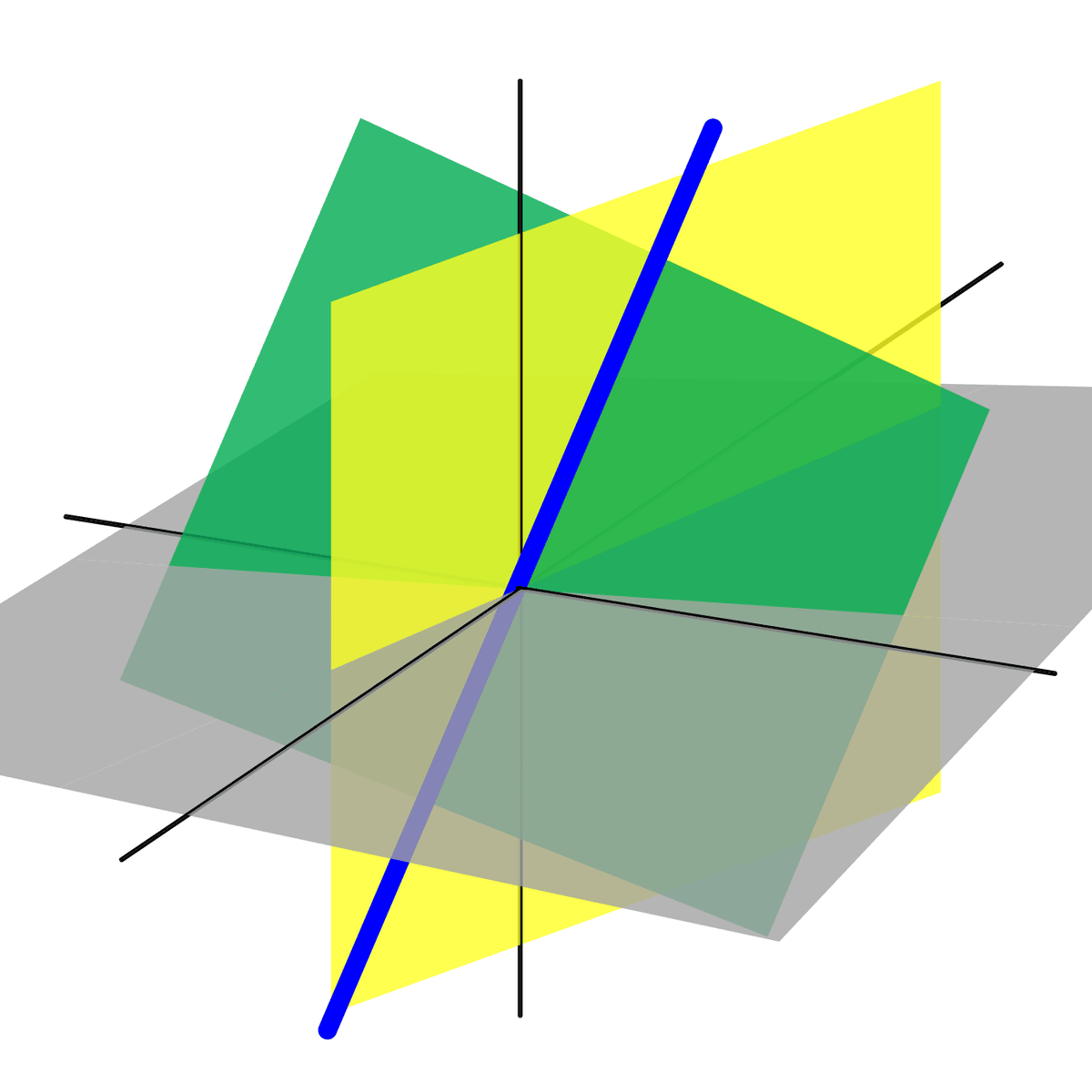

At the heart of linear algebra lies the concept of a vector. Intuitively, in two or three dimensions, a vector can be pictured as an arrow starting from the origin, possessing both a length (magnitude) and a direction. Mathematically, vectors are often represented as ordered lists of numbers called components, like (x, y) in 2D or (x, y, z) in 3D. However, the concept is more general.

A vector space is a collection of objects called vectors, which can be added together and multiplied ("scaled") by numbers called scalars (typically real or complex numbers). These operations must satisfy certain axioms, such as commutativity and associativity of addition, existence of a zero vector, and distributive properties. Common examples include the set of all 2D vectors (denoted R²), the set of all 3D vectors (R³), and more generally, Rⁿ (vectors with n real number components). But vector spaces can be more abstract, encompassing objects like polynomials up to a certain degree, or continuous functions defined on an interval.

Key concepts within vector spaces include linear combinations (forming new vectors by adding scaled versions of existing vectors), span (the set of all possible linear combinations of a set of vectors), linear independence (a set of vectors where none can be expressed as a linear combination of the others), and basis (a linearly independent set of vectors that spans the entire space). The dimension of a vector space is the number of vectors in any basis for that space.
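
These definitions can be checked numerically: a list of vectors is linearly independent exactly when the matrix having them as columns has rank equal to the number of vectors, and that rank is also the dimension of their span. The sketch below relies on NumPy's `np.linalg.matrix_rank`, which computes a numerical rank with a floating-point tolerance; the helper names are illustrative, not part of any library.

```python
import numpy as np

def is_linearly_independent(vectors):
    """Independent iff the matrix with these vectors as columns has full column rank."""
    A = np.column_stack(vectors)
    return np.linalg.matrix_rank(A) == A.shape[1]

def span_dimension(vectors):
    """The dimension of the span equals the rank of the column matrix."""
    return int(np.linalg.matrix_rank(np.column_stack(vectors)))

u = np.array([1.0, 0.0, 0.0])
v = np.array([0.0, 1.0, 0.0])
w = u + 2 * v                    # a linear combination of u and v
e3 = np.array([0.0, 0.0, 1.0])

print(is_linearly_independent([u, v]))      # True
print(is_linearly_independent([u, v, w]))   # False: w = u + 2v
print(span_dimension([u, v, w]))            # 2: the span is a plane
print(is_linearly_independent([u, v, e3]))  # True: a basis of R^3
```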

Matrices and Matrix Operations

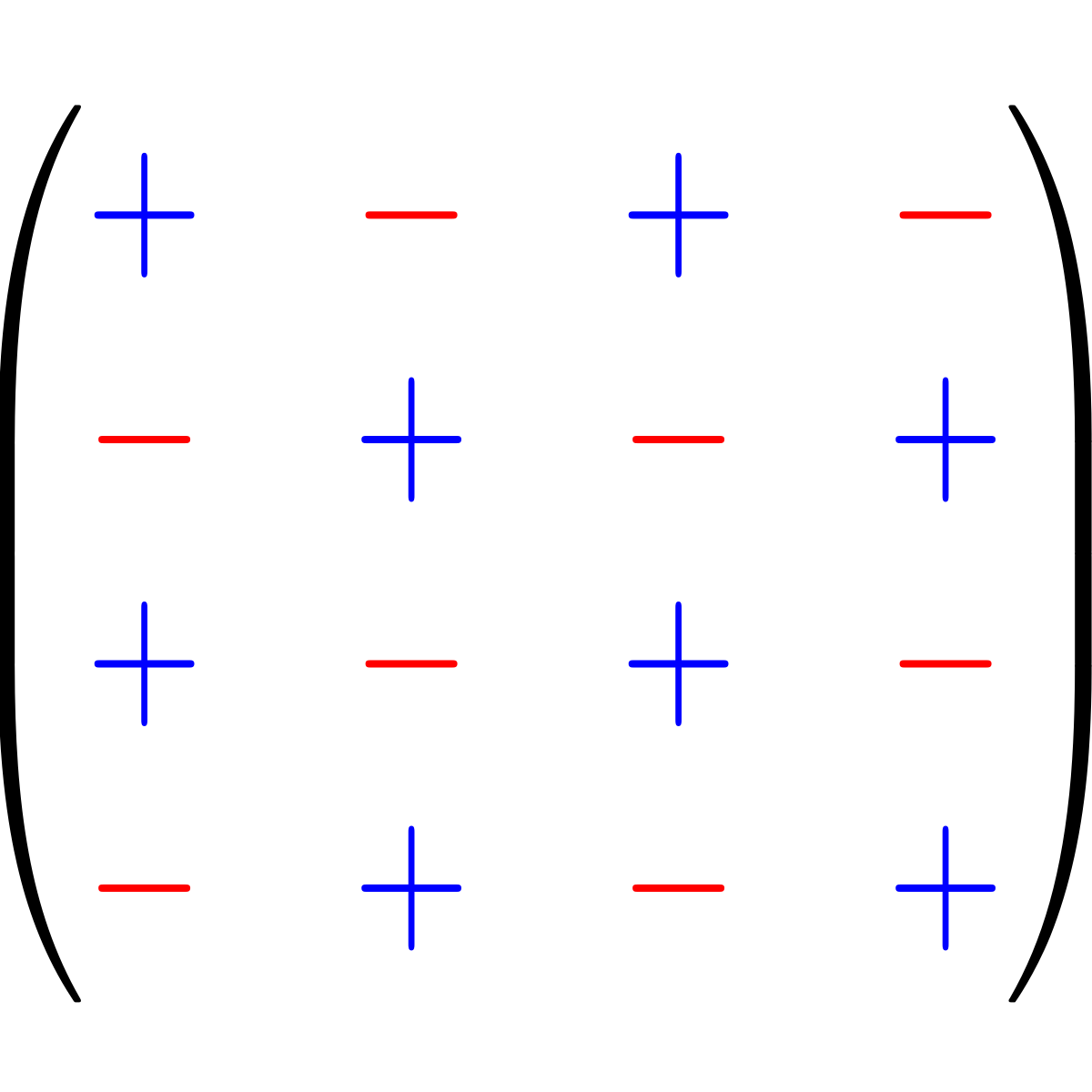

A matrix is a rectangular array of numbers, symbols, or expressions, arranged in rows and columns. Matrices provide a compact way to represent and manipulate systems of linear equations and linear transformations. An m × n matrix has m rows and n columns. Individual entries are identified by their row and column index; the entry in row i and column j is usually written aᵢⱼ.

Basic matrix operations include addition and subtraction (performed element-wise on matrices of the same dimensions) and scalar multiplication (multiplying every entry by a scalar). Matrix multiplication is more complex: the product AB is defined only if the number of columns in matrix A equals the number of rows in matrix B. The resulting matrix has the number of rows of A and the number of columns of B. Matrix multiplication corresponds to the composition of linear transformations and is not commutative: in general AB ≠ BA, even when both products are defined.

Other important matrix concepts include the transpose (swapping rows and columns), the identity matrix (a square matrix with ones on the main diagonal and zeros elsewhere, acting like the number 1 in multiplication), and the inverse of a square matrix (if it exists, denoted A⁻¹, such that AA⁻¹ = A⁻¹A = I, the identity matrix). The determinant is a scalar value associated with a square matrix, indicating properties like invertibility (a matrix is invertible if and only if its determinant is non-zero) and the scaling factor of the associated linear transformation.
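
A short NumPy sketch of these operations, using arbitrary 2 × 2 matrices chosen for illustration. Reversing the order of a product changes the result, and a matrix whose rows are proportional has determinant zero, so NumPy refuses to invert it.

```python
import numpy as np

A = np.array([[1.0, 2.0],
              [3.0, 4.0]])
B = np.array([[0.0, 1.0],
              [1.0, 0.0]])  # a permutation matrix

AB = A @ B  # right-multiplying by B swaps the columns of A
BA = B @ A  # left-multiplying by B swaps the rows of A
print(AB, BA, np.array_equal(AB, BA), sep="\n")  # not commutative

print(A.T)  # transpose: rows become columns

I = np.eye(2)              # 2 x 2 identity matrix
det_A = np.linalg.det(A)   # 1*4 - 2*3 = -2 (up to rounding)
A_inv = np.linalg.inv(A)   # exists because det_A != 0
print(det_A, np.allclose(A @ A_inv, I), np.allclose(A_inv @ A, I))

C = np.array([[1.0, 2.0],
              [2.0, 4.0]])  # second row is twice the first
try:
    np.linalg.inv(C)
except np.linalg.LinAlgError:
    print("C is singular; det(C) =", np.linalg.det(C))
```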

These operations form the computational backbone for solving many problems in linear algebra.

Systems of Linear Equations

One of the original motivations for developing linear algebra was solving systems of linear equations. In a linear equation, each variable appears only to the first power and is never multiplied by another variable, as in 2x + 3y = 7. A system of linear equations is a collection of linear equations involving the same set of variables, such as:

$$
\begin{aligned}
a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= b_1\\
a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= b_2\\
&\;\;\vdots\\
a_{m1}x_1 + a_{m2}x_2 + \cdots + a_{mn}x_n &= b_m
\end{aligned}
$$

The goal is to find values for the variables x₁, x₂, ..., xₙ that simultaneously satisfy all equations. Such systems can have exactly one solution, infinitely many solutions, or no solution.

Linear algebra provides powerful tools for analyzing and solving these systems. The entire system can be represented concisely using matrix notation as Ax = b, where A is the matrix of coefficients (aᵢⱼ), x is the column vector of variables, and b is the column vector of constants. Techniques like Gaussian elimination (or row reduction) systematically manipulate the augmented matrix [A | b] using elementary row operations (swapping rows, multiplying a row by a non-zero scalar, adding a multiple of one row to another) to transform it into row-echelon form, from which the solution(s) can be easily determined.
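
Row reduction can be sketched in a few lines. This is a teaching sketch, assuming a square, nonsingular coefficient matrix: it uses row swaps (partial pivoting, a common numerical safeguard) and row additions to reach row-echelon form, then recovers the solution by back substitution, where dividing by each pivot plays the role of row scaling. The function name `gaussian_solve` is hypothetical; in practice `np.linalg.solve`, which calls LAPACK, is the better choice.

```python
import numpy as np

def gaussian_solve(A, b):
    """Solve Ax = b for a square, nonsingular A by row-reducing [A | b]."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float).reshape(-1, 1)
    M = np.hstack([A, b])  # augmented matrix [A | b]
    n = M.shape[0]

    # Forward elimination to row-echelon (upper triangular) form.
    for k in range(n):
        # Row swap: move the largest available pivot into row k (partial pivoting).
        p = k + int(np.argmax(np.abs(M[k:, k])))
        if np.isclose(M[p, k], 0.0):
            raise ValueError("matrix is singular or nearly singular")
        M[[k, p]] = M[[p, k]]
        # Row addition: subtract multiples of row k to zero out column k below it.
        for i in range(k + 1, n):
            M[i] -= (M[i, k] / M[k, k]) * M[k]

    # Back substitution, dividing by each pivot (the row-scaling step).
    x = np.zeros(n)
    for i in range(n - 1, -1, -1):
        x[i] = (M[i, -1] - M[i, i + 1:n] @ x[i + 1:]) / M[i, i]
    return x

A = [[2, 1, -1], [-3, -1, 2], [-2, 1, 2]]
b = [8, -11, -3]
x = gaussian_solve(A, b)
print(x)  # approximately [2, 3, -1]
print(np.allclose(x, np.linalg.solve(A, b)))
```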

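Understanding the relationship between the matrix A, the vector b, and the nature of the solution set (unique, infinite, none) is a central theme. The rank of a matrix (the dimension of the space spanned by its columns, which always equals the dimension of the space spanned by its rows) settles the question: the system has a solution exactly when A and the augmented matrix [A | b] have the same rank, and that solution is unique exactly when this common rank also equals the number of unknowns.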
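
The rank test for the three possible outcomes can be sketched as follows: Ax = b is consistent exactly when rank(A) equals rank([A | b]), and the solution is unique when that rank also equals the number of unknowns (the Rouché–Capelli theorem). The helper `classify_system` is a hypothetical illustration relying on NumPy's floating-point rank, so it is only trustworthy for well-conditioned examples like these.

```python
import numpy as np

def classify_system(A, b):
    """Classify Ax = b by comparing the ranks of A and [A | b]."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float).reshape(-1, 1)
    rank_A = np.linalg.matrix_rank(A)
    rank_Ab = np.linalg.matrix_rank(np.hstack([A, b]))
    n_unknowns = A.shape[1]
    if rank_A < rank_Ab:
        return "no solution"
    if rank_A == n_unknowns:
        return "unique solution"
    return "infinitely many solutions"

print(classify_system([[1, 1], [1, -1]], [2, 0]))  # two lines crossing at one point
print(classify_system([[1, 1], [2, 2]], [2, 4]))   # the same line written twice
print(classify_system([[1, 1], [1, 1]], [2, 3]))   # two parallel lines
```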

Eigenvalues and Eigenvectors

Eigenvalues and eigenvectors are fundamental concepts associated with square matrices and linear transformations. For a given square matrix A, an eigenvector v is a non-zero vector that, when multiplied by A, results in a scaled version of the original vector v. The scaling factor is the eigenvalue λ (lambda). Mathematically, this relationship is expressed as Av = λv.

In essence, eigenvectors represent directions that remain unchanged (except for scaling) by the linear transformation represented by matrix A. The corresponding eigenvalue λ indicates the factor by which the eigenvector is stretched or shrunk along that direction. A negative eigenvalue implies a reversal of direction.

Finding eigenvalues and eigenvectors involves solving the characteristic equation det(A - λI) = 0, where I is the identity matrix and det denotes the determinant. The solutions λ to this polynomial equation are the eigenvalues. For each eigenvalue λ, the corresponding eigenvectors v are found by solving the system of linear equations (A - λI)v = 0.
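
For a 2 × 2 example, the characteristic-polynomial route and a library eigen-solver can be compared directly. The matrix is an arbitrary symmetric example; `np.poly(A)` returns the coefficients of the monic characteristic polynomial, and `np.linalg.eig` returns eigenvectors as the columns of its second result. Numerical libraries do not normally find eigenvalues by root-finding on the characteristic polynomial, which becomes ill-conditioned for larger matrices; this is only a consistency check.

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

# det(A - λI) = (2 - λ)^2 - 1 = λ^2 - 4λ + 3 = (λ - 1)(λ - 3)
char_poly = np.poly(A)      # coefficients of the characteristic polynomial: [1, -4, 3]
print(np.roots(char_poly))  # its roots are the eigenvalues

eigenvalues, eigenvectors = np.linalg.eig(A)
for lam, v in zip(eigenvalues, eigenvectors.T):  # eigenvectors are stored as columns
    print(lam, v, np.allclose(A @ v, lam * v))   # checks Av = λv
```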

Eigenvalues and eigenvectors have numerous applications. They are used in stability analysis of differential equations, vibration analysis in mechanics, data compression techniques like Principal Component Analysis (PCA), quantum mechanics (where the eigenvalues of an observable are the possible results of measuring it), and algorithms like Google's PageRank. Understanding the eigen-structure of a matrix reveals deep insights into its behavior as a transformation.

ELI5: Vectors, Matrices, and Systems

Imagine you're giving directions. A vector is like one step of those directions: "Go 3 blocks East and 2 blocks North." It has a distance (how far) and a direction. You can add vectors: if the next step is "Go 1 block East and 1 block South," adding them tells you the total displacement from the start.

Now, imagine you have a lot of data, like scores for different students on different tests. A matrix is like a grid or spreadsheet organizing this data neatly in rows (students) and columns (tests). Matrices help us work with large sets of numbers together. We can do things like add matrices (combining scores from two sets of tests) or multiply them, which is a bit more complex but helps transform the data in useful ways.

Finally, think about solving a puzzle from several clues. A system of linear equations is like having several clues (equations) about some unknown quantities (the variables). For example, "2 apples + 3 bananas cost $7" and "1 apple + 1 banana costs $3". Solving the system means finding the price of one apple and one banana that makes both statements true (here, $2 per apple and $1 per banana). Linear algebra gives us organized methods, often using matrices, to find that single point where all the clues intersect, or to discover that there are many such points, or none at all.
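
The apples-and-bananas clues translate directly into a 2 × 2 system Ax = b, which NumPy can solve in one call:

```python
import numpy as np

# Unknowns: x = [price of one apple, price of one banana]
A = np.array([[2.0, 3.0],   # 2 apples + 3 bananas
              [1.0, 1.0]])  # 1 apple  + 1 banana
b = np.array([7.0, 3.0])    # total cost of each basket, in dollars

prices = np.linalg.solve(A, b)
print(prices)  # [2. 1.]: an apple costs $2, a banana $1
```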

Applications of Linear Algebra

Computer Graphics and Game Development

Linear algebra is indispensable in the world of computer graphics and game development. Virtually every visual element you see on screen, from the movement of characters to the rendering of complex 3D environments, relies heavily on its principles. Transformations like translation (moving), rotation (turning), and scaling (resizing) of objects are represented and computed using matrices and vectors.

For instance, a 3D model is typically defined by a collection of vertices (points in 3D space, represented as vectors). To move the model, you add a translation vector to each vertex. To rotate it around an axis, you multiply each vertex vector by a rotation matrix. To scale it, you use a scaling matrix. Because translation is not a linear transformation, graphics systems usually work in homogeneous coordinates, where each 3D point gets a fourth coordinate and every transformation, translation included, becomes a 4 × 4 matrix; a sequence of transformations can then be combined by multiplying their matrices together. Perspective projection, which makes distant objects appear smaller to create a sense of depth, is likewise expressed as a 4 × 4 matrix, followed by division by the fourth coordinate.
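
Translation becomes a matrix product once each point carries a fourth homogeneous coordinate, so scaling, rotation, and translation can be folded into a single 4 × 4 matrix. A minimal sketch, assuming the column-vector convention (the rightmost matrix is applied first); some engines use row vectors and reverse the order. The helper functions and the two sample vertices are hypothetical.

```python
import numpy as np

def translation(tx, ty, tz):
    T = np.eye(4)
    T[:3, 3] = [tx, ty, tz]  # offsets go in the last column
    return T

def rotation_z(theta):
    c, s = np.cos(theta), np.sin(theta)
    R = np.eye(4)
    R[:2, :2] = [[c, -s], [s, c]]  # rotate in the xy-plane about the z-axis
    return R

def scaling(sx, sy, sz):
    return np.diag([sx, sy, sz, 1.0])

# Two hypothetical vertices, one per row, in homogeneous coordinates (w = 1).
vertices = np.array([[1.0, 0.0, 0.0, 1.0],
                     [0.0, 1.0, 0.0, 1.0]])

# Scale by 2, rotate 90 degrees about z, then translate 5 units along x.
# With column vectors the rightmost matrix acts first; M is one combined transform.
M = translation(5, 0, 0) @ rotation_z(np.pi / 2) @ scaling(2, 2, 2)

transformed = (M @ vertices.T).T
print(np.round(transformed[:, :3], 6))  # rows (5, 2, 0) and (3, 0, 0)
```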

Beyond basic transformations, linear algebra is used in lighting calculations (dot products determine angles between surfaces and light sources), camera positioning, collision detection, and character animations (skeletal animation often involves hierarchies of matrix transformations). Efficient computation of these operations is critical for real-time rendering in games, driving the development of specialized hardware (GPUs) optimized for parallel vector and matrix calculations.
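
The dot-product lighting calculation can be illustrated with the Lambertian diffuse model, one common shading model (assumed here; real renderers combine it with other terms such as specular highlights). Brightness is proportional to the cosine of the angle between the unit surface normal and the unit direction toward the light, clamped at zero for surfaces facing away. The function name `lambert_diffuse` is hypothetical.

```python
import numpy as np

def lambert_diffuse(normal, light_dir, light_intensity=1.0):
    """Diffuse brightness: intensity times cos(angle between normal and light direction)."""
    n = normal / np.linalg.norm(normal)
    l = light_dir / np.linalg.norm(light_dir)  # direction from the surface toward the light
    return light_intensity * max(0.0, float(np.dot(n, l)))  # surfaces facing away get 0

up = np.array([0.0, 0.0, 1.0])
print(lambert_diffuse(up, np.array([0.0, 0.0, 1.0])))   # light head-on: full brightness
print(lambert_diffuse(up, np.array([1.0, 0.0, 1.0])))   # 45 degrees: about 0.707
print(lambert_diffuse(up, np.array([0.0, 0.0, -1.0])))  # light from behind: 0
```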

Machine Learning Algorithms

Linear algebra forms the mathematical bedrock upon which much of modern machine learning (ML) is built. Data in ML is typically represented as vectors (for individual data points) or matrices (for entire datasets, where rows might be samples and columns are features). Many ML algorithms involve operations on these vectors and matrices.

Linear regression, a fundamental ML technique, models the relationship between variables by fitting a linear equation to observed data. Fitting is a least-squares problem, solved either directly through the normal equations (XᵀX)β = Xᵀy or iteratively with gradient descent, whose updates are themselves matrix-vector computations. Dimensionality reduction techniques, such as Principal Component Analysis (PCA), use eigenvalues and eigenvectors of covariance matrices to find the directions of maximum variance in the data, allowing complex datasets to be represented more simply while retaining essential information.
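
Both techniques reduce to a few matrix operations. The sketch below fits a line to synthetic data (generated here purely for illustration) via the normal equations and via `np.linalg.lstsq`, which is generally preferred numerically, then runs PCA by eigen-decomposing the covariance matrix with `np.linalg.eigh`.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (an assumption for illustration): y = 1 + 2x plus a little noise.
x = rng.uniform(0, 10, size=50)
y = 1.0 + 2.0 * x + rng.normal(scale=0.5, size=50)
X = np.column_stack([np.ones_like(x), x])  # design matrix with an intercept column

# Normal equations (X^T X) beta = X^T y, solved as a linear system.
beta_normal = np.linalg.solve(X.T @ X, X.T @ y)
# A numerically sturdier least-squares solver for the same problem.
beta_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)
print(beta_normal, beta_lstsq)  # both close to [1, 2]

# PCA: eigen-decompose the covariance matrix of the centered 2D data.
data = np.column_stack([x, y])
centered = data - data.mean(axis=0)
cov = np.cov(centered, rowvar=False)
eigvals, eigvecs = np.linalg.eigh(cov)  # symmetric input; eigenvalues in ascending order
first_pc = eigvecs[:, -1]               # direction of maximum variance
explained = eigvals[-1] / eigvals.sum() # share of total variance it captures
projected = centered @ first_pc         # 1D representation of the 2D data
print(first_pc, explained)
```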

Support Vector Machines (SVMs) find optimal hyperplanes to separate data points into different classes, a process defined using vector geometry and optimization. Even complex deep learning models (neural networks) rely heavily on linear algebra. Each layer often performs a matrix multiplication (applying weights to inputs) followed by a non-linear activation function. Training these networks involves calculating gradients (using calculus built on linear algebra) and updating weight matrices iteratively.
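
A single fully connected layer is just a matrix-vector product plus a bias, passed through a non-linearity. In the sketch below the weights are random placeholders (no training is shown), ReLU is assumed as the activation, and the layer sizes are arbitrary; `dense_relu` is a hypothetical helper name.

```python
import numpy as np

def dense_relu(W, b, x):
    """One fully connected layer: weights times input, plus bias, then ReLU."""
    return np.maximum(0.0, W @ x + b)

rng = np.random.default_rng(42)
x = rng.normal(size=4)                         # one input with 4 features
W1, b1 = rng.normal(size=(3, 4)), np.zeros(3)  # layer 1 maps 4 -> 3
W2, b2 = rng.normal(size=(2, 3)), np.zeros(2)  # layer 2 maps 3 -> 2

hidden = dense_relu(W1, b1, x)
output = W2 @ hidden + b2  # last layer left linear in this sketch
print(hidden.shape, output.shape)

# A batch of 5 inputs stacked as rows is handled by a single matrix product.
X_batch = rng.normal(size=(5, 4))
H_batch = np.maximum(0.0, X_batch @ W1.T + b1)
print(H_batch.shape)
```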

Quantum Computing Foundations

Quantum computing, a revolutionary paradigm aiming to harness quantum mechanical phenomena for computation, is deeply rooted in linear algebra. The state of a quantum bit, or qubit, the fundamental unit of quantum information, is represented as a unit vector in a two-dimensional complex vector space (specifically, a Hilbert space). Unlike a classical bit (0 or 1), a qubit can exist in a superposition α|0⟩ + β|1⟩: a linear combination of the basis vectors |0⟩ and |1⟩ whose complex coefficients satisfy |α|² + |β|² = 1.

Quantum operations, or gates, that manipulate qubits are represented by unitary matrices. Unitary matrices are complex square matrices whose conjugate transpose is also their inverse, ensuring that the transformations preserve the norm (length) of the quantum state vector, consistent with the principles of quantum mechanics. Applying a sequence of quantum gates corresponds to multiplying the state vector by a sequence of unitary matrices.
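
The single-qubit picture can be checked with plain complex NumPy arrays. The sketch below assumes the standard Hadamard gate as the example unitary; it verifies unitarity, applies the gate to |0⟩, and computes measurement probabilities as squared magnitudes of the amplitudes.

```python
import numpy as np

ket0 = np.array([1, 0], dtype=complex)  # basis state |0>
H = np.array([[1, 1],
              [1, -1]], dtype=complex) / np.sqrt(2)  # Hadamard gate

# Unitary: the conjugate transpose is the inverse.
print(np.allclose(H.conj().T @ H, np.eye(2)))

psi = H @ ket0            # equal superposition (|0> + |1>) / sqrt(2)
probs = np.abs(psi) ** 2  # measurement probabilities for outcomes 0 and 1
print(probs, np.linalg.norm(psi))  # both probabilities 0.5; the norm stays 1

# Applying gates in sequence multiplies their matrices; H undoes itself.
print(np.allclose(H @ H @ ket0, ket0))
```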

Multi-qubit systems are described using tensor products of single-qubit vector spaces, another concept from linear algebra. Phenomena like entanglement, where qubits become correlated in ways impossible classically, are described using vectors in these higher-dimensional tensor product spaces. Measurement in quantum mechanics involves projecting the state vector onto basis vectors, with probabilities determined by the squared magnitudes of the vector components (related to the inner product in Hilbert space). Eigenvalues and eigenvectors also play a crucial role, representing possible measurement outcomes and corresponding states.
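
Tensor products and entanglement can be sketched the same way: `np.kron` builds multi-qubit states and operators, and a Hadamard on the first qubit followed by a CNOT turns |00⟩ into the entangled Bell state (|00⟩ + |11⟩)/√2. The basis ordering (first qubit as the leftmost Kronecker factor) is an assumed convention; quantum software frameworks differ on this. Reshaping a two-qubit state into a 2 × 2 matrix gives a quick entanglement test, since product states have rank 1.

```python
import numpy as np

ket0 = np.array([1, 0], dtype=complex)
H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
I2 = np.eye(2, dtype=complex)

# CNOT in the basis |00>, |01>, |10>, |11> (first qubit is the control).
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]], dtype=complex)

state = np.kron(ket0, ket0)                # |00> = |0> ⊗ |0>
bell = CNOT @ np.kron(H, I2) @ state       # H on qubit 1 only, then CNOT
print(np.round(bell, 4))                   # amplitudes of (|00> + |11>)/sqrt(2)
print(np.abs(bell) ** 2)                   # P(00) = P(11) = 0.5, P(01) = P(10) = 0

# A product state a ⊗ b reshaped to 2 x 2 has rank 1; the Bell state has rank 2.
product = np.kron(ket0, H @ ket0)
print(np.linalg.matrix_rank(product.reshape(2, 2)), np.linalg.matrix_rank(bell.reshape(2, 2)))
```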

Economic Modeling and Optimization

Linear algebra provides essential tools for modeling economic systems and solving optimization problems in economics and finance. Input-output analysis, developed by Wassily Leontief, uses matrices to model the interdependencies between different sectors of an economy. It allows economists to analyze how changes in demand in one sector propagate throughout the entire economy.
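
Leontief's model can be sketched with a hypothetical two-sector economy. If A holds the input coefficients (units of sector i's output needed per unit of sector j's output) and d is final demand, total output x must satisfy x = Ax + d, that is (I - A)x = d. All numbers below are invented for illustration.

```python
import numpy as np

# Hypothetical two-sector economy: sector 0 = agriculture, sector 1 = manufacturing.
# A[i, j] = units of sector i's output used to produce one unit of sector j's output.
A = np.array([[0.2, 0.3],
              [0.4, 0.1]])
d = np.array([100.0, 50.0])  # final (external) demand for each sector

# Output must cover inter-industry use plus final demand: x = A x + d, so (I - A) x = d.
x = np.linalg.solve(np.eye(2) - A, d)
print(x)  # approximately [175, 133.33]

# Extra final demand for manufacturing raises the required output of both sectors.
x_more = np.linalg.solve(np.eye(2) - A, d + np.array([0.0, 10.0]))
print(x_more - x)
```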

Many economic models involve systems of linear equations representing equilibrium conditions, market clearing, or resource constraints. Matrix algebra is used to solve these systems and analyze their properties. In econometrics, linear regression models, often expressed in matrix form (Y = Xβ + ε), are fundamental for analyzing relationships between economic variables and testing hypotheses using statistical data.

Optimization is central to economics, dealing with maximizing utility or profit, or minimizing costs, subject to constraints. Linear programming, a technique heavily reliant on linear algebra, solves optimization problems where both the objective function and the constraints are linear. It's widely used in resource allocation, production planning, and logistics. Concepts like duality theory in linear programming provide deep economic insights. Furthermore, financial modeling often uses matrices to represent portfolios, calculate returns, and manage risk (e.g., portfolio variance calculation involves covariance matrices).
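
The portfolio-variance remark corresponds to the quadratic form wᵀΣw, where w holds the portfolio weights and Σ the covariance matrix of asset returns. A minimal sketch with a hypothetical three-asset portfolio (weights, expected returns, and covariances are all invented for illustration):

```python
import numpy as np

# Hypothetical three-asset portfolio.
w = np.array([0.5, 0.3, 0.2])          # portfolio weights (sum to 1)
mu = np.array([0.08, 0.05, 0.03])      # expected returns of each asset
Sigma = np.array([[0.040, 0.006, 0.002],
                  [0.006, 0.020, 0.001],
                  [0.002, 0.001, 0.010]])  # covariance matrix of returns

expected_return = w @ mu    # weighted average of asset returns
variance = w @ Sigma @ w    # quadratic form w^T Σ w
volatility = np.sqrt(variance)

# The same variance written out as a double sum over asset pairs.
variance_sum = sum(w[i] * w[j] * Sigma[i, j] for i in range(3) for j in range(3))
print(expected_return, variance, volatility)
```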

Formal Education Pathways

Typical Undergraduate Curriculum Structure

Linear algebra is a cornerstone of most undergraduate mathematics curricula, as well as programs in engineering, computer science, physics, and economics. Typically, students encounter a first course in linear algebra in their first or second year, often after completing one or two semesters of single-variable calculus.

An introductory course usually covers core topics such as systems of linear equations, Gaussian elimination, matrix algebra (addition, multiplication, inverses, determinants), vector spaces (subspaces, linear independence, basis, dimension), linear transformations (kernel, range, matrix representation), and eigenvalues/eigenvectors. Emphasis is often placed on computational techniques and applications, particularly solving systems and basic matrix manipulations.

A second course in linear algebra, often taken by mathematics majors or students in highly quantitative fields, delves deeper into theoretical aspects. Topics might include inner product spaces (orthogonality, Gram-Schmidt process), spectral theory (diagonalization, Jordan canonical form), and possibly more abstract concepts like dual spaces and bilinear forms. This course usually involves more rigorous proofs and abstract reasoning compared to the introductory course.

Many universities offer courses designed for specific disciplines, like "Linear Algebra for Engineers" or "Linear Algebra for Data Science," which tailor the content and applications to the relevant field.

Graduate-Level Specializations

At the graduate level, linear algebra typically ceases to be a standalone course and instead becomes an essential prerequisite and integrated tool within various mathematical specializations. Students pursuing advanced degrees in mathematics, statistics, computer science, physics, or engineering will utilize and build upon their undergraduate linear algebra knowledge extensively.

In pure mathematics, advanced linear algebra concepts are fundamental in areas like abstract algebra (module theory, representation theory), functional analysis (operator theory on Hilbert and Banach spaces), differential geometry (tangent spaces, tensors), and topology. A specialization in numerical analysis centers on numerical linear algebra: developing, analyzing, and implementing efficient and stable algorithms for matrix computations (e.g., solving large sparse systems, eigenvalue problems, matrix factorizations like SVD and QR).

In applied fields like statistics and machine learning, graduate studies involve advanced topics where linear algebra is critical, such as multivariate statistics, optimization theory, high-dimensional data analysis, and the theory behind complex algorithms. Engineering and physics programs often require advanced mathematical methods courses that include sophisticated applications of linear algebra in areas like control theory, signal processing, quantum mechanics, and continuum mechanics.

Research Opportunities in Academia

Linear algebra, both in its theoretical aspects and its applications, remains an active area of research in academia. Numerical linear algebra is particularly vibrant, driven by the ever-increasing scale and complexity of computational problems in science and engineering. Researchers work on developing faster, more robust, and parallelizable algorithms for matrix computations, tackling challenges posed by massive datasets and high-performance computing architectures.

Theoretical research explores deeper properties of vector spaces, linear operators, and matrix theory. This includes areas like spectral graph theory (using eigenvalues of matrices associated with graphs), random matrix theory (studying properties of matrices with random entries, with applications in physics, statistics, and number theory), and non-commutative algebra related to linear structures.

Much research also lies at the intersection of linear algebra and other fields. For example, developing new linear algebraic techniques for machine learning (e.g., tensor decompositions for multi-way data analysis), applying linear algebra to quantum information theory, using matrix methods in optimization and control theory, or exploring algebraic structures relevant to coding theory and cryptography. Opportunities exist for graduate students and postdoctoral researchers to contribute to these areas within mathematics, computer science, statistics, and engineering departments.

Prerequisites for Advanced Study

To succeed in advanced studies involving linear algebra, a solid foundation in prerequisite mathematical concepts is essential. Foremost among these is a strong grasp of single-variable calculus, including differentiation and integration techniques, sequences, and series. Multivariable calculus is also crucial, as concepts like partial derivatives, gradients, and multiple integrals are often intertwined with linear algebraic ideas in higher dimensions.

A rigorous introductory course in linear algebra, covering the core concepts mentioned earlier (systems of equations, matrices, vector spaces, eigenvalues), is the most direct prerequisite. Comfort with mathematical notation, logical reasoning, and proof techniques is increasingly important for more theoretical courses. An introductory course on proofs or discrete mathematics can be highly beneficial for developing this mathematical maturity.

Depending on the specific area of advanced study, familiarity with other subjects may be required. For numerical linear algebra, some programming experience (e.g., in Python, MATLAB, or C++) and an understanding of basic numerical methods are needed. For applications in probability and statistics, a foundational course in probability theory is necessary. For theoretical areas like functional analysis or abstract algebra, prior exposure to real analysis or abstract algebra, respectively, is typically expected.

Self-Directed Learning Strategies

Effective Use of Open-Source Tools (e.g., Python/R)

Self-directed learners can significantly benefit from using open-source programming languages like Python or R to explore and apply linear algebra concepts. These languages offer powerful libraries specifically designed for numerical computation and matrix manipulation, allowing learners to experiment and gain practical intuition beyond theoretical understanding.

In Python, libraries such as NumPy provide efficient data structures for arrays and matrices, along with a vast collection of functions for linear algebra operations (e.g., matrix multiplication, determinants, inverses, eigenvalue calculations, solving linear systems). SciPy builds upon NumPy, offering more advanced numerical routines. Matplotlib enables visualization of vectors, transformations, and data, which can greatly aid understanding. Using these tools, learners can implement algorithms, test concepts on sample data, and visualize geometric interpretations.

R, widely used in statistics, also has strong capabilities for matrix operations built into its core language, further extended by numerous packages. Working through examples, solving problems computationally, and verifying hand calculations using these tools reinforces learning and bridges the gap between theory and practice. Many online tutorials, documentation resources, and communities support learning these tools in the context of linear algebra.

Project-Based Learning Approaches

Engaging in projects is an exceptionally effective way to solidify understanding and demonstrate mastery of linear algebra. Instead of just solving textbook exercises, applying concepts to solve a specific problem provides context, motivation, and practical experience. Projects force learners to integrate different concepts and often require navigating the challenges of real-world data or scenarios.

Project ideas can range in complexity. A beginner might implement Gaussian elimination to solve systems of equations or write code to perform basic matrix operations. An intermediate project could involve using Singular Value Decomposition (SVD) for image compression, implementing the PageRank algorithm for a small network, or performing Principal Component Analysis (PCA) on a dataset for visualization or dimensionality reduction.

More advanced projects could involve building a simple recommendation system using matrix factorization, simulating a physical system governed by differential equations solved using linear algebra techniques, or implementing basic computer graphics transformations. Documenting the project, explaining the underlying linear algebra, and presenting the results (perhaps in a blog post or a portfolio) further enhances the learning experience and provides tangible evidence of acquired skills.

Many online courses incorporate project components. Consider exploring the course offerings on OpenCourser to find project-based learning opportunities.

Competitions and Hackathons

Participating in data science competitions (like those hosted on platforms such as Kaggle) or relevant hackathons can be a stimulating way to apply and hone linear algebra skills in a practical, time-bound setting. Many competition problems, particularly in machine learning and data analysis, require a solid understanding of linear algebra for feature engineering, model building, and optimization.

While not solely focused on linear algebra, these challenges often involve manipulating large matrices of data, performing dimensionality reduction, implementing algorithms based on matrix factorizations, or fine-tuning models where linear algebraic concepts are implicitly used. Success often hinges on efficient implementation and a good grasp of the underlying mathematics.

Hackathons focused on specific themes like AI, game development, or scientific computing can also provide opportunities to apply linear algebra. Working collaboratively under pressure encourages rapid learning and creative problem-solving. Even without a win, the process of tackling a real problem, learning from others, and seeing how theoretical concepts translate into practical solutions is invaluable for self-directed learners.

Building Mathematical Intuition

Beyond memorizing formulas and procedures, developing a deep intuition for linear algebra is crucial for effective application and problem-solving. This involves understanding the geometric meaning behind the operations and concepts. Visualizing vectors, the effect of matrix transformations (rotations, shears, projections), and the concepts of span and linear independence in 2D or 3D space can make abstract ideas more concrete.

Actively seeking connections between different concepts helps build a cohesive understanding. For example, understanding how determinants relate to area/volume changes under transformations, or how eigenvalues/eigenvectors represent invariant directions, provides deeper insight than just knowing the calculation methods. Experimenting with examples, perhaps using visualization tools or programming libraries, allows learners to "play" with the concepts and observe patterns.
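
A quick way to "play" with the two connections just mentioned is to check them numerically. This sketch assumes NumPy and two example matrices chosen for illustration: a shear, which preserves area, and an axis-aligned stretch, whose eigenvectors are the coordinate axes.

```python
import numpy as np

shear = np.array([[1.0, 1.0],
                  [0.0, 1.0]])   # horizontal shear
scale = np.array([[2.0, 0.0],
                  [0.0, 3.0]])   # stretch x by 2 and y by 3

# |det(A)| is the factor by which A scales areas (the unit square maps to a
# parallelogram of area |det(A)|); a negative sign would indicate a flip.
print(np.linalg.det(shear))   # ≈ 1.0: a shear preserves area
print(np.linalg.det(scale))   # ≈ 6.0

# Eigenvectors are directions the map only stretches: A v = lambda v
vals, vecs = np.linalg.eig(scale)
for lam, v in zip(vals, vecs.T):   # eigenvectors are the columns of vecs
    assert np.allclose(scale @ v, lam * v)
    print(lam, v)
```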

Trying to explain concepts in simple terms (the "Feynman technique") or relating them to familiar analogies can also solidify intuition. Engaging with resources that emphasize geometric interpretations alongside algebraic formulations is beneficial. While rigor is important, complementing it with intuitive understanding makes linear algebra a more powerful and flexible tool in one's intellectual arsenal. OpenCourser's Learner's Guide offers tips on effective study strategies that can help build intuition.

Career Progression in Linear Algebra-Intensive Fields

Entry-Level Roles Requiring Linear Algebra

A solid understanding of linear algebra opens doors to various entry-level roles across industries, particularly those involving quantitative analysis and computation. Positions in data analysis often require linear algebra for tasks like regression modeling and understanding algorithms used in data mining tools. Junior software engineers working on graphics, simulations, or scientific computing frequently apply linear algebraic concepts.

Entry-level roles in machine learning engineering, while often requiring broader knowledge, depend heavily on linear algebra for implementing and understanding core algorithms. Quantitative analyst ("quant") positions in finance, especially those focused on modeling or risk management, demand strong mathematical foundations, including linear algebra, probability, and calculus. Operations research analyst roles involve using mathematical modeling, including linear programming and other optimization techniques rooted in linear algebra, to improve business processes.

Even in traditional engineering fields (mechanical, electrical, aerospace), entry-level positions involving simulation, control systems design, or signal processing benefit significantly from linear algebra proficiency. Research assistant positions in academic labs or industrial R&D departments focusing on computational science often list linear algebra as a key required skill.

Mid-Career Specialization Paths

As professionals gain experience in fields utilizing linear algebra, various specialization paths emerge. One common route is deeper specialization within data science or machine learning, focusing on areas like deep learning, natural language processing, computer vision, or reinforcement learning. These domains require advanced understanding and application of linear algebraic concepts, often combined with calculus and probability, to develop novel algorithms or sophisticated models.

Another path involves specializing in numerical computation and high-performance computing. Professionals in this area develop and optimize the core linear algebra routines (like solvers for linear systems or eigenvalue algorithms) that underpin scientific simulations and large-scale data analysis. This often involves expertise in parallel computing and specific numerical libraries.

Specialization can also occur within specific application domains. For instance, a financial quant might specialize in algorithmic trading strategy development or complex derivatives pricing, both heavily reliant on advanced mathematical modeling including linear algebra. An engineer might become an expert in control systems theory or finite element analysis, applying sophisticated linear algebraic techniques to complex design problems.

Moving into research roles, either in academia or industrial R&D labs, represents another mid-career path for those with deep expertise in linear algebra and its applications. These roles focus on pushing the boundaries of knowledge and developing innovative solutions.

Leadership Roles in Technical Organizations

Strong foundational knowledge, including linear algebra, combined with experience and leadership skills, can lead to management and leadership roles within technical organizations. Individuals may progress to become team leads, project managers, or technical managers overseeing groups of data scientists, machine learning engineers, software developers, or quantitative analysts.

In these roles, while day-to-day hands-on computation might decrease, a deep understanding of the underlying principles, including linear algebra, remains crucial. Leaders need to understand the capabilities and limitations of the methods their teams employ, guide technical strategy, evaluate proposed solutions, and communicate complex technical concepts to broader audiences, including non-technical stakeholders.

Further progression can lead to roles like Director of Data Science, Head of AI Research, Chief Technology Officer (CTO) in tech-focused companies, or Principal Scientist/Engineer. These positions involve setting long-term technical vision, managing larger teams or departments, making strategic decisions about technology adoption, and representing the organization's technical capabilities externally. A strong quantitative background remains an asset even at these senior levels.

Freelancing and Consulting Opportunities

Expertise in linear algebra and its applications creates opportunities for freelancing and consulting work. Businesses across various sectors often require specialized skills for specific projects involving data analysis, machine learning model development, simulation, or optimization, but may not need a full-time expert.

Freelancers or consultants with strong linear algebra skills can offer services such as building custom machine learning models, developing algorithms for specific computational problems, providing expertise in numerical methods, or advising companies on data strategy and analytics implementation. Success in consulting requires not only technical proficiency but also strong communication skills to understand client needs, explain complex methods clearly, and deliver actionable results.

Building a portfolio of successful projects and establishing a professional network are key to finding consulting engagements. Specializing in a high-demand niche, such as applying ML to a specific industry (e.g., healthcare, finance) or expertise in a particular area like computer vision or optimization, can enhance marketability. Online platforms connect freelancers with potential clients, but direct networking and referrals are also common routes to finding opportunities.

Transferable Skills from Linear Algebra

Abstract Problem-Solving Techniques

Studying linear algebra cultivates powerful abstract problem-solving skills. It trains the mind to think in terms of structures, transformations, and relationships, moving beyond specific numbers or contexts. Learning to represent problems using vectors and matrices, and applying systematic procedures like Gaussian elimination or eigenvalue analysis, develops a methodical approach to tackling complex challenges.

The ability to work with abstract concepts like vector spaces, subspaces, and linear independence fosters analytical thinking. Learners become adept at identifying the core structure of a problem, stripping away irrelevant details, and applying general principles to find solutions. This skill is highly transferable to diverse fields, enabling individuals to approach unfamiliar problems with confidence and structure.

Furthermore, linear algebra often involves understanding and constructing mathematical proofs, which enhances logical reasoning and the ability to formulate rigorous arguments. This capacity for abstraction and structured thinking is valuable not only in technical roles but also in strategic planning, policy analysis, and various forms of research.

Data Pattern Recognition

Linear algebra provides fundamental tools for recognizing patterns and structure within data. Representing data as vectors and matrices allows for systematic analysis of relationships between variables and samples. Computing correlations and covariances and performing matrix factorizations are central to uncovering hidden patterns.
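
To make the matrix view concrete, the covariance matrix is just a product of the centered data matrix with its own transpose. This sketch assumes NumPy and uses synthetic data in which one variable is deliberately built from another; the results are cross-checked against `np.cov` and `np.corrcoef`.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))             # synthetic: 200 samples (rows) x 3 variables (columns)
X[:, 2] = 0.8 * X[:, 0] + 0.2 * X[:, 2]   # make variable 2 depend mostly on variable 0

Xc = X - X.mean(axis=0)                   # center each column
cov = Xc.T @ Xc / (X.shape[0] - 1)        # sample covariance matrix
std = np.sqrt(np.diag(cov))
corr = cov / np.outer(std, std)           # rescale to the correlation matrix

assert np.allclose(cov, np.cov(X, rowvar=False))
assert np.allclose(corr, np.corrcoef(X, rowvar=False))
print(np.round(corr, 2))                  # large off-diagonal entry between variables 0 and 2
```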

Dimensionality reduction methods like Principal Component Analysis (PCA) and Singular Value Decomposition (SVD), which are rooted in linear algebra, are explicitly designed to identify the most significant patterns or underlying factors within high-dimensional datasets. By projecting data onto lower-dimensional subspaces defined by eigenvectors or singular vectors, these techniques reveal dominant trends and reduce noise.
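
The projection described above can be sketched in a few lines by computing PCA through the SVD of the centered data matrix. This assumes NumPy; `pca` is a hypothetical helper, and the data are synthetic points lying close to the line spanned by (1, 2, -1).

```python
import numpy as np

def pca(X, k):
    """Project the rows of X onto the top-k principal components, computed via SVD."""
    Xc = X - X.mean(axis=0)                      # PCA works on centered data
    U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:k]                          # right singular vectors = principal directions
    explained = S**2 / np.sum(S**2)              # fraction of variance captured by each direction
    return Xc @ components.T, components, explained[:k]

rng = np.random.default_rng(1)
t = rng.normal(size=500)
X = np.column_stack([t, 2 * t, -t]) + 0.05 * rng.normal(size=(500, 3))  # synthetic, nearly 1-D
scores, comps, ratio = pca(X, 1)
print(ratio, comps[0])   # one component explains almost all the variance
```

Using the SVD of the data rather than forming the covariance matrix explicitly is a common design choice because it avoids squaring the condition number.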

Understanding concepts like rank, linear dependence, and the properties of different matrix types helps in assessing the quality and characteristics of data matrices. This ability to mathematically analyze and interpret data structures is crucial in fields like data science, statistics, machine learning, and signal processing, where identifying meaningful patterns is a primary goal.
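
As a small illustration of rank exposing a data problem, consider a hypothetical design matrix that stores the same temperatures in both Celsius and Fahrenheit alongside an intercept column. Since F = 32 + 1.8 C, the Fahrenheit column is a linear combination of the other two. The sketch assumes NumPy.

```python
import numpy as np

celsius = np.array([0.0, 5.0, 10.0, 20.0, 25.0])
fahrenheit = 32.0 + 9.0 * celsius / 5.0           # same measurement in different units
X = np.column_stack([np.ones_like(celsius), celsius, fahrenheit])  # intercept + both columns

print(np.linalg.matrix_rank(X))                   # 2, not 3: one column is redundant
print(np.linalg.svd(X, compute_uv=False))         # the smallest singular value is ~0
```

In a regression on this matrix, the coefficients would not be uniquely determined, which is exactly the kind of issue a rank check catches early.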

Algorithmic Thinking

Linear algebra is inherently algorithmic. Many of its core procedures, such as Gaussian elimination for solving linear systems, the Gram-Schmidt process for orthogonalization, or algorithms for computing eigenvalues and matrix factorizations (like LU or QR decomposition), involve step-by-step computational processes.
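
The Gram-Schmidt process is a good example of such a step-by-step procedure. Below is a sketch of the modified Gram-Schmidt variant, which produces a thin QR factorization. It assumes NumPy, full column rank, and a hypothetical function name; library QR routines typically use Householder reflections instead.

```python
import numpy as np

def gram_schmidt_qr(A):
    """Thin QR factorization by modified Gram-Schmidt (assumes full column rank)."""
    V = np.array(A, dtype=float)       # working copy; columns get orthogonalized in place
    m, n = V.shape
    Q = np.zeros((m, n))
    R = np.zeros((n, n))
    for j in range(n):
        R[j, j] = np.linalg.norm(V[:, j])
        Q[:, j] = V[:, j] / R[j, j]    # normalize the next direction
        # Remove that direction from every remaining column
        for k in range(j + 1, n):
            R[j, k] = Q[:, j] @ V[:, k]
            V[:, k] -= R[j, k] * Q[:, j]
    return Q, R

A = np.array([[1.0, 1.0, 0.0],
              [1.0, 0.0, 1.0],
              [0.0, 1.0, 1.0]])
Q, R = gram_schmidt_qr(A)
print(np.round(Q.T @ Q, 10))           # identity: the columns of Q are orthonormal
```

The "modified" ordering subtracts each new direction from the remaining columns immediately, which is numerically more stable than the classical textbook version.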

Learning and implementing these algorithms fosters algorithmic thinking – the ability to break down complex problems into a sequence of well-defined, executable steps. It involves understanding computational efficiency, numerical stability, and the flow of operations. This skill is directly transferable to computer programming and software development, where designing efficient and correct algorithms is paramount.

Even when using pre-built libraries for linear algebra computations, understanding the underlying algorithms helps in choosing the right method for a given problem, interpreting the results correctly, and troubleshooting potential issues. This procedural and computational mindset is valuable in any field requiring systematic process design or analysis.

These related fields also heavily rely on algorithmic thinking:

Cross-Domain Modeling Capabilities

One of the most powerful aspects of linear algebra is its ability to provide a unifying language for modeling phenomena across vastly different domains. The same mathematical framework – vectors, matrices, linear transformations – can be used to describe electrical circuits, population dynamics, economic interactions, quantum states, image transformations, and network flows.

Mastering linear algebra equips individuals with a versatile modeling toolkit. They learn to translate real-world problems into mathematical formulations involving linear systems or matrix equations, apply standard techniques to analyze or solve these formulations, and interpret the results back in the context of the original domain. This abstraction allows insights and methods developed in one field to potentially be adapted and applied to others.
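
Electrical circuits show this translation step clearly. In nodal analysis, Kirchhoff's current law at each node becomes one row of a linear system G v = i. The sketch below assumes NumPy and a small hypothetical resistor network; the circuit values are illustrative only.

```python
import numpy as np

# Hypothetical circuit: each resistor is (node_a, node_b, ohms); node 0 is ground
resistors = [(1, 0, 2.0), (1, 2, 1.0), (2, 0, 2.0)]
injected = {1: 3.0}   # a 3 A current source feeding node 1
n = 2                 # number of non-ground nodes

# Build the conductance matrix G so that Kirchhoff's current law reads G v = i
G = np.zeros((n, n))
for a, b, ohms in resistors:
    g = 1.0 / ohms
    for node in (a, b):
        if node != 0:
            G[node - 1, node - 1] += g
    if a != 0 and b != 0:
        G[a - 1, b - 1] -= g
        G[b - 1, a - 1] -= g

i = np.zeros(n)
for node, amps in injected.items():
    i[node - 1] += amps

v = np.linalg.solve(G, i)
print(v)   # node voltages in volts: 3.6 V at node 1, 2.4 V at node 2
```

The same pattern (assemble a matrix from local relationships, then solve) reappears in structural mechanics, heat flow, and network models.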

This cross-domain applicability enhances adaptability and fosters innovation. Professionals with strong linear algebra skills can often move between different industries or research areas more easily, recognizing familiar mathematical structures in new contexts. It provides a fundamental perspective for understanding and quantifying relationships in complex systems, regardless of their specific nature.

Emerging Trends in Linear Algebra

High-Dimensional Data Challenges

Modern science and technology generate data of unprecedented scale and dimensionality. Genomics, climate modeling, social network analysis, and machine learning deal with datasets where the number of features (dimensions) can be enormous, sometimes exceeding the number of samples. Analyzing such high-dimensional data presents significant challenges, often requiring specialized linear algebraic techniques.

Traditional methods may become computationally intractable or statistically unreliable in high dimensions (the "curse of dimensionality"). Research focuses on developing algorithms that scale effectively, often exploiting sparsity or other structures in the data matrices. Randomized linear algebra techniques, which use random sampling or projections to approximate solutions for large matrices, have gained prominence as a way to trade exactness for computational speed.
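
One widely used randomized technique approximates a truncated SVD by first sketching the range of the matrix with a random projection. This is a minimal sketch in the style of the Halko, Martinsson, and Tropp range-finder, assuming NumPy; the name `randomized_svd` and the default oversampling of 10 are assumptions for illustration.

```python
import numpy as np

def randomized_svd(A, k, oversample=10, seed=0):
    """Approximate top-k SVD of A using a Gaussian random projection."""
    rng = np.random.default_rng(seed)
    m, n = A.shape
    Omega = rng.standard_normal((n, k + oversample))  # random test matrix
    Y = A @ Omega                                     # sample the range of A
    Q, _ = np.linalg.qr(Y)                            # orthonormal basis for that sample
    B = Q.T @ A                                       # small (k + oversample) x n matrix
    Ub, S, Vt = np.linalg.svd(B, full_matrices=False) # cheap exact SVD of the small matrix
    return (Q @ Ub)[:, :k], S[:k], Vt[:k]

rng = np.random.default_rng(3)
A = rng.normal(size=(300, 5)) @ rng.normal(size=(5, 200))   # synthetic matrix of rank 5
U, S, Vt = randomized_svd(A, 5)
print(S)
```

The expensive work is a couple of matrix products and a QR of a thin matrix, which is why the approach scales to matrices where a full SVD would be too costly. Accuracy depends on how quickly the singular values decay.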

Techniques like sparse PCA, robust PCA, and various forms of matrix and tensor factorizations are being developed to handle noise, outliers, and the inherent complexities of high-dimensional data. Understanding the theoretical properties and limitations of linear algebra methods in high-dimensional settings is an active area of research in statistics, machine learning, and numerical analysis.

Tensor-Based Computations

While matrices (2nd-order tensors) are fundamental, many real-world datasets possess multi-way structures that are more naturally represented by tensors – multi-dimensional arrays. Examples include video data (height x width x time), hyperspectral imaging (spatial dimensions x spectral bands), or multi-relational network data. Tensor decompositions (e.g., CANDECOMP/PARAFAC, Tucker decomposition) generalize matrix factorizations like SVD to higher orders.
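
Two basic building blocks behind these decompositions are the outer product of vectors (a rank-1 tensor, the term CP decomposition sums) and the mode-n unfolding that turns a tensor back into a matrix. The sketch assumes NumPy; `unfold` is a hypothetical helper, and its column ordering follows NumPy's row-major layout, which differs from some textbook conventions without affecting rank.

```python
import numpy as np

def unfold(T, mode):
    """Mode-n unfolding: rows are indexed by axis `mode`, columns by the remaining axes."""
    return np.moveaxis(T, mode, 0).reshape(T.shape[mode], -1)

a = np.array([1.0, 2.0])
b = np.array([1.0, 0.0, -1.0])
c = np.array([3.0, 1.0, 4.0, 1.0])
T = np.einsum('i,j,k->ijk', a, b, c)   # rank-1 tensor: T[i, j, k] = a[i] * b[j] * c[k]

for mode in range(3):
    M = unfold(T, mode)
    print(mode, M.shape, np.linalg.matrix_rank(M))   # every unfolding of a rank-1 tensor has rank 1
```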

These tensor methods aim to uncover latent factors and underlying patterns in multi-way data, finding applications in recommendation systems, signal processing, computer vision, and chemometrics. Developing efficient and scalable algorithms for tensor computations, understanding their theoretical properties, and building robust software libraries are key areas of ongoing research and development.

The intersection of tensor algebra and machine learning, particularly in deep learning (e.g., tensor networks), is another rapidly evolving area. As data becomes increasingly complex and multi-modal, tensor-based methods leveraging higher-order linear algebra are expected to play an increasingly important role.

Numerical Linear Algebra Advancements

Numerical linear algebra, focused on the practical implementation of algorithms on computers, continues to evolve. Key drivers include the need to handle ever-larger matrices, exploit modern parallel computing architectures (multi-core CPUs, GPUs), and ensure numerical stability and accuracy in the presence of finite-precision arithmetic.

Research includes developing faster direct solvers for dense and sparse linear systems, more efficient iterative methods (like Krylov subspace methods), and improved algorithms for eigenvalue problems and singular value decomposition, especially for large-scale or structured matrices. Preconditioning techniques, which transform linear systems to make them easier for iterative solvers to handle, remain a critical area of study.
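
The conjugate gradient method is the best-known Krylov subspace method for symmetric positive definite systems. Here is an unpreconditioned sketch, assuming NumPy; the function name and tolerance defaults are assumptions. The matrix is only touched through products `A @ p`, which is what makes the method attractive for large sparse problems.

```python
import numpy as np

def conjugate_gradient(A, b, tol=1e-10, max_iter=None):
    """Solve A x = b for symmetric positive definite A by conjugate gradients."""
    n = b.shape[0]
    if max_iter is None:
        max_iter = 10 * n
    b_norm = np.linalg.norm(b)
    x = np.zeros(n)
    if b_norm == 0.0:
        return x
    r = b - A @ x            # residual
    p = r.copy()             # first search direction
    rs = r @ r
    for _ in range(max_iter):
        Ap = A @ p
        alpha = rs / (p @ Ap)            # step length along p
        x += alpha * p
        r -= alpha * Ap
        rs_new = r @ r
        if np.sqrt(rs_new) < tol * b_norm:
            break
        p = r + (rs_new / rs) * p        # next direction, A-conjugate to the previous ones
        rs = rs_new
    return x

n = 50
A = 2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)   # 1D Poisson matrix (SPD)
x = conjugate_gradient(A, np.ones(n))
```

A preconditioned version would apply an approximate inverse of A to the residual at each step; it is omitted here to keep the core recurrence visible.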

The development of robust and highly optimized linear algebra software libraries (like BLAS, LAPACK, ScaLAPACK, and libraries within Python/MATLAB) is crucial for scientific computing. Ensuring these libraries effectively utilize hardware advancements and maintain accuracy is an ongoing challenge and focus of research.

Intersection with Quantum Computing

As quantum computing progresses, its deep reliance on linear algebra fuels research at the intersection of the two fields. Developing quantum algorithms often involves translating classical problems into the language of linear operators, unitary matrices, and vector states in Hilbert spaces. The HHL algorithm, for instance, offers a potential exponential speedup for solving certain systems of linear equations on a quantum computer, under strong assumptions: the matrix must be sparse and well-conditioned, and the solution is produced as a quantum state rather than as an explicit list of numbers.
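
At its simplest, this language is ordinary complex linear algebra: a qubit is a unit vector in C^2, a gate is a unitary matrix, and multi-qubit states live in Kronecker (tensor) products. The sketch assumes NumPy and simulates one Hadamard gate on a classical computer.

```python
import numpy as np

ket0 = np.array([1.0, 0.0], dtype=complex)                    # |0>
H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)   # Hadamard gate

assert np.allclose(H.conj().T @ H, np.eye(2))   # quantum gates are unitary
psi = H @ ket0                                  # (|0> + |1>) / sqrt(2)
probs = np.abs(psi) ** 2                        # Born rule: measurement probabilities
print(probs)                                    # 0.5 and 0.5, up to rounding

# Two qubits live in the 4-dimensional Kronecker product space
psi2 = np.kron(psi, psi)
print(psi2.shape)
```

Each added qubit doubles the length of the state vector, which is why classical simulation quickly runs into the exponentially large matrices mentioned below.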

Research focuses on identifying other problems where quantum linear algebra algorithms might provide advantages, designing efficient quantum circuits to implement these algorithms, and understanding their limitations, particularly concerning data input/output and error correction. Conversely, classical linear algebra techniques are used extensively to simulate quantum systems on conventional computers, pushing the limits of numerical methods to handle the exponentially large matrices involved.

Furthermore, concepts from linear algebra are being applied to develop quantum machine learning algorithms, exploring how quantum phenomena might enhance tasks like classification, clustering, or dimensionality reduction. This synergy between the abstract structures of linear algebra and the unique capabilities of quantum mechanics represents a frontier in both fields.

Ethical Considerations in Applied Linear Algebra

Bias in Machine Learning Systems

Linear algebra underpins many machine learning algorithms used in decision-making systems, from loan applications and hiring recommendations to facial recognition and content filtering. However, if the data used to train these models reflects historical biases or societal inequalities, the resulting models can perpetuate or even amplify these biases. Linear algebraic techniques like dimensionality reduction or feature representation can inadvertently encode these biases.

For example, if facial recognition data predominantly features certain demographic groups, the resulting model (built using techniques reliant on linear algebra) may perform poorly for underrepresented groups. Similarly, word embeddings (vector representations of words, learned with matrix factorization or closely related techniques) can inherit gender or racial biases present in the text corpus they were trained on.

Addressing algorithmic bias requires careful consideration throughout the model development pipeline. This includes curating representative datasets, developing fairness-aware algorithms (which may involve modifying optimization objectives or constraints informed by linear algebra), and rigorously auditing models for disparate impacts across different groups. Ethical application demands awareness of how mathematical tools interact with biased data.

Privacy Concerns in Data Transformations

Linear algebraic techniques are often used to transform data for analysis or sharing, for instance, through dimensionality reduction (PCA) or feature extraction. While these transformations can be useful for utility or efficiency, they can also raise privacy concerns if not implemented carefully.

Even if raw data is transformed, it might still be possible to infer sensitive information about individuals from the transformed data, potentially through linkage attacks or reconstruction techniques. For example, while PCA might obscure individual data points, analyzing the principal components themselves could reveal aggregate patterns related to sensitive attributes.

Techniques like differential privacy aim to provide formal mathematical guarantees about the privacy risks associated with data analysis algorithms, including those based on linear algebra. Integrating differential privacy mechanisms into linear algebraic computations (e.g., adding calibrated noise during matrix operations) is an active area of research aiming to balance data utility with individual privacy protection.
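
The simplest case of calibrated noise is a linear query such as a mean, (1/n)·1ᵀx. Below is a sketch of the Laplace mechanism for it, assuming NumPy, values clipped to a known range [lower, upper], a publicly known n, and neighboring datasets that differ by replacing one record. The function name is hypothetical, and private matrix computations such as PCA need more careful calibration than this.

```python
import numpy as np

def private_mean(values, lower, upper, epsilon, rng):
    """epsilon-differentially private mean via the Laplace mechanism (sketch)."""
    x = np.clip(np.asarray(values, dtype=float), lower, upper)
    n = x.size
    # Replacing one clipped record changes the mean by at most (upper - lower) / n
    sensitivity = (upper - lower) / n
    noise = rng.laplace(loc=0.0, scale=sensitivity / epsilon)
    return x.mean() + noise

rng = np.random.default_rng(0)
data = rng.uniform(0.0, 1.0, size=1000)   # synthetic data
print(data.mean(), private_mean(data, 0.0, 1.0, epsilon=1.0, rng=rng))
```

Smaller epsilon means stronger privacy and proportionally larger noise, which is the utility trade-off the paragraph describes.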

Environmental Impact of Large Computations

Many cutting-edge applications of linear algebra, particularly in large-scale machine learning (training massive neural networks) and scientific simulations (solving huge systems of equations), require immense computational resources. These large computations consume significant amounts of electrical energy, contributing to carbon emissions and environmental impact, especially if the energy sources are not renewable.

The development of more computationally efficient linear algebra algorithms, including those optimized for specialized hardware like GPUs and TPUs or leveraging techniques like randomized methods or mixed-precision arithmetic, can help mitigate this impact. Research into energy-efficient hardware architectures and algorithmic design that minimizes computational cost is crucial.
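
Mixed-precision iterative refinement is one concrete example: do the expensive solve in single precision, then recover double-precision accuracy by correcting with residuals computed in double precision. This sketch assumes NumPy and a well-conditioned matrix; the function name is hypothetical. For brevity it calls `np.linalg.solve` each time, which refactors the matrix, whereas a practical version would factor once in low precision and reuse the factors.

```python
import numpy as np

def mixed_precision_solve(A, b, iters=5):
    """Solve in float32, then refine the solution with float64 residuals."""
    A32 = A.astype(np.float32)
    x = np.linalg.solve(A32, b.astype(np.float32)).astype(np.float64)
    for _ in range(iters):
        r = b - A @ x                                    # residual in double precision
        d = np.linalg.solve(A32, r.astype(np.float32))   # correction in single precision
        x += d.astype(np.float64)
    return x

rng = np.random.default_rng(0)
n = 100
A = rng.standard_normal((n, n)) + n * np.eye(n)   # synthetic, well-conditioned
b = rng.standard_normal(n)
x = mixed_precision_solve(A, b)
```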

Ethical considerations involve weighing the potential benefits of these large-scale computations against their environmental footprint. This includes exploring greener computing practices, optimizing code for energy efficiency, and considering whether the scale of computation is truly necessary for the desired outcome. The field of numerical linear algebra plays a role in developing algorithms that achieve desired results with fewer computational resources.

Responsible Innovation Frameworks

Given the power and pervasive application of linear algebra in technologies that shape society, responsible innovation is paramount. This involves proactively considering the potential societal consequences, ethical implications, and unintended negative effects of deploying systems built upon these mathematical tools.

Frameworks for responsible innovation encourage practitioners to engage in critical reflection, stakeholder engagement, and iterative assessment of impacts throughout the development lifecycle. This includes asking questions about fairness, accountability, transparency, privacy, security, and environmental sustainability. It means moving beyond purely technical optimization to consider broader human values.

For those applying linear algebra, this translates to understanding the context of application, being aware of potential pitfalls like bias amplification, ensuring data privacy, considering computational costs, and designing systems that are interpretable and accountable where appropriate. It requires collaboration between mathematicians, computer scientists, domain experts, ethicists, and policymakers to guide the development and deployment of powerful technologies responsibly.

Frequently Asked Questions (Career Focus)

What entry-level jobs use linear algebra daily?

While few jobs consist only of solving linear algebra problems by hand, many entry-level technical roles apply its concepts daily using software tools. Data Analysts often use regression (based on linear algebra) and matrix manipulations within analysis software. Junior Machine Learning Engineers work with algorithms fundamentally built on vectors, matrices, and transformations. Software Engineers in computer graphics, game development, or scientific computing frequently use linear algebra libraries for tasks like 3D transformations, physics simulations, or numerical modeling.

Quantitative Analysts (Quants) in finance apply linear algebra in portfolio optimization, risk modeling, and pricing derivatives. Operations Research Analysts use linear programming and other optimization techniques built on linear algebra. Entry-level engineers in fields like signal processing or control systems also regularly encounter and apply linear algebraic principles.

The key is often not manual calculation but understanding the concepts well enough to choose the right tools, interpret results, and troubleshoot issues when applying these techniques using software libraries (like NumPy in Python or built-in functions in MATLAB/R).
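
For instance, a linear regression in NumPy is a least-squares problem on a design matrix. The sketch below uses synthetic data generated with intercept 3 and slope 2, and checks the library result against the normal equations XᵀX β = Xᵀy.

```python
import numpy as np

rng = np.random.default_rng(42)
x = rng.uniform(0, 10, size=100)
y = 3.0 + 2.0 * x + rng.normal(scale=0.5, size=100)   # synthetic: intercept 3, slope 2, plus noise

X = np.column_stack([np.ones_like(x), x])             # design matrix with an intercept column
beta, residuals, rank, sv = np.linalg.lstsq(X, y, rcond=None)
print(beta)                                           # estimates close to [3, 2]

# Same answer from the normal equations (fine here, less stable when X is ill-conditioned)
beta_ne = np.linalg.solve(X.T @ X, X.T @ y)
```

Knowing that `lstsq` is solving a least-squares problem, and that its `rank` output flags redundant columns, is the kind of understanding that helps when results look wrong.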

How important is linear algebra for data science roles?

Linear algebra is critically important for data science roles. It provides the fundamental mathematical language for representing and manipulating data (vectors, matrices) and underlies a vast number of data analysis and machine learning algorithms. Without a solid grasp of linear algebra, a data scientist might be able to use pre-built library functions but will struggle to understand how they work, why they work, their limitations, and how to customize or troubleshoot them.

Key data science tasks like dimensionality reduction (PCA, SVD), recommendation systems (matrix factorization), natural language processing (word embeddings like Word2Vec), network analysis (graph Laplacians), and nearly all forms of regression and classification modeling rely heavily on linear algebraic concepts. Understanding eigenvalues/eigenvectors, matrix decompositions, vector spaces, and norms is essential for interpreting results and developing effective models.
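
As a small example of the graph Laplacian in network analysis, the number of zero eigenvalues of L = D - A equals the number of connected components. The sketch assumes NumPy and a hypothetical five-node graph with two separate groups.

```python
import numpy as np

# Hypothetical undirected graph: a triangle {0, 1, 2} and a separate edge {3, 4}
edges = [(0, 1), (1, 2), (0, 2), (3, 4)]
n = 5
A = np.zeros((n, n))
for i, j in edges:
    A[i, j] = A[j, i] = 1.0

L = np.diag(A.sum(axis=1)) - A            # graph Laplacian L = D - A
eigvals = np.linalg.eigvalsh(L)           # L is symmetric, so eigvalsh applies
n_components = int(np.sum(eigvals < 1e-9))
print(n_components)                       # number of connected components
```

Spectral clustering builds on the same matrix, using the eigenvectors for the smallest eigenvalues to separate loosely connected groups.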

While the required depth can vary (a role focused purely on data visualization might need less than one developing novel ML algorithms), a strong conceptual and practical understanding of linear algebra is generally considered a prerequisite for a successful career in data science. According to the U.S. Bureau of Labor Statistics, data science roles often require strong analytical and mathematical skills.

Can I transition into AI without advanced linear algebra?

Transitioning into certain roles within Artificial Intelligence (AI) without advanced linear algebra is possible, but a solid foundational understanding is generally necessary. Roles focused more on applying existing AI tools, AI ethics, product management for AI, or data annotation might not require deep theoretical knowledge daily. However, for roles involving the development, customization, or research of AI algorithms (especially in machine learning and deep learning), linear algebra is fundamental.

Many core AI concepts, particularly in machine learning, are expressed and implemented using linear algebra. Understanding how neural networks process information via matrix multiplications, how dimensionality reduction works, or how optimization algorithms navigate vector spaces requires familiarity with these concepts. Trying to work in core AI development without it would be like trying to write complex software without understanding data structures.
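
To see the matrix multiplications inside a neural network, here is a two-layer forward pass over a batch of inputs. It assumes NumPy, uses random weights purely for illustration (no training), and the helper `dense_layer` is hypothetical.

```python
import numpy as np

def dense_layer(X, W, b):
    """Fully connected layer with ReLU; each row of X is one input example."""
    return np.maximum(0.0, X @ W + b)   # matrix multiply, add bias (broadcast), then ReLU

rng = np.random.default_rng(0)
X = rng.normal(size=(4, 3))                       # batch of 4 examples, 3 features each
W1, b1 = rng.normal(size=(3, 5)), np.zeros(5)     # hidden layer: 3 inputs -> 5 units
W2, b2 = rng.normal(size=(5, 2)), np.zeros(2)     # output layer: 5 units -> 2 outputs

h = dense_layer(X, W1, b1)      # hidden activations, shape (4, 5)
out = h @ W2 + b2               # linear output layer, shape (4, 2)
print(h.shape, out.shape)
```

Processing the whole batch as one matrix product, instead of looping over examples, is what lets GPUs and optimized libraries run these models efficiently.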

For those aiming for technical AI roles, investing time in learning foundational linear algebra is highly recommended. It doesn't necessarily mean completing multiple advanced graduate-level courses, but mastering the content of a standard undergraduate sequence is crucial. Starting with foundational concepts and gradually deepening knowledge as needed for specific AI applications is a viable path for career transitioners. Persistence and targeted learning are key.

What industries value linear algebra expertise most?

Expertise in linear algebra is highly valued across a wide range of industries that rely on quantitative analysis, computation, and modeling. The technology sector is a major employer, needing linear algebra for software development (graphics, simulation, scientific computing), data science, machine learning, and AI research.

The finance industry heavily recruits individuals with strong mathematical skills, including linear algebra, for quantitative analysis (quant roles), algorithmic trading, risk management, and portfolio optimization. Engineering disciplines (aerospace, mechanical, electrical, civil) utilize linear algebra extensively for design, simulation (e.g., finite element analysis), control systems, and signal processing.

Consulting firms, particularly those specializing in data analytics, operations research, or management science, value linear algebra skills for solving client problems related to optimization, forecasting, and data-driven strategy. Research and development sectors, both in academia and industry (e.g., pharmaceuticals, energy, materials science), require linear algebra for modeling complex systems and analyzing experimental data. Government agencies involved in defense, intelligence, economics, and scientific research also employ individuals with these skills.

How does linear algebra salary potential compare to other skills?

Linear algebra itself isn't typically listed as a standalone skill dictating salary; rather, it's a foundational mathematical competency that enables high-paying roles. Salaries are more directly tied to the specific job title and industry (e.g., Machine Learning Engineer, Quantitative Analyst, Software Engineer) where linear algebra is applied, combined with other skills like programming, statistics, domain expertise, and experience level.

Roles that heavily rely on linear algebra, such as those in data science, AI/ML, quantitative finance, and certain specialized software engineering fields, are often among the higher-paying technical positions. This is because the ability to understand and apply these mathematical concepts is crucial for solving complex, high-value problems in these domains. According to salary surveys and job market data (like those from Robert Half or the BLS), careers requiring strong quantitative backgrounds generally offer competitive compensation.

Therefore, while learning linear algebra alone doesn't guarantee a specific salary, mastering it is often a necessary step towards qualifying for lucrative careers where analytical and computational skills are paramount. The salary potential comes from effectively combining linear algebra knowledge with other technical skills and applying them within a high-demand field.

Is linear algebra still relevant with AI automation?

Yes, linear algebra remains highly relevant, perhaps even more so, despite advancements in AI automation. While AI tools and libraries can automate the execution of many linear algebraic computations (e.g., multiplying matrices, finding eigenvalues), a fundamental understanding of the underlying principles is crucial for several reasons.

First, designing, developing, and improving AI algorithms often requires deep knowledge of the mathematical foundations, including linear algebra. Researchers and engineers creating new AI models or customizing existing ones need to understand how the components work at a mathematical level. Second, interpreting the results and behavior of AI models often requires understanding the linear algebraic transformations happening internally. Debugging issues or diagnosing unexpected model behavior frequently involves tracing back through the mathematical operations.

Third, selecting the appropriate AI tool or algorithm for a specific task, and configuring its parameters effectively, often benefits from understanding the assumptions and mathematical properties associated with different techniques, many of which are rooted in linear algebra. Blindly applying automated tools without understanding can lead to suboptimal results or misinterpretations. Therefore, while AI automates the computation, the understanding and strategic application provided by linear algebra knowledge remain essential human skills in the age of AI.

Linear algebra is more than just a collection of techniques for solving equations; it's a fundamental way of thinking about relationships and transformations in multidimensional spaces. Its concepts provide the bedrock for numerous advancements in science, technology, and engineering. Whether you aim to delve into data science, pioneer new algorithms, design complex systems, or simply gain a deeper understanding of the quantitative world, mastering linear algebra offers invaluable tools and perspectives. The journey requires effort and practice, but the ability to wield its powerful concepts opens doors to fascinating challenges and rewarding opportunities.