Service Mesh

Understanding Service Mesh: A Guide for Learners and Professionals

A service mesh is a dedicated infrastructure layer for handling service-to-service communication within a complex application, typically one built using a microservices architecture. Think of it as a specialized network designed specifically for your application's internal traffic. Its primary goal is to make these internal communications reliable, secure, and observable without requiring changes to the application code itself. This separation allows development teams to focus on business logic while the service mesh handles the complexities of network interactions.

Working with service mesh technology can be deeply engaging. It involves operating at the intersection of networking, security, and distributed systems, offering a chance to solve challenging problems that impact application performance and reliability at scale. For those fascinated by how large, modern software systems function under the hood, understanding and managing a service mesh provides a unique vantage point. Furthermore, mastering service mesh concepts opens doors to specialized roles in cloud-native infrastructure and site reliability engineering, areas experiencing significant growth and demand.

What is a Service Mesh?

Defining the Concept and Its Purpose

At its core, a service mesh is a configurable, low-latency infrastructure layer designed to handle the network communication between different parts (services) of an application. In modern software development, applications are often broken down into smaller, independent units called microservices. While this approach offers flexibility and scalability, it introduces complexity in managing how these dozens or even hundreds of services talk to each other. The service mesh steps in to manage this complexity.

The fundamental purpose is to provide operational capabilities like traffic management (e.g., routing requests, load balancing), security (e.g., mutual TLS encryption, authentication, authorization), and observability (e.g., collecting metrics, logs, and traces) in a consistent way across all services. Crucially, it achieves this by intercepting network traffic, typically using lightweight proxies deployed alongside each service instance, without embedding this logic directly into the application code.

This separation of concerns is a key benefit. Application developers can concentrate on writing business features, while platform or operations teams manage the communication infrastructure through the service mesh configuration. It standardizes how non-functional requirements related to inter-service communication are implemented and managed.

From Monoliths to Microservices: The Need Arises

To appreciate why service meshes exist, it helps to understand the evolution of application architecture. Historically, many applications were built as monoliths – large, single codebases where all functionality was tightly coupled. While simpler initially, monoliths become difficult to update, scale, and maintain as they grow. Deploying a small change required redeploying the entire application, increasing risk and slowing down development cycles.

The microservices architectural style emerged as a solution. By breaking down the monolith into smaller, independently deployable services, teams could develop, deploy, and scale parts of the application independently. This offered greater agility and resilience. However, it shifted complexity from within the codebase to the network. Managing communication, ensuring security between services, and understanding the flow of requests across potentially dozens of services became significant new challenges.

Early solutions involved embedding libraries for tasks like service discovery, retries, and circuit breaking directly into each microservice. This led to inconsistencies, language-specific implementations, and tight coupling between application logic and network concerns. The service mesh architecture evolved to extract this common networking logic into a separate, dedicated infrastructure layer, providing a more robust and manageable solution for the challenges posed by microservice communication.

Key Problems Solved by Service Meshes

Service meshes address several critical problems inherent in distributed systems, particularly those built with microservices. One major area is Traffic Management. A service mesh provides sophisticated control over how requests are routed between services. This includes capabilities like intelligent load balancing, canary releases (gradually rolling out new versions), A/B testing, traffic splitting, and fault injection for testing resilience.

Another crucial function is enhancing Security. In a distributed system, securing communication between services is paramount. A service mesh can automatically enforce mutual TLS (mTLS) encryption for all service-to-service traffic, ensuring data confidentiality and integrity. It can also manage service identity and implement fine-grained authorization policies, specifying which services are allowed to communicate with others, often without needing changes in the application code.
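
To make identity-based authorization concrete, here is a minimal Python sketch of a default-deny allow-list check. It assumes SPIFFE-style workload identities of the form `spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>`, which is the format Istio uses. The policy entries, service names, and the `is_allowed` function are hypothetical and are not any mesh's API. In a real mesh, the caller's identity comes from the peer certificate verified during the mTLS handshake, and the check runs in the receiving service's proxy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Allow callers with identity `source` to call `destination` using `methods`."""
    source: str
    destination: str
    methods: frozenset


# Hypothetical policy entries for illustration only.
POLICY = [
    Rule(source="spiffe://cluster.local/ns/shop/sa/checkout",
         destination="payments", methods=frozenset({"POST"})),
    Rule(source="spiffe://cluster.local/ns/shop/sa/frontend",
         destination="catalog", methods=frozenset({"GET"})),
]


def is_allowed(peer_identity: str, destination: str, method: str) -> bool:
    """Default deny: a request passes only if some rule explicitly matches it."""
    return any(
        rule.source == peer_identity
        and rule.destination == destination
        and method in rule.methods
        for rule in POLICY
    )


if __name__ == "__main__":
    checkout = "spiffe://cluster.local/ns/shop/sa/checkout"
    frontend = "spiffe://cluster.local/ns/shop/sa/frontend"
    print(is_allowed(checkout, "payments", "POST"))  # True
    print(is_allowed(frontend, "payments", "POST"))  # False: no matching rule
```

The default-deny shape matters: a service that is not listed cannot reach `payments` at all, so adding a new caller requires an explicit policy change rather than happening by accident.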

Finally, service meshes significantly improve Observability. Understanding what's happening within a complex web of interacting services can be difficult. A service mesh automatically generates detailed telemetry data – metrics (like request rates, latency, error rates), distributed traces (following a request as it travels through multiple services), and logs – for all traffic it manages. This provides deep insights into application performance and behavior, making it easier to diagnose and troubleshoot issues.

An Analogy: Air Traffic Control for Services

Imagine a busy airspace where each airport represents a microservice in your application. The airports exchange traffic constantly: flights carrying messages, data, and requests travel back and forth along flight routes (the network). Without any central coordination, this traffic could become chaotic, leading to congestion (latency), flights that never arrive (errors), and unauthorized aircraft entering restricted airspace (security breaches).

A service mesh acts like an air traffic control system for your application's network traffic. It doesn't build the airports or the flight routes (those are the microservices themselves and the underlying network), but it manages how traffic moves between them. It directs flights along the best routes (load balancing), ensures only authorized aircraft use certain routes (security policies), monitors traffic and reports delays or incidents (observability), and can reroute flights smoothly around problems (resilience features like retries and circuit breaking).

Just as air traffic controllers manage flights without being inside the cockpit, the service mesh manages service communication without being part of the application code itself. It provides a unified system for controlling, securing, and observing the complex interactions within a modern distributed application, making the entire system more reliable and manageable.

Core Components of a Service Mesh

The Role of Sidecar Proxies

The workhorse of the service mesh's data plane is the sidecar proxy. This is a lightweight network proxy that runs alongside each instance of a service within the application. Popular examples include Envoy (used by Istio and several other meshes) and Linkerd's Rust-based micro-proxy, linkerd2-proxy. Instead of services communicating directly over the network, all inbound and outbound traffic for a service instance flows through its dedicated sidecar proxy.

These proxies intercept the network traffic and apply the rules and policies configured in the service mesh's control plane. They handle tasks like load balancing requests across healthy upstream service instances, enforcing security policies (like mTLS encryption/decryption and authorization checks), collecting detailed telemetry data (metrics, traces, logs), and implementing resilience patterns (like retries and circuit breaking).
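
Circuit breaking can be illustrated with a small state machine. The Python sketch below is a generic consecutive-failure breaker with closed, open, and half-open states. The class name, thresholds, and cooldown are illustrative assumptions, not the configuration model of any specific mesh; Envoy, for example, splits related behavior between request/connection limits (which it calls circuit breakers) and outlier detection.

```python
import time
from typing import Callable, Optional


class CircuitBreaker:
    """Open after `failure_threshold` consecutive failures; retry after `cooldown_s`."""

    def __init__(self, failure_threshold: int = 5, cooldown_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.cooldown_s = cooldown_s
        self.clock = clock  # injectable so the behavior can be tested without sleeping
        self.consecutive_failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.cooldown_s:
            return "half-open"  # cooldown over: trial requests are allowed again
        return "open"

    def allow_request(self) -> bool:
        return self.state != "open"

    def record_success(self) -> None:
        self.consecutive_failures = 0
        self.opened_at = None  # close the circuit

    def record_failure(self) -> None:
        if self.state == "half-open":
            self.opened_at = self.clock()  # trial failed: reopen and restart the cooldown
            return
        self.consecutive_failures += 1
        if self.consecutive_failures >= self.failure_threshold:
            self.opened_at = self.clock()


if __name__ == "__main__":
    now = [0.0]
    breaker = CircuitBreaker(failure_threshold=3, cooldown_s=10.0, clock=lambda: now[0])
    for _ in range(3):
        breaker.record_failure()
    print(breaker.state)  # open
    now[0] = 10.0
    print(breaker.state)  # half-open
    breaker.record_success()
    print(breaker.state)  # closed
```

Failing fast while the circuit is open keeps callers from piling requests onto an instance that is already struggling, which is the main point of the pattern.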

By deploying proxies as sidecars (in the same execution context, like a Kubernetes Pod, but a separate container), the service mesh functionality is injected without modifying the application code. The application service simply sends requests to what it thinks is the target service's address (e.g., service-b), but the local sidecar proxy intercepts this call, applies relevant policies, and then forwards the request to the sidecar proxy of an appropriate instance of service-b.
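
The outbound half of this flow can be sketched in a few lines of Python. In this hypothetical model, the application asks for a logical name such as `service-b`, and a `Sidecar` object resolves that name to a concrete instance address, forwards the request, and records a metric. Real sidecars do this transparently at the network level (for example, by redirecting the Pod's traffic with iptables rules), so the application never calls an object like this; the class, addresses, and transport function are assumptions for illustration only.

```python
import itertools
from collections import Counter
from typing import Callable, Dict, List


class Sidecar:
    """Toy outbound path of a sidecar proxy: resolve name, forward, record a metric."""

    def __init__(self, endpoints: Dict[str, List[str]],
                 transport: Callable[[str, str], int]):
        # One round-robin iterator per logical service name (e.g. "service-b").
        self._rr = {name: itertools.cycle(addrs)
                    for name, addrs in endpoints.items() if addrs}
        self._transport = transport
        self.requests_total: Counter = Counter()  # keyed by (service, status)

    def call(self, service: str, path: str) -> int:
        if service not in self._rr:
            status = 503  # no known instance for this logical name
        else:
            address = next(self._rr[service])        # pick a concrete instance
            status = self._transport(address, path)  # the actual network hop
        self.requests_total[(service, status)] += 1
        return status


if __name__ == "__main__":
    sent = []

    def fake_transport(address: str, path: str) -> int:
        sent.append(address)  # stand-in for a real HTTP request
        return 200

    sidecar = Sidecar({"service-b": ["10.0.0.5:8080", "10.0.0.6:8080"]}, fake_transport)
    sidecar.call("service-b", "/orders")
    sidecar.call("service-b", "/orders")
    sidecar.call("service-c", "/orders")
    print(sent)  # ['10.0.0.5:8080', '10.0.0.6:8080']
    print(sidecar.requests_total)
```

Because the metric is recorded in the proxy rather than in the application, every service gets the same counters without adding any code of its own.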

Control Plane and Data Plane Architecture

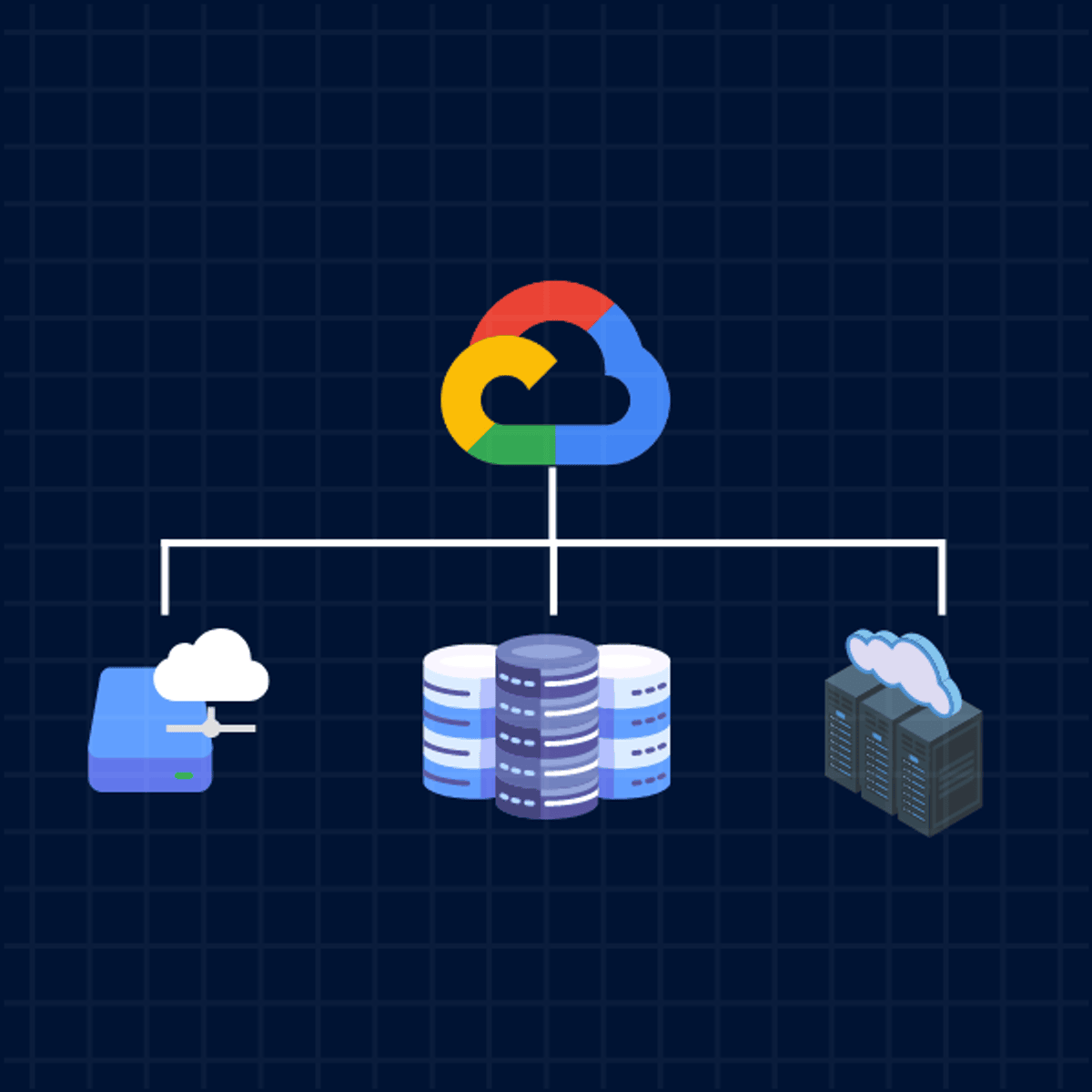

A service mesh architecture is fundamentally divided into two distinct parts: the data plane and the control plane.

The Data Plane consists of the network proxies (usually sidecars like Envoy) deployed alongside the application services. Its responsibility is to actually handle the traffic between services. It intercepts calls, applies routing rules, enforces security policies, collects telemetry, and executes resilience logic based on the configuration it receives. The data plane components directly touch every request and response packet flowing between managed services.

The Control Plane acts as the brain of the service mesh. It does not directly handle any application traffic. Instead, its role is to manage and configure all the data plane proxies to behave correctly. The control plane provides APIs for operators to define policies (e.g., traffic routing rules, security policies). It then translates these high-level policies into specific configurations understood by the proxies and distributes this configuration to them. Telemetry handling varies by implementation: some control planes collect or aggregate data from the proxies, while in many current meshes the proxies expose metrics and export traces directly to backends such as Prometheus or a tracing system, which then provide the centralized view of the mesh's behavior. Examples of control planes include Istio's Istiod or Linkerd's control plane components.

This separation allows the data plane to be highly optimized for performance and low latency, while the control plane handles the complex logic of configuration management and policy distribution, providing a central point of control for the entire mesh.
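
The division of labor can be modeled as a publish/subscribe loop: an operator submits a high-level rule to the control plane, which turns it into proxy configuration and pushes a new versioned snapshot to every connected proxy. Envoy-based meshes do this over Envoy's xDS APIs; the Python classes below are a hypothetical simplification that only shows the shape of the flow, not xDS itself.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Proxy:
    """Data plane: keeps only the latest config snapshot it has been given."""
    name: str
    version: int = 0
    routes: Dict[str, Dict[str, int]] = field(default_factory=dict)

    def apply(self, version: int, routes: Dict[str, Dict[str, int]]) -> None:
        if version > self.version:  # ignore stale or duplicate pushes
            self.version = version
            self.routes = routes


class ControlPlane:
    """Control plane: never touches requests; it validates and distributes config."""

    def __init__(self) -> None:
        self.version = 0
        self.proxies: List[Proxy] = []
        self.policies: Dict[str, Dict[str, int]] = {}

    def connect(self, proxy: Proxy) -> None:
        self.proxies.append(proxy)
        proxy.apply(self.version, dict(self.policies))  # send the current snapshot

    def set_traffic_split(self, service: str, weights: Dict[str, int]) -> None:
        if sum(weights.values()) != 100:
            raise ValueError("weights must sum to 100")
        self.policies[service] = dict(weights)
        self.version += 1
        for proxy in self.proxies:  # push the new snapshot to every proxy
            proxy.apply(self.version, dict(self.policies))


if __name__ == "__main__":
    cp = ControlPlane()
    frontend, checkout = Proxy("frontend-sidecar"), Proxy("checkout-sidecar")
    cp.connect(frontend)
    cp.connect(checkout)
    cp.set_traffic_split("reviews", {"v1": 90, "v2": 10})
    print(frontend.version, frontend.routes)  # 1 {'reviews': {'v1': 90, 'v2': 10}}
```

Validation happens once, centrally, before anything reaches the proxies; the proxies just apply the newest snapshot, which keeps the request path simple.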

Service Discovery and Load Balancing

In dynamic environments like Kubernetes, where service instances are constantly being created, destroyed, or scaled, knowing where to send requests (service discovery) and distributing those requests intelligently (load balancing) are crucial. Service meshes excel at both.

The control plane typically integrates with the underlying platform's service discovery mechanism (like Kubernetes' Services or Consul). It monitors the available instances (e.g., Pods) for each service and provides this information to the data plane proxies. When a service needs to call another service, its sidecar proxy queries the control plane (or uses configuration pushed by it) to get the current list of healthy, available instances for the target service.
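
A minimal Python sketch of the discovery side: a registry that tracks instances per service as they come and go, and hands proxies only the healthy ones. The event names and the `EndpointRegistry` class are hypothetical. In Kubernetes the equivalent information comes from watching the API server (for example, EndpointSlice objects), and real meshes may add their own health checking on top.

```python
from collections import defaultdict
from typing import Dict, List


class EndpointRegistry:
    """Tracks service instances and their health, as a control plane might."""

    def __init__(self) -> None:
        # service name -> {instance address -> healthy?}
        self._instances: Dict[str, Dict[str, bool]] = defaultdict(dict)

    def on_event(self, kind: str, service: str, address: str) -> None:
        """Apply a hypothetical platform event: added, removed, healthy, unhealthy."""
        instances = self._instances[service]
        if kind == "added":
            instances[address] = True  # this sketch assumes new instances start healthy
        elif kind == "removed":
            instances.pop(address, None)
        elif kind in ("healthy", "unhealthy") and address in instances:
            instances[address] = kind == "healthy"
        # any other event kind is ignored

    def healthy_endpoints(self, service: str) -> List[str]:
        """What a sidecar would receive for `service`: healthy addresses only."""
        return sorted(a for a, ok in self._instances.get(service, {}).items() if ok)


if __name__ == "__main__":
    reg = EndpointRegistry()
    reg.on_event("added", "service-b", "10.0.0.5:8080")
    reg.on_event("added", "service-b", "10.0.0.6:8080")
    reg.on_event("unhealthy", "service-b", "10.0.0.5:8080")
    print(reg.healthy_endpoints("service-b"))  # ['10.0.0.6:8080']
```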

Once the sidecar knows the available instances, it performs load balancing to distribute outgoing requests among them. Service meshes offer more sophisticated load balancing algorithms than simple round-robin, often including options like least-requests, weighted distribution (useful for canary releases), or ring hash (for session affinity). This ensures traffic is distributed efficiently, prevents overloading individual instances, and helps improve overall application resilience and performance.
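
Two of the algorithms named above can be sketched briefly in Python. `weighted_pick` chooses a destination in proportion to its weight, which is how a 90/10 canary split behaves on average. `least_request_pick` uses the "power of two choices" idea (sample two hosts at random and send to the one with fewer active requests), which is also how Envoy's least-request balancer behaves by default when all hosts have equal weight. The function names and data shapes are assumptions for illustration.

```python
import random
from typing import Dict, List


def weighted_pick(weights: Dict[str, int], rng: random.Random) -> str:
    """Pick a key with probability proportional to its weight (e.g. a canary split)."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def least_request_pick(active: Dict[str, int], rng: random.Random) -> str:
    """Power of two choices: sample two distinct hosts, keep the less busy one."""
    hosts: List[str] = list(active)
    if len(hosts) == 1:
        return hosts[0]
    a, b = rng.sample(hosts, 2)
    return a if active[a] <= active[b] else b


if __name__ == "__main__":
    rng = random.Random(42)
    picks = [weighted_pick({"v1": 90, "v2": 10}, rng) for _ in range(10_000)]
    print(picks.count("v2") / len(picks))  # roughly 0.10

    active = {"pod-a": 12, "pod-b": 3, "pod-c": 7}
    print(least_request_pick(active, rng))  # never the busiest pod, pod-a
```

Sampling only two hosts avoids scanning every instance on each request, yet in practice it avoids the busiest hosts far better than purely random choice.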

Understanding service discovery is fundamental. HashiCorp Consul, for example, is a popular service discovery tool that is often used alongside service mesh solutions and also includes service mesh capabilities of its own.

Observability: Tracing, Metrics, and Logging

Understanding the behavior of a distributed system is notoriously difficult. Service meshes provide powerful observability features by leveraging the strategic position of the sidecar proxies, which see all inter-service traffic.

Metrics: Sidecar proxies automatically collect detailed metrics for all traffic they handle. This includes request volume (requests per second, RPS), error rates (e.g., HTTP 5xx codes), and request latency (e.g., p50, p90, p99 latencies). These correspond to three of the four "golden signals" of monitoring (latency, traffic, errors, and saturation) and give a high-level overview of service health and performance. They are often exposed in a format that monitoring systems like Prometheus can scrape.
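
To show how these signals are derived from raw per-request data, here is a small Python sketch that computes request rate, 5xx error rate, and nearest-rank latency percentiles from a list of request records. The record format is hypothetical. Real proxies usually pre-aggregate latencies into histogram buckets and let a backend such as Prometheus estimate percentiles from them, so exact values can differ from this direct computation.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Request:
    status: int        # HTTP status code returned to the caller
    latency_ms: float  # time from request start to response completion


def percentile(sorted_values: List[float], p: float) -> float:
    """Nearest-rank percentile: smallest value with at least p% of samples <= it."""
    if not sorted_values:
        raise ValueError("no samples")
    rank = max(1, math.ceil(p * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def summarize(requests: List[Request], window_s: float) -> Dict[str, float]:
    """Rate, 5xx error rate, and latency percentiles over one time window."""
    if not requests:
        raise ValueError("no requests in window")
    latencies = sorted(r.latency_ms for r in requests)
    errors = sum(1 for r in requests if 500 <= r.status <= 599)
    return {
        "rps": len(requests) / window_s,
        "error_rate": errors / len(requests),
        "p50_ms": percentile(latencies, 50),
        "p90_ms": percentile(latencies, 90),
        "p99_ms": percentile(latencies, 99),
    }


if __name__ == "__main__":
    # 100 requests with latencies 1..100 ms over 10 s; two of them failed with 503.
    reqs = [Request(status=503 if i in (10, 20) else 200, latency_ms=float(i))
            for i in range(1, 101)]
    print(summarize(reqs, window_s=10.0))
    # {'rps': 10.0, 'error_rate': 0.02, 'p50_ms': 50.0, 'p90_ms': 90.0, 'p99_ms': 99.0}
```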

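Distributed Tracing: When a request travels through multiple services, understanding the end-to-end flow and identifying bottlenecks requires distributed tracing. Sidecar proxies can generate trace spans for each service hop and attach trace context headers to the requests they forward. However, a proxy cannot tell which outgoing call belongs to which incoming request, so applications usually still need to copy the tracing headers (such as W3C traceparent or Zipkin B3 headers) from each inbound request onto their outbound calls. The resulting traces can then be collected and visualized in tools like Jaeger or Zipkin, providing a detailed picture of a request's journey across the microservices architecture.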
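
Header propagation is the one tracing step that usually stays in application code: a proxy sees an inbound request and, later, some outbound requests, but it cannot tell which outbound call was made on behalf of which inbound one. The Python sketch below shows that copying step, assuming W3C Trace Context (`traceparent`, `tracestate`) and Zipkin B3 headers plus `x-request-id`, which are among the headers Istio's documentation asks applications to forward. The function names are hypothetical.

```python
import re
from typing import Dict, Mapping

# W3C Trace Context, version 00: "00-<32 hex trace id>-<16 hex parent id>-<2 hex flags>"
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")

# Headers copied unchanged from the inbound request to outbound calls.
FORWARDED_HEADERS = ("traceparent", "tracestate", "x-request-id",
                     "x-b3-traceid", "x-b3-spanid", "x-b3-parentspanid",
                     "x-b3-sampled", "x-b3-flags", "b3")


def outbound_trace_headers(inbound: Mapping[str, str]) -> Dict[str, str]:
    """Copy tracing headers from an inbound request onto an outbound call."""
    lowered = {k.lower(): v for k, v in inbound.items()}  # HTTP names are case-insensitive
    return {h: lowered[h] for h in FORWARDED_HEADERS if h in lowered}


def trace_id(headers: Mapping[str, str]) -> str:
    """Return the trace ID from a version-00 traceparent header, or '' if absent/invalid."""
    match = TRACEPARENT_RE.match(headers.get("traceparent", ""))
    return match.group(1) if match else ""


if __name__ == "__main__":
    inbound = {
        "Traceparent": "00-0af7651916cd43dd8448eb211c80319c-b7ad6b7169203331-01",
        "X-Request-Id": "abc-123",
        "Content-Type": "application/json",
    }
    outbound = outbound_trace_headers(inbound)
    print(outbound)
    # {'traceparent': '00-0af7651916cd43dd8448eb211c80319c-b7ad6b7169203331-01', 'x-request-id': 'abc-123'}
    print(trace_id(outbound))  # 0af7651916cd43dd8448eb211c80319c
```

Because every hop carries the same trace ID, the tracing backend can stitch the spans reported by each sidecar into one end-to-end trace.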

Logging: While application-specific logs remain important, sidecar proxies can generate access logs for all requests they process, providing a consistent format and level of detail about inter-service communication attempts, successes, and failures.
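
A consistent access-log format is what makes proxy logs comparable across services. The following Python sketch renders one request as a single JSON line. The field names are hypothetical, loosely modeled on the kinds of fields (method, path, status, duration, upstream host) that proxies such as Envoy can include in their configurable access logs.

```python
import json
from datetime import datetime, timezone


def access_log_line(method: str, path: str, status: int, duration_ms: int,
                    upstream: str, request_id: str, when: datetime) -> str:
    """Render one request as a single JSON log line with a fixed set of fields."""
    record = {
        "start_time": when.astimezone(timezone.utc).isoformat(),
        "method": method,
        "path": path,
        "status": status,
        "duration_ms": duration_ms,
        "upstream_host": upstream,
        "request_id": request_id,
    }
    return json.dumps(record, separators=(",", ":"))  # compact, one object per line


if __name__ == "__main__":
    print(access_log_line("GET", "/orders", 200, 14, "10.0.0.6:8080", "abc-123",
                          datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)))
```

One JSON object per line lets log pipelines parse every service's traffic the same way, without per-service regular expressions.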

Together, these three pillars of observability, provided largely out-of-the-box by the service mesh, give operators crucial insights into system behavior, performance bottlenecks, and error sources while greatly reducing the manual instrumentation each service needs (trace header propagation being the main exception).

Service Mesh in Modern Architectures

Role in Cloud-Native Ecosystems and Kubernetes

Service meshes have become closely associated with cloud-native architectures, particularly those orchestrated by Kubernetes. Kubernetes provides a powerful platform for deploying, scaling, and managing containerized applications, but it primarily focuses on container lifecycle management and basic network connectivity (Services, Ingress). It doesn't inherently offer the rich L7 traffic management, security, and observability features needed for complex microservice interactions.

This is where service meshes fit in. They build on Kubernetes primitives to deploy and manage their components, for example using Services for discovery and a mutating admission webhook that automatically adds the sidecar proxy to Pod specifications. The service mesh extends Kubernetes' capabilities by providing a dedicated layer for sophisticated inter-service communication control directly within the cluster. Features like mTLS, fine-grained traffic splitting for canary deployments, and automatic telemetry collection become readily available for applications running on Kubernetes without significant application changes.
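
Automatic sidecar injection is typically done by a Kubernetes mutating admission webhook that edits each new Pod spec before it is stored. The Python function below mimics that edit on a plain dictionary: if the namespace carries the label `istio-injection=enabled` (the label Istio uses for this purpose), it appends a proxy container. The container name, image, and function are placeholders. Real injectors add much more (traffic redirection setup, volumes, certificates, resource settings) and return a JSON patch rather than a modified object.

```python
import copy
from typing import Any, Dict

# Hypothetical sidecar definition; name and image are placeholders.
PROXY_CONTAINER = {"name": "mesh-proxy", "image": "example.com/mesh-proxy:1.0"}


def inject_sidecar(pod: Dict[str, Any], namespace_labels: Dict[str, str]) -> Dict[str, Any]:
    """Return a new Pod dict with a proxy container added, or `pod` itself if disabled."""
    if namespace_labels.get("istio-injection") != "enabled":
        return pod
    mutated = copy.deepcopy(pod)  # never modify the caller's object
    containers = mutated.setdefault("spec", {}).setdefault("containers", [])
    if not any(c.get("name") == PROXY_CONTAINER["name"] for c in containers):
        containers.append(copy.deepcopy(PROXY_CONTAINER))  # idempotent: inject once
    return mutated


if __name__ == "__main__":
    pod = {"metadata": {"name": "checkout-7d9f"},
           "spec": {"containers": [{"name": "app", "image": "example.com/checkout:2.3"}]}}
    injected = inject_sidecar(pod, {"istio-injection": "enabled"})
    print([c["name"] for c in injected["spec"]["containers"]])  # ['app', 'mesh-proxy']
    print([c["name"] for c in pod["spec"]["containers"]])       # ['app']
```

Because the change happens at admission time, application manifests stay free of mesh details; opting a namespace in or out is a matter of its label.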

Many service mesh implementations are designed specifically with Kubernetes in mind, making the integration relatively smooth. They often use Kubernetes Custom Resource Definitions (CRDs) for configuration, allowing operators to manage service mesh policies using familiar kubectl commands and GitOps workflows. This synergy makes service meshes a popular choice for enhancing applications deployed on Kubernetes.
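
As an example of CRD-based configuration, the Python snippet below builds an Istio `VirtualService` that sends a chosen share of traffic for a `reviews` service to subset `v1` and the rest to subset `v2`, then prints it as JSON (which `kubectl apply -f` accepts as well as YAML). The service name, namespace, and subsets are hypothetical; the subsets would also need a matching `DestinationRule`, and the `apiVersion` shown (`networking.istio.io/v1beta1`) may differ depending on the Istio release.

```python
import json
from typing import Any, Dict


def canary_virtual_service(host: str, namespace: str, stable_weight: int) -> Dict[str, Any]:
    """Build a VirtualService splitting traffic between subsets v1 (stable) and v2 (canary)."""
    if not 0 <= stable_weight <= 100:
        raise ValueError("stable_weight must be between 0 and 100")
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": f"{host}-canary", "namespace": namespace},
        "spec": {
            "hosts": [host],
            "http": [{
                "route": [
                    {"destination": {"host": host, "subset": "v1"},
                     "weight": stable_weight},
                    {"destination": {"host": host, "subset": "v2"},
                     "weight": 100 - stable_weight},
                ]
            }],
        },
    }


if __name__ == "__main__":
    manifest = canary_virtual_service("reviews", "shop", stable_weight=90)
    print(json.dumps(manifest, indent=2))
```

Shifting more traffic to the canary is then a matter of regenerating the manifest with a lower `stable_weight` and re-applying it, which fits naturally into a GitOps workflow.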

Comparison with API Gateways and Other Middleware

It's common to wonder how a service mesh differs from other network middleware, particularly API Gateways. While there can be overlapping functionalities, their primary focus and deployment model differ significantly.

An API Gateway typically sits at the edge of a system, acting as the single entry point for external client requests (from web browsers, mobile apps, or third-party systems). Its main concerns are managing north-south traffic (traffic entering or leaving the system), handling API authentication/authorization, rate limiting, request/response transformations, and routing external requests to the appropriate internal services. It focuses on exposing and protecting internal services to the outside world.

A Service Mesh, conversely, focuses primarily on managing internal, service-to-service communication within the cluster (east-west traffic). Its concerns are reliability, security, and observability for interactions between microservices. It uses distributed sidecar proxies deployed alongside each service, rather than a centralized gateway. While some service meshes offer ingress gateway functionality, their core strength lies in managing the internal network fabric.

Other middleware, like message queues or enterprise service buses (ESBs), address different communication patterns (asynchronous messaging vs. synchronous request/response) and often involve more heavyweight infrastructure or direct application integration compared to the transparent proxying approach of a service mesh.

Illustrative Use Cases in Large Systems

While avoiding specific company names, we can describe common scenarios where large organizations adopt service meshes. Consider a large e-commerce platform with hundreds of microservices handling product catalogs, inventory, user accounts, recommendations, checkout, and payments. Ensuring reliable and secure communication between these services during peak shopping seasons is critical. A service mesh can provide automatic retries for transient network issues between the checkout and payment services, enforce strict security policies ensuring only authorized services can access sensitive user data, and provide detailed tracing to quickly diagnose latency issues in the recommendation engine.
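
The automatic retries mentioned above are commonly implemented as exponential backoff with jitter. In a mesh this logic lives in the proxy and is set through configuration (for example, a retry count and per-try timeout) rather than written by hand. The Python function below is a generic illustration with hypothetical names that only retries on a small set of statuses assumed to be transient. Retrying non-idempotent calls such as payment submissions needs extra care, for example idempotency keys.

```python
import random
import time
from typing import Callable, Optional

RETRYABLE_STATUSES = frozenset({502, 503, 504})  # assumed set of "transient" failures


def call_with_retries(send: Callable[[], int], max_attempts: int = 3,
                      base_delay_s: float = 0.025, max_delay_s: float = 1.0,
                      sleep: Callable[[float], None] = time.sleep,
                      rng: Optional[random.Random] = None) -> int:
    """Call `send` until it returns a non-retryable status or attempts run out."""
    rng = rng or random.Random()
    status = send()
    for attempt in range(1, max_attempts):
        if status not in RETRYABLE_STATUSES:
            break
        # Exponential cap (base, 2*base, 4*base, ...) with "full jitter":
        # wait a uniformly random time between 0 and the cap.
        cap = min(max_delay_s, base_delay_s * 2 ** (attempt - 1))
        sleep(rng.uniform(0, cap))
        status = send()
    return status


if __name__ == "__main__":
    responses = iter([503, 503, 200])
    delays = []
    status = call_with_retries(lambda: next(responses), max_attempts=3, sleep=delays.append)
    print(status)       # 200
    print(len(delays))  # 2
```

The jitter spreads retries from many callers over time, so a brief outage in one service does not turn into synchronized waves of retried traffic.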

Another example is a financial institution migrating from monolithic systems to microservices for trading applications. Here, low latency and high security are paramount. A service mesh can enforce mTLS for all internal traffic, provide fine-grained traffic shifting capabilities to safely roll out new algorithm versions to a small percentage of traffic initially (canary deployment), and offer detailed metrics to monitor the performance impact of changes in real-time.

Telecommunication companies managing vast networks and services also leverage service meshes. They might use them to manage communication between the control plane network functions of a 5G core, which interact through service-based HTTP APIs, ensuring resilience and providing observability into complex signaling flows. These examples highlight how service meshes address the operational complexities arising from large-scale, distributed systems across various industries.

Weighing Costs and Benefits for Enterprises

Adopting a service mesh is a significant decision for an enterprise, involving both substantial benefits and potential costs. On the benefit side, a service mesh offers standardized and improved security (mTLS, authorization), enhanced observability (metrics, traces, logs), advanced traffic management capabilities (canary releases, fault injection), and increased developer productivity by offloading common networking concerns from application code.

However, introducing a service mesh also brings costs and complexity. There's the operational overhead of deploying, managing, and upgrading the service mesh components (control plane and proxies) themselves. Sidecar proxies consume additional CPU and memory resources for each service instance, potentially increasing infrastructure costs. There is also a significant learning curve for development and operations teams to understand service mesh concepts, configuration, and troubleshooting. Performance overhead, while often minimal for well-tuned meshes, is another consideration, especially for latency-sensitive applications.
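
The resource and latency costs scale in ways that are easy to estimate. Every instance gets its own proxy, so CPU and memory overhead grow with the number of Pods, and every mesh-managed call between two services passes through two proxies (the caller's outbound sidecar and the callee's inbound sidecar). The Python sketch below turns that into arithmetic. All per-proxy figures in the example are hypothetical placeholders; real values should come from measuring your own mesh under representative load.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class SidecarCost:
    cpu_millicores: float      # steady-state CPU per proxy (measure, don't assume)
    memory_mib: float          # resident memory per proxy
    latency_ms_per_hop: float  # latency added each time a request crosses one proxy


def fleet_overhead(pods: int, cost: SidecarCost) -> Dict[str, float]:
    """Total extra CPU (cores) and memory (GiB) when every Pod runs one sidecar."""
    return {"cpu_cores": pods * cost.cpu_millicores / 1000,
            "memory_gib": pods * cost.memory_mib / 1024}


def added_latency_ms(call_depth: int, cost: SidecarCost) -> float:
    """A serial chain of `call_depth` service-to-service calls crosses 2 proxies per call."""
    return 2 * call_depth * cost.latency_ms_per_hop


if __name__ == "__main__":
    cost = SidecarCost(cpu_millicores=50, memory_mib=64, latency_ms_per_hop=0.5)  # hypothetical
    print(fleet_overhead(400, cost))   # {'cpu_cores': 20.0, 'memory_gib': 25.0}
    print(added_latency_ms(5, cost))   # 5.0
```

The per-Pod multiplier is why the trade-off looks so different for a handful of services than for hundreds, and why deep call chains are where latency overhead shows up first.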

The decision often hinges on the scale and complexity of the microservices architecture. For applications with only a few services, the overhead might outweigh the benefits. But for large, complex systems with dozens or hundreds of services, the operational consistency, security enhancements, and observability provided by a service mesh can become invaluable, justifying the investment. Organizations should carefully evaluate their specific needs and operational maturity before adoption. According to industry analysts like Gartner, understanding this trade-off is key to successful implementation.

Formal Education Pathways

Relevant University Coursework

A strong foundation in computer science or computer engineering provides the necessary background for working with complex systems like service meshes. University coursework in several areas is particularly relevant. Core subjects include Data Structures and Algorithms, Operating Systems, and Computer Networking, as these provide fundamental knowledge about how software interacts with hardware and networks.

More specialized courses in Distributed Systems are highly valuable. These often cover topics like consensus algorithms, replication, consistency models, inter-process communication, and failure detection – concepts that are directly applicable to understanding how service meshes operate and the problems they solve. Courses covering Cloud Computing and Virtualization also provide essential context, as service meshes are frequently deployed in cloud environments, often using containerization technologies.

Additionally, courses in Software Engineering, particularly those focusing on software architecture patterns (like microservices) and system design, help build an understanding of the broader context in which service meshes are used. Security-focused courses covering network security, cryptography (relevant for mTLS), and access control are also beneficial given the security functions provided by service meshes.

Research Opportunities in Distributed Systems

For those inclined towards deeper academic exploration, the field of distributed systems offers numerous research opportunities related to service mesh technology. Universities with strong systems research groups often investigate challenges that service meshes aim to address or introduce.

Research areas might include developing more efficient or intelligent traffic routing algorithms, exploring novel approaches to security policy enforcement in dynamic environments, or designing lower-overhead mechanisms for observability data collection. Performance analysis and optimization of service mesh data planes (the proxies) is another active area, focusing on minimizing latency and resource consumption.

Further research might explore the formal verification of service mesh policies, techniques for automating service mesh configuration and management (potentially using AI/ML), or extending service mesh concepts to new domains like edge computing or serverless platforms. Contributing to research in these areas often involves building prototypes, running large-scale simulations or experiments, and publishing findings in academic conferences and journals.

Advanced Studies and Contributions

At the PhD level, researchers can make significant contributions to the evolution of service mesh technology. This could involve fundamental work on the underlying networking protocols, developing new architectural patterns for control planes or data planes, or creating novel security models tailored for service mesh environments.

PhD research might focus on theoretical underpinnings, such as analyzing the performance guarantees of different load balancing strategies under various failure conditions, or developing formal methods to prove the correctness of complex traffic routing rules. It could also involve highly practical work, such as designing and implementing extensions to existing open-source service meshes or even creating entirely new service mesh systems with unique capabilities.

Contributions at this level often push the boundaries of what's possible, influencing the direction of industry standards and the features incorporated into next-generation service mesh products. Graduates with PhDs in relevant areas are highly sought after for roles in research labs, advanced technology groups within large companies, and faculty positions at universities.

Certifications and Focused Workshops

While a formal degree provides a broad foundation, specific certifications and workshops can offer focused, practical skills highly relevant to service mesh roles. Vendor-neutral certifications related to cloud-native technologies, such as those offered by the Cloud Native Computing Foundation (CNCF) around Kubernetes (like CKA, CKAD, CKS), are often prerequisites or highly valued, as service meshes are commonly deployed on Kubernetes.

Specific service mesh implementations like Istio or Linkerd may have associated training programs or workshops offered by the projects themselves, cloud providers, or third-party training companies. These often include hands-on labs focused on installation, configuration, and operation. Cloud provider certifications (e.g., AWS Certified Solutions Architect, Google Cloud Professional Cloud Architect, Microsoft Certified: Azure Solutions Architect Expert) often include modules covering networking, security, and container orchestration, which are relevant context.

Attending workshops at industry conferences focused on cloud-native technologies, DevOps, or SRE can also be a valuable way to gain practical exposure and learn about best practices and emerging trends directly from practitioners and project maintainers. While certifications alone don't guarantee expertise, they can demonstrate a specific level of knowledge and commitment to potential employers.

Self-Directed Learning and Online Resources

Hands-On Experience with Open Source Projects

One of the most effective ways to learn about service meshes is through direct, hands-on experience with leading open-source projects. Istio and Linkerd are two prominent examples. Both have extensive documentation, tutorials, and active communities.

Start by following their official "Getting Started" guides. These typically walk you through installing the service mesh onto a local or cloud-based Kubernetes cluster and deploying a sample application. Experimenting with core features like traffic shifting (e.g., setting up a canary release), enforcing mutual TLS, or visualizing traffic using the built-in dashboards provides invaluable practical understanding.

Don't just follow the steps; try to understand why each configuration change has the effect it does. Break things intentionally to see how the system reacts and how to troubleshoot common issues. Contributing back to these projects, even with small documentation improvements or bug reports, can deepen your understanding and build your profile within the community.

Setting Up Your Own Lab Environment

Reading documentation is helpful, but practical experimentation requires a lab environment. Since most service meshes run on Kubernetes, setting up a local Kubernetes cluster is a common starting point. Tools like Minikube, Kind (Kubernetes in Docker), or K3s let you run a lightweight Kubernetes cluster, often a single node, on your laptop or a virtual machine.

Once you have a local cluster, you can install a service mesh like Istio or Linkerd and deploy sample applications (often provided by the service mesh projects) to experiment with. Alternatively, major cloud providers (AWS, Google Cloud, Azure) offer managed Kubernetes services (EKS, GKE, AKS), often with trial credits for new users, allowing you to build a more realistic, albeit potentially more complex, cloud-based lab environment.

Having your own environment allows you to freely experiment, test configurations, deploy different applications, and simulate failure scenarios without impacting production systems. This hands-on tinkering is crucial for building intuition and practical skills. OpenCourser's IT & Networking and Cloud Computing categories offer courses that can help you set up these environments.

Leveraging Community Forums and Documentation

The official documentation for service mesh projects like Istio and Linkerd is typically comprehensive and should be your primary resource. It includes architectural overviews, installation guides, task-based tutorials, API references, and operational guides. Spend time reading through these materials to understand the concepts and available features.

Beyond the official docs, the community is a valuable resource. Most open-source service mesh projects have active community forums, mailing lists, or chat channels (like Slack or Discord). These are excellent places to ask questions when you get stuck, learn from others' experiences, and stay updated on new developments. Observing discussions and solutions can provide insights into common challenges and best practices.

Blogs maintained by the project teams, contributors, or companies heavily invested in service mesh technology often contain deep dives into specific features, performance tuning tips, or real-world case studies. Following these resources helps bridge the gap between documentation and practical application. Remember to use OpenCourser to search for courses and books that might cover specific aspects you encounter.

Balancing Theory and Practice

Effective self-directed learning involves balancing theoretical understanding with practical experimentation. Simply following tutorials without grasping the underlying concepts limits your ability to apply the knowledge in different contexts or troubleshoot effectively. Conversely, only reading theory without hands-on practice makes it difficult to appreciate the practical implications and operational challenges.

Adopt a cycle of learning: Read about a concept (e.g., circuit breaking) in the documentation or a book. Then, implement it in your lab environment using a sample application. Observe its behavior, tweak the configuration parameters, and see how the system responds. Try to predict the outcome before making a change. When things don't work as expected, dive back into the documentation or community forums to understand why.

Consider setting specific learning goals or mini-projects, such as "Implement mTLS for all services in the sample app" or "Set up a canary release for the frontend service." This structured approach provides direction and ensures you cover key functionalities. Document your experiments and findings; writing down what you observed and why forces you to explain each concept clearly, which is a reliable test of your understanding.

Career Opportunities and Progression

Entry-Level Roles and Exposure

Directly entering a role solely focused on "Service Mesh Engineer" might be challenging without prior experience. However, several entry-level positions provide excellent exposure to service mesh technologies and the surrounding ecosystem. Roles in DevOps or Site Reliability Engineering (SRE) often involve managing the infrastructure where service meshes run, typically Kubernetes clusters.

Junior Cloud Engineers or Cloud Associates working with platforms like AWS, Azure, or Google Cloud may encounter managed service mesh offerings (like AWS App Mesh, the Istio-based service mesh add-on for Azure Kubernetes Service, or Anthos Service Mesh) or help deploy open-source solutions onto managed Kubernetes services. Even entry-level Software Engineers working on microservices might interact with a service mesh, consuming its observability data or configuring basic traffic rules for their services, especially within platform-focused teams.

These initial roles provide opportunities to understand the operational context, learn Kubernetes fundamentals, and gradually gain hands-on experience with service mesh concepts and tools under the guidance of more senior engineers. Focus on building strong foundational skills in Linux, networking, scripting, containerization (Docker), and Kubernetes.

Mid-Career Specialization Paths

With foundational experience in cloud-native environments and perhaps initial exposure to service meshes, several specialization paths open up. Engineers can choose to deepen their expertise in specific aspects of service mesh functionality.

Specializing in Security involves leveraging the service mesh for enforcing robust security postures, managing mTLS certificate lifecycles, defining fine-grained authorization policies (e.g., using Open Policy Agent integration), and ensuring compliance within the mesh. This path often aligns with roles like Security Engineer or Cloud Security Specialist.
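Much of mesh security rests on workload identity: once mTLS has verified who the caller is, authorization rules can be written against that identity rather than against IP addresses. Istio, for instance, issues identities of the form `spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>`. The sketch below shows the idea of identity-based, default-deny authorization. The `AllowRule` type, `parse_spiffe_id`, and `is_allowed` are hypothetical simplifications, not Istio's `AuthorizationPolicy` engine or Open Policy Agent.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Iterable


@dataclass(frozen=True)
class AllowRule:
    """Hypothetical rule: which caller identity may use which methods under which path prefix."""
    source: str               # SPIFFE ID of the caller, or "*" for any authenticated caller
    methods: FrozenSet[str]   # e.g. frozenset({"GET"})
    path_prefix: str          # e.g. "/api/"


def parse_spiffe_id(spiffe_id: str) -> Dict[str, str]:
    """Split an Istio-style SPIFFE ID: spiffe://<trust-domain>/ns/<ns>/sa/<sa>."""
    prefix = "spiffe://"
    if not spiffe_id.startswith(prefix):
        raise ValueError(f"not a SPIFFE ID: {spiffe_id}")
    trust_domain, _, path = spiffe_id[len(prefix):].partition("/")
    parts = path.split("/")
    if len(parts) != 4 or parts[0] != "ns" or parts[2] != "sa":
        raise ValueError(f"unexpected SPIFFE path: {path}")
    return {"trust_domain": trust_domain, "namespace": parts[1], "service_account": parts[3]}


def is_allowed(caller: str, method: str, path: str, rules: Iterable[AllowRule]) -> bool:
    """Default-deny: allow only if some rule matches the mTLS-verified caller identity."""
    try:
        parse_spiffe_id(caller)
    except ValueError:
        return False  # malformed identity: deny
    return any(
        rule.source in ("*", caller) and method in rule.methods and path.startswith(rule.path_prefix)
        for rule in rules
    )


rules = [AllowRule("spiffe://cluster.local/ns/shop/sa/frontend", frozenset({"GET"}), "/api/")]
print(is_allowed("spiffe://cluster.local/ns/shop/sa/frontend", "GET", "/api/cart", rules))  # True
print(is_allowed("spiffe://cluster.local/ns/shop/sa/batch", "GET", "/api/cart", rules))     # False
```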

Focusing on Observability means becoming an expert in configuring and utilizing the telemetry data (metrics, traces, logs) generated by the mesh. This involves integrating the mesh with monitoring and logging platforms (Prometheus, Grafana, ELK stack, Datadog), building dashboards, setting up alerts, and using distributed tracing to optimize performance and troubleshoot complex issues. This aligns well with SRE roles.
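Dashboards and alerts built on mesh telemetry usually start from a few derived signals: request rate, error rate, and tail latency. Istio, for example, exports standard metrics such as `istio_requests_total` and `istio_request_duration_milliseconds`, and these signals are normally computed in Prometheus queries. The sketch below instead computes them directly from a list of per-request records, purely to show the arithmetic. The `RequestRecord` schema, counting only 5xx responses as errors, and the nearest-rank percentile method are assumptions.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RequestRecord:
    """One request as a sidecar might report it (simplified, hypothetical schema)."""
    status: int
    latency_ms: float


def percentile(values: List[float], p: float) -> float:
    """Nearest-rank percentile for p in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def golden_signals(records: List[RequestRecord], window_s: float) -> Dict[str, float]:
    """Request rate, 5xx error rate, and p99 latency over one time window."""
    if not records:
        raise ValueError("no requests in window")
    errors = sum(1 for r in records if r.status >= 500)  # assumption: only 5xx count as errors
    return {
        "rps": len(records) / window_s,
        "error_rate": errors / len(records),
        "p99_ms": percentile([r.latency_ms for r in records], 99),
    }


# 100 requests over 10 s with latencies 1..100 ms, two of which returned 503.
records = [RequestRecord(status=503 if i in (7, 42) else 200, latency_ms=float(i))
           for i in range(1, 101)]
print(golden_signals(records, window_s=10.0))
# {'rps': 10.0, 'error_rate': 0.02, 'p99_ms': 99.0}
```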

Another path involves specializing in Traffic Management and Resilience, becoming adept at configuring complex routing rules, implementing advanced deployment strategies (blue/green, canary), tuning resilience features like retries and circuit breakers, and potentially contributing to performance optimization of the data plane proxies.
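A canary release boils down to a weighted traffic split between a stable version and a new one, with the canary's share raised step by step while its metrics stay healthy. The sketch below models the split as an independent weighted random choice per request, which is an assumption about selection behavior rather than a description of any specific proxy. The version names and the 90/10 weights are hypothetical.

```python
import random
from collections import Counter
from typing import Dict


def pick_backend(weights: Dict[str, int], rng: random.Random) -> str:
    """Choose a backend version with probability proportional to its weight."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


# Hypothetical canary: 90% of frontend traffic to v1, 10% to v2.
weights = {"frontend-v1": 90, "frontend-v2": 10}
rng = random.Random(42)  # seeded so the run is reproducible
counts = Counter(pick_backend(weights, rng) for _ in range(10_000))
print(counts)  # roughly 9,000 requests to v1 and 1,000 to v2
```

Because selection is per request, the observed split is statistical rather than exact, and a single user may see both versions. When that matters, meshes typically offer header- or cookie-based routing so that specific users are pinned to the canary.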


Leadership Roles in Architecture and SRE

Experienced professionals with deep expertise in service meshes and related cloud-native technologies often progress into leadership roles. As a Cloud Architect or Solutions Architect, they might be responsible for designing large-scale distributed systems, deciding whether and how to incorporate a service mesh into the overall architecture, evaluating different service mesh options, and setting technical strategy.

Within Site Reliability Engineering (SRE), senior engineers or technical leads often take ownership of the service mesh platform itself. This involves defining best practices for its usage across the organization, managing its lifecycle (upgrades, patching), ensuring its reliability and performance, and providing guidance and support to application teams consuming the mesh.

These leadership roles require not only deep technical knowledge of service meshes and Kubernetes but also strong communication skills, the ability to make strategic decisions considering trade-offs (like complexity vs. benefit), and experience mentoring junior engineers. They play a critical role in ensuring the successful adoption and operation of service mesh technology within an organization.


Freelance and Consulting Opportunities

As service mesh adoption grows, so does the demand for specialized expertise, creating opportunities for freelance and consulting work. Organizations embarking on their service mesh journey often seek external help for initial evaluation, design, implementation, and training.

Consultants might assist companies in choosing the right service mesh for their needs, designing the integration with their existing Kubernetes environment, migrating applications onto the mesh, and establishing operational best practices. They might also provide workshops to upskill internal teams or help troubleshoot complex issues related to performance or security within the mesh.

Freelancers with deep expertise in specific service meshes like Istio or Linkerd, or particular aspects like security hardening or performance tuning, can find project-based work helping organizations optimize their deployments. Success in these roles requires not only strong technical skills but also excellent communication, problem-solving abilities, and the capacity to quickly understand diverse client environments and requirements.

Challenges and Limitations of Service Meshes

Complexity vs. Benefit Trade-offs

Perhaps the most significant challenge associated with service meshes is their inherent complexity. Introducing a service mesh adds several new components (control plane, sidecar proxies) that need to be deployed, managed, configured, and monitored. Understanding the interactions between the mesh components, the underlying orchestrator (like Kubernetes), and the applications themselves requires significant expertise.

The configuration options for traffic routing, security policies, and telemetry can be extensive and nuanced, leading to a steep learning curve for both operations and development teams. Debugging issues can also become more complex, as problems might originate in the application, the sidecar proxy, the control plane, or the interactions between them.

Organizations must carefully weigh this added complexity against the benefits provided. For simpler applications or teams with limited operational capacity, the overhead of managing a service mesh might not be justified. The benefits are most pronounced in large, complex microservice environments where the standardization and advanced features offered by the mesh provide substantial value in managing scale and heterogeneity.

Performance Overhead Concerns

Injecting a sidecar proxy into the network path of every service instance inevitably introduces some performance overhead. Each service-to-service request, and its response, passes through two additional proxy hops (the caller's sidecar and the receiver's sidecar). The latency added per hop is usually small, often around a millisecond or less depending on load, configuration, and enabled features, but it accumulates for requests that traverse many services in sequence.
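The accumulation is simple arithmetic: in a linear chain of N services there are N - 1 service-to-service calls, and each call crosses two sidecars. The sketch below assumes a sequential chain with a uniform per-hop cost; it ignores ingress gateways, parallel fan-out, and variance. The 0.5 ms per-hop figure is a placeholder, not a measurement of any mesh.

```python
def added_mesh_latency_ms(services_in_chain: int, per_hop_ms: float) -> float:
    """Extra latency from sidecars for a linear call chain A -> B -> ... (simplified model).

    Each service-to-service call crosses two proxies (the caller's outbound sidecar and
    the callee's inbound sidecar), and a chain of N services makes N - 1 such calls.
    """
    if services_in_chain < 1:
        raise ValueError("need at least one service")
    calls = services_in_chain - 1
    return calls * 2 * per_hop_ms


print(added_mesh_latency_ms(services_in_chain=5, per_hop_ms=0.5))  # 4.0
```

Even a sub-millisecond per-hop cost becomes a few milliseconds across a deep call chain, which is why measuring end-to-end latency on your own request paths matters more than per-proxy benchmarks.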

Furthermore, the sidecar proxies themselves consume CPU and memory resources on the nodes where they run. While modern proxies like Envoy are highly optimized, this resource consumption can be significant across a large cluster, potentially increasing infrastructure costs. The control plane also consumes resources, although it doesn't sit in the request path.

While vendors continuously work to minimize this overhead, performance remains a critical consideration, especially for latency-sensitive applications. Careful performance testing and tuning are often required after implementing a service mesh to ensure it meets application requirements. Some newer approaches, such as Istio's ambient mode and other sidecar-less models, replace per-pod sidecars with shared proxies (for example, one per node) to reduce the overall resource footprint, though they introduce different trade-offs.
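The resource argument for sidecar-less designs comes down to proxy counts: a sidecar model runs one proxy per pod, while a node-level model runs one shared proxy per node. The sketch below compares total proxy memory under that assumption. The memory figures are placeholder inputs, not measurements. The model also omits any extra proxies a design adds; in Istio's ambient mode, for example, optional waypoint proxies handle Layer 7 features on top of the per-node ztunnel, so the sketch understates the total when waypoints are deployed.

```python
from typing import Dict


def proxy_footprint(pods: int, nodes: int, sidecar_mib: float,
                    node_proxy_mib: float) -> Dict[str, float]:
    """Compare total proxy memory: one sidecar per pod vs one shared proxy per node.

    The per-proxy memory figures are hypothetical inputs; real values depend on the
    mesh, its configuration, and traffic.
    """
    return {
        "sidecar_total_mib": pods * sidecar_mib,
        "node_proxy_total_mib": nodes * node_proxy_mib,
    }


print(proxy_footprint(pods=2000, nodes=50, sidecar_mib=50.0, node_proxy_mib=200.0))
# {'sidecar_total_mib': 100000.0, 'node_proxy_total_mib': 10000.0}
```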

Vendor Lock-in Risks

While many popular service meshes are open-source (Istio, Linkerd, Kuma), relying heavily on their specific features and APIs can create a form of vendor or project lock-in. Migrating from one service mesh to another, or removing a service mesh entirely, can be a complex and costly undertaking if application deployment strategies or operational tooling have become tightly coupled to its specific implementation or configuration model (e.g., custom resource definitions).

Managed service mesh offerings from cloud providers, while simplifying operations, can further increase lock-in to that provider's ecosystem. The Service Mesh Interface (SMI) set out to reduce lock-in by defining a standard set of APIs for common service mesh functionality, so that tools could work with different mesh implementations through a common interface. SMI was archived by the CNCF in 2023, however, and much of that standardization effort has since moved to the GAMMA initiative of the Kubernetes Gateway API, which applies Gateway API routing resources to in-mesh traffic. Advanced features still tend to remain specific to individual mesh implementations.

Organizations should be mindful of this potential lock-in when adopting a service mesh, favoring standard interfaces where possible and designing their integrations to minimize tight coupling with implementation-specific details.
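One way to limit coupling is to have internal tooling target a small interface of your own and isolate mesh-specific resource formats behind it. The sketch below renders the same canary split either as an Istio `VirtualService` or as a Kubernetes Gateway API `HTTPRoute` attached to a Service (the GAMMA pattern for in-mesh routing). `TrafficSplitRenderer`, `IstioRenderer`, `GatewayApiRenderer`, and `canary` are hypothetical names. The manifests are trimmed to the essentials and assume an existing Istio `DestinationRule` that defines the subsets, or one Kubernetes Service per version named `<service>-<version>` on port 80; check both against the current Istio and Gateway API references before relying on them.

```python
from typing import Dict, Protocol


class TrafficSplitRenderer(Protocol):
    """Internal interface that tooling codes against instead of a mesh-specific resource."""

    def render(self, service: str, weights: Dict[str, int]) -> dict: ...


class IstioRenderer:
    """Istio VirtualService routing to subsets (assumes a DestinationRule defines them)."""

    def render(self, service: str, weights: Dict[str, int]) -> dict:
        return {
            "apiVersion": "networking.istio.io/v1beta1",
            "kind": "VirtualService",
            "metadata": {"name": service},
            "spec": {
                "hosts": [service],
                "http": [{"route": [
                    {"destination": {"host": service, "subset": subset}, "weight": w}
                    for subset, w in weights.items()
                ]}],
            },
        }


class GatewayApiRenderer:
    """Gateway API HTTPRoute attached to a Service (assumes Services named <service>-<version>)."""

    def render(self, service: str, weights: Dict[str, int]) -> dict:
        return {
            "apiVersion": "gateway.networking.k8s.io/v1",
            "kind": "HTTPRoute",
            "metadata": {"name": service},
            "spec": {
                "parentRefs": [{"group": "", "kind": "Service", "name": service}],
                "rules": [{"backendRefs": [
                    {"name": f"{service}-{version}", "port": 80, "weight": w}
                    for version, w in weights.items()
                ]}],
            },
        }


def canary(renderer: TrafficSplitRenderer, service: str, canary_pct: int) -> dict:
    """Mesh-agnostic helper: only the renderer knows which mesh API is in use."""
    return renderer.render(service, {"v1": 100 - canary_pct, "v2": canary_pct})


print(canary(GatewayApiRenderer(), "reviews", 10)["spec"]["rules"][0]["backendRefs"])
```

Switching meshes then means writing one new renderer rather than rewriting every pipeline that performs canary releases.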

Learning Curve for Teams

Successfully adopting and operating a service mesh requires a significant investment in team education and training. Developers need to understand how the mesh affects their services, how to leverage its observability features for debugging, and potentially how to configure basic traffic rules relevant to their applications.

Operations and SRE teams bear the primary responsibility for installing, managing, upgrading, and troubleshooting the service mesh infrastructure itself. They need deep expertise in the chosen service mesh, Kubernetes (or the relevant orchestrator), networking concepts, and security principles. Understanding the complex interactions and potential failure modes requires dedicated learning time and hands-on experience.

This learning curve can slow down initial adoption and requires ongoing effort to keep skills current as the technology evolves. Organizations need to factor in the cost and time associated with training and potentially hiring specialized personnel when considering a service mesh implementation. Providing adequate resources, documentation, and internal support is crucial for overcoming this challenge.

Future Trends in Service Mesh Technology

Convergence with AIOps

The rich telemetry data generated by service meshes (metrics, traces, logs) provides fertile ground for Artificial Intelligence for IT Operations (AIOps). Future trends suggest a tighter integration where AI/ML algorithms analyze mesh data to automatically detect anomalies, predict potential failures, perform root cause analysis, and even suggest or automate remediation actions.

Imagine a system that automatically adjusts traffic routing based on predicted latency spikes or proactively scales services based on patterns observed in mesh metrics. AIOps could also optimize security policies by identifying unusual communication patterns or automatically fine-tune resilience settings like retry budgets based on observed network conditions.

This convergence aims to move beyond passive observability towards proactive, intelligent automation of network operations within the microservices environment, helping manage the increasing complexity of large-scale distributed systems.
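At its simplest, automatic anomaly detection on mesh data means comparing each new measurement against a recent baseline. The sketch below flags points in a series of p99 latencies that sit more than a threshold number of standard deviations above a trailing window's mean. It is a deliberately basic statistical baseline, not the method of any AIOps product; production systems also have to handle seasonality, trends, and the way a spike contaminates later windows. The window size, threshold, and sample series are assumptions.

```python
import statistics
from typing import List


def latency_anomalies(p99_series_ms: List[float], window: int = 10,
                      threshold: float = 3.0) -> List[int]:
    """Return indices whose value exceeds the trailing window's mean by `threshold` std devs."""
    flagged = []
    for i in range(window, len(p99_series_ms)):
        history = p99_series_ms[i - window:i]
        mean = statistics.fmean(history)
        stdev = statistics.pstdev(history)
        # Skip flat histories (stdev 0) to avoid dividing by zero.
        if stdev > 0 and (p99_series_ms[i] - mean) / stdev > threshold:
            flagged.append(i)
    return flagged


# Hypothetical per-minute p99 latencies (ms) with one spike at index 11.
series = [20, 21, 19, 20, 22, 21, 20, 19, 21, 20, 20, 85, 21]
print(latency_anomalies(series))  # [11]
```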

Edge Computing and Multi-Cluster Management

As applications become more distributed, extending beyond traditional data centers and clouds to edge locations, service meshes are evolving to manage communication across these diverse environments. Managing traffic, security, and observability consistently across multiple Kubernetes clusters, potentially spanning different regions or even hybrid cloud/edge setups, is a growing challenge.

Future service mesh developments focus on improved multi-cluster federation, allowing a single logical mesh to span multiple physical clusters. This enables seamless service discovery, load balancing, and policy enforcement across cluster boundaries. Specific optimizations for edge computing scenarios, considering constraints like limited bandwidth and higher latency, are also emerging, potentially involving lighter-weight edge proxies or different control plane architectures.
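Cross-cluster load balancing often follows a "prefer local, spill over when unhealthy" policy, which keeps traffic close to the caller while still surviving the loss of a cluster. The sketch below shows a simplified version that uses the caller's own cluster while at least a given fraction of its endpoints are healthy, and otherwise falls back to every healthy endpoint. The `Endpoint` type, cluster names, and 50% threshold are hypothetical. Envoy's actual locality failover is more gradual, built on priority levels and an overprovisioning factor.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Endpoint:
    address: str
    cluster: str
    healthy: bool


def eligible_endpoints(endpoints: List[Endpoint], local_cluster: str,
                       min_healthy_fraction: float = 0.5) -> List[Endpoint]:
    """Prefer healthy endpoints in the caller's own cluster; fail over to remote clusters
    when too few local endpoints are healthy (simplified, hypothetical policy)."""
    local = [e for e in endpoints if e.cluster == local_cluster]
    local_healthy = [e for e in local if e.healthy]
    if local_healthy and len(local_healthy) / len(local) >= min_healthy_fraction:
        return local_healthy
    # Spill over: use every healthy endpoint, local or remote.
    return [e for e in endpoints if e.healthy]


eps = [Endpoint("10.0.0.1", "us-east", True),
       Endpoint("10.0.0.2", "us-east", False),
       Endpoint("10.1.0.1", "us-west", True)]
print([e.address for e in eligible_endpoints(eps, "us-east")])  # ['10.0.0.1']
eps[0].healthy = False
print([e.address for e in eligible_endpoints(eps, "us-east")])  # ['10.1.0.1']
```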

Standardization Efforts and Emerging Protocols

While projects like Istio and Linkerd are popular, the lack of strong standardization can hinder interoperability and increase lock-in concerns. The Service Mesh Interface (SMI) attempted to define common APIs for core functionality such as traffic splitting, metrics, and access control; since SMI was archived in 2023, the Kubernetes Gateway API's GAMMA initiative has become the main effort toward a common routing API for meshes. Continued development and broader adoption of such standards could simplify multi-mesh strategies and make tooling more portable.

Furthermore, underlying technologies are evolving. The development of new network protocols or extensions (like advancements in HTTP/3 and QUIC) may influence future data plane implementations. There's also ongoing exploration of alternative data plane models beyond the traditional sidecar, such as node-level proxies or eBPF-based networking, aiming to reduce overhead or simplify operations, which could significantly shape the next generation of service meshes.

Market Growth and Adoption Insights

The service mesh market continues to grow as more organizations adopt microservices and Kubernetes, facing the inherent complexities of distributed systems communication. While adoption is still maturing beyond early adopters, the value proposition of enhanced security, observability, and traffic control resonates strongly with enterprises managing complex applications at scale. Market analysis from firms like McKinsey & Company often highlights cloud-native technologies, including service meshes, as key enablers for digital transformation.

Surveys conducted by organizations like the Cloud Native Computing Foundation (CNCF) provide insights into adoption rates and the popularity of different service mesh projects within the community. Future growth is expected as tooling matures, complexity barriers are lowered (perhaps through managed offerings and improved automation), and the benefits become increasingly clear for mainstream enterprise users. The ecosystem continues to evolve rapidly with both open-source innovation and commercial vendor offerings.

Frequently Asked Questions (Career Focus)

Do I need a cloud certification to work with service meshes?

While not strictly mandatory, a cloud certification (like AWS Solutions Architect, Google Cloud Professional Cloud Architect, or Azure Solutions Architect Expert) is often highly beneficial. Service meshes are most commonly deployed in cloud environments, particularly on managed Kubernetes services (EKS, GKE, AKS). Certifications demonstrate foundational knowledge of the cloud platform, including its networking, security, and compute services, which provides crucial context for deploying and managing a service mesh.

Furthermore, certifications related to Kubernetes itself (like CKA, CKAD, CKS from the CNCF) are arguably even more directly relevant, as deep Kubernetes knowledge is almost always a prerequisite for effectively working with service meshes. While hands-on experience trumps certifications, they can help validate your skills to potential employers, especially when transitioning into the field.

Consider using OpenCourser's Learner's Guide for tips on preparing for certifications and leveraging online courses effectively.

How transferable are service mesh skills across industries?

Service mesh skills are generally quite transferable across industries. The core problems they solve – managing complex inter-service communication, enhancing security, improving observability, and enabling resilient traffic management – are common to large-scale distributed systems regardless of the specific industry (e.g., finance, e-commerce, telecommunications, healthcare).

The underlying technologies (Kubernetes, Envoy, Linkerd, Istio, networking concepts, security principles) are industry-agnostic. While specific compliance requirements or performance demands might vary between sectors (e.g., stricter regulations in finance vs. high throughput needs in streaming media), the fundamental skills required to design, implement, and operate a service mesh remain largely the same.

Expertise in popular open-source service meshes and Kubernetes is valuable across a wide range of technology-driven companies undergoing digital transformation or operating modern cloud-native platforms.

What entry-level roles expose you to service mesh technology?

As mentioned earlier, direct entry-level "Service Mesh Engineer" roles are rare. Exposure typically comes through related infrastructure or platform roles. Look for titles like:

- Junior DevOps Engineer / SRE: Often involved in managing Kubernetes clusters and the associated tooling, potentially including a service mesh.

- Cloud Associate / Junior Cloud Engineer: May work with managed Kubernetes services and could encounter managed service mesh offerings or assist senior engineers with deployments.

- Platform Engineer (Entry-Level): Teams building internal developer platforms often manage service meshes as part of the infrastructure provided to application teams.

- Junior Software Engineer (on Platform/Infra teams): Might contribute to tooling around the service mesh or interact with its APIs and observability data.

Focus on roles that explicitly mention Kubernetes, Docker, microservices, and cloud platforms in their descriptions. Even if the role doesn't initially involve deep service mesh work, building strong foundational skills in these adjacent areas is the best pathway.


Is prior Kubernetes experience mandatory?

Yes, for most practical purposes, prior Kubernetes experience is effectively mandatory for working deeply with service meshes. The vast majority of service mesh implementations (Istio, Linkerd, etc.) are designed to run on Kubernetes, leveraging its APIs and primitives for deployment, service discovery, and configuration.

While some service meshes can technically run outside Kubernetes (e.g., Consul service mesh, formerly Consul Connect, on VMs), the dominant deployment pattern is within Kubernetes clusters. Understanding core Kubernetes concepts like Pods, Services, Deployments, Namespaces, ConfigMaps, Secrets, and networking (CNI) is essential for installing, configuring, operating, and troubleshooting a service mesh effectively.

If you aim to specialize in service meshes, investing time in learning Kubernetes thoroughly is a non-negotiable prerequisite. Many online courses and resources are available on OpenCourser to help build this foundational knowledge.

How competitive is the job market for service mesh specialists?

The job market for individuals with genuine service mesh expertise is currently strong, but it is a niche specialization. While many companies are adopting microservices and Kubernetes, full-scale service mesh deployments are still less widespread and are often concentrated in larger tech companies or organizations with particularly complex systems.

There is high demand for experienced engineers who can navigate the complexities of service mesh implementation and operation, particularly those skilled in popular tools like Istio or Linkerd combined with deep Kubernetes knowledge. However, the absolute number of dedicated "Service Mesh Engineer" roles might be smaller compared to broader roles like "DevOps Engineer" or "Cloud Engineer".

For those with the right skills and experience, competition can be lower than for more generalist roles, which may translate into better compensation. Reaching that level of expertise, however, requires significant investment in learning and hands-on practice.

Can open-source contributions substitute for formal experience?

Meaningful contributions to relevant open-source projects (like Istio, Linkerd, Envoy, Kubernetes itself, or related tooling) can significantly bolster your profile and partially substitute for formal, paid work experience, especially when entering the field or seeking specialized roles.

Contributing demonstrates initiative, practical skills, the ability to collaborate within a development community, and deep understanding of the technology. Fixing bugs, adding features, improving documentation, or helping answer user questions in community forums showcases your expertise in a tangible way that employers value highly. It shows you can understand complex codebases and contribute meaningfully.

While it might not entirely replace years of operational experience managing production systems, significant open-source contributions can definitely differentiate you from other candidates and demonstrate a passion and proficiency that are hard to convey through a resume alone. It's an excellent way to build practical skills and gain visibility within the ecosystem.

Embarking on a journey to understand and work with service meshes requires dedication, given the complexity of the underlying distributed systems concepts and tooling. However, the skills developed are highly valuable in the modern cloud-native landscape. Whether you pursue formal education, self-directed learning through online courses and hands-on labs, or contribute to open-source projects, persistence and continuous learning are key. The ability to manage, secure, and observe complex microservice interactions is a critical capability for building reliable and scalable modern applications.