Big Data Processing

Navigating the World of Big Data Processing

Big Data Processing refers to the techniques and technologies used to handle and analyze massive and complex datasets that traditional data-processing software cannot effectively manage. These datasets, often characterized by their sheer volume, the velocity at which they are generated, and the variety of data types they encompass, require specialized approaches to unlock valuable insights. The ability to harness big data is transforming industries by enabling more informed decision-making, uncovering new opportunities, and optimizing operational efficiencies.

Working in Big Data Processing can be an intellectually stimulating journey, offering the chance to solve complex puzzles hidden within vast seas of information. Imagine sifting through terabytes of data to identify patterns that could predict the next market trend, detect fraudulent activities in real time, or even contribute to breakthroughs in healthcare research. The field is also at the forefront of technological innovation, constantly evolving with new tools and techniques, providing a dynamic and exciting environment for professionals who are passionate about data and its potential.

Introduction to Big Data Processing

This section will introduce you to the fundamental concepts of Big Data Processing. We'll explore what it means, how it came to be, where it's making a significant impact, and the typical steps involved in turning raw data into actionable intelligence. Understanding these basics is the first step in appreciating the power and complexity of this field.

Definition and Scope of Big Data Processing

Big Data Processing encompasses the methods and systems designed to collect, store, manage, process, and analyze datasets that are exceptionally large or intricate. The "bigness" of big data isn't just about size; it's also about the complexity and speed at which data is generated. Think about the constant stream of information from social media, financial transactions, scientific experiments, or Internet of Things (IoT) devices: this is the realm of big data. Traditional database and software tools often falter when faced with such scale and diversity, necessitating a new generation of technologies and analytical approaches.

The scope of big data processing is vast and continually expanding. It involves not only handling structured data (like that found in traditional databases) but also unstructured data (such as text, images, and videos) and semi-structured data. The ultimate goal is to extract meaningful insights, identify patterns, and make predictions that can drive innovation and solve complex problems across various domains. This often involves techniques from computer science, statistics, and machine learning.

To truly grasp the concept, consider the "Vs" often used to characterize big data: Volume (the sheer amount of data), Velocity (the speed at which data is generated and processed), and Variety (the different types of data). Some models also include Veracity (the quality and accuracy of the data) and Value (the usefulness of the insights derived). Successfully addressing these characteristics is at the core of big data processing.

Historical Evolution and Technological Drivers

The concept of collecting and analyzing data isn't new; civilizations have been doing it for centuries, from ancient tally sticks used to track supplies to early censuses. However, the "big data" era as we know it emerged more recently, fueled by significant technological advancements. The late 20th and early 21st centuries saw an explosion in digital data generation, driven by the rise of the internet, personal computers, mobile devices, and social media.

Early attempts to quantify the growth of information date back to the 1940s. The term "big data" started gaining traction in the late 1990s and early 2000s, with figures like John Mashey and Roger Mougalas popularizing the concept to describe datasets that overwhelmed traditional processing capabilities. A pivotal moment was the development of the open-source Apache Hadoop framework around 2005, which drew on Google's published MapReduce and Google File System designs. Technologies like this made it feasible to store and process massive datasets on clusters of commodity hardware, democratizing access to big data capabilities.

The proliferation of the Internet of Things (IoT), advancements in cloud computing offering scalable storage and processing power, and the increasing sophistication of Artificial Intelligence (AI) and Machine Learning algorithms have further accelerated the evolution and importance of big data processing. These drivers continue to shape the field, enabling more complex analyses and real-time insights.

Key Industries Reliant on Big Data Processing

Big Data Processing is not confined to a single sector; its applications are diverse and transformative across numerous industries. The ability to analyze vast datasets provides a competitive edge, enhances efficiency, and drives innovation. Many sectors now consider big data analytics fundamental to their operations and strategy.

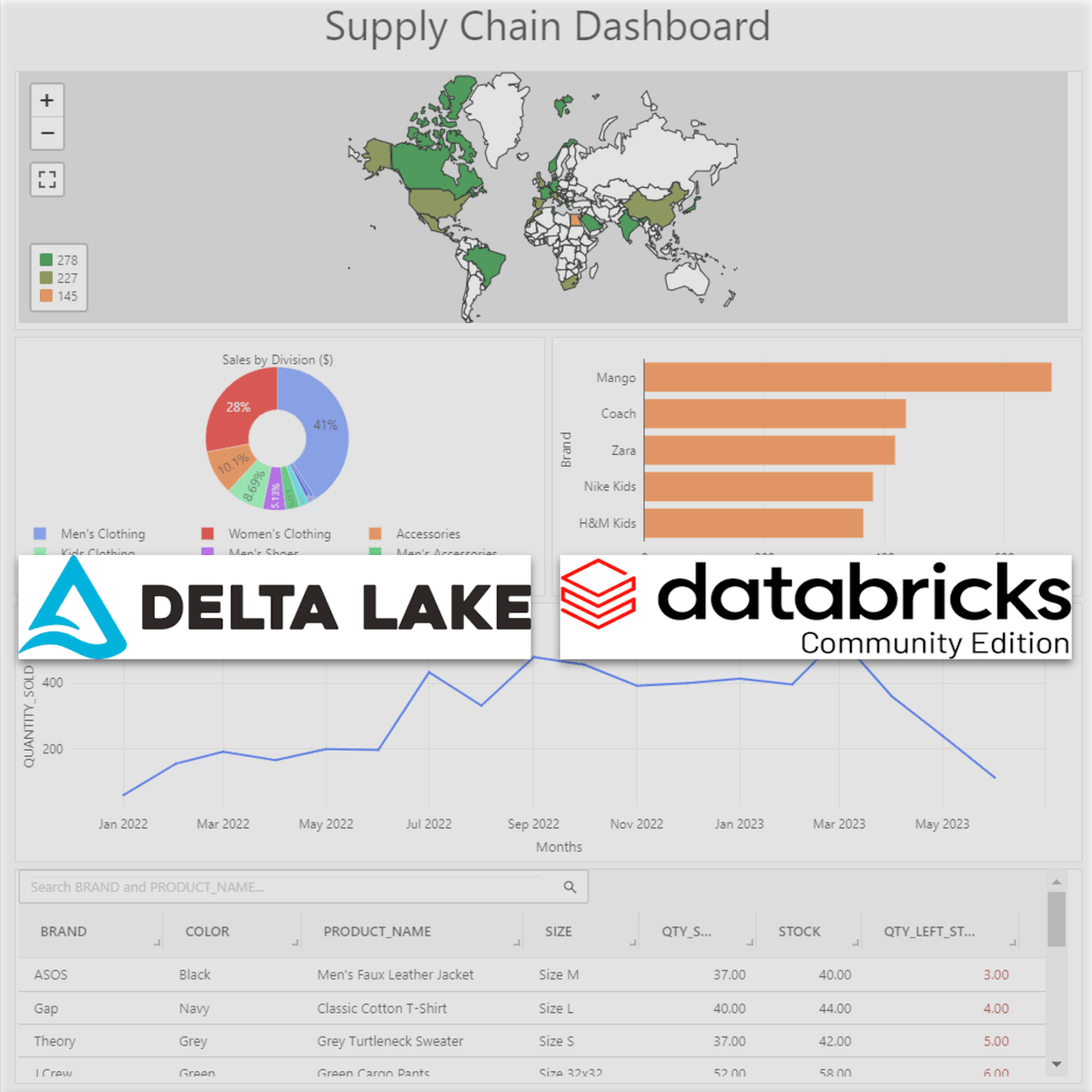

The finance and banking industry heavily relies on big data for fraud detection, risk assessment, algorithmic trading, and personalizing customer services. In healthcare, big data analytics is used for improving diagnostics, personalizing medicine, accelerating drug discovery, and predicting patient health trends. The retail sector employs big data to understand customer behavior, personalize recommendations, optimize supply chains, and manage inventory effectively. Telecommunications companies use big data to enhance network performance, predict customer churn, and reduce fraud.

Other significant industries include manufacturing, where big data helps optimize production processes and predict maintenance needs; government, for improving public services, managing utilities, and even preventing crime (while navigating privacy concerns); education, to enhance learning systems and identify at-risk students; and agriculture, for optimizing crop yields and resource management. Even areas like travel and real estate leverage big data for pricing optimization, trend analysis, and understanding market dynamics. The pervasiveness of big data highlights its critical role in the modern economy.

Basic Workflow Stages (Ingestion, Storage, Analysis, Visualization)

The journey of transforming raw data into actionable insights typically follows a series of well-defined stages, often referred to as the big data processing workflow or pipeline. While specific implementations can vary, the core concepts remain consistent. Understanding this workflow is fundamental to grasping how big data projects are executed.

The first stage is Data Ingestion, which involves collecting raw data from various sources. These sources can be incredibly diverse, ranging from databases and log files to IoT sensors, social media feeds, and streaming platforms. The data can be structured, semi-structured, or unstructured. The next stage is Data Storage. Once ingested, the vast quantities of data need to be stored efficiently and accessibly. This often involves distributed file systems like Hadoop Distributed File System (HDFS) or cloud-based storage solutions like Amazon S3 or Google Cloud Storage. Data lakes, which can store raw data in its native format, are commonly used.

Following storage is Data Processing and Analysis. This is where the "heavy lifting" happens. Data is cleaned, transformed, and analyzed to uncover patterns, trends, and insights. This stage often employs distributed computing frameworks like Apache Hadoop's MapReduce or Apache Spark, and may involve statistical analysis, data mining, and machine learning algorithms. Finally, the insights derived from the analysis need to be communicated effectively, which leads to the Data Visualization and Reporting stage. Tools are used to create charts, graphs, dashboards, and reports that make the findings understandable and actionable for stakeholders.
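The four stages can be sketched end to end in a few lines of Python. This is only a toy illustration under stated assumptions: the function names `ingest`, `store`, `analyze`, and `report`, and the sample sensor records, are hypothetical, and an in-memory list stands in for a distributed store such as HDFS or object storage.

```python
import json
from collections import defaultdict

# Hypothetical raw events, standing in for log lines or sensor readings.
RAW_EVENTS = [
    '{"sensor": "a", "temp": 21.5}',
    '{"sensor": "b", "temp": 19.0}',
    '{"sensor": "a", "temp": 22.5}',
    'not valid json',
]

def ingest(lines):
    """Ingestion: parse raw records, skipping ones that cannot be read."""
    for line in lines:
        try:
            yield json.loads(line)
        except json.JSONDecodeError:
            continue

def store(records):
    """Storage: an in-memory list here; a real pipeline would persist the data."""
    return list(records)

def analyze(records):
    """Analysis: average temperature per sensor."""
    totals = defaultdict(lambda: [0.0, 0])  # sensor -> [sum, count]
    for record in records:
        totals[record["sensor"]][0] += record["temp"]
        totals[record["sensor"]][1] += 1
    return {sensor: total / count for sensor, (total, count) in totals.items()}

def report(averages):
    """Reporting: plain-text lines standing in for a chart or dashboard."""
    return [f"{sensor}: {avg:.1f}" for sensor, avg in sorted(averages.items())]

lines = report(analyze(store(ingest(RAW_EVENTS))))
print(lines)  # ['a: 22.0', 'b: 19.0']
```

Each stage only depends on the output of the one before it, which is what lets real pipelines swap in different technologies at each step.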

Key Concepts and Techniques in Big Data Processing

Delving deeper into Big Data Processing requires understanding its core concepts and the sophisticated techniques employed to manage and analyze massive datasets. This section explores the foundational methodologies that enable the transformation of raw data into valuable knowledge. We will cover distributed computing, different processing paradigms, data organization strategies, and the crucial role of machine learning in modern data pipelines.

Distributed Computing Frameworks (e.g., MapReduce)

At the heart of Big Data Processing lies the principle of distributed computing. Given that big data, by its nature, often exceeds the processing capacity of a single machine, distributed computing frameworks are essential. These frameworks allow for the division of large tasks into smaller, manageable sub-tasks that can be processed in parallel across a cluster of computers. This parallel processing capability significantly speeds up computation and allows for the analysis of datasets that would otherwise be intractable.

One of the pioneering and most influential distributed computing paradigms in the big data world is MapReduce. Originally developed by Google and later forming a core component of Apache Hadoop, MapReduce provides a programming model for processing large datasets with a parallel, distributed algorithm on a cluster. The model involves two main phases: the "Map" phase, which processes and transforms input data into intermediate key-value pairs, and the "Reduce" phase, which aggregates these intermediate pairs to produce the final output. While other frameworks have emerged, understanding MapReduce provides a solid conceptual foundation for distributed data processing.
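The two phases are easiest to see in the classic word-count example. The following is a single-process sketch of the programming model, not Hadoop's API: the function names are illustrative, and the `shuffle` step is written out by hand to stand in for the grouping that a framework would perform between the Map and Reduce phases.

```python
from collections import defaultdict

def map_phase(document):
    """Map: emit an intermediate (word, 1) pair for every word."""
    return [(word.lower(), 1) for word in document.split()]

def shuffle(pairs):
    """Shuffle: group intermediate values by key between the two phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Reduce: aggregate all values that share one key."""
    return key, sum(values)

def word_count(documents):
    # In a real cluster, each document (or block) would be mapped on a
    # different worker; here the loop simply runs sequentially.
    intermediate = [pair for doc in documents for pair in map_phase(doc)]
    grouped = shuffle(intermediate)
    return dict(reduce_phase(key, values) for key, values in grouped.items())

counts = word_count(["big data big clusters", "data pipelines"])
print(counts)  # {'big': 2, 'data': 2, 'clusters': 1, 'pipelines': 1}
```

Because each call to `map_phase` and each call to `reduce_phase` is independent of the others, the work can be spread across machines without changing the result.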

Frameworks like Apache Hadoop and Apache Spark are built upon these distributed computing principles. Hadoop, with its Hadoop Distributed File System (HDFS) for storage and MapReduce for processing, was a foundational technology. Spark, on the other hand, offers in-memory processing capabilities, which can lead to significantly faster performance for certain types of workloads, especially iterative algorithms and interactive analytics. These frameworks manage the complexities of distributing data and computation, handling failures, and coordinating tasks across the cluster.

Batch vs. Stream Processing Paradigms

When it comes to processing big data, two primary paradigms dictate how data is handled: batch processing and stream processing. The choice between them, or a hybrid approach, depends heavily on the specific requirements of the application, particularly the need for timeliness and the nature of the data sources. Understanding the distinctions between these paradigms is crucial for designing effective data processing pipelines.

Batch processing involves processing large volumes of data in groups or "batches." Data is collected over a period, and then processed all at once. This approach is well-suited for tasks where real-time results are not critical, such as generating end-of-day reports, historical analysis, or large-scale data transformations. Apache Hadoop's MapReduce is a classic example of a batch processing system. Batch processing is often more cost-effective for very large datasets where throughput is more important than low latency.

In contrast, stream processing (also known as real-time processing) involves analyzing data as it arrives, typically with very low latency. This is essential for applications that require immediate insights and actions, such as fraud detection, real-time recommendations, monitoring of sensor data from IoT devices, or analyzing social media trends as they happen. Technologies like Apache Spark Streaming, Apache Flink, and Apache Storm are designed for stream processing. While stream processing offers immediacy, it can present challenges in terms of managing state, ensuring data consistency, and handling high-velocity data streams.

Many modern data architectures combine the two paradigms. The Lambda architecture runs a batch layer and a low-latency speed layer side by side and merges their results, while the Kappa architecture simplifies this by treating all data as a stream and reprocessing history by replaying the event log. Either way, the aim is to support both historical analysis and real-time decision-making.
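The difference between the two paradigms can be shown with the same set of events processed both ways. This is a minimal sketch, assuming events are `(timestamp_seconds, amount)` tuples that arrive in timestamp order; the function names are hypothetical and no streaming framework is involved.

```python
def batch_total(events):
    """Batch: all events are available up front and processed in one pass."""
    return sum(amount for _, amount in events)

def stream_window_totals(events, window_seconds):
    """Stream: consume events one at a time and emit a total each time a
    fixed-size (tumbling) window closes. Assumes in-order arrival."""
    current_window = None
    running = 0
    for timestamp, amount in events:
        window = timestamp // window_seconds
        if current_window is not None and window != current_window:
            # The previous window is complete, so its result can be emitted.
            yield current_window * window_seconds, running
            running = 0
        current_window = window
        running += amount
    if current_window is not None:
        yield current_window * window_seconds, running  # flush the last window

events = [(0, 5), (3, 7), (10, 1), (12, 2), (25, 4)]
print(batch_total(events))                       # 19
print(list(stream_window_totals(events, 10)))    # [(0, 12), (10, 3), (20, 4)]
```

The batch function needs the full dataset before it can answer, while the streaming generator holds only a small amount of state (the current window and its running total) and produces results as soon as each window ends. Handling late or out-of-order events, which this sketch ignores, is one of the state-management challenges mentioned above.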

Data Partitioning and Sharding Strategies

As datasets grow to enormous sizes, managing and querying them efficiently within a single database or storage system becomes a significant challenge. Data partitioning and sharding are two important strategies used to divide large datasets into smaller, more manageable pieces, thereby improving performance, scalability, and manageability. While related, they address different aspects of data distribution.

Data partitioning typically refers to dividing a large table within a single database instance into smaller segments called partitions. Each partition holds a subset of the data based on a defined rule, such as a range of dates, specific values in a column (e.g., geographic region), or a hash of a column. When queries are executed, the database can often identify which partitions contain the relevant data, scanning only those partitions instead of the entire table. This can significantly speed up query performance and simplify maintenance tasks like backups and indexing.
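As a rough illustration of why partitioning helps, the sketch below partitions rows by month and answers a query by reading only the matching partition. The class `MonthPartitionedTable` and its methods are hypothetical and exist only for this example; a real database performs this pruning inside its query planner.

```python
from collections import defaultdict
from datetime import date

class MonthPartitionedTable:
    """Toy table partitioned by the (year, month) of each row's date."""

    def __init__(self):
        self.partitions = defaultdict(list)

    def insert(self, row):
        key = (row["day"].year, row["day"].month)
        self.partitions[key].append(row)

    def query_month(self, year, month):
        """Partition pruning: read only the one partition that can match.
        Returns the rows and the number of rows that had to be scanned."""
        scanned = self.partitions.get((year, month), [])
        return list(scanned), len(scanned)

table = MonthPartitionedTable()
for day, amount in [(date(2024, 1, 5), 10),
                    (date(2024, 1, 20), 15),
                    (date(2024, 2, 2), 7)]:
    table.insert({"day": day, "amount": amount})

rows, rows_scanned = table.query_month(2024, 1)
print(sum(r["amount"] for r in rows), rows_scanned)  # 25 2
```

The February row is never touched by the January query. The benefit depends on queries filtering on the partitioning column; a query on `amount` alone would still have to scan every partition.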

Sharding, on the other hand, takes partitioning a step further by distributing these smaller pieces (shards) across multiple database servers or instances. Each shard is essentially an independent database, and collectively they form a single logical database. This is a form of horizontal scaling, allowing the system to handle much larger datasets and higher transaction volumes by distributing the load across multiple machines. A "shard key" is used to determine which shard holds a particular piece of data. Sharding is common in large-scale distributed systems, such as e-commerce platforms and social media networks, where scalability and availability are paramount.

While partitioning improves performance and manageability within a single database, sharding is crucial for scaling out to handle massive data volumes and high throughput across a distributed environment. Both techniques require careful planning, particularly in choosing the partitioning or sharding key, to ensure balanced data distribution and optimal query performance.
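A shard key is typically mapped to a shard by hashing. The sketch below assumes a simple hash-modulo scheme and uses plain dictionaries in place of separate database servers; `ShardedStore` is a hypothetical name, not an API from any particular system.

```python
import hashlib

class ShardedStore:
    """Toy sharded key-value store: each dict stands in for one server."""

    def __init__(self, shard_count):
        self.shards = [dict() for _ in range(shard_count)]

    def shard_for(self, shard_key):
        # A stable hash is used so that the same key always routes to the
        # same shard, across processes and restarts.
        digest = hashlib.sha256(shard_key.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big") % len(self.shards)

    def put(self, shard_key, value):
        self.shards[self.shard_for(shard_key)][shard_key] = value

    def get(self, shard_key):
        return self.shards[self.shard_for(shard_key)].get(shard_key)

store = ShardedStore(shard_count=4)
for i in range(100):
    store.put(f"user-{i}", {"visits": i})

print(store.get("user-42"))                       # {'visits': 42}
print([len(shard) for shard in store.shards])     # rows held by each shard
```

One known drawback of plain modulo hashing is that changing the number of shards remaps most keys. Schemes such as consistent hashing are commonly used to limit that data movement, which is part of why choosing the sharding strategy up front matters.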

Machine Learning Integration in Data Pipelines

Machine Learning (ML) plays an increasingly vital role in modern Big Data Processing pipelines, transforming raw data into predictive insights and actionable intelligence. Instead of just analyzing historical data for trends, ML algorithms can learn from this data to make forecasts, classify new data points, identify anomalies, and automate decision-making processes. The integration of ML into data pipelines allows organizations to extract deeper value from their vast data reserves.

Within a big data pipeline, ML models can be applied at various stages. For instance, after data ingestion and preprocessing (cleaning, transformation), ML can be used for feature engineering, which involves selecting and creating the most relevant input variables for a predictive model. The core of ML integration lies in training models on large historical datasets and then deploying these trained models to make predictions on new, incoming data. This could involve predicting customer churn, recommending products, identifying fraudulent transactions, or forecasting equipment failures.

Big data frameworks like Apache Spark, with its MLlib library, provide scalable tools for performing machine learning tasks on distributed datasets. These tools enable data scientists and ML engineers to build and deploy sophisticated models that can handle the volume and complexity of big data. The output of these ML models then becomes another valuable data product that can be visualized, reported, or fed into other automated systems, creating a continuous loop of data-driven improvement. As AI and ML continue to advance, their integration into big data processing will only become more seamless and impactful.
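The train-then-score pattern described above can be sketched without any ML library. The example below is deliberately simple and is not MLlib: the "model" is just the mean and standard deviation of historical transaction amounts, and new transactions are flagged when they fall far outside that range. The function names, the sample data, and the threshold of three standard deviations are all assumptions made for illustration.

```python
import statistics

def train(historical_amounts):
    """'Training': summarize past transaction amounts."""
    return {
        "mean": statistics.fmean(historical_amounts),
        "stdev": statistics.pstdev(historical_amounts),
    }

def is_anomalous(model, amount, threshold=3.0):
    """Scoring: flag amounts more than `threshold` standard deviations
    away from the historical mean."""
    if model["stdev"] == 0:
        return amount != model["mean"]
    return abs(amount - model["mean"]) / model["stdev"] > threshold

# Offline step: fit on historical data.
history = [20.0, 22.0, 19.0, 21.0, 18.0, 20.0]
model = train(history)

# Online step: score new, incoming records with the stored model.
flags = [is_anomalous(model, amount) for amount in [21.0, 250.0]]
print(flags)  # [False, True]
```

The structure is the part that carries over to real pipelines: a model is fitted once on a large historical dataset, stored, and then applied repeatedly to new records as they arrive.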

Big Data Processing Tools and Technologies

The ability to effectively process big data hinges on a diverse ecosystem of tools and technologies. These systems provide the infrastructure and capabilities to handle the immense scale, velocity, and variety of modern datasets. This section will explore some of the most prominent categories of tools used in Big Data Processing, from open-source frameworks that form the backbone of many systems to cloud-based solutions offering managed services, and specialized database systems designed for large-scale data.

Open-Source Frameworks (e.g., Apache Hadoop, Spark)

Open-source frameworks are foundational to the world of Big Data Processing, providing powerful, flexible, and often cost-effective solutions for handling massive datasets. Among the most influential are Apache Hadoop and Apache Spark, which have become cornerstones of many big data architectures. These frameworks offer distributed storage and processing capabilities, enabling organizations to scale their data operations.

Apache Hadoop is an open-source framework designed for distributed storage and processing of large datasets across clusters of computers using simple programming models. Its key components include the Hadoop Distributed File System (HDFS), which provides scalable and fault-tolerant storage, and MapReduce, a programming model for parallel processing of large datasets. Hadoop is well-suited for batch processing of very large datasets where high throughput is essential, and it can run on commodity hardware, making it a cost-effective solution.

Apache Spark is another powerful open-source framework that has gained immense popularity due to its speed and versatility. Spark enhances the capabilities of Hadoop by providing in-memory data processing, which can be significantly faster than Hadoop's disk-based MapReduce for many applications, especially iterative machine learning algorithms and interactive data analysis. Spark also offers a rich set of libraries, including Spark SQL for structured data processing, MLlib for machine learning, Spark Streaming for real-time data processing, and GraphX for graph processing. While Spark can run independently, it often complements Hadoop by processing data stored in HDFS.

Many other open-source tools exist within the broader Hadoop and Spark ecosystems, such as Apache Hive for data warehousing on Hadoop, Apache HBase for NoSQL database capabilities, and Apache Kafka for distributed event streaming. The open-source nature of these tools fosters a vibrant community, continuous innovation, and a wide range of integrations.

Cloud-Based Solutions (e.g., AWS EMR, Google BigQuery)

Cloud computing platforms have revolutionized Big Data Processing by offering scalable, flexible, and often more cost-effective solutions compared to on-premises infrastructure. Major cloud providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) offer a comprehensive suite of managed services designed specifically for big data storage, processing, and analytics. These solutions allow organizations to offload the complexities of infrastructure management and focus on deriving insights from their data.

Amazon EMR (Elastic MapReduce) is a managed cluster platform that simplifies running big data frameworks like Apache Spark, Hadoop, HBase, Flink, and others on AWS. It allows users to easily provision and scale clusters, and it integrates seamlessly with other AWS services like Amazon S3 for storage. Google BigQuery is a fully managed, serverless data warehouse that enables super-fast SQL queries using the processing power of Google's infrastructure. It's designed for analyzing petabytes of data and offers automatic scaling and a pay-as-you-go pricing model. Microsoft Azure offers similar capabilities with services like Azure HDInsight for managed open-source analytics clusters and Azure Synapse Analytics for enterprise data warehousing and big data analytics.

These cloud-based solutions offer several advantages, including rapid deployment, elasticity (the ability to scale resources up or down as needed), and integration with a wide range of other cloud services, such as machine learning platforms, data visualization tools, and IoT services. This allows organizations to build end-to-end big data pipelines entirely in the cloud. However, it's also important to consider factors like data security, compliance, and potential vendor lock-in when choosing a cloud provider and its services.

Database Systems for Large-Scale Data (NoSQL, NewSQL)

Traditional Relational Database Management Systems (RDBMS) often struggle to meet the scalability, performance, and flexibility demands of modern big data applications. This has led to the rise of alternative database technologies, broadly categorized as NoSQL and NewSQL databases, which are specifically designed to handle large volumes of diverse data types and high-velocity workloads.

NoSQL (usually read as "Not Only SQL") databases encompass a wide variety of database technologies that move away from the rigid schema and relational model of traditional RDBMS. They are generally characterized by their horizontal scalability (scaling out by adding more servers), flexible data models, and ability to handle unstructured and semi-structured data. Common types of NoSQL databases include:

- Document databases (e.g., MongoDB, Couchbase): Store data in document-like structures such as JSON or BSON.

- Key-value stores (e.g., Redis, Amazon DynamoDB): Store data as simple key-value pairs, offering high performance for read/write operations.

- Column-family stores (e.g., Apache Cassandra, HBase): Group related columns into families under a row key, and rows need not share the same set of columns; well suited to large, sparse datasets with many attributes.

- Graph databases (e.g., Neo4j, Amazon Neptune): Designed to store and navigate relationships between data points, ideal for social networks, recommendation engines, and fraud detection.
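To make the four data models in the list above more concrete, the sketch below represents the same order in each shape using plain Python structures. It is only an analogy: the field names and the `products_bought_by` helper are hypothetical, and none of this is the API of the products named above.

```python
import json

# Document model: one self-contained, nested record per order.
document = {
    "_id": "order-1",
    "customer": {"id": "c1", "name": "Ada"},
    "items": [{"sku": "book", "qty": 2}],
}

# Key-value model: the store sees only a key and an opaque value.
key_value = {"order:order-1": json.dumps(document)}

# Column-family model: row key -> column family -> columns.
# Different rows may carry different columns within a family.
column_family = {
    "order-1": {
        "customer": {"id": "c1", "name": "Ada"},
        "items": {"book": 2},
    }
}

# Graph model: nodes plus typed edges between them.
nodes = {
    "c1": {"label": "Customer", "name": "Ada"},
    "order-1": {"label": "Order"},
    "book": {"label": "Product"},
}
edges = [("c1", "PLACED", "order-1"), ("order-1", "CONTAINS", "book")]

def products_bought_by(customer_id):
    """Graph traversal: Customer -PLACED-> Order -CONTAINS-> Product."""
    orders = {dst for src, rel, dst in edges
              if src == customer_id and rel == "PLACED"}
    return sorted(dst for src, rel, dst in edges
                  if src in orders and rel == "CONTAINS")

print(products_bought_by("c1"))  # ['book']
```

The trade-off shows up in how each shape is queried: the key-value form is fastest to fetch by key but cannot be filtered by its contents, while the graph form makes relationship questions a short traversal.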

NewSQL databases aim to combine the scalability and flexibility of NoSQL databases with the ACID (Atomicity, Consistency, Isolation, Durability) transactional guarantees and familiar SQL interface of traditional RDBMS. They seek to provide the "best of both worlds" for applications that require both high scalability and strong consistency. Examples include CockroachDB, TiDB, and VoltDB. These systems often employ distributed architectures and innovative storage and processing techniques to achieve their goals.
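The atomicity guarantee that NewSQL systems aim to preserve at scale can be demonstrated on a single machine. The sketch below uses Python's built-in `sqlite3` module purely as a stand-in: SQLite is an embedded, single-node database and not a NewSQL system, and the `accounts` table and `transfer` function are hypothetical. What it shows is the behavior itself, where a multi-statement transaction either applies completely or not at all.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    "name TEXT PRIMARY KEY, "
    "balance INTEGER CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)",
                 [("alice", 100), ("bob", 50)])
conn.commit()

def transfer(conn, src, dst, amount):
    """Move money atomically: both updates apply, or neither does."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?",
                (amount, dst))
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?",
                (amount, src))
        return True
    except sqlite3.IntegrityError:
        return False  # the CHECK constraint rejected an overdraft

def balances(conn):
    return dict(conn.execute("SELECT name, balance FROM accounts ORDER BY name"))

ok = transfer(conn, "alice", "bob", 30)
after_ok = balances(conn)
failed = transfer(conn, "alice", "bob", 500)
after_failed = balances(conn)
print(ok, after_ok)          # True {'alice': 70, 'bob': 80}
print(failed, after_failed)  # False {'alice': 70, 'bob': 80}
```

In the failed transfer, the credit to `bob` had already been executed when the debit violated the constraint, yet the rollback undoes it. Providing this same guarantee when the two rows live on different servers is the hard problem distributed SQL systems set out to solve.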

The choice of database system depends heavily on the specific requirements of the application, including the data model, consistency needs, scalability demands, and query patterns. Many big data architectures utilize a polyglot persistence approach, using different types of databases for different parts of the application to leverage their respective strengths.

Monitoring and Orchestration Tools (e.g., Airflow, Prometheus)

Managing complex Big Data Processing pipelines, which often involve numerous steps, diverse technologies, and distributed systems, requires robust monitoring and orchestration tools. These tools are essential for ensuring that data workflows run reliably, efficiently, and can be easily managed and debugged. They provide visibility into the health and performance of the pipeline and automate the scheduling and execution of tasks.

Workflow Orchestration Tools, such as Apache Airflow, Luigi, or Prefect, are used to define, schedule, and monitor data pipelines as a series of tasks. Airflow, for example, allows users to author workflows as Directed Acyclic Graphs (DAGs) of tasks using Python. It provides a rich user interface for visualizing pipelines, monitoring progress, and managing task dependencies and retries. Orchestration tools are critical for automating complex ETL/ELT (Extract, Transform, Load / Extract, Load, Transform) processes, machine learning model training, and report generation. They help ensure that tasks are executed in the correct order and that failures are handled gracefully.
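The core idea of running tasks as a DAG, in dependency order and with retries, can be sketched with Python's standard library. This is not Airflow's API: the task names, the `run_pipeline` function, and the retry policy are assumptions made for illustration, with `graphlib.TopologicalSorter` supplying the ordering.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "train_model": {"load"},
    "build_report": {"load"},
}

def run_pipeline(dependencies, tasks, max_retries=2):
    """Run tasks in dependency order, retrying a failed task a limited
    number of times. Returns a log of (task_name, attempts_needed)."""
    log = []
    for name in TopologicalSorter(dependencies).static_order():
        for attempt in range(1, max_retries + 2):
            try:
                tasks[name]()
                log.append((name, attempt))
                break
            except Exception:
                if attempt == max_retries + 1:
                    raise  # retries exhausted: stop the whole pipeline

    return log

# Simulate a task that fails once with a transient error, then succeeds.
attempts = {"transform": 0}

def flaky_transform():
    attempts["transform"] += 1
    if attempts["transform"] == 1:
        raise RuntimeError("transient failure")

tasks = {name: (lambda: None) for name in dependencies}
tasks["transform"] = flaky_transform

log = run_pipeline(dependencies, tasks)
print(log)
```

Here `extract`, `transform`, and `load` always run in that order, and `transform` succeeds on its second attempt. `train_model` and `build_report` both depend only on `load`, so their relative order is not fixed, and a real orchestrator could run them in parallel.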

Monitoring Tools, like Prometheus, Grafana, or Datadog, are used to collect, visualize, and alert on metrics related to the performance and health of big data systems and applications. Prometheus, an open-source monitoring and alerting toolkit, is widely used for collecting time-series data from various components of a distributed system. It allows users to define alerting rules based on these metrics, enabling proactive identification and resolution of issues. Grafana is often used in conjunction with Prometheus (and other data sources) to create rich, interactive dashboards for visualizing system performance, resource utilization, and application-specific metrics. Effective monitoring is crucial for maintaining the stability and efficiency of big data pipelines, identifying bottlenecks, and ensuring that Service Level Objectives (SLOs) are met.
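An alerting rule of the kind described above usually combines a threshold with a duration, so that a brief spike does not page anyone. The sketch below models that logic in plain Python; it is not Prometheus rule syntax or its API, and the function name, the sample CPU readings, and the "three consecutive samples" condition are assumptions chosen for the example.

```python
def firing_alerts(samples, threshold, for_samples):
    """Return the timestamps at which an alert fires: the value must have
    exceeded `threshold` for `for_samples` consecutive samples."""
    fired = []
    streak = 0
    for timestamp, value in samples:
        streak = streak + 1 if value > threshold else 0
        if streak == for_samples:
            fired.append(timestamp)  # fire once when the duration is reached
    return fired

# Hypothetical CPU utilization samples scraped every 15 seconds.
cpu = [(0, 0.50), (15, 0.95), (30, 0.40),
       (45, 0.92), (60, 0.97), (75, 0.99), (90, 0.30)]

alerts = firing_alerts(cpu, threshold=0.9, for_samples=3)
print(alerts)  # [75]
```

The single spike at 15 seconds is ignored because the next sample drops back below the threshold, while the sustained run from 45 to 75 seconds triggers the alert. Tuning the threshold and duration is a trade-off between catching problems early and avoiding noisy alerts.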

Together, orchestration and monitoring tools provide the operational backbone for Big Data Processing, enabling developers and operations teams to build, deploy, and maintain robust and scalable data applications.

For those interested in the operational aspects of big data systems, understanding these tools is beneficial. You may find courses related to DevOps and specific tools like Airflow and Prometheus on platforms like OpenCourser.

Career Opportunities in Big Data Processing

The explosion of data has created a significant demand for professionals skilled in Big Data Processing. This field offers a variety of exciting and well-compensated career paths for individuals who can help organizations harness the power of their data. If you're considering a career in this domain, or looking to pivot, understanding the roles, industry demand, and potential career trajectories is essential. This section will shed light on the opportunities available in the world of big data.

Roles: Data Engineer, ML Engineer, Solutions Architect

The field of Big Data Processing encompasses several key roles, each with distinct responsibilities and skill sets, though there can be overlap. Among the most prominent are Data Engineers, Machine Learning (ML) Engineers, and Big Data Solutions Architects.

Data Engineers are the builders and maintainers of the data infrastructure. They are responsible for designing, constructing, installing, testing, and maintaining scalable data management systems and data pipelines. Their work involves data ingestion, transformation, storage, and preparation for analysis by data scientists or other stakeholders. Data Engineers work with a variety of tools, including databases (SQL and NoSQL), ETL/ELT tools, and big data frameworks like Hadoop and Spark. Strong programming skills (e.g., Python, Scala, Java) and a deep understanding of data warehousing and distributed systems are crucial for this role.

Machine Learning Engineers focus on designing, building, and deploying machine learning models into production environments. They bridge the gap between data science and software engineering, taking the models developed by data scientists and making them scalable, reliable, and integrated into applications. ML Engineers need strong programming skills, a solid understanding of ML algorithms and frameworks (like TensorFlow, PyTorch, Scikit-learn), and experience with MLOps (Machine Learning Operations) practices for model deployment, monitoring, and lifecycle management.

Big Data Solutions Architects are responsible for the high-level design and strategy of an organization's big data ecosystem. They define the architecture, select the appropriate technologies and platforms (cloud or on-premises), and ensure that the solutions meet business requirements for scalability, performance, security, and cost-effectiveness. This role requires a broad understanding of various big data technologies, cloud services, data governance principles, and strong communication skills to interact with both technical teams and business stakeholders.

These roles often collaborate closely. For instance, Data Engineers build the pipelines that feed data to ML Engineers, and Solutions Architects design the overarching systems within which both operate. Aspiring professionals should research these roles to understand which best aligns with their interests and skills.

Industry Demand Analysis (Tech, Finance, Healthcare)

The demand for professionals skilled in Big Data Processing remains robust across a multitude of industries. As organizations increasingly recognize data as a critical asset, the need for individuals who can manage, analyze, and derive insights from large datasets continues to grow. This trend is particularly pronounced in technology, finance, and healthcare sectors, though many other industries are also actively hiring big data talent.

The technology sector is a primary driver of demand for big data professionals. Companies ranging from large tech giants to innovative startups are constantly developing new data-driven products and services, requiring expertise in areas like distributed computing, cloud platforms, and machine learning. Roles such as Data Engineer, ML Engineer, and Data Scientist are in high demand to build and maintain the infrastructure and algorithms that power these offerings.

In the finance and banking industry, big data is crucial for managing risk, detecting fraud, complying with regulations, understanding customer behavior, and developing new financial products. Financial institutions are investing heavily in big data technologies and the talent needed to leverage them, creating numerous opportunities for professionals with skills in data analytics, quantitative modeling, and secure data management.

The healthcare sector is also experiencing a surge in demand for big data expertise. The proliferation of electronic health records, genomic data, medical imaging, and data from wearable devices presents enormous opportunities to improve patient outcomes, personalize treatments, optimize healthcare operations, and advance medical research. Professionals who can navigate the complexities of healthcare data, including its privacy and security requirements, are highly sought after.

More broadly, according to the U.S. Bureau of Labor Statistics, employment in computer and information technology occupations is projected to grow much faster than the average for all occupations, and roles related to data science and database administration are part of this growth trend. Many reports from consulting firms like McKinsey also highlight the increasing adoption of AI and data analytics across industries, further fueling demand for skilled professionals.

Exploring job market trends on platforms that aggregate job postings and analyzing reports from industry analysts can provide more specific insights into current demand in various regions and sectors.

Salary Ranges and Geographic Hotspots

Careers in Big Data Processing are generally well-compensated, reflecting the high demand for specialized skills and the significant value these professionals bring to organizations. Salary ranges can vary considerably based on factors such as the specific role (e.g., Data Engineer, ML Engineer, Solutions Architect), years of experience, level of education, industry, company size and type, and geographic location.

Entry-level positions in big data typically offer competitive starting salaries, and earning potential can increase substantially as professionals gain experience and expertise. Senior-level roles and positions requiring advanced skills in areas like machine learning, artificial intelligence, or specialized cloud platforms often command premium salaries. For instance, roles like Machine Learning Engineer and Big Data Architect are frequently cited among the higher-paying tech jobs. You can explore salary data on sites like Glassdoor, Indeed, or specialized tech salary websites to get a more granular understanding for specific roles and locations. The U.S. Bureau of Labor Statistics Occupational Employment and Wage Statistics program (formerly Occupational Employment Statistics) also provides wage data for related occupations, such as Database Administrators and Architects, and Computer and Information Research Scientists.

Geographically, technology hubs have traditionally been hotspots for big data careers. In the United States, areas like Silicon Valley/San Francisco Bay Area, Seattle, New York City, Boston, and Austin are known for a high concentration of tech companies and, consequently, numerous big data job opportunities. Similar tech hubs exist globally, including cities in Europe (e.g., London, Berlin, Dublin, Amsterdam) and Asia (e.g., Bangalore, Singapore, Shanghai). However, with the rise of remote work and the increasing adoption of big data technologies across all industries, opportunities are becoming more geographically dispersed. Many companies are now hiring for big data roles in locations outside of traditional tech centers, and remote positions are increasingly common.

It's advisable to research salary benchmarks and job market conditions specific to your target role, experience level, and geographic area of interest to set realistic expectations.

Freelance vs. Corporate Career Paths

When pursuing a career in Big Data Processing, professionals often have the choice between traditional corporate employment and a more independent freelance or consulting path. Both avenues offer distinct advantages and disadvantages, and the best fit depends on individual preferences, career goals, risk tolerance, and work style.

A corporate career path typically involves working as a full-time employee for a company, whether it's a large enterprise, a mid-sized business, or a startup. This path generally offers stability in terms of regular salary, benefits (like health insurance, retirement plans), and a structured work environment. Corporate roles often provide opportunities for deep specialization, career progression within the organization, access to large-scale projects and resources, and collaboration with diverse teams. Mentorship programs and formal training opportunities may also be more readily available. However, corporate roles might involve less autonomy, more bureaucracy, and potentially a slower pace of change compared to freelance work.

The freelance or consulting path offers greater autonomy, flexibility in choosing projects and clients, and often the potential for higher hourly rates. Freelancers in big data can work with multiple clients simultaneously or on a project-by-project basis, gaining exposure to a wide variety of industries and challenges. This path requires strong self-discipline, business development skills (finding clients, negotiating contracts), and the ability to manage one's own finances and benefits. While freelancing can offer a better work-life balance for some, it can also come with income instability, the need to constantly seek new projects, and the responsibility of handling all administrative tasks. The "gig economy" has made freelance opportunities more accessible, but success often depends on building a strong reputation and network.

Some professionals may also choose a hybrid approach, perhaps starting in a corporate role to gain experience and build a portfolio before transitioning to freelance work, or vice versa. The decision between these paths is a personal one. Consider your long-term aspirations, your comfort level with risk and uncertainty, and the type of work environment where you thrive best. For those new to the field, gaining initial experience and building a strong foundational skill set within a corporate setting can be a valuable stepping stone, even if freelance work is a long-term goal.

Challenges in Modern Big Data Processing

While Big Data Processing offers immense opportunities, it also comes with a unique set of challenges that organizations and practitioners must navigate. The sheer scale and complexity of modern datasets push the boundaries of existing technologies and methodologies. Addressing these challenges is crucial for successfully extracting value from big data initiatives. This section will explore some of the key hurdles faced in the realm of big data.

Scalability Limitations with Growing Data Volumes

One of the most fundamental challenges in Big Data Processing is managing the ever-increasing volume of data. As organizations collect more data from more sources, the systems designed to store and process this information must be able to scale effectively to keep pace. Failure to do so can lead to performance degradation, increased costs, and an inability to derive timely insights.

Traditional data processing systems were often designed with vertical scalability in mind: performance is improved by adding more resources (CPU, RAM, storage) to a single server. However, there are physical and cost limits to how far a single server can be scaled up. Big data systems therefore rely predominantly on horizontal scalability, which involves distributing the data and processing load across a cluster of multiple machines. Frameworks like Hadoop and Spark are designed for horizontal scaling.

Even with horizontally scalable architectures, challenges remain. Ensuring that data is distributed evenly across the cluster, managing the communication overhead between nodes, and designing algorithms that can effectively parallelize are ongoing concerns. As data volumes continue to grow exponentially – sometimes into petabytes or even exabytes – the architectural choices made for storage, processing, and networking become critical. Cloud platforms offer elastic scalability, allowing resources to be provisioned on demand, but this also requires careful cost management and optimization to avoid runaway expenses.
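To make the point about even data distribution concrete, here is a minimal sketch of hash partitioning, one common way to assign records to nodes. The function names, the four-node cluster, and the user IDs are all assumptions made for illustration; this is not how any particular framework implements partitioning.

```python
import hashlib
from collections import Counter


def partition_for(key: str, num_partitions: int) -> int:
    """Map a record key to a partition index using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def partition_counts(keys, num_partitions):
    """Count how many records land on each partition."""
    counts = Counter(partition_for(k, num_partitions) for k in keys)
    return [counts.get(p, 0) for p in range(num_partitions)]


def skew_ratio(counts):
    """Largest partition size divided by the mean size; 1.0 is perfectly even."""
    mean = sum(counts) / len(counts)
    return max(counts) / mean if mean else 0.0


# Hypothetical workload: 10,000 distinct user IDs spread over 4 nodes.
keys = [f"user-{i}" for i in range(10_000)]
counts = partition_counts(keys, 4)
print(counts, round(skew_ratio(counts), 3))

# A "hot" key breaks the balance, because every copy hashes to the same node.
hot_keys = keys + ["user-42"] * 5_000
hot_counts = partition_counts(hot_keys, 4)
print(hot_counts, round(skew_ratio(hot_counts), 3))
```

With distinct keys the partitions come out close to even, while the repeated key concentrates load on one node. This kind of skew is one reason that distributing data evenly remains an ongoing concern even in horizontally scalable systems.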

Latency Issues in Real-Time Processing

For many modern applications, the value of data diminishes rapidly with time. This has led to a growing demand for real-time or near real-time Big Data Processing, where insights are generated and actions are taken within seconds or even milliseconds of data arrival. However, achieving low latency in processing massive, high-velocity data streams presents significant technical challenges.

Stream processing frameworks like Apache Spark Streaming, Apache Flink, and Apache Storm are designed to handle continuous data flows. They must be able to ingest data rapidly, perform computations on the fly, and deliver results with minimal delay. Latency can be introduced at various points in the pipeline: data ingestion, network communication between nodes, the processing logic itself, and data output or storage.

Optimizing for low latency often involves trade-offs. For example, complex analytical operations might require more processing time, increasing latency. Ensuring fault tolerance and data consistency in a distributed streaming environment can also add overhead. Techniques like in-memory processing, efficient data serialization, optimized network protocols, and careful resource allocation are employed to minimize latency. Furthermore, edge computing, where data is processed closer to its source (e.g., on IoT devices themselves), is an emerging trend aimed at reducing latency for time-sensitive applications.
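One of these trade-offs can be sketched with a tumbling-window aggregation, a common building block in stream processing. The example below is a simplified, single-process illustration in plain Python, not the API of Spark, Flink, or Storm; the event data and function names are assumptions.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Group (timestamp, key) events into fixed, non-overlapping windows.

    Returns {window_start: {key: count}}.
    """
    windows = defaultdict(lambda: defaultdict(int))
    for timestamp, key in events:
        window_start = (timestamp // window_seconds) * window_seconds
        windows[window_start][key] += 1
    return {start: dict(counts) for start, counts in sorted(windows.items())}


def seconds_until_window_closes(timestamp, window_seconds):
    """How long an event waits before its window's final result can be emitted."""
    window_start = (timestamp // window_seconds) * window_seconds
    return window_start + window_seconds - timestamp


# Hypothetical click events: (event time in seconds, page).
events = [(0, "home"), (3, "cart"), (4, "home"),
          (10, "home"), (12, "cart"), (19, "cart")]

print(tumbling_window_counts(events, window_seconds=10))
print([seconds_until_window_closes(t, 10) for t, _ in events])
```

In this simple model, a window's final count is only known once the window has ended, so a longer window gives a fuller aggregate but delays the result for events that arrive early in the window.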

Data Quality and Governance Complexities

The adage "garbage in, garbage out" is particularly pertinent in Big Data Processing. The insights derived from data analysis are only as reliable as the quality of the underlying data. However, ensuring data quality when dealing with massive volumes of data from diverse, and often heterogeneous, sources is a complex undertaking. Data can be incomplete, inaccurate, inconsistent, or outdated, leading to flawed analyses and incorrect business decisions.

Data quality issues can arise at various stages, from initial data collection to transformation and integration. Implementing robust data validation, cleaning, and enrichment processes is crucial. This might involve techniques for identifying and handling missing values, correcting errors, removing duplicates, and standardizing formats. These processes themselves can be computationally intensive when applied to big data.
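The cleaning steps named above (handling missing values, removing duplicates, and standardizing formats) can be sketched on a handful of records. The field names, the accepted date formats, and the choice to treat the email address as the duplicate key are all assumptions for illustration; a real pipeline would apply the same ideas with a distributed engine rather than a Python loop.

```python
from datetime import datetime

# Assumed input formats; a real dataset may need others.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def normalize_date(value):
    """Return an ISO date string, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def clean_records(records):
    """Standardize fields, reject rows missing required values, drop duplicates."""
    seen = set()
    cleaned, rejected = [], []
    for row in records:
        email = (row.get("email") or "").strip().lower()
        signup = normalize_date(row.get("signup_date") or "")
        if not email or signup is None:
            rejected.append(row)  # missing or unparseable required field
            continue
        if email in seen:
            continue  # duplicate of a record already kept
        seen.add(email)
        cleaned.append({"email": email, "signup_date": signup})
    return cleaned, rejected


# Hypothetical raw records with typical quality problems.
records = [
    {"email": " Ana@Example.com ", "signup_date": "2024-03-05"},
    {"email": "ana@example.com", "signup_date": "05/03/2024"},   # duplicate
    {"email": "bo@example.com", "signup_date": "05/03/2024"},
    {"email": "", "signup_date": "2024-01-01"},                  # missing email
    {"email": "cy@example.com", "signup_date": "yesterday"},     # bad date
]

cleaned, rejected = clean_records(records)
print(cleaned)
print(len(rejected), "rows rejected")
```

Keeping the rejected rows, rather than silently dropping them, makes it possible to measure how much of the input failed validation and why.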

Closely related to data quality is data governance. This refers to the overall management of the availability, usability, integrity, and security of the data used in an organization. Effective data governance involves defining policies, procedures, and responsibilities for how data is collected, stored, accessed, and used. In a big data context, governance becomes more complex due to the sheer volume of data, the variety of data types, and the often distributed nature of data storage and processing. Ensuring compliance with regulations (like GDPR for data privacy), managing data lineage (tracking the origin and transformations of data), and controlling access to sensitive information are critical aspects of big data governance. Lack of proper governance can lead to data breaches, regulatory penalties, and a loss of trust.

Vendor Lock-in Risks with Proprietary Platforms

While cloud-based solutions and proprietary big data platforms offer significant advantages in terms of ease of use, managed services, and integrated ecosystems, they also introduce the risk of vendor lock-in. Vendor lock-in occurs when an organization becomes overly dependent on a specific vendor's products and services, making it difficult or costly to switch to an alternative vendor or bring solutions in-house.

This dependency can arise from various factors, including the use of proprietary APIs, data formats specific to the vendor's platform, deep integration with other services from the same vendor, and the significant effort and cost associated with migrating large datasets and re-architecting applications for a different environment. Once an organization has invested heavily in a particular vendor's ecosystem, the switching costs – in terms of time, money, and resources – can be prohibitively high.

The risk of vendor lock-in can limit an organization's flexibility, potentially leading to higher long-term costs if the vendor increases prices or changes its service offerings unfavorably. It can also hinder innovation if the chosen platform does not keep pace with emerging technologies or if its capabilities are not well-suited for new business needs. To mitigate vendor lock-in, organizations may consider strategies such as adopting open standards and open-source technologies where possible, designing for portability, using multi-cloud or hybrid-cloud approaches, and carefully evaluating the long-term implications of technology choices. Clear data exit strategies should also be considered during the platform selection process.
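As a rough illustration of "designing for portability," application code can depend on a small storage interface instead of a specific vendor's SDK. Everything in this sketch is hypothetical: the `ObjectStore` interface, the two stand-in backends, and the `archive_report` function. A real adapter would wrap a provider's client library, which is not shown here.

```python
import tempfile
from pathlib import Path
from typing import Protocol


class ObjectStore(Protocol):
    """Hypothetical minimal storage interface the application codes against."""

    def put(self, key: str, data: bytes) -> None: ...
    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Stand-in backend that keeps blobs in a dictionary (useful in tests)."""

    def __init__(self) -> None:
        self._blobs = {}

    def put(self, key: str, data: bytes) -> None:
        self._blobs[key] = data

    def get(self, key: str) -> bytes:
        return self._blobs[key]


class LocalDiskStore:
    """Stand-in backend that writes each blob as a file under a root directory."""

    def __init__(self, root: str) -> None:
        self._root = Path(root)

    def put(self, key: str, data: bytes) -> None:
        (self._root / key).write_bytes(data)

    def get(self, key: str) -> bytes:
        return (self._root / key).read_bytes()


def archive_report(store: ObjectStore, name: str, text: str) -> int:
    """Application logic: uses only the interface, never a vendor-specific call."""
    store.put(name, text.encode("utf-8"))
    return len(store.get(name))


# The same application code runs against either backend.
print(archive_report(InMemoryStore(), "q1.txt", "revenue up"))
with tempfile.TemporaryDirectory() as root:
    print(archive_report(LocalDiskStore(root), "q1.txt", "revenue up"))
```

Swapping providers then means writing one new adapter rather than rewriting every caller. An abstraction like this does not remove the cost of migrating the data itself.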

Understanding the broader landscape of tools can help in making informed decisions. Exploring different platforms and their capabilities is a good practice.

Ethical Considerations in Big Data Processing

The power of Big Data Processing to analyze vast amounts of information and derive deep insights brings with it significant ethical responsibilities. As these technologies become more pervasive, it is crucial for practitioners, organizations, and policymakers to address the potential societal impacts and ensure that data is used responsibly and equitably. This section highlights some of the key ethical considerations in the field of big data.

Privacy Concerns (GDPR, Anonymization Techniques)

One of the most prominent ethical challenges in Big Data Processing is protecting individual privacy. The ability to collect, aggregate, and analyze vast amounts of personal data – from online behavior and social media activity to location data and health records – raises significant concerns about how this information is used, shared, and secured. Individuals may not always be aware of what data is being collected about them or how it is being processed, leading to potential misuse or unauthorized access.

Regulatory frameworks like the General Data Protection Regulation (GDPR) in Europe and similar laws in other jurisdictions have been established to give individuals more control over their personal data and to impose obligations on organizations that collect and process this data. These regulations often require explicit consent for data collection, mandate data minimization (collecting only necessary data), and grant individuals rights to access, rectify, and erase their data. Compliance with these regulations is a critical aspect of ethical big data processing.

Anonymization and pseudonymization techniques are often employed to protect privacy by removing or obscuring personally identifiable information (PII) from datasets. However, achieving true anonymization can be challenging, as re-identification can sometimes be possible by linking anonymized data with other available datasets. Therefore, ongoing research and development of robust privacy-preserving techniques, such as differential privacy, are crucial. Ethical data handling requires a commitment to transparency, fairness, and respecting individual autonomy over their personal information.
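Two of the techniques mentioned above can be sketched briefly: pseudonymization with a keyed hash, and a differentially private count using the Laplace mechanism. This is an illustrative sketch under stated assumptions, not a production design. The secret key, the epsilon value, and the function names are made up, and Python's `random` module is not suitable for real privacy guarantees.

```python
import hashlib
import hmac
import random


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256).

    The same input and key always give the same token, so records can still
    be joined, but the token cannot be recomputed without the key.
    """
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def noisy_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism for a counting query, whose sensitivity is 1."""
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical key; in practice it would be stored separately from the data.
key = b"example-secret-key"
print(pseudonymize("alice@example.com", key)[:16], "...")

rng = random.Random(0)  # seeded only to make the demo repeatable
print(round(noisy_count(1_000, epsilon=0.5, rng=rng), 2))
```

Pseudonymized data can still be re-identified if the key leaks or if the other columns are distinctive enough, which is the linkage risk described above. The noisy count trades accuracy for privacy: a smaller epsilon adds more noise.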

Bias Amplification in Algorithmic Decision-Making

Big Data Processing often fuels algorithmic decision-making systems used in various domains, from loan applications and hiring processes to criminal justice and content recommendation. While these systems aim for objectivity, they can inadvertently perpetuate and even amplify existing societal biases if the data they are trained on reflects historical discrimination or underrepresentation of certain groups. This is a significant ethical concern with far-reaching implications.

Bias can creep into algorithms in several ways. If the historical data used to train a machine learning model contains biases (e.g., if past hiring decisions were biased against certain demographics), the model will likely learn and replicate these biases in its future predictions or classifications. Furthermore, the features chosen for the model or the way the model is designed can also introduce bias. For example, using proxy variables that are correlated with protected attributes (like race or gender) can lead to discriminatory outcomes, even if the protected attributes themselves are not explicitly used.

The consequences of biased algorithms can be severe, leading to unfair treatment, reinforcement of stereotypes, and exacerbation of social inequalities. Addressing algorithmic bias requires a multi-faceted approach. This includes careful examination of training data for potential biases, developing fairness-aware machine learning algorithms, implementing robust testing and validation procedures to detect and mitigate bias, and ensuring transparency and accountability in how algorithmic systems are developed and deployed. It also involves fostering diversity within data science teams to bring varied perspectives to the design and evaluation process. The pursuit of fairness and equity in algorithmic decision-making is an ongoing and critical area of research and practice in the big data field.
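One simple check used when testing for bias is to compare how often each group receives a favorable outcome. The sketch below computes per-group selection rates and their ratio on made-up decisions. The group labels, the data, and the function names are assumptions, and a single ratio like this is only one of many possible fairness measures.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, approved). Returns {group: approval rate}."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Hypothetical loan decisions produced by some model.
decisions = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)

rates = selection_rates(decisions)
print(rates)
print(disparate_impact_ratio(rates))
```

A ratio well below 1.0 does not prove discrimination on its own, but it flags a disparity that warrants a closer look at the training data and features.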

Environmental Impact of Data Centers

The infrastructure required for Big Data Processing, particularly the vast data centers that house servers for storage and computation, consumes significant amounts of energy. This energy consumption contributes to greenhouse gas emissions and has a tangible environmental impact, a concern that is growing as the scale of big data continues to expand. Addressing the environmental sustainability of big data operations is an increasingly important ethical and practical consideration.

Data centers require energy not only to power the servers themselves but also for cooling systems to prevent overheating. The manufacturing of hardware components also has an environmental footprint. As the demand for data processing and storage grows, so does the energy footprint of the digital economy. This has led to increased scrutiny of the energy sources used to power data centers and efforts to improve energy efficiency.

Many large technology companies and data center operators are now investing in renewable energy sources, designing more energy-efficient hardware and cooling systems, and exploring innovative approaches like locating data centers in cooler climates or using liquid cooling. Optimizing software and algorithms to reduce computational load can also contribute to lower energy consumption. Furthermore, a "circular economy" approach to hardware, involving recycling and reusing components, can help mitigate the environmental impact of manufacturing. As sustainability becomes a greater global priority, the big data industry faces the challenge of balancing the benefits of data-driven innovation with the responsibility to minimize its environmental footprint.

Transparency in Automated Systems

As Big Data Processing increasingly drives automated decision-making systems that impact people's lives – from credit scoring and insurance underwriting to medical diagnoses and parole decisions – the need for transparency and interpretability becomes paramount. Many advanced algorithms, particularly complex machine learning models like deep neural networks, can operate as "black boxes," where it is difficult to understand precisely how they arrive at a particular decision or prediction. This lack of transparency raises significant ethical concerns.

When automated systems make critical decisions without clear explanations, it can be challenging to identify and correct errors, detect and mitigate biases, or ensure accountability. Individuals affected by these decisions may have no way of understanding the reasoning behind them or appealing an unfair outcome. This opacity can erode trust in automated systems and lead to concerns about fairness, due process, and potential discrimination.

Efforts are underway to develop techniques for "Explainable AI" (XAI) and "Interpretable Machine Learning," which aim to make the decision-making processes of complex algorithms more understandable to humans. This involves creating models that are inherently more transparent or developing methods to provide post-hoc explanations for the predictions of black-box models. Ensuring transparency in automated systems is crucial for building trust, enabling oversight, and empowering individuals to understand and, if necessary, challenge decisions that affect them. It is a key component of responsible AI and ethical big data practice.
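Permutation importance is one example of a post-hoc explanation method: shuffle one input feature and see how much the model's accuracy drops. The sketch below applies it to a toy model. The model, the data, and the function names are hypothetical, and mature libraries offer more careful implementations.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows for which the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, feature_index, rng, repeats=10):
    """Average accuracy drop when one feature's values are shuffled across rows."""
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(repeats):
        column = [row[feature_index] for row in rows]
        rng.shuffle(column)
        shuffled = [
            row[:feature_index] + (value,) + row[feature_index + 1:]
            for row, value in zip(rows, column)
        ]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return sum(drops) / repeats


def black_box(row):
    """Toy 'opaque' model: it secretly uses only the first feature."""
    return 1 if row[0] > 0.5 else 0


rng = random.Random(7)  # seeded only to make the demo repeatable
rows = [(rng.random(), rng.random()) for _ in range(200)]
labels = [black_box(row) for row in rows]

for index in (0, 1):
    print(index, round(permutation_importance(black_box, rows, labels, index, rng), 3))
```

Shuffling the first feature hurts accuracy, while shuffling the second has no effect. That reveals which input the model relies on without inspecting its internals.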

Formal Education Pathways

For those aspiring to build a career in Big Data Processing, a strong educational foundation is often a key stepping stone. Formal education, through undergraduate and graduate degrees, provides the theoretical knowledge and practical skills necessary to tackle the complexities of this field. This section outlines typical academic routes, core areas of study, research opportunities, and relevant certifications that can pave the way for a successful career in big data.

Relevant Undergraduate/Graduate Degrees (CS, Data Science)

A solid educational background is typically essential for a career in Big Data Processing. Several undergraduate and graduate degree programs can provide the necessary knowledge and skills. The most common and directly relevant fields of study include Computer Science, Data Science, Statistics, and Software Engineering.

A Bachelor's degree in Computer Science provides a strong foundation in programming, algorithms, data structures, database systems, and operating systems, all of which are crucial for understanding and working with big data technologies. Many Computer Science programs now offer specializations or elective tracks in data science, machine learning, or big data.

A degree in Data Science is increasingly popular and specifically tailored to the needs of the data industry. These programs typically offer an interdisciplinary curriculum combining computer science, statistics, and domain expertise. Students learn data analysis techniques, machine learning algorithms, data visualization, and tools for working with large datasets.

Degrees in Statistics or Mathematics are also highly valuable, providing a deep understanding of statistical modeling, probability theory, and mathematical optimization, which are fundamental to data analysis and machine learning. Students in these programs often benefit from complementing their studies with programming skills and experience with data analysis software. For more specialized roles, particularly in research or advanced development, a Master's degree or Ph.D. in one of these fields, or a related area like Artificial Intelligence or Machine Learning, is often preferred or required. These advanced degrees provide opportunities for deeper specialization and original research.

Many universities now offer specialized Master's programs in Big Data Analytics, Data Engineering, or Business Analytics that are designed to equip graduates with the specific skills needed for the big data job market. When choosing a program, consider the curriculum, the faculty's expertise, research opportunities, and industry connections.

Core Coursework (Distributed Systems, Statistics)

Regardless of the specific degree program, certain core areas of coursework are fundamental for anyone aiming to work in Big Data Processing. A strong grounding in these subjects will provide the essential knowledge and skills to understand, design, and implement big data solutions.

Distributed Systems and Computing: Understanding how to design and manage systems where data and computation are spread across multiple machines is crucial. Coursework in distributed systems covers topics like parallel computing, distributed algorithms, fault tolerance, consistency models, and networking. This knowledge is directly applicable to working with frameworks like Hadoop and Spark and cloud-based big data platforms.

Statistics and Probability: A solid understanding of statistical concepts and methods is essential for data analysis, hypothesis testing, and interpreting results. Topics like descriptive statistics, inferential statistics, probability distributions, regression analysis, and experimental design are fundamental. This forms the basis for many data mining and machine learning techniques.

Database Systems: Knowledge of database design, data modeling, SQL, and an understanding of both relational (SQL) and NoSQL databases are important. This includes concepts like data warehousing, data lakes, and how different database technologies are suited for different types of data and workloads.

Algorithms and Data Structures: Proficiency in designing and analyzing algorithms, along with a strong understanding of common data structures, is vital for writing efficient code for data processing and analysis. This is particularly important when dealing with large datasets where performance is critical.

Programming Languages: Proficiency in one or more programming languages commonly used in data science and big data, such as Python, Java, or Scala, is essential. Coursework should focus not just on syntax but also on software development best practices.

Machine Learning: An introduction to machine learning concepts, algorithms (e.g., regression, classification, clustering), model evaluation techniques, and popular ML libraries is increasingly important, even for roles that are not purely ML-focused.

Many universities integrate these core topics into their Computer Science, Data Science, and related degree programs. Look for courses that offer hands-on projects and experience with industry-standard tools.

Research Opportunities in Academia

For individuals passionate about pushing the boundaries of Big Data Processing and contributing to new knowledge, academia offers a wealth of research opportunities. Universities and research institutions are at the forefront of exploring novel techniques, developing advanced algorithms, and addressing the fundamental challenges in managing, analyzing, and interpreting massive datasets. Engaging in research can lead to a deeper understanding of the field and contribute to innovations that shape its future.

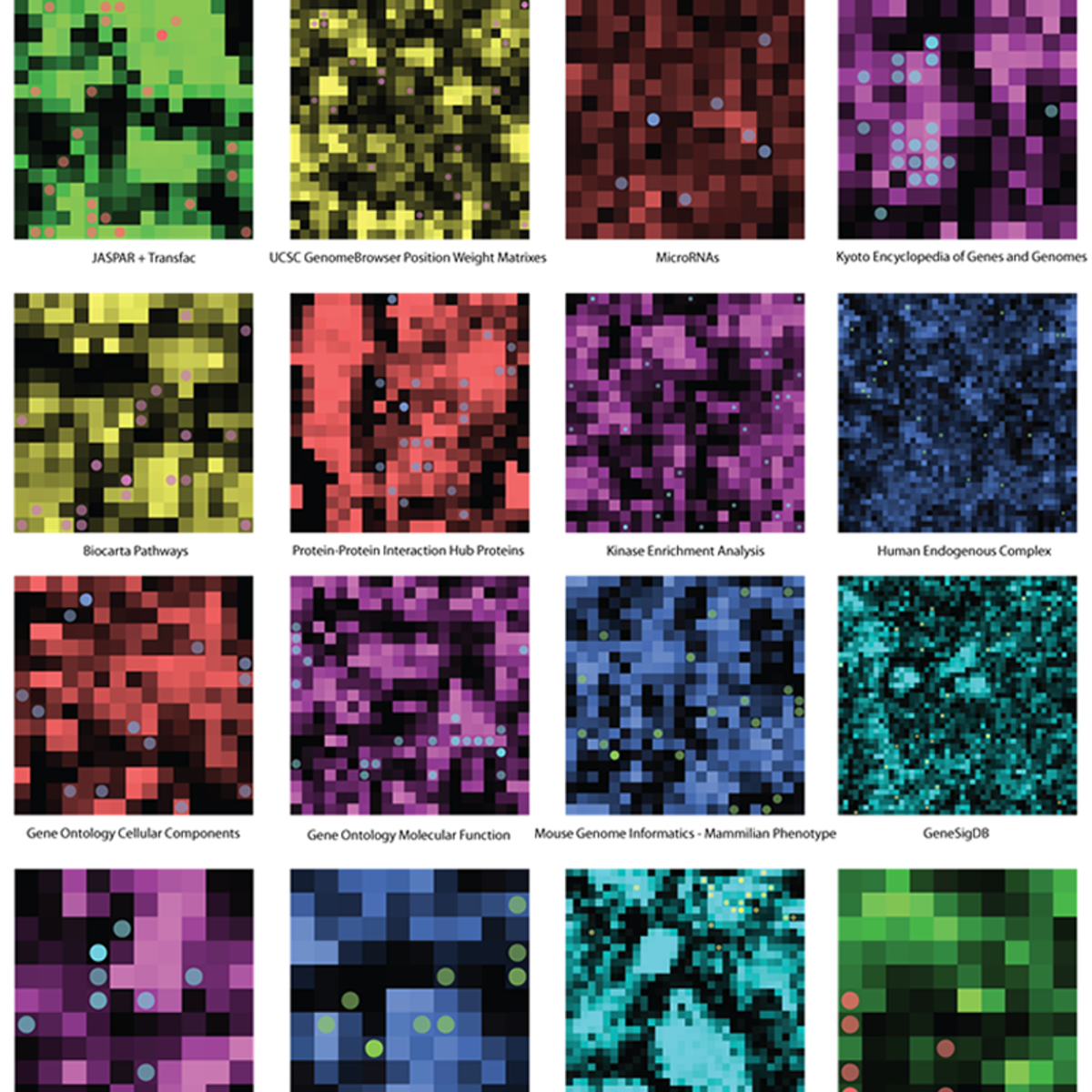

Research areas in big data are diverse and interdisciplinary. They can range from theoretical work on the foundations of distributed computing, statistical learning theory, and algorithm design, to more applied research focusing on specific domains like healthcare informatics, bioinformatics, climate science, urban computing, or social network analysis. Many research groups work on developing new machine learning models, improving the scalability and efficiency of big data systems, addressing data privacy and security challenges, or exploring the ethical implications of data-intensive technologies.

Students pursuing Master's or Ph.D. degrees often have the opportunity to engage in research projects under the guidance of faculty members. This can involve conducting literature reviews, designing experiments, developing and implementing new algorithms or systems, analyzing data, and publishing findings in academic journals and conferences. Such experiences are invaluable for those considering careers in research, either in academia or in industrial research labs. Even for those not pursuing a purely research-focused career, exposure to research methodologies can enhance critical thinking and problem-solving skills, which are highly valued in any data-related profession. Many universities also foster collaborations with industry partners, providing opportunities for research to have a direct real-world impact.

Certifications for Specialized Roles

In addition to formal degrees, professional certifications can be a valuable way to demonstrate specialized skills and knowledge in Big Data Processing. Certifications are offered by technology vendors (like AWS, Google Cloud, Microsoft, Cloudera), industry organizations, and training providers. They can be particularly useful for showcasing proficiency in specific tools, platforms, or roles, and can enhance a candidate's resume, especially for those looking to enter the field or pivot to a new specialization.

Vendor-specific certifications, such as the AWS Certified Big Data - Specialty (later renamed AWS Certified Data Analytics - Specialty and since retired in favor of newer AWS data certifications), Google Professional Data Engineer, or Microsoft Certified: Azure Data Engineer Associate, validate expertise in using a particular cloud provider's big data services. These are highly regarded because many organizations run their big data initiatives on cloud platforms. Certifications from companies like Cloudera (focused on Hadoop and Spark ecosystems) can also be beneficial, particularly for roles involving on-premises or hybrid deployments.

There are also vendor-neutral certifications that focus on broader concepts and skills in data engineering, data science, or machine learning. While certifications should not be seen as a substitute for hands-on experience and a solid understanding of fundamental concepts, they can complement a formal education and provide a structured learning path. They can be particularly helpful for gaining practical skills in specific technologies and for demonstrating a commitment to continuous learning in a rapidly evolving field. When considering certifications, research their relevance to your target roles and industries, and choose those that align with your career goals. Many online courses, including some on OpenCourser, can help prepare you for these certification exams.

Self-Directed Learning and Online Resources

For individuals looking to enter the field of Big Data Processing, or for professionals seeking to upskill, self-directed learning and online resources offer flexible and accessible pathways. The rapid evolution of big data technologies means that continuous learning is essential, and online platforms provide a wealth of courses, tutorials, and communities to support this journey. This section explores strategies for building skills independently, the importance of hands-on projects, and how to engage with the broader big data community.

Online courses are highly suitable for building a foundational understanding of Big Data Processing concepts and tools. Platforms like OpenCourser aggregate thousands of courses from various providers, allowing learners to find resources that match their specific learning goals and pace. These courses often cover everything from introductory principles to advanced techniques in areas like distributed computing, specific technologies (e.g., Spark, Hadoop), database management, and machine learning. They can serve as a primary learning resource or supplement traditional education by providing practical, hands-on experience with the latest tools.

Professionals can use online courses to stay current with new technologies, deepen their expertise in a particular area, or acquire skills needed for a career pivot. For example, a software engineer looking to move into data engineering can find targeted courses on data pipeline development, ETL processes, and cloud data services. The modular nature of many online courses allows learners to focus on specific skills relevant to their current role or career aspirations. Furthermore, many courses offer certificates upon completion, which can be a valuable addition to a professional profile.

Building Foundational Skills Through Modular Learning

Embarking on a journey into Big Data Processing can seem daunting due to the breadth and depth of the field. However, a modular learning approach, breaking down the vast subject into manageable components, can make the process more approachable and effective. Online courses are particularly well-suited for this, allowing learners to build foundational skills step-by-step and at their own pace.

Start by focusing on the core concepts. This might include understanding what big data is (the "Vs": Volume, Velocity, Variety, etc.), the typical lifecycle of a big data project (ingestion, storage, processing, analysis, visualization), and the fundamental challenges involved. Courses that provide a general overview of the big data landscape can be a good starting point.

Next, delve into foundational technical skills. Strong programming skills are essential, with Python being a very popular choice in the data science and big data community due to its extensive libraries (like Pandas, NumPy, Scikit-learn). SQL is also crucial for data manipulation and querying. Understanding basic Linux commands and shell scripting can be very helpful for managing systems and automating tasks. Many online platforms offer excellent introductory courses in these areas.

Once you have a grasp of programming and basic data concepts, you can move on to more specialized topics. This could include learning about specific big data technologies like Apache Hadoop and Apache Spark, understanding different types of databases (SQL, NoSQL), exploring cloud platforms (AWS, Azure, GCP) and their data services, or getting an introduction to machine learning principles. Look for courses that offer hands-on exercises and projects to reinforce your learning. The key is to build a solid base in each area before moving to more advanced topics. OpenCourser's Learner's Guide offers valuable tips on structuring your learning and making the most of online educational resources.

Hands-on Projects with Public Datasets

Theoretical knowledge is essential, but practical, hands-on experience is what truly solidifies understanding and builds a compelling portfolio in the field of Big Data Processing. Working on projects with real-world or realistic datasets allows learners to apply the concepts and tools they've learned, encounter common challenges, and develop problem-solving skills. Publicly available datasets offer a fantastic resource for such projects.

Numerous organizations and government agencies release datasets covering a wide range of topics, from economics and demographics to scientific research and social media trends. Platforms like Kaggle, UCI Machine Learning Repository, Data.gov, and Google Dataset Search are excellent places to find interesting datasets. When selecting a project, try to choose something that genuinely interests you, as this will keep you motivated. Start with a clear objective or question you want to answer using the data.

Your project could involve the entire data pipeline:

- Data Ingestion and Cleaning: Practice collecting data from different sources (e.g., CSV files, APIs) and cleaning it to handle missing values, errors, and inconsistencies.

- Data Storage and Management: If the dataset is large enough, experiment with storing it in different database systems or using distributed storage like HDFS (you can set up a single-node cluster for learning).

- Data Processing and Analysis: Use tools like Python with Pandas, Spark, or SQL to perform exploratory data analysis, transformations, and more complex analyses. If relevant, apply machine learning algorithms.

- Data Visualization: Use libraries like Matplotlib, Seaborn (for Python), or tools like Tableau Public to create meaningful visualizations that communicate your findings.
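The first stages of the pipeline above can be sketched in a few lines. The example below is only an illustration: it uses Python's standard library rather than Pandas or Spark so that it runs without extra installs, and the inline CSV text, the column names, and the function names (ingest, clean, average_by_city) are all hypothetical stand-ins for a real public dataset and your own code.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

# Hypothetical sample standing in for a downloaded public dataset.
RAW_CSV = """city,temperature_c
Oslo,4.0
Oslo,
Lagos,31.5
Lagos,30.5
Oslo,6.0
Lima,not_available
"""


def ingest(text):
    """Ingestion: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def clean(rows):
    """Cleaning: drop rows whose temperature is missing or not numeric."""
    cleaned = []
    for row in rows:
        try:
            temperature = float(row["temperature_c"])
        except (TypeError, ValueError):
            continue  # skip empty or malformed values
        cleaned.append({"city": row["city"], "temperature_c": temperature})
    return cleaned


def average_by_city(rows):
    """Analysis: compute the mean temperature for each city."""
    groups = defaultdict(list)
    for row in rows:
        groups[row["city"]].append(row["temperature_c"])
    return {city: mean(values) for city, values in groups.items()}


rows = ingest(RAW_CSV)
cleaned = clean(rows)
averages = average_by_city(cleaned)
print(averages)
```

In a real project the same three steps would usually read from a file or API and hand the result to a plotting library. Keeping each stage in its own function makes it easier to swap in Pandas or Spark later without rewriting the whole script.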

Document your project thoroughly, including your methodology, code, and insights. Platforms like GitHub are excellent for showcasing your project code. These hands-on projects not only deepen your learning but also serve as tangible proof of your skills to potential employers.

Many online courses include capstone projects or guided projects designed to provide this practical experience. Look for such opportunities as you plan your learning path. OpenCourser features many project-based courses that can help you gain practical skills.

Open-Source Community Contributions

Engaging with the open-source community can be an incredibly rewarding way to learn, contribute, and build your reputation in the Big Data Processing field. Many of the foundational tools and frameworks in big data, such as Apache Hadoop, Apache Spark, Apache Kafka, and numerous libraries, are open-source projects with vibrant communities of developers and users.

Contributing to open-source projects can take many forms, and you don't necessarily need to be an expert coder to get started. You can begin by:

- Improving Documentation: Clear and accurate documentation is vital for any software project. If you find areas in the documentation that are unclear, outdated, or missing, you can contribute by suggesting improvements or writing new content.

- Reporting Bugs: As you use open-source tools, you might encounter bugs or unexpected behavior. Reporting these issues clearly, with reproducible examples, helps developers fix them.

- Testing and Providing Feedback: Many projects have development or beta versions that need testing. Participating in testing and providing constructive feedback can be very helpful.

- Answering Questions: If you gain expertise in a particular tool, you can help others by answering questions on forums, mailing lists, or platforms like Stack Overflow.

- Contributing Code: As your skills grow, you can start contributing code, whether it's fixing bugs, implementing new features, or optimizing existing code. Most projects have guidelines for contributors and processes for submitting patches or pull requests.

Participating in open-source communities allows you to learn from experienced developers, see how real-world software is built and maintained, and collaborate on meaningful projects. It's also a great way to network with other professionals in the field. Your contributions, even small ones, can be valuable and can be showcased on your resume or GitHub profile, demonstrating your initiative and practical skills.

Balancing Theoretical Knowledge with Practical Implementation

A successful journey in Big Data Processing requires a careful balance between acquiring theoretical knowledge and gaining practical implementation skills. While understanding the underlying concepts, algorithms, and principles is crucial for making informed decisions and solving complex problems, it is the ability to apply this knowledge using real-world tools and technologies that ultimately delivers value.

Theoretical knowledge provides the "why" – why certain algorithms are used, why different data models are appropriate for different scenarios, or why particular system architectures are chosen. This understanding comes from formal education, reading textbooks and research papers, and studying the foundational concepts of computer science, statistics, and distributed systems. Without this theoretical underpinning, it's easy to misuse tools or misinterpret results, even if one is proficient in using specific software.

Practical implementation skills provide the "how" – how to write code in Python or Scala, how to set up and configure a Spark cluster, how to design and query a NoSQL database, or how to build and deploy a machine learning model using cloud services. These skills are typically gained through hands-on exercises, projects, internships, and on-the-job experience. Online courses often emphasize practical application, providing opportunities to work with industry-standard tools and datasets.

Finding the right equilibrium is key. Too much focus on theory without practice can lead to an inability to execute, while too much focus on tools without understanding the fundamentals can result in superficial knowledge and an inability to adapt to new challenges or technologies. The most effective learners continuously cycle between theory and practice, using practical projects to solidify theoretical understanding and theoretical knowledge to guide practical decisions. This iterative approach ensures a deep and robust skill set, preparing individuals for the dynamic and evolving landscape of Big Data Processing.

Many comprehensive online specializations and programs aim to strike this balance, often culminating in a capstone project that requires applying both theoretical and practical learning.

Future Trends in Big Data Processing

The field of Big Data Processing is in a constant state of evolution, driven by technological advancements, changing business needs, and new research frontiers. Staying abreast of emerging trends is crucial for professionals and organizations looking to remain competitive and leverage the full potential of their data assets. This section will explore some of the key future trends shaping the landscape of big data.

Convergence with AI/ML and Edge Computing

The future of Big Data Processing is increasingly intertwined with advancements in Artificial Intelligence (AI), Machine Learning (ML), and Edge Computing. This convergence is leading to more intelligent, responsive, and efficient data-driven applications.

AI and ML are moving beyond being just applications of big data to becoming integral components of the data processing pipeline itself. We are seeing AI-powered tools for automating data preparation, optimizing data storage and querying, and even generating insights with less human intervention. Sophisticated ML models are being deployed at scale to handle complex tasks like natural language processing, computer vision, and advanced predictive analytics, all fueled by massive datasets. The trend is towards more democratized AI, where tools and platforms make it easier for non-experts to leverage ML capabilities.

Edge Computing involves processing data closer to where it is generated – at the "edge" of the network – rather than sending it to a centralized cloud or data center. This is particularly relevant for Internet of Things (IoT) applications, autonomous vehicles, and other scenarios where low latency and real-time decision-making are critical. By processing data locally, edge computing can reduce bandwidth requirements, improve response times, and enhance privacy. The convergence with big data means developing strategies for managing and analyzing distributed data at the edge, potentially performing initial processing locally and sending only aggregated or relevant data to central systems for further analysis. This distributed intelligence is a key trend for the future.
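To make the idea of local processing concrete, here is a minimal sketch of an edge device that reduces a window of raw sensor readings to a small summary before anything is sent upstream. The function name summarize_window, the alert threshold, and the sample readings are assumptions made for illustration, not part of any particular edge framework.

```python
from statistics import mean


def summarize_window(readings, alert_threshold):
    """Reduce a non-empty window of raw readings to a compact summary.

    Only this summary, not the raw readings, would be sent to the
    central system in this sketch.
    """
    if not readings:
        raise ValueError("readings must not be empty")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
        # Keep individual values only when they look anomalous.
        "alerts": [r for r in readings if r > alert_threshold],
    }


# Hypothetical temperature readings collected on the device.
window = [20.0, 21.0, 19.0, 95.0, 20.0]
summary = summarize_window(window, alert_threshold=80.0)
print(summary)
```

The payload stays roughly the same size whether the window holds five readings or five thousand, which is where the bandwidth saving comes from. The trade-off is that the central system can no longer recompute statistics the device did not include.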

The combination of AI/ML with edge computing is paving the way for "intelligent edge" applications that can learn and adapt in real-time, based on local data, while still benefiting from the broader insights of centralized big data analytics.

Serverless Architectures and Auto-Scaling

Serverless architectures and auto-scaling capabilities are becoming increasingly prominent in Big Data Processing, offering significant benefits in terms of operational efficiency, cost optimization, and developer productivity. These approaches allow organizations to focus more on building data applications and less on managing underlying infrastructure.

Serverless computing, most commonly delivered as Function-as-a-Service (FaaS), allows developers to run code in response to events without provisioning or managing servers. Cloud providers automatically handle the infrastructure, scaling, and maintenance. In the context of big data, serverless functions can be used for various tasks within a data pipeline, such as data ingestion, transformation, and triggering downstream processes. Services like AWS Lambda, Google Cloud Functions, and Azure Functions are examples of serverless platforms. This model can be highly cost-effective for intermittent or bursty workloads, because you typically pay only for the compute time consumed while your code is running.
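As a rough illustration of a transformation step written as a serverless function, the sketch below follows the handler(event, context) signature that AWS Lambda uses for Python functions. The shape of the event (a "records" list with "user_id" and "amount" fields) is a hypothetical example, and a real trigger such as a queue or storage notification would deliver a different structure.

```python
import json


def handler(event, context):
    """Transform a batch of records delivered in a single event.

    The event layout used here is an assumption for illustration.
    """
    records = event.get("records", [])
    transformed = [
        {"user_id": r["user_id"], "amount_cents": round(r["amount"] * 100)}
        for r in records
        if "user_id" in r and "amount" in r  # skip incomplete records
    ]
    body = {"processed": len(transformed), "items": transformed}
    return {"statusCode": 200, "body": json.dumps(body)}


# Local invocation with a hand-built event; no cloud account is needed.
sample_event = {
    "records": [
        {"user_id": "u1", "amount": 12.5},
        {"amount": 3.0},  # missing user_id, so it is dropped
    ]
}
result = handler(sample_event, None)
print(result["body"])
```

Because the function keeps no state between calls, the platform can run as many copies in parallel as there are incoming events, which is what makes this style a natural fit for ingestion and lightweight transformation.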

Auto-scaling is a feature offered by cloud platforms and some modern big data frameworks that automatically adjusts the amount of compute resources allocated to an application based on its current load or predefined metrics. For big data workloads, which can be highly variable (e.g., high processing demand during peak hours, lower demand at other times), auto-scaling ensures that the system has enough resources to handle the load efficiently without being over-provisioned (and thus incurring unnecessary costs) during periods of low activity. This elasticity is a key advantage of cloud-based big data solutions, allowing systems to scale up to process large batch jobs or handle spikes in streaming data, and then scale down when the demand subsides.
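One simple way to picture an auto-scaling decision is a proportional rule: scale the current worker count by the ratio of observed load to target load, then clamp the result to configured limits. The sketch below assumes that rule; the function name desired_workers, the load units, and the default limits are illustrative choices rather than the behavior of any specific cloud service.

```python
import math


def desired_workers(current_workers, observed_load, target_load,
                    min_workers=1, max_workers=20):
    """Return a worker count that moves per-worker load toward the target.

    observed_load and target_load are per-worker metrics in the same
    unit (for example, percent CPU or queued messages per worker).
    """
    if target_load <= 0:
        raise ValueError("target_load must be positive")
    raw = math.ceil(current_workers * observed_load / target_load)
    # Clamp so the system never scales to zero or beyond its budget.
    return max(min_workers, min(max_workers, raw))


print(desired_workers(4, observed_load=90, target_load=60))   # scale up
print(desired_workers(4, observed_load=15, target_load=60))   # scale down
print(desired_workers(10, observed_load=300, target_load=60)) # capped
```

Production autoscalers typically add cooldown periods and smoothing on top of a rule like this so that short spikes do not cause the worker count to oscillate.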

The combination of serverless architectures for event-driven processing and auto-scaling for resource-intensive computations is enabling more agile, resilient, and cost-efficient big data solutions. This trend allows data teams to be more responsive to business needs and experiment more rapidly with new data initiatives.

Growing Emphasis on Federated Learning

Federated Learning is an emerging machine learning paradigm that is gaining traction, particularly in scenarios where data privacy, security, or data residency are major concerns. Unlike traditional centralized machine learning where all data is aggregated in one location for model training, federated learning allows multiple parties to collaboratively train a shared prediction model without exchanging their raw data.

In a federated learning setup, a global machine learning model is typically initialized on a central server. This model is then sent to multiple decentralized devices or data silos (e.g., mobile phones, hospitals, different organizations) where local data resides. Each device trains the model on its local data, creating an updated local model. Instead of sending the raw data back to the central server, only the model updates (e.g., changes to the model's parameters or weights) are transmitted. The central server then aggregates these updates from multiple devices to improve the global model. This process can be iterated multiple times to further refine the model.
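The round trip described above can be simulated in a single process. The following is a minimal sketch, assuming a one-parameter linear model y = weight * x, plain gradient descent on each client, and a server that averages the returned weights in proportion to each client's sample count. The client datasets, learning rate, and function names are hypothetical, and a real deployment would add secure communication and far larger models.

```python
def local_update(weight, data, lr=0.05, epochs=20):
    """Train y = weight * x on one client's private data.

    Only the updated weight is returned; the data stays local.
    """
    for _ in range(epochs):
        # Gradient of mean squared error with respect to weight.
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


def federated_round(global_weight, clients):
    """Run one round: local training, then sample-weighted averaging."""
    updates = [(local_update(global_weight, data), len(data))
               for data in clients]
    total_samples = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total_samples


# Three hypothetical clients whose private data all follow y = 2x.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
    [(1.5, 3.0), (0.5, 1.0), (2.5, 5.0)],
]

weight = 0.0  # global model initialized on the "server"
for _ in range(5):
    weight = federated_round(weight, clients)
print(weight)
```

Weighting by sample count keeps a client with one data point from pulling the global model as hard as a client with many. In this toy setup the global weight moves toward 2, the slope shared by all three clients.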