Text Classification

Understanding Text Classification: A Comprehensive Guide

Text classification, at its core, is the process of assigning predefined categories or labels to text. Think of it as a sophisticated sorting system for words, sentences, and documents. This capability is a cornerstone of Natural Language Processing (NLP), a field of artificial intelligence that focuses on enabling computers to understand and process human language. The goal is to take unstructured text data, which makes up a large share of the information organizations generate every day, and organize it in a meaningful way. This allows for efficient analysis and retrieval of information, transforming raw text into actionable insights.

Working with text classification can be quite engaging. Imagine building systems that automatically detect spam emails, saving countless hours of manual filtering. Or picture developing tools that analyze customer reviews to gauge public sentiment about a new product, giving businesses immediate feedback. The work can also contribute to other fields: in medicine, systems can help process clinical texts for diagnostic support, and in finance, they can categorize financial documents for easier analysis. The ability to transform vast amounts of text into structured, understandable information opens up a world of possibilities across numerous industries.

Introduction to Text Classification

This section delves into the foundational aspects of text classification, exploring its definition, historical roots, and its wide-ranging applications across various industries.

Definition and core purpose of text classification

Text classification, also known as text categorization, is a fundamental task in Natural Language Processing (NLP) and machine learning. Its primary purpose is to automatically assign predefined labels or categories to text documents based on their content. This process transforms unstructured text data into a structured format, making it easier to manage, analyze, and retrieve information. Essentially, text classification helps computers "understand" and organize large volumes of text by sorting them into meaningful groups.

The core idea is to train a model on a set of labeled text data, where each document is already assigned to one or more categories. The model learns to identify patterns and features in the text that are indicative of each category. Once trained, this model can then predict the category of new, unseen text documents. This automated approach offers significant advantages over manual classification, including increased speed, efficiency, and consistency, especially when dealing with the massive amounts of text data generated today.

Text classification can be a binary task, such as classifying emails as "spam" or "not spam," or a multi-class task, like categorizing news articles into topics such as "sports," "politics," or "technology." The ultimate aim is to make text data more accessible and useful, enabling various applications that rely on organized textual information.

Historical context and evolution

The journey of text classification began long before the digital age, with early forms of manual categorization rooted in library science and corpus linguistics. Librarians and scholars manually sorted texts into topics or genres to organize information. However, the exponential growth of documents, particularly with the advent of the internet, made these manual methods impractical.

The 1960s marked the initial foray into automated text classification, primarily relying on heuristic methods and rule-based systems. These early systems used handcrafted rules based on expert knowledge, such as keyword matching, to assign categories. While an improvement over purely manual efforts, these rule-based systems were often costly to develop, difficult to maintain, and lacked adaptability to new types of text or evolving language.

A significant shift occurred with the rise of machine learning in the latter half of the 20th century, when statistical methods began to replace or augment rule-based approaches. Algorithms like Naive Bayes, Support Vector Machines (SVMs), and K-Nearest Neighbors (KNN) allowed systems to learn from labeled data, improving accuracy and reducing the need for explicitly programmed rules. The 1990s and 2000s brought wide adoption of Term Frequency-Inverse Document Frequency (TF-IDF) weighting, whose IDF component dates back to information-retrieval research in the early 1970s, for feature extraction, along with a growing focus on mining text from the burgeoning internet.

More recently, the field has been transformed by deep learning. Models like Recurrent Neural Networks (RNNs), Convolutional Neural Networks (CNNs), and especially Transformer-based architectures (e.g., BERT, GPT) have achieved state-of-the-art performance by learning complex patterns and semantic relationships directly from text data. This evolution continues, with ongoing research focusing on areas like multilingual models, few-shot learning, and model efficiency.

Key industries/applications (e.g., spam detection, sentiment analysis)

Text classification is not just an academic exercise; it's a powerful technology with a wide array of practical applications across numerous industries. Its ability to automatically organize and understand text has made it indispensable in today's data-driven world.

One of the most recognizable applications is spam detection in email. Algorithms analyze incoming emails for characteristics typical of unsolicited messages, automatically filtering them into a spam folder. This saves users time and protects them from potentially malicious content.

Another prominent application is sentiment analysis, also known as opinion mining. Businesses use this to gauge customer opinions and feelings expressed in reviews, social media posts, and surveys. By classifying text as positive, negative, or neutral, companies can understand brand perception, identify areas for product improvement, and enhance customer service. Sentiment analysis is crucial for reputation management and making data-driven business decisions.

Beyond these, text classification powers topic categorization for news articles, blog posts, and research papers, making content easier to find and consume. In customer support, it helps route inquiries to the appropriate department or identify urgent issues. The legal field uses it for document review and e-discovery, while the healthcare industry employs it for analyzing medical records and research to support diagnoses. Financial institutions use text classification to categorize documents and detect fraud. Essentially, any industry dealing with large volumes of text can benefit from the organizational and analytical power of text classification.

Key Concepts and Terminology

To truly grasp text classification, it's important to become familiar with its core concepts and vocabulary. This section breaks down essential terms and methods that form the building blocks of this field.

Feature extraction methods (TF-IDF, word embeddings)

Before a machine learning model can classify text, the text itself needs to be transformed into a numerical format that the algorithm can understand. This transformation process is called feature extraction. The goal is to represent the text in a way that captures its essential characteristics relevant for classification.

One classic and widely used feature extraction method is Term Frequency-Inverse Document Frequency (TF-IDF). TF-IDF evaluates how important a word is to a document in a collection or corpus. It assigns a weight to each word based on two factors:

- Term Frequency (TF): This measures how frequently a term appears in a document. The idea is that words that appear more often are more important to that specific document.

- Inverse Document Frequency (IDF): This measures how common or rare a term is across all documents in the corpus. The IDF value is high for rare words and low for common words (like "the", "a", "is"). This helps to down-weight common words that appear in many documents and are thus less informative for distinguishing between categories.

The TF-IDF score for a word in a document is the product of its TF and IDF values. Documents can then be represented as vectors of these TF-IDF scores.
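The following sketch computes TF-IDF weights from scratch for a toy corpus. It assumes raw term counts for TF and the unsmoothed variant idf(t) = log(N / df(t)), where N is the number of documents and df(t) is the number of documents containing t. Libraries such as scikit-learn's `TfidfVectorizer` use a smoothed IDF and L2 normalization by default, so their numbers will differ. The function name `tf_idf` and the corpus are illustrative.

```python
import math
from collections import Counter

def tf_idf(docs):
    """Return one {term: weight} dict per document.

    TF is the raw count of a term in the document; IDF is log(N / df),
    one common unsmoothed variant.
    """
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: each term counts at most once per document.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    idf = {term: math.log(n_docs / count) for term, count in df.items()}
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        vectors.append({term: tf[term] * idf[term] for term in tf})
    return vectors

corpus = [
    "the match ended in a draw",
    "the election results are in",
    "the new phone has a fast chip",
]
for vec in tf_idf(corpus):
    # Show the three highest-weighted terms of each document.
    print(sorted(vec.items(), key=lambda kv: -kv[1])[:3])
```

Because "the" appears in every document, its IDF is log(3/3) = 0 and its weight is zero everywhere, while a word found in only one document, such as "election", gets the largest IDF, log(3).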

More recent and often more powerful methods involve word embeddings. Word embeddings represent words as dense vectors of real numbers in a multi-dimensional space. Unlike TF-IDF, which treats words as independent units, word embeddings capture semantic relationships between words. Words with similar meanings will have similar vector representations, meaning they are "close" to each other in the vector space. Popular word embedding techniques include Word2Vec, GloVe, and FastText. These embeddings are typically learned from large text corpora. Pre-trained word embeddings can be used directly, or they can be fine-tuned for specific tasks. Transformer-based models like BERT generate contextual word embeddings, meaning the representation of a word depends on the words surrounding it, capturing even more nuanced meaning.
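To make "closeness" in the vector space concrete, the sketch below uses a tiny hand-made embedding table. The 3-dimensional values are invented for illustration; real Word2Vec, GloVe, or FastText vectors are learned from large corpora and usually have a few hundred dimensions. Similarity is measured with cosine similarity, and a document vector is built by averaging the embeddings of its known words, a simple baseline for feeding static embeddings into a classifier.

```python
import math

# Hypothetical toy embeddings; the numbers are made up for illustration.
EMBEDDINGS = {
    "king":   [0.80, 0.65, 0.10],
    "queen":  [0.78, 0.70, 0.12],
    "banana": [0.05, 0.10, 0.90],
    "apple":  [0.10, 0.15, 0.85],
}

def cosine(u, v):
    """Cosine similarity: 1.0 means same direction, 0.0 means orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def doc_vector(text, embeddings=EMBEDDINGS):
    """Average the embeddings of in-vocabulary words; unknown words are skipped."""
    vecs = [embeddings[w] for w in text.lower().split() if w in embeddings]
    if not vecs:
        dim = len(next(iter(embeddings.values())))
        return [0.0] * dim
    return [sum(col) / len(vecs) for col in zip(*vecs)]

print(cosine(EMBEDDINGS["king"], EMBEDDINGS["queen"]))   # close to 1
print(cosine(EMBEDDINGS["king"], EMBEDDINGS["banana"]))  # much smaller
print(doc_vector("the apple and the banana"))
```

Static embeddings like these assign a word one vector regardless of context; contextual models such as BERT produce a different vector for each occurrence.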



Labeling strategies and dataset requirements

Machine learning-based text classification, particularly supervised learning, heavily relies on labeled data. This means you need a dataset where each text document has already been assigned one or more correct categories (labels). The quality and characteristics of this dataset are crucial for building an effective classifier.

Labeling Strategies:

The process of assigning labels to your text data can be approached in several ways:

- Manual Labeling: This is the most straightforward approach, where human annotators read each document and assign the appropriate labels based on predefined guidelines. While this can lead to high-quality labels if done carefully, it is time-consuming and can be expensive, especially for large datasets.

- Crowdsourcing: Platforms like Amazon Mechanical Turk allow you to distribute the labeling task to a large number of workers. This can speed up the process and be more cost-effective than hiring dedicated annotators. However, quality control is essential, often involving multiple annotators labeling the same document and using agreement scores to ensure consistency.

- Semi-Supervised Learning: This approach uses a small amount of labeled data along with a large amount of unlabeled data. The model learns from the labeled examples and then tries to infer labels for the unlabeled data, which can then be used to further train the model. Techniques like self-training or co-training fall under this category.

- Weak Supervision: This involves using heuristics, rules, or existing knowledge bases to programmatically generate noisy labels for a large dataset. For example, you might use keywords to assign initial labels. While these labels might not be perfectly accurate, they can provide a good starting point for training a model, which can then be fine-tuned.

- Active Learning: In this iterative process, the model selects the most informative unlabeled instances for a human annotator to label. This helps to focus labeling efforts on the examples that will provide the most benefit to the model, potentially reducing the total amount of labeling required.
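Weak supervision is the easiest of these strategies to show in a few lines. The sketch below is a hypothetical setup, not the API of any weak-supervision library: each keyword-based labeling function either votes for a label or abstains, and a simple majority vote turns the noisy votes into a training label. Frameworks such as Snorkel instead learn a model of how accurate each labeling function is.

```python
from collections import Counter

ABSTAIN = None

# Hypothetical keyword-based labeling functions for a spam task.
def lf_prize(text):
    return "spam" if "prize" in text or "winner" in text else ABSTAIN

def lf_unsubscribe(text):
    return "spam" if "unsubscribe" in text else ABSTAIN

def lf_meeting(text):
    return "ham" if "meeting" in text or "agenda" in text else ABSTAIN

LABELING_FUNCTIONS = [lf_prize, lf_unsubscribe, lf_meeting]

def weak_label(text, lfs=LABELING_FUNCTIONS):
    """Majority vote over the labeling functions that did not abstain.

    Returns None when every function abstains or the vote is tied, so
    those examples can be left out of training or sent for manual review.
    """
    votes = [lf(text.lower()) for lf in lfs]
    votes = [v for v in votes if v is not ABSTAIN]
    if not votes:
        return None
    ranked = Counter(votes).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None  # tie between labels
    return ranked[0][0]

emails = [
    "You are a WINNER! Claim your prize now",
    "Agenda for Monday's meeting attached",
    "Prize draw inside; unsubscribe anytime",
    "Lunch tomorrow?",
]
for email in emails:
    print(weak_label(email), "|", email)
```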

Dataset Requirements:

To build a robust text classification model, your dataset should ideally meet several requirements:

- Sufficient Size: The model needs enough examples to learn the underlying patterns for each category. The required size depends on the complexity of the task and the number of categories, but generally, more data is better, especially for complex deep learning models.

- Representativeness: The training data should accurately reflect the characteristics of the data the model will encounter in the real world. If the training data is too different from the deployment data, the model's performance will likely suffer.

- Balanced Classes (or strategies to handle imbalance): Ideally, you should have a roughly equal number of examples for each category. If some categories have significantly fewer examples than others (class imbalance), the model may become biased towards the majority classes. Techniques exist to handle class imbalance, such as oversampling minority classes, undersampling majority classes, or using specialized loss functions.

- High-Quality Labels: The assigned labels should be accurate and consistent. Inconsistent or incorrect labels will confuse the model and lead to poor performance. Clear annotation guidelines and quality control measures are vital.

- Clear Definition of Categories: The categories themselves should be well-defined and mutually exclusive (unless it's a multi-label classification problem where a document can belong to multiple categories). Ambiguous or overlapping categories make it difficult for both human annotators and the model to make correct assignments.

Preparing a good dataset is often one of the most critical and time-consuming parts of a text classification project, but it's an investment that pays off in model performance.
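One of the imbalance strategies listed above, random oversampling, can be sketched with the standard library alone. The function below is a hypothetical helper, not a library API: it keeps every original example and adds randomly chosen duplicates of smaller classes until each class matches the largest one. It should be applied only to the training split; oversampling before splitting leaks duplicated examples into the evaluation data.

```python
import random
from collections import Counter, defaultdict

def random_oversample(texts, labels, seed=0):
    """Duplicate minority-class examples until all classes are equally frequent."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for text, label in zip(texts, labels):
        by_class[label].append(text)
    target = max(len(items) for items in by_class.values())
    out_texts, out_labels = [], []
    for label, items in by_class.items():
        # Keep all originals, then sample extra copies with replacement.
        extra = [rng.choice(items) for _ in range(target - len(items))]
        for text in items + extra:
            out_texts.append(text)
            out_labels.append(label)
    return out_texts, out_labels

texts = ["great", "love it", "works well", "fine", "broken on arrival"]
labels = ["pos", "pos", "pos", "pos", "neg"]
new_texts, new_labels = random_oversample(texts, labels)
print(Counter(new_labels))
```

Exact duplicates can encourage overfitting to the few minority examples, which is one reason undersampling or cost-sensitive losses are sometimes preferred.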

Evaluation metrics (precision, recall, F1-score)

Once a text classification model is trained, it's crucial to evaluate its performance to understand how well it's doing and to compare it with other models. Several metrics are commonly used for this purpose, each providing a different perspective on the model's accuracy and effectiveness.

For a binary classification task (e.g., spam vs. not spam), each prediction falls into one of four outcomes:

- True Positive (TP): The model correctly predicts the positive class (e.g., correctly identifies a spam email as spam).

- False Positive (FP): The model incorrectly predicts the positive class (e.g., incorrectly identifies a legitimate email as spam – also known as a Type I error).

- True Negative (TN): The model correctly predicts the negative class (e.g., correctly identifies a legitimate email as not spam).

- False Negative (FN): The model incorrectly predicts the negative class (e.g., incorrectly identifies a spam email as legitimate – also known as a Type II error).

Based on these, we can define the key evaluation metrics:

Accuracy: This is the most straightforward metric, representing the proportion of total predictions that were correct.

Accuracy = (TP + TN) / (TP + TN + FP + FN)

While easy to understand, accuracy can be misleading, especially when dealing with imbalanced datasets (where one class is much more frequent than others). For instance, if 95% of emails are not spam, a model that always predicts "not spam" would have 95% accuracy but would be useless for filtering spam.

Precision: This metric measures the proportion of positive identifications that were actually correct. It answers the question: "Of all the instances the model labeled as positive, how many were truly positive?"

Precision = TP / (TP + FP)

High precision is important when the cost of a false positive is high. For example, in spam detection, high precision means that when an email is flagged as spam, it is very likely to actually be spam, reducing the chance that legitimate emails are wrongly sent to the spam folder.

Recall (Sensitivity or True Positive Rate): This metric measures the proportion of actual positives that were identified correctly. It answers the question: "Of all the truly positive instances, how many did the model correctly identify?"

Recall = TP / (TP + FN)

High recall is important when the cost of a false negative is high. In spam detection, high recall means that the model catches most of the actual spam emails, reducing the number of spam messages that reach the inbox.

F1-Score: This metric is the harmonic mean of precision and recall. It provides a single score that balances both precision and recall. It's particularly useful when you need a balance between the two, or when dealing with imbalanced classes.

F1-Score = 2 * (Precision * Recall) / (Precision + Recall)

The F1-score ranges from 0 to 1, with 1 being the best possible score. It punishes extreme values more than a simple average. For example, if precision is high but recall is very low (or vice-versa), the F1-score will also be low.
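The formulas above translate directly into code. This sketch computes accuracy, precision, recall, and F1 from the four confusion-matrix counts; the counts in the usage example are invented for illustration. Returning 0.0 when a denominator is zero is one common convention (scikit-learn, for instance, exposes the choice through a `zero_division` parameter).

```python
def binary_metrics(tp, fp, tn, fn):
    """Return accuracy, precision, recall, and F1 from confusion-matrix counts."""
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Hypothetical spam filter evaluated on 100 emails (5 spam, 95 legitimate).
print(binary_metrics(tp=4, fp=2, tn=93, fn=1))

# A model that always predicts "not spam" on the same 100 emails.
print(binary_metrics(tp=0, fp=0, tn=95, fn=5))
```

The second call reproduces the trap described under accuracy: 95% accuracy, yet precision, recall, and F1 are all zero.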

In multi-class classification, these metrics are often calculated for each class individually (e.g., precision for "sports," recall for "politics") and then averaged (e.g., macro-average or micro-average) to get an overall performance measure. Understanding these metrics is essential for interpreting a model's performance and making informed decisions about its deployment.
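The difference between macro- and micro-averaging is easiest to see on a small example. In this sketch, with toy labels invented for illustration, macro-averaging computes F1 for each class and takes the unweighted mean, so a rare class counts as much as a common one; micro-averaging pools the true positive, false positive, and false negative counts across classes before computing a single F1. For single-label multi-class data, micro-averaged F1 equals accuracy.

```python
def per_class_counts(y_true, y_pred):
    """Count TP, FP, and FN for each class, treating it one-vs-rest."""
    classes = sorted(set(y_true) | set(y_pred))
    counts = {c: {"tp": 0, "fp": 0, "fn": 0} for c in classes}
    for t, p in zip(y_true, y_pred):
        if t == p:
            counts[t]["tp"] += 1
        else:
            counts[p]["fp"] += 1  # predicted p, but the document was not p
            counts[t]["fn"] += 1  # the true class t was missed
    return counts

def f1_from(tp, fp, fn):
    # 2TP / (2TP + FP + FN) is algebraically the harmonic mean of P and R.
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

def macro_micro_f1(y_true, y_pred):
    counts = per_class_counts(y_true, y_pred)
    macro = sum(f1_from(**c) for c in counts.values()) / len(counts)
    tp = sum(c["tp"] for c in counts.values())
    fp = sum(c["fp"] for c in counts.values())
    fn = sum(c["fn"] for c in counts.values())
    return macro, f1_from(tp, fp, fn)

y_true = ["sports"] * 6 + ["politics"] * 3 + ["tech"]
y_pred = ["sports"] * 6 + ["politics"] * 2 + ["sports", "sports"]
print(macro_micro_f1(y_true, y_pred))
```

Here micro-F1 is 0.8, equal to accuracy (8 of 10 correct), while macro-F1 falls to about 0.55 because the single "tech" article was misclassified and that class's F1 is zero.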

Common challenges (class imbalance, context understanding)

While text classification has made significant strides, practitioners often encounter several common challenges that can impact model performance and reliability.

Class Imbalance: This is a frequent issue where the number of examples in different categories is highly skewed. For instance, in fraud detection, fraudulent transactions are typically much rarer than legitimate ones. Similarly, in sentiment analysis of product reviews, positive reviews may far outnumber negative or neutral ones. When models are trained on imbalanced datasets, they tend to become biased towards the majority class, as they can achieve high accuracy by simply predicting the most frequent category. This leads to poor performance on minority classes, which are often the ones of most interest. Addressing class imbalance might involve techniques like resampling the data (oversampling the minority class or undersampling the majority class), using different evaluation metrics (like F1-score or AUC-ROC, which are less sensitive to imbalance than accuracy), or employing specialized algorithms or cost-sensitive learning that assign higher penalties for misclassifying minority class instances.
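Cost-sensitive learning often starts from per-class weights that grow as a class gets rarer. The sketch below uses the inverse-frequency heuristic n_samples / (n_classes * count_c), the same formula scikit-learn applies for `class_weight="balanced"`. How the weights enter training (for example, by scaling each example's loss) depends on the model; here they simply weight a 0/1 error for illustration, and the fraud-detection counts are hypothetical.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * count_per_class)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {c: n_samples / (n_classes * k) for c, k in counts.items()}

def weighted_error(y_true, y_pred, weights):
    """Average cost where each mistake costs the weight of its true class."""
    cost = sum(weights[t] for t, p in zip(y_true, y_pred) if t != p)
    return cost / len(y_true)

# Hypothetical data: 990 legitimate transactions and 10 fraudulent ones.
labels = ["legit"] * 990 + ["fraud"] * 10
weights = balanced_class_weights(labels)
print(weights)

always_legit = ["legit"] * len(labels)
print(weighted_error(labels, always_legit, weights))
```

With these weights, the trivial "always legit" model, which is 99% accurate, has a weighted error of 0.5, the same as always predicting fraud, so a learner minimizing weighted loss no longer finds it attractive.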

Context Understanding and Ambiguity: Human language is inherently complex, nuanced, and often ambiguous. Words can have multiple meanings depending on the context, and the overall meaning of a sentence can be subtle. Sarcasm, irony, idioms, and cultural references pose significant challenges for text classification models. For example, the phrase "This is great!" could be genuinely positive or sarcastic depending on the surrounding text and tone, which is hard for a machine to discern. While advanced models like Transformers have improved contextual understanding significantly, accurately interpreting these nuances remains an ongoing research area. Lack of sufficient context or the presence of noisy, irrelevant information in the text can also lead to misclassifications.

Scalability and Efficiency: As the volume of text data grows exponentially, the ability to train and deploy classification models efficiently becomes crucial. Deep learning models, while powerful, can be computationally expensive and require significant resources for training, especially with very large datasets or complex architectures. Deploying these models for real-time applications, such as instant content moderation or live sentiment analysis, demands low latency and high throughput, which can be challenging to achieve. Researchers are actively working on developing more lightweight and efficient model architectures and training techniques.

Data Scarcity for Specific Domains or Languages: High-quality labeled data is the fuel for supervised text classification models. However, for many specialized domains (e.g., niche legal areas, specific scientific research) or low-resource languages, obtaining sufficient labeled data can be difficult and expensive. This data scarcity limits the applicability and performance of text classification in these contexts. Techniques like transfer learning (leveraging knowledge from models trained on large, general-domain datasets), few-shot learning (training models with very few examples), and unsupervised or semi-supervised methods are being explored to mitigate this challenge.

Model Interpretability and Explainability: Many advanced text classification models, particularly deep learning models, operate as "black boxes." It can be difficult to understand why a model made a particular classification decision. This lack of interpretability can be a significant barrier in applications where accountability and transparency are critical, such as in medical diagnosis or financial decision-making. Efforts are underway to develop techniques for explaining model predictions, helping to build trust and identify potential biases or errors in the model's reasoning.

Addressing these challenges often requires a combination of sophisticated modeling techniques, careful data preprocessing and augmentation, and a deep understanding of the specific domain and task.

Text Classification in Modern Applications

Text classification has moved beyond theoretical research and is now a driving force in many modern applications. This section explores how it's being used in real-world scenarios to solve complex problems and create value.

Real-time content moderation systems

Real-time content moderation has become an essential application of text classification, particularly for online platforms such as social media networks, forums, messaging apps, and e-commerce sites that rely on user-generated content. The sheer volume and velocity of content posted online make manual moderation an impossible task. Automated systems powered by text classification are crucial for maintaining healthy and safe online environments.

These systems work by automatically analyzing text content (like posts, comments, or messages) as it's submitted and classifying it into categories such as "appropriate," "inappropriate," "spam," "hate speech," "harassment," or "misinformation." If content is flagged as violating a platform's policies, it can be automatically removed, hidden, or escalated to human moderators for review. The "real-time" aspect is critical; platforms need to identify and act on problematic content almost instantaneously to prevent its spread and minimize harm to users.

Building effective real-time content moderation systems involves several challenges. Models must be highly accurate to avoid both over-moderation (incorrectly removing benign content, which can lead to user frustration and accusations of censorship) and under-moderation (failing to remove harmful content). They also need to understand nuances in language, including slang, cultural references, and evolving methods of bypassing filters (e.g., using special characters or coded language). Furthermore, these systems must operate at scale, processing millions or even billions of pieces of text daily with very low latency. Deep learning models, especially those based on Transformer architectures, are increasingly used for their ability to capture complex linguistic patterns, though simpler, faster models might be used for initial screening. Hybrid approaches, combining AI with human oversight, are often the most effective.
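The screening-plus-escalation pattern described above can be sketched as a two-stage cascade. Everything here is hypothetical: `keyword_screen` stands in for a cheap first-pass model, `pretend_heavy_model` stands in for a heavier model such as a fine-tuned Transformer, and the thresholds are illustrative values a real platform would tune on labeled data.

```python
# Hypothetical term list for the cheap first-stage screen.
SCREEN_TERMS = {"scam", "hate"}

def keyword_screen(text):
    """Stand-in for a fast first-stage model: 1.0 if any screened term appears."""
    words = {w.strip(".,!?:;") for w in text.lower().split()}
    return 1.0 if words & SCREEN_TERMS else 0.0

def pretend_heavy_model(text):
    """Stand-in for an expensive second-stage model's violation score."""
    return 0.95 if "click here" in text.lower() else 0.6

def moderate(text, fast_score, slow_score,
             screen_at=0.2, review_at=0.5, remove_at=0.9):
    """Route a post to 'allow', 'human_review', or 'remove'.

    Stage 1: the cheap model screens everything; clearly benign posts stop here.
    Stage 2: only suspicious posts pay for the expensive model.
    Mid-range scores go to human moderators instead of being removed.
    """
    if fast_score(text) < screen_at:
        return "allow"
    score = slow_score(text)
    if score >= remove_at:
        return "remove"
    if score >= review_at:
        return "human_review"
    return "allow"

for post in ["Nice photo!",
             "This scam is wild, be careful",
             "Scam alert: click here to claim"]:
    print(moderate(post, keyword_screen, pretend_heavy_model), "|", post)
```

The second post, a user warning others about a scam, trips the keyword screen but is sent to human review rather than removed, which is the kind of nuance that makes purely automatic removal risky.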

The development and deployment of these systems also raise important ethical considerations, including potential biases in the models, freedom of speech concerns, and the psychological impact on human moderators who review the most disturbing content.

Customer experience enhancement through sentiment analysis

Sentiment analysis, a key application of text classification, plays a pivotal role in enhancing customer experience (CX). By automatically analyzing and understanding the emotions and opinions expressed in customer feedback, businesses can gain invaluable insights into how customers perceive their products, services, and overall brand.

Companies collect customer feedback from a multitude of sources: online reviews, social media mentions, survey responses, call center transcripts, emails, and chat logs. Manually sifting through this vast amount of unstructured text data to identify sentiment is impractical. Sentiment analysis tools use text classification to categorize these textual inputs as positive, negative, or neutral. Some advanced systems can even detect more granular emotions like anger, joy, frustration, or satisfaction, or perform aspect-based sentiment analysis, which identifies the sentiment towards specific features or aspects of a product or service (e.g., "The battery life is amazing [positive], but the screen is too dim [negative]").

The insights derived from sentiment analysis enable businesses to improve CX in several ways:

- Identifying Pain Points: Negative sentiment often highlights areas where customers are dissatisfied. By pinpointing these issues – whether it's a product defect, a confusing website, or poor customer service – companies can take targeted action to address them.

- Product Development: Understanding what customers love or dislike about existing products can guide future development and innovation. Positive sentiment can reinforce successful features, while negative sentiment can indicate areas needing redesign or improvement.

- Proactive Customer Service: By monitoring social media and other channels in real-time, companies can identify customers expressing frustration and intervene proactively to resolve their issues, potentially turning a negative experience into a positive one.

- Personalization: Understanding customer sentiment can help tailor marketing messages and product recommendations. For example, a customer who has expressed positive sentiment about a particular product category might be receptive to related offers.

- Brand Reputation Management: Tracking overall sentiment trends allows companies to monitor their brand health and quickly respond to emerging reputational threats.

- Measuring CX Initiatives: Sentiment analysis provides a quantifiable way to measure the impact of CX improvement efforts over time.

Ultimately, by listening to and understanding the voice of the customer through sentiment analysis, businesses can make more informed decisions, foster stronger customer relationships, and build greater loyalty.



Medical text processing for diagnosis support

Text classification is making significant inroads in the medical field, particularly in processing the vast amounts of textual data generated in healthcare for tasks like diagnostic support. Medical information often exists in unstructured formats, such as clinical notes, patient histories, medical imaging reports, discharge summaries, and published research articles. Analyzing this text manually is incredibly time-consuming and prone to human error. Text classification offers a way to automate and enhance this process.

One key application is the categorization of medical documents. For example, text classification can automatically sort patient records or research papers by disease, treatment type, or medical specialty. This helps clinicians and researchers quickly find relevant information. More directly related to diagnosis, text classification can be used to analyze clinical notes to identify mentions of specific symptoms, conditions, or risk factors. By extracting and classifying these key pieces of information, systems can help clinicians form a more complete picture of a patient's health status.

For instance, a text classification model could be trained to identify patterns of symptoms described in patient narratives that are indicative of a particular disease. While not replacing a physician's judgment, such a tool could act as a "second opinion" or highlight potential diagnoses that might have been overlooked, especially in complex cases or when dealing with rare diseases. Similarly, text classification can analyze radiology reports to automatically flag critical findings or categorize the urgency of follow-up, helping to prioritize patient care.

Furthermore, by classifying information from vast medical literature, these systems can help keep clinicians updated on the latest research relevant to their patients' conditions, supporting evidence-based medicine. Challenges in this area include the specialized and often complex medical terminology (including abbreviations and synonyms), the need for very high accuracy (as errors can have serious consequences), patient privacy concerns (requiring robust data security and anonymization techniques), and the need for models to be interpretable so clinicians can understand and trust their outputs. Despite these challenges, the potential for text classification to improve diagnostic accuracy, speed up information retrieval, and ultimately enhance patient care is substantial.

Financial document categorization

The financial industry generates and processes an enormous volume of documents daily, including regulatory filings, company reports, news articles, analyst reports, loan applications, insurance claims, and internal communications. Manually reading, understanding, and categorizing this deluge of information is a monumental task. Text classification offers powerful solutions to automate and streamline these processes, leading to increased efficiency, better risk management, and more informed decision-making.

One primary application is the automatic categorization of financial news and reports. For example, investment firms and analysts can use text classification to sort news articles by company, industry, relevant financial events (e.g., mergers and acquisitions, earnings announcements), or sentiment (e.g., positive or negative news about a stock). This allows them to quickly identify relevant information that could impact market movements or investment strategies.

In regulatory compliance, text classification can help financial institutions categorize documents to ensure they meet reporting requirements or to identify potentially non-compliant activities. For instance, it can scan internal communications for keywords or patterns indicative of insider trading or market manipulation. Similarly, in fraud detection, text classification can analyze transaction descriptions, customer communications, or insurance claims to flag suspicious activities that may warrant further investigation.

Lending institutions use text classification to process loan applications, automatically extracting key information and categorizing applications based on risk profiles or loan types. This speeds up the underwriting process and helps in making more consistent credit decisions. Furthermore, text classification can be applied to customer service in finance, such as categorizing customer inquiries received via email or chat to route them to the appropriate department or to identify common issues and sentiment trends.

The challenges in financial text classification include the specialized jargon, the need for high accuracy due to the financial implications of errors, and the dynamic nature of financial markets and regulations, which requires models to be regularly updated. Despite these, the benefits of efficiency, improved risk management, and enhanced analytical capabilities make text classification an increasingly vital tool in the financial sector.

Core Algorithms and Techniques

Understanding the engines that power text classification is key to appreciating its capabilities. This section explores the fundamental algorithms and techniques, from traditional machine learning approaches to cutting-edge deep learning architectures.

Traditional ML approaches (Naive Bayes, SVM)

Before the widespread adoption of deep learning, several traditional machine learning (ML) algorithms proved effective for text classification tasks and continue to be relevant, especially for smaller datasets or when computational resources are limited.

Naive Bayes (NB): This is a probabilistic classifier based on Bayes' Theorem with a "naive" assumption of conditional independence between features (words), given the class. Despite its simplicity and this strong assumption (which rarely holds true in real-world text), Naive Bayes often performs surprisingly well for text classification, particularly for tasks like spam filtering. It scores each class by combining the class's prior probability with the probability of each word in the document given that class, both estimated from frequencies in the training data, and predicts the highest-scoring class. It's computationally efficient and requires relatively little training data. Variations like Multinomial Naive Bayes and Bernoulli Naive Bayes are commonly used for text.
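To make the idea concrete, here is a minimal from-scratch sketch of Multinomial Naive Bayes in Python. It assumes documents are already tokenized into lists of words, uses add-one (Laplace) smoothing so that a word never seen with a class does not zero out that class's score, and works with log probabilities to avoid numerical underflow. The tiny spam/ham dataset and the function names are illustrative assumptions; in practice a library implementation such as scikit-learn's `MultinomialNB` would normally be used.

```python
import math
from collections import Counter, defaultdict


def train_multinomial_nb(docs, labels, alpha=1.0):
    """Estimate log priors and smoothed log word likelihoods from tokenized documents."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)  # class -> word frequencies in that class
    vocab = set()
    for tokens, label in zip(docs, labels):
        word_counts[label].update(tokens)
        vocab.update(tokens)

    log_prior = {c: math.log(n / len(labels)) for c, n in class_counts.items()}
    log_likelihood = {}
    for c in class_counts:
        # Add-alpha (Laplace) smoothing: every vocabulary word gets a pseudo-count.
        denom = sum(word_counts[c].values()) + alpha * len(vocab)
        log_likelihood[c] = {w: math.log((word_counts[c][w] + alpha) / denom) for w in vocab}
    return log_prior, log_likelihood, vocab


def predict_nb(tokens, log_prior, log_likelihood, vocab):
    """Return the class with the highest log posterior; out-of-vocabulary words are skipped."""
    scores = {
        c: log_prior[c] + sum(log_likelihood[c][w] for w in tokens if w in vocab)
        for c in log_prior
    }
    return max(scores, key=scores.get)


train_docs = [
    "win money now".split(),
    "free prize claim now".split(),
    "meeting agenda attached".split(),
    "lunch meeting tomorrow".split(),
]
train_labels = ["spam", "spam", "ham", "ham"]

log_prior, log_likelihood, vocab = train_multinomial_nb(train_docs, train_labels)
print(predict_nb("claim your free money".split(), log_prior, log_likelihood, vocab))  # → spam
print(predict_nb("agenda for tomorrow".split(), log_prior, log_likelihood, vocab))    # → ham
```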

Support Vector Machines (SVM): SVM is a powerful and versatile supervised learning algorithm that can be used for both classification and regression. For text classification, SVM aims to find an optimal hyperplane in a high-dimensional space that best separates documents belonging to different categories. The "support vectors" are the data points closest to this hyperplane, and the goal is to maximize the margin (the distance) between the hyperplane and these support vectors. SVMs are particularly effective in high-dimensional spaces, which is common in text classification where each word can be a dimension (e.g., using TF-IDF features). They can also handle non-linear data using a technique called the kernel trick. SVMs often achieve high accuracy but can be more computationally intensive to train than Naive Bayes, especially with very large datasets.
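The margin-maximization idea can be sketched as a linear SVM trained by stochastic subgradient descent on the L2-regularized hinge loss, in the style of the Pegasos algorithm. This is an illustrative sketch under several assumptions: raw bag-of-words counts instead of TF-IDF, a hypothetical constant `__bias__` feature standing in for an intercept (which is therefore also regularized), a fixed example order rather than random sampling, and no kernel trick. The training sentences are invented; libraries such as scikit-learn (`LinearSVC`, `SVC`) provide tuned implementations.

```python
from collections import Counter


def featurize(text):
    """Bag-of-words counts plus a constant bias feature (hypothetical name "__bias__")."""
    features = Counter(text.lower().split())
    features["__bias__"] = 1.0
    return features


def dot(w, x):
    """Dot product of a sparse weight dict and a sparse feature dict."""
    return sum(w.get(f, 0.0) * v for f, v in x.items())


def train_linear_svm(examples, lam=0.01, epochs=20):
    """Pegasos-style subgradient descent on the L2-regularized hinge loss.

    examples: list of (feature_dict, label) pairs with label in {-1, +1}.
    Visits examples in a fixed order instead of sampling them at random,
    so that runs are reproducible.
    """
    w = {}
    t = 0
    for _ in range(epochs):
        for x, y in examples:
            t += 1
            eta = 1.0 / (lam * t)  # decreasing step size
            margin = y * dot(w, x)
            # Gradient step on the (lam / 2) * ||w||^2 regularizer shrinks every weight.
            for f in w:
                w[f] *= 1.0 - eta * lam
            # The hinge loss max(0, 1 - margin) only contributes when the margin is below 1.
            if margin < 1.0:
                for f, v in x.items():
                    w[f] = w.get(f, 0.0) + eta * y * v
    return w


def predict_svm(w, text):
    """Which side of the hyperplane w . x = 0 the document falls on."""
    return 1 if dot(w, featurize(text)) >= 0 else -1


train = [
    (featurize("great movie loved it"), 1),
    (featurize("loved the acting"), 1),
    (featurize("terrible movie hated it"), -1),
    (featurize("hated the plot"), -1),
]
w = train_linear_svm(train)
print(predict_svm(w, "what a great film"))  # → 1
print(predict_svm(w, "a terrible film"))    # → -1
```

With only four training sentences, the learned weights concentrate on the words that separate the classes (`great` and `loved` versus `terrible` and `hated`), while words shared by both classes, such as `movie`, cancel out.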

Other traditional ML algorithms used for text classification include:

- Logistic Regression: A statistical model that uses a logistic function to model the probability of a binary outcome. It can be extended to multi-class classification (e.g., using a one-vs-rest approach). It's relatively simple, interpretable, and often provides good performance.

- K-Nearest Neighbors (KNN): A non-parametric, instance-based learning algorithm. It classifies a new document based on the majority class of its 'k' nearest neighbors in the feature space (i.e., the k most similar documents in the training set). The choice of 'k' and the distance metric are important. KNN can be computationally expensive during prediction for large datasets as it needs to compare the new document to all training documents.

- Decision Trees and Random Forests: Decision trees classify documents by learning a series of if-then-else rules based on features. Random Forests are an ensemble method that builds multiple decision trees and combines their predictions (e.g., by voting) to improve accuracy and reduce overfitting.
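Of the algorithms in this list, KNN is the simplest to sketch. The version below represents each document as raw word counts, measures similarity with cosine similarity (a common choice for text, though not the only one), and takes a majority vote among the `k` most similar training documents. The whitespace tokenizer, the toy sports/finance examples, and `k=3` are illustrative assumptions. Note that every prediction scans the whole training set, which is the prediction-time cost mentioned above.

```python
import math
from collections import Counter


def bag_of_words(text):
    """Raw word counts with naive whitespace tokenization."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def knn_classify(text, training, k=3):
    """Majority vote among the k training documents most similar to `text`."""
    query = bag_of_words(text)
    # Every prediction compares the query with every training document.
    scored = sorted(
        ((cosine(query, bag_of_words(doc)), label) for doc, label in training),
        reverse=True,
    )
    votes = Counter(label for _, label in scored[:k])
    return votes.most_common(1)[0][0]


training_docs = [
    ("the team won the match", "sports"),
    ("a great goal in the final match", "sports"),
    ("the striker scored twice", "sports"),
    ("the central bank raised interest rates", "finance"),
    ("stocks fell as rates climbed", "finance"),
    ("the bank reported strong earnings", "finance"),
]
print(knn_classify("interest rates and the bank", training_docs))               # → finance
print(knn_classify("who scored the winning goal in the match", training_docs))  # → sports
```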

These traditional methods typically require careful feature engineering (e.g., creating TF-IDF vectors, selecting relevant words) as a preprocessing step. While deep learning models have often surpassed them in performance on many benchmarks, these classical algorithms remain valuable tools in the text classification toolkit.
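As an example of this kind of feature engineering, TF-IDF weights each term by how often it appears in a document (term frequency) and down-weights terms that appear in many documents (inverse document frequency). The sketch below uses the smoothed IDF formula that scikit-learn's `TfidfVectorizer` applies by default, $\text{idf}(t) = \ln\frac{1+N}{1+\text{df}(t)} + 1$, followed by L2 normalization. The whitespace tokenizer is a simplifying assumption, so the exact numbers will not match the library's output.

```python
import math
from collections import Counter


def tfidf(corpus):
    """Return one {term: weight} dict per document, L2-normalized.

    Uses raw term counts and the smoothed IDF  ln((1 + N) / (1 + df)) + 1.
    """
    tokenized = [doc.lower().split() for doc in corpus]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term appears at least once.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    idf = {term: math.log((1 + n_docs) / (1 + d)) + 1 for term, d in df.items()}

    vectors = []
    for tokens in tokenized:
        weights = {term: count * idf[term] for term, count in Counter(tokens).items()}
        norm = math.sqrt(sum(w * w for w in weights.values()))
        vectors.append({term: w / norm for term, w in weights.items()})
    return vectors


vectors = tfidf(["the cat sat", "the dog sat", "the cat ran"])
# "the" occurs in every document, so it gets the lowest weight in the first vector.
print(sorted(vectors[0].items(), key=lambda kv: kv[1]))
```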

Deep learning architectures (RNNs, Transformers)

Deep learning has brought about a paradigm shift in text classification, with architectures capable of automatically learning intricate patterns and representations from raw text, often outperforming traditional methods.

Recurrent Neural Networks (RNNs): RNNs are a class of neural networks specifically designed to process sequential data, making them naturally suited for text, which is a sequence of words. Unlike feedforward neural networks, RNNs have recurrent connections (loops) that allow information to persist. As an RNN processes a sequence, it maintains a "hidden state" that summarizes the elements seen so far. In principle, this lets RNNs capture context and dependencies between words even when they are far apart; in practice, simple RNNs struggle with long-range dependencies because gradients tend to vanish or explode as they are propagated back through many time steps.
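The recurrence can be written as $h_t = \tanh(W_x x_t + W_h h_{t-1} + b)$. The pure-Python sketch below runs this update over a sequence of word vectors and returns the final hidden state, which a classification layer would then consume. The two-dimensional embeddings and the weight values are arbitrary, untrained placeholders; the point is only that the same words in a different order produce a different final state.

```python
import math


def rnn_final_state(inputs, W_x, W_h, b):
    """Run a simple (Elman) RNN over a sequence and return the last hidden state.

    Implements h_t = tanh(W_x x_t + W_h h_{t-1} + b), starting from h_0 = 0.
    """
    hidden_size = len(b)
    h = [0.0] * hidden_size
    for x in inputs:
        # The new state depends on the current input AND the previous state.
        h = [
            math.tanh(
                sum(W_x[i][j] * x[j] for j in range(len(x)))
                + sum(W_h[i][j] * h[j] for j in range(hidden_size))
                + b[i]
            )
            for i in range(hidden_size)
        ]
    return h


# Arbitrary, untrained placeholder values.
emb = {"not": [1.0, 0.0], "good": [0.0, 1.0]}
W_x = [[0.5, -0.3], [0.2, 0.8]]
W_h = [[0.1, 0.4], [-0.6, 0.3]]
b = [0.0, 0.1]

print(rnn_final_state([emb["not"], emb["good"]], W_x, W_h, b))
print(rnn_final_state([emb["good"], emb["not"]], W_x, W_h, b))
```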

A common type of RNN used for NLP is the Long Short-Term Memory (LSTM) network. LSTMs are a special kind of RNN, capable of learning long-range dependencies more effectively than simple RNNs due to their gating mechanism (input, forget, and output gates), which controls the flow of information and helps mitigate the vanishing gradient problem. Another variant is the Gated Recurrent Unit (GRU), which is similar to LSTM but has a simpler architecture. RNNs, LSTMs, and GRUs are often used in conjunction with word embeddings as input. They can process text sequentially and produce a final representation that is then fed into a classification layer.
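For reference, one common formulation of a single LSTM time step is shown below, where $\sigma$ is the logistic sigmoid, $\odot$ denotes element-wise multiplication, $x_t$ is the current input embedding, and $h_{t-1}$ and $c_{t-1}$ are the previous hidden and cell states (the weight names $W$, $U$, and $b$ are notation chosen here):

$$
\begin{aligned}
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) && \text{(input gate)} \\
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) && \text{(forget gate)} \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) && \text{(output gate)} \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) && \text{(candidate cell state)} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

Because the cell state $c_t$ is updated additively, with the forget gate $f_t$ deciding how much of $c_{t-1}$ to keep, gradients can flow across many time steps without being repeatedly squashed, which is how the gating helps with the vanishing gradient problem. A GRU merges this machinery into two gates (update and reset) and drops the separate cell state.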

Convolutional Neural Networks (CNNs): While initially famous for image processing, CNNs have also been successfully applied to text classification. In the context of text, 1D convolutions are applied across sequences of word embeddings. CNNs can learn to identify local patterns or n-grams (contiguous sequences of n words) that are indicative of certain classes, regardless of their position in the text. Pooling layers are then used to summarize these features. CNNs are often faster to train than RNNs and can be very effective, especially for tasks where local word patterns are important.
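A single convolutional filter with max-over-time pooling can be sketched in pure Python. The filter spans two consecutive word vectors (a bigram), a ReLU keeps only positive evidence, and max pooling keeps the strongest activation wherever it occurs in the text. The two-dimensional embeddings and the hand-set filter that responds to "not bad" are hypothetical; a real model learns many filters of several widths and feeds the pooled values to a classification layer.

```python
def conv1d_max_pool(embeddings, filt, bias=0.0):
    """One 1D convolution filter over word vectors, followed by max-over-time pooling.

    embeddings: list of word vectors, one per token.
    filt: n rows, each as long as a word vector, so the filter spans an n-gram.
    """
    n = len(filt)
    activations = []
    for start in range(len(embeddings) - n + 1):
        window = embeddings[start:start + n]
        score = bias + sum(
            f * e
            for f_row, e_row in zip(filt, window)
            for f, e in zip(f_row, e_row)
        )
        activations.append(max(0.0, score))  # ReLU
    # Max pooling keeps the strongest match, wherever in the text it occurred.
    return max(activations) if activations else 0.0


# Hypothetical 2-d embeddings and a hand-set bigram filter that responds to "not bad".
EMBEDDINGS = {"not": [1.0, 0.0], "bad": [0.0, 1.0], "the": [0.1, 0.1],
              "film": [0.2, 0.0], "was": [0.0, 0.1]}
not_bad_filter = [[1.0, 0.0], [0.0, 1.0]]


def embed(text):
    return [EMBEDDINGS[word] for word in text.split()]


print(conv1d_max_pool(embed("not bad the film was"), not_bad_filter))  # → 2.0
print(conv1d_max_pool(embed("the film was not bad"), not_bad_filter))  # → 2.0
```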

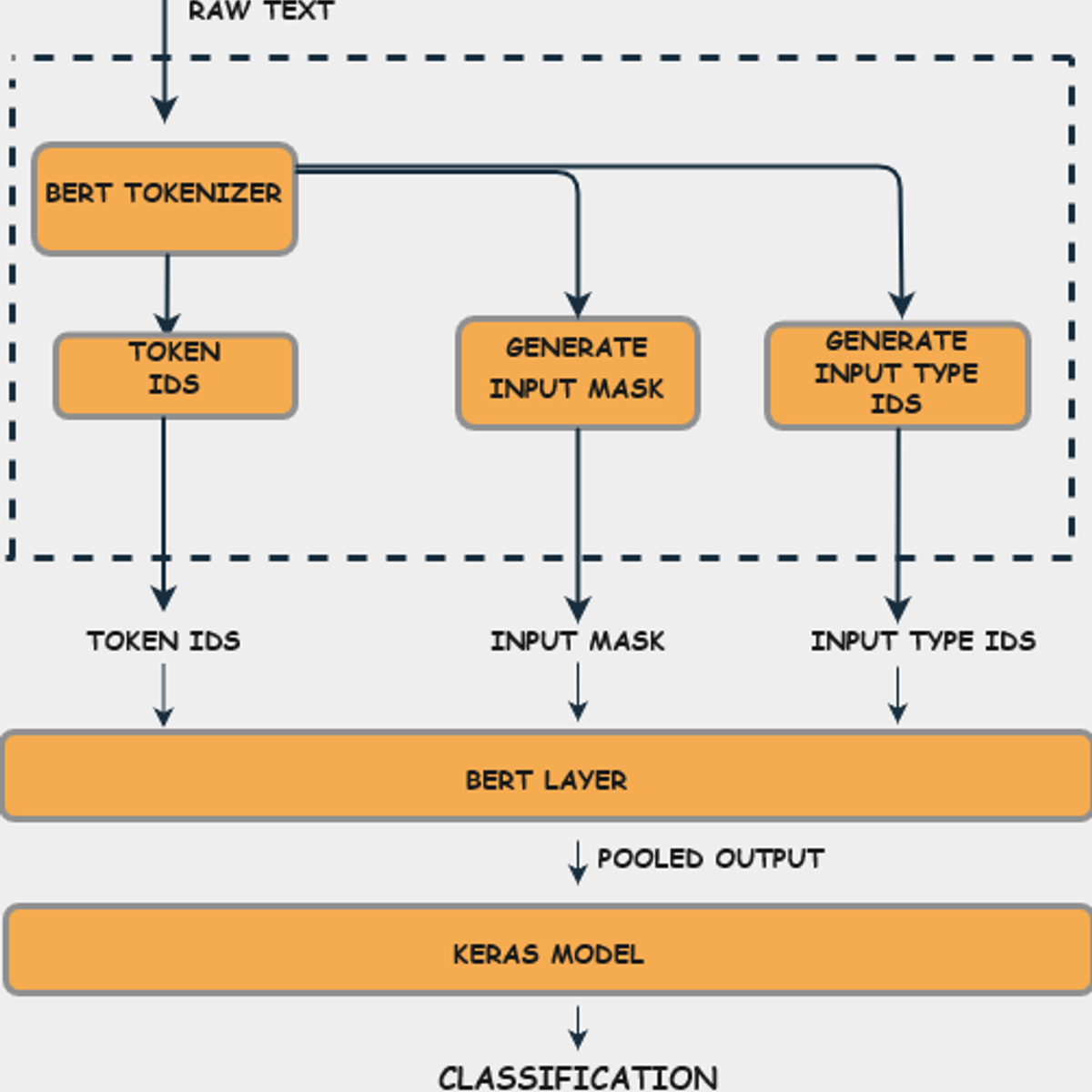

Transformer Models: Transformer-based architectures, introduced in the paper "Attention Is All You Need," have revolutionized NLP, including text classification. Models like BERT (Bidirectional Encoder Representations from Transformers), RoBERTa, XLNet, and GPT (Generative Pre-trained Transformer) have set new state-of-the-art results on numerous benchmarks.

The key innovation in Transformers is the self-attention mechanism. Self-attention allows the model to weigh the importance of different words in a sequence when representing a particular word. Unlike RNNs that process words sequentially, Transformers can process all words in parallel and capture relationships between any two words in the sequence, regardless of their distance. This allows for a richer understanding of context.
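Scaled dot-product self-attention, $\text{softmax}(QK^\top/\sqrt{d_k})\,V$, can be sketched in a few lines of pure Python. This is a single attention head with no masking, positional encoding, or multi-head projection, and the identity matrices used for $W_Q$, $W_K$, and $W_V$ are placeholders for weights that a real Transformer would learn. Each row of the returned weights shows how much one token attends to every token in the sequence, computed for all positions at once rather than step by step.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(A, B):
    """Multiply matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention: softmax(Q K^T / sqrt(d_k)) V.

    X has one row per token. Every token's scores against every other token are
    computed together, with no left-to-right recurrence.
    """
    Q, K, V = matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)
    d_k = len(K[0])
    weights = [
        softmax([sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in K])
        for q_row in Q
    ]
    return matmul(weights, V), weights


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token vectors
identity = [[1.0, 0.0], [0.0, 1.0]]       # placeholder for learned projections
outputs, weights = self_attention(X, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```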

Many Transformer models are pre-trained on massive amounts of text data (BERT, for example, was pre-trained on English Wikipedia and the BooksCorpus collection of books). This pre-training allows them to learn general language representations. These pre-trained models can then be fine-tuned on smaller, task-specific labeled datasets for text classification. This transfer learning approach has been highly successful, enabling strong performance even with limited labeled data for the specific task.

These deep learning architectures, particularly Transformers, represent the current cutting edge in text classification, offering powerful capabilities for understanding complex language patterns.

Hybrid human-AI systems

While fully automated text classification systems powered by AI have become increasingly sophisticated, there are many scenarios where a purely algorithmic approach may not be optimal or sufficient. Hybrid human-AI systems, often referred to as "human-in-the-loop" (HITL) systems, combine the strengths of artificial intelligence with the nuanced understanding and judgment of human experts. This collaboration can lead to more accurate, robust, and trustworthy text classification outcomes, especially for complex, sensitive, or ambiguous tasks.

In a typical hybrid system, the AI model handles the bulk of the classification work, processing large volumes of text quickly and identifying patterns. However, when the model encounters instances where its confidence is low, or when the text is particularly ambiguous or critical, these cases are flagged and routed to human reviewers. These human experts can then make the final classification decision, correct errors made by the AI, or provide labels for new or challenging examples. This human feedback is then often used to retrain and improve the AI model over time, creating a continuous learning loop.
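The confidence-based routing step can be sketched as a simple threshold rule. This assumes the model outputs reasonably calibrated class probabilities; the 0.8 threshold, the message IDs, and the function name are illustrative assumptions, and a real system would tune the threshold against reviewer capacity and the cost of errors.

```python
def route_predictions(predictions, threshold=0.8):
    """Split model outputs into auto-accepted labels and a human review queue.

    predictions: list of (item_id, {label: probability}) pairs.
    Items whose top probability is below `threshold` go to human reviewers.
    """
    auto_labeled, review_queue = [], []
    for item_id, probs in predictions:
        label, confidence = max(probs.items(), key=lambda kv: kv[1])
        if confidence >= threshold:
            auto_labeled.append((item_id, label))
        else:
            review_queue.append((item_id, label, confidence))
    # Reviewers see the least confident items first.
    review_queue.sort(key=lambda entry: entry[2])
    return auto_labeled, review_queue


preds = [
    ("msg-1", {"spam": 0.97, "ham": 0.03}),
    ("msg-2", {"spam": 0.55, "ham": 0.45}),
    ("msg-3", {"spam": 0.30, "ham": 0.70}),
]
auto, queue = route_predictions(preds)
print(auto)   # → [('msg-1', 'spam')]
print(queue)  # → [('msg-2', 'spam', 0.55), ('msg-3', 'ham', 0.7)]
```

Labels that reviewers assign to the queued items can then be added to the training set before the next retraining run, closing the feedback loop.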

Several scenarios benefit from hybrid human-AI approaches:

- High-Stakes Applications: In fields like medical diagnosis from clinical notes or legal document review, the cost of an error can be extremely high. Human oversight is crucial to ensure accuracy and mitigate risks.

- Content Moderation: While AI can filter out a large amount of problematic content, nuanced cases involving satire, cultural context, or evolving forms of harmful speech often require human judgment to avoid both wrongly removing legitimate content and missing genuine violations.

- Edge Cases and Novel Data: AI models are trained on past data and may struggle with entirely new types of text or rare, unforeseen scenarios. Humans can adapt more quickly to these novel situations.

- Building Training Datasets: When initially creating labeled datasets, especially for specialized domains, human expertise is indispensable. Active learning, a form of HITL, allows the AI to select the most informative unlabeled samples for humans to label, making the dataset creation process more efficient.

- Ensuring Fairness and Mitigating Bias: AI models can inherit biases present in their training data. Human reviewers can help identify and correct these biases, ensuring fairer outcomes.
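The active learning strategy mentioned in this list can be sketched as uncertainty sampling: score every unlabeled item with the current model and send the ones it is least sure about to human annotators. Entropy of the predicted class distribution is used here as the uncertainty measure; least-confidence and margin scores are common alternatives. The document IDs and probabilities are invented for illustration.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs.values() if p > 0)


def select_for_labeling(unlabeled_probs, budget):
    """Uncertainty sampling: return the `budget` items with the most uncertain predictions.

    unlabeled_probs maps item_id -> {label: probability} from the current model.
    """
    ranked = sorted(unlabeled_probs, key=lambda item: entropy(unlabeled_probs[item]), reverse=True)
    return ranked[:budget]


pool = {
    "doc-a": {"pos": 0.98, "neg": 0.02},
    "doc-b": {"pos": 0.51, "neg": 0.49},
    "doc-c": {"pos": 0.70, "neg": 0.30},
}
print(select_for_labeling(pool, budget=2))  # → ['doc-b', 'doc-c']
```

After the selected items are labeled, the model is retrained and the remaining pool is re-scored, so each round targets whatever the updated model finds hardest.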

The design of effective hybrid systems involves careful consideration of the workflow, the interface for human reviewers, and the mechanisms for incorporating human feedback into the AI model. The goal is to create a symbiotic relationship where AI augments human capabilities, and human intelligence refines and guides the AI, leading to a system that is more powerful and reliable than either could be alone.

Emergent few-shot learning methods

One of the significant challenges in supervised text classification is the need for large amounts of labeled training data. Creating such datasets can be time-consuming, expensive, and sometimes impractical, especially for niche domains or low-resource languages. Emergent few-shot learning (FSL) methods aim to address this challenge by enabling models to learn to classify text with very few labeled examples per category, sometimes even just one (one-shot learning) or zero (zero-shot learning, often relying on descriptions of the classes).

Few-shot learning has gained considerable traction, particularly with the advent of large pre-trained language models (LLMs) like GPT-3 and its successors. These models are trained on vast and diverse text corpora, allowing them to acquire a broad understanding of language, syntax, semantics, and even some degree of world knowledge. This rich pre-training serves as a powerful foundation for FSL.

Several approaches are used in few-shot text classification:

- Fine-tuning Pre-trained Models: This is a common strategy where a large pre-trained language model is further trained (fine-tuned) on a small set of labeled examples specific to the target task. Because the model has already learned general language features, it can adapt to the new task with relatively few examples.

- Meta-Learning (Learning to Learn): Meta-learning algorithms are designed to learn how to learn new tasks quickly from limited data. The model is trained on a variety of different (but related) tasks during a "meta-training" phase. This allows it to develop a learning strategy that can then be applied to a new task with only a few examples.

- Prompt-Based Learning and In-Context Learning: This approach, particularly prominent with very large LLMs, involves formulating the classification task as a natural language prompt. For example, to classify a movie review, you might provide the model with the review and ask, "Is this review positive or negative?". The model's ability to understand and respond to the prompt is leveraged for classification. In-context learning involves providing a few examples (shots) directly within the prompt itself, guiding the model's prediction for a new, unlabeled instance.

- Siamese Networks and Prototypical Networks: These are metric-based FSL methods. They learn an embedding space where examples from the same class are close together and examples from different classes are far apart. A Siamese network compares a new input's embedding with those of individual labeled examples, while a prototypical network compares it with one "prototype" per class, typically the average embedding of that class's few labeled examples, and assigns the class of the nearest prototype.
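The prototype idea can be sketched as follows. In Prototypical Networks the embeddings come from an encoder trained episodically on many small tasks; here, hand-written two-dimensional vectors stand in for those embeddings, and the labels are hypothetical support-ticket categories. The original method uses squared Euclidean distance, which picks the same nearest prototype as the plain Euclidean distance used below.

```python
import math


def mean_vector(vectors):
    """Average a list of equal-length embedding vectors (the class prototype)."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def classify_by_prototype(query, support):
    """Assign `query` to the class whose prototype is nearest in Euclidean distance.

    support: dict mapping label -> list of embeddings for the few labeled examples.
    """
    prototypes = {label: mean_vector(vecs) for label, vecs in support.items()}
    return min(prototypes, key=lambda label: math.dist(query, prototypes[label]))


# Two labeled examples per class ("2-shot"); the vectors are stand-ins for encoder outputs.
support = {
    "billing": [[0.9, 0.1], [0.8, 0.2]],
    "technical": [[0.1, 0.9], [0.2, 0.7]],
}
print(classify_by_prototype([0.7, 0.3], support))  # → billing
print(classify_by_prototype([0.3, 0.6], support))  # → technical
```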

While few-shot learning methods have shown impressive results, they also come with challenges. Performance can be sensitive to the choice of examples, the way prompts are formulated, and the specific pre-trained model used. Ensuring robustness and generalizability with very limited data remains an active area of research. However, the ability to build effective text classifiers with minimal labeled data is a significant step towards making these technologies more accessible and applicable across a wider range of scenarios.

Formal Education Pathways

For those considering a career in or research related to text classification, a strong formal education can provide the theoretical grounding and analytical skills necessary. This section outlines relevant academic avenues.

Relevant undergraduate coursework (NLP, statistics)

A solid undergraduate education provides the foundational knowledge essential for diving deeper into text classification and related fields like Natural Language Processing (NLP) and Machine Learning. While a specific "Text Classification" major is unlikely at the undergraduate level, a combination of coursework from Computer Science, Statistics, Mathematics, and sometimes Linguistics will build a strong base.

Key areas of study and relevant courses include:

- Computer Science:

  - Introduction to Programming: Essential for implementing algorithms. Python is a particularly valuable language in NLP and machine learning.

  - Data Structures and Algorithms: Fundamental for understanding how to efficiently store, access, and manipulate data, and for designing efficient computational solutions.

  - Introduction to Artificial Intelligence (AI): Provides an overview of AI concepts, including search algorithms, knowledge representation, and often an introduction to machine learning.

  - Machine Learning: A core course covering supervised and unsupervised learning algorithms, model evaluation, and concepts like overfitting and feature engineering. This is directly applicable to text classification.

  - Natural Language Processing (NLP): If available at the undergraduate level, this course would be highly relevant, covering topics like text preprocessing, part-of-speech tagging, parsing, sentiment analysis, and an introduction to text classification techniques.

  - Database Management: Useful for understanding how to store and query the large datasets often used in text classification.

- Statistics and Mathematics:

  - Probability and Statistics: Absolutely crucial for understanding the theoretical underpinnings of many machine learning algorithms (e.g., Naive Bayes, logistic regression) and for evaluating model performance.

  - Linear Algebra: Essential for understanding how data is represented (e.g., vectors, matrices in word embeddings) and how many algorithms operate.

  - Calculus (Differential and Integral): Important for understanding optimization algorithms used in training machine learning models, particularly deep learning models.

  - Discrete Mathematics: Provides a foundation for logical reasoning and understanding algorithms.

- Linguistics (Optional but beneficial):

  - Introduction to Linguistics: Can provide a deeper understanding of language structure, syntax, semantics, and pragmatics, which can be beneficial for designing more sophisticated NLP models.

  - Computational Linguistics: If offered, this directly bridges linguistics and computer science, often covering NLP topics from a more linguistic perspective.

Students should also seek opportunities for practical experience, such as personal projects, internships, or research assistantships involving text data analysis. This hands-on work helps solidify theoretical knowledge and develop practical skills. Building a portfolio of projects, even small ones, can be very beneficial when seeking further education or employment. You can explore courses on Computer Science and Data Science on OpenCourser to find relevant foundational coursework.

Graduate research opportunities

For individuals passionate about pushing the boundaries of text classification and Natural Language Processing (NLP), graduate studies (Master's or Ph.D.) offer significant research opportunities. Graduate programs provide the environment for in-depth exploration of advanced topics, contribution to new knowledge, and specialization in specific areas of text classification.

Research at the graduate level often focuses on addressing the current challenges and exploring future directions in the field. Potential areas of research include:

- Advanced Deep Learning Architectures: Developing novel neural network architectures (e.g., new variants of Transformers, graph neural networks for text) that are more efficient, scalable, or better at capturing complex linguistic phenomena.

- Low-Resource NLP and Cross-Lingual Transfer: Creating methods for effective text classification in languages with limited labeled data or transferring knowledge from high-resource languages to low-resource ones.

- Few-Shot and Zero-Shot Learning: Designing models that can learn to classify text with minimal or no labeled examples for new categories, making text classification more adaptable.

- Interpretability and Explainability: Developing techniques to understand and explain the decisions made by complex "black-box" models like deep neural networks, which is crucial for trust and debugging.

- Bias Detection and Mitigation: Researching methods to identify and reduce societal biases (e.g., gender, racial bias) that can be learned by models from training data, ensuring fairer and more equitable AI systems.

- Robustness and Adversarial Attacks: Making models more resilient to noisy input, slight perturbations in the text (adversarial attacks), or shifts in data distribution.

- Multimodal Text Classification: Combining textual information with other modalities like images, audio, or video for more comprehensive classification tasks.

- Domain Adaptation: Developing techniques that allow models trained on one domain (e.g., news articles) to perform well on a different target domain (e.g., scientific papers or social media posts) where data distributions might differ.

- Efficient and Scalable Models: Designing models that are computationally less expensive to train and deploy, making them suitable for resource-constrained environments or real-time applications.

- Applications in Specific Domains: Focusing research on novel applications of text classification in areas like healthcare (e.g., improved diagnostic support from clinical notes), finance (e.g., more accurate fraud detection), law (e.g., automated legal document analysis), or social sciences (e.g., analyzing public discourse).

Graduate programs typically involve advanced coursework, research seminars, and a significant research project culminating in a thesis or dissertation. Students work closely with faculty advisors who are experts in their chosen research area. Opportunities often exist to collaborate with industry partners or publish findings in top-tier conferences and journals. A strong foundation in computer science, mathematics, and statistics is essential for success in graduate research in this field. Exploring university departments known for their NLP and machine learning research can help identify potential programs and advisors.

Interdisciplinary connections (linguistics, cognitive science)

Text classification, while heavily rooted in computer science and statistics, benefits immensely from interdisciplinary connections, particularly with fields like linguistics and cognitive science. These connections enrich the understanding of human language and thought processes, which can inspire more sophisticated and human-like approaches to automated text processing.

Linguistics: The scientific study of language, linguistics provides deep insights into the structure and meaning of text.

- Syntax: Understanding grammatical structure helps in parsing sentences and identifying relationships between words, which can be crucial for accurate classification.

- Semantics: The study of meaning in language is fundamental. Concepts like word sense disambiguation (determining the correct meaning of a word in context), lexical semantics (meaning of words), and compositional semantics (how meanings of words combine to form sentence meanings) directly inform the development of models that can "understand" text.

- Pragmatics: This branch deals with how context influences the interpretation of meaning, including understanding implicatures, speech acts, and discourse structure. This is vital for tackling challenges like sarcasm or indirect language.

- Corpus Linguistics: Provides methodologies for analyzing large collections of text, which is essential for developing and evaluating text classification models.

Insights from linguistics can guide feature engineering, the design of model architectures, and the creation of more linguistically informed evaluation metrics.

Cognitive Science: This interdisciplinary field studies the mind and its processes, including perception, language, memory, attention, reasoning, and emotion.

- Human Language Processing: Research into how humans understand and produce language can provide valuable parallels and inspiration for computational models. For instance, understanding how humans resolve ambiguity or make inferences can guide the development of AI systems with similar capabilities.

- Categorization and Concept Learning: Cognitive science explores how humans form categories and learn concepts. This knowledge can inform the design of machine learning algorithms that categorize text in ways that align more closely with human intuition.

- Attention Mechanisms: The concept of attention in deep learning (especially in Transformer models) has cognitive parallels. Studying human attentional processes might lead to further refinements in these AI mechanisms.

- Memory: Understanding human memory systems could inspire new ways for models to store and retrieve relevant information from vast amounts of text or past experiences.

- Decision Making: Cognitive models of how humans make classification decisions under uncertainty can inform the development of more robust and transparent AI classifiers.

By integrating knowledge from linguistics and cognitive science, researchers and practitioners in text classification can move beyond purely statistical pattern matching towards creating systems that have a more nuanced, human-like grasp of language. This can lead to models that are not only more accurate but also more interpretable and better aligned with human reasoning. Many universities with strong NLP programs also have strong linguistics and cognitive science departments, offering opportunities for cross-disciplinary collaboration and learning. You can browse Humanities courses, including linguistics, and Cognitive Science courses on OpenCourser.

Skill Development Through Online Learning

For individuals looking to enter the field of text classification or upskill, online learning platforms offer a wealth of accessible and flexible resources. This section discusses how to effectively use these platforms for skill development.

Project-based learning strategies

One of the most effective ways to solidify your understanding of text classification and develop practical skills is through project-based learning. Theoretical knowledge from courses is crucial, but applying that knowledge to real-world or simulated problems helps bridge the gap between theory and practice. Online learning platforms often provide opportunities or resources that can support a project-based approach.

Here are some strategies for incorporating project-based learning into your online studies:

- Start with Guided Projects: Many online courses, especially on platforms like Coursera or edX, include guided projects or capstone projects. [7o08dd, x1nvej] These projects provide a structured environment where you apply the concepts learned in the course to solve a specific problem, often with provided datasets and step-by-step instructions. This is an excellent way to get started and build confidence.

- Replicate Research Papers or Tutorials: Find interesting research papers or online tutorials that implement a text classification model. Try to replicate their results. This will expose you to different techniques, datasets, and coding practices. You'll learn a lot by working through the details and troubleshooting issues.

- Choose Your Own Adventure (Personal Projects): Once you have some foundational knowledge, embark on your own projects based on your interests.

  - Find Interesting Datasets: Look for publicly available text datasets on platforms like Kaggle, UCI Machine Learning Repository, or data.gov. Choose a dataset that aligns with a problem you find engaging (e.g., classifying news articles, analyzing product reviews, detecting hate speech).

  - Define a Clear Goal: What do you want to achieve? Is it to build a spam filter, a sentiment analyzer, or a topic classifier? Having a clear objective will guide your project.

  - Iterate and Experiment: Start with a simple baseline model (e.g., Naive Bayes with TF-IDF) and gradually try more complex techniques (e.g., LSTMs, Transformers). Experiment with different preprocessing steps, feature extraction methods, and model hyperparameters. Document your experiments and results.

  - Focus on the Entire Pipeline: A real-world project involves more than just training a model. Pay attention to data collection (if needed), cleaning, preprocessing, feature engineering, model selection, evaluation, and potentially deployment.

- Participate in Competitions: Platforms like Kaggle host text classification competitions. Participating in these can be a great learning experience, as you'll see how others approach similar problems and get to work with interesting datasets.

- Contribute to Open Source Projects: If you have programming skills, contributing to open-source NLP or machine learning libraries can be a fantastic way to learn from experienced developers and build a public portfolio.

- Build a Portfolio: Document your projects on platforms like GitHub. Create clear README files explaining the project, your approach, and your findings. A strong portfolio of projects is invaluable when applying for jobs or graduate programs.
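When comparing a simple baseline with more complex models, as suggested above, accuracy alone can be misleading on imbalanced datasets. A minimal sketch of per-class precision, recall, and F1, plus their macro average (which weights every class equally), is shown below. The labels are invented, and in practice `sklearn.metrics.classification_report` or `f1_score(..., average="macro")` would typically be used instead.

```python
from collections import Counter


def per_class_scores(y_true, y_pred):
    """Per-class (precision, recall, F1) and the macro-averaged F1."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted `pred`, but that was wrong
            fn[truth] += 1  # the true class `truth` was missed
    report = {}
    for label in labels:
        precision = tp[label] / (tp[label] + fp[label]) if tp[label] + fp[label] else 0.0
        recall = tp[label] / (tp[label] + fn[label]) if tp[label] + fn[label] else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[label] = (precision, recall, f1)
    # Macro average: every class counts equally, however rare it is.
    macro_f1 = sum(scores[2] for scores in report.values()) / len(report)
    return report, macro_f1


y_true = ["spam", "spam", "ham", "ham", "ham"]
y_pred = ["spam", "ham", "ham", "ham", "spam"]
report, macro_f1 = per_class_scores(y_true, y_pred)
print(report)
print(round(macro_f1, 3))  # → 0.583
```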

Online courses can provide the necessary theoretical background and introduce you to the tools, while personal projects allow you to apply that knowledge creatively and develop problem-solving skills. Don't be afraid to start small and gradually tackle more complex projects as your skills grow. The process of struggling with a problem and eventually solving it is where the deepest learning often occurs.

Open-source tool proficiency (Python, TensorFlow)

Developing proficiency in open-source tools is essential for anyone serious about working in text classification. These tools provide the building blocks for implementing algorithms, processing data, and building models without having to reinvent the wheel. The open-source nature also means there's a vast community for support, learning, and collaboration.

Python: Python has become the de facto programming language for machine learning and data science, including text classification. Its readability, extensive libraries, and strong community support make it an excellent choice. Key Python libraries for text classification include:

- NLTK (Natural Language Toolkit): A comprehensive library for various NLP tasks, including tokenization, stemming, lemmatization, part-of-speech tagging, and access to numerous text corpora. It's great for learning foundational NLP concepts.

- scikit-learn: An indispensable library for general machine learning. It provides implementations of many traditional classification algorithms (Naive Bayes, SVM, Logistic Regression, etc.), feature extraction methods (TfidfVectorizer, CountVectorizer), model evaluation tools, and utilities for data preprocessing.

- spaCy: Known for its speed and efficiency, spaCy is excellent for production-level NLP. It offers pre-trained models for various languages and tasks, including named entity recognition, part-of-speech tagging, and dependency parsing, which can be useful in feature engineering for text classification.

- Gensim: A popular library for topic modeling (e.g., LDA) and working with word embeddings (e.g., Word2Vec, FastText).

- Pandas: Essential for data manipulation and analysis, allowing you to easily load, clean, and transform textual data stored in formats like CSV or Excel.

- NumPy: The fundamental package for numerical computation in Python, providing support for multi-dimensional arrays and matrices, which are used extensively in machine learning.

Deep Learning Frameworks: For implementing more advanced deep learning models, proficiency in frameworks like TensorFlow or PyTorch is crucial.

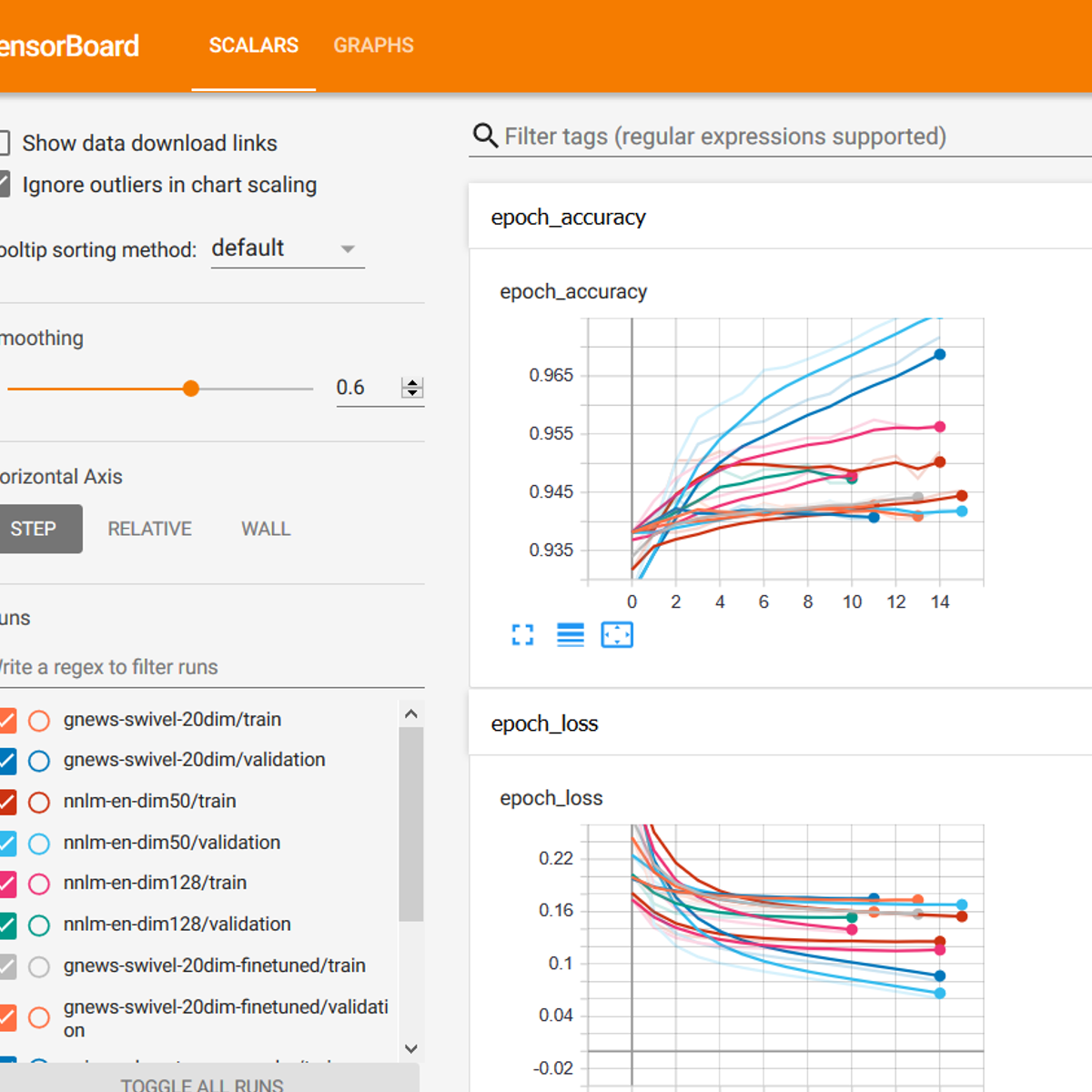

- TensorFlow: Developed by Google, TensorFlow is a powerful and flexible open-source library for numerical computation and large-scale machine learning. [x1nvej, 7o08dd] It provides a comprehensive ecosystem for building and deploying machine learning models, including deep neural networks for text classification (e.g., CNNs, RNNs, Transformers). Keras, a high-level API often used with TensorFlow, simplifies model building.

- PyTorch: Originally developed by Facebook's (now Meta's) AI Research lab (FAIR) and now governed by the PyTorch Foundation, PyTorch is another widely adopted open-source machine learning library. It's known for its Pythonic feel, dynamic computation graphs (which can be very helpful for NLP research), and strong community support. Many state-of-the-art NLP models are implemented in PyTorch.

Hugging Face Transformers: This library has become incredibly popular for working with Transformer models (BERT, GPT, RoBERTa, etc.). It provides easy access to thousands of pre-trained models for various NLP tasks, including text classification, along with tools for fine-tuning them on your own data. It supports both TensorFlow and PyTorch.

Many online courses focus specifically on teaching these tools. For instance, you can find courses on "Python for Data Science," "Deep Learning with TensorFlow," or "NLP with spaCy." Actively using these tools in your projects is the best way to build proficiency. Don't just read about them; install them, work through tutorials, and try to apply them to your own text classification problems. OpenCourser features a wide range of courses on Programming, including Python, and specific Software Tools relevant to data science.

Community-driven knowledge sharing

The field of text classification, like much of data science and machine learning, thrives on community-driven knowledge sharing. Engaging with these communities can significantly accelerate your learning, help you overcome challenges, and keep you updated on the latest developments. Online learning often naturally connects you to these vibrant ecosystems.

Here are some key avenues for community-driven knowledge sharing:

- Online Forums and Q&A Sites:

  - Stack Overflow: An indispensable resource for programmers. If you encounter a coding error or a conceptual roadblock while working on a text classification project, chances are someone has faced a similar issue, and a solution or discussion exists on Stack Overflow. You can also ask your own well-formulated questions.

  - Kaggle Forums: Kaggle is not just for competitions; its forums are a rich source of discussions about datasets, machine learning techniques (including text classification), and code sharing.

  - Reddit Communities: Subreddits like r/MachineLearning, r/LanguageTechnology, and r/datascience have active communities where people share news, ask questions, and discuss research.

  - Tool-Specific Forums: Many open-source tools (e.g., spaCy, Hugging Face Transformers) have their own dedicated forums or discussion boards where users can ask questions and share insights.

- GitHub: Beyond just hosting code, GitHub is a collaborative platform. You can explore open-source text classification projects, see how others have implemented certain algorithms, contribute to projects, and even get feedback on your own code. Following influential researchers or developers in the field on GitHub can also be insightful.

- Blogs and Online Publications: Many researchers, practitioners, and companies maintain blogs where they share tutorials, case studies, and insights related to text classification and NLP. Websites like Medium, Towards Data Science, and KDnuggets host a plethora of articles. Following these can expose you to new ideas and practical tips.

- Social Media: Platforms like X (formerly Twitter) and LinkedIn are used by many in the AI/ML community to share research, news, and job opportunities. Following key individuals and organizations can be a good way to stay in the loop.

- Online Course Communities: Many online courses have their own discussion forums where students can interact, ask questions of instructors or teaching assistants, and collaborate on assignments. This is a great first point of community engagement when you're starting out.

- Meetups and Online Conferences/Webinars: While not strictly "online learning platforms," many tech communities host virtual meetups, webinars, and even full conferences. These events offer opportunities to learn from experts, hear about cutting-edge research, and network with peers.

Engaging with these communities involves both consuming information and contributing back. Don't hesitate to ask questions (after doing your own initial research), share your own solutions or projects (even if they're simple), and help others when you can. This active participation will deepen your understanding and make you a more well-rounded practitioner.

Portfolio development techniques

For anyone aiming to enter or advance in the field of text classification, a strong portfolio is often more persuasive than just a list of completed courses or a resume. A portfolio showcases your practical skills, your ability to solve problems, and your passion for the subject. Online learning provides an excellent foundation for building compelling portfolio projects.

Here are some techniques for effective portfolio development:

- Showcase a Variety of Projects: Aim to include 2-4 well-documented projects that demonstrate different aspects of text classification. For example:

  - A project using traditional ML algorithms (e.g., Naive Bayes or SVM for spam detection).

  - A project involving deep learning (e.g., an LSTM or Transformer for sentiment analysis of longer reviews).

  - A project that tackles a specific challenge, like class imbalance or working with noisy data.

  - A project that involves an end-to-end pipeline, from data collection/cleaning to model deployment (even a simple deployment like a Flask app).

- Focus on Real-World or Realistic Problems: While tutorial-based projects are good for learning, try to pick problems that have some real-world relevance or that genuinely interest you. This makes the project more engaging for you and more compelling for potential employers or collaborators. You can often find interesting public datasets on platforms like Kaggle, data.world, or government open data portals.

- Document Thoroughly on GitHub: GitHub is the standard platform for hosting code and showcasing projects. For each portfolio project, create a dedicated repository with:

  - A clear and concise `README.md` file. This is your project's homepage. It should explain:

    - The problem you are solving.

    - The dataset used (with a link if public).

    - Your methodology (preprocessing steps, feature extraction, models tried).

    - Key results and findings (including evaluation metrics).

    - Challenges encountered and how you addressed them.

    - Instructions on how to run your code.

  - Well-commented code, organized into logical scripts or notebooks (e.g., Jupyter Notebooks are great for exploratory analysis and demonstrating steps).

  - Any necessary data files (or links to them if they are too large to host on GitHub).

  - A `requirements.txt` file listing the Python libraries and versions needed to run your project.

- A clear and concise

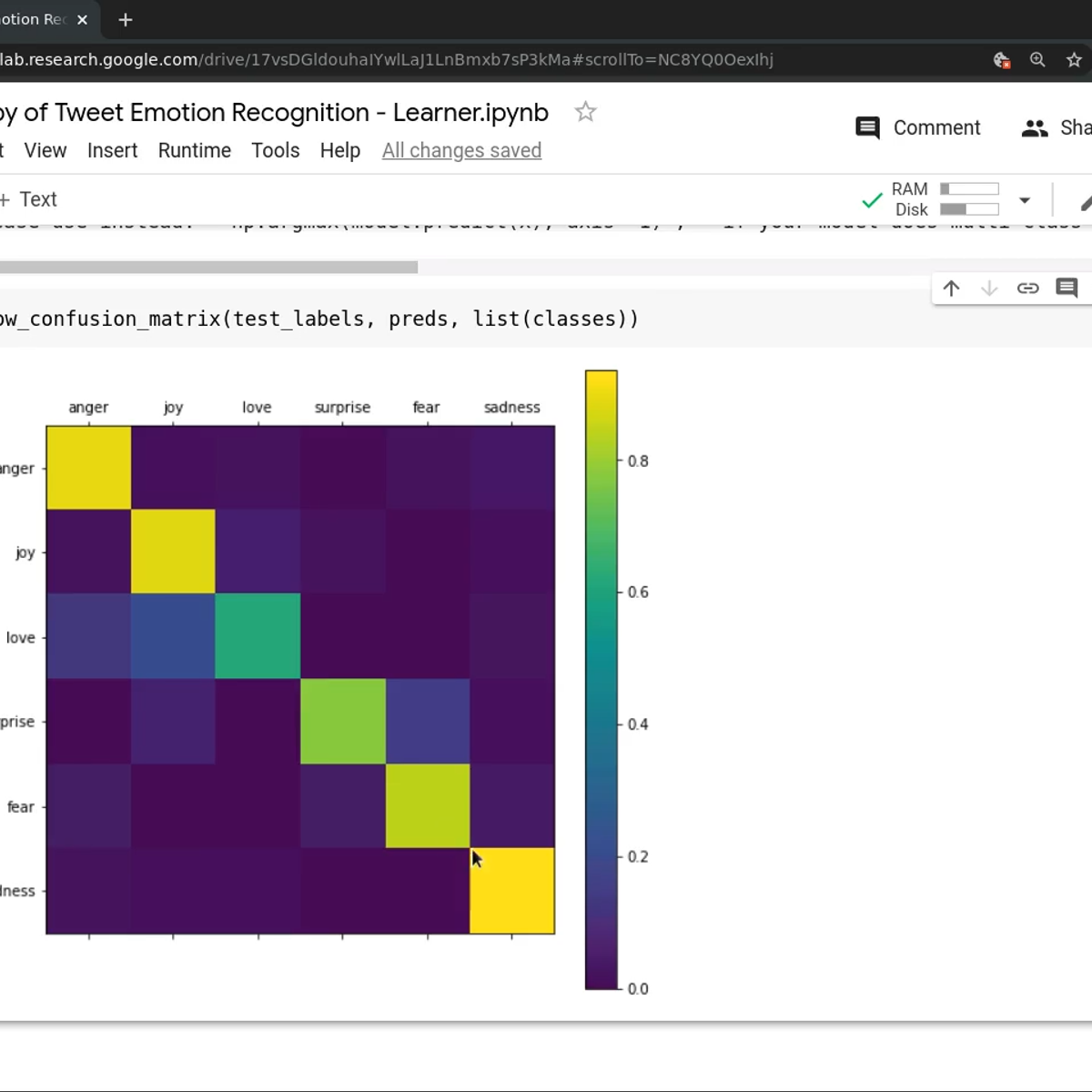

- Visualize Your Results: Use libraries like Matplotlib, Seaborn, or Plotly to create visualizations of your data (e.g., class distributions) and model performance (e.g., confusion matrices, ROC curves, precision-recall curves). Visuals make your findings more accessible and engaging.

- Write Blog Posts or Articles: Consider writing a blog post about one of your projects. Explain your process, the challenges, and what you learned. This demonstrates your communication skills and helps solidify your own understanding. You can host this on platforms like Medium, LinkedIn, or a personal blog. Link to your GitHub repository from the blog post.

- Emphasize Your Contributions and Learning: If you're building on existing work or tutorials, clearly state what your unique contributions were or what specific new skills you learned and applied.

- Keep it Clean and Professional: Ensure your code is readable and your documentation is well-written and free of typos. This reflects your attention to detail.

- Get Feedback: Share your portfolio with peers, mentors, or online communities for feedback. Constructive criticism can help you improve your projects and presentation.
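As a starting point for the first kind of project listed above (a traditional ML algorithm such as Naive Bayes for spam detection), the sketch below trains a multinomial Naive Bayes classifier from scratch and reports the confusion matrix, precision, recall, and F1 that a project README would typically include. It is a minimal, dependency-free illustration under stated assumptions, not a production pipeline: the toy messages and the `tokenize`, `MultinomialNB`, and `evaluate` names are hypothetical, and a real portfolio project would more likely use scikit-learn's implementations on a public dataset with a proper train/test split.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and keep runs of letters, digits, and apostrophes."""
    return re.findall(r"[a-z0-9']+", text.lower())


class MultinomialNB:
    """Multinomial Naive Bayes with add-alpha (Laplace) smoothing."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha

    def fit(self, texts, labels):
        self.classes = sorted(set(labels))
        # log P(class): share of training documents carrying each label
        self.log_prior = {c: math.log(labels.count(c) / len(labels)) for c in self.classes}
        # Word frequencies per class, plus the shared vocabulary
        self.counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.counts[label].update(tokenize(text))
        self.vocab = set().union(*self.counts.values())
        self.totals = {c: sum(self.counts[c].values()) for c in self.classes}
        return self

    def predict(self, text):
        # Words never seen in training carry no evidence, so skip them
        tokens = [t for t in tokenize(text) if t in self.vocab]
        denom = {c: self.totals[c] + self.alpha * len(self.vocab) for c in self.classes}
        scores = {
            c: self.log_prior[c]
            + sum(math.log((self.counts[c][t] + self.alpha) / denom[c]) for t in tokens)
            for c in self.classes
        }
        return max(scores, key=scores.get)


def evaluate(y_true, y_pred, positive):
    """Binary confusion matrix [[TN, FP], [FN, TP]] plus precision, recall, and F1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = len(pairs) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"confusion": [[tn, fp], [fn, tp]], "precision": precision, "recall": recall, "f1": f1}


# Hypothetical toy data, for illustration only
train_texts = [
    "win a free prize now", "claim your free cash reward", "limited offer win money",
    "meeting moved to monday", "lunch with the team tomorrow", "please review the project report",
]
train_labels = ["spam", "spam", "spam", "ham", "ham", "ham"]
test_texts = ["free money prize", "project meeting tomorrow", "claim the team prize"]
test_labels = ["spam", "ham", "spam"]

model = MultinomialNB().fit(train_texts, train_labels)
predictions = [model.predict(t) for t in test_texts]
print(predictions)  # ['spam', 'ham', 'ham']: the third spam message is missed
print(evaluate(test_labels, predictions, positive="spam"))
```

The deliberately missed third message shows why reporting recall alongside precision matters: this toy model never flags a legitimate message (precision 1.0) but catches only half of the spam (recall 0.5).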

Building a strong portfolio takes time and effort, but it's a crucial investment in your career. Online courses can provide you with the skills and knowledge to tackle these projects, and platforms like OpenCourser can help you find those courses. Remember, the goal is to demonstrate not just that you *know* about text classification, but that you can *do* text classification.

Career Progression and Opportunities

A background in text classification opens doors to a variety of roles and career paths in the rapidly expanding field of artificial intelligence and data science. This section outlines potential career trajectories and specializations.

Entry-level roles (data annotator, ML engineer)

For those starting their journey in text classification and related AI fields, several entry-level roles can provide valuable experience and a launchpad for future growth. These roles often require a foundational understanding of machine learning concepts, programming skills (especially Python), and an eagerness to learn.

Data Annotator / Data Labeler: This role is crucial for creating the labeled datasets that supervised machine learning models, including text classifiers, rely on. Data annotators carefully review raw text data (e.g., customer reviews, social media posts, support tickets) and assign predefined labels or categories according to specific guidelines.

- Responsibilities: Reading and understanding text, applying consistent labels, identifying ambiguous cases, providing feedback on annotation guidelines, and sometimes using specialized annotation tools.

- Skills: Attention to detail, good reading comprehension, ability to follow complex guidelines, basic computer literacy. Domain knowledge in the area of the text being annotated can be a plus.

- Career Path: While an entry point, experience as a data annotator provides a deep understanding of data quality and the challenges of creating good training sets. It can lead to roles like Annotation Team Lead, Data Quality Analyst, or transition into more technical roles with further skill development.
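One common way to check whether annotators are applying labels consistently is to have two people label the same sample and compute Cohen's kappa, which corrects their raw agreement for the agreement expected by chance. The text above does not name this metric; it is offered as one illustrative option. The sketch assumes exactly two annotators and categorical labels, and the `cohens_kappa` name and sample labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label
    p_observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement: chance that two annotators with these label frequencies agree
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (p_observed - p_expected) / (1 - p_expected)


annotator_a = ["pos", "neg", "pos", "pos", "neg", "neu"]
annotator_b = ["pos", "neg", "neg", "pos", "neg", "neu"]
print(round(cohens_kappa(annotator_a, annotator_b), 3))  # 0.739
```

Values near 1 indicate strong agreement; values near 0 mean the annotators agree about as often as chance would predict, which usually signals that the annotation guidelines need clarifying.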

Junior Machine Learning Engineer / AI Engineer: This role is more technical and typically involves assisting in the development, training, and deployment of machine learning models.

- Responsibilities: Assisting with data preprocessing (cleaning text, feature extraction), training existing model architectures, running experiments, evaluating model performance, writing code (often in Python), and supporting the deployment of models into applications.

- Skills: Proficiency in Python and relevant libraries (scikit-learn, Pandas, NumPy), foundational knowledge of machine learning algorithms (including text classification algorithms such as Naive Bayes and SVMs) along with basic familiarity with deep learning, understanding of model evaluation metrics, version control (Git), and sometimes familiarity with cloud platforms (AWS, Azure, GCP).

- Career Path: This is a direct pathway to becoming a Machine Learning Engineer. With experience, individuals can take on more complex projects, design new models, lead teams, and specialize in areas like NLP, computer vision, or MLOps (Machine Learning Operations).
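The preprocessing and feature-extraction work listed among a junior engineer's responsibilities can be illustrated with TF-IDF weighting, one of the most common classical feature-extraction schemes. The sketch below is dependency-free and rests on simple assumptions: lowercasing, stripping HTML tags and punctuation, whitespace tokenization, and the unsmoothed variant idf = log(N / df). The `clean` and `tfidf` helpers and the sample reviews are hypothetical. scikit-learn's `TfidfVectorizer` uses a smoothed idf and L2 normalization by default, so its numbers will differ.

```python
import math
import re
from collections import Counter


def clean(text):
    """Lowercase, strip HTML tags and punctuation, then split on whitespace."""
    text = text.lower()
    text = re.sub(r"<[^>]+>", " ", text)      # e.g. "<br>" left over from scraped reviews
    text = re.sub(r"[^a-z0-9\s]", " ", text)  # drop punctuation
    return text.split()


def tfidf(docs):
    """Return one {term: weight} dict per document, with tf = count / doc length."""
    tokenized = [clean(doc) for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term appears at least once
    df = Counter()
    for tokens in tokenized:
        df.update(set(tokens))
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        vectors.append(
            {t: (c / len(tokens)) * math.log(n_docs / df[t]) for t, c in counts.items()}
        )
    return vectors


reviews = ["Great product, works great!", "Terrible product. <br> Broke fast.", "Works as described."]
for vector in tfidf(reviews):
    print({term: round(weight, 3) for term, weight in vector.items()})
```

In the first review, "great" receives the highest weight because it occurs twice there and in no other review, while "product" and "works" are down-weighted for appearing in two of the three reviews.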

Data Analyst (with NLP focus): While a broader role, Data Analysts often work with text data. An entry-level Data Analyst might be responsible for collecting, cleaning, and performing initial analyses on textual data to extract insights, which could involve basic text classification tasks or preparing data for more advanced modeling.

- Responsibilities: Data collection, data cleaning, exploratory data analysis, generating reports, visualizing text data insights, and sometimes applying basic NLP techniques.

- Skills: Python or R, SQL, data visualization tools (Tableau, Power BI), spreadsheet software, statistical analysis, and basic NLP understanding.

- Career Path: Can lead to Senior Data Analyst, Data Scientist, or Business Intelligence Analyst roles.
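A typical first pass in the exploratory analysis described above is to check how the labels are distributed and which terms dominate each class. The sketch below uses only the Python standard library; the support-ticket examples, the tiny stopword list, and the `label_distribution` and `top_terms` helpers are hypothetical. A skewed distribution, where one class holds most of the data, is an early warning that accuracy alone will be a misleading metric.

```python
import re
from collections import Counter, defaultdict

STOPWORDS = {"a", "the", "for", "on", "to", "not", "and"}  # tiny illustrative list


def label_distribution(labels):
    """Share of each label, plus the ratio of the largest class to the smallest."""
    counts = Counter(labels)
    shares = {label: count / len(labels) for label, count in counts.most_common()}
    return shares, max(counts.values()) / min(counts.values())


def top_terms(texts, labels, k=3):
    """Most frequent non-stopword terms for each label."""
    per_label = defaultdict(Counter)
    for text, label in zip(texts, labels):
        tokens = re.findall(r"[a-z]+", text.lower())
        per_label[label].update(t for t in tokens if t not in STOPWORDS)
    return {label: counter.most_common(k) for label, counter in per_label.items()}


tickets = [
    "Refund not received", "Charged twice for order", "App crashes on login",
    "Refund request for duplicate charge", "Cannot reset password",
]
ticket_labels = ["billing", "billing", "bug", "billing", "account"]

shares, imbalance = label_distribution(ticket_labels)
print(shares)     # billing holds 60% of the tickets
print(imbalance)  # 3.0
print(top_terms(tickets, ticket_labels)["billing"][0])  # ('refund', 2)
```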

For individuals targeting these entry-level positions, a combination of formal education (a bachelor's degree in Computer Science, Data Science, Statistics, or a related field is often preferred), online courses to gain specific skills, and a portfolio of hands-on projects demonstrating practical abilities is highly beneficial. Internships are also an excellent way to gain initial experience. It's a competitive field, so demonstrating initiative and a passion for learning is key.

Consider exploring the Career Development section on OpenCourser for resources that can help in preparing for these roles.

Mid-career specialization paths

After gaining foundational experience in text classification and machine learning, professionals often have the opportunity to specialize further, deepening their expertise in specific areas. These mid-career specialization paths allow individuals to tackle more complex challenges, lead projects, and become experts in high-demand niches.

Some common specialization paths include:

NLP Scientist/Researcher: This path is for those deeply interested in the theoretical underpinnings of language understanding and in advancing the state of the art in NLP.

- Focus: Developing new algorithms, model architectures (e.g., novel Transformer variants), and techniques for tasks like text classification, machine translation, question answering, and natural language generation. Often involves publishing research and staying at the forefront of academic advancements.

- Skills: Strong theoretical understanding of machine learning and deep learning, advanced mathematics (linear algebra, calculus, probability), proficiency in Python and deep learning frameworks (TensorFlow, PyTorch), research methodology, and often a Master's or Ph.D.

Machine Learning Operations (MLOps) Engineer: As more ML models move into production, MLOps has become a critical specialization.

- Focus: Building and maintaining robust, scalable, and reliable pipelines for training, deploying, monitoring, and updating machine learning models, including text classifiers. This involves automation, version control for data and models, continuous integration/continuous deployment (CI/CD) for ML, and ensuring model performance and governance in production.

- Skills: Software engineering principles, proficiency in Python, experience with cloud platforms (AWS, Azure, GCP), containerization technologies (Docker, Kubernetes), CI/CD tools, monitoring tools, and a good understanding of the ML lifecycle.

AI Ethicist / Responsible AI Specialist: With the growing societal impact of AI, ensuring ethical development and deployment is paramount.

- Focus: Analyzing and addressing ethical concerns in AI systems, including text classification models. This involves working on bias detection and mitigation, fairness, transparency, accountability, and privacy-preserving techniques.

- Skills: Understanding of ethical frameworks, knowledge of fairness metrics and bias mitigation techniques, familiarity with AI regulations and policies, strong analytical and communication skills, and often an interdisciplinary background (e.g., law, philosophy, social sciences combined with technical knowledge).

Domain-Specific NLP Specialist: Applying text classification and NLP techniques to solve problems in a particular industry.

- Focus: Becoming an expert in applying NLP to fields like healthcare (e.g., analyzing clinical notes for diagnostic support), finance (e.g., fraud detection, sentiment analysis for trading), law (e.g., e-discovery, contract analysis), or marketing (e.g., customer segmentation, brand monitoring).

- Skills: Strong NLP and machine learning skills combined with deep domain knowledge in the chosen industry.

Conversational AI Developer / Chatbot Specialist: Focusing on building intelligent conversational agents.