Computer Vision Engineer

Computer Vision Engineer: Shaping How Machines See the World

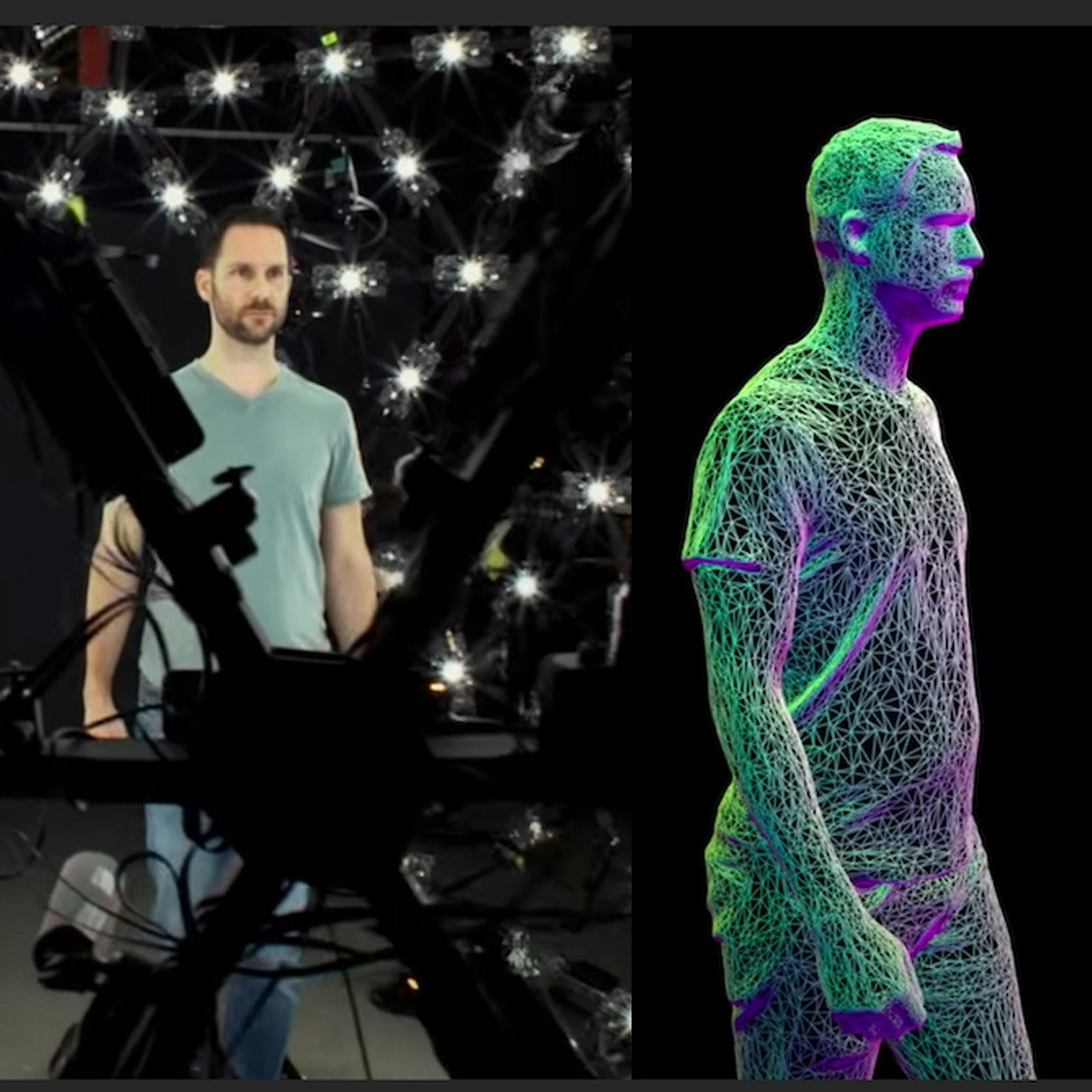

Computer Vision Engineering sits at the fascinating intersection of artificial intelligence, machine learning, and image analysis. It's the field dedicated to building systems that enable computers to "see" and interpret the visual world much like humans do. This involves extracting meaningful information from digital images, videos, and other visual inputs, allowing machines to understand scenes, identify objects, and even make decisions based on what they perceive.

Imagine self-driving cars navigating complex city streets, medical software detecting subtle anomalies in scans, or robots assembling intricate products with precision. These groundbreaking applications are powered by the work of Computer Vision Engineers. It's a dynamic and rapidly evolving field that blends deep theoretical knowledge with practical software engineering skills to create technologies that were once confined to science fiction.

Introduction to Computer Vision Engineering

At its core, computer vision aims to replicate the human visual system's capabilities using algorithms and computational power. Engineers in this domain develop sophisticated software that can process vast amounts of visual data, recognize patterns, and understand context within images or video streams. This field is a crucial component of modern Artificial Intelligence (AI) and Machine Learning (ML).

What is Computer Vision Engineering?

A Computer Vision Engineer is a specialized software engineer or researcher who designs, develops, and implements algorithms and systems capable of processing and understanding visual information. They leverage techniques from image processing, machine learning (especially deep learning), and mathematics to build models that can perform tasks like object detection, image classification, segmentation, facial recognition, and motion analysis. Their work allows machines to automate tasks that traditionally required human vision.

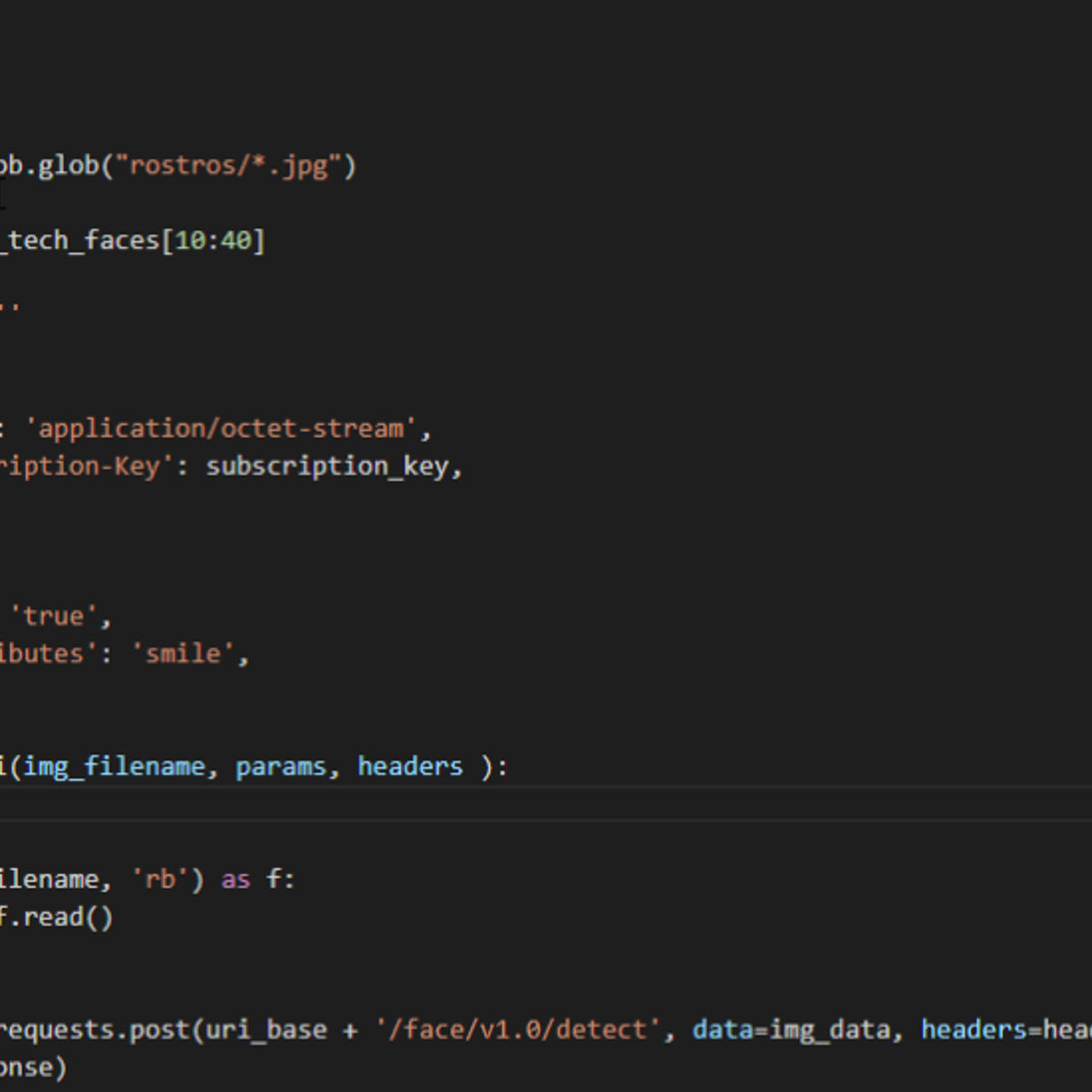

This involves not just theoretical understanding but also significant software development. Engineers write code, often in languages like Python, using specialized libraries and frameworks. They also manage large datasets, train complex models, and optimize them for performance and efficiency, sometimes deploying them on specialized hardware or edge devices.

The ultimate goal is to bridge the gap between the pixel data captured by cameras and a high-level understanding of the scene, enabling applications that interact intelligently with the physical world based on visual input.

Key Applications Driving the Field

The impact of computer vision is felt across numerous industries. One of the most visible applications is in autonomous vehicles, where vision systems are essential for detecting pedestrians, other vehicles, traffic signs, and lane markings, enabling safe navigation. These systems process real-time video feeds to make critical driving decisions.

In healthcare, computer vision aids in medical image analysis. Algorithms can analyze X-rays, CT scans, MRIs, and retinal photographs to help doctors detect diseases like cancer or diabetic retinopathy, in some cases earlier than unaided review would. This technology assists radiologists and other clinicians and can improve diagnostic outcomes.

Robotics heavily relies on computer vision for tasks ranging from navigation and obstacle avoidance in warehouses and factories to precise manipulation in manufacturing. Robots equipped with vision can identify parts, inspect products for defects, and interact safely with their environment and human counterparts. Other applications include security surveillance, augmented reality, retail analytics, and content moderation on social media platforms.

Relationship to Adjacent Fields

Computer vision is inherently interdisciplinary, drawing heavily from and contributing to several related fields. Machine Learning, particularly deep learning, provides the foundational algorithms for many modern computer vision tasks. Techniques like Convolutional Neural Networks (CNNs) revolutionized the field, enabling unprecedented performance in image recognition and analysis.

Robotics is another closely related area. Vision provides robots with the sensory input needed to perceive and interact with their surroundings. Thus, many robotics engineers specialize in or collaborate closely with computer vision experts to build intelligent, autonomous systems.

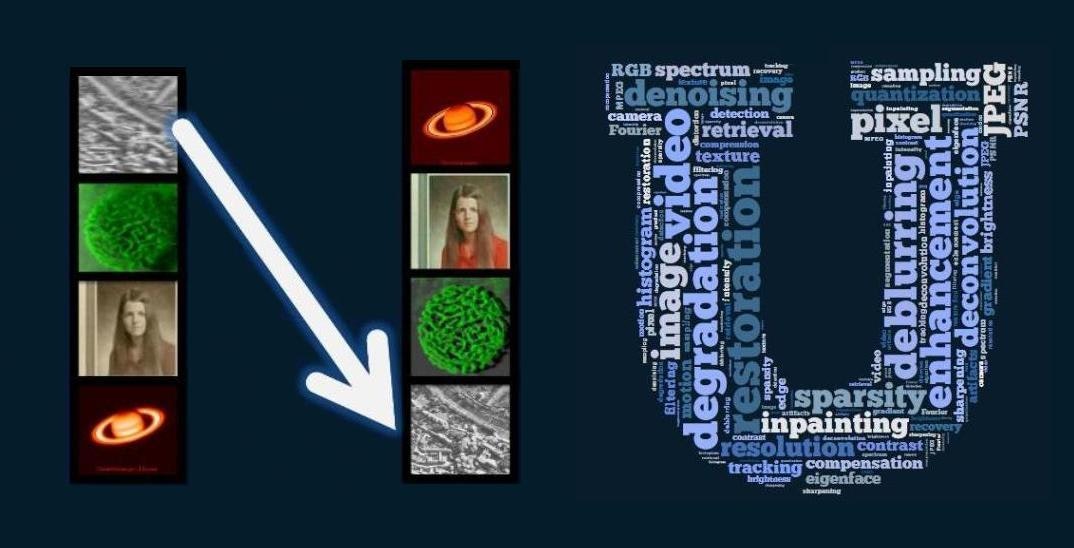

Image Processing is often considered a precursor or subset of computer vision. While image processing focuses on manipulating images (e.g., enhancing contrast, removing noise), computer vision aims for higher-level interpretation and understanding of the image content. However, image processing techniques are fundamental tools used by computer vision engineers.
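
To make the distinction concrete, here is a minimal sketch of a classic image-processing operation, min-max contrast stretching, written in plain Python on a small grayscale image stored as nested lists. The function name `stretch_contrast` and the sample pixel values are illustrative assumptions; in practice a library such as OpenCV or NumPy would do this on real image arrays.

```python
def stretch_contrast(image, out_min=0, out_max=255):
    """Linearly rescale pixel intensities so they span [out_min, out_max]."""
    pixels = [p for row in image for p in row]
    lo, hi = min(pixels), max(pixels)
    if hi == lo:
        # A flat image has no contrast to stretch; return an unchanged copy.
        return [row[:] for row in image]
    scale = (out_max - out_min) / (hi - lo)
    return [[round(out_min + (p - lo) * scale) for p in row] for row in image]


# Hypothetical low-contrast 3x3 grayscale image (values between 100 and 140).
low_contrast = [
    [100, 110, 120],
    [110, 120, 130],
    [120, 130, 140],
]
stretched = stretch_contrast(low_contrast)
```

The operation changes pixel values but says nothing about what the image contains; that interpretive step is where computer vision begins.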

Key Responsibilities of a Computer Vision Engineer

The day-to-day work of a Computer Vision Engineer can be varied, often involving a mix of research, development, testing, and deployment. Responsibilities depend on the specific role, company, and industry, but several core tasks are common across the field.

Designing and Optimizing Vision Algorithms

A central responsibility is designing algorithms that can solve specific visual tasks. This might involve selecting appropriate existing models, adapting them, or sometimes developing novel approaches. Engineers experiment with different architectures, such as CNNs, Transformers, or Generative Adversarial Networks (GANs), depending on the problem (e.g., classification, detection, generation).

Optimization is crucial. Models need to be accurate but also efficient in terms of computational cost (speed) and memory usage, especially for real-time applications or deployment on devices with limited resources (edge computing). This involves techniques like model pruning, quantization, and knowledge distillation.
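
As a rough illustration of two of these techniques, the sketch below applies magnitude-based pruning and symmetric 8-bit quantization to a flat list of weights. The helper names and the weight values are hypothetical, and real frameworks use more elaborate schemes (per-channel scales, calibration data, fine-tuning after pruning).

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Symmetric linear quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map quantized integers back to approximate float values."""
    return [q * scale for q in quantized]


# Hypothetical weights from a single layer.
weights = [0.02, -0.75, 0.31, -0.04, 1.27, 0.5]
pruned = prune_by_magnitude(weights, sparsity=0.5)
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
```

Pruning trades accuracy for sparsity, while quantization trades it for smaller storage and faster integer arithmetic; the rounding error per weight here is at most half of `scale`.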

Engineers spend significant time tuning hyperparameters, evaluating model performance using various metrics, and iterating on designs to achieve the desired balance between accuracy and efficiency.
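
One metric commonly used when evaluating object detectors is Intersection over Union (IoU), which measures how well a predicted bounding box overlaps a ground-truth box. The following is a minimal sketch, assuming boxes are given as `(x_min, y_min, x_max, y_max)` tuples; the function name `iou` and the example boxes are illustrative.

```python
def iou(box_a, box_b):
    """Intersection over Union of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle, if any.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    inter_w = max(0.0, ix_max - ix_min)
    inter_h = max(0.0, iy_max - iy_min)
    intersection = inter_w * inter_h

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


predicted = (0, 0, 10, 10)
ground_truth = (5, 5, 15, 15)
overlap = iou(predicted, ground_truth)  # 25 / 175
```

A detection is often counted as correct only when its IoU with a ground-truth box exceeds a chosen threshold, and that threshold is itself a parameter worth tuning.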

Data Pipeline Management

Machine learning models, especially deep learning models used in computer vision, are data-hungry. A key responsibility is managing the entire data pipeline, from acquisition and cleaning to annotation and augmentation. Engineers often work with massive datasets of images or videos.

This involves setting up processes for collecting relevant visual data, ensuring data quality, and often overseeing or implementing data annotation (labeling objects, segmenting regions, etc.), which can be a time-consuming task. Data augmentation techniques, which artificially increase dataset size and diversity by applying transformations like rotations or brightness changes, are commonly used to improve model robustness.
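
The augmentation idea can be sketched in a few lines. The example below uses plain Python lists as a stand-in for image arrays; the function names, the 50% flip and rotation probabilities, and the brightness range of plus or minus 30 are all assumptions chosen for illustration.

```python
import random


def horizontal_flip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def rotate_90_clockwise(image):
    """Rotate the image by 90 degrees clockwise."""
    return [list(col) for col in zip(*image[::-1])]


def adjust_brightness(image, delta):
    """Add `delta` to every pixel, clamping to the 8-bit range [0, 255]."""
    return [[max(0, min(255, p + delta)) for p in row] for row in image]


def augment(image, rng):
    """Apply a random combination of the transformations above."""
    out = image
    if rng.random() < 0.5:
        out = horizontal_flip(out)
    if rng.random() < 0.5:
        out = rotate_90_clockwise(out)
    return adjust_brightness(out, rng.randint(-30, 30))


# Hypothetical 2x3 grayscale image.
image = [
    [10, 20, 30],
    [40, 50, 60],
]
rng = random.Random(0)  # fixed seed so the run is reproducible
augmented = [augment(image, rng) for _ in range(4)]
```

Each call produces a slightly different variant of the same labeled image, which is how augmentation increases diversity without new annotation work.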

Building efficient data loading and preprocessing pipelines is also vital for training models effectively. This requires skills in data handling libraries and potentially big data technologies if dealing with petabyte-scale datasets.
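
A data loader, reduced to its essentials, shuffles the sample order, preprocesses each item, and yields fixed-size mini-batches. The sketch below shows that pattern with a generator; the names `normalize` and `batches` and the toy dataset are hypothetical, and production loaders in deep learning frameworks add parallel workers, prefetching, and on-disk decoding.

```python
import random


def normalize(image):
    """Scale 8-bit pixel values to the range [0, 1]."""
    return [[p / 255.0 for p in row] for row in image]


def batches(samples, batch_size, shuffle=True, seed=None):
    """Yield lists of (preprocessed_image, label) pairs, one mini-batch at a time."""
    indices = list(range(len(samples)))
    if shuffle:
        random.Random(seed).shuffle(indices)
    for start in range(0, len(indices), batch_size):
        chunk = indices[start:start + batch_size]
        # Preprocessing happens lazily, only when a batch is requested.
        yield [(normalize(samples[i][0]), samples[i][1]) for i in chunk]


# Toy dataset: ten 1x2 "images", each paired with a binary label.
dataset = [([[i, 255 - i]], i % 2) for i in range(10)]
batch_sizes = [len(batch) for batch in batches(dataset, batch_size=4, seed=0)]
```

Because the generator yields one batch at a time, the full preprocessed dataset never has to sit in memory at once.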

Deployment of Models in Production Environments

Developing a model is only part of the job; deploying it into a real-world application or system is equally important. Computer Vision Engineers are often responsible for integrating their models into larger software systems or hardware products.

This might involve containerizing models using tools like Docker, deploying them on cloud platforms (AWS, Azure, GCP), or optimizing them for edge devices like smartphones, drones, or specialized processors (e.g., NVIDIA Jetson, Google Coral). Performance monitoring and maintenance of deployed models are also part of the lifecycle.

Deployment requires strong software engineering practices, understanding of system architecture, and sometimes knowledge of specific hardware constraints and optimization techniques for inference.

Collaboration with Cross-Functional Teams

Computer vision projects rarely happen in isolation. Engineers typically collaborate closely with various teams. This includes software engineers building the larger application, hardware engineers designing sensors or processors, product managers defining requirements, and data scientists analyzing performance.

Effective communication skills are essential to explain complex technical concepts to non-experts, understand requirements from different stakeholders, and work effectively within a team environment. Collaboration might involve code reviews, joint debugging sessions, and contributing to system design discussions.

For example, developing a vision system for a robot requires close collaboration with mechanical and electrical engineers to integrate cameras and processing units, and with robotics software engineers to use the vision output for navigation or manipulation.

Core Technical Skills for Computer Vision Engineers

Becoming a successful Computer Vision Engineer requires a strong foundation in several technical areas, blending programming prowess, mathematical understanding, and expertise in machine learning, particularly deep learning.

Programming and Frameworks

Proficiency in programming is essential, with Python being the dominant language in the field due to its extensive libraries and strong community support. Key Python libraries include OpenCV for image processing tasks and NumPy for numerical operations, used alongside the deep learning frameworks.

Expertise in major deep learning frameworks like PyTorch and TensorFlow (often via its high-level API, Keras) is critical. These frameworks provide the tools to build, train, and deploy complex neural network models efficiently.

While Python dominates, knowledge of C++ can be valuable, especially for performance-critical applications or when working closely with hardware integration, as many high-performance libraries have C++ cores.

Mathematical Foundations

A solid grasp of mathematics underpins many computer vision and machine learning concepts. Key areas include linear algebra, which is fundamental for understanding data representations (vectors, matrices, tensors) and operations within neural networks.

Calculus, particularly multivariable calculus and differentiation, is essential for understanding optimization algorithms like gradient descent, which are used to train models. Probability and statistics are crucial for understanding model evaluation, uncertainty, and probabilistic models used in vision.
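
To show how differentiation drives training, the sketch below fits a line `y = w*x + b` by gradient descent on the mean squared error. The gradients are derived by hand from the loss; the function name `fit_line`, the learning rate, the step count, and the data points are assumptions for illustration. Deep learning frameworks compute such gradients automatically, but the update rule has the same form.

```python
def fit_line(xs, ys, lr=0.05, steps=2000):
    """Fit y = w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Partial derivatives of (1/n) * sum((w*x + b - y)^2) w.r.t. w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the loss.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Points generated from y = 2x + 1, so the fit should recover w = 2 and b = 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_line(xs, ys)
```

If the learning rate is set too high the updates overshoot and diverge, which is one reason hyperparameter tuning takes up so much of an engineer's time.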

While day-to-day work might rely more on framework abstractions, a strong mathematical intuition helps in debugging models, understanding research papers, and developing novel solutions.

Deep Learning Architectures

Deep learning has revolutionized computer vision. Engineers need a deep understanding of various neural network architectures relevant to vision tasks. Convolutional Neural Networks (CNNs) are foundational for image analysis tasks like classification and detection.

Knowledge of more advanced architectures is increasingly important. Transformers, initially developed for natural language processing, are now achieving state-of-the-art results in vision tasks. Generative Adversarial Networks (GANs) are used for image generation and style transfer, while Recurrent Neural Networks (RNNs) might be used for video analysis or sequence modeling related to vision.

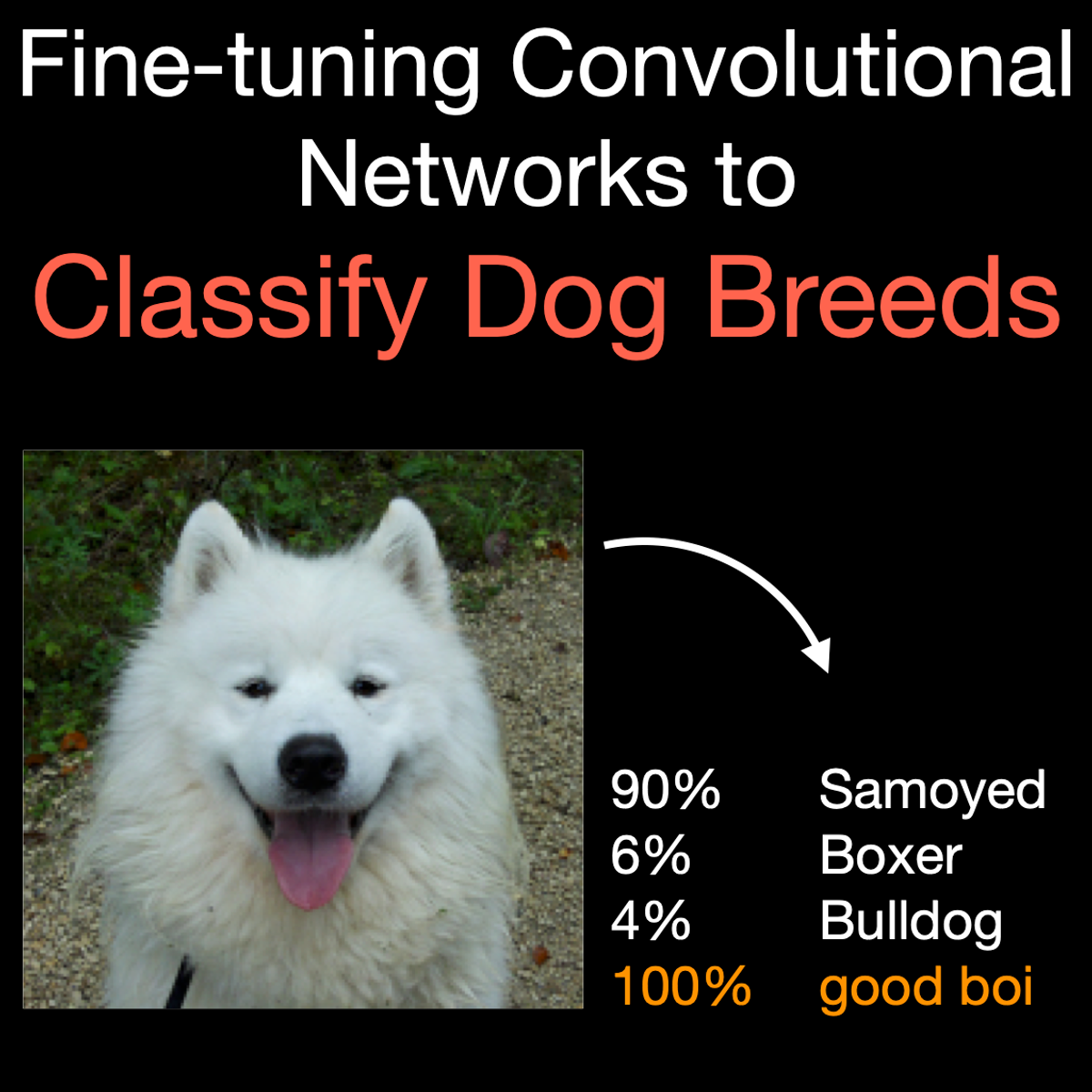

Explain Like I'm 5: Convolutional Neural Networks (CNNs)

Imagine you have a superpower: you can recognize your favorite toy car instantly, even if it's partially hidden or in a messy room. How do you do it? You look for specific features: its red color, its round wheels, its shiny windows. CNNs work similarly for computers looking at pictures.

A CNN looks at small parts of an image at a time, searching for simple patterns like edges or corners (like finding the edge of a wheel). It uses special "filters" (like tiny magnifying glasses programmed to find specific shapes). As the information goes deeper into the network (through more "layers"), it combines these simple patterns to find more complex ones (like combining edges and curves to recognize a whole wheel).

Eventually, after looking for many simple and complex features across the entire image, the CNN can confidently say, "Aha! Based on all the features I found (red color, four wheels, shiny windows), that's a toy car!" CNNs are often good at recognizing objects in pictures even when the object is shifted, partially blocked, or in different lighting, especially if they were trained on pictures showing that kind of variety.
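
For readers who want to see one of those "filters" at work, here is a minimal sketch of the sliding-window operation a convolutional layer performs, applied with a hand-written vertical-edge filter. The function name `convolve2d`, the tiny image, and the filter values are illustrative assumptions; in a real CNN the filter values are learned during training rather than written by hand.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding) and sum the elementwise products.

    Strictly this is cross-correlation, which is what CNN layers compute.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Dark region on the left, bright region on the right.
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]
# Responds strongly where intensity increases from left to right.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
response = convolve2d(image, vertical_edge)  # [[0, 27, 27, 0]]
```

The response is zero over the flat regions and large where the window straddles the boundary, which is exactly the "found an edge here" signal that deeper layers combine into more complex patterns.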

Cloud Platforms and Edge Computing

Modern computer vision often involves large datasets and computationally intensive model training, making cloud platforms essential. Familiarity with services from major providers like Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure is highly beneficial. This includes using cloud storage, virtual machines with GPUs for training, and managed AI/ML services.

Cloud computing skills enable engineers to scale their experiments and deploy models globally. Services like Azure Cognitive Services or Amazon Rekognition offer pre-built vision capabilities, while platforms like Amazon SageMaker or Google Cloud's Vertex AI provide tools for building custom models.

Conversely, edge computing focuses on running models directly on devices (e.g., smartphones, cameras) instead of in the cloud. This requires optimizing models for low power and computational resources. Skills in model optimization techniques and familiarity with edge AI platforms (like TensorFlow Lite, NVIDIA Jetson) are increasingly valuable for applications requiring real-time processing or offline operation.

Formal Education Pathways

While self-study and bootcamps play a role, a strong formal education often provides the necessary theoretical depth and structured learning for a career in Computer Vision Engineering. Universities offer various programs that build the required foundation.

Relevant Undergraduate Degrees

A bachelor's degree in a quantitative field is typically the starting point. Computer Science is the most common background, providing essential programming, algorithms, and software engineering skills. Electrical Engineering often includes crucial coursework in signal processing and hardware, which are relevant to image formation and processing.

Degrees in Applied Mathematics or Statistics can also be excellent foundations, providing the rigorous mathematical background needed for understanding complex algorithms. Some universities may offer specialized tracks or courses in AI, machine learning, or image processing within these broader degrees.

Regardless of the major, students should aim to take courses in linear algebra, calculus, probability, statistics, data structures, algorithms, and ideally introductory courses in AI and machine learning.

Graduate Research Opportunities

For those aiming for research-focused roles or positions requiring deeper expertise, pursuing a Master's or PhD is common. Graduate programs allow for specialization in computer vision, machine learning, or related areas like robotics.

Master's programs often offer a blend of advanced coursework and project work, providing specialized skills sought by industry. PhD programs are research-intensive, culminating in a dissertation that contributes novel work to the field. A PhD is often preferred for research scientist roles in industrial labs or academic positions.

Many leading universities have dedicated computer vision labs or AI research groups where students can work with renowned faculty on cutting-edge projects, often collaborating with industry partners.

Advanced Study and Specialization

Graduate studies enable deep dives into specific subfields of computer vision, such as 3D vision, medical image analysis, video understanding, or human-computer interaction. Coursework often covers advanced topics like probabilistic graphical models, optimization techniques, geometric vision, and the latest deep learning architectures.

University courses focusing on signal processing provide a fundamental understanding of how images and videos are represented and manipulated digitally. Courses on neural networks and deep learning are essential for mastering the dominant techniques used in the field today.

A strong academic background not only imparts technical knowledge but also develops critical thinking, problem-solving, and research skills valuable throughout a Computer Vision Engineer's career.

Self-Directed Learning Strategies

While formal education is beneficial, the rapidly evolving nature of computer vision means continuous learning is essential. Furthermore, motivated individuals can build a strong foundation and even transition into the field through dedicated self-study, leveraging the wealth of online resources available.

Making a career pivot or starting fresh can feel daunting, especially in a field as complex as computer vision. Remember that many successful engineers started with curiosity and a willingness to learn. Setting realistic goals, celebrating small victories, and persistently tackling challenges are key. The journey requires dedication, but the rewards of building systems that 'see' can be immense.

Building Portfolio Projects

Practical experience is paramount. Building a portfolio of projects is arguably the most effective way to learn and demonstrate skills to potential employers. Start with smaller projects using public datasets (like ImageNet, COCO, or MNIST) and common libraries (OpenCV, scikit-image).

Focus on implementing core concepts: build an image classifier, an object detector, or a facial keypoint detector. Document your projects thoroughly on platforms like GitHub, explaining your approach, challenges, and results. This showcases not only technical ability but also problem-solving skills and communication.

Online Courses and Competitions

OpenCourser aggregates thousands of online courses, many of which cover foundational mathematics, programming, machine learning, and specialized computer vision topics. Platforms offer courses from universities and industry experts, ranging from introductory overviews to deep dives into specific algorithms or frameworks.

Participating in online competitions, such as those hosted on Kaggle, provides valuable experience working with real-world data and tackling challenging problems under competitive conditions. It's also a great way to learn from others and see different approaches to problem-solving.

OpenCourser's features like the "Save to list" button can help you curate your own learning path by shortlisting relevant courses and books. You can browse categories like Artificial Intelligence or Computer Science to discover resources.

Combining Theory with Practice

A balanced approach is key. While hands-on projects build practical skills, understanding the underlying theory is crucial for troubleshooting, innovation, and adapting to new techniques. Supplement project work with reading textbooks, research papers (using resources like arXiv), and following blogs from leading researchers and labs.

Try to implement algorithms from scratch (e.g., a simple CNN or k-means clustering) before relying solely on high-level library functions. This deepens understanding. Conversely, apply theoretical knowledge gained from courses or books to your projects immediately to solidify concepts.
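
As an example of what "from scratch" can look like, the sketch below implements k-means clustering in plain Python and uses it to group a handful of RGB pixel values into two color clusters. The function signature, the fixed iteration count, and the sample pixels are assumptions made for illustration; library implementations add convergence checks and smarter initialization.

```python
import random


def kmeans(points, k, iterations=20, seed=0):
    """Cluster tuples of numbers into k groups with Lloyd's algorithm."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            distances = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids]
            clusters[distances.index(min(distances))].append(p)
        # Update step: move each centroid to the mean of its members.
        for i, members in enumerate(clusters):
            if members:
                centroids[i] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return centroids, clusters


# Hypothetical RGB pixels: three dark and three bright.
points = [
    (10, 10, 10), (12, 8, 11), (9, 11, 12),
    (240, 240, 235), (250, 245, 240), (245, 250, 238),
]
centroids, clusters = kmeans(points, k=2)
```

Writing the assignment and update steps by hand makes it clearer what a library call is doing, and why results can depend on the initial centroids.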

Leveraging Community Resources

The AI and computer vision community is vibrant and collaborative. Engage with others through online forums (like Stack Overflow, Reddit communities), local meetups, or virtual study groups. Attending conferences (even virtually) or participating in hackathons exposes you to new ideas and networking opportunities.

Contributing to open-source computer vision projects (like OpenCV or libraries within the PyTorch/TensorFlow ecosystems) is an excellent way to learn from experienced developers, improve your coding skills, and build credibility within the community. Don't hesitate to ask questions, share your work, and learn from the collective knowledge.

Remember, the path of self-directed learning requires discipline and persistence. Utilize resources like the OpenCourser Learner's Guide for tips on structuring your learning and staying motivated.

Career Progression in Computer Vision Engineering

A career in Computer Vision Engineering offers diverse pathways for growth and specialization. Progression typically involves gaining deeper technical expertise, taking on more complex projects, and potentially moving into leadership or management roles.

Entry-Level Roles

Graduates often start in roles like Computer Vision Software Engineer, Junior Machine Learning Engineer (with a CV focus), or Research Assistant/Engineer in academic labs or R&D departments. These roles typically involve implementing existing algorithms, assisting with data pipelines, testing models, and contributing to specific components of larger systems under supervision.

Entry-level positions focus on building practical skills, learning industry best practices, and gaining experience with the tools and frameworks used by the team. Strong programming skills and a solid understanding of fundamental CV and ML concepts are key.

Mid-Career Specialization

With experience, engineers often specialize in specific areas. Some may focus on algorithm development and optimization, becoming experts in areas like 3D reconstruction, semantic segmentation, or generative models. They might spend more time reading research papers and pushing the boundaries of model performance.

Others might specialize in systems design and deployment, focusing on building robust, scalable, and efficient end-to-end vision systems. This could involve expertise in MLOps (Machine Learning Operations), edge computing optimization, or integrating vision systems with hardware.

Mid-career engineers typically take ownership of larger projects or features, mentor junior engineers, and contribute significantly to technical decision-making.

Leadership and Management Roles

Experienced engineers may progress into leadership roles. A Technical Lead guides the technical direction of a project or team, making key architectural decisions and mentoring other engineers. An R&D Manager oversees research projects, sets strategic direction for innovation, and manages a team of researchers and engineers.

These roles require not only deep technical expertise but also strong leadership, communication, and project management skills. Some engineers might transition into Product Management roles, leveraging their technical understanding to define product strategy and requirements for vision-based products.

Transition Opportunities

The skills developed as a Computer Vision Engineer are transferable to adjacent high-demand fields. Many engineers transition into broader Machine Learning Engineering or Data Science roles, applying their modeling and analytical skills to different types of data.

Given the close ties, transitions into Robotics Engineering, particularly roles focused on perception and autonomy, are common. The rise of Augmented Reality (AR) and Virtual Reality (VR) also creates opportunities, as computer vision is fundamental to tracking, mapping, and object recognition in these immersive technologies.

Industries Hiring Computer Vision Engineers

The demand for Computer Vision Engineers spans a wide range of industries, driven by the increasing integration of AI and automation into various products and processes. The ability to enable machines to "see" unlocks value in diverse sectors.

Automotive and Autonomous Systems

The automotive industry is a major employer, particularly with the push towards autonomous driving and advanced driver-assistance systems (ADAS). Engineers develop perception systems for self-driving cars, delivery robots, drones, and other autonomous platforms, focusing on tasks like object detection, tracking, and scene understanding for safe navigation.

Healthcare and Medical Technology

Healthcare is another significant sector leveraging computer vision. Applications include analyzing medical images (radiology, pathology) to aid diagnosis, robotic surgery assistance, patient monitoring, and analyzing microscopic images for research. Engineers work on improving diagnostic accuracy, automating repetitive tasks, and enabling new medical procedures.

Defense and Surveillance

Governments and defense contractors employ computer vision engineers for applications in security, surveillance, intelligence gathering, and autonomous military systems. Tasks can include target recognition, activity monitoring from aerial or satellite imagery, facial recognition for security access, and enhancing situational awareness for personnel.

Ethical considerations are particularly prominent in this sector, requiring careful attention to privacy, bias, and the potential misuse of technology.

Retail and Augmented Reality

The retail industry uses computer vision for applications like inventory management (shelf monitoring), customer behavior analysis (foot traffic patterns), cashier-less checkout systems (like Amazon Go), and personalized advertising. Augmented Reality (AR) applications, popular in retail and entertainment, heavily rely on computer vision for tracking, object recognition, and overlaying digital information onto the real world.

Other industries include consumer electronics (smartphone cameras, smart home devices), agriculture (crop monitoring, automated harvesting), manufacturing (quality control, robotic automation), and entertainment (special effects, content creation).

Ethical Challenges in Computer Vision Engineering

As computer vision systems become more powerful and pervasive, they raise significant ethical challenges that engineers, researchers, and society must address. Developing and deploying these technologies responsibly requires careful consideration of their potential impact.

Bias in Recognition Systems

One of the most widely discussed issues is bias, particularly in facial recognition systems. Models trained predominantly on data from one demographic group may perform poorly or unfairly on others, leading to disparities in accuracy based on race, gender, or age. This can have serious consequences in applications like law enforcement or hiring.

Addressing bias requires careful dataset curation, development of fairness-aware algorithms, rigorous testing across diverse populations, and transparency about model limitations. Engineers have a responsibility to mitigate bias in the systems they build.
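
One simple form of such testing is to break evaluation results down by group and compare the accuracies. The sketch below does this for a list of `(group, true_label, predicted_label)` records. The record format, the group names, and the values are made up purely for illustration and do not come from any real system.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for records of (group, true_label, predicted_label)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {group: correct[group] / total[group] for group in total}


# Made-up evaluation records for two hypothetical demographic groups.
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 0), ("group_b", 0, 1),
]
per_group = accuracy_by_group(records)
accuracy_gap = max(per_group.values()) - min(per_group.values())
```

A single aggregate accuracy would hide the gap between the two groups here, which is why disaggregated reporting is a useful first check. It is only a starting point: accuracy alone does not capture differences in error types such as false positives versus false negatives.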

Privacy Concerns with Surveillance

The proliferation of cameras combined with powerful computer vision capabilities raises significant privacy concerns. Mass surveillance, tracking individuals' movements and activities, and the potential for misuse of facial recognition data by governments or corporations are major ethical dilemmas.

Balancing the benefits of security or convenience with the fundamental right to privacy is a complex challenge. Engineers should consider privacy-preserving techniques (like data anonymization or on-device processing) and advocate for clear regulations and ethical guidelines governing the use of surveillance technologies.
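
As a small illustration of anonymization applied before an image leaves a device, the sketch below pixelates a rectangular region (for example, a detected face) by replacing each tile with its average intensity. The function name, parameters, and sample image are hypothetical, and coarse pixelation alone is not guaranteed to prevent re-identification; it is shown only to make the idea concrete.

```python
def pixelate_region(image, top, left, height, width, block=2):
    """Return a copy of `image` with the given region averaged in block x block tiles."""
    out = [row[:] for row in image]
    for r0 in range(top, top + height, block):
        for c0 in range(left, left + width, block):
            # Clip each tile so it never extends past the region.
            rows = range(r0, min(r0 + block, top + height))
            cols = range(c0, min(c0 + block, left + width))
            tile = [image[r][c] for r in rows for c in cols]
            mean = round(sum(tile) / len(tile))
            for r in rows:
                for c in cols:
                    out[r][c] = mean
    return out


# Hypothetical 4x4 grayscale image; the top-left 2x2 region is treated as sensitive.
image = [
    [0, 10, 20, 30],
    [40, 50, 60, 70],
    [80, 90, 100, 110],
    [120, 130, 140, 150],
]
anonymized = pixelate_region(image, top=0, left=0, height=2, width=2)
```

Pixels outside the region are left untouched, so downstream analysis that does not need the sensitive area can still run on the rest of the frame.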

Environmental Impact

Training large-scale deep learning models, common in state-of-the-art computer vision, requires substantial computational resources, often consuming significant amounts of energy. The environmental footprint of developing and running these massive models is an emerging ethical concern.

Research into more energy-efficient model architectures, hardware acceleration, and training techniques (like transfer learning, which reuses pretrained models instead of training from scratch) is ongoing. Engineers should be mindful of the environmental cost associated with their work and explore ways to develop more sustainable AI solutions.

Industry Standards and Regulation

The rapid advancement of computer vision often outpaces the development of clear industry standards and governmental regulations. Issues like data ownership, consent for data collection, transparency in algorithmic decision-making, and accountability for errors need robust frameworks.

Engineers play a role in shaping these standards by adhering to ethical principles, participating in discussions about responsible AI development, and designing systems that are transparent and accountable. Collaboration between industry, academia, and policymakers is crucial to ensure computer vision technology is developed and deployed ethically and for the benefit of society.

Emerging Trends in Computer Vision Engineering

Computer Vision is a rapidly evolving field, constantly pushed forward by advancements in algorithms, hardware, and data availability. Staying abreast of emerging trends is crucial for engineers looking to remain at the forefront of innovation.

Efficient Models for Edge Devices

There is a strong trend towards deploying sophisticated vision models directly on edge devices (smartphones, IoT devices, wearables) rather than relying solely on the cloud. This requires developing highly efficient model architectures (like MobileNet, EfficientNet) and using techniques like quantization and pruning to reduce computational and memory requirements without sacrificing too much accuracy.
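
Part of the efficiency of architectures such as MobileNet comes from replacing standard convolutions with depthwise separable ones. The arithmetic below compares weight counts for a single layer. The layer sizes are arbitrary example values, bias terms are ignored, and the function names are illustrative.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Weights in a depthwise k x k convolution followed by a 1 x 1 pointwise one."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Example layer: 3x3 kernel, 128 input channels, 256 output channels.
k, c_in, c_out = 3, 128, 256
standard = standard_conv_params(k, c_in, c_out)          # 294912
separable = depthwise_separable_params(k, c_in, c_out)   # 33920
reduction = standard / separable                         # roughly 8.7x fewer weights
```

The saving grows with the kernel size and the number of output channels, at the cost of some representational capacity, which is the accuracy trade-off mentioned above.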

Running AI on the edge offers benefits like lower latency, improved privacy (data stays local), and offline functionality. This trend drives research into compact network designs and specialized hardware accelerators.

Multimodal AI Integration

Future AI systems will increasingly process and integrate information from multiple modalities – not just vision, but also text, audio, and other sensor data. Models that can jointly understand images and their textual descriptions (like OpenAI's CLIP or Google's Gemini) are enabling new applications in image search, automated captioning, and human-AI interaction.

Integrating vision with language models allows for more nuanced understanding and generation capabilities, moving beyond simple object recognition towards richer scene interpretation and interaction.

Neuromorphic Computing

Inspired by the structure and efficiency of the human brain, neuromorphic computing aims to build hardware that processes information using spiking neural networks. This approach promises significant gains in energy efficiency for tasks like real-time visual processing.
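
A common simplified model of a spiking neuron is the leaky integrate-and-fire (LIF) neuron: it accumulates input, leaks some of its stored potential at every step, and emits a spike when a threshold is crossed. The discrete-time sketch below is an illustration only; the parameter values (`threshold`, `leak`, `reset`) and the constant input are assumptions, and neuromorphic hardware implements such dynamics in very different ways.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate a leaky integrate-and-fire neuron; returns a list of 0/1 spikes."""
    potential = reset
    spikes = []
    for current in input_current:
        # Decay the stored potential, then integrate the new input.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = reset  # fire, then return to the resting value
        else:
            spikes.append(0)
    return spikes


# Constant input of 0.3 per time step for ten steps.
spikes = simulate_lif([0.3] * 10)  # [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
```

The neuron only produces output when its potential crosses the threshold, so quiet inputs cost almost nothing to process; that event-driven behavior is the source of the expected energy savings.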

While still largely in the research phase, neuromorphic vision sensors and processors could enable extremely low-power, always-on visual intelligence for applications in robotics, IoT, and autonomous systems. Engineers may increasingly work with these novel hardware platforms in the future.

Advances in Generative Models and 3D Vision

Generative models like GANs and Diffusion Models continue to improve, enabling highly realistic image and video synthesis, style transfer, and data augmentation. These advances impact creative industries, simulation, and training data generation.

Simultaneously, 3D computer vision, dealing with understanding the three-dimensional structure of scenes from images or sensor data (like LiDAR), is critical for robotics, AR/VR, and autonomous navigation. Techniques like NeRF (Neural Radiance Fields) represent significant progress in novel view synthesis and 3D reconstruction.

Frequently Asked Questions

Navigating a career path, especially in a technical field like computer vision, often brings up specific questions. Here are answers to some common inquiries.

What's the difference between computer vision and general machine learning roles?

While both roles rely heavily on machine learning, Computer Vision Engineers specialize in visual data (images and videos). Their focus is on tasks like object detection, image segmentation, and facial recognition, using models often tailored to visual patterns (like CNNs).

General Machine Learning Engineers work with a broader range of data types (tabular, text, time series, etc.) and applications (recommendation systems, fraud detection, predictive analytics). While there's overlap, CV engineers possess deeper expertise in image processing techniques, geometric vision, and vision-specific model architectures.

Can I enter this field without a graduate degree?

Yes, it is possible, especially for engineering-focused roles rather than pure research. A strong portfolio of practical projects demonstrating proficiency with relevant tools (Python, OpenCV, TensorFlow/PyTorch) and techniques (CNNs, object detection) can be very compelling, particularly if coupled with a relevant bachelor's degree (like Computer Science or Electrical Engineering).

However, many advanced roles, especially in R&D or those requiring deep algorithmic innovation, often prefer or require a Master's or PhD. A graduate degree provides deeper theoretical grounding and research experience. Self-study, online courses, and significant project work are crucial for those entering without an advanced degree.

How does computer vision engineering impact job markets in other industries?

Computer vision acts as an enabling technology, creating new capabilities and efficiencies that impact jobs across various sectors. In manufacturing, it can automate quality control, potentially displacing manual inspectors but creating demand for engineers to build and maintain these systems. In retail, automated checkout systems change cashier roles but create jobs in system development and oversight.

In transportation, autonomous driving technology could transform roles for drivers but generate significant demand for engineers in perception, mapping, and safety systems. In healthcare, CV assists doctors and may change their workflows without necessarily replacing diagnosticians, while creating roles for medical AI specialists. Overall, CV tends to automate specific visual tasks, shifting labor demand towards higher-skilled roles in system design, development, and maintenance.

What industries are investing most heavily in computer vision?

Several industries are making substantial investments. The technology sector itself (companies like Google, Meta, Microsoft, Apple, NVIDIA) invests heavily in fundamental research and integrating CV into products (search, social media, hardware).

The automotive industry is a major investor due to the race for autonomous driving. Healthcare technology companies and research institutions are increasingly investing in CV for medical imaging and diagnostics. Defense and security sectors are also significant investors. Retail and e-commerce are rapidly adopting CV for automation and customer analytics.

Emerging areas like robotics, augmented/virtual reality, and smart agriculture also show significant investment growth.

How susceptible is this career to AI automation?

While AI tools (like AutoML or code generation assistants) can automate parts of the model building and coding process, the role of a Computer Vision Engineer involves much more than just training models. It requires problem framing, data strategy, algorithm design, system integration, debugging complex issues, understanding domain context, and ensuring ethical deployment.

These aspects require critical thinking, creativity, and domain expertise that are difficult to automate fully. While tools may change how engineers work and make them more productive, the core skills of understanding visual data, designing systems, and solving complex problems are likely to remain in demand. The field itself is focused on *building* AI, which makes it less susceptible to replacement than the roles AI is designed to automate.

What are the geographic hubs for computer vision engineering roles?

Computer vision roles tend to cluster in major technology hubs where large tech companies, research institutions, and startups are concentrated. In the United States, prominent areas include Silicon Valley/San Francisco Bay Area, Seattle, Boston, and New York City, along with growing hubs such as Austin and Pittsburgh (known for robotics).

Globally, significant activity exists in cities like London, Berlin, Zurich, Paris, Tel Aviv, Toronto, Montreal, Beijing, Shanghai, and Shenzhen. Industry hubs often form around top universities with strong AI/CV research programs. The rise of remote work has spread opportunities more widely, although major hubs still offer the highest concentration of roles.

Embarking on a career as a Computer Vision Engineer is a challenging yet rewarding path. It requires continuous learning and adaptation in a field that is constantly pushing the boundaries of what machines can perceive and understand. Whether through formal education or dedicated self-study, building a strong foundation in programming, mathematics, and machine learning, coupled with hands-on project experience, will prepare you to contribute to this exciting and impactful domain.