Dynamic programming

Comprehensive Guide to Dynamic Programming

Dynamic programming is a powerful algorithmic technique used to solve complex problems by breaking them down into simpler, overlapping subproblems. The core idea is to solve each subproblem only once and store its solution, so that when the same subproblem is encountered again, its solution can be retrieved instead of recomputed. This approach can lead to significant improvements in efficiency, particularly for problems that exhibit certain characteristics.

For those intrigued by elegant problem-solving and optimization, dynamic programming offers a fascinating area of study. It is a cornerstone of algorithm design, and mastering it can unlock the ability to tackle a wide range of computational challenges. The thrill of devising a clever dynamic programming solution that dramatically speeds up a computation can be immensely satisfying. Furthermore, the principles of dynamic programming find applications in diverse fields, from optimizing financial strategies to unraveling the complexities of biological sequences.

What is Dynamic Programming? An Introduction

At its heart, dynamic programming is about making smart decisions. Imagine you're trying to find the shortest route from one city to another, with several intermediate cities and various paths between them. A dynamic programming approach would involve figuring out the shortest path to each intermediate city first, and then using that information to find the overall shortest path. This is much more efficient than trying out every single possible route from start to finish.

This method is particularly well-suited for problems that can be divided into smaller, self-similar parts. If you find yourself solving the same smaller problem multiple times while working towards a larger solution, dynamic programming might be the key to a more efficient approach. It's a fundamental concept in Computer Science and a valuable tool for anyone involved in algorithm design and optimization.

Definition and Core Principles

Dynamic programming is an algorithmic technique that optimizes recursive solutions by storing the results of subproblems to avoid redundant computations. This is particularly useful when the same subproblems are encountered multiple times during the recursive process. The essence of dynamic programming lies in solving each subproblem just once and saving its result, typically in a table or an array, for future reference. This strategy can dramatically reduce the time complexity of algorithms, often transforming exponential-time solutions into polynomial-time ones.

Two fundamental properties characterize problems amenable to dynamic programming: optimal substructure and overlapping subproblems. Optimal substructure means that an optimal solution to the overall problem can be constructed from optimal solutions to its subproblems. For example, if the shortest path from city A to city C passes through city B, then the segment from A to B within that path must also be the shortest path from A to B. Overlapping subproblems refer to the phenomenon where the same subproblems are solved repeatedly in different parts of the problem. The Fibonacci sequence is a classic example, where calculating F(n) involves recalculating F(n-2) multiple times if not stored.

By recognizing these properties, developers can devise dynamic programming solutions that are both correct and efficient. The process generally involves defining the subproblems, establishing a recurrence relation that links the solution of a larger problem to the solutions of its subproblems, and then systematically solving these subproblems, storing their results along the way.

Historical Origins (Bellman, 1950s)

The term "dynamic programming" was coined by Richard Bellman in the 1950s while he was working at the RAND Corporation. Interestingly, the name wasn't chosen for its descriptive clarity regarding the mathematical technique itself. Bellman explained in his autobiography, "Eye of the Hurricane," that the political climate at the time was unfavorable towards "research," especially "mathematical research." To shield his work from potential budget cuts by government officials who were wary of such terms, Bellman sought a name that sounded more proactive and less abstract.

He chose "dynamic" because it implied a sense of change and progress, and "programming" in the sense of planning or decision-making, rather than coding as we primarily understand it today. The name was intentionally somewhat vague to avoid attracting undue scrutiny. Bellman's pivotal contribution was the development of a method for solving problems where one needs to find the best decisions sequentially. This led to the formulation of the Bellman equation, a central concept in dynamic programming that expresses an optimization problem in a recursive form. His work laid the foundation for applying these techniques across a multitude of disciplines, from aerospace engineering to economics.

Despite the somewhat opaque origin of its name, dynamic programming has become an indispensable tool in mathematics and computer science. Richard Bellman received the IEEE Medal of Honor in 1979 for his contributions to decision processes and control system theory, particularly the creation and application of dynamic programming.

Key Characteristics: Overlapping Subproblems and Optimal Substructure

As briefly mentioned, the applicability of dynamic programming hinges on two key characteristics a problem must possess: overlapping subproblems and optimal substructure. Understanding these is crucial to identifying when and how to apply this powerful technique.

Overlapping Subproblems: This property means that a recursive algorithm for the problem ends up solving the same subproblems multiple times. Instead of recomputing the solution to these subproblems every time they are encountered, dynamic programming stores the solution to each subproblem the first time it is solved. Subsequent calls to solve the same subproblem simply retrieve the stored result. A classic illustration is the computation of Fibonacci numbers. To find F(n), one needs F(n-1) and F(n-2). Calculating F(n-1) in turn requires F(n-2) and F(n-3). Notice that F(n-2) is needed for both F(n) and F(n-1). Without dynamic programming, F(n-2) would be calculated independently twice, and smaller subproblems further down the recursion (such as F(n-4)) would be recomputed even more often, so the total number of calls grows exponentially with n. Dynamic programming ensures that each F(k) is computed only once.
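To make the repetition concrete, here is a minimal Python sketch that computes Fibonacci numbers by plain recursion and counts how often each F(k) is evaluated. The function name `naive_fib` and the `calls` counter are illustrative, not part of any library.

```python
from collections import Counter


def naive_fib(n: int, calls: Counter) -> int:
    """Plain recursion with no caching; records how often each F(k) is computed."""
    calls[n] += 1
    if n < 2:  # base cases F(0) = 0, F(1) = 1
        return n
    return naive_fib(n - 1, calls) + naive_fib(n - 2, calls)


calls = Counter()
print(naive_fib(10, calls))      # 55
print(calls[8], calls[2])        # 2 34
print(sum(calls.values()))       # 177
```

Even for n = 10, F(8) is evaluated twice, F(2) is evaluated 34 times, and the function is called 177 times in total.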

Optimal Substructure: This property exists when an optimal solution to a problem contains within it optimal solutions to its subproblems. This allows for a recursive approach to finding the optimal solution. If a problem can be broken down into smaller pieces, and the optimal solution to the overall problem depends on the optimal solutions to these smaller pieces, then it has optimal substructure. For example, in finding the shortest path between two points in a graph, if a point X lies on the shortest path from a start node S to a goal node G, then the path from S to X within that overall shortest path must be the shortest path from S to X. Similarly, the path from X to G must be the shortest path from X to G. If a more optimal path existed for any sub-segment, the overall path wouldn't be optimal.

Recognizing these two characteristics is the first step in designing a dynamic programming solution. If a problem exhibits both, dynamic programming can offer a significantly more efficient approach than naive recursive or brute-force methods.

Basic Analogy to Divide-and-Conquer Approaches

Dynamic programming shares some similarities with another common algorithmic paradigm: divide-and-conquer. Both strategies involve breaking down a large problem into smaller subproblems. However, there's a crucial distinction in how they handle these subproblems.

In a typical divide-and-conquer algorithm, such as Merge Sort or Quick Sort, the subproblems are generally independent. When the problem is divided, the subproblems are solved recursively, and their solutions are then combined to solve the original problem. The key here is that the subproblems usually don't overlap; each subproblem is distinct. For instance, when sorting an array using Merge Sort, the left half and the right half are sorted independently, and there's no commonality between the subproblems of sorting these two halves.

Dynamic programming, on the other hand, is specifically designed for situations where subproblems do overlap. If a divide-and-conquer approach were applied naively to such a problem, it would end up solving the same subproblems repeatedly, leading to inefficiency. Dynamic programming addresses this by storing the solutions to these overlapping subproblems, so each is computed only once. So, while both approaches break problems down, dynamic programming adds the crucial element of remembering past results to avoid redundant work when subproblems recur. You could think of dynamic programming as a divide-and-conquer strategy with an added "memory" or "caching" layer for efficiency when dealing with overlapping subproblems.


Core Components of Dynamic Programming

Understanding the mechanics of dynamic programming involves grasping a few essential components and techniques. These elements form the building blocks for constructing efficient DP solutions.

Memoization vs. Tabulation

There are two primary techniques for implementing dynamic programming solutions: memoization and tabulation. Both achieve the same goal of storing and reusing subproblem solutions but differ in their approach.

Memoization (Top-Down): This technique involves writing a recursive solution to the problem, just as one might initially approach it. The "memoization" part comes from adding a mechanism (like a lookup table or a hash map) to store the result of each subproblem when it's first computed. Before solving a subproblem, the function first checks whether the solution for that specific subproblem is already in the store. If it is, the stored result is returned immediately, avoiding re-computation. If not, the computation proceeds, and the result is stored before being returned. This approach is often more intuitive to design because it closely mirrors the natural recursive structure of the problem. However, it incurs the overhead of recursive function calls, and very deep recursion can exhaust the call stack.
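A minimal top-down sketch for Fibonacci in Python, using an explicit dictionary as the store. The function name `memo_fib` is illustrative.

```python
from typing import Dict, Optional


def memo_fib(n: int, memo: Optional[Dict[int, int]] = None) -> int:
    """Top-down DP: recurse as usual, but consult and fill `memo` along the way."""
    if memo is None:
        memo = {}
    if n in memo:            # already solved: reuse the stored answer
        return memo[n]
    if n < 2:                # base cases F(0) = 0, F(1) = 1
        result = n
    else:
        result = memo_fib(n - 1, memo) + memo_fib(n - 2, memo)
    memo[n] = result         # store before returning
    return result


print(memo_fib(30))  # 832040
```

Python's standard library can also do the caching automatically: decorating a plain recursive function with `functools.lru_cache(maxsize=None)` gives the same top-down behavior.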

Tabulation (Bottom-Up): In contrast, tabulation takes an iterative approach. It starts by solving the smallest possible subproblems and progressively builds up solutions to larger and larger subproblems. Typically, this involves filling a table (hence "tabulation") where each entry corresponds to the solution of a specific subproblem. The order of filling the table is crucial and must ensure that when the solution to a subproblem is being computed, the solutions to all its prerequisite smaller subproblems have already been computed and stored in the table. This method avoids recursion overhead and can sometimes be more efficient in terms of constant factors due to direct table access. However, it might require a more careful upfront analysis to determine the correct order of computation.
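A bottom-up sketch of the same Fibonacci computation in Python. The table is filled in index order, which guarantees that F(i-1) and F(i-2) are already stored when F(i) is computed. The function name is illustrative.

```python
def tab_fib(n: int) -> int:
    """Bottom-up DP: fill table[0..n] from the smallest subproblem upward."""
    if n < 2:
        return n
    table = [0] * (n + 1)
    table[1] = 1                    # base cases: table[0] = 0, table[1] = 1
    for i in range(2, n + 1):       # each entry depends only on earlier entries
        table[i] = table[i - 1] + table[i - 2]
    return table[n]


print(tab_fib(30))  # 832040
```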

The choice between memoization and tabulation often depends on the specific problem and personal preference. If all subproblems must be solved to reach the final solution, tabulation can be slightly more efficient. If only some subproblems are needed, memoization might be more efficient as it only solves what's necessary.


State Transition Diagrams

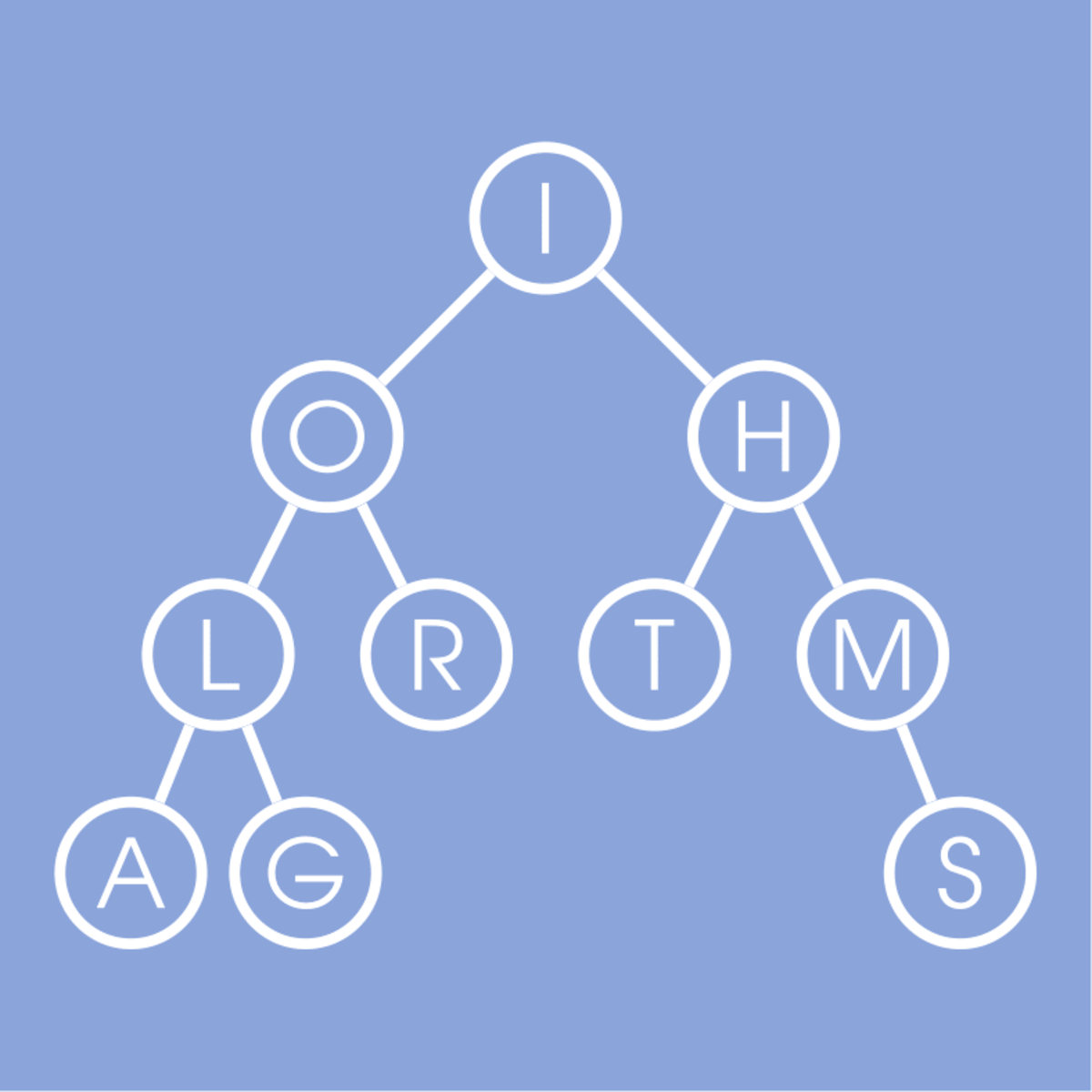

While not always explicitly drawn for every dynamic programming problem, the concept of a state transition diagram can be very helpful for visualizing the relationships between subproblems. In the context of dynamic programming, a "state" typically represents a specific subproblem. A state transition diagram would then show how the solution to one state (subproblem) depends on the solutions of other states (smaller subproblems).

Imagine each subproblem as a node in a graph. An edge from subproblem A to subproblem B would indicate that the solution to B is used to compute the solution to A. The tabulation method essentially processes these nodes in a dependency-respecting order (a topological order of this graph with its edges reversed), ensuring that all prerequisite nodes (smaller subproblems) are processed before any node that depends on them.
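A small sketch of this idea, assuming Python 3.9+ for the standard-library `graphlib` module. Each Fibonacci state is mapped to the states it depends on, `TopologicalSorter.static_order()` yields an order in which dependencies come first, and the table is filled in that order. The variable names are illustrative.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

n = 10
# Map each state k to the states its solution depends on (its predecessors).
deps = {k: ({k - 1, k - 2} if k >= 2 else set()) for k in range(n + 1)}

# static_order() emits every node after all of its predecessors.
order = list(TopologicalSorter(deps).static_order())

values = {}
for k in order:
    # Every dependency of k has already been stored when k is reached.
    values[k] = k if k < 2 else values[k - 1] + values[k - 2]

print(values[n])  # 55
```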

For example, in the Fibonacci sequence, F(n) depends on F(n-1) and F(n-2). F(n-1) depends on F(n-2) and F(n-3), and so on. A state transition diagram would show these dependencies. Visualizing this structure can help in defining the recurrence relation and in determining the correct order for a bottom-up (tabulation) approach. It can also highlight the overlapping nature of subproblems, reinforcing the need for dynamic programming.

Time-Space Complexity Tradeoffs

Dynamic programming often exemplifies a common theme in algorithm design: the time-space tradeoff. By using extra space to store the results of subproblems, dynamic programming algorithms can achieve significant reductions in computation time.

A naive recursive solution (without memoization) to a problem with overlapping subproblems might have an exponential time complexity because it recomputes the same work repeatedly. The space complexity for such a recursive solution is typically related to the maximum depth of the recursion stack.

When dynamic programming is applied (through either memoization or tabulation), the time complexity generally becomes proportional to the number of unique subproblems multiplied by the work done per subproblem, where looking up an already-solved subproblem counts as a constant-time operation rather than a fresh computation. For Fibonacci, for example, there are n + 1 subproblems, each combining two stored values in constant time, which gives O(n) time instead of exponential time. For many problems, this turns an exponential time complexity into a polynomial one. However, the speedup comes at the cost of extra space, because the solutions to all computed subproblems must be stored. The space complexity of a dynamic programming solution is therefore typically proportional to the number of subproblems.

In some cases, it's possible to optimize the space complexity of a dynamic programming solution. For example, if the solution to a current subproblem only depends on the solutions of a few immediately preceding subproblems, we might not need to store the entire table of solutions. We might only need to keep track of the solutions from the previous one or two "layers" of subproblems. This is often seen in problems like calculating Fibonacci numbers iteratively, where only the last two values are needed to compute the next.
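For Fibonacci, this space optimization amounts to keeping two variables instead of a whole table. A minimal sketch (the function name is illustrative):

```python
def fib_two_vars(n: int) -> int:
    """Bottom-up Fibonacci keeping only the last two values: O(n) time, O(1) extra space."""
    prev, curr = 0, 1            # F(0), F(1)
    for _ in range(n):
        prev, curr = curr, prev + curr
    return prev                  # after n steps, prev holds F(n)


print(fib_two_vars(30))  # 832040
```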

Understanding this tradeoff is crucial. While dynamic programming can make previously intractable problems solvable within reasonable time limits, one must also consider if the memory requirements are acceptable for the given constraints.


Recurrence Relations

A recurrence relation is at the heart of every dynamic programming solution. It's a mathematical equation or formula that defines the solution to a larger problem in terms of the solutions to its smaller subproblems. Establishing the correct recurrence relation is arguably the most critical and often the most challenging part of designing a dynamic programming algorithm.

The recurrence relation typically has two parts:

- Recursive Case(s): This defines how to calculate the solution for a given subproblem using the solutions of one or more smaller, prerequisite subproblems. For example, in the Fibonacci sequence, the recursive case is F(n) = F(n-1) + F(n-2).

- Base Case(s): These define the solutions for the smallest, simplest subproblems that don't depend on any other subproblems. Base cases are essential to terminate the recursion (in a top-down memoized approach) or to provide the starting point for iteration (in a bottom-up tabulated approach). For Fibonacci, the base cases are typically F(0) = 0 and F(1) = 1.

Developing the recurrence relation involves carefully analyzing the problem structure. One often asks: "If I have the optimal solutions to slightly smaller versions of this problem, how can I combine them to get the optimal solution for the current problem?" This thought process helps in identifying the dependencies between subproblems. Once the recurrence relation (including base cases) is correctly formulated, implementing the dynamic programming solution, whether through memoization or tabulation, becomes a more straightforward process.


Dynamic Programming in Algorithm Design

Dynamic programming is not just a theoretical concept; it's a practical tool used to design algorithms for a wide array of problems. Its ability to optimize solutions by breaking them down into manageable, overlapping subproblems makes it invaluable in many computational scenarios.

Classic Problems: Knapsack, Shortest Path, Sequence Alignment

Several classic problems in computer science are famously solved using dynamic programming, serving as excellent illustrations of the technique's power and versatility.

The Knapsack Problem: Imagine you have a knapsack with a limited weight capacity and a set of items, each with its own weight and value. The goal is to choose a subset of items to put into the knapsack such that the total value is maximized without exceeding the weight limit. Dynamic programming can solve this by considering subproblems: what is the maximum value achievable with a certain weight capacity using only a subset of the items? The recurrence relation builds upon solutions to smaller capacities and fewer items. There are variations like the 0/1 Knapsack (each item can either be taken or left) and the Unbounded Knapsack (multiple instances of an item can be taken).
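A minimal tabulated sketch of the 0/1 variant in Python. It uses a one-dimensional table indexed by capacity, a common space-saving form of the full items-by-capacity table, and assumes item weights are non-negative integers. The function name is illustrative.

```python
from typing import List


def knapsack_01(weights: List[int], values: List[int], capacity: int) -> int:
    """Maximum total value of a subset of items whose total weight is <= capacity.

    best[c] = best value achievable with capacity c using the items processed so far.
    """
    best = [0] * (capacity + 1)
    for w, v in zip(weights, values):
        # Iterate capacities downward so each item is counted at most once (0/1).
        for c in range(capacity, w - 1, -1):
            best[c] = max(best[c], best[c - w] + v)
    return best[capacity]


print(knapsack_01([1, 3, 4, 5], [1, 4, 5, 7], 7))  # 9 (items of weight 3 and 4)
```

Iterating capacities upward instead would let an item be reused, which turns this into the Unbounded Knapsack recurrence.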

Shortest Path Problems: Finding the shortest path between two nodes in a weighted graph is another common application. Algorithms like the Bellman-Ford algorithm and the Floyd-Warshall algorithm utilize dynamic programming. For example, the Bellman-Ford algorithm iteratively relaxes edges, finding shorter paths by considering paths with an increasing number of edges. The underlying principle is that the shortest path to a node using at most k edges can be found using information about shortest paths using at most k-1 edges. Dijkstra's algorithm, while often categorized as a greedy algorithm, can also be viewed from a dynamic programming perspective as it progressively finds the shortest path to each node.
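A sketch of Bellman-Ford over an edge list in Python. The node numbering, the `(u, v, weight)` edge layout, and returning `None` when a reachable negative cycle exists are illustrative choices. Each pass relaxes every edge once, so after pass k every node's estimate is at most the length of its shortest path that uses at most k edges.

```python
from typing import List, Optional, Tuple

Edge = Tuple[int, int, float]  # (u, v, weight) for a directed edge u -> v


def bellman_ford(num_nodes: int, edges: List[Edge], source: int) -> Optional[List[float]]:
    """Shortest distances from `source`, or None if a reachable negative cycle exists."""
    INF = float("inf")
    dist = [INF] * num_nodes
    dist[source] = 0.0
    for _ in range(num_nodes - 1):       # a shortest simple path has <= n - 1 edges
        updated = False
        for u, v, w in edges:
            if dist[u] + w < dist[v]:    # relax edge u -> v
                dist[v] = dist[u] + w
                updated = True
        if not updated:                  # early exit: estimates have stabilized
            break
    for u, v, w in edges:                # any further improvement means a negative cycle
        if dist[u] + w < dist[v]:
            return None
    return dist


edges = [(0, 1, 4), (0, 2, 5), (1, 2, -3), (2, 3, 2), (1, 3, 6)]
print(bellman_ford(4, edges, 0))  # [0.0, 4.0, 1.0, 3.0]
```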

Sequence Alignment: In bioinformatics, dynamic programming is crucial for aligning biological sequences, such as DNA or protein sequences. The goal is to find the best alignment between two sequences by introducing gaps to maximize similarity (or minimize differences). The Needleman-Wunsch algorithm (for global alignment) and the Smith-Waterman algorithm (for local alignment) are classic DP solutions. They build a matrix in which each cell (i, j) stores the optimal alignment score for the first i characters of one sequence and the first j characters of the other.
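A minimal sketch of the Needleman-Wunsch score computation in Python. The scoring scheme (match +1, mismatch -1, linear gap penalty -1) is an illustrative assumption; practical aligners typically use substitution matrices and affine gap penalties. Only the optimal score is returned; recovering the alignment itself would need a traceback through the matrix.

```python
def needleman_wunsch(a: str, b: str, match: int = 1, mismatch: int = -1, gap: int = -1) -> int:
    """Optimal global alignment score of a and b.

    score[i][j] = best score for aligning the first i characters of a
    with the first j characters of b.
    """
    n, m = len(a), len(b)
    score = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        score[i][0] = i * gap            # a[:i] aligned entirely against gaps
    for j in range(1, m + 1):
        score[0][j] = j * gap            # b[:j] aligned entirely against gaps
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            diag = score[i - 1][j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            up = score[i - 1][j] + gap   # a[i-1] aligned to a gap
            left = score[i][j - 1] + gap # b[j-1] aligned to a gap
            score[i][j] = max(diag, up, left)
    return score[n][m]


print(needleman_wunsch("ACGT", "AGT"))  # 2 (A C G T over A - G T)
```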

Other well-known problems solvable with dynamic programming include the Longest Common Subsequence (LCS), Edit Distance (calculating the minimum operations to transform one string into another), Matrix Chain Multiplication, and various counting problems (e.g., counting the ways to make change for a certain amount using given coin denominations).
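As one example from this list, counting the ways to make change can be tabulated in a few lines. This sketch assumes an unlimited supply of each coin and counts combinations, so the order in which coins are used does not matter. The function name is illustrative.

```python
from typing import List


def count_change(amount: int, coins: List[int]) -> int:
    """Number of ways to make `amount` from unlimited coins, ignoring order.

    ways[a] = number of combinations of the coins processed so far that sum to a.
    """
    ways = [0] * (amount + 1)
    ways[0] = 1                       # one way to make 0: use no coins
    for coin in coins:                # coins in the outer loop, so order is ignored
        for a in range(coin, amount + 1):
            ways[a] += ways[a - coin]
    return ways[amount]


print(count_change(5, [1, 2, 5]))  # 4
```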


Comparison to Greedy Algorithms

Dynamic programming and greedy algorithms are both techniques used for optimization problems, and both often rely on the property of optimal substructure. However, they differ significantly in their approach to making choices.

A greedy algorithm makes the choice that seems best at the current moment, without considering future consequences or revisiting past choices. It makes a locally optimal choice in the hope that this will lead to a globally optimal solution. For some problems, this strategy works and yields the correct optimal solution (e.g., Dijkstra's algorithm for shortest paths with non-negative edge weights, Prim's and Kruskal's algorithms for Minimum Spanning Trees). However, for many other problems, a greedy approach can lead to suboptimal solutions because an early local optimum might prevent reaching the true global optimum.

Dynamic programming, on the other hand, is more exhaustive. When faced with a choice, it typically explores all possible choices (or at least all relevant ones based on the recurrence relation). It solves all necessary subproblems and then combines their solutions to find the overall optimal solution. Dynamic programming essentially makes decisions by considering the solutions to subproblems, ensuring that the choice made at each step contributes to the globally optimal solution. It doesn't commit to a single "greedy" choice without exploring alternatives if the problem structure requires it.

The key difference lies in how they use the optimal substructure property. A greedy algorithm makes a choice and then solves the resulting subproblem. Dynamic programming solves subproblems first and then uses their solutions to make a choice for the larger problem. If a problem exhibits both optimal substructure and overlapping subproblems, dynamic programming is generally applicable. If, in addition, the problem has the "greedy choice property" (meaning a locally optimal choice always leads to a globally optimal solution), then a simpler and often faster greedy algorithm can be used. Determining whether a greedy approach will work often requires a proof; if not, dynamic programming is a more robust alternative for finding the optimal solution.
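Coin change shows the difference clearly. In this sketch the denominations {1, 3, 4} are a hypothetical coin system chosen because the greedy choice fails for it: greedy takes 4 + 1 + 1 for an amount of 6, while the DP recurrence best[a] = 1 + min over coins c of best[a - c] finds 3 + 3. Both function names are illustrative.

```python
from typing import List, Optional


def greedy_coins(amount: int, coins: List[int]) -> Optional[int]:
    """Repeatedly take the largest coin that fits (a locally optimal choice)."""
    count = 0
    for coin in sorted(coins, reverse=True):
        count += amount // coin
        amount %= coin
    return count if amount == 0 else None


def dp_min_coins(amount: int, coins: List[int]) -> Optional[int]:
    """Fewest coins summing to `amount` (unlimited supply), or None if impossible."""
    INF = float("inf")
    best = [0] + [INF] * amount           # best[a] = fewest coins for amount a
    for a in range(1, amount + 1):
        for coin in coins:
            if coin <= a and best[a - coin] + 1 < best[a]:
                best[a] = best[a - coin] + 1
    return None if best[amount] == INF else int(best[amount])


print(greedy_coins(6, [1, 3, 4]))  # 3  (4 + 1 + 1)
print(dp_min_coins(6, [1, 3, 4]))  # 2  (3 + 3)
```

For coin systems such as {1, 5, 10, 25} the greedy answer happens to match the DP answer, which is exactly the kind of property that has to be proven before the simpler greedy algorithm can be trusted.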


Approximation Techniques

While dynamic programming can provide exact optimal solutions, some problems are so complex or large-scale that even a polynomial-time DP solution might be too slow or require too much memory. This is often due to the "curse of dimensionality," where the number of states (subproblems) grows exponentially with the number of dimensions or variables in the problem. In such scenarios, approximate dynamic programming (ADP) techniques become valuable.

ADP methods aim to find near-optimal solutions by using approximations, typically for the value function (which represents the optimal score for a given state) or the policy (the rule for making decisions). Instead of computing and storing the exact value for every possible state, ADP might use a function approximator (like a linear model, neural network, or decision tree) to estimate these values. This allows the approach to handle problems with very large or even continuous state spaces where exact DP is infeasible.

Key ideas in ADP include:

- Value Function Approximation (VFA): Representing the value function using a more compact form, often parameterized. For example, instead of a huge table, the value function might be a weighted sum of a set of basis functions or the output of a neural network.

- Policy Iteration and Value Iteration with Approximations: Adapting standard DP algorithms like policy iteration and value iteration to work with these approximate representations.

- Simulation and Learning: Many ADP techniques involve simulating the system and learning the value function or policy from experience, drawing connections to reinforcement learning.

Approximate dynamic programming is a broad field with many different algorithmic strategies. It's particularly useful for stochastic optimization problems where decisions must be made over time in the presence of uncertainty. While designing effective ADP algorithms can be challenging and may require domain-specific knowledge, they offer a pathway to tackle extremely complex optimization tasks that would otherwise be intractable.


Real-World System Optimizations

The principles of dynamic programming extend far beyond academic exercises and find application in a multitude of real-world system optimizations. Its ability to break down complex decision-making processes into sequential steps makes it suitable for various planning and resource allocation problems.

In operations research, dynamic programming is used for inventory control (determining optimal stock levels over time), resource allocation (assigning limited resources to various activities to maximize return), scheduling (e.g., project scheduling, machine scheduling to minimize completion time or costs), and network optimization problems like finding reliable paths or designing optimal network flows. Industrial Engineering often leverages these techniques.

In control theory, dynamic programming (often in the form of Bellman equations) is fundamental to optimal control, where the goal is to find a control policy that minimizes a cost function or maximizes a reward over time for a dynamic system. This has applications in robotics, aerospace engineering (e.g., trajectory optimization), and process control.

Bioinformatics heavily relies on DP for tasks like gene and protein sequence alignment, as discussed earlier, which is crucial for understanding evolutionary relationships and functional similarities. RNA structure prediction also uses DP-like approaches.

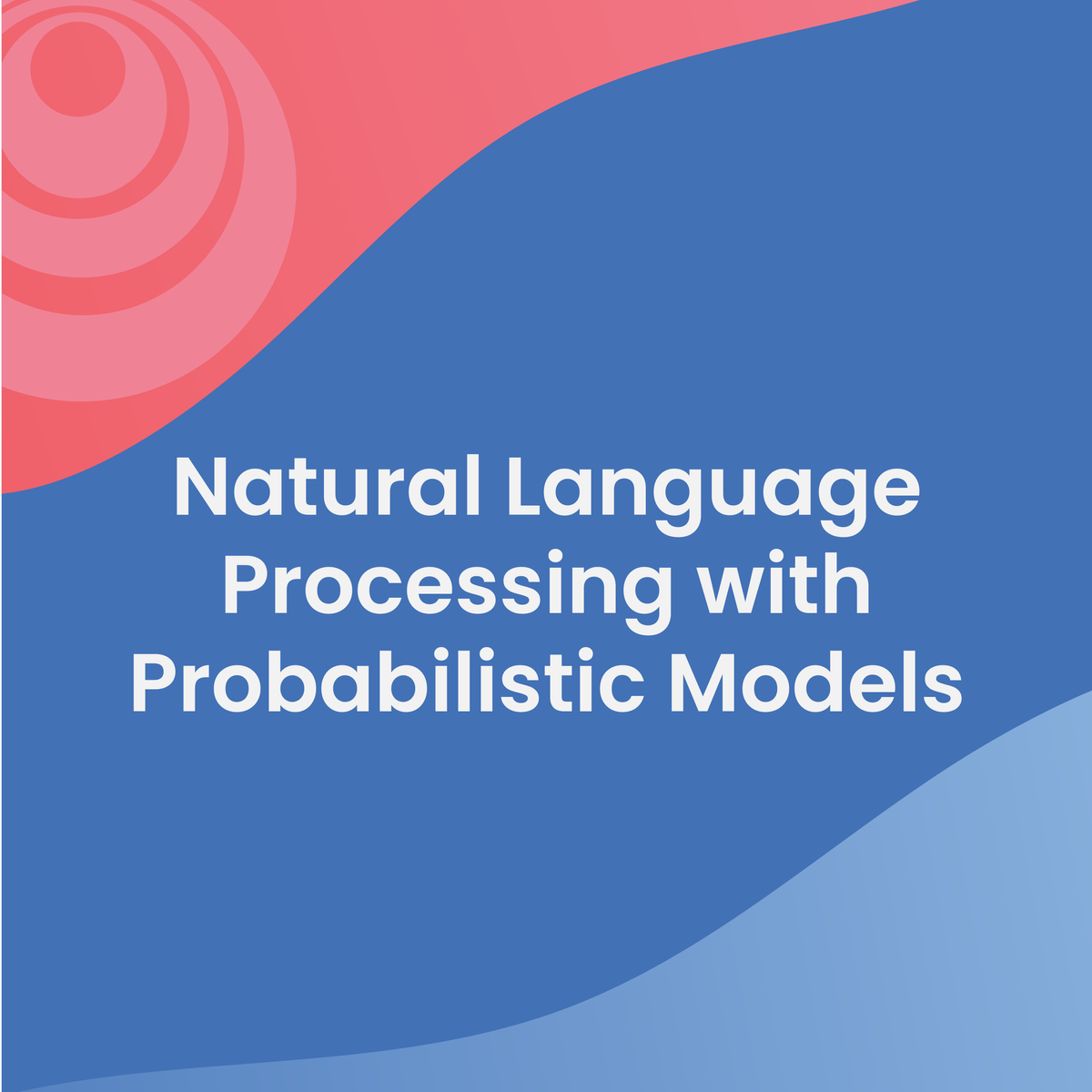

Even in areas like natural language processing, DP concepts appear. For instance, the Viterbi algorithm, used for tasks like part-of-speech tagging in Hidden Markov Models, is a dynamic programming algorithm. Algorithms for word wrapping text to fit lines optimally also use DP.
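A compact sketch of the Viterbi algorithm for a hidden Markov model, in Python. The two-state model below (states `Healthy` and `Fever`, observations `normal`, `cold`, `dizzy`) is a toy with made-up probabilities, not a part-of-speech tagger; in a tagging setting the states would be tags and the observations would be words. The sketch assumes at least one observation.

```python
from typing import Dict, List, Sequence, Tuple


def viterbi(
    observations: Sequence[str],
    states: Sequence[str],
    start_p: Dict[str, float],
    trans_p: Dict[str, Dict[str, float]],
    emit_p: Dict[str, Dict[str, float]],
) -> Tuple[float, List[str]]:
    """Most probable hidden-state path and its probability.

    best[t][s] = highest probability of any state path ending in state s at step t.
    """
    best = [{s: start_p[s] * emit_p[s][observations[0]] for s in states}]
    back: List[Dict[str, str]] = [{}]
    for t in range(1, len(observations)):
        best.append({})
        back.append({})
        for s in states:
            # Best predecessor r for state s at step t (reuses stored best[t - 1]).
            prev, prob = max(
                ((r, best[t - 1][r] * trans_p[r][s]) for r in states),
                key=lambda pair: pair[1],
            )
            best[t][s] = prob * emit_p[s][observations[t]]
            back[t][s] = prev
    # Trace back pointers from the most probable final state.
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for t in range(len(observations) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return best[-1][last], path


states = ["Healthy", "Fever"]
start_p = {"Healthy": 0.6, "Fever": 0.4}
trans_p = {"Healthy": {"Healthy": 0.7, "Fever": 0.3}, "Fever": {"Healthy": 0.4, "Fever": 0.6}}
emit_p = {
    "Healthy": {"normal": 0.5, "cold": 0.4, "dizzy": 0.1},
    "Fever": {"normal": 0.1, "cold": 0.3, "dizzy": 0.6},
}
print(viterbi(["normal", "cold", "dizzy"], states, start_p, trans_p, emit_p))
# (0.01512..., ['Healthy', 'Healthy', 'Fever']), up to floating-point rounding
```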

The core idea of breaking a problem into stages, solving subproblems, and combining them to find an overall optimal solution is a versatile framework that continues to find new applications as computational power increases and new problem domains emerge.


Dynamic Programming in Financial Markets

The principles of dynamic programming, focused on sequential decision-making under uncertainty and optimization, naturally find applications in the complex and evolving world of Finance & Economics. While the highly stochastic and often non-stationary nature of financial markets presents unique challenges, DP provides a valuable framework for modeling and solving various financial problems.

Option Pricing Models

Dynamic programming is a cornerstone in the valuation of American-style options. An American option allows the holder to exercise the option at any time up to and including the expiration date. This early exercise feature introduces a decision-making problem at each point in time: should the option be exercised now, or held for a potentially better opportunity later? This is precisely the type of sequential decision problem that dynamic programming excels at.

The core idea is to work backward from the option's expiration date. At expiration, the value of the option is known (e.g., for a call option, it's the maximum of zero and the stock price minus the strike price). Then, at the period just before expiration, one compares the value of holding the option with the value of exercising it. The value of holding is the expected value of the option in the next period, computed under risk-neutral probabilities and discounted back to the current period. This process is repeated, stepping back in time, until the current date is reached. The resulting value at each time step, given the underlying asset price, forms the basis of the option's fair value. Binomial and trinomial tree models for option pricing are essentially discrete-time dynamic programming methods.
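A hedged sketch of this backward induction on a binomial tree, in Python. It assumes the Cox-Ross-Rubinstein parameterization (up factor exp(sigma * sqrt(dt)), down factor its reciprocal), a non-dividend-paying underlying, and a constant risk-free rate; the function name and parameters are illustrative, and the source does not prescribe a particular tree. At each node the holding value is the risk-neutral expected value one step ahead, discounted one step, and an American option takes the larger of holding and exercising.

```python
import math


def binomial_option(
    spot: float, strike: float, rate: float, sigma: float, maturity: float,
    steps: int, is_call: bool = False, american: bool = True,
) -> float:
    """Price an option on a Cox-Ross-Rubinstein binomial tree by backward induction."""
    dt = maturity / steps
    up = math.exp(sigma * math.sqrt(dt))
    down = 1.0 / up
    disc = math.exp(-rate * dt)
    p = (math.exp(rate * dt) - down) / (up - down)   # risk-neutral up probability

    def payoff(price: float) -> float:
        return max(price - strike, 0.0) if is_call else max(strike - price, 0.0)

    # Option values at expiry; node j has j up-moves.
    values = [payoff(spot * up**j * down**(steps - j)) for j in range(steps + 1)]
    # Step backward: level i has i + 1 nodes, each using two children from level i + 1.
    for i in range(steps - 1, -1, -1):
        for j in range(i + 1):
            hold = disc * (p * values[j + 1] + (1 - p) * values[j])
            if american:
                exercise = payoff(spot * up**j * down**(i - j))
                values[j] = max(hold, exercise)       # early-exercise decision
            else:
                values[j] = hold
    return values[0]


args = (100.0, 100.0, 0.05, 0.2, 1.0, 200)
print(binomial_option(*args, is_call=False, american=True))   # American put
print(binomial_option(*args, is_call=False, american=False))  # European put (lower)
```

Without dividends, the American call computed this way matches the European call, consistent with early exercise of such a call never being optimal.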

While closed-form solutions like the Black-Scholes model exist for European options (which can only be exercised at expiration), dynamic programming provides the numerical framework necessary for valuing the more complex American options and other exotic derivatives with early exercise features.

Portfolio Optimization

Dynamic programming can be applied to multi-period portfolio optimization problems. The classic Markowitz mean-variance optimization is typically a single-period model. However, investors often have longer horizons and may need to rebalance their portfolios over time in response to changing market conditions, income, or consumption needs. Dynamic programming offers a framework to determine the optimal sequence of portfolio allocations over multiple periods to maximize expected utility of terminal wealth or to achieve other financial goals.

The state in such a model could be the current wealth and potentially other relevant factors (like age or market state variables). The decision at each period is how to allocate wealth among different asset classes. The objective function would typically involve the utility of consumption and/or terminal wealth. The Bellman equation can be set up to solve for the optimal consumption and investment policy at each stage. However, the "curse of dimensionality" can be a significant challenge here, especially if there are many asset classes or state variables. Approximate dynamic programming techniques are often explored to tackle these more complex, realistic portfolio optimization problems.


Risk Management Applications

Dynamic programming principles can also inform various risk management strategies. For example, in determining optimal hedging strategies for a portfolio over time, DP can help decide the sequence of trades to minimize risk (e.g., variance of portfolio value) subject to certain constraints. This involves considering the current portfolio composition, market conditions, and the costs of rebalancing.

Another application lies in capital allocation for financial institutions. Deciding how much capital to allocate to different business units or risk-taking activities over time, considering the potential returns and risks, can be framed as a dynamic programming problem. The goal might be to maximize the institution's overall risk-adjusted return or to maintain solvency with a high probability over a given horizon.

Furthermore, in the context of algorithmic trading, dynamic programming can be used to optimize execution strategies. For instance, when a large order needs to be executed, breaking it into smaller pieces and timing their execution to minimize market impact while achieving a target price can be modeled as a DP problem. The state could involve the remaining shares to be traded and current market conditions, and the decision is how many shares to trade in the next small time interval.
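A deliberately simplified sketch of such an execution DP in Python. The cost model is a hypothetical assumption (trading x shares in one interval costs x squared, a crude stand-in for market impact, with no price dynamics or risk term), so it only illustrates the state (shares remaining, intervals left) and the decision (shares to trade now). The function name is illustrative.

```python
from functools import lru_cache


def min_execution_cost(total_shares: int, periods: int) -> float:
    """Minimum total cost to trade `total_shares` within `periods` intervals.

    Hypothetical toy cost: trading x shares in one interval costs x * x.
    """

    @lru_cache(maxsize=None)
    def cost(remaining: int, periods_left: int) -> float:
        if periods_left == 0:
            return 0 if remaining == 0 else float("inf")  # order must be finished
        # Decide how many shares to trade now; the rest is a smaller subproblem.
        return min(x * x + cost(remaining - x, periods_left - 1)
                   for x in range(remaining + 1))

    return cost(total_shares, periods)


print(min_execution_cost(10, 5))  # 20 (2 shares per interval)
```

Under this convex toy cost the optimum simply splits the order evenly; a more realistic model would add state variables such as current prices, but would still be solved by the same backward recursion over remaining shares and time.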

Limitations in Volatile Markets

While dynamic programming offers a powerful theoretical framework, its application in financial markets, especially highly volatile ones, comes with significant limitations. One major challenge is the "curse of dimensionality," as previously mentioned. Financial problems often involve many state variables (e.g., prices of multiple assets, economic indicators, individual wealth) and many possible actions, making exact DP solutions computationally intractable.

Another critical issue is model misspecification. DP solutions are optimal only with respect to the assumed model of asset price dynamics, utility functions, and transaction costs. Financial markets are notoriously difficult to model accurately. Asset returns are not perfectly predictable, volatility can change unexpectedly, and extreme events ("black swans") can occur. If the underlying model does not capture these realities well, the "optimal" policy derived from DP might perform poorly in practice. The parameters of these models (e.g., expected returns, volatilities, correlations) also need to be estimated, and estimation errors can lead to suboptimal decisions.

The assumption of rational, utility-maximizing agents, often implicit in DP formulations, may not always hold. Behavioral biases can significantly influence market dynamics and individual investment decisions. Finally, the computational burden, even for simplified models, can be substantial, making real-time application difficult for complex strategies. Approximate dynamic programming methods aim to address some of these computational issues, but they introduce their own set of approximations and potential inaccuracies.

Formal Education Pathways

For individuals seeking a structured approach to learning dynamic programming and related concepts, formal education offers several pathways. These typically involve university-level coursework and can extend into advanced research for those deeply interested in the field.

Undergraduate CS Curriculum Integration

Dynamic programming is a staple topic in most undergraduate Computer Science curricula, typically introduced within courses on algorithms and data structures. Students usually encounter DP after learning fundamental concepts like recursion, basic data structures (arrays, lists, trees, graphs), and algorithm analysis (Big O notation).

The introduction often starts with motivating examples like the Fibonacci sequence, illustrating how a naive recursive approach can be inefficient due to recomputing overlapping subproblems. Then, the core principles of optimal substructure and overlapping subproblems are explained, followed by the two main implementation techniques: memoization (top-down) and tabulation (bottom-up). Classic DP problems such as the Knapsack problem, Longest Common Subsequence, Edit Distance, and basic shortest path algorithms are commonly used to solidify understanding and demonstrate the application of these techniques. Emphasis is placed on identifying the subproblem structure, formulating the recurrence relation, and correctly implementing the base cases. Students are also taught to analyze the time and space complexity of their DP solutions.
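
The Fibonacci progression described above can be sketched in Python as three versions: naive recursion, top-down memoization, and bottom-up tabulation. The tabulated version keeps only the last two entries of the table, a common space optimization.

```python
from functools import lru_cache


def fib_naive(n):
    """Plain recursion: recomputes the same subproblems exponentially often."""
    return n if n < 2 else fib_naive(n - 1) + fib_naive(n - 2)


@lru_cache(maxsize=None)
def fib_memo(n):
    """Top-down (memoization): each n is computed once, then served from the cache."""
    return n if n < 2 else fib_memo(n - 1) + fib_memo(n - 2)


def fib_tab(n):
    """Bottom-up (tabulation): build from the base cases upward in O(n) time.

    Only the two most recent values are kept, so extra space is O(1).
    """
    if n < 2:
        return n
    prev, curr = 0, 1            # F(0), F(1)
    for _ in range(2, n + 1):
        prev, curr = curr, prev + curr
    return curr


print(fib_naive(20), fib_memo(20), fib_tab(20))  # 6765 6765 6765
```

Calling `fib_naive(40)` is already noticeably slow, while the other two versions handle much larger `n` immediately.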

Graduate-Level Extensions

At the graduate level, the study of dynamic programming often becomes more specialized and delves into more advanced topics and applications. Courses in advanced algorithms, optimization techniques, or specialized areas like Artificial Intelligence (particularly reinforcement learning), operations research, or theoretical computer science will likely revisit DP with greater mathematical rigor and complexity.

Graduate studies might explore:

- Advanced DP Techniques: This could include DP on trees, DP with bitmasking, digit DP, or more complex state definitions and transitions.

- Probabilistic Dynamic Programming: Where transitions or costs are stochastic, leading to problems in areas like Markov decision processes (MDPs), which are fundamental to reinforcement learning.

- Approximate Dynamic Programming (ADP): As discussed earlier, for problems suffering from the curse of dimensionality, graduate courses may cover various ADP methods, including value function approximation, policy iteration with function approximators, and connections to simulation-based optimization and machine learning.

- Specific Application Domains: Deeper dives into how DP is used in fields like bioinformatics (e.g., advanced sequence alignment models, RNA folding), economics (e.g., optimal growth models, search theory), control theory, or network optimization.

- Theoretical Aspects: Formal analysis of the properties of problems solvable by DP, complexity bounds, and connections to other areas of computational complexity theory.
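
DP with bitmasking, the first technique in the list above, is commonly illustrated by the Held-Karp algorithm for the traveling salesman problem. The sketch below encodes the set of visited cities as an integer bitmask; the function name `held_karp` and the sample distance matrix are illustrative, and at least two cities are assumed.

```python
from itertools import combinations


def held_karp(dist):
    """Shortest tour visiting every city once, starting and ending at city 0.

    dp[(mask, j)] = cheapest path that starts at 0, visits exactly the cities in
    bitmask `mask` (city 0 excluded), and ends at city j. O(2^n * n^2) time.
    """
    n = len(dist)
    dp = {}
    for j in range(1, n):
        dp[(1 << j, j)] = dist[0][j]             # base case: go straight from 0 to j
    for size in range(2, n):
        for subset in combinations(range(1, n), size):
            mask = 0
            for city in subset:
                mask |= 1 << city
            for j in subset:
                prev_mask = mask & ~(1 << j)     # same set without the final city j
                dp[(mask, j)] = min(
                    dp[(prev_mask, k)] + dist[k][j] for k in subset if k != j
                )
    full = (1 << n) - 2                          # every city except 0
    return min(dp[(full, j)] + dist[j][0] for j in range(1, n))


distances = [[0, 10, 15, 20], [10, 0, 35, 25], [15, 35, 0, 30], [20, 25, 30, 0]]
print(held_karp(distances))  # 80
```

The state space still grows as 2^n, so this is far better than trying all n! orderings but remains exponential.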

Research at this level often involves developing new DP formulations for unsolved problems or creating more efficient ADP techniques for large-scale applications.

Research Opportunities

Dynamic programming, despite its long history, continues to be an active area of research, particularly in the realm of approximate dynamic programming (ADP) and its interplay with machine learning and large-scale optimization. The "curse of dimensionality" remains a central challenge, and much research is focused on developing more scalable and robust ADP methods.

Opportunities for research exist in:

- Novel Approximation Architectures: Exploring new ways to approximate value functions or policies, for instance, using advanced deep learning architectures, kernel methods, or other non-parametric techniques.

- Theoretical Understanding of ADP: Developing better theoretical guarantees for the performance of different ADP algorithms, including convergence rates and error bounds.

- ADP for Specific Complex Systems: Applying and tailoring ADP techniques to solve challenging problems in areas like sustainable energy systems, smart grids, complex logistics and supply chain management, personalized medicine, and robust financial modeling.

- Reinforcement Learning (RL): RL is deeply connected to dynamic programming, and many advancements in RL (like deep Q-networks, actor-critic methods) can be seen as forms of approximate dynamic programming. Research here focuses on sample efficiency, exploration-exploitation trade-offs, and applying RL to real-world control problems.

- Distributed and Parallel DP: Developing methods to solve large DP problems by distributing the computation across multiple processors or machines.

- Integration with Other Optimization Techniques: Combining DP with other methods like mathematical programming (linear, integer programming), metaheuristics, or simulation-based optimization to tackle hybrid problems.

Researchers in computer science, operations research, engineering, economics, and applied mathematics often contribute to these areas. The increasing availability of large datasets and computational power continues to open new avenues for applying and advancing dynamic programming concepts.

Math Prerequisites (Discrete, Calculus)

A solid mathematical foundation is essential for a deep understanding of dynamic programming and for effectively applying it, especially at advanced levels. Key prerequisite areas include:

Discrete Mathematics: This is arguably the most crucial prerequisite.

- Combinatorics: Many DP problems involve counting or finding optimal arrangements, making combinatorial reasoning essential. Understanding permutations, combinations, and recurrence relations (which are foundational to DP) is vital.

- Graph Theory: Numerous DP problems can be modeled using graphs (e.g., shortest path problems, DP on trees). Familiarity with graph terminology, representations, and basic graph algorithms is very helpful.

- Set Theory and Logic: For precise problem formulation and proof techniques.
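
As a small illustration of how a combinatorial recurrence becomes a DP table, here is Pascal's rule C(i, k) = C(i-1, k-1) + C(i-1, k) tabulated row by row in Python. The function name `binomial_table` is hypothetical.

```python
def binomial_table(n):
    """Pascal's rule C(i, k) = C(i-1, k-1) + C(i-1, k), tabulated row by row."""
    table = [[0] * (n + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        table[i][0] = 1                      # base case: one way to choose nothing
        for k in range(1, i + 1):
            table[i][k] = table[i - 1][k - 1] + table[i - 1][k]
    return table


print(binomial_table(5)[5][:6])  # [1, 5, 10, 10, 5, 1]
```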

Calculus (especially for advanced topics):

- Limits and Series: Useful for understanding the convergence of iterative DP algorithms and for analyzing the behavior of value functions.

- Derivatives and Optimization: In continuous-time DP or when dealing with continuous state/action spaces (often encountered in control theory and economics), calculus is fundamental for deriving optimality conditions (like the Hamilton-Jacobi-Bellman equation). Understanding optimization concepts like finding maxima/minima using derivatives is also relevant.
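
To make the convergence point concrete, here is a minimal value-iteration sketch in Python. With a discount factor below 1 the Bellman update is a contraction, so the change between successive value estimates shrinks geometrically and a simple tolerance test terminates. The two-state MDP (states "A" and "B", its rewards and moves) is invented for illustration.

```python
def value_iteration(states, actions, transition, reward, gamma=0.9, tol=1e-8):
    """Iterate V <- max_a [r(s, a) + gamma * sum_s' P(s'|s, a) V(s')] to a fixed point.

    transition(s, a) returns a list of (probability, next_state) pairs.
    """
    values = {s: 0.0 for s in states}
    while True:
        new_values = {
            s: max(
                reward(s, a) + gamma * sum(p * values[s2] for p, s2 in transition(s, a))
                for a in actions
            )
            for s in states
        }
        change = max(abs(new_values[s] - values[s]) for s in states)
        values = new_values
        if change < tol:                 # successive changes shrink by a factor <= gamma
            return values


# Hypothetical two-state MDP with deterministic moves.
NEXT = {("A", "stay"): "A", ("A", "move"): "B", ("B", "stay"): "B", ("B", "move"): "A"}
REWARD = {("A", "stay"): 0.0, ("A", "move"): -1.0, ("B", "stay"): 2.0, ("B", "move"): 0.0}

v = value_iteration(
    states=["A", "B"],
    actions=["stay", "move"],
    transition=lambda s, a: [(1.0, NEXT[(s, a)])],
    reward=lambda s, a: REWARD[(s, a)],
)
print(v)  # approximately {'A': 17.0, 'B': 20.0}
```

Staying in "B" forever is worth 2 / (1 - 0.9) = 20, and paying 1 to move there from "A" is worth -1 + 0.9 * 20 = 17.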

Probability and Statistics:

- Basic Probability Theory: Essential for stochastic dynamic programming, where transitions or rewards are uncertain. Understanding concepts like expected values is key.

- Statistical Modeling: Useful if dealing with ADP techniques that involve learning from data or estimating parameters of a model.

Linear Algebra:

- Vectors and Matrices: Many DP problems, especially those involving tabulation, can be represented using matrices or arrays. Linear algebra is also crucial for understanding some function approximation techniques used in ADP.

While basic DP problems can be approached with a good grasp of recursion and basic algebra, a deeper engagement with the theory, advanced applications, and research in dynamic programming will benefit significantly from a strong background in these mathematical areas. OpenCourser offers a wide range of courses in Mathematics to build this foundation.

Self-Directed Learning Strategies

For those who prefer to learn at their own pace or are looking to supplement formal education, self-directed learning offers a flexible path to mastering dynamic programming. This approach requires discipline and a proactive mindset but can be highly effective, especially with the wealth of online resources available today.

OpenCourser is an excellent starting point for self-directed learners, providing a vast catalog of online courses on dynamic programming from various providers. The platform's features, such as detailed course information, syllabi, user reviews, and the ability to save courses to a list, can help learners structure their own learning journey. The OpenCourser Learner's Guide also offers valuable tips on how to make the most of online learning, create a curriculum, and stay motivated.

Project-Based Learning Approaches

One of the most effective ways to solidify understanding in dynamic programming is through project-based learning. Instead of just passively consuming material, actively applying DP concepts to solve concrete problems can significantly deepen comprehension and build practical skills.

Start with well-defined, smaller projects. For instance, implement solutions to classic DP problems like the Fibonacci sequence, knapsack problem (0/1 and unbounded), longest common subsequence, edit distance, or coin change problem from scratch. Focus not just on getting the code to work, but also on understanding the state definition, recurrence relation, base cases, and the choice between memoization and tabulation. Try to analyze the time and space complexity of your solutions.
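
As a starting point for one of these projects, a bottom-up 0/1 knapsack might look like the following Python sketch. The one-dimensional table and the downward loop over capacities are a common idiom; the function name is illustrative.

```python
def knapsack_01(weights, values, capacity):
    """Max total value with each item used at most once (bottom-up, O(n * capacity))."""
    best = [0] * (capacity + 1)       # best[c]: max value achievable with capacity c
    for w, v in zip(weights, values):
        # Iterate capacities downward so each item is counted at most once;
        # iterating upward instead would solve the unbounded knapsack.
        for c in range(capacity, w - 1, -1):
            best[c] = max(best[c], best[c - w] + v)
    return best[capacity]


print(knapsack_01([1, 3, 4, 5], [1, 4, 5, 7], 7))  # 9 (items of weight 3 and 4)
```

Time is O(n * capacity) and space O(capacity); a good exercise is to add a second table that records which items were chosen.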

As you gain confidence, you can move on to more complex or open-ended projects. Consider:

- Developing a small game solver: Many simple games can be solved using dynamic programming to find optimal strategies.

- Implementing a sequence alignment tool: Build a basic tool for aligning short DNA or protein sequences.

- Optimizing a simple resource allocation problem: For example, a simplified version of an investment strategy over a few periods.

- Contributing to open-source projects that might involve algorithmic components where DP could be applied or improved.
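
For the sequence alignment project, a basic global aligner in the style of Needleman-Wunsch could start like this Python sketch. The linear gap penalty and the default scores (`match`, `mismatch`, `gap`) are assumptions; real tools typically use substitution matrices and affine gap penalties.

```python
def global_alignment(a, b, match=1, mismatch=-1, gap=-2):
    """Needleman-Wunsch with a linear gap penalty. Returns (score, row_a, row_b)."""
    rows, cols = len(a) + 1, len(b) + 1
    score = [[0] * cols for _ in range(rows)]
    for i in range(1, rows):
        score[i][0] = i * gap               # a[:i] aligned against gaps only
    for j in range(1, cols):
        score[0][j] = j * gap
    for i in range(1, rows):
        for j in range(1, cols):
            diag = score[i - 1][j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            score[i][j] = max(diag, score[i - 1][j] + gap, score[i][j - 1] + gap)

    # Trace back from the bottom-right cell to recover one optimal alignment.
    top, bottom, i, j = [], [], len(a), len(b)
    while i > 0 or j > 0:
        sub = match if i > 0 and j > 0 and a[i - 1] == b[j - 1] else mismatch
        if i > 0 and j > 0 and score[i][j] == score[i - 1][j - 1] + sub:
            top.append(a[i - 1]); bottom.append(b[j - 1]); i -= 1; j -= 1
        elif i > 0 and score[i][j] == score[i - 1][j] + gap:
            top.append(a[i - 1]); bottom.append("-"); i -= 1
        else:
            top.append("-"); bottom.append(b[j - 1]); j -= 1
    return score[len(a)][len(b)], "".join(reversed(top)), "".join(reversed(bottom))


print(global_alignment("GATTACA", "GCATGCU", gap=-1))
```

When several alignments share the best score, the traceback prefers diagonal moves, so it returns one of them rather than all.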

When undertaking projects, try to break the problem down methodically. First, ensure you understand the problem statement thoroughly. Then, try to identify if it has optimal substructure and overlapping subproblems. If so, define your subproblems (states) carefully. Formulate the recurrence relation and identify the base cases. Decide whether a top-down (memoization) or bottom-up (tabulation) approach is more suitable. Implement your solution, and then test it rigorously with various inputs, including edge cases. Platforms like GitHub can be used to showcase your projects and track your progress.
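
Applied to the coin change problem, the steps above might produce something like this Python sketch. The docstring records the state, recurrence, and base case; the function name `min_coins` is illustrative.

```python
def min_coins(coins, amount):
    """Fewest coins summing to `amount`, or -1 if impossible.

    State: best[a] = fewest coins that make amount a.
    Recurrence: best[a] = 1 + min(best[a - c]) over coins c <= a.
    Base case: best[0] = 0. Tabulated bottom-up in O(amount * len(coins)) time.
    """
    INF = float("inf")
    best = [0] + [INF] * amount
    for a in range(1, amount + 1):
        for c in coins:
            if c <= a and best[a - c] + 1 < best[a]:
                best[a] = best[a - c] + 1
    return -1 if best[amount] == INF else best[amount]


print(min_coins([1, 3, 4], 6))  # 2 (3 + 3); a greedy choice would use 4 + 1 + 1
```

Edge cases worth testing include an amount of 0 and an amount that no combination of coins can reach.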

Open-Source Implementations

Exploring open-source implementations of dynamic programming algorithms can be an invaluable learning tool. Many software libraries and research projects available on platforms like GitHub contain well-crafted DP code. By studying these implementations, you can gain insights into how experienced developers structure their solutions, handle edge cases, and optimize for performance.

When looking at open-source code:

- Start with established libraries: Libraries for scientific computing, bioinformatics, or even some game development engines might have modules that use dynamic programming.

- Look for clarity and good documentation: Well-commented code and clear explanations of the algorithm are crucial for learning.

- Compare different implementations: If you find multiple open-source solutions to the same DP problem, compare their approaches. Note differences in state representation, recurrence formulation, or the choice between memoization and tabulation.

- Try to understand the context: Why was DP chosen for this particular part of the larger project? What are the performance constraints?

- Experiment with the code: Clone the repository, run the code, and perhaps even try modifying it or extending it. This hands-on interaction is key.

Be mindful that not all open-source code is of high quality or well-documented. Focus on projects from reputable organizations or those with active communities and good coding standards. Reading and understanding others' code is a skill in itself and can significantly accelerate your learning process, exposing you to different coding styles and problem-solving patterns.

Competitive Programming Practice

Competitive programming platforms (such as TopCoder, Codeforces, LeetCode, HackerRank, AtCoder) are excellent venues for honing dynamic programming skills. These platforms host regular contests and offer extensive archives of problems, many of which specifically require or benefit from DP solutions. The problems often range in difficulty, allowing you to gradually build up your expertise.

Why competitive programming is effective for learning DP:

- Varied Problem Types: You'll encounter a wide array of DP problem patterns, from classic textbook examples to more obscure or combined variations. This helps in recognizing when DP is applicable.

- Immediate Feedback: Submitting your solution provides instant feedback on its correctness and efficiency (time and memory limits). This is crucial for learning to optimize.

- Editorials and Discussions: After contests, or for practice problems, platforms often provide editorials explaining the intended solutions, including DP approaches. User discussions can also offer alternative solutions and insights.

- Focus on Efficiency: Competitive programming environments have strict time and memory limits, forcing you to think carefully about the complexity of your DP states and transitions.

- Pattern Recognition: Over time, you'll start recognizing common DP patterns (e.g., DP on subsets, DP on trees, digit DP, knapsack variations), which speeds up problem-solving.
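
Digit DP, one of the patterns named above, processes a number's digits from most to least significant while tracking whether the prefix chosen so far still matches the upper limit. A minimal Python sketch, counting integers in [0, limit] with a given digit sum (the function name is hypothetical):

```python
from functools import lru_cache


def count_digit_sum(limit, target):
    """Count integers x with 0 <= x <= limit whose decimal digits sum to `target`."""
    digits = list(map(int, str(limit)))

    @lru_cache(maxsize=None)
    def go(pos, remaining, tight):
        # pos: index of the digit being chosen; remaining: digit sum still needed;
        # tight: True while the prefix chosen so far equals the limit's prefix.
        if remaining < 0:
            return 0
        if pos == len(digits):
            return 1 if remaining == 0 else 0
        top = digits[pos] if tight else 9
        return sum(
            go(pos + 1, remaining - d, tight and d == top) for d in range(top + 1)
        )

    return go(0, target, True)


print(count_digit_sum(99, 9))  # 10 (9, 18, 27, ..., 90)
```

Shorter numbers are handled implicitly as having leading zeros, which do not change the digit sum.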

To make the most of competitive programming for DP practice:

- Start with easier DP problems: Don't jump into the hardest ones immediately. Build confidence with problems that are well-within your current skill level.

- Focus on understanding, not just coding: Before coding, spend time deriving the recurrence relation and base cases on paper.

- Learn from failed attempts: If your solution is too slow or incorrect, try to understand why. Read editorials or discuss with others to learn the correct approach.

- Practice consistently: Like any skill, DP mastery comes with regular practice.

Many online courses are specifically designed to prepare learners for competitive programming and technical interviews, often with a strong emphasis on dynamic programming.

Mentorship Opportunities

Finding a mentor can significantly accelerate your learning journey in dynamic programming, especially when navigating more complex concepts or tackling challenging problems. A mentor can provide guidance, share experiences, help troubleshoot issues, and offer a different perspective on problem-solving.

Potential sources for mentorship include:

- University Faculty or Teaching Assistants: If you are a student, professors and TAs who teach algorithms or related courses can be excellent mentors.

- Senior Colleagues at Work: If you are working in a tech role, experienced engineers who have a strong grasp of algorithms can offer valuable insights and guidance.

- Online Communities and Forums: Platforms dedicated to programming, algorithms, or specific technologies often have experienced members willing to help learners. While not formal mentorship, you can ask questions and learn from discussions. Some communities may have specific channels or programs for mentorship.

- Competitive Programming Communities: Participants in competitive programming often form study groups or help each other. More experienced competitors can act as informal mentors.

- Professional Networking: Attending meetups, conferences (even virtual ones), or joining professional organizations can help you connect with potential mentors.

When seeking mentorship, be respectful of the mentor's time. Come prepared with specific questions or problems you're working on. Show that you've made an effort to solve the problem yourself before asking for help. A good mentor will not just give you the answers but will guide you to find them yourself, helping you develop your problem-solving skills. Even informal interactions, like discussing a challenging DP problem with a peer who is slightly ahead of you, can be a form of mentorship and mutual learning.

Career Applications of Dynamic Programming

Expertise in dynamic programming is a valuable asset in various technology-driven careers. While not every software engineering role will require daily application of complex DP algorithms, a solid understanding of its principles signifies strong problem-solving and analytical skills, which are highly sought after.

Roles Requiring DP Expertise

Certain roles are more likely to involve the direct application or deep understanding of dynamic programming:

- Software Engineer (Algorithm-Intensive Roles): Companies working on complex systems, search engines, large-scale optimization problems, bioinformatics tools, financial modeling software, or game development (especially AI or complex mechanics) often seek engineers with strong algorithmic skills, including DP. This is particularly true for roles at top tech companies known for their challenging technical interviews.

- Machine Learning Engineer: While many machine learning models are not directly DP, certain areas like reinforcement learning are fundamentally based on DP concepts (e.g., value iteration, policy iteration). Sequence modeling tasks (e.g., in NLP or bioinformatics) can also involve algorithms with DP-like structures.

- Operations Research Analyst: Professionals in this field explicitly use mathematical optimization techniques, including dynamic programming, to solve problems in logistics, supply chain management, scheduling, resource allocation, and other business processes.

- Quantitative Analyst ("Quant"): In finance, quants develop and implement mathematical models for pricing derivatives, portfolio optimization, and risk management. Dynamic programming is a key tool for many of these tasks, especially for options pricing and optimal asset allocation over time.

- Research Scientist (Computer Science, AI, Operations Research): Academic or industrial research roles focused on developing new algorithms, optimization methods, or AI techniques will often require a deep understanding and ability to innovate with dynamic programming.

- Bioinformatician: As mentioned, sequence alignment and other bioinformatics tasks heavily rely on DP algorithms.

Even in general software development, the thought process behind DP – breaking problems down, identifying optimal substructure, and handling overlapping subproblems – is a valuable skill for designing efficient and robust software.

Industry Demand Trends

The demand for professionals with strong algorithmic skills, including proficiency in dynamic programming, remains consistently high, particularly in the technology sector and data-driven industries. While "dynamic programming expert" might not be a common job title, the underlying skills it represents – analytical thinking, problem decomposition, optimization, and efficient coding – are perennially sought after.

Several factors contribute to this sustained demand:

- Growth of Big Data and AI: As companies collect and analyze vast amounts of data, the need for efficient algorithms to process, model, and derive insights from this data increases. Fields like Artificial Intelligence and Machine Learning, which often involve optimization and complex computations, benefit from talent skilled in algorithmic paradigms like DP. [5gnzl7, 9j4a27]

- Complexity of Modern Software Systems: Modern software, from operating systems and databases to large-scale web services and distributed systems, often involves intricate algorithmic challenges where efficiency is paramount.

- Competitive Edge: Companies that can solve complex optimization problems more effectively (e.g., in logistics, finance, advertising, resource allocation) gain a significant competitive advantage.

- Technical Interviews: Many leading technology companies use algorithmic problems, including DP questions, in their technical interviews to assess candidates' problem-solving abilities. This practice itself drives demand for these skills among job seekers.

According to the U.S. Bureau of Labor Statistics (BLS), employment for Operations Research Analysts, a field that frequently uses dynamic programming, is projected to grow 23% from 2021 to 2031, much faster than the average for all occupations. The BLS also projects about 10,300 openings for operations research analysts each year, on average, over the decade. While this is a specific role, it reflects the broader trend of increasing reliance on data-driven decision-making and optimization. The median annual wage for operations research analysts was $91,290 in May 2024. Other sources report similar or even higher median salaries depending on experience and industry, with top earners in the federal government and manufacturing sectors. Salaries can vary significantly based on location, experience, industry, and specific job responsibilities. For broader software engineering roles, salaries also remain competitive, with expertise in algorithms often leading to higher compensation potential.

Individuals looking to enter or advance in these fields would do well to build a strong foundation in algorithms, including dynamic programming. Online platforms like OpenCourser can be instrumental in finding courses that teach these in-demand skills. You can browse relevant courses in Data Science and Programming to get started.

Interview Preparation Strategies

Technical interviews at many technology companies, especially for software engineering roles, often include questions designed to test algorithmic thinking, and dynamic programming problems are a common feature. Preparing effectively for these types of questions is crucial.

Here are some strategies:

- Master the Fundamentals: Ensure you have a strong grasp of the core concepts: optimal substructure, overlapping subproblems, memoization, and tabulation. Understand how to identify if a problem is a good candidate for DP.

- Practice Classic Problems: Work through well-known DP problems like Fibonacci, Knapsack (0/1, unbounded), Longest Common Subsequence (LCS), Longest Increasing Subsequence (LIS), Edit Distance, Coin Change, Matrix Chain Multiplication, and basic graph pathing problems. For each, try to derive the solution using both memoization and tabulation.

- Develop a Problem-Solving Framework:

  - Understand the Problem: Clarify requirements and constraints. Ask questions.

  - Identify Subproblems: Define what a "state" in your DP solution represents. This is often the hardest part. Think about what information you need to make a decision at each step.

  - Formulate the Recurrence Relation: How does the solution to a state depend on the solutions to smaller/previous states?

  - Determine Base Cases: What are the simplest subproblems whose solutions are known directly?

  - Choose an Approach: Decide between memoization (recursive) or tabulation (iterative).

  - Implement and Test: Write clean code. Test with examples, including edge cases.

  - Analyze Complexity: Be prepared to discuss the time and space complexity of your solution.

- Practice on Coding Platforms: Use platforms like LeetCode, HackerRank, Codeforces, etc., which have a vast collection of DP problems categorized by difficulty. Aim for a mix of problem types.

- Mock Interviews: Practice solving problems under timed conditions, ideally with someone who can provide feedback. This helps simulate the interview environment.

- Communicate Your Thought Process: During an actual interview, it's crucial to explain your thinking aloud. Interviewers are interested in your problem-solving approach, not just the final code. Talk through your state definition, recurrence, and base cases.

- Don't Get Discouraged: DP problems can be challenging. It takes consistent practice to get good at them. Learn from every problem you solve or attempt.
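
To practice deriving one solution both ways, as suggested above, here is a Python sketch of Longest Common Subsequence written top-down and bottom-up. Both follow the same recurrence; only the direction of evaluation differs. The function names are illustrative.

```python
from functools import lru_cache


def lcs_memo(a, b):
    """Top-down: solve(i, j) = LCS length of the suffixes a[i:] and b[j:]."""
    @lru_cache(maxsize=None)
    def solve(i, j):
        if i == len(a) or j == len(b):          # base case: an empty suffix
            return 0
        if a[i] == b[j]:
            return 1 + solve(i + 1, j + 1)
        return max(solve(i + 1, j), solve(i, j + 1))
    return solve(0, 0)


def lcs_tab(a, b):
    """Bottom-up: table[i][j] = LCS length of the prefixes a[:i] and b[:j]."""
    table = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i in range(1, len(a) + 1):
        for j in range(1, len(b) + 1):
            if a[i - 1] == b[j - 1]:
                table[i][j] = table[i - 1][j - 1] + 1
            else:
                table[i][j] = max(table[i - 1][j], table[i][j - 1])
    return table[len(a)][len(b)]


print(lcs_memo("ABCBDAB", "BDCABA"), lcs_tab("ABCBDAB", "BDCABA"))  # 4 4
```

Both run in O(len(a) * len(b)) time. The memoized version can hit Python's recursion limit on long inputs, which is one practical reason to prefer tabulation in an interview setting.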

Many online courses are tailored for interview preparation and cover dynamic programming extensively.

Books focused on coding interviews also dedicate significant attention to dynamic programming techniques.

Skill Transferability Analysis

The skills developed while learning and applying dynamic programming are highly transferable and valuable across various domains and roles, even those that don't directly involve writing DP algorithms daily.

Problem Decomposition: At its core, DP is about breaking complex problems into smaller, manageable subproblems. This skill is fundamental to software engineering, project management, and analytical thinking in any field. Being able to see the constituent parts of a large problem and how they interrelate is crucial for effective system design and troubleshooting.

Recursive Thinking: DP often involves formulating recurrence relations, which hones one's ability to think recursively. Recursive thinking is essential for understanding many algorithms, data structures (like trees and graphs), and even for modeling complex systems.

Optimization Mindset: DP is an optimization technique. Learning DP instills a mindset of looking for efficiencies, avoiding redundant work, and finding the best possible solution under given constraints. This is valuable in performance tuning, resource management, and process improvement.

Analytical and Abstract Thinking: Defining DP states and transitions requires a good degree of abstraction and analytical rigor. These are key skills for any role that involves modeling, data analysis, or strategic thinking. For example, an analyst might use similar structured thinking to break down business processes, or a consultant might decompose a client's problem into solvable parts.

Algorithmic Design: Understanding DP provides a powerful tool in one's algorithmic toolkit. Even if a specific problem isn't solvable by DP, the principles learned can inspire solutions using other paradigms or hybrid approaches.

Attention to Detail: Correctly implementing DP solutions, especially handling base cases and the order of computation in tabulation, requires meticulous attention to detail. This is a hallmark of a good engineer or analyst.

Therefore, investing time in mastering dynamic programming is not just about learning a specific set of algorithms; it's about developing a more profound and versatile problem-solving capability that can be applied in numerous technical and analytical contexts.

Limitations and Modern Alternatives

While dynamic programming is a powerful and widely applicable technique, it's not a panacea for all optimization problems. It has inherent limitations, and modern computational approaches, particularly from the field of machine learning, offer alternatives or complementary methods for tackling complex decision-making tasks.

Curse of Dimensionality

One of the most significant limitations of traditional dynamic programming is the "curse of dimensionality." This term, also coined by Richard Bellman, refers to the exponential explosion in the size of the state space (the number of subproblems) as the number of variables or dimensions describing a state increases.

For example, if a problem's state is defined by a single variable that can take N values, there are N subproblems to solve. If the state is defined by two variables, each taking N values, the number of subproblems becomes N × N = N^2. If there are D such variables (dimensions), the number of states can grow as N^D. As D increases, the number of states quickly becomes astronomically large, making it computationally infeasible to solve and store the solution for every state.

This issue severely limits the applicability of exact dynamic programming to problems with high-dimensional state spaces, which are common in many real-world scenarios, such as controlling complex robotic systems, managing large-scale logistical networks, or detailed financial portfolio optimization with many assets and factors. Even if the computation for each state is fast, the sheer number of states to evaluate and store results for makes the approach impractical.

Approximate Dynamic Programming

Approximate Dynamic Programming (ADP) encompasses a range of techniques designed to overcome the curse of dimensionality and other limitations of exact DP methods. Instead of finding the exact optimal solution, ADP aims to find a good, near-optimal solution by using approximations.

The core idea in many ADP approaches is to approximate the value function (which represents the optimal long-term reward or cost from a given state) or the policy (the decision-making rule). Rather than calculating and storing the value for every single state, ADP uses a more compact representation, often a parameterized function (like a linear combination of basis functions, a neural network, or a decision tree). This function approximator estimates the value of a state, or suggests an action, without needing to enumerate all possible states.

Key ADP strategies include:

- Value Function Approximation (VFA): Using methods to learn an approximate representation of the value function.

- Policy Search: Directly searching in the space of policies to find a good one, often guided by simulations or approximate value estimates.

- Simulation-based methods: Using simulation to generate sample trajectories and learn from them, which is common in reinforcement learning.

ADP is a very active research area, bridging operations research, control theory, and Artificial Intelligence. It provides a powerful toolkit for tackling large-scale, complex, and often stochastic optimization problems that are intractable for exact DP.

Machine Learning Intersections

There's a strong and growing intersection between dynamic programming and Machine Learning, particularly in the field of Reinforcement Learning (RL). [32, 5gnzl7, 9j4a27] RL is concerned with how an agent should take actions in an environment to maximize some notion of cumulative reward. Many foundational RL algorithms are essentially ways of solving Markov Decision Processes (MDPs), which are often formulated and solved using dynamic programming principles.

For example:

- Value Iteration and Policy Iteration: These are classic DP algorithms for finding optimal policies in MDPs when the model of the environment (state transition probabilities and rewards) is known.

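- Q-learning and SARSA: These model-free RL algorithms learn from sampled experience, so they do not need the transition probabilities and rewards in advance. They iteratively update estimates of action-values (Q-values), and each update is a sample-based version of a Bellman backup from DP. Q-learning estimates the optimal action-values directly (off-policy). SARSA instead evaluates and improves the policy it is actually following (on-policy). Deep Q-Networks (DQNs) combine Q-learning with deep neural networks to approximate the Q-function. This allows RL to tackle problems with very large state spaces and is effectively a form of approximate dynamic programming.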

- Actor-Critic Methods: These RL methods maintain both an approximate policy (the "actor") and an approximate value function (the "critic"), with both components being updated iteratively, drawing inspiration from policy iteration and value function approximation in ADP.
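The contrast between model-based value iteration and model-free Q-learning can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation. It defines a hypothetical five-state corridor MDP: moves succeed with probability 0.8, reaching the right end pays 1, and the discount is 0.9. `value_iteration` reads the transition probabilities directly, as DP requires; `q_learning` only ever sees sampled (next state, reward) pairs. As a simplification, `q_learning` updates every state-action pair on each sweep instead of following an exploration policy such as epsilon-greedy, and it uses a constant step size of 0.1. All names and numbers are illustrative.

```python
import random

# Hypothetical toy MDP for illustration: a corridor of states 0..4, with state 4
# terminal. A move succeeds with probability 0.8; otherwise the agent stays put.
# Entering state 4 earns reward 1; every other transition earns 0.
N_STATES, GOAL, GAMMA, P_SUCCESS = 5, 4, 0.9, 0.8
ACTIONS = ("left", "right")


def transitions(s, a):
    """The model: a list of (probability, next_state, reward) triples."""
    target = max(s - 1, 0) if a == "left" else min(s + 1, GOAL)
    outcomes = [(P_SUCCESS, target), (1 - P_SUCCESS, s)]
    return [(p, s2, 1.0 if s2 == GOAL else 0.0) for p, s2 in outcomes]


def expected_return(V, s, a):
    """One-step lookahead: sum over s' of P(s'|s,a) * (r + gamma * V(s'))."""
    return sum(p * (r + GAMMA * V[s2]) for p, s2, r in transitions(s, a))


def value_iteration(tol=1e-10):
    """Model-based DP: sweep Bellman optimality backups until values stop changing."""
    V = [0.0] * N_STATES  # V[GOAL] stays 0 because the episode ends there
    while True:
        delta = 0.0
        for s in range(GOAL):
            best = max(expected_return(V, s, a) for a in ACTIONS)
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:
            return V


def greedy_from_values(V):
    return [max(ACTIONS, key=lambda a: expected_return(V, s, a)) for s in range(GOAL)]


def q_learning(sweeps=3000, alpha=0.1, seed=0):
    """Model-free: learns Q(s, a) from sampled transitions, never reading probabilities."""
    rng = random.Random(seed)
    Q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}

    def sample(s, a):
        # Stand-in for acting in an unknown environment: only (s', r) is revealed.
        outcomes = transitions(s, a)
        _, s2, r = rng.choices(outcomes, weights=[o[0] for o in outcomes])[0]
        return s2, r

    # Simplification: update every (state, action) pair on each sweep.
    for _ in range(sweeps):
        for s in range(GOAL):
            for a in ACTIONS:
                s2, r = sample(s, a)
                future = 0.0 if s2 == GOAL else max(Q[(s2, b)] for b in ACTIONS)
                Q[(s, a)] += alpha * (r + GAMMA * future - Q[(s, a)])
    return [max(ACTIONS, key=lambda a: Q[(s, a)]) for s in range(GOAL)]


V = value_iteration()
print([round(v, 3) for v in V])
print(greedy_from_values(V), q_learning())
```

Both methods should recover the same policy here: always move right. Value iteration converges to V(3) = 0.8 / 0.82 ≈ 0.976, up to its tolerance. The Q-learning estimates only hover around the optimal values, with some sampling noise, because the step size is constant.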

Beyond RL, DP concepts appear in other machine learning contexts. For instance, sequence learning problems (e.g., in natural language processing or speech recognition) sometimes use DP directly. One example is the Viterbi algorithm, which finds the most likely sequence of hidden states in a Hidden Markov Model. Others use related ideas, such as beam search, which keeps only the best few partial hypotheses at each step and can be seen as a pruned, heuristic relative of DP-style search. The challenge of fitting complex models can sometimes be broken down into stages that have a DP flavor.
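A minimal Python sketch of the Viterbi algorithm follows, using a small two-state HMM. The states, observations, and probabilities are illustrative assumptions, not data from this guide. The DP table `best[t][s]` stores the probability of the most likely path that ends in state `s` at time `t`. Each column is computed only from the previous one, and back-pointers recover the path at the end. Practical implementations usually work with log-probabilities to avoid numerical underflow on long sequences.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return (most likely hidden-state path, its probability) for an HMM.

    best[t][s] = probability of the most likely state sequence that ends in
    state s after emitting observations[0..t]; back[t][s] = its predecessor.
    """
    best = [{s: start_p[s] * emit_p[s][observations[0]] for s in states}]
    back = [{}]
    for t in range(1, len(observations)):
        best.append({})
        back.append({})
        for s in states:
            # Recurrence: the best way into s comes from the best-scoring predecessor
            prev = max(states, key=lambda r: best[t - 1][r] * trans_p[r][s])
            best[t][s] = best[t - 1][prev] * trans_p[prev][s] * emit_p[s][observations[t]]
            back[t][s] = prev
    # Choose the best final state, then follow back-pointers to rebuild the path
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for t in range(len(observations) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return path, best[-1][last]


# Illustrative two-state HMM (parameters are assumptions chosen for the example)
states = ("Healthy", "Fever")
start_p = {"Healthy": 0.6, "Fever": 0.4}
trans_p = {"Healthy": {"Healthy": 0.7, "Fever": 0.3},
           "Fever": {"Healthy": 0.4, "Fever": 0.6}}
emit_p = {"Healthy": {"normal": 0.5, "cold": 0.4, "dizzy": 0.1},
          "Fever": {"normal": 0.1, "cold": 0.3, "dizzy": 0.6}}

path, prob = viterbi(["normal", "cold", "dizzy"], states, start_p, trans_p, emit_p)
print(path, round(prob, 5))  # ['Healthy', 'Healthy', 'Fever'] 0.01512
```

Brute force would score every one of the |states|^T possible paths. The table instead does O(T · |states|²) work.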

The synergy is bi-directional: DP provides the foundational theory for many RL algorithms, while machine learning techniques (especially deep learning for function approximation) provide powerful tools to make DP and ADP applicable to much larger and more complex problems than previously possible.


Quantum Computing Implications

The intersection of dynamic programming and quantum computing is an emerging area with potential, though still largely in the research and theoretical stages. Quantum computers, with their ability to perform computations using principles of quantum mechanics like superposition and entanglement, offer the prospect of solving certain types of problems much faster than classical computers.

For dynamic programming, the interest lies in whether quantum algorithms could speed up the solution of DP problems, especially those that are computationally intensive classically. Some research explores how quantum search algorithms might be applied to find optimal paths or solutions within the state space of a DP problem more efficiently. Grover's algorithm, for example, offers at most a quadratic speedup for unstructured search. Other lines of inquiry investigate whether quantum annealing or other quantum optimization algorithms could be adapted to solve problems typically tackled by DP.

However, it's not a straightforward translation. Developing quantum algorithms that provide a significant speedup for general DP problems is challenging. The structure of DP, which often involves sequential dependencies (the solution to one subproblem depends on previously solved subproblems), doesn't always lend itself easily to the parallel nature of some quantum computations. Furthermore, the practical realization of large-scale, fault-tolerant quantum computers is still a significant hurdle.

While widespread application of quantum computing to DP is not yet a reality, it represents an intriguing frontier. As quantum hardware and algorithmic theory mature, we may see specific classes of DP problems, perhaps those with particular structures or those arising in quantum mechanical systems themselves, benefit from quantum approaches. For now, it remains a specialized research topic rather than a common alternative for typical DP applications.

Frequently Asked Questions (Career Focus)

For those considering how dynamic programming fits into their career aspirations, several common questions arise. This section aims to address some of these with a focus on practical career implications.

Is DP required for software engineering roles?

For many entry-level or generalist software engineering roles, explicitly designing complex dynamic programming solutions on a daily basis might be rare. However, understanding the principles of DP and being able to solve medium-difficulty DP problems is often expected, especially during technical interviews at competitive tech companies. These companies use DP questions not just to see if you've memorized algorithms, but to assess your problem-solving skills, your ability to break down complex problems, your comfort with recursion and iteration, and your understanding of time and space complexity.

In more specialized roles, such as those in algorithm development, machine learning, quantitative finance, or operations research, a deeper knowledge and more frequent application of DP (or related techniques like reinforcement learning and approximate DP) may be required. Even if you don't use DP directly, the analytical thinking and optimization mindset cultivated by learning it are highly valuable in any software engineering capacity. It helps in writing more efficient code and making better design choices. So, while not every software engineer "requires" DP in their day-to-day tasks, a foundational understanding is broadly beneficial and often a prerequisite for certain career paths and employers.

How to demonstrate DP proficiency without work experience?

Demonstrating proficiency in dynamic programming without direct work experience can be achieved through several avenues:

- Personal Projects: Implement solutions to classic and novel DP problems. Create a portfolio of these projects on platforms like GitHub. For each project, include a clear explanation of the problem, your DP formulation (states, recurrence, base cases), and analysis of complexity. You could even write blog posts explaining your solutions.

- Competitive Programming: Participate in online coding competitions (e.g., LeetCode, Codeforces, TopCoder). Achieving good rankings or solving a significant number of DP problems on these platforms is a strong signal of proficiency. You can list your profiles or notable achievements on your resume.

- Online Courses and Certifications: Complete specialized online courses in algorithms and dynamic programming. Many offer certificates of completion which can be added to your LinkedIn profile or resume. OpenCourser is a great place to find such courses.

- Open-Source Contributions: Contribute to open-source projects, particularly those that involve algorithmic components. If you can identify an area where a DP approach could optimize existing code or solve a new problem, proposing and implementing such a solution would be a powerful demonstration.

- Strong Interview Performance: Ultimately, for job seekers, the technical interview is where you directly demonstrate your DP skills. Being able to clearly articulate your thought process and code up a correct and efficient DP solution under pressure is the most direct proof.

- Research or Academic Projects: If you are a student, engaging in research or significant academic projects that involve dynamic programming can be a great way to showcase your abilities.

The key is to create tangible evidence of your skills that you can point to and discuss. Simply stating you know DP is less convincing than showing what you've done with it.
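As an illustration of the kind of write-up described above, here is a minimal Python sketch for the classic minimum-coin-change problem. The problem was chosen as an example; the guide does not prescribe one. The docstring records the DP formulation (state, recurrence, base case, and complexity), so a reader can check the reasoning without running the code.

```python
import math


def min_coins(coins, amount):
    """Return the fewest coins from `coins` that sum to `amount`, or -1 if impossible.

    State:      best[x] = fewest coins summing to x, for 0 <= x <= amount
    Recurrence: best[x] = 1 + min(best[x - c] for each coin c <= x)
    Base case:  best[0] = 0 (zero coins make zero); unreachable amounts stay infinite
    Complexity: O(amount * len(coins)) time, O(amount) space
    """
    best = [0] + [math.inf] * amount
    for x in range(1, amount + 1):  # bottom-up: smaller amounts are solved first
        for c in coins:
            if c <= x and best[x - c] + 1 < best[x]:
                best[x] = best[x - c] + 1
    return -1 if best[amount] == math.inf else best[amount]


# A greedy "largest coin first" strategy would pay 6 as 4 + 1 + 1 (three coins);
# the DP finds 3 + 3.
print(min_coins([1, 3, 4], 6))   # 2
print(min_coins([1, 2, 5], 11))  # 3
print(min_coins([2], 3))         # -1
```

Pairing each solution with a counterexample to a simpler approach, such as the greedy one here, shows why DP was needed.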


Industry sectors valuing DP most highly

While strong algorithmic skills are valued broadly in tech, certain industry sectors have a more pronounced need or appreciation for dynamic programming expertise:

- Technology (Big Tech & Startups): Companies building search engines, social networks, e-commerce platforms, cloud computing services, and operating systems often deal with large-scale optimization, resource allocation, and complex algorithmic challenges where DP principles are relevant. Their rigorous interview processes also tend to screen for these skills.

- Finance (Quantitative Trading, Risk Management): The financial industry, particularly in areas like algorithmic trading, option pricing, portfolio optimization, and risk modeling, heavily utilizes mathematical and computational techniques, including dynamic programming. [09wyfb, mxc3wi]

- Bioinformatics and Computational Biology: Analyzing biological sequences (DNA, RNA, proteins), predicting protein structures, and understanding genetic networks often involve DP algorithms for tasks like sequence alignment. [3, fd05l8]

- Operations Research and Logistics: Industries focused on supply chain management, transportation, scheduling, and resource optimization (e.g., airlines, shipping companies, manufacturing) rely on operations research techniques, which include dynamic programming. [epuo6h]

- Aerospace and Defense: Optimal control, trajectory planning, and resource management in these sectors can involve dynamic programming.

- Gaming (AI and Game Mechanics): Developing sophisticated AI for game characters or optimizing complex game mechanics can sometimes leverage DP or related concepts like reinforcement learning. [jaewc6]

- Artificial Intelligence and Machine Learning Research: Companies and research labs pushing the boundaries of AI, especially in reinforcement learning, often require a deep understanding of DP foundations. [5gnzl7]

Essentially, any sector that deals with complex optimization problems, sequential decision-making under uncertainty, or requires highly efficient algorithmic solutions is likely to value dynamic programming skills.

Career progression paths for DP specialists

For individuals who develop a strong specialization in dynamic programming and related algorithmic problem-solving, several career progression paths can emerge. It's important to note that "DP Specialist" is rarely a formal title; rather, DP is a skill that contributes to advancement in broader roles.

Possible trajectories include:

- Senior/Principal Software Engineer / Architect: Within a software engineering track, individuals with exceptional algorithmic skills often progress to roles where they tackle the most challenging technical problems, design core algorithms for key products, mentor junior engineers on algorithmic best practices, and influence the technical direction of projects. They might become the go-to person for performance optimization or complex algorithmic design.

- Research Scientist / Applied Scientist: In industrial research labs (e.g., at tech companies, financial institutions, or biotech firms) or academia, those with deep DP knowledge can lead research in areas like AI, machine learning, operations research, or computational science, developing novel algorithms and publishing their work.

- Quantitative Analyst / Researcher (Finance): Starting as a junior quant, one can progress to senior roles, managing larger portfolios, developing more sophisticated trading models, or leading research teams in financial engineering.

- Operations Research Manager / Consultant: An Operations Research Analyst can move into management roles, leading teams of analysts, or become a consultant specializing in optimization for various industries. [epuo6h]

- Specialized Technical Lead / Manager: In areas like bioinformatics or specific AI subfields, strong algorithmic expertise can lead to roles managing teams focused on those technical domains.

- Entrepreneurship: Individuals with strong algorithmic insights might identify opportunities to build products or services that solve complex optimization problems in novel ways, leading them to start their own companies.

Continuous learning is key in these paths, as the field of algorithms and optimization is always evolving. Complementing DP skills with expertise in machine learning, distributed computing, or specific application domains can further enhance career progression.

Maintaining relevance amid AI advancements

The rapid advancements in Artificial Intelligence, particularly in areas like large language models (LLMs) and automated machine learning (AutoML), naturally lead to questions about the future relevance of skills like dynamic programming. However, rather than making DP obsolete, AI advancements are more likely to change how it's used and to create new opportunities where DP principles are even more critical.

Here's why DP skills remain relevant:

- Fundamental Problem-Solving: AI tools can assist in coding or generating ideas, but the core skill of understanding a problem, decomposing it, and designing an optimal logical structure (which is what DP is about) remains a human-driven analytical task. AI often needs to be guided by this understanding.

- Optimizing AI Itself: Many AI systems, especially in reinforcement learning, are built upon DP principles. Designing and improving these AI systems often requires a deep understanding of these foundations. For example, the targets used to train the value network in a Deep Q-Network are Bellman backups. DP knowledge therefore helps in diagnosing problems such as unstable training or systematically overestimated action-values.

- Niche and Complex Problems: While AI can handle many general tasks, highly specialized or novel optimization problems may still require custom algorithmic solutions, including those based on DP, where off-the-shelf AI solutions might not be optimal or applicable.

- Efficiency and Performance: AI models can be computationally expensive. Understanding algorithmic efficiency, a core tenet of DP, is crucial for developing AI systems that are scalable and performant. Sometimes, a well-crafted classical algorithm (like a DP solution) can be more efficient than a large AI model for specific, well-defined tasks.

- Hybrid Approaches: The future likely involves hybrid approaches where AI tools are used in conjunction with classical algorithmic techniques. For example, AI might help in formulating parts of a problem or suggesting potential subproblem structures, which are then refined and solved using DP.

- Understanding Limitations: Knowing the principles of DP and other algorithms helps in understanding the limitations of AI tools and when a more traditional algorithmic approach might be superior or necessary.