Data Structures

An Introduction to Data Structures

Data structures are fundamental to computer science and software development. At a high level, they are specialized formats for organizing, processing, retrieving, and storing data. Think of them as the shelves and filing cabinets of the digital world, designed not just to hold information, but to make it accessible and useful in specific ways. Different kinds of data structures are suited to different kinds of applications, and some are highly specialized for specific tasks. Understanding data structures is key to writing efficient and effective programs, as the way data is structured significantly impacts how algorithms can interact with it.

Working with data structures can be an engaging and intellectually stimulating endeavor. It involves a deep dive into problem-solving, where you might design a novel way to organize information for a cutting-edge application or optimize an existing system to handle massive amounts of data. The thrill of seeing a well-designed data structure significantly speed up a process or enable a new functionality can be immensely rewarding. Furthermore, a strong grasp of data structures opens doors to various exciting fields within technology, from developing complex algorithms and building large-scale software systems to pioneering advancements in areas like artificial intelligence and big data analytics.

What Exactly Are Data Structures?

At its core, a data structure is a particular way of organizing data in a computer so that it can be used effectively. It's not just about storing data, but about storing it in such a way that it can be accessed and worked with efficiently. Imagine you have a large collection of books. You could pile them randomly in a room, but finding a specific book would be a nightmare. Alternatively, you could arrange them alphabetically by author on shelves, or by genre, or by publication date. Each of these arrangements is a form of "data structure" for your books, and each makes certain tasks (like finding a book by a specific author) easier than others.

In computer science, these "arrangements" are more formalized and are designed to interact with the computer's memory and processing capabilities. The choice of data structure can have a profound impact on the performance of a software application. For example, if an application frequently needs to search for specific items within a massive dataset, using a data structure optimized for searching (like a hash table or a balanced tree) can make the difference between a response time of milliseconds and a response time of minutes or even hours. Therefore, understanding data structures is not just an academic exercise; it's a practical necessity for any serious programmer or software engineer.

This understanding allows developers to make informed decisions about how to handle data, leading to more efficient, scalable, and robust software. It's a foundational concept that underpins much of what makes modern computing possible, from the operating systems that run our devices to the complex applications we use every day.

A Brief Look Back: The Evolution of Data Organization

The concept of organizing data for efficient processing is as old as computing itself. Early computers had very limited memory and processing power, making efficient data organization not just a good idea, but an absolute necessity. In the mid-20th century, as programming languages began to emerge, so did rudimentary data structures like arrays and linked lists. These early structures provided basic ways to group and sequence data.

As computer science matured, so did the sophistication of data structures. The 1960s and 1970s saw the development of more complex structures like trees (useful for hierarchical data and efficient searching) and graphs (ideal for representing networks and relationships). The invention of hash tables revolutionized searching by providing, on average, constant-time lookups. These developments were often driven by the needs of specific applications, such as database systems, operating systems, and compilers.

Key milestones include the formalization of Abstract Data Types (ADTs), which separated the logical properties of a data structure from its concrete implementation. This allowed for greater flexibility and reusability. The development of complexity analysis, particularly Big O notation, provided a standardized way to compare the efficiency of different data structures and the algorithms that operate on them. This analytical framework became crucial for making informed design choices. Today, research continues into new data structures, especially for handling the massive datasets of the "big data" era and for specialized domains like quantum computing and bioinformatics.

The historical journey of data structures mirrors the broader evolution of computing, reflecting a continuous quest for greater efficiency, power, and abstraction in how we manage and process information. Understanding this history helps appreciate the ingenuity behind the tools developers use daily and the ongoing innovation in the field.

The Cornerstone of Computer Science and Software Development

Data structures are often described as the bedrock upon which computer science and software development are built. They are not just tools but fundamental concepts that shape how programmers think about and solve problems. Almost every significant piece of software, from the simplest mobile app to the most complex operating system or search engine, relies heavily on data structures to manage its information effectively.

In academic computer science programs, data structures are typically a core, foundational course, often taken early in the curriculum. This is because a solid understanding of data structures is a prerequisite for more advanced topics such as algorithm design, database systems, artificial intelligence, and compiler construction. Without an understanding of how data can be efficiently organized and accessed, it's nearly impossible to design algorithms that perform well or build systems that can scale to handle large amounts of data or high user loads.

For software developers, a practical knowledge of data structures is indispensable. When faced with a programming task, developers must often choose the most appropriate data structure to store and manipulate the data involved. This choice can dramatically affect the application's speed, memory usage, and overall efficiency. For instance, choosing to store a collection of items in an unsorted array when frequent searching is required would lead to slow performance, whereas a hash table or a balanced search tree could provide much faster lookups. Thus, data structures are a critical part of a software developer's toolkit, enabling them to write code that is not only correct but also performant and scalable.

Beyond individual applications, data structures also play a crucial role in the design of entire systems. The architecture of databases, the functioning of network routers, and the efficiency of search engines are all heavily dependent on sophisticated data structures and the algorithms that interact with them. Therefore, a deep understanding of data structures is vital for anyone aspiring to make significant contributions in the field of computer science or software engineering. If you are looking to delve deeper into the broader field, exploring Computer Science as a topic can provide a comprehensive overview.

Optimizing Performance: How Data Structures Influence Efficiency

The choice of a data structure is a critical decision in software development that directly influences algorithm efficiency and overall system performance. Each data structure has its own set of strengths and weaknesses when it comes to the speed of operations like insertion, deletion, searching, and traversal. Understanding these trade-offs is essential for writing high-performing code.

Consider the task of managing a dynamic collection of items. If you only need to add and remove items at the ends, a stack (which adds and removes at the same end) or a queue (which adds at one end and removes from the other) might be the most efficient choice, offering constant-time operations for these tasks. However, if you need to frequently search for specific items within the collection, these linear structures would be inefficient, since finding an item requires a linear scan through the elements. In such a scenario, a hash table could provide average constant-time searches, or a balanced binary search tree could offer logarithmic-time searches, both significantly faster for large collections.
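As a rough illustration of the search trade-off described above, the following sketch answers the same membership question with a linear scan and with Python's built-in hash-based `set`. The helper name `linear_contains` and the sample data are assumptions made for this example only.

```python
def linear_contains(items, target):
    """Check items one by one; the worst case inspects every element, O(n)."""
    for item in items:
        if item == target:
            return True
    return False


ids = list(range(100_000))   # sequential storage
id_set = set(ids)            # hash-based storage of the same values

# Both give the same answer; they differ in how much work is done to get it.
found_by_scan = linear_contains(ids, 99_999)   # walks the entire list
found_by_hash = 99_999 in id_set               # average O(1) hash lookup
```

The set costs extra memory for its hash table, which is the usual price paid for the faster lookup.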

The impact on algorithm efficiency is profound. Many algorithms are designed to work optimally with specific data structures. For instance, Dijkstra's algorithm for finding the shortest path in a graph typically uses a priority queue (often implemented with a heap) to efficiently select the next vertex to visit. Using a less suitable data structure could dramatically increase the algorithm's running time. Similarly, sorting algorithms often operate on arrays, and their efficiency can depend on the initial state of the array and the properties of the chosen data structure.
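To make the pairing of Dijkstra's algorithm with a priority queue concrete, here is a minimal sketch that uses Python's standard `heapq` module as the heap. The graph format (a dictionary mapping each node to a list of `(neighbor, weight)` pairs), the function name `dijkstra`, and the sample graph are assumptions chosen for illustration, and all weights are assumed to be non-negative.

```python
import heapq


def dijkstra(graph, source):
    """Return a dict of shortest distances from source to each reachable node."""
    dist = {source: 0}
    heap = [(0, source)]  # (distance so far, node); smallest distance pops first
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue  # stale entry: a shorter route to this node was already found
        for neighbor, weight in graph.get(node, []):
            candidate = d + weight
            if candidate < dist.get(neighbor, float("inf")):
                dist[neighbor] = candidate
                heapq.heappush(heap, (candidate, neighbor))
    return dist


example_graph = {
    "A": [("B", 1), ("C", 4)],
    "B": [("C", 2), ("D", 5)],
    "C": [("D", 1)],
    "D": [],
}
distances = dijkstra(example_graph, "A")
# distances == {"A": 0, "B": 1, "C": 3, "D": 4}
```

Replacing the heap with an unsorted list would still give correct answers, but selecting the next vertex would then require scanning every pending entry.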

System performance, which encompasses not just speed but also memory usage, is also heavily influenced by data structure choices. Some structures, like arrays, can be very memory-efficient if their size is known beforehand and fixed. Others, like linked lists, offer more flexibility in terms of dynamic sizing but might incur some memory overhead due to storing pointers. In memory-constrained environments or when dealing with massive datasets, these considerations become paramount. Ultimately, a thoughtful selection of data structures, guided by an understanding of their performance characteristics, is a hallmark of a skilled software engineer aiming to build efficient and robust systems.

Core Concepts and Essential Terminology

To navigate the world of data structures effectively, it's crucial to understand some core concepts and terminology. These foundational ideas provide the language and analytical tools necessary for discussing, designing, and evaluating data structures. They help in abstracting the problem, analyzing performance, and understanding how data is organized in memory. Mastering these concepts is the first step towards making informed decisions about which data structure to use for a given task.

This section will introduce you to Abstract Data Types (ADTs), the distinction between their logical definition and concrete implementation, the critical concept of time and space complexity (often expressed using Big O notation), the principles behind memory allocation and data organization, and the notions of mutability and persistence in data structures. These elements form the theoretical underpinning that supports practical application and innovation in the field.

Abstract Data Types (ADTs) vs. Concrete Implementations

An Abstract Data Type (ADT) is a mathematical model for data types. It defines a set of data values and a set of operations that can be performed on that data. Crucially, an ADT specifies what operations can be performed and what their logical behavior is, but not how these operations are implemented or how the data is actually stored in memory. Think of it as a blueprint or a contract: it tells you what a data type can do, but not the specific materials or methods used to build it.

For example, a "List" ADT might be defined as a collection of ordered items with operations like add(item), remove(item), get(index), and size(). This definition doesn't say whether the list should be implemented using an array, a linked list, or some other mechanism. It only describes the expected behavior: adding an item increases its size, getting an item at a valid index returns that item, and so on.

A concrete implementation, on the other hand, is the actual realization of an ADT. It provides the underlying data storage mechanism and the code for the operations defined by the ADT. For the "List" ADT mentioned above, a concrete implementation could be an array-based list (where items are stored in contiguous memory locations) or a linked list (where items are stored in nodes that point to each other). Each of these implementations will have different performance characteristics for the defined operations. For instance, getting an item by index is typically very fast in an array-based list (constant time) but might be slower in a linked list (linear time, as you may have to traverse the list).

This separation between the abstract definition (the "what") and the concrete implementation (the "how") is a powerful concept in software engineering. It allows for:

- Abstraction: Programmers can use an ADT without needing to know the details of its implementation. This simplifies the design and understanding of complex systems.

- Modularity: The implementation of an ADT can be changed without affecting the code that uses it, as long as the new implementation still adheres to the ADT's defined behavior. This is useful for optimization or fixing bugs.

- Reusability: A well-defined ADT can be implemented in various ways and used in many different applications.

Understanding the distinction between ADTs and their concrete implementations is fundamental to both designing and using data structures effectively. It encourages a focus on the logical requirements of a problem before diving into implementation details.
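The "List" ADT and its two implementations described above might be sketched as follows. The class names (`ListADT`, `ArrayBackedList`, `LinkedBackedList`) and the helper `third_item` are hypothetical, and `remove(item)` is left out to keep the example short.

```python
from abc import ABC, abstractmethod


class ListADT(ABC):
    """The contract: what a list can do, not how it stores its items."""

    @abstractmethod
    def add(self, item): ...

    @abstractmethod
    def get(self, index): ...

    @abstractmethod
    def size(self): ...


class ArrayBackedList(ListADT):
    def __init__(self):
        self._items = []  # Python's list is a dynamic array

    def add(self, item):
        self._items.append(item)

    def get(self, index):
        if not 0 <= index < len(self._items):
            raise IndexError("index out of range")
        return self._items[index]  # direct indexing, O(1)

    def size(self):
        return len(self._items)


class _Node:
    def __init__(self, value):
        self.value = value
        self.next = None


class LinkedBackedList(ListADT):
    def __init__(self):
        self._head = None
        self._tail = None
        self._count = 0

    def add(self, item):
        node = _Node(item)
        if self._head is None:
            self._head = self._tail = node
        else:
            self._tail.next = node
            self._tail = node
        self._count += 1

    def get(self, index):
        if not 0 <= index < self._count:
            raise IndexError("index out of range")
        node = self._head
        for _ in range(index):  # walk the links, O(n)
            node = node.next
        return node.value

    def size(self):
        return self._count


def third_item(lst: ListADT):
    """Client code written against the ADT; it works with either implementation."""
    return lst.get(2)
```

Because `third_item` depends only on the abstract interface, swapping one implementation for the other changes performance but not behavior.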

Measuring Efficiency: Time and Space Complexity (Big O Notation)

When evaluating data structures and the algorithms that operate on them, two key measures of efficiency are time complexity and space complexity. Time complexity refers to how the runtime of an operation or algorithm scales with the size of the input data. Space complexity refers to how the amount of memory used by an operation or algorithm scales with the size of the input data. Programmers strive to create solutions that are efficient in both time and space, although often there's a trade-off between the two.

Big O notation is the most common mathematical notation used to describe these complexities. It characterizes functions according to their growth rates: different functions with the same growth rate may be represented using the same O notation. Strictly speaking, Big O gives an asymptotic upper bound on a function's growth; in practice it is most often quoted for the worst-case scenario, although it can equally describe average-case or amortized cost. The focus is on how performance changes as the input size (usually denoted as 'n') grows very large. It ignores constant factors and lower-order terms because, for large inputs, the highest-order term dominates the growth rate.

Some common Big O complexities include:

- O(1) - Constant Time: The operation takes the same amount of time regardless of the input size. Accessing an element in an array by its index is typically O(1).

- O(log n) - Logarithmic Time: The time taken increases logarithmically with the input size. Binary search in a sorted array is an example. This is very efficient for large datasets.

- O(n) - Linear Time: The time taken increases linearly with the input size. Searching for an item in an unsorted list is often O(n).

- O(n log n) - Linearithmic Time: Common in efficient sorting algorithms like Merge Sort and Heap Sort.

- O(n^2) - Quadratic Time: The time taken increases with the square of the input size. Simpler sorting algorithms like Bubble Sort or Insertion Sort can have this complexity. Becomes very slow for large 'n'.

- O(2^n) - Exponential Time: The time taken doubles with each addition to the input data set. These algorithms are usually impractical for all but very small input sizes.

- O(n!) - Factorial Time: The time taken grows factorially with the input size. Extremely slow and only feasible for tiny 'n'.

Understanding Big O notation allows developers to analyze and compare different approaches to solving a problem. For instance, if one algorithm for a task has O(n^2) time complexity and another has O(n log n), the latter will generally be much faster for large inputs. This analysis is crucial for building scalable applications that can handle growing amounts of data and user traffic. It's a fundamental skill for anyone serious about software development and algorithm design.
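One way to see the difference between growth rates is to count loop iterations rather than measure wall-clock time. The sketch below compares an O(n) linear search with an O(log n) binary search on the same sorted data. The function names and the step-counting approach are assumptions made for illustration.

```python
def linear_search_steps(sorted_items, target):
    """Count how many elements a linear search inspects before stopping."""
    steps = 0
    for item in sorted_items:
        steps += 1
        if item == target:
            break
    return steps


def binary_search_steps(sorted_items, target):
    """Count how many probes a binary search makes before stopping."""
    steps = 0
    lo, hi = 0, len(sorted_items) - 1
    while lo <= hi:
        steps += 1
        mid = (lo + hi) // 2
        if sorted_items[mid] == target:
            break
        if sorted_items[mid] < target:
            lo = mid + 1   # discard the lower half
        else:
            hi = mid - 1   # discard the upper half
    return steps


data = list(range(1_000_000))
linear_steps = linear_search_steps(data, 999_999)   # inspects every element
binary_steps = binary_search_steps(data, 999_999)   # at most about 20 probes
```

For a million elements the linear search inspects all one million items in this worst case, while the binary search needs no more than about 20 probes, since log2(1,000,000) is just under 20.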

Memory Matters: Allocation and Data Organization

How data is stored and organized in a computer's memory is a critical aspect of data structures. Memory allocation refers to the process of assigning blocks of memory to programs and their data. There are primarily two types of memory allocation: static and dynamic.

Static memory allocation is decided at compile time: the size and type of memory needed are known before the program runs. Global and static variables are allocated this way and live for the whole run of the program. Local variables are placed on the call stack, which is strictly speaking automatic rather than static allocation: space is reserved when a function is called and released when it returns, but the size of each variable is still fixed at compile time. Arrays, when their size is fixed at compile time, are a common example of a data structure using this kind of allocation. The main advantage is speed and simplicity, as memory is allocated and deallocated automatically. However, the size must be known in advance and cannot easily change during runtime, which can lead to wasted space or insufficient space.

Dynamic memory allocation happens at runtime. Memory is allocated from a pool of available memory called the heap. This allows programs to request memory as needed and release it when it's no longer required. Data structures like linked lists, trees, and dynamically sized arrays (like Python lists or C++ vectors) often use dynamic memory allocation. This provides flexibility, as the data structure can grow or shrink based on the program's needs. However, in languages without automatic memory management, such as C, it comes with more responsibility for the programmer, who must explicitly allocate and deallocate this memory; garbage-collected languages such as Python and Java reclaim unreachable memory automatically. Failure to deallocate memory can lead to memory leaks, where unused memory is not returned to the system, eventually causing the program or system to run out of memory. Conversely, trying to access deallocated memory can lead to crashes or unpredictable behavior.

The organization of data within these allocated memory blocks is what defines the structure.

- Contiguous Organization: Data elements are stored in adjacent memory locations. Arrays are the prime example. This allows for efficient access to elements using an index because the address of any element can be calculated directly from the base address and the index. However, insertions and deletions in the middle of a contiguous structure can be expensive, as they may require shifting many elements.

- Linked Organization: Data elements (often called nodes) are stored in arbitrary memory locations, and each element contains a pointer (or link) to the next element (and sometimes the previous one). Linked lists and trees use this approach. This allows for efficient insertions and deletions, as only pointers need to be updated. However, accessing an element by its position can be slower, as it may require traversing the structure from the beginning.

Understanding memory allocation and organization principles is crucial for choosing the right data structure and for writing efficient, bug-free code, especially in languages like C or C++ where manual memory management is prevalent.

Understanding Change: Mutability and Persistence in Data Structures

Mutability and persistence are two important characteristics that describe how data structures behave with respect to changes and time. Understanding these concepts helps in designing predictable and robust systems, especially in concurrent environments or when needing to track history.

Mutability refers to whether a data structure can be changed after it is created.

- A mutable data structure can be modified in place. For example, if you have a mutable list, you can add elements, remove elements, or change existing elements directly within that list object. Most common data structures like arrays, standard lists in Python, and hash maps are mutable by default in many programming languages. While mutability offers flexibility and can be efficient for in-place updates, it can also lead to complexities, especially in concurrent programming where multiple threads might try to modify the same data structure simultaneously, or when a reference to a mutable object is shared and an unexpected modification occurs.

- An immutable data structure, once created, cannot be changed. Any operation that appears to "modify" an immutable data structure actually creates and returns a new data structure with the change, leaving the original untouched. Strings in many languages (like Java and Python) and tuples in Python are examples of immutable data structures. Immutability offers several advantages: immutable structures are inherently thread-safe (since they can't be changed, there are no race conditions), they are simpler to reason about (their state is fixed), and they can be useful for caching or representing values that should not change. However, frequent "modifications" can be less efficient as they involve creating new objects.
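The contrast between the two bullets above can be seen with Python's built-in `list` (mutable) and `tuple` (immutable). The variable names are illustrative only.

```python
scores = [90, 85]
alias = scores           # both names refer to the same list object
alias.append(70)         # the in-place change is visible through both names

point = (1, 2)
moved = point + (3,)     # builds a new tuple; `point` itself is unchanged

tuple_is_immutable = False
try:
    point[0] = 99        # tuples reject item assignment
except TypeError:
    tuple_is_immutable = True
```

After this runs, `scores` also contains 70 even though only `alias` was appended to, which is the kind of unexpected shared modification the mutable bullet warns about.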

Persistence in the context of data structures (not to be confused with data persistence like saving to a disk) refers to the ability of a data structure to preserve its previous versions when it is modified.

- In a partially persistent data structure, all versions can be accessed, but only the newest version can be modified.

- In a fully persistent data structure, every version can be both accessed and modified.

Persistent data structures are effectively immutable in their core, as modifications always yield new versions. They are particularly useful in functional programming and in applications requiring undo/redo functionality, version control, or maintaining historical states of data without extensive copying. Techniques like path copying in trees allow for efficient creation of new versions by sharing unchanged parts of the structure.
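Path copying can be sketched with a small persistent binary search tree. This is a minimal, unbalanced illustration rather than a production design; the `Node` type and the `insert` and `contains` helpers are assumptions introduced for this example.

```python
from typing import NamedTuple, Optional


class Node(NamedTuple):
    """An immutable tree node; NamedTuple fields cannot be reassigned."""
    key: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def insert(root, key):
    """Return the root of a new version; the old version stays valid."""
    if root is None:
        return Node(key)
    if key < root.key:
        # Copy this node, rebuild only the left path, share the right subtree.
        return Node(root.key, insert(root.left, key), root.right)
    if key > root.key:
        # Copy this node, rebuild only the right path, share the left subtree.
        return Node(root.key, root.left, insert(root.right, key))
    return root  # key already present: reuse the existing version


def contains(root, key):
    while root is not None:
        if key == root.key:
            return True
        root = root.left if key < root.key else root.right
    return False


v1 = None
for k in (50, 30, 70):
    v1 = insert(v1, k)

v2 = insert(v1, 20)   # new version; only the nodes on the path 50 -> 30 are copied
```

Here `v1` still does not contain 20, while `v2` does, and `v2.right` is the very same object as `v1.right`, so the untouched subtree is shared rather than copied.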

The choice between mutable and immutable, or the decision to use persistent data structures, depends on the specific requirements of the application, including performance needs, concurrency considerations, and the need for historical data tracking. Many modern programming paradigms and libraries increasingly favor immutability and persistence for building more robust and predictable software.

Exploring the Landscape: Types of Data Structures

The world of data structures is vast and varied, offering a diverse toolkit for programmers to organize and manage data. These structures can be broadly categorized based on their organization, how they store elements, and the relationships between those elements. Understanding these different types allows developers to select the most efficient structure for the task at hand, optimizing for speed, memory usage, or specific operational needs. From simple building blocks to complex arrangements, each type serves distinct purposes in the realm of software development.

In this section, we'll navigate through this landscape, starting with the fundamental distinction between primitive and composite structures. We will then delve into linear structures like arrays, linked lists, stacks, and queues, where elements are arranged sequentially. Following that, we'll explore non-linear structures such as trees, graphs, and heaps, which represent more complex relationships. Finally, we'll examine hash-based structures, known for their efficient data retrieval capabilities, and the common challenge of collision resolution.

Building Blocks: Primitive vs. Composite Structures

Data structures can be fundamentally classified into two main categories: primitive and composite (or non-primitive) data structures. This distinction is based on how they are constructed and the nature of the data they hold.

Primitive Data Structures are the most basic data types that are directly supported by a programming language. They are the fundamental building blocks for all other data structures and typically hold a single, simple value. Examples of primitive data types include:

- Integers: Whole numbers (e.g., 5, -10, 0).

- Floating-point numbers: Numbers with a decimal point (e.g., 3.14, -0.001).

- Characters: Single letters, symbols, or numbers represented as characters (e.g., 'a', '$', '7').

- Booleans: Logical values representing true or false.

These types are "primitive" because their representation usually maps directly onto what the hardware supports (for example, a machine word or a floating-point register), and they cannot be broken down into simpler data types. Operations on primitive data types are generally very fast and efficient.

Composite Data Structures (also known as non-primitive or compound data structures) are more complex. They are derived from primitive data structures and are designed to store a collection of values, which can be of the same type or different types. These structures define a particular way of organizing and accessing these collections. Composite data structures can be further classified into linear and non-linear structures. Examples include:

- Arrays: Collections of elements of the same type stored in contiguous memory locations.

- Linked Lists: Collections of elements (nodes) where each node contains data and a reference (or link) to the next node in the sequence.

- Stacks: Linear structures that follow a Last-In, First-Out (LIFO) principle.

- Queues: Linear structures that follow a First-In, First-Out (FIFO) principle.

- Trees: Hierarchical structures consisting of nodes connected by edges.

- Graphs: Collections of nodes (vertices) connected by edges, representing relationships between entities.

- Hash Tables: Structures that map keys to values for efficient lookups.

Understanding this basic classification is important because composite data structures are built using primitive types. The way these primitives are combined and the rules governing their organization and access define the characteristics and utility of each composite data structure.

Following a Line: Linear Structures (Arrays, Linked Lists, Stacks, Queues)

Linear data structures are characterized by elements arranged in a sequential or linear manner. Each element is connected to its previous and next elements, forming a straight line of data. This sequential arrangement dictates how data is accessed and processed. Let's explore some of the most common linear data structures:

Arrays: An array is a collection of items of the same data type stored at contiguous memory locations. This contiguity allows for constant-time access (O(1)) to any element if its index is known. Arrays are simple to understand and implement, making them a fundamental data structure. However, their size is often fixed at the time of creation, which can lead to inefficiencies if the number of elements changes frequently (requiring resizing, which can be costly) or if the allocated size is much larger than needed (wasting memory). Inserting or deleting elements in the middle of an array can also be slow (O(n)) because subsequent elements may need to be shifted.

Linked Lists: A linked list is a linear data structure where elements are not stored at contiguous memory locations. Instead, each element (called a node) consists of two parts: the data itself and a reference (or pointer) to the next node in the sequence. This structure allows for dynamic sizing, as nodes can be added or removed easily without reallocating the entire structure. Insertions and deletions, especially at the beginning or end, can be very efficient (O(1)) if you have a pointer to the relevant location. However, accessing an element by its index requires traversing the list from the beginning (O(n)), which is slower than array access. Variations include doubly linked lists (where each node also points to the previous node) and circular linked lists (where the last node points back to the first).
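The difference between inserting into a linked list and inserting into an array can be sketched as follows. The `Node` class and the helpers `insert_after` and `to_list` are hypothetical names used only for this example; Python's built-in `list` stands in for the array.

```python
class Node:
    def __init__(self, value, next=None):
        self.value = value
        self.next = next


def insert_after(node, value):
    """O(1): create one node and rewire a single reference."""
    node.next = Node(value, node.next)


def to_list(head):
    """Walk the chain from the head, collecting values; O(n)."""
    out = []
    while head is not None:
        out.append(head.value)
        head = head.next
    return out


head = Node("a", Node("c"))
insert_after(head, "b")          # no other node is touched
linked_values = to_list(head)    # ["a", "b", "c"]

array = ["a", "c"]
array.insert(1, "b")             # later elements are shifted one slot to the right
```

Both end up holding the same sequence. The linked version avoids shifting but needs a reference to the node before the insertion point, and reaching that node by position still takes a traversal.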

Stacks: A stack is an abstract data type that serves as a collection of elements, with two principal operations: push, which adds an element to the collection, and pop, which removes the most recently added element that was not yet removed. This behavior is known as Last-In, First-Out (LIFO). Think of a stack of plates: you add plates to the top and remove plates from the top. Stacks are used in many computing applications, such as managing function calls (the call stack), parsing expressions (infix to postfix conversion), and implementing undo mechanisms.

Queues: A queue is another abstract data type that serves as a collection of elements, with two principal operations: enqueue, which adds an element to the rear of the collection, and dequeue, which removes an element from the front of the collection. This behavior is known as First-In, First-Out (FIFO). Imagine a queue of people waiting for a bus: the first person to join the queue is the first person to get on the bus. Queues are widely used in scenarios like task scheduling in operating systems, managing requests in web servers, and breadth-first search in graphs.
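In Python, a plain `list` is a common choice for a stack and `collections.deque` for a queue; the sketch below mirrors the plate and bus-queue analogies above. The variable names are illustrative.

```python
from collections import deque

# Stack: push and pop both happen at the same end (the top).
stack = []
stack.append("plate 1")    # push
stack.append("plate 2")    # push
top = stack.pop()          # pop returns the most recently added item

# Queue: enqueue at the rear, dequeue from the front.
queue = deque()
queue.append("first rider")     # enqueue
queue.append("second rider")    # enqueue
front = queue.popleft()         # dequeue returns the earliest added item
```

A `deque` is used for the queue because removing from the front of a Python `list` with `pop(0)` shifts every remaining element, whereas `popleft()` does not.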

Each of these linear structures provides a different set of trade-offs regarding access patterns, modification efficiency, and memory usage, making them suitable for different types of problems.

Branching Out: Non-Linear Structures (Trees, Graphs, Heaps)

Non-linear data structures are those where data elements are not arranged in a sequential manner. Instead, elements can be connected to multiple other elements, representing hierarchical or network-like relationships. This allows for more complex connections and often more efficient ways to handle certain types of data and operations compared to linear structures.

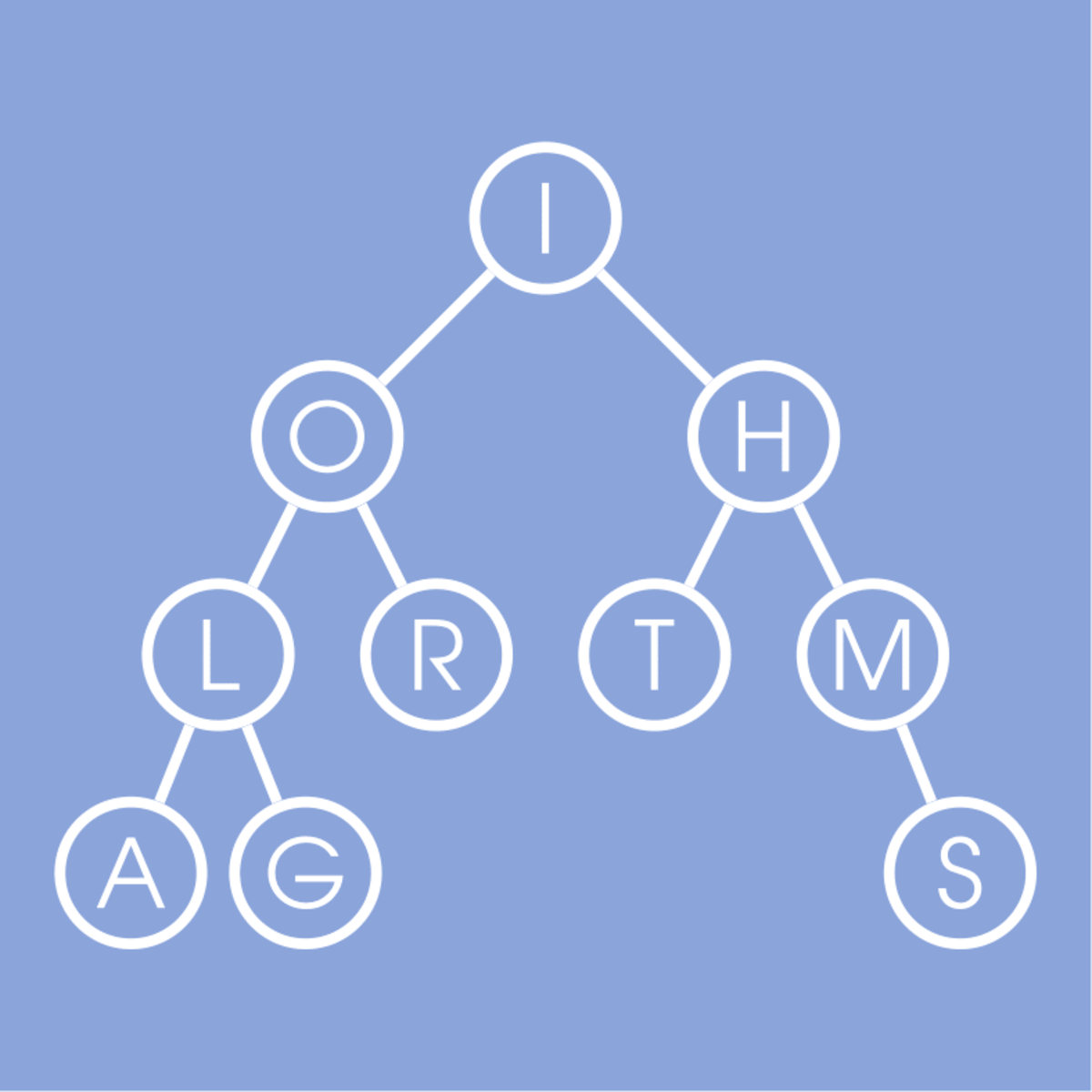

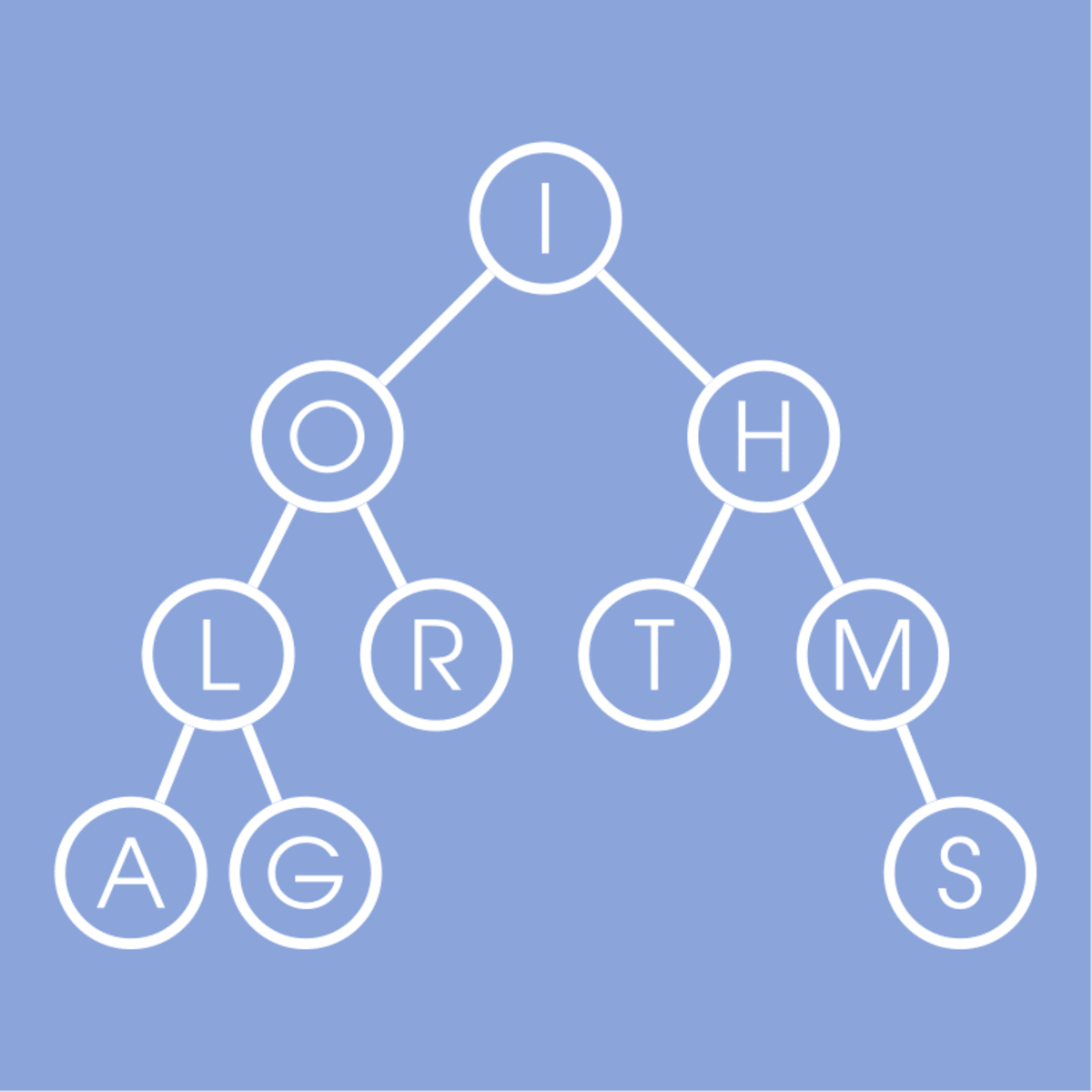

Trees: A tree is a hierarchical data structure consisting of a set of linked nodes, where one node is designated as the root, and all other nodes are descendants of the root. Each node can have zero or more child nodes, and nodes with no children are called leaf nodes. Trees are used to represent hierarchical relationships, such as file systems, organization charts, or the structure of an XML document. Common types of trees include:

- Binary Trees: Each node has at most two children (a left child and a right child).

- Binary Search Trees (BSTs): A binary tree where for each node, all values in its left subtree are less than its own value, and all values in its right subtree are greater. BSTs allow for efficient searching, insertion, and deletion: O(log n) when the tree is reasonably balanced, degrading to O(n) in the worst case when the tree becomes skewed (for example, when keys are inserted in sorted order).

- Balanced Trees (e.g., AVL Trees, Red-Black Trees): These are BSTs that automatically maintain a certain level of balance to ensure that operations remain efficient (worst-case O(log n)) even with many insertions and deletions.
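Why balance matters can be shown with a plain, non-self-balancing binary search tree: the same seven keys produce very different heights depending on insertion order. The `BSTNode` class and the `bst_insert`, `build`, and `height` helpers are assumptions made for this sketch, and no rebalancing (as an AVL or Red-Black tree would perform) is implemented.

```python
class BSTNode:
    def __init__(self, key):
        self.key = key
        self.left = None
        self.right = None


def bst_insert(root, key):
    """Insert key into an unbalanced BST and return the (possibly new) root."""
    if root is None:
        return BSTNode(key)
    if key < root.key:
        root.left = bst_insert(root.left, key)
    elif key > root.key:
        root.right = bst_insert(root.right, key)
    return root  # duplicates are ignored


def height(root):
    """Number of nodes on the longest root-to-leaf path."""
    if root is None:
        return 0
    return 1 + max(height(root.left), height(root.right))


def build(keys):
    root = None
    for key in keys:
        root = bst_insert(root, key)
    return root


skewed = build([1, 2, 3, 4, 5, 6, 7])     # sorted input: every node hangs to the right
bushy = build([4, 2, 6, 1, 3, 5, 7])      # same keys, middle-first order

skewed_height = height(skewed)   # 7: a search may visit every node
bushy_height = height(bushy)     # 3: a search visits at most 3 nodes
```

Self-balancing trees exist to keep the height near the smaller figure regardless of the order in which keys arrive.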

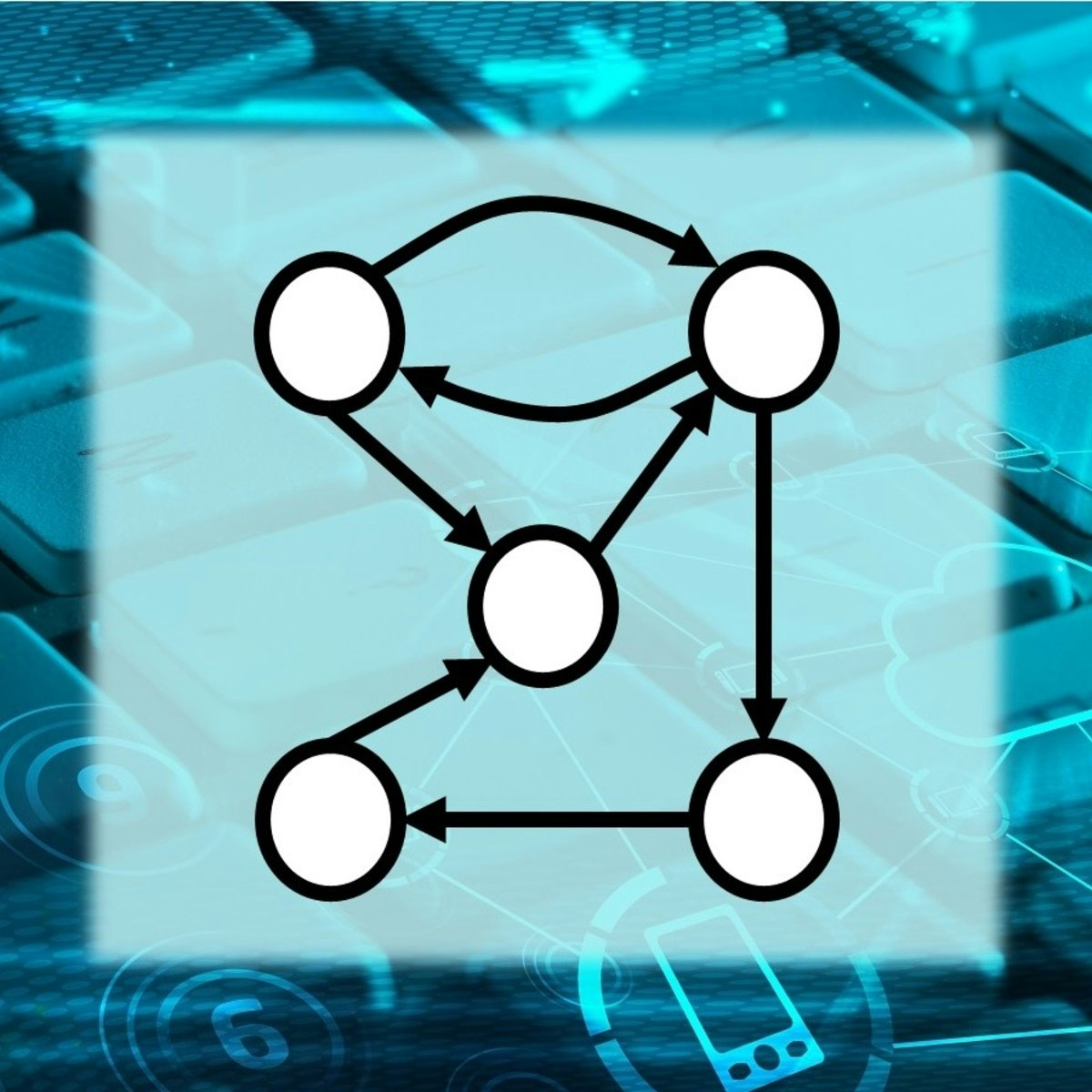

Graphs: A graph is a collection of nodes (also called vertices) and edges that connect pairs of nodes. Unlike trees, graphs do not necessarily have a root or a hierarchical structure; connections can be arbitrary, and cycles (paths that start and end at the same node) are common. Graphs are incredibly versatile and are used to model a wide variety of real-world systems, such as social networks (nodes are people, edges are friendships), road networks (nodes are intersections, edges are roads), and the internet (nodes are web pages, edges are links). Graphs can be directed (edges have a direction) or undirected (edges have no direction), and edges can have weights (representing costs, distances, etc.). Common graph algorithms include those for finding shortest paths (e.g., Dijkstra's algorithm), traversing the graph (e.g., Breadth-First Search, Depth-First Search), and finding minimum spanning trees (e.g., Kruskal's or Prim's algorithm). You can explore Mathematics to understand the theoretical underpinnings of graph theory.
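A common way to represent a graph in code is an adjacency list, and Breadth-First Search is a short traversal to write on top of it. In the sketch below the dictionary-of-lists format, the function name `bfs_order`, and the small friendship network are all assumptions for illustration.

```python
from collections import deque


def bfs_order(graph, start):
    """Return nodes in the order Breadth-First Search visits them."""
    visited = {start}
    order = []
    queue = deque([start])
    while queue:
        node = queue.popleft()
        order.append(node)
        for neighbor in graph[node]:
            if neighbor not in visited:   # the visited set prevents looping on cycles
                visited.add(neighbor)
                queue.append(neighbor)
    return order


# Undirected graph: each friendship is listed in both directions.
friends = {
    "Ana": ["Ben", "Cy"],
    "Ben": ["Ana", "Dee"],
    "Cy": ["Ana", "Dee"],
    "Dee": ["Ben", "Cy"],
}
order = bfs_order(friends, "Ana")
# order == ["Ana", "Ben", "Cy", "Dee"]
```

This graph contains a cycle (Ana, Ben, Dee, Cy, and back to Ana), which is why the traversal tracks visited nodes; a tree traversal would not need to.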

Heaps: A heap is a specialized tree-based data structure that satisfies the heap property: in a max-heap, for any given node C, if P is a parent node of C, then the key (the value) of P is greater than or equal to the key of C. In a min-heap, the key of P is less than or equal to the key of C. Heaps are commonly implemented as binary trees and are particularly useful for implementing priority queues, where elements with higher (or lower) priority are processed first. They offer efficient insertion (O(log n)) and extraction of the minimum/maximum element (O(log n)). Heapsort is also a well-known sorting algorithm that uses a heap.
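As a small illustration of the heap property and the priority-queue use case, the sketch below relies on Python's standard `heapq` module, which maintains a binary min-heap inside an ordinary list. The task names and the helpers `is_min_heap` and `heap_sorted` are made up for the example.

```python
import heapq


def is_min_heap(a):
    """Check the min-heap property for an array-backed binary heap.

    The parent of index i lives at index (i - 1) // 2.
    """
    return all(a[(i - 1) // 2] <= a[i] for i in range(1, len(a)))


def heap_sorted(values):
    """Return the values in ascending order by repeatedly popping the minimum.

    This mirrors the idea behind heapsort, but it is not in-place.
    """
    heap = list(values)
    heapq.heapify(heap)  # builds the heap in O(n)
    return [heapq.heappop(heap) for _ in range(len(heap))]


# A priority queue: lower number means higher priority.
tasks = []
heapq.heappush(tasks, (2, "write report"))
heapq.heappush(tasks, (1, "fix outage"))
heapq.heappush(tasks, (3, "refactor"))

first = heapq.heappop(tasks)  # (1, "fix outage")
```

Because `heapq` only provides a min-heap, a common workaround for max-heap behavior is to push negated priorities.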

These non-linear structures open up powerful ways to model and solve complex problems that are difficult to address with linear structures alone.


Efficient Lookups: Hash-Based Structures and Collision Resolution

Hash-based data structures, most notably hash tables (also known as hash maps or dictionaries in some languages), are designed for extremely efficient lookups, insertions, and deletions. On average, these operations can be performed in constant time, O(1), which is significantly faster than the O(log n) or O(n) times offered by many other structures, especially for large datasets.

The core idea behind a hash table is a hash function. A hash function takes an input (a key, which can be of various types like a string or number) and computes an integer value called a hash code. This hash code is then typically mapped to an index in an array (often called buckets or slots). The value associated with the key is then stored at this calculated index. When you want to retrieve a value, you apply the same hash function to the key, get the index, and directly access the value. This direct computation of the index is what allows for the O(1) average-case performance.

However, a major challenge with hash tables is collisions. A collision occurs when two different keys produce the same hash code (and thus map to the same array index). Since two distinct items cannot be stored in the exact same spot, strategies are needed to handle these collisions. This is known as collision resolution. Common collision resolution techniques include:

- Chaining (or Separate Chaining): Each bucket in the array, instead of storing a single value, stores a pointer to a linked list (or another data structure) of all key-value pairs that hash to that index. When a collision occurs, the new key-value pair is simply added to this linked list. Lookups involve hashing to the index and then searching through the (hopefully short) linked list at that bucket.

- Open Addressing (or Closed Hashing): All key-value pairs are stored directly within the array itself. When a collision occurs, the algorithm probes for the next available empty slot in the array according to a predefined sequence. Common probing strategies include:

  - Linear Probing: Sequentially checks the next slot, then the next, and so on, wrapping around if necessary.

  - Quadratic Probing: Checks slots at offsets that increase quadratically (e.g., index + 1², index + 2², index + 3²).

  - Double Hashing: Uses a second hash function to determine the step size for probing.

The choice of hash function and collision resolution strategy significantly impacts the performance of a hash table. A good hash function distributes keys uniformly across the buckets, minimizing collisions. Effective collision resolution ensures that even when collisions do occur, performance degradation is graceful. Poor choices can lead to clustering (where many keys map to nearby slots), degrading performance towards O(n) in the worst case. Despite this, well-implemented hash tables are a cornerstone of efficient data management in countless applications, including database indexing, caching, and symbol tables in compilers.
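To make separate chaining concrete, here is a minimal sketch of a chained hash table. It is illustrative only: the class name `ChainedHashTable` is hypothetical, each chain is a plain Python list rather than a linked list, the built-in `hash()` stands in for the hash function, and the table never resizes (a production table would grow as its load factor rises).

```python
class ChainedHashTable:
    """Hash table with separate chaining; each bucket holds (key, value) pairs."""

    def __init__(self, num_buckets=8):
        self._buckets = [[] for _ in range(num_buckets)]
        self._size = 0

    def _index(self, key):
        # Map the hash code onto a bucket index.
        return hash(key) % len(self._buckets)

    def put(self, key, value):
        bucket = self._buckets[self._index(key)]
        for i, (existing_key, _) in enumerate(bucket):
            if existing_key == key:
                bucket[i] = (key, value)  # overwrite an existing key
                return
        bucket.append((key, value))  # new key (possibly a collision): extend the chain
        self._size += 1

    def get(self, key, default=None):
        # Hash to the bucket, then scan its (hopefully short) chain.
        for existing_key, value in self._buckets[self._index(key)]:
            if existing_key == key:
                return value
        return default

    def __len__(self):
        return self._size


# Two buckets for ten keys forces collisions, yet lookups stay correct.
table = ChainedHashTable(num_buckets=2)
for i in range(10):
    table.put(i, i * i)
```

With only two buckets every chain here holds five entries, so lookups are effectively linear scans. That is the degradation toward O(n) described above, and it is why real implementations keep the bucket count proportional to the number of stored keys.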


The Interplay: Algorithms and Data Structures

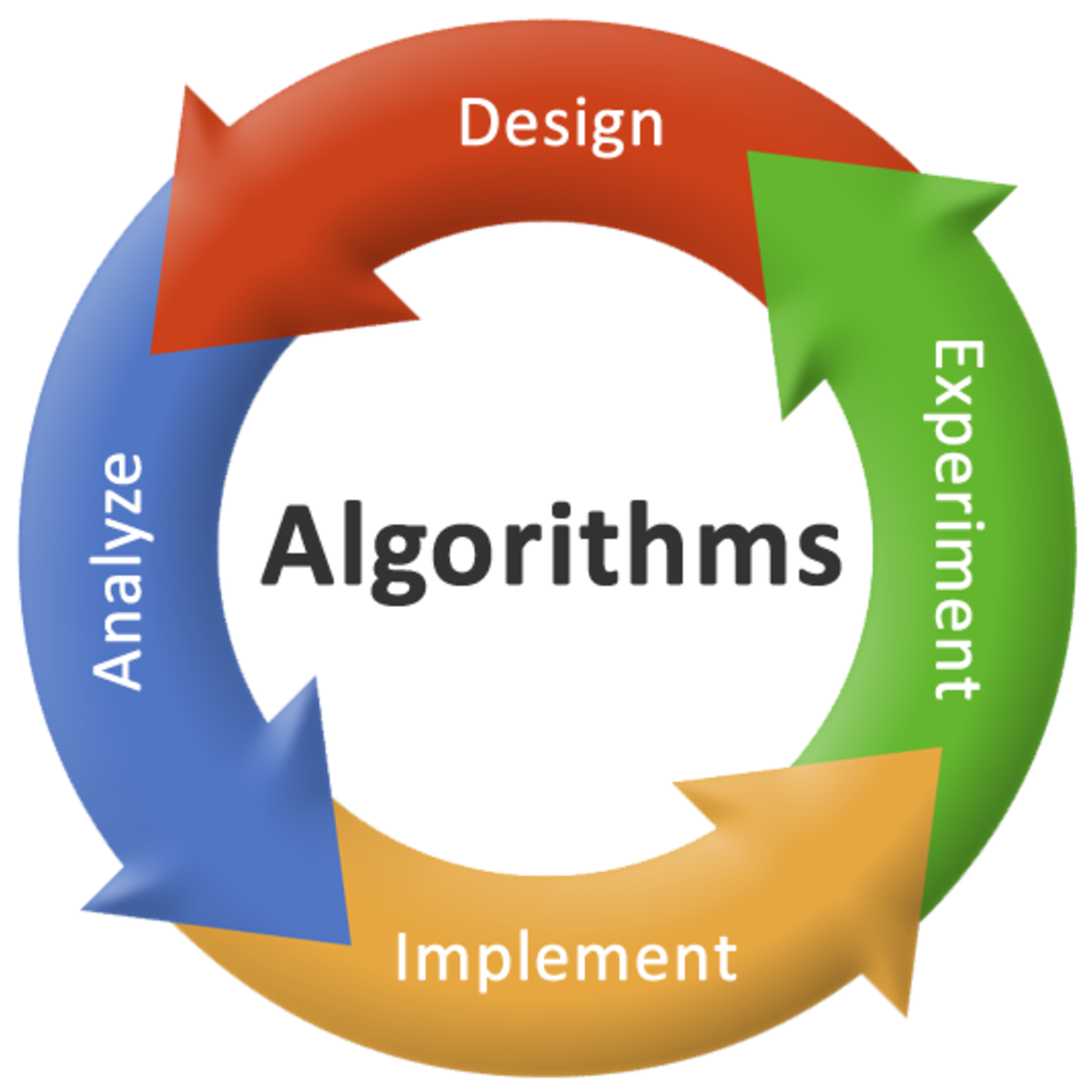

Data structures and algorithms are inextricably linked; they are two sides of the same coin in the world of computer science. A data structure is a way to store and organize data, while an algorithm is a set of instructions or rules designed to perform a specific task, often on that data. The choice of data structure directly impacts which algorithms can be used and how efficient those algorithms will be. Conversely, the requirements of an algorithm can dictate the choice of data structure.

Imagine trying to find a specific word in a dictionary. If the dictionary (our data structure) is sorted alphabetically, a binary search algorithm (which repeatedly divides the search interval in half) is very efficient. If the words were randomly ordered, you'd have to use a linear search (checking each word one by one), which is much slower for a large dictionary. This simple example illustrates the symbiotic relationship: the sorted nature of the dictionary enables the efficient binary search algorithm.

This section explores this critical interplay, examining how data structures underpin common algorithmic tasks like searching and sorting, how specific structures enable powerful graph traversal techniques, the role of data structures in optimization strategies like dynamic programming, and considerations for designing data structures in concurrent environments. Understanding this relationship is key to developing efficient and effective software solutions. You may also want to explore Algorithms as a broader topic of study.

Partners in Efficiency: Searching and Sorting Algorithm Dependencies

Searching and sorting are two of the most fundamental operations in computer science, and their efficiency is heavily dependent on the underlying data structures used to store the data. The way data is organized directly influences how quickly we can find a particular item or arrange the entire dataset in a specific order.

Searching Algorithms: The goal of a searching algorithm is to find a specific element (the target) within a collection of elements.

- With an unsorted array or linked list, the most straightforward approach is a linear search, which checks each element one by one until the target is found or the end of the collection is reached. This has a time complexity of O(n) in the worst case.

- If the data is stored in a sorted array, a much more efficient binary search can be used. Binary search repeatedly divides the search interval in half, achieving an O(log n) time complexity. This highlights how a sorted data structure enables a more efficient algorithm.

- Hash tables are designed for fast searching. By using a hash function to map keys to indices, they can achieve average O(1) search times, making them ideal when search speed is paramount.

- Binary Search Trees (BSTs), especially balanced ones like AVL trees or Red-Black trees, offer O(log n) search times. They provide a dynamic alternative to sorted arrays, allowing efficient insertions and deletions while maintaining search performance.
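The contrast between linear and binary search can be sketched as follows. Both functions return the index of the target or -1 if it is absent; the function names are illustrative, and `binary_search` assumes its input is already sorted in ascending order.

```python
def linear_search(items, target):
    """Check every element in turn: O(n) comparisons in the worst case."""
    for i, item in enumerate(items):
        if item == target:
            return i
    return -1


def binary_search(sorted_items, target):
    """Halve the search interval each step: O(log n) comparisons."""
    lo, hi = 0, len(sorted_items) - 1
    while lo <= hi:
        mid = (lo + hi) // 2
        if sorted_items[mid] == target:
            return mid
        if sorted_items[mid] < target:
            lo = mid + 1  # target can only be in the right half
        else:
            hi = mid - 1  # target can only be in the left half
    return -1


primes = [2, 3, 5, 7, 11, 13]
```

For a million sorted elements, binary search needs at most about 20 comparisons, whereas linear search may need a million. In everyday Python code, the standard `bisect` module offers the same halving strategy for sorted lists.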

Sorting Algorithms: Sorting algorithms arrange elements in a specific order (e.g., ascending or descending).

- Many sorting algorithms, like Bubble Sort, Insertion Sort, and Selection Sort, operate directly on arrays. Their efficiency varies (often O(n²) in the worst or average case), but their implementation is relatively simple.

- More efficient array-based sorting algorithms like Merge Sort and Quicksort run in O(n log n) time on average. Merge Sort guarantees O(n log n) even in the worst case and is stable, at the cost of O(n) extra space; Quicksort sorts in place and is memory efficient, but can degrade to O(n²) with unfavorable pivot choices.

- Heapsort uses a heap data structure (typically a binary heap implemented with an array) to sort elements in O(n log n) time. It's an in-place algorithm.

- Specialized sorting algorithms like Counting Sort or Radix Sort can be even faster (e.g., O(n+k) or O(nk)) for specific types of data (like integers within a known range) by leveraging properties of the data itself, often using auxiliary array structures.

The choice of data structure thus predetermines or heavily influences the feasible and efficient searching and sorting algorithms. A programmer must consider the expected operations on the data (how often will it be searched? sorted? modified?) when selecting a data structure to ensure optimal overall performance.
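Two of the approaches above can be sketched briefly: a comparison-based Merge Sort and a Counting Sort that exploits a known integer range. These are simplified teaching versions, not tuned implementations; `counting_sort` assumes every input is an integer in the range 0 to k - 1.

```python
def merge_sort(items):
    """Stable O(n log n) sort that returns a new list."""
    if len(items) <= 1:
        return list(items)
    mid = len(items) // 2
    left, right = merge_sort(items[:mid]), merge_sort(items[mid:])
    merged, i, j = [], 0, 0
    while i < len(left) and j < len(right):
        # Using <= keeps equal elements in their original order (stability).
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


def counting_sort(items, k):
    """Sort integers in range(k) in O(n + k) time using an auxiliary count array."""
    counts = [0] * k
    for x in items:
        counts[x] += 1
    result = []
    for value, count in enumerate(counts):
        result.extend([value] * count)
    return result
```

Counting Sort never compares two elements with each other, which is how it sidesteps the O(n log n) lower bound that applies to comparison-based sorting.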


For a foundational text that details many of these algorithms and their analysis, "Introduction to Algorithms" is an excellent resource.

Navigating Networks: Graph Traversal Algorithms (BFS/DFS)

Graph traversal algorithms are fundamental techniques for systematically visiting all the nodes in a graph. These algorithms are crucial for solving a wide array of problems related to networks, such as finding paths, checking connectivity, identifying cycles, and forming the basis for more complex graph algorithms. The two most common graph traversal algorithms are Breadth-First Search (BFS) and Depth-First Search (DFS). The way a graph is represented (e.g., adjacency list or adjacency matrix) can impact the implementation efficiency of these traversals.

Breadth-First Search (BFS): BFS explores the graph layer by layer. It starts at a given source node and visits all its immediate neighbors first. Then, for each of those neighbors, it visits their unvisited neighbors, and so on. This process continues until all reachable nodes from the source have been visited. BFS typically uses a queue data structure to keep track of the nodes to visit next. Applications of BFS:

- Finding the shortest path between two nodes in an unweighted graph (in terms of the number of edges).

- Web crawlers use BFS to explore websites level by level.

- Detecting cycles in an undirected graph.

- Used in algorithms like Cheney's algorithm for garbage collection.

- Network broadcasting or finding all connected components of a graph.

Depth-First Search (DFS): DFS explores the graph by going as deep as possible along each branch before backtracking. It starts at a given source node, explores one of its neighbors, then explores one of that neighbor's neighbors, and so on, until it reaches a node with no unvisited neighbors or a dead end. Then, it backtracks to the previous node and explores another unvisited branch. DFS typically uses a stack data structure (either explicitly or implicitly via recursion using the call stack) to keep track of the nodes to visit. Applications of DFS:

- Detecting cycles in a directed or undirected graph.

- Topological sorting of a directed acyclic graph (DAG).

- Finding connected components or strongly connected components in a graph.

- Solving puzzles with only one solution, such as mazes (DFS explores one path to its end).

- Pathfinding algorithms.

The choice between BFS and DFS depends on the problem at hand. If you need to find the shortest path in terms of edges or explore layer by layer, BFS is generally preferred. If you need to explore a path to its full depth, check for cycles, or perform topological sorting, DFS is often more suitable. Both algorithms have a time complexity of O(V + E), where V is the number of vertices and E is the number of edges, when implemented with an adjacency list representation of the graph. Data structures (queues for BFS, stacks for DFS) are integral to their operation.
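The role of the queue and the stack can be seen in a short sketch. The graph below is a made-up example stored as an adjacency list (a dictionary mapping each node to its list of neighbors), and the function names are illustrative.

```python
from collections import deque


def bfs_order(graph, source):
    """Visit nodes layer by layer using a FIFO queue."""
    visited = {source}
    order = []
    queue = deque([source])
    while queue:
        node = queue.popleft()
        order.append(node)
        for neighbor in graph[node]:
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(neighbor)
    return order


def dfs_order(graph, source):
    """Follow one branch as deep as possible using an explicit LIFO stack."""
    visited = set()
    order = []
    stack = [source]
    while stack:
        node = stack.pop()
        if node in visited:
            continue
        visited.add(node)
        order.append(node)
        # Push in reverse so neighbors are explored in their listed order.
        for neighbor in reversed(graph[node]):
            if neighbor not in visited:
                stack.append(neighbor)
    return order


graph = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A", "D"],
    "D": ["B", "C", "E"],
    "E": ["D"],
}

print(bfs_order(graph, "A"))  # ['A', 'B', 'C', 'D', 'E']
print(dfs_order(graph, "A"))  # ['A', 'B', 'D', 'C', 'E']
```

The only structural difference between the two functions is the container: swapping the queue for a stack changes the visiting order from breadth-first to depth-first. Each node and each edge is handled a constant number of times, which matches the O(V + E) bound.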


Optimization Strategies: Dynamic Programming and Memoization

Dynamic Programming (DP) is a powerful algorithmic technique used for solving complex problems by breaking them down into simpler, overlapping subproblems. The key idea is to solve each subproblem only once and store its result, typically in a data structure like an array or a hash table, so that it can be reused if the same subproblem is encountered again. This avoidance of recomputing solutions to subproblems is what makes DP efficient for problems that exhibit overlapping subproblems and optimal substructure (where the optimal solution to the overall problem can be constructed from optimal solutions to its subproblems).

Memoization is a specific optimization strategy often used in conjunction with a top-down (recursive) approach to dynamic programming. In memoization, the results of expensive function calls (solutions to subproblems) are cached (or "memoized"). When the function is called again with the same inputs, the cached result is returned immediately instead of recomputing it. This is typically implemented by using a lookup table (often an array or a hash map) to store the results of already solved subproblems. If a subproblem's result is not in the table, it is computed, stored in the table, and then returned. This approach preserves the natural recursive structure of the problem while gaining the efficiency benefits of DP.

Data structures play a crucial role in both dynamic programming and memoization:

- Arrays (1D, 2D, or multi-dimensional): These are commonly used to store the solutions to subproblems in a bottom-up DP approach (also known as tabulation). The dimensions of the array often correspond to the parameters that define the subproblems. For example, in the classic knapsack problem, a 2D array might store the maximum value achievable for different item counts and capacities.

- Hash Tables (or Dictionaries): These are frequently used in memoization with a top-down recursive approach. The keys of the hash table might represent the parameters of a subproblem (e.g., a tuple of input values), and the values would be the computed solutions. Hash tables are useful when the subproblem space is sparse or not easily mapped to array indices.

- Other Structures: Depending on the problem, other data structures like trees or even custom structures might be used to organize and retrieve subproblem solutions.

For example, calculating the nth Fibonacci number can be done efficiently using DP. A naive recursive approach has exponential time complexity due to recomputing the same Fibonacci numbers multiple times. With memoization, each Fibonacci number F(i) is computed once and stored. Subsequent requests for F(i) retrieve the stored value. A bottom-up DP approach would build an array, iteratively calculating F(i) from F(i-1) and F(i-2).
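The three Fibonacci variants just described might look like this. It is a minimal sketch using the convention F(0) = 0 and F(1) = 1; the memoized version uses a dictionary (a hash table) as its lookup table, and the bottom-up version uses a list.

```python
def fib_naive(n):
    """Plain recursion: recomputes the same subproblems, exponential time."""
    if n < 2:
        return n
    return fib_naive(n - 1) + fib_naive(n - 2)


def fib_memo(n, cache=None):
    """Top-down DP: each F(i) is computed once and stored in a dict."""
    if cache is None:
        cache = {}
    if n < 2:
        return n
    if n not in cache:
        cache[n] = fib_memo(n - 1, cache) + fib_memo(n - 2, cache)
    return cache[n]


def fib_table(n):
    """Bottom-up DP (tabulation): fill an array from the base cases upward."""
    if n < 2:
        return n
    table = [0] * (n + 1)
    table[1] = 1
    for i in range(2, n + 1):
        table[i] = table[i - 1] + table[i - 2]
    return table[n]


print(fib_memo(50))   # 12586269025
print(fib_table(50))  # 12586269025
```

Calling `fib_naive(50)` would take an impractically long time, while both DP versions finish almost instantly. Since each table entry depends only on the previous two, the bottom-up version could keep just two variables instead of the full array. Python's `functools.lru_cache` decorator offers a ready-made way to memoize a function.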

Dynamic programming and memoization are powerful tools for optimizing algorithms that would otherwise be too slow. The effective use of data structures to store and retrieve intermediate results is central to their success. Understanding these techniques is crucial for tackling many complex computational problems efficiently.


Working Together: Concurrency and Thread-Safe Data Structures

In modern computing, concurrent programming—where multiple tasks or threads execute seemingly simultaneously—is essential for maximizing performance, especially on multi-core processors. However, concurrency introduces significant challenges when multiple threads need to access and modify shared data structures. Without proper synchronization, this can lead to race conditions (where the outcome depends on the unpredictable timing of operations), data corruption, and other hard-to-debug issues.

A thread-safe data structure is one that guarantees correct behavior even when accessed by multiple threads concurrently. Achieving thread safety typically involves mechanisms to control access to shared resources and ensure that operations are atomic (indivisible) or properly ordered. Common approaches to creating thread-safe data structures include:

- Locks (Mutexes, Semaphores): Locks are synchronization primitives that allow only one thread (or a limited number of threads, in the case of semaphores) to access a critical section of code (e.g., code that modifies the data structure) at a time. While effective, locks can introduce performance bottlenecks if contention is high, and they can lead to deadlocks if not used carefully.

- Atomic Operations: Many processors provide atomic instructions for simple operations like incrementing a counter or compare-and-swap. These can be used to build more complex thread-safe structures without explicit locks for certain operations, often leading to better performance.

- Immutable Data Structures: As discussed earlier, immutable data structures are inherently thread-safe because their state cannot change after creation. If threads only read from an immutable structure, no synchronization is needed. "Modifications" create new instances, avoiding shared mutable state issues.

- Concurrent Data Structures (Lock-Free/Wait-Free): These are sophisticated data structures designed to allow concurrent access without using traditional locks. Lock-free structures guarantee that at least one thread will always make progress. Wait-free structures guarantee that every thread will make progress in a finite number of steps. Examples include concurrent queues, stacks, and hash maps provided by libraries in languages like Java (e.g., the java.util.concurrent package) or through specialized libraries. These often use atomic operations and careful algorithmic design to manage concurrent access.

Choosing or designing data structures for concurrent environments requires careful consideration of the trade-offs between correctness, performance, and complexity. For example, a coarse-grained lock that protects the entire data structure is simpler to implement but might limit concurrency, while fine-grained locking or lock-free approaches can offer better scalability but are much harder to design and verify correctly.
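As a small example of the coarse-grained locking approach, the sketch below protects a shared counter with a single `threading.Lock`. The class `SafeCounter` and the helper `run_workers` are hypothetical names chosen for this illustration.

```python
import threading


class SafeCounter:
    """A counter whose read-modify-write step is guarded by one lock."""

    def __init__(self):
        self._value = 0
        self._lock = threading.Lock()

    def increment(self):
        # Only one thread at a time may execute this critical section.
        with self._lock:
            self._value += 1

    @property
    def value(self):
        with self._lock:
            return self._value


def run_workers(counter, num_threads=4, increments=10_000):
    """Start several threads that all increment the same counter."""

    def work():
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


total = run_workers(SafeCounter())  # 4 threads x 10,000 increments
```

Without the lock, `self._value += 1` is a read followed by a write, and interleaved threads could lose updates. With the lock, the final total always equals the number of threads times the number of increments. For producer-consumer patterns, Python's standard `queue.Queue` is a ready-made thread-safe structure.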

The performance of concurrent applications often hinges on the efficiency of the underlying data structures and their ability to handle concurrent access. Understanding these principles is crucial for developers building multi-threaded applications, distributed systems, or high-performance computing solutions. Many programming languages and platforms now offer built-in concurrent data structures that are highly optimized and tested, which are often the best choice for application developers.

Data Structures in Action: Real-World Applications

The theoretical concepts of data structures come to life in countless real-world applications, forming the backbone of much of the technology we use daily. From how search engines quickly find information to how social networks map connections, and how e-commerce sites manage inventory, data structures are the invisible engines driving efficiency and functionality. Understanding these practical applications not only solidifies one's grasp of the concepts but also highlights their importance in solving tangible problems across various industries.

This section will explore several prominent examples of data structures at work. We'll see how B-trees are fundamental to database indexing, how variants of linked lists underpin blockchain technology, the role of tensor representations in the rapidly evolving field of machine learning, and how graph algorithms are essential for network routing. These examples demonstrate the power and versatility of data structures in addressing complex, large-scale challenges.

Organizing the Web's Information: Database Indexing (e.g., B-Trees)

Databases store vast amounts of information, and retrieving specific data quickly is crucial for most applications. Imagine searching for a customer record in a database with millions of entries; without an efficient indexing mechanism, this could take an unacceptably long time. Database indexing is a technique used to speed up the performance of queries by minimizing the number of disk accesses required when a query is processed. One of the most widely used data structures for database indexing is the B-tree and its variants (like B+ trees).

A B-tree is a self-balancing tree data structure that maintains sorted data and allows searches, sequential access, insertions, and deletions in logarithmic time. What makes B-trees particularly well-suited for databases is their design, which is optimized for systems that read and write large blocks of data. Disk I/O operations are typically much slower than memory operations. B-trees reduce the number of disk accesses by being "wide" and "shallow." Each node in a B-tree can have many children (often hundreds or even thousands), and it stores many keys. This means the height of the tree is kept very small, even for a massive number of records. Since traversing from the root to a leaf often involves reading one disk block per node, a shallow tree means fewer disk reads.

In a typical database index using a B+ tree (a common variant), the actual data records might be stored separately, and the leaf nodes of the B+ tree contain pointers to these records. The leaf nodes are also often linked together sequentially, which allows for efficient range queries (e.g., "find all employees with salaries between $50,000 and $70,000"). When a query is made (e.g., SELECT * FROM employees WHERE employee_id = 12345), the database system uses the B-tree index to quickly navigate to the leaf node containing the key (employee_id 12345) and then retrieves the corresponding data record. Insertions and deletions are also handled efficiently by algorithms that maintain the B-tree's balanced structure and properties.
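The "wide and shallow" argument can be checked with a little arithmetic. The sketch below uses an idealized model that is an assumption of this example, not a description of any real database: every node is full, and each extra level multiplies the number of reachable keys by the fanout. The function name `levels_needed` is hypothetical.

```python
def levels_needed(num_keys: int, fanout: int) -> int:
    """Smallest number of levels h with fanout**h >= num_keys (idealized model)."""
    levels, capacity = 1, fanout
    while capacity < num_keys:
        capacity *= fanout
        levels += 1
    return levels


# One million keys: a binary tree versus a node with 100 children.
print(levels_needed(1_000_000, 2))    # 20
print(levels_needed(1_000_000, 100))  # 3
```

If each level costs roughly one disk read, the wide tree in this model reaches any key in about 3 reads instead of about 20. Real B-trees keep nodes only partially full, so actual heights are somewhat larger, but the order-of-magnitude gap remains.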

Without efficient indexing structures like B-trees, database operations on large datasets would be impractically slow, severely limiting the usability and performance of most modern software applications that rely on databases. This makes B-trees a cornerstone of database management systems.


Securing Transactions: Blockchain Technology (Linked List Variants)

Blockchain technology, the foundational technology behind cryptocurrencies like Bitcoin and many other decentralized applications, relies heavily on cryptographic principles and specific data structures to ensure security, immutability, and transparency. At its core, a blockchain is essentially a distributed and continually growing list of records, called blocks, which are linked and secured using cryptography. This "chain of blocks" can be thought of as a specialized variant of a linked list.

In a simple linked list, each node contains data and a pointer to the next node. In a blockchain, each block typically contains:

- Data: This could be a set of transactions (as in Bitcoin), smart contract information, or other types of records.

- Hash of the current block: A cryptographic hash (like SHA-256) is calculated based on the block's content (including its data, timestamp, and the hash of the previous block). This hash acts as a unique identifier for the block.

- Hash of the previous block: This is crucial. Each block contains the cryptographic hash of the block that came before it in the chain. This is what links the blocks together sequentially and chronologically.

The inclusion of the previous block's hash in the current block is what makes the blockchain highly resistant to tampering. If an attacker tries to alter the data in a past block, the hash of that block would change. Since this hash is included in the subsequent block, that subsequent block's hash would also change, and so on, all the way up the chain. This cascading effect makes unauthorized modifications easily detectable, especially in a distributed system where many participants hold copies of the blockchain. The first block in a blockchain, called the "genesis block," does not have a previous block hash.
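The cascading effect of tampering can be demonstrated with a toy hash chain. This is a deliberately simplified sketch, not how Bitcoin or any real blockchain serializes blocks: timestamps, proof-of-work, and networking are omitted, blocks are plain dictionaries, and the field and function names are assumptions made for the example.

```python
import hashlib
import json


def block_hash(index, data, prev_hash):
    """SHA-256 over the block's contents, including the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records):
    """Link each block to its predecessor by storing the predecessor's hash."""
    chain = []
    prev_hash = None  # the genesis block has no predecessor
    for index, data in enumerate(records):
        block = {
            "index": index,
            "data": data,
            "prev_hash": prev_hash,
            "hash": block_hash(index, data, prev_hash),
        }
        chain.append(block)
        prev_hash = block["hash"]
    return chain


def is_valid(chain):
    """Recompute every hash and check every back-link."""
    for i, block in enumerate(chain):
        expected = block_hash(block["index"], block["data"], block["prev_hash"])
        if block["hash"] != expected:
            return False  # the block's contents no longer match its hash
        expected_prev = chain[i - 1]["hash"] if i > 0 else None
        if block["prev_hash"] != expected_prev:
            return False  # the link to the previous block is broken
    return True


chain = build_chain(["alice pays bob 5", "bob pays carol 2", "carol pays dan 1"])
```

Changing the data in `chain[1]` makes `is_valid(chain)` return `False`. Even if the attacker recomputes that block's own hash, the next block still stores the old value in `prev_hash`, so the mismatch simply moves one block forward.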

While the fundamental structure is akin to a linked list (each block "points" to the previous one via its hash), blockchains often incorporate more complex tree-like structures within each block, such as Merkle trees, to efficiently summarize and verify large sets of transactions. The overall chain, however, maintains that linked, chronological sequence of blocks. This combination of cryptographic hashing and a linked data structure is what gives blockchain its characteristic security and immutability.

Exploring topics related to Blockchain can provide more context on this technology.

Powering Intelligence: Machine Learning (Tensor Representations)

Machine Learning (ML), particularly deep learning, heavily relies on efficient ways to represent and manipulate large, multi-dimensional datasets. The fundamental data structure used in this domain is the tensor. While the term "tensor" has a precise mathematical definition as a multilinear map, in the context of ML and programming frameworks like TensorFlow and PyTorch, it's often used more informally to refer to a multi-dimensional array.

Tensors generalize scalars, vectors, and matrices to higher dimensions:

- A 0D tensor is a scalar (a single number).

- A 1D tensor is a vector (a list of numbers).

- A 2D tensor is a matrix (a table of numbers with rows and columns).

- A 3D tensor can represent data like a sequence of matrices (e.g., time-series data where each time step is a matrix, or a color image with height, width, and color channels).

- Higher-dimensional tensors (4D, 5D, etc.) are used for more complex data, such as a batch of color images (batch size, height, width, channels) or video data (batch size, frames, height, width, channels).

Neural networks, the core of deep learning, process data in the form of tensors. The input data (images, text, sound), the weights and biases of the network's layers, and the outputs of these layers are all represented as tensors. ML libraries are highly optimized for performing mathematical operations (like matrix multiplication, dot products, convolutions) on these tensors, often leveraging specialized hardware like Graphics Processing Units (GPUs) or Tensor Processing Units (TPUs) for acceleration.

The use of tensors allows for a unified way to handle various types of data and to perform the complex computations required for training and running ML models. For example, in image processing, an image can be represented as a 3D tensor (height x width x color channels). A batch of images for training a model would then be a 4D tensor. The layers of a convolutional neural network (CNN) perform operations on these tensors to extract features and make predictions. The efficiency of these tensor operations is critical for the performance of ML systems. Thus, while a tensor is conceptually a multi-dimensional array, its role as the primary data structure in ML highlights the importance of choosing appropriate representations for complex computations.
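The idea of tensor rank and shape can be illustrated without any ML framework by using nested Python lists. This is only a conceptual sketch: real libraries such as TensorFlow and PyTorch store tensors in contiguous memory rather than nested lists, and the helper names `zeros` and `shape` are defined here for the example.

```python
def zeros(*dims):
    """Build a nested-list 'tensor' of the given dimensions, filled with 0.0."""
    if not dims:
        return 0.0  # a 0D tensor is just a scalar
    return [zeros(*dims[1:]) for _ in range(dims[0])]


def shape(tensor):
    """Return the size of each dimension, assuming a regular (non-ragged) nesting."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        if not tensor:
            break
        tensor = tensor[0]
    return tuple(dims)


scalar = zeros()            # 0D
vector = zeros(3)           # 1D
matrix = zeros(2, 3)        # 2D
image = zeros(4, 5, 3)      # 3D: height x width x color channels
batch = zeros(2, 4, 5, 3)   # 4D: batch size x height x width x channels

print(shape(image))  # (4, 5, 3)
print(shape(batch))  # (2, 4, 5, 3)
```

The length of the shape tuple is the number of dimensions, so stacking images into a batch adds one leading dimension, as described above.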


You can also browse courses in Artificial Intelligence for a broader understanding.

Connecting the Dots: Network Routing (Graph Algorithms)

Network routing is the process of selecting a path for traffic in a network, or between or across multiple networks. This is a fundamental task in computer networks, from the internet backbone to local area networks and even in applications like GPS navigation. At the heart of network routing are graph algorithms, which model the network as a graph and use various techniques to find optimal paths.

In this model:

- Nodes (Vertices) represent routers, switches, computers, or even geographical locations (like intersections in a road network).

- Edges represent the connections or links between these nodes (e.g., network cables, wireless links, roads).

- Edges often have associated weights or costs, which can represent various metrics like distance, latency (delay), bandwidth (capacity), or monetary cost.

The primary goal of routing algorithms is often to find the "shortest" or "best" path between a source and a destination node. "Shortest" can mean different things depending on the metric used for edge weights. Common graph algorithms used in network routing include:

- Dijkstra's Algorithm: Finds the shortest path from a single source node to all other nodes in a graph with non-negative edge weights. It's widely used in link-state routing protocols like OSPF (Open Shortest Path First).

- Bellman-Ford Algorithm: Also finds the shortest path from a single source, but it can handle graphs with negative edge weights (as long as there are no negative-weight cycles reachable from the source). It's used in distance-vector routing protocols like RIP (Routing Information Protocol), although RIP itself has limitations with larger networks.

- Floyd-Warshall Algorithm: Computes the shortest paths between all pairs of vertices in a weighted graph. While powerful, its O(V³) running time makes it costly for very large networks, where running a single-source algorithm from each node is often cheaper on sparse graphs.

- Breadth-First Search (BFS): Can find the shortest path in terms of the number of hops (edges) in an unweighted graph.

Routing algorithms must also consider dynamic network conditions like link failures or congestion. Protocols often involve routers exchanging information about network topology and link states, allowing them to update their routing tables (which store the best paths to destinations) dynamically. The efficiency and scalability of these algorithms are critical, especially in large and complex networks like the internet. Graph theory provides the essential framework and tools for designing, analyzing, and implementing these vital network functions.
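As an illustration of shortest-path computation on a weighted graph, here is a compact sketch of Dijkstra's algorithm that uses a binary heap as its priority queue. The four-node network and its link costs are invented for the example, and the code assumes all edge weights are non-negative.

```python
import heapq


def dijkstra(graph, source):
    """Return the cheapest known cost from source to every reachable node.

    graph maps each node to a list of (neighbor, weight) pairs.
    """
    dist = {source: 0}
    heap = [(0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue  # stale entry: a cheaper route to node was already found
        for neighbor, weight in graph.get(node, []):
            candidate = d + weight
            if candidate < dist.get(neighbor, float("inf")):
                dist[neighbor] = candidate
                heapq.heappush(heap, (candidate, neighbor))
    return dist


# Hypothetical network: routers A to D with directed link costs.
network = {
    "A": [("B", 4), ("C", 1)],
    "B": [("D", 1)],
    "C": [("B", 2), ("D", 5)],
    "D": [],
}

print(dijkstra(network, "A"))  # {'A': 0, 'B': 3, 'C': 1, 'D': 4}
```

The direct link from A to B costs 4, but the route through C costs only 3, so the algorithm prefers the two-hop path. This is also why hop count alone (as BFS would measure it) is not always the right metric when links have different costs.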

Understanding graph algorithms is crucial for network engineering and many other fields.

Paving Your Way: Formal Education in Data Structures

A strong foundation in data structures is a hallmark of a formal computer science education. Universities worldwide recognize its critical importance and typically integrate it as a core component of their undergraduate and graduate programs. This formal training provides students with not only the knowledge of various data structures and their associated algorithms but also the analytical skills to evaluate their efficiency and applicability to different problems. It's the academic rigor that often distinguishes a computer scientist from a casual programmer.

For students considering a career in software development, systems architecture, data science, or academic research in computer science, understanding the educational pathways related to data structures is vital. This section will touch upon how data structures are integrated into undergraduate curricula, the opportunities for advanced study and research at the graduate level, their significance in competitive programming, and potential thesis topics for those looking to specialize further.

The Academic Blueprint: Undergraduate Curriculum Integration

Data structures and algorithms typically form one or more cornerstone courses in an undergraduate computer science curriculum, often introduced after foundational programming courses. These courses aim to equip students with the essential tools to design and analyze efficient software. The curriculum usually covers a wide range of topics, starting with fundamental concepts like Abstract Data Types (ADTs), time and space complexity analysis (Big O notation), and basic data organization principles.

Students then delve into specific data structures, learning their definitions, properties, common operations, and typical use cases. Linear structures like arrays, linked lists (singly, doubly, circular), stacks, and queues are usually covered first. This is followed by non-linear structures such as trees (binary trees, binary search trees, balanced trees like AVL or Red-Black trees), heaps (binary heaps, priority queues), and graphs (representations like adjacency lists/matrices, and basic traversal algorithms like BFS and DFS). Hash tables, along with collision resolution techniques, are also a critical component. For each structure, students learn to implement the core operations and analyze their efficiency.

The algorithmic aspect is interwoven throughout. Students learn algorithms that are closely tied to these structures, such as various searching algorithms (linear search, binary search) and sorting algorithms (bubble sort, insertion sort, merge sort, quicksort, heapsort). Graph algorithms like shortest path (Dijkstra's, Bellman-Ford) and minimum spanning tree (Prim's, Kruskal's) are often introduced. Assignments and projects typically involve implementing these data structures and algorithms from scratch in a programming language like Java, C++, or Python, and applying them to solve practical problems. This hands-on experience is crucial for solidifying understanding and developing problem-solving skills.
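
The contrast between linear search and binary search mentioned above can be sketched as follows. This is a hedged illustration rather than a reference implementation; the function names are assumptions, and `binary_search` assumes its input is already sorted in ascending order.

```python
def linear_search(items, target):
    """Check every element in turn: O(n) comparisons in the worst case."""
    for index, value in enumerate(items):
        if value == target:
            return index
    return None


def binary_search(sorted_items, target):
    """Halve the search range each step: O(log n) comparisons.

    Assumes sorted_items is sorted in ascending order.
    """
    lo, hi = 0, len(sorted_items) - 1
    while lo <= hi:
        mid = (lo + hi) // 2
        if sorted_items[mid] == target:
            return mid
        if sorted_items[mid] < target:
            lo = mid + 1  # target can only be in the right half
        else:
            hi = mid - 1  # target can only be in the left half
    return None
```

On a sorted list of a million elements, the binary version needs at most about 20 comparisons, while the linear version may need a million.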

Many universities make their course materials publicly available, and online platforms often feature courses taught by university professors, offering a glimpse into typical undergraduate content.

A highly regarded textbook often used in such courses is "Introduction to Algorithms" by Cormen, Leiserson, Rivest, and Stein.

Advanced Studies: Graduate Research Opportunities

For students with a deep interest in data structures and algorithms, graduate studies (Master's or Ph.D. programs) offer opportunities to delve into advanced topics and contribute to cutting-edge research. At the graduate level, the focus shifts from learning established data structures to designing new ones, analyzing their performance with greater mathematical rigor, and applying them to solve complex problems in specialized domains.

Research areas in advanced data structures are diverse and constantly evolving. Some potential areas include:

- Probabilistic Data Structures: Structures like Bloom filters, HyperLogLog, and Count-Min sketch, which provide approximate answers to queries with quantifiable error rates but use significantly less space or time than exact structures. Research might involve designing new probabilistic structures or improving the analysis of existing ones.

- External Memory and Cache-Oblivious Structures: Designing data structures that minimize disk transfers when data is too large to fit in main memory and must reside on disk. Cache-oblivious structures aim to use every level of the memory hierarchy efficiently without being tuned to specific cache or block sizes.

- Concurrent and Distributed Data Structures: Developing data structures that can be safely and efficiently accessed and modified by multiple threads or across multiple machines in a distributed system. This is crucial for high-performance computing and large-scale data processing.

- Persistent Data Structures: Exploring structures that preserve previous versions when modified, with applications in version control, transactional memory, and functional programming.

- Data Structures for Specific Data Types: Designing specialized structures for geometric data (e.g., k-d trees, quadtrees), string data (e.g., suffix trees, tries), or high-dimensional data common in machine learning.

- Quantum Data Structures: An emerging area exploring how quantum computing principles might lead to new types of data structures with capabilities beyond classical structures for certain problems.

- Succinct Data Structures: Structures that use space very close to the information-theoretic lower bound while still supporting efficient queries.
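
To make the first item in the list above more concrete, here is a minimal Bloom filter sketch. The class name, the default sizes, and the use of salted SHA-256 digests as the hash family are all assumptions made for illustration; practical filters usually rely on faster non-cryptographic hashes and size the bit array from a target false-positive rate.

```python
import hashlib


class BloomFilter:
    """Approximate set membership: no false negatives, some false positives."""

    def __init__(self, num_bits=1024, num_hashes=3):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = 0  # a Python int used as a compact bit array

    def _positions(self, item):
        # Derive num_hashes bit positions by salting the item with a seed.
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{item}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item):
        for pos in self._positions(item):
            self.bits |= 1 << pos

    def might_contain(self, item):
        # True means "possibly present"; False means "definitely absent".
        return all((self.bits >> pos) & 1 for pos in self._positions(item))
```

An item that was added always tests as possibly present. An item that was never added usually tests as absent, but it can collide with bits set by other items, which is the quantifiable error the list entry refers to.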

Graduate research often involves a combination of theoretical work (designing structures, proving correctness and performance bounds) and experimental work (implementing structures and evaluating their performance on real or synthetic datasets). Students typically work closely with faculty advisors who are experts in these areas. Contributing to research in data structures can lead to publications in academic conferences and journals, and can pave the way for careers in academia or research-oriented roles in industry.

Sharpening Skills: Competitive Programming Preparation

Competitive programming is a mind sport where participants solve algorithmic and data structure problems within tight time limits. It's a popular activity among students and aspiring software engineers as it hones problem-solving abilities, improves coding speed and accuracy, and deepens the understanding of data structures and algorithms. Success in competitive programming often translates well to technical interviews, as many interview questions are similar in nature to contest problems.

A strong grasp of data structures is essential for competitive programming. Problems often require contestants to choose a data structure that can manage the input data and intermediate state efficiently enough to meet the given time and memory limits. Standard library implementations (such as those in the C++ STL, the Java Collections Framework, or Python's built-in types) are frequently used, but contestants still need to understand their underlying principles and performance characteristics. Commonly encountered data structures and concepts in competitive programming include:

- Basic Structures: Arrays, linked lists, stacks, queues.

- Trees: Binary search trees, segment trees, Fenwick trees (Binary Indexed Trees), treaps, suffix trees, tries.

- Graphs: Representations (adjacency list/matrix), BFS, DFS, shortest path algorithms (Dijkstra, Bellman-Ford, Floyd-Warshall), minimum spanning tree (Kruskal, Prim), network flow, strongly connected components.

- Heaps/Priority Queues.

- Hash Tables/Sets/Maps.

- Disjoint Set Union (DSU) / Union-Find.
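
As one example from the list above, a Disjoint Set Union can be sketched in a few lines. This version assumes elements are the integers `0` to `n - 1` and uses path halving with union by size; the class and method names are illustrative choices, not a standard library API.

```python
class DisjointSetUnion:
    """Tracks a partition of {0, ..., n-1} into disjoint sets."""

    def __init__(self, n):
        self.parent = list(range(n))  # each element starts as its own root
        self.size = [1] * n

    def find(self, x):
        # Path halving: point each visited node at its grandparent.
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]
            x = self.parent[x]
        return x

    def union(self, a, b):
        """Merge the sets containing a and b. Return False if already merged."""
        root_a, root_b = self.find(a), self.find(b)
        if root_a == root_b:
            return False
        # Union by size: attach the smaller tree under the larger one.
        if self.size[root_a] < self.size[root_b]:
            root_a, root_b = root_b, root_a
        self.parent[root_b] = root_a
        self.size[root_a] += self.size[root_b]
        return True
```

A typical contest use is Kruskal's minimum spanning tree algorithm: process edges in increasing weight order and keep an edge only when `union` returns `True`.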

Beyond knowing these structures, competitive programmers must be adept at recognizing when to use them and how to combine them with various algorithmic techniques like dynamic programming, greedy algorithms, divide and conquer, and various mathematical approaches. Regular practice on online judging platforms (like LeetCode, HackerRank, Codeforces, TopCoder) is key to improving. Many universities have competitive programming clubs, and there are numerous online resources, tutorials, and communities dedicated to it.

Books like "Cracking the Coding Interview" and "Introduction to Algorithms" are also valuable resources.

Pushing Boundaries: Thesis Topics in Advanced Structures

For students pursuing graduate degrees, particularly a Master's or Ph.D. in Computer Science, a thesis often represents the culmination of their research efforts. Data structures, being a fundamental and ever-evolving field, offer a rich landscape for potential thesis topics. These topics typically involve exploring novel data structures, analyzing existing ones in new contexts, or applying advanced data structures to solve challenging problems in specific domains.

Some illustrative examples of thesis topic areas in advanced data structures could include:

- Dynamic Graph Algorithms: Developing data structures and algorithms that can efficiently maintain properties of a graph (like connectivity, shortest paths, or minimum spanning trees) as the graph undergoes changes (edge insertions/deletions). This is relevant for social networks, communication networks, and other dynamic systems.

- Succinct Data Structures for Large-Scale Genomics: Designing space-efficient data structures to store and query massive genomic datasets, such as compressed suffix arrays and FM-indexes for sequence alignment, or compressed representations of variation graphs.

- Cache-Efficient Geometric Data Structures: Creating data structures for geometric problems (e.g., range searching, nearest neighbor queries) that minimize cache misses and perform well in the memory hierarchy, which is crucial for handling large spatial datasets.

- Privacy-Preserving Data Structures: Investigating structures that allow for computation or querying on sensitive data while preserving individual privacy, potentially using techniques from differential privacy or cryptography. This is increasingly important with growing concerns about data privacy and security.

- Data Structures for Machine Learning Optimization: Exploring novel data structures to accelerate training or inference in machine learning models, for example, to efficiently manage sparse data, gradients, or model parameters.

- Quantum Data Structures and Algorithms: A more theoretical area focusing on how quantum phenomena could be harnessed to create data structures that outperform classical counterparts on specific tasks, or how classical data structures need to adapt for quantum computing environments.

- Self-Adjusting or Adaptive Data Structures: Designing structures that dynamically change their organization based on access patterns to optimize future performance, such as splay trees or adaptive hash tables.
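
The idea behind the last item, a structure that reorganizes itself based on access patterns, can be shown with a much simpler relative of the splay tree: a move-to-front list. The sketch below is an assumption-laden illustration (the class name and methods are invented here), and it uses a Python list for brevity even though a linked list would make the move itself constant time.

```python
class MoveToFrontList:
    """A self-adjusting list: each successful lookup moves the item to the front."""

    def __init__(self, items=()):
        self._items = list(items)

    def find(self, key):
        """Sequentially search for key; on a hit, move it to the front."""
        for index, item in enumerate(self._items):
            if item == key:
                # Frequently accessed items drift toward the front,
                # so later searches for them stop sooner.
                self._items.insert(0, self._items.pop(index))
                return True
        return False

    def as_list(self):
        return list(self._items)
```

After a few lookups, recently used keys sit near the front of the list, so repeated accesses to a small working set become cheap without any explicit tuning.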

A successful thesis in this area typically involves a deep theoretical understanding, often strong mathematical skills for analysis, and proficient implementation abilities for experimental validation. It pushes the boundaries of current knowledge and contributes new insights or tools to the field of computer science.

Learning Beyond the Classroom: Online Education and Self-Study

The digital age has revolutionized how we learn, and data structures are no exception. For career changers, self-taught developers, or even students looking to supplement their formal education, online resources offer a wealth of opportunities to master this critical subject. The flexibility and accessibility of online courses, interactive platforms, and open-source projects allow learners to study at their own pace and often gain practical, hands-on experience that is highly valued in the tech industry.

This path requires discipline and proactivity, but it can be incredibly rewarding. From interactive coding platforms that provide instant feedback to contributing to real-world open-source projects, the avenues for self-study are diverse. Moreover, online communities provide support and collaboration opportunities, helping learners stay motivated and overcome challenges. This section will explore how these resources can be leveraged for effective self-directed learning in data structures.

OpenCourser is an excellent starting point for finding relevant online courses. With features like detailed course information, syllabi, user reviews, and a "Save to list" button, you can easily browse through thousands of Computer Science courses and curate a learning path tailored to your needs. The platform's "Activities" section can also suggest preparatory work or supplementary projects to enhance your learning journey.

Interactive Learning: Coding Platforms and Challenges

One of the most effective ways to learn data structures and algorithms, especially for self-starters, is through interactive coding platforms and online challenges. Websites like LeetCode, HackerRank, Codewars, and TopCoder offer vast collections of programming problems that specifically target data structures and algorithmic thinking. These platforms provide an environment where you can write code, test it against various inputs, and receive immediate feedback on correctness and efficiency.

These platforms are invaluable for several reasons:

- Hands-on Practice: Learning data structures isn't just about understanding the theory; it's about being able to implement and use them. Coding challenges force you to translate conceptual knowledge into working code.

- Problem Variety: You'll encounter problems of varying difficulty levels, covering a wide range of data structures (arrays, linked lists, trees, graphs, hash tables, etc.) and algorithmic paradigms (sorting, searching, dynamic programming, greedy algorithms, etc.).

- Efficiency Focus: Many problems have constraints on execution time and memory usage. This pushes you to think about the efficiency of your solutions (Big O complexity) and choose appropriate data structures.

- Interview Preparation: Many technical interview questions closely resemble problems found on these platforms, so practicing here is good preparation for job interviews, especially at tech companies.

- Community and Solutions: Most platforms have discussion forums where users can share their solutions and approaches. Seeing how others solve the same problem can provide new insights and teach different techniques.
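
The efficiency point in the list above can be illustrated with a classic practice problem: given a list of numbers, find the indices of two entries that add up to a target. The sketch below compares a brute-force solution with one that uses a hash map; the function names are assumptions for this example, and each function returns any one valid pair or `None`.

```python
def two_sum_brute(nums, target):
    """Try every pair: O(n^2) time, O(1) extra space."""
    for i in range(len(nums)):
        for j in range(i + 1, len(nums)):
            if nums[i] + nums[j] == target:
                return (i, j)
    return None


def two_sum_hash(nums, target):
    """Remember values seen so far in a dict: O(n) expected time, O(n) space."""
    seen = {}  # value -> first index where it appeared
    for j, value in enumerate(nums):
        i = seen.get(target - value)
        if i is not None:
            return (i, j)
        seen.setdefault(value, j)
    return None
```

Both versions are correct, but on inputs with hundreds of thousands of elements only the hash-based one is likely to finish within a typical time limit, which is the kind of trade-off these platforms train you to spot.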

To make the most of these platforms, it's beneficial to have a foundational understanding of common data structures first, perhaps from an online course or a textbook. Then, you can start with easier problems and gradually move to more complex ones as your skills develop. Many learners find it helpful to focus on problems related to a specific data structure or algorithm they are currently studying to reinforce their understanding. Regularly participating in timed contests on these platforms can also simulate the pressure of technical interviews and improve coding speed under constraints.

Many online courses incorporate problem-solving on such platforms or have their own interactive coding environments. These courses can help bridge theory with practice.

Real-World Experience: Open-Source Contribution Opportunities

Contributing to open-source projects is an excellent way for self-taught developers and career changers to gain practical experience with data structures, see how they are used in real-world software, and build a portfolio that showcases their skills. Many open-source projects, ranging from large complex systems like operating systems and databases to smaller libraries and tools, involve the use and sometimes the design of sophisticated data structures.

Getting involved can seem daunting at first, but many projects are welcoming to new contributors. Here’s how you might approach it:

- Find a Project: Look for projects that interest you on platforms like GitHub, GitLab, or Bitbucket. Consider projects written in languages you are familiar with or want to learn. Look for projects that have "good first issue" or "help wanted" tags, which often indicate tasks suitable for newcomers.