Linux

In-Depth Guide to Linux: Understanding the Operating System and Its Career Landscape

Linux is a powerful and versatile open-source operating system kernel that serves as the foundation for a vast array of systems, from embedded devices and mobile phones to personal computers, enterprise servers, and the world's most powerful supercomputers. Initially created by Linus Torvalds in 1991 as a personal project, Linux has grown through the collaborative efforts of a global community of developers. Its stability, security, and flexibility have made it a cornerstone of modern computing.

Working with Linux can be incredibly engaging. Imagine having the ability to finely tune your computer's performance, automate complex tasks with scripting, or contribute to a global software project. The transparency of its open-source nature allows for deep understanding and customization, which many find both challenging and rewarding. Furthermore, the skills acquired in the Linux ecosystem are highly transferable and in demand across numerous technology sectors, opening doors to exciting career opportunities in areas like system administration, cloud computing, and cybersecurity.

Introduction to Linux

Definition and Core Principles of Linux

At its core, Linux refers to the kernel of a Unix-like operating system. The kernel is the central component of an operating system: it manages the system's resources and mediates communication between hardware and software. However, the term "Linux" is commonly used to describe entire operating systems built around this kernel, often bundled with system software and libraries from the GNU Project, leading to the name GNU/Linux. These complete operating systems are known as Linux distributions.

The core principles underpinning Linux are rooted in the open-source philosophy. This means its source code is freely available for anyone to view, modify, and distribute. This transparency fosters collaboration, innovation, and security, as a global community can scrutinize and contribute to its development. Key principles include modularity, allowing different components to be developed and updated independently; portability, enabling Linux to run on a wide range of hardware architectures; and a hierarchical file system, which organizes files and directories in a tree-like structure.

Linux is also designed with multi-user and multitasking capabilities, meaning it can support multiple users working simultaneously and run multiple applications concurrently. Its robust command-line interface (CLI) offers powerful tools for system management and automation, while graphical user interfaces (GUIs) provide a user-friendly experience for desktop users.

Key Differences from Other Operating Systems (e.g., Windows, macOS)

Linux distinguishes itself from proprietary operating systems like Microsoft Windows and Apple's macOS in several fundamental ways. Perhaps the most significant difference is its open-source nature. While Windows and macOS have source code that is closed and proprietary, Linux's code is open, allowing for unparalleled customization and community-driven development. This openness also means Linux distributions are generally free of charge, though commercial versions with paid support exist.

Another key difference lies in software management. Linux distributions typically use package managers (like APT for Debian/Ubuntu or YUM/DNF for Fedora/RHEL) to install, update, and remove software from centralized repositories. This system simplifies software management and ensures dependencies are handled correctly. Windows relies on executable installers and the Microsoft Store, while macOS uses .app bundles and the App Store.

Hardware compatibility can also vary. While Linux supports a vast range of hardware, driver support for the very latest or highly specialized hardware might sometimes lag behind Windows, which often benefits from direct manufacturer support due to its market share. Conversely, Linux is renowned for its ability to run efficiently on older hardware. In terms of user interface, Windows and macOS offer highly polished and consistent GUIs. Linux provides a choice of desktop environments (like GNOME, KDE Plasma, XFCE), each with its own look, feel, and resource requirements, offering users greater flexibility but sometimes a steeper learning curve for those accustomed to a single, standardized interface.

Historical Context and Creation by Linus Torvalds

The story of Linux begins in 1991 with Linus Torvalds, then a 21-year-old computer science student at the University of Helsinki, Finland. Dissatisfied with the licensing and capabilities of existing operating systems like Minix (a small Unix-like system for educational purposes), Torvalds set out to create his own operating system kernel that could run on the Intel 386 processors he was using.

He announced his project on the Usenet newsgroup comp.os.minix, famously stating, "I'm doing a (free) operating system (just a hobby, won't be big and professional like gnu) for 386(486) AT clones." Linux was not initially free software in the sense it is today: Torvalds first released the kernel under a license of his own that prohibited commercial redistribution. In 1992 he relicensed it under the GNU General Public License (GPL), a pivotal decision that allowed for widespread collaboration and contribution.

The combination of Torvalds' kernel with the existing tools and utilities developed by the GNU Project (founded by Richard Stallman) created a fully functional free operating system. This synergy was crucial to Linux's early growth and adoption. The name "Linux" was coined by Ari Lemmke, who administered the FTP server where the first versions of the kernel were hosted; Torvalds had initially named his project "Freax."

Overview of Linux Distributions (Ubuntu, Fedora, Debian)

A Linux distribution, often shortened to "distro," is an operating system made from a software collection that includes the Linux kernel and, often, a package management system. Users typically obtain Linux by downloading one of these distributions. There are hundreds of distributions available, each tailored for different purposes and user groups, but some of the most well-known include Ubuntu, Fedora, and Debian.

Debian is one of the oldest and most influential Linux distributions, first released in 1993. It is known for its commitment to free software principles, its stability, and its rigorous quality control. Debian serves as the foundation for many other popular distributions, including Ubuntu. It uses the APT package manager and the .deb package format. Debian is a popular choice for servers and for users who prioritize stability and adherence to open-source ideals.

Ubuntu, first released in 2004 by Canonical Ltd., is based on Debian and has become one of the most popular desktop Linux distributions. It aims to be user-friendly and easy to install, making Linux more accessible to a broader audience. Ubuntu releases new versions every six months, with Long-Term Support (LTS) versions released every two years offering five years of support. It also uses APT and .deb packages and is widely used for desktops, servers, and cloud computing.

Fedora, sponsored by Red Hat, is a community-driven distribution that focuses on incorporating the latest free and open-source software. It serves as an upstream source for Red Hat Enterprise Linux (RHEL). Fedora is known for its innovation and cutting-edge features, making it a good choice for developers and users who want the newest software. It uses the DNF package manager (a successor to YUM) and the RPM package format. New Fedora versions are released approximately every six months.

These distributions, and many others such as Linux Mint, CentOS Stream, openSUSE, and Arch Linux, offer a diverse range of choices, allowing users to select an operating system that best fits their technical skills, hardware, and specific needs.

Linux Architecture and Core Components

The Kernel's Central Role

The Linux kernel is the heart of any Linux operating system. It acts as the primary interface between the computer's hardware and the software applications running on it. Think of the kernel as a highly efficient manager or a translator, ensuring that all parts of the system can communicate and work together harmoniously. Its responsibilities are vast and critical for the functioning of the entire system.

Key functions of the kernel include managing the system's memory, allocating it to different processes as needed, and ensuring that one process doesn't interfere with another's memory space. It also schedules processes, deciding which programs get to use the CPU and for how long. Furthermore, the kernel handles input and output (I/O) requests from software, translating them into instructions for the hardware devices like disks, network cards, and displays. It also manages the file system, controlling how data is stored and retrieved.

The Linux kernel is monolithic, meaning that core services such as process scheduling, memory management, file systems, and device drivers all run together in kernel-space rather than as separate user-space servers. However, it's also highly modular, supporting loadable kernel modules (LKMs). These modules allow device drivers and other functionality to be added to or removed from the kernel at runtime, without needing to recompile the entire kernel or reboot the system. This modularity provides flexibility and keeps the running kernel relatively small while still supporting a vast range of hardware.
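As a rough illustration of loadable modules, the sketch below lists the modules currently loaded on a system by parsing /proc/modules, the same data that the lsmod command reports. The helper names parse_modules and loaded_modules are hypothetical, and the assumed field layout (name, size in bytes, reference count as the first three columns) reflects the usual /proc/modules format rather than anything stated in this guide.

```python
from pathlib import Path


def parse_modules(text):
    """Parse /proc/modules-style text into (name, size_bytes, use_count) tuples."""
    modules = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue  # skip blank or malformed lines
        name, size, use_count = fields[0], int(fields[1]), int(fields[2])
        modules.append((name, size, use_count))
    return modules


def loaded_modules(path="/proc/modules"):
    """Return the parsed module list, or an empty list if the file is unavailable."""
    try:
        return parse_modules(Path(path).read_text())
    except OSError:
        return []  # not Linux, or /proc is not readable in this environment


if __name__ == "__main__":
    for name, size, use_count in loaded_modules()[:10]:
        print(f"{name:<24} {size:>10} bytes  used by {use_count}")
```

On a system where /proc/modules is missing or restricted (some containers, for example), the sketch simply prints nothing.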

User-Space vs. Kernel-Space Interactions

To ensure system stability and security, Linux, like most modern operating systems, separates its operational environment into two distinct modes: kernel-space and user-space. This separation prevents user applications from directly accessing critical hardware or disrupting the kernel's operations.

Kernel-space is where the kernel itself executes and provides its services. Code running in kernel-space has unrestricted access to all hardware and system resources. This is necessary for the kernel to perform its management tasks. However, a bug in kernel-space code can have severe consequences, potentially crashing the entire system.

User-space is where all user applications and most system utilities run. Programs in user-space have restricted access to hardware and memory. If a user application needs to perform a privileged operation, such as reading from a file or sending data over the network, it cannot do so directly. Instead, it must make a request to the kernel through a special mechanism called a system call. The kernel then validates the request and, if permissible, performs the operation on behalf of the application, returning the result to user-space. This controlled interaction protects the system's integrity. For example, when you type ls in a terminal to list files, the ls program (running in user-space) makes system calls to the kernel to read directory information from the file system (managed by the kernel in kernel-space).
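The user-space side of this interaction can be sketched in Python, whose os module exposes thin wrappers around several system calls. The function names below are hypothetical, and the mapping from each os call to a specific kernel system call is an assumption about a typical Linux build of Python, not something this guide specifies.

```python
import os
import tempfile


def read_via_syscall_wrappers(path, max_bytes=4096):
    """Read up to max_bytes from path using low-level os calls."""
    fd = os.open(path, os.O_RDONLY)    # asks the kernel to open the file
    try:
        return os.read(fd, max_bytes)  # asks the kernel to copy data to user-space
    finally:
        os.close(fd)                   # releases the kernel's file descriptor


def list_directory(path="."):
    """Return sorted entry names, roughly what ls prints for a directory."""
    return sorted(os.listdir(path))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        sample = os.path.join(tmp, "hello.txt")
        with open(sample, "wb") as handle:
            handle.write(b"hello from user-space\n")
        print(list_directory(tmp))
        print(read_via_syscall_wrappers(sample))
```

Running a script like this under a tracing tool such as strace should show the underlying requests crossing into kernel-space, although the exact system call names vary by architecture and library version.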

This architectural division is crucial for building robust and secure operating systems. Understanding this interaction is fundamental for developers writing system-level software or device drivers.

File System Hierarchy (FHS)

The Filesystem Hierarchy Standard (FHS) defines the main directories and their contents in Linux and other Unix-like operating systems. The goal of the FHS is to provide a consistent and predictable directory structure, making it easier for users and software developers to locate files and for administrators to manage systems. Even though different Linux distributions might have minor variations, they generally adhere to the FHS.

At the top of the hierarchy is the root directory, denoted by a single forward slash (/). All other files and directories are nested within this root directory. Some key directories defined by the FHS include:

- /bin: Essential user command binaries (programs) that are needed in single-user mode and by all users, such as ls, cp, mv.

- /sbin: Essential system binaries, typically used by the system administrator, such as reboot, fdisk, ifconfig.

- /etc: Host-specific system configuration files. This directory contains configuration files for the system and installed applications.

- /dev: Device files, which provide an interface to hardware devices.

- /home: Users' home directories, where personal files and configurations are stored (e.g., /home/username).

- /lib: Essential shared libraries needed by the binaries in /bin and /sbin, and kernel modules.

- /mnt: A generic mount point for temporarily mounting file systems.

- /opt: Optional application software packages.

- /proc: A virtual file system providing information about system processes and kernel parameters.

- /root: The home directory for the root user (the system administrator).

- /tmp: Temporary files. Files in this directory are often deleted upon reboot.

- /usr: Secondary hierarchy for shareable, read-only data; contains the majority of multi-user utilities and applications. It includes subdirectories like /usr/bin, /usr/sbin, /usr/lib, and /usr/local (for locally installed software).

- /var: Variable files—files whose content is expected to continually change during normal operation of the system—such as logs, spool files, and temporary e-mail files.

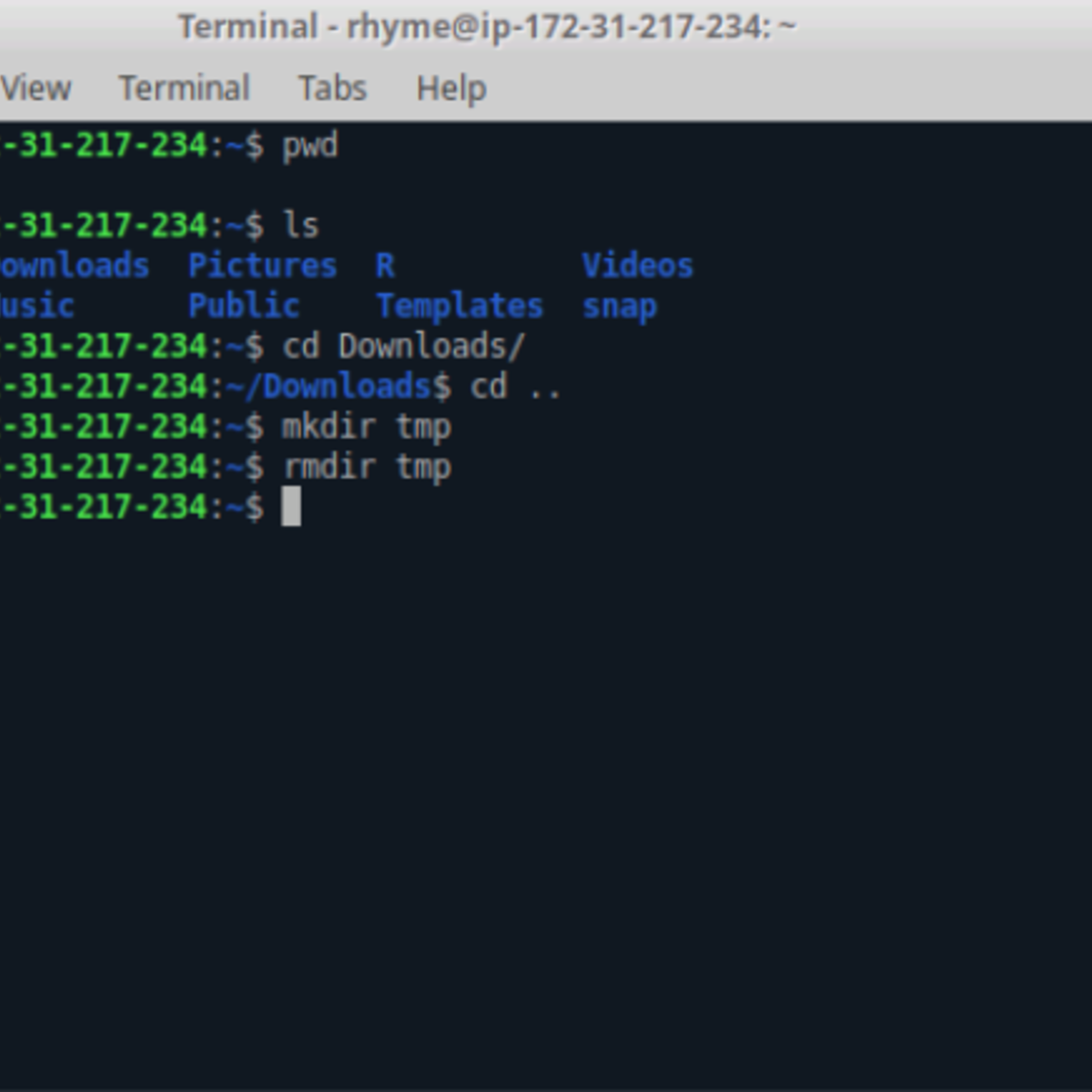
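One way to see how closely a given system follows this layout is to check each directory directly. The sketch below is a minimal example: the FHS_DIRECTORIES table and the survey_fhs function are hypothetical names, and the descriptions are abbreviated from the list above.

```python
import os

# Directory names taken from the list above; descriptions abbreviated.
FHS_DIRECTORIES = {
    "/bin": "essential user binaries",
    "/sbin": "essential system binaries",
    "/etc": "host-specific configuration",
    "/dev": "device files",
    "/home": "user home directories",
    "/lib": "essential shared libraries",
    "/mnt": "temporary mount point",
    "/opt": "optional software packages",
    "/proc": "process and kernel information",
    "/root": "home directory of root",
    "/tmp": "temporary files",
    "/usr": "secondary hierarchy",
    "/var": "variable data",
}


def survey_fhs(directories=None):
    """Classify each path as a directory, a symlink, or missing."""
    if directories is None:
        directories = FHS_DIRECTORIES
    report = {}
    for path in directories:
        if os.path.islink(path):
            report[path] = "symlink -> " + os.readlink(path)
        elif os.path.isdir(path):
            report[path] = "directory"
        else:
            report[path] = "missing"
    return report


if __name__ == "__main__":
    for path, status in survey_fhs().items():
        print(f"{path:<8} {status:<24} {FHS_DIRECTORIES[path]}")
```

On many current distributions, /bin, /sbin, and /lib show up as symbolic links into /usr, which is one of the minor variations from the classic layout.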

Understanding the FHS is crucial for navigating a Linux system effectively and for system administration.

Key Subsystems: Process Management and Memory Management

Two of the most critical subsystems managed by the Linux kernel are process management and memory management. These subsystems work in concert to ensure that applications run efficiently and reliably.

Process Management: A process is an instance of a running program. The kernel is responsible for creating, scheduling, and terminating processes. When you launch an application, the kernel creates a new process for it, allocating necessary resources like CPU time and memory. The scheduler component of the kernel determines which process gets to run on the CPU at any given time, and for how long. Linux uses sophisticated scheduling algorithms to balance the needs of various processes, aiming for responsiveness for interactive tasks and high throughput for background jobs. Process management also involves handling inter-process communication (IPC), allowing different processes to exchange data and synchronize their actions.
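Process creation can be observed from user-space with a short sketch. Here a parent Python process asks the kernel to start a child, waits for it to terminate, and reads back the parent process ID that the child reported. The function name spawn_child is hypothetical, and the example assumes a Python interpreter is available at sys.executable.

```python
import os
import subprocess
import sys


def spawn_child():
    """Start a child Python process; return (exit_code, parent_pid_seen_by_child)."""
    child_code = "import os; print(os.getppid())"
    result = subprocess.run(
        [sys.executable, "-c", child_code],
        capture_output=True,  # collect the child's stdout through a pipe
        text=True,
        check=True,           # raise if the child exits with a non-zero status
    )
    return result.returncode, int(result.stdout.strip())


if __name__ == "__main__":
    exit_code, parent_pid = spawn_child()
    print(f"this process: {os.getpid()}")
    print(f"parent PID reported by child: {parent_pid} (exit code {exit_code})")
```

The pipe that carries the child's output back to the parent is itself a simple form of inter-process communication.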

Memory Management: This subsystem controls how memory (RAM) is allocated to and used by processes. Each process in Linux operates within its own virtual address space, which is a private view of the system's memory. The kernel maps these virtual addresses to physical memory addresses. This virtual memory system provides several benefits, including process isolation (one process cannot directly access another's memory) and the ability to use more memory than physically available by swapping less-used portions of memory to disk (swap space). The kernel's memory manager also handles tasks like allocating and freeing memory pages, caching frequently accessed data from disk into RAM (page cache) to speed up access, and managing shared memory between processes.
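The difference between a process's virtual address space and the physical memory it actually occupies can be inspected through /proc. The sketch below assumes the usual /proc/<pid>/status format, in which VmSize is the size of the virtual address space, VmRSS is the portion currently resident in RAM, and VmSwap is the portion swapped out. The function names are hypothetical.

```python
from pathlib import Path


def parse_memory_fields(status_text, fields=("VmSize", "VmRSS", "VmSwap")):
    """Extract selected memory fields (in kB) from /proc/<pid>/status text."""
    values = {}
    for line in status_text.splitlines():
        key, _, rest = line.partition(":")
        if key in fields:
            values[key] = int(rest.split()[0])  # "   9216 kB" -> 9216
    return values


def own_memory_usage(path="/proc/self/status"):
    """Return memory fields for the current process, or {} if unavailable."""
    try:
        return parse_memory_fields(Path(path).read_text())
    except OSError:
        return {}  # not Linux, or /proc is not mounted


if __name__ == "__main__":
    for field, kilobytes in own_memory_usage().items():
        print(f"{field}: {kilobytes} kB")
```

VmSize is typically much larger than VmRSS, because the kernel only backs virtual pages with physical memory once they are actually used.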

Efficient process and memory management are fundamental to Linux's performance and stability. These topics are often covered in depth in operating systems courses and books.

Linux in the Open-Source Ecosystem

The Role of the GNU Project and GPL Licensing

The Linux kernel, while a monumental achievement in itself, is typically used as part of a larger system of software. Much of this surrounding software comes from the GNU Project, initiated by Richard Stallman in 1983. The GNU Project's goal was to create a completely free Unix-like operating system. "GNU" is a recursive acronym for "GNU's Not Unix!"

By the time Linus Torvalds began developing the Linux kernel, the GNU Project had already created many essential components of an operating system, including a powerful C compiler (GCC), various shell utilities (like Bash), text editors (like Emacs), and system libraries. The missing piece was a free kernel. When Torvalds released his kernel under the GNU General Public License (GPL), it naturally fit with the GNU software to form a complete, usable, and free operating system. This is why many refer to these systems as GNU/Linux, to acknowledge the significant contributions of the GNU Project.

The GNU General Public License (GPL) is a cornerstone of the Linux and open-source ecosystem. It is a "copyleft" license, meaning that it grants users the freedom to run, study, share, and modify the software. Crucially, it also requires that any derivative works or modified versions distributed must also be licensed under the GPL. This provision ensures that the software remains free and open for all future users and developers, preventing it from being turned into proprietary software. The GPL has been instrumental in fostering the collaborative development model that characterizes Linux and much of the open-source world.

Community-Driven Development Model

Linux thrives on a community-driven development model, a hallmark of successful open-source projects. Unlike proprietary software developed by a single company in a closed environment, Linux development is a global, collaborative effort involving thousands of individual developers, corporations, and non-profit organizations. This decentralized approach has led to rapid innovation, robust code, and a system that adapts quickly to new technologies and user needs.

Contributions come in many forms: writing new code, fixing bugs, testing software, writing documentation, translating software into different languages, and providing user support through forums and mailing lists. Developers submit their proposed changes (patches) to kernel subsystem maintainers, who review the code. If accepted, patches move up a hierarchy of maintainers, eventually reaching Linus Torvalds, who still oversees the integration of new code into the official kernel releases.

This meritocratic and transparent process ensures code quality and allows for diverse perspectives to shape the future of Linux. Corporations like Red Hat, SUSE, Google, Intel, IBM, and many others also contribute significantly to Linux development, often employing developers who work full-time on the kernel and related projects. Their involvement underscores the commercial viability and importance of Linux in the modern technology landscape. The vibrant community also ensures that Linux remains independent and not beholden to the interests of any single entity.

Contributions to Cloud Computing and Containerization (e.g., Docker, Kubernetes)

Linux has become the de facto operating system for cloud computing and containerization technologies, fundamentally shaping modern IT infrastructure. Its stability, scalability, security, and open-source nature make it an ideal platform for powering the massive data centers that underpin cloud services from providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP).

In the realm of containerization, Linux kernel features such as cgroups (control groups) and namespaces are the foundational technologies that enable tools like Docker. Cgroups limit and isolate the resource usage (CPU, memory, disk I/O, network, etc.) of a collection of processes, while namespaces provide process isolation, ensuring that a process within a container has its own view of the system (e.g., its own process IDs, network stack, and file system mounts). Docker simplified the creation and management of these isolated environments, making it easy to package applications and their dependencies into portable containers.
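Both features are visible from inside any Linux process. The following sketch, with hypothetical function names, reads the namespace identifiers under /proc/<pid>/ns and the cgroup membership in /proc/<pid>/cgroup. It assumes a Linux system with /proc mounted and returns empty results elsewhere.

```python
import os


def namespace_ids(pid="self"):
    """Map namespace type to its identifier, e.g. 'pid' -> 'pid:[4026531836]'."""
    ns_dir = f"/proc/{pid}/ns"
    ids = {}
    try:
        for name in sorted(os.listdir(ns_dir)):
            try:
                ids[name] = os.readlink(os.path.join(ns_dir, name))
            except OSError:
                ids[name] = "unreadable"
    except OSError:
        pass  # not Linux, no such process, or /proc is not mounted
    return ids


def cgroup_membership(pid="self"):
    """Return the lines of /proc/<pid>/cgroup, one per cgroup hierarchy."""
    try:
        with open(f"/proc/{pid}/cgroup") as handle:
            return [line.strip() for line in handle if line.strip()]
    except OSError:
        return []


if __name__ == "__main__":
    for name, identifier in namespace_ids().items():
        print(f"{name:<20} {identifier}")
    for line in cgroup_membership():
        print("cgroup:", line)
```

Processes that share a namespace report the same identifier for it, so running this on the host and again inside a container generally shows different values for namespaces such as pid, net, and mnt.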

Building on containerization, Kubernetes, an open-source container orchestration platform originally developed by Google and now maintained by the Cloud Native Computing Foundation (CNCF), has become the standard for automating the deployment, scaling, and management of containerized applications. Kubernetes, too, is predominantly run on Linux. The combination of Linux, Docker, and Kubernetes has revolutionized how applications are developed, deployed, and managed, enabling microservices architectures, continuous integration/continuous deployment (CI/CD) pipelines, and highly scalable and resilient systems. You can explore more about Cloud Computing on OpenCourser.

Enterprise Adoption (Red Hat, SUSE)

While Linux began as a hobbyist project, it has achieved widespread adoption in enterprise environments, becoming a critical component of IT infrastructure for businesses of all sizes. Companies rely on Linux for its performance, reliability, security, and cost-effectiveness. Two of the most prominent players in the enterprise Linux space are Red Hat and SUSE.

Red Hat, now a subsidiary of IBM, offers Red Hat Enterprise Linux (RHEL), a commercially supported Linux distribution. RHEL is known for its stability, security certifications, and long-term support, making it a popular choice for mission-critical applications in industries like finance, healthcare, and telecommunications. Red Hat also provides a wide range of related open-source solutions, including OpenShift (a Kubernetes platform), Ansible (automation), and JBoss middleware.

SUSE offers SUSE Linux Enterprise Server (SLES), another leading commercial Linux distribution focused on the enterprise market. SLES is recognized for its reliability, interoperability with other enterprise systems (including Windows), and support for a broad range of hardware platforms, from x86 to IBM Z mainframes and Power Systems. SUSE also provides solutions for software-defined storage, cloud platforms, and container management.

These enterprise Linux vendors provide subscription models that include access to certified software, security updates, technical support, and management tools. Their offerings give businesses the confidence to deploy Linux for demanding workloads, backed by enterprise-grade service level agreements (SLAs). The success of these companies demonstrates the maturity and robustness of Linux in the corporate world. Many organizations also use community-supported distributions like CentOS Stream (which is upstream for RHEL) or Debian for their enterprise needs, often leveraging in-house expertise or third-party support.

Formal Education Pathways for Linux

Computer Science Curricula Incorporating Linux

Linux has become an integral part of modern computer science education. Many universities and colleges incorporate Linux into their curricula, recognizing its importance in understanding operating system concepts, system programming, networking, and software development. Students often encounter Linux in courses on operating systems, where they might explore its architecture, kernel components, process management, and memory management. Practical assignments may involve writing shell scripts, developing C programs that interact with the system via system calls, or even modifying parts of the kernel itself.

Beyond dedicated OS courses, Linux is frequently the preferred environment for teaching programming languages like C, C++, Python, and Java, due to its powerful command-line tools and development environments. Courses in networking often use Linux for hands-on labs involving socket programming, network configuration, and analysis of network protocols. Cybersecurity programs extensively use Linux-based distributions like Kali Linux for penetration testing and security analysis. Furthermore, research in areas like high-performance computing (HPC), distributed systems, and embedded systems heavily relies on Linux.

By using Linux in their studies, students gain valuable practical skills that are highly sought after in the industry. They learn to work with the command line, understand file system structures, manage software packages, and troubleshoot system issues. This exposure prepares them not only for careers directly involving Linux administration or development but also for a wide range of software engineering and IT roles where Linux proficiency is a significant asset. OpenCourser offers a wide variety of courses in Computer Science that can complement a formal education.

Certifications (LPIC, RHCSA)

For individuals looking to validate their Linux skills and enhance their career prospects, several industry-recognized certifications are available. These certifications demonstrate a certain level of competency and can be a valuable credential for employers. Two of the most well-known certification tracks are offered by the Linux Professional Institute (LPI) and Red Hat.

The Linux Professional Institute Certification (LPIC) program offers a multi-level certification path that is distribution-neutral, meaning it covers skills applicable across various Linux distributions.

- LPIC-1 (Linux Administrator): Focuses on fundamental system administration tasks, including command-line usage, system installation, package management, and basic networking.

- LPIC-2 (Linux Engineer): Covers more advanced topics such as kernel management, advanced storage, network configuration, and system security.

- LPIC-3 (Linux Enterprise Professional): Offers specializations in areas like mixed environments, security, and virtualization/high availability.

The Red Hat Certified System Administrator (RHCSA) is a performance-based certification that validates the skills needed to manage Red Hat Enterprise Linux (RHEL) systems. Candidates must perform real-world tasks on a live system to pass the exam. The RHCSA is a prerequisite for higher-level Red Hat certifications like the Red Hat Certified Engineer (RHCE), which focuses on automation with Ansible, and other specialist certifications. These Red Hat certifications are highly regarded, particularly in enterprise environments that use RHEL.

Other notable certifications include those from CompTIA (Linux+), Oracle (Oracle Linux certifications), and The Linux Foundation (e.g., LFCS - Linux Foundation Certified SysAdmin, LFCE - Linux Foundation Certified Engineer). Pursuing certifications can be a structured way to learn Linux and can complement formal education or self-study. Many online courses are designed to help prepare for these certification exams.

Research Applications in HPC and Embedded Systems

Linux plays a dominant role in research, particularly in the fields of High-Performance Computing (HPC) and embedded systems. Its open-source nature, flexibility, and robustness make it an ideal platform for cutting-edge scientific and engineering research.

In High-Performance Computing, Linux powers virtually all of the world's fastest supercomputers. Researchers in fields like physics, climate modeling, genomics, materials science, and artificial intelligence rely on HPC clusters running Linux to perform complex simulations and analyze massive datasets. Linux's ability to be customized for specific hardware, its efficient resource management, and the availability of a vast ecosystem of scientific software and libraries (often open-source themselves) are key reasons for its ubiquity in this domain. The scalability of Linux allows it to manage tens of thousands of processor cores working in parallel.

In Embedded Systems, Linux is widely used in a vast array of devices, from consumer electronics like smart TVs, routers, and Android smartphones (which use a modified Linux kernel) to industrial control systems, medical devices, automotive systems, and aerospace applications. The Yocto Project and Buildroot are popular frameworks that help developers create custom Linux distributions tailored for the specific resource constraints and requirements of embedded hardware. Linux's modularity, small footprint (when configured appropriately), real-time capabilities (with extensions like PREEMPT_RT), and extensive driver support contribute to its success in the embedded space. Researchers developing new embedded technologies often use Linux as their development and deployment platform due to its adaptability and the rich set of tools available.

University Labs Using Linux for OS Development

University research labs and computer science departments frequently use Linux as a platform for teaching and research in operating system development. Its open-source nature provides an unparalleled opportunity for students and researchers to delve into the inner workings of a real-world, production-quality OS kernel.

Students in advanced operating systems courses often engage in projects that involve modifying or extending the Linux kernel. This could include developing new scheduling algorithms, implementing novel memory management techniques, writing device drivers for custom hardware, or enhancing system security features. Such hands-on experience is invaluable for understanding complex OS concepts and for developing strong system programming skills. Access to the complete source code allows for detailed study and experimentation that would be impossible with proprietary operating systems.

Researchers in university labs also leverage Linux for OS-level innovation. They might explore new kernel architectures, develop operating systems for emerging hardware platforms (like neuromorphic or quantum computers, where feasible), or investigate fundamental challenges in areas like concurrency, fault tolerance, and resource management. The collaborative development model of Linux also means that innovations originating in university labs can potentially find their way into the mainline kernel, benefiting the wider community. Many prominent kernel developers and maintainers have academic backgrounds or started their Linux journey in university settings. This strong link between academia and the Linux development community continues to drive OS research and education forward.

Self-Directed Linux Learning

Building Home Labs with Raspberry Pi or Similar Devices

One of the most effective ways to learn Linux in a self-directed manner is by building a home lab. Affordable single-board computers like the Raspberry Pi have revolutionized hands-on learning for Linux enthusiasts. These small, low-power devices are capable of running full Linux distributions and provide an excellent platform for experimentation without the risk of damaging a primary computer.

With a Raspberry Pi, you can set up a personal web server, a network-attached storage (NAS) device, a media center, a home automation hub, or even a small cluster for learning about distributed computing. Each of these projects provides opportunities to learn about different aspects of Linux, such as network configuration, user management, service deployment, scripting, and security hardening. The process of installing the OS, configuring services from the command line, and troubleshooting issues offers invaluable practical experience.

Beyond Raspberry Pi, older desktop or laptop computers can be repurposed as Linux lab machines. The beauty of a home lab is the freedom it provides to install, break, fix, and reinstall systems, which accelerates learning. Online communities and project tutorials offer endless inspiration and guidance for home lab projects. This hands-on approach solidifies theoretical knowledge and builds confidence in managing Linux systems.

Contributing to Open-Source Projects

Contributing to open-source projects is an excellent way for self-directed learners to deepen their Linux knowledge, gain practical experience, and become part of the vibrant open-source community. Many Linux distributions and related software projects welcome contributions from individuals of all skill levels.

Contributions don't always have to be complex code. Beginners can start by helping with documentation, translating software into other languages, testing new releases and reporting bugs, or providing support to other users on forums and mailing lists. These activities help build familiarity with the project's codebase, development processes, and community dynamics. As skills develop, one can move on to fixing small bugs, implementing minor features, or submitting patches for review.

Platforms like GitHub host a vast number of open-source projects. Many projects label issues as "good first issue" or "help wanted" to guide newcomers. Engaging with a project involves learning its version control system (usually Git), communication channels (mailing lists, IRC, Slack), and coding standards. The feedback received from experienced developers during code reviews is an invaluable learning opportunity. Contributing to open source not only enhances technical skills but also builds a portfolio of work that can be showcased to potential employers.

Scripting Practice with Bash/Python

Scripting is a fundamental skill for anyone working extensively with Linux. Automating repetitive tasks, managing system configurations, and processing data can all be achieved efficiently through scripts. The two most common scripting languages in the Linux environment are Bash and Python.

Bash (Bourne Again SHell) is the default command-line interpreter on most Linux distributions. Bash scripting involves writing sequences of shell commands into a file that can be executed. It's particularly powerful for automating system administration tasks, file manipulation, and managing processes. Practicing Bash scripting can involve writing scripts to automate backups, monitor system resources, manage user accounts, or customize your shell environment. Learning about variables, control structures (loops, conditionals), functions, and how to use common command-line utilities (like grep, awk, sed) within scripts is key.

Python is a versatile, high-level programming language that has gained immense popularity for scripting and general-purpose programming on Linux. Its clear syntax and extensive standard library make it suitable for a wide range of tasks, from system automation and web development to data analysis and machine learning. For Linux users, Python can be used to write more complex scripts that require interaction with web APIs, databases, or sophisticated data processing. Many system administration tools and DevOps frameworks are written in or interface with Python.

Regular practice, such as by identifying daily tasks that could be automated or by working through online scripting challenges and tutorials, is crucial for developing proficiency. You can explore more about Programming and find relevant courses on OpenCourser.
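
As one illustration of a small task worth automating, the following Python sketch reports how full a filesystem is and flags it once usage crosses a threshold. The function name check_disk_usage, the 90 percent default, and the choice of "/" are assumptions made for this example rather than anything prescribed above.

```python
import shutil


def check_disk_usage(path="/", threshold_percent=90.0):
    """Return (percent_used, is_over_threshold) for the filesystem holding path."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (in bytes)
    percent_used = usage.used / usage.total * 100
    return percent_used, percent_used >= threshold_percent


if __name__ == "__main__":
    percent, over = check_disk_usage("/")
    status = "WARNING" if over else "OK"
    print(f"{status}: / is {percent:.1f}% full")
```

A script like this could be run on a schedule (for example from cron) and extended to send a notification instead of printing.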

Using Virtual Machines for Experimentation

Virtual Machines (VMs) offer a safe and flexible environment for self-directed Linux learning and experimentation. Virtualization software like VirtualBox, VMware Workstation Player/Fusion, or KVM/QEMU allows you to run one or more operating systems concurrently on your existing computer, each within its own isolated virtual environment.

For learners, VMs provide a sandbox where you can install different Linux distributions, try out various desktop environments, experiment with server configurations, or even intentionally "break" things to learn how to fix them, all without affecting your primary operating system. You can take snapshots of a VM's state, allowing you to easily revert to a previous working configuration if something goes wrong. This is incredibly useful when learning about system administration, security, or complex software setups.

VMs are also excellent for setting up specific development environments or testing software compatibility across different Linux versions. You can create a VM tailored for web development, another for database administration, and another for cybersecurity practice with distributions like Kali Linux. This isolation prevents software conflicts and keeps your host system clean. Many online tutorials and courses leverage VMs to provide students with a consistent learning environment. Mastering the use of virtualization tools is itself a valuable skill in modern IT.

Linux Career Progression

Entry-Level Roles: Sysadmin, DevOps Engineer

A solid understanding of Linux is a gateway to several exciting entry-level roles in the IT industry. Two prominent paths are Systems Administrator and junior DevOps Engineer. These roles are often the first step for individuals passionate about managing and optimizing IT infrastructure.

A Linux Systems Administrator (Sysadmin) is responsible for installing, configuring, maintaining, and troubleshooting Linux servers and systems. Daily tasks can include managing user accounts and permissions, monitoring system performance, applying patches and updates, ensuring system security, performing backups, and automating routine tasks using shell scripting. Entry-level sysadmins typically work with a variety of Linux distributions and need strong problem-solving skills and a good grasp of networking fundamentals. This role is crucial for the smooth operation of an organization's IT services.

A junior DevOps Engineer bridges the gap between software development (Dev) and IT operations (Ops). While DevOps is a culture and a set of practices, entry-level roles often focus on implementing tools and processes that enable faster and more reliable software delivery. Linux proficiency is vital here, as most DevOps tools and cloud platforms run on Linux. Tasks might include working with version control systems (like Git), continuous integration/continuous deployment (CI/CD) pipelines (using tools like Jenkins or GitLab CI), containerization technologies (Docker), and infrastructure-as-code tools (like Terraform or Ansible). A junior DevOps engineer often collaborates closely with both development and operations teams.

For those starting out, the journey can seem daunting, but remember that every expert was once a beginner. Building a strong foundation in Linux fundamentals, practicing consistently, and perhaps pursuing an entry-level certification can significantly boost your chances. Don't be afraid to start with smaller projects or home labs to build experience. The tech community is often supportive, so engage in forums and online groups. Your ambition and willingness to learn are your greatest assets.

Mid-Career Paths: Cloud Architect, SRE

After gaining a few years of experience and deepening their Linux expertise, professionals can progress into more specialized and strategic mid-career roles. Two such prominent paths are Cloud Architect and Site Reliability Engineer (SRE).

A Cloud Architect designs and oversees an organization's cloud computing strategy. This involves selecting appropriate cloud services (from providers like AWS, Azure, or GCP, all heavily reliant on Linux), designing scalable, resilient, and secure cloud infrastructure, and managing cloud costs. Cloud Architects need a deep understanding of Linux, networking, virtualization, containerization, and various cloud services (compute, storage, databases, security). They work closely with development teams and business stakeholders to translate requirements into technical cloud solutions.

Site Reliability Engineer (SRE) is a role that originated at Google and blends software engineering principles with infrastructure operations. SREs are responsible for the availability, performance, monitoring, and incident response of large-scale systems. They use software engineering practices to automate operational tasks, build tools for monitoring and diagnostics, and design systems that are inherently more reliable and scalable. Strong Linux skills, coding abilities (often in Python or Go), and a deep understanding of distributed systems, networking, and observability are crucial for SREs.

Transitioning into these roles requires continuous learning and a proactive approach to skill development. It's about more than just technical prowess; it's also about understanding the bigger picture, strategic thinking, and often, leadership. If you're at this stage, keep pushing your boundaries, seek out complex challenges, and consider mentoring others. Your experience is valuable, and these roles offer significant opportunities for impact and growth.

Leadership Opportunities: CTO, Open-Source Project Maintainer

With extensive experience, deep technical expertise, and proven leadership capabilities, individuals with a strong Linux background can aspire to significant leadership positions. These roles often involve shaping technology strategy at a high level or guiding the direction of influential open-source projects.

A Chief Technology Officer (CTO), especially at a tech-driven company, often benefits from a profound understanding of underlying technologies like Linux. A CTO is responsible for overseeing the technological needs of an organization, driving innovation, making strategic technology decisions, and managing technology teams. While the role is broad, a background in robust and scalable systems, often built on Linux, provides a strong foundation for making informed architectural and platform choices.

Becoming an Open-Source Project Maintainer for a significant Linux-related project is another prestigious leadership path. Maintainers are highly respected developers responsible for reviewing contributions, guiding the project's technical direction, and ensuring its long-term health and relevance. This role requires exceptional technical skill, a deep understanding of the project's codebase and community, and strong communication and leadership abilities. Linus Torvalds, as the creator and lead maintainer of the Linux kernel, is the prime example of such a role. While not a formal corporate position, it carries immense influence and responsibility within the open-source world.

Reaching these levels requires not just years of experience but also a continuous commitment to learning, a strategic mindset, the ability to inspire and lead others, and often, a significant impact on the technology landscape. The path to such leadership is challenging but immensely rewarding, offering the chance to shape the future of technology.

Freelancing and Consulting in Linux Environments

For experienced Linux professionals who prefer autonomy or wish to apply their expertise across a variety of projects and clients, freelancing and consulting offer viable and often lucrative career paths. The demand for specialized Linux skills means that businesses of all sizes often seek external expertise for specific projects, system upgrades, security audits, or ongoing support.

Freelance Linux professionals might offer services such as server setup and administration, cloud migration, DevOps implementation, performance tuning, security hardening, or custom scripting and automation. Consultants often provide more strategic advice, helping organizations design their IT infrastructure, choose appropriate technologies, troubleshoot complex issues, or develop long-term IT roadmaps. Success in these roles requires not only strong technical skills but also good business acumen, communication abilities, project management skills, and the ability to market oneself effectively.

Building a strong portfolio, networking within the industry, and developing a reputation for reliability and expertise are key to thriving as a Linux freelancer or consultant. While it offers flexibility, it also comes with the responsibilities of managing one's own business, including finding clients, negotiating contracts, and handling finances. For those with the right skills and entrepreneurial spirit, it can be a very fulfilling way to leverage their Linux knowledge.

If you are considering a career shift or exploring different avenues, remember that the skills you've built are valuable. Freelancing can be a great way to test the waters or to build a career on your own terms. OpenCourser's Career Development section might offer additional insights into navigating such transitions.

Linux Security and Ethical Considerations

SELinux and AppArmor Frameworks

Standard Linux security relies on Discretionary Access Control (DAC), where access to objects (like files) is based on user identity and ownership. However, for enhanced security, especially in environments requiring stricter control, Linux offers Mandatory Access Control (MAC) frameworks like SELinux and AppArmor.
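
To make the DAC model concrete, here is a minimal Python sketch that reads the owner, group, and permission bits the kernel consults when deciding access to a file. The helper name describe_permissions and the 0o640 mode are assumptions chosen for illustration; the example works on a throwaway temporary file.

```python
import os
import stat
import tempfile


def describe_permissions(path):
    """Return owner UID, group GID, and an ls-style mode string for path."""
    info = os.stat(path)
    return info.st_uid, info.st_gid, stat.filemode(info.st_mode)


if __name__ == "__main__":
    # Create a throwaway file so the example does not touch real data.
    with tempfile.NamedTemporaryFile() as tmp:
        os.chmod(tmp.name, 0o640)  # owner: read/write, group: read, others: none
        uid, gid, mode = describe_permissions(tmp.name)
        print(f"uid={uid} gid={gid} mode={mode}")
```

Because the file's owner can change these bits at will, the control is "discretionary"; MAC frameworks add rules the owner cannot override.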

SELinux (Security-Enhanced Linux) was originally developed by the U.S. National Security Agency (NSA) and has been integrated into the mainline Linux kernel. It implements MAC by defining security policies that dictate what actions subjects (like processes) can perform on objects (like files, sockets, or other processes). Even if DAC rules would permit an action, SELinux can deny it if it violates the defined policy. SELinux policies are highly granular and can enforce very specific security constraints, significantly reducing the potential damage from compromised applications or user accounts. It operates in modes like "enforcing" (actively blocking violations) or "permissive" (logging violations but not blocking them, useful for policy development). Distributions like Fedora and RHEL use SELinux by default.
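
The enforcing and permissive modes can be inspected from a script. The sketch below assumes the usual selinuxfs mount point, /sys/fs/selinux, where the enforce file holds 1 for enforcing and 0 for permissive; the function name selinux_mode is hypothetical, and a missing file is treated here as "disabled".

```python
from pathlib import Path


def selinux_mode(enforce_file="/sys/fs/selinux/enforce"):
    """Return 'enforcing', 'permissive', or 'disabled' based on selinuxfs."""
    try:
        value = Path(enforce_file).read_text().strip()
    except OSError:
        # The file is missing or unreadable, which usually means selinuxfs
        # is not mounted and SELinux is disabled or not built into the kernel.
        return "disabled"
    return "enforcing" if value == "1" else "permissive"


if __name__ == "__main__":
    print(f"SELinux mode: {selinux_mode()}")
```

On systems with the SELinux userspace tools installed, the getenforce command reports the same information.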

AppArmor (Application Armor) is another MAC system. It was originally developed by Immunix, was later maintained by Novell (SUSE), is now developed largely by Canonical, and is part of the mainline Linux kernel. AppArmor uses profiles associated with specific applications to restrict their capabilities. These profiles define which files an application can access and what actions (read, write, execute) it can perform. Whereas SELinux attaches security labels to files and processes and evaluates a system-wide policy against those labels, AppArmor identifies resources by file path and focuses on confining individual applications. It is often considered easier to learn and manage than SELinux. AppArmor is used by default in Ubuntu and has traditionally been the default in SUSE distributions.
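
On systems with AppArmor enabled, the kernel lists loaded profiles in /sys/kernel/security/apparmor/profiles (typically readable only by root), one "name (mode)" entry per line. The following sketch parses text in that layout; the function name and the sample profile names are assumptions for illustration.

```python
def parse_apparmor_profiles(text):
    """Map each profile name to its mode, e.g. {'/usr/bin/man': 'enforce'}."""
    profiles = {}
    for line in text.splitlines():
        line = line.strip()
        if not line.endswith(")") or " (" not in line:
            continue  # skip blank or unexpected lines
        # Drop the trailing ")" and split on the last " (" so that profile
        # names containing spaces or parentheses are still handled.
        name, mode = line[:-1].rsplit(" (", 1)
        profiles[name] = mode
    return profiles


if __name__ == "__main__":
    # Sample text in the "name (mode)" layout; the second name is made up.
    sample = "/usr/bin/man (enforce)\n/usr/sbin/example-daemon (complain)\n"
    for name, mode in parse_apparmor_profiles(sample).items():
        print(f"{mode:>9}  {name}")
```

A profile in complain mode only logs violations, which plays a role similar to SELinux's permissive mode during policy development.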

Both SELinux and AppArmor aim to limit the scope of potential security breaches by enforcing the principle of least privilege, ensuring that processes only have the permissions absolutely necessary for their legitimate functions. Understanding and configuring these frameworks is a key skill for Linux security professionals. You can explore related topics in Cybersecurity on OpenCourser.

Penetration Testing with Kali Linux

Penetration testing, also known as ethical hacking, is the practice of proactively identifying and exploiting vulnerabilities in computer systems, networks, and applications to assess their security posture. Linux, and specifically distributions tailored for this purpose like Kali Linux, are central to this field.

Kali Linux is a Debian-derived Linux distribution designed for digital forensics and penetration testing. It ships with, or offers packages for, a vast arsenal of security tools, including network scanners (e.g., Nmap), vulnerability scanners (e.g., OpenVAS, with the commercial Nessus scanner available as a separate install), password cracking tools (e.g., John the Ripper, Hashcat), exploitation frameworks (e.g., Metasploit Framework), wireless attack tools (e.g., Aircrack-ng), and web application security tools (e.g., Burp Suite, OWASP ZAP). These tools allow security professionals to simulate real-world attacks in a controlled manner to uncover weaknesses before malicious actors do.

Penetration testers use Kali Linux throughout the various phases of an engagement: reconnaissance (gathering information about the target), scanning (identifying live hosts, open ports, and services), gaining access (exploiting vulnerabilities), maintaining access, and covering tracks. The skills involved require a deep understanding of Linux, networking protocols, operating systems, and common vulnerabilities. Ethical hacking requires strict adherence to legal and ethical guidelines, always operating with explicit permission from the target organization.
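
The scanning phase can be illustrated with a very small TCP connect check, which is the basic idea behind a connect scan. This is a teaching sketch, not a replacement for a tool like Nmap; the function name is_port_open and the port list are assumptions, and the example probes only the local machine.

```python
import socket


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # Only probe systems you own or are explicitly authorized to test.
    for port in (22, 80, 443):
        state = "open" if is_port_open("127.0.0.1", port) else "closed or filtered"
        print(f"127.0.0.1:{port} is {state}")
```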

For those interested in this domain, hands-on practice in safe, virtual lab environments is crucial. Many online resources and certifications focus on penetration testing methodologies and tool usage.

Ethical Debates Around Kernel Vulnerabilities

The discovery and disclosure of vulnerabilities in the Linux kernel, like any widely used software, spark ongoing ethical debates within the security community and beyond. The core tension often lies between the principles of full disclosure (making vulnerability details public quickly to pressure vendors to fix them and inform users) and responsible disclosure (privately reporting vulnerabilities to vendors, allowing them time to develop and release patches before public announcement).

Proponents of full disclosure argue that it creates transparency and holds vendors accountable, ensuring that vulnerabilities are addressed promptly. They believe that users have a right to know about risks to their systems. However, critics contend that immediate public disclosure can provide malicious actors with a window of opportunity to exploit the vulnerability before patches are widely deployed, potentially causing significant harm.

Responsible disclosure, on the other hand, aims to minimize this risk by giving vendors a grace period (e.g., 30, 60, or 90 days) to fix the issue. If a patch is not released within this timeframe, the finder might then proceed with public disclosure. This approach is favored by many organizations and security researchers as a balanced way to protect users while still incentivizing timely fixes. However, debates continue about appropriate timelines, the level of detail to disclose, and how to handle situations where vendors are unresponsive or fixes are inadequate.
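
The grace-period arithmetic is simple enough to sketch. The snippet below computes the earliest public-disclosure date for a given report date and window; the function name and the report date are hypothetical, and real policies often add extensions or exceptions that are not modeled here.

```python
from datetime import date, timedelta


def disclosure_deadline(reported_on, grace_days=90):
    """Return the earliest public-disclosure date under a fixed grace period."""
    return reported_on + timedelta(days=grace_days)


if __name__ == "__main__":
    reported = date(2024, 1, 15)  # hypothetical report date
    for days in (30, 60, 90):
        print(f"{days}-day window ends on {disclosure_deadline(reported, days)}")
```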

The Linux kernel community generally follows a responsible disclosure model. Vulnerabilities are typically reported to security mailing lists and kernel developers, and patches are developed and integrated. The open-source nature of Linux means that the process of patching and the details of vulnerabilities eventually become public, allowing everyone to learn from them. These ethical considerations are vital for maintaining trust and security within the ecosystem.

Compliance (GDPR, HIPAA) in Linux Systems

Organizations that handle sensitive data, such as personal information or health records, are often subject to stringent regulatory compliance requirements like the General Data Protection Regulation (GDPR) in Europe or the Health Insurance Portability and Accountability Act (HIPAA) in the United States. While Linux itself is a general-purpose operating system, its configuration and the security measures implemented around it are crucial for meeting these compliance mandates.

For GDPR compliance, organizations using Linux systems to process personal data of EU residents must ensure appropriate technical and organizational measures are in place to protect that data. This includes implementing strong access controls, encryption (both at rest and in transit), audit logging to track access and modifications to data, data backup and recovery mechanisms, and processes for data breach notification. Linux offers tools and features that can help achieve these goals, such as file permissions, encryption utilities (like LUKS for disk encryption and GPG for file encryption), logging systems (like rsyslog or journald), and security frameworks like SELinux or AppArmor to enforce data handling policies.

Similarly, for HIPAA compliance, healthcare organizations and their associates using Linux systems to handle Protected Health Information (PHI) must implement administrative, physical, and technical safeguards. Technical safeguards relevant to Linux systems include access controls (ensuring only authorized individuals can access PHI), audit controls (recording and examining activity in systems containing PHI), integrity controls (ensuring PHI is not improperly altered or destroyed), and transmission security (protecting PHI when transmitted over electronic networks, often through encryption). Regular security assessments, vulnerability management, and staff training are also key components.
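
One common building block for integrity controls is a recorded cryptographic checksum that is later compared against the file's current contents. The sketch below uses SHA-256 from Python's standard library; the function names are assumptions, and a real deployment would also need to protect the recorded digests themselves from tampering.

```python
import hashlib
import tempfile


def sha256_of_file(path, chunk_size=65536):
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_unmodified(path, expected_digest):
    """Return True if the file's current digest matches the recorded one."""
    return sha256_of_file(path) == expected_digest


if __name__ == "__main__":
    with tempfile.NamedTemporaryFile("w+", suffix=".txt") as record:
        record.write("record-id,visit-date\n")
        record.flush()
        baseline = sha256_of_file(record.name)  # digest recorded at a known-good time
        record.write("unexpected change\n")
        record.flush()
        print("unmodified:", is_unmodified(record.name, baseline))
```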

Achieving compliance is not just about the operating system itself but about the entire ecosystem of policies, procedures, and technologies surrounding it. Linux provides a flexible and robust foundation upon which compliant systems can be built, but it requires careful configuration, ongoing management, and a strong understanding of the specific regulatory requirements.

Linux in Emerging Technologies

Edge Computing with Yocto Project

Edge computing is a distributed computing paradigm that brings computation and data storage closer to the sources of data. This is done to improve response times and save bandwidth, which is critical for applications like IoT devices, autonomous vehicles, and industrial automation. Linux is a natural fit for edge devices due to its scalability, flexibility, and strong community support. The Yocto Project plays a significant role in enabling Linux for edge computing.

The Yocto Project is an open-source collaboration project that provides templates, tools, and methods to help developers create custom Linux-based systems for embedded products, regardless of the hardware architecture. It allows for the creation of highly tailored Linux distributions that include only the necessary components for a specific edge device, minimizing the footprint, reducing attack surfaces, and optimizing performance. This is crucial for resource-constrained edge devices.

Using the Yocto Project, developers can build a complete Linux distribution from source, precisely controlling the software stack. This includes the kernel, bootloader, libraries, and applications. This level of customization is essential for meeting the diverse requirements of edge computing scenarios, from small sensors to more powerful edge gateways. As edge computing continues to grow, the role of Linux, facilitated by tools like the Yocto Project, will become even more critical in managing and processing data at the periphery of the network.

AI/ML Pipelines on Linux Clusters

Artificial Intelligence (AI) and Machine Learning (ML) have become transformative technologies, and Linux is the predominant operating system for developing and deploying AI/ML workloads. From research labs to large-scale production systems, AI/ML pipelines are typically built and run on Linux clusters.

Several factors contribute to Linux's dominance in this field. Firstly, most popular AI/ML frameworks and libraries, such as TensorFlow, PyTorch, scikit-learn, and Keras, treat Linux as a primary, well-tested platform. Secondly, Linux provides robust support for specialized hardware, such as NVIDIA GPUs (Graphics Processing Units), that is essential for accelerating the training of deep learning models. NVIDIA's CUDA toolkit and drivers are well-supported on Linux. Thirdly, the open-source nature and command-line power of Linux make it ideal for managing complex software dependencies, automating workflows, and scaling computations across clusters of machines using tools like Kubernetes or Slurm.

AI/ML pipelines involve several stages, including data ingestion, preprocessing, model training, evaluation, deployment, and monitoring. Linux clusters provide the scalable compute resources needed for these demanding tasks. Containerization technologies like Docker, running on Linux, are widely used to package AI/ML environments, ensuring reproducibility and simplifying deployment. The combination of powerful open-source software, hardware support, and scalability makes Linux the go-to platform for innovation in AI and Machine Learning. You can explore Artificial Intelligence and Machine Learning further on OpenCourser.
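
The stage structure can be sketched with plain Python functions chained together. This toy example is an assumption-laden illustration only: the data is made up, the "model" is a one-parameter least-squares fit, and real pipelines would use a framework and run each stage on cluster resources.

```python
from statistics import mean


def ingest():
    """Stand-in for data ingestion: return (feature, label) pairs."""
    return [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 8.1)]


def preprocess(rows):
    """Split rows into a training set and a held-out evaluation set."""
    return rows[:3], rows[3:]


def train(rows):
    """Fit y = w * x through the origin using least squares."""
    numerator = sum(x * y for x, y in rows)
    denominator = sum(x * x for x, _ in rows)
    return numerator / denominator


def evaluate(weight, rows):
    """Return the mean absolute error of the fitted model on rows."""
    return mean(abs(weight * x - y) for x, y in rows)


if __name__ == "__main__":
    train_rows, eval_rows = preprocess(ingest())
    weight = train(train_rows)
    print(f"weight={weight:.3f} mae={evaluate(weight, eval_rows):.3f}")
```

Keeping each stage behind its own function boundary is what makes it practical to package stages in separate containers or schedule them as separate jobs.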

Blockchain Node Deployment

Blockchain technology, the distributed ledger system underpinning cryptocurrencies like Bitcoin and Ethereum, and enabling various decentralized applications (dApps), heavily relies on Linux for node deployment and network participation. A blockchain node is a computer connected to the blockchain network that stores a copy of the ledger (or parts of it) and validates transactions and blocks according to the network's consensus rules.
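
The ledger-validation idea can be illustrated with a toy hash chain, in which each block stores the hash of its predecessor. This is a deliberately simplified sketch: the block layout and function names are assumptions, and real networks layer signatures, consensus rules, and much more on top of this linking.

```python
import hashlib
import json


def block_hash(index, previous_hash, data):
    """Hash a block's contents deterministically with SHA-256."""
    payload = json.dumps(
        {"index": index, "previous_hash": previous_hash, "data": data},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def make_block(index, previous_hash, data):
    """Build a block dictionary that carries its own hash."""
    return {
        "index": index,
        "previous_hash": previous_hash,
        "data": data,
        "hash": block_hash(index, previous_hash, data),
    }


def is_chain_valid(chain):
    """Check each block's own hash and its link to the previous block."""
    for position, block in enumerate(chain):
        expected = block_hash(block["index"], block["previous_hash"], block["data"])
        if block["hash"] != expected:
            return False
        if position > 0 and block["previous_hash"] != chain[position - 1]["hash"]:
            return False
    return True


if __name__ == "__main__":
    chain = [make_block(0, "0" * 64, "genesis")]
    for index, data in enumerate(["alice pays bob", "bob pays carol"], start=1):
        chain.append(make_block(index, chain[-1]["hash"], data))
    print("valid before tampering:", is_chain_valid(chain))
    chain[1]["data"] = "alice pays mallory"  # simulate tampering with history
    print("valid after tampering:", is_chain_valid(chain))
```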

Linux offers several advantages for running blockchain nodes. Its stability and reliability are crucial for nodes that need to operate 24/7 to maintain network integrity. The security features of Linux, including its robust permission model and tools for network hardening, help protect nodes from attacks. Furthermore, the command-line interface and scripting capabilities of Linux are well-suited for managing node software, automating updates, and monitoring node performance. Most blockchain client software (e.g., Geth for Ethereum, Bitcoin Core) is developed with strong support for Linux environments.

Developers and organizations setting up blockchain nodes, whether for participating in a public network, mining/staking, or building private/consortium blockchains, typically choose Linux as their operating system. Cloud platforms, which predominantly use Linux, are also a popular choice for hosting blockchain nodes due to their scalability and ease of deployment. As blockchain technology continues to evolve and find new applications, Linux will likely remain a foundational element of its infrastructure. Explore more about Blockchain on OpenCourser.

IoT Device Firmware Development

The Internet of Things (IoT) encompasses a vast network of interconnected physical devices, vehicles, home appliances, and other items embedded with electronics, software, sensors, actuators, and connectivity that enable them to collect and exchange data. Linux has emerged as a leading operating system for a significant portion of these IoT devices, especially those requiring more processing power and networking capabilities than simple microcontrollers can provide.

Developing firmware for Linux-based IoT devices involves creating a customized Linux distribution that is optimized for the specific hardware and application. This includes selecting an appropriate kernel version, configuring necessary drivers, choosing a root filesystem, and including the required libraries and application software. Tools like the Yocto Project and Buildroot are commonly used to streamline this complex process, allowing developers to build lean, secure, and efficient firmware images. The firmware must also often include mechanisms for over-the-air (OTA) updates to deploy new features and security patches remotely.

Linux offers a rich development environment, extensive networking capabilities (Wi-Fi, Bluetooth, Ethernet, cellular), and support for a wide range of peripherals, making it suitable for diverse IoT applications, from smart home hubs and industrial sensors to medical monitors and connected cars. Security is a major concern in IoT, and Linux provides various tools and techniques for hardening devices, such as secure boot, access control, and encryption. The large developer community and wealth of open-source software also accelerate IoT firmware development on Linux.

Challenges in the Linux Ecosystem

Hardware Compatibility Issues

While Linux boasts impressive hardware support across a vast range of architectures and devices, hardware compatibility can still present challenges, particularly for newer or niche components. Unlike proprietary operating systems where hardware manufacturers often prioritize driver development due to market share, Linux driver support sometimes relies on community efforts, reverse engineering, or delayed releases from manufacturers.

Users might encounter issues with brand-new graphics cards, Wi-Fi chipsets, or specialized peripherals where Linux drivers are not immediately available or fully functional upon the hardware's release. This can lead to a frustrating experience, requiring users to search for workarounds, compile drivers from source, or wait for official support to be integrated into the kernel or distributions. While the situation has significantly improved over the years, with many manufacturers now actively contributing to Linux driver development, gaps can still exist.

Another aspect is the complexity of certain hardware configurations, such as laptops with hybrid graphics or unique power management features. Getting these to work flawlessly on Linux might require additional configuration or specific kernel parameters. However, the Linux community is generally very resourceful, and solutions or workarounds are often shared through forums and wikis. For most standard hardware components, Linux compatibility is excellent, but it's always a good practice to research compatibility before purchasing new hardware specifically for Linux use.
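
When troubleshooting a device, a useful first check is whether its driver is loaded as a kernel module. The sketch below parses /proc/modules, where the first field on each line is the module name. The function names and the two driver names are assumptions for illustration, and drivers compiled directly into the kernel will not appear in this list.

```python
def loaded_module_names(proc_modules_text):
    """Return the set of module names from /proc/modules-style text."""
    names = set()
    for line in proc_modules_text.splitlines():
        fields = line.split()
        if fields:
            names.add(fields[0])  # the first field is the module name
    return names


def read_loaded_modules(path="/proc/modules"):
    """Read the live module list, or return an empty set if unavailable."""
    try:
        with open(path, encoding="utf-8") as handle:
            return loaded_module_names(handle.read())
    except OSError:
        return set()


if __name__ == "__main__":
    modules = read_loaded_modules()
    for wanted in ("iwlwifi", "amdgpu"):  # example driver names to look for
        state = "loaded" if wanted in modules else "not loaded as a module"
        print(f"{wanted}: {state}")
```

The lsmod command presents the same information in a formatted table.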

Enterprise Support vs. Community Editions

A key consideration for organizations adopting Linux is the difference between enterprise-supported distributions and community-driven editions. While both are built upon the same open-source Linux kernel, they cater to different needs and offer varying levels of assurance and service.

Enterprise Linux distributions, such as Red Hat Enterprise Linux (RHEL) and SUSE Linux Enterprise Server (SLES), are commercial offerings that come with paid subscriptions. These subscriptions typically include access to certified and thoroughly tested software releases, long-term support (often 10 years or more), security patches, bug fixes, technical support from the vendor, and compatibility guarantees with specific hardware and software. This model provides businesses with a level of stability, predictability, and accountability that is often crucial for mission-critical systems.

Community editions, like Fedora, Debian, or CentOS Stream, are developed and supported by a global community of volunteers and organizations. Ubuntu, which is developed by Canonical, is likewise free to use, with paid support available separately. These distributions are generally free to use and distribute. While many community distributions are incredibly stable and reliable (Debian Stable is a prime example), their support model is different. Support often comes from community forums, mailing lists, and online documentation. Release cycles can be faster (e.g., Fedora), offering newer software but potentially shorter support windows for each release. While some organizations successfully use community editions in production, they typically need strong in-house Linux expertise or rely on third-party support providers if they require service-level agreements.

The challenge for some enterprises can be choosing the right model. The cost of enterprise subscriptions needs to be weighed against the potential risks and internal resource requirements of managing community editions for critical workloads. For many, the peace of mind and dedicated support offered by enterprise vendors justify the expense, especially in regulated industries or for systems demanding high availability.

Skill Gaps in Legacy System Maintenance

While Linux is at the forefront of modern technology, many organizations still rely on older, legacy systems that may be running outdated versions of Linux or other Unix-like operating systems. Maintaining these legacy systems can present significant challenges due to a growing skill gap.

As technology evolves rapidly, newer IT professionals are often trained on the latest tools, cloud platforms, and modern Linux distributions. Expertise in older kernel versions, specific proprietary Unix variants (which share conceptual similarities with Linux), or archaic system administration practices may become rarer. Finding individuals with the skills and willingness to maintain these aging systems can be difficult and expensive. The original developers or administrators of these systems may have retired or moved on, leaving behind poorly documented or complex environments.

Migrating legacy applications to modern platforms is often the ideal solution but can be a complex, costly, and time-consuming undertaking, especially if the applications are critical and deeply integrated. Therefore, organizations might find themselves in a situation where they need to keep legacy Linux systems running for an extended period. This requires careful planning for knowledge transfer, investing in specialized training if possible, or engaging consultants with experience in legacy system maintenance. The challenge highlights the importance of continuous modernization efforts and proactive succession planning for critical IT skills.

Competition with Proprietary Cloud Platforms

While Linux underpins the vast majority of cloud infrastructure, including that of major proprietary cloud providers like AWS, Azure, and GCP, these platforms also offer a wide array of managed services and platform-as-a-service (PaaS) offerings that can, in some ways, compete with traditional Linux-centric approaches.

For example, instead of an organization setting up and managing its own database server on a Linux VM in the cloud, it might opt for a managed database service (like Amazon RDS or Azure SQL Database). Similarly, instead of deploying applications on Linux VMs, developers might use serverless computing platforms (like AWS Lambda or Azure Functions) or managed container orchestration services (like Amazon ECS/EKS, Azure Kubernetes Service). These managed services abstract away much of the underlying operating system management, including patching, scaling, and high availability, which can be attractive to businesses looking to reduce operational overhead and focus on application development.

This doesn't diminish the role of Linux, as it's still running underneath these services. However, it means that some of the traditional tasks of Linux system administration are handled by the cloud provider. The "competition" is more about the level of abstraction at which organizations choose to operate. This shift can reduce the direct need for Linux administration roles focused on OS-level tasks within those managed services. At the same time, it creates demand for professionals who understand how to integrate and manage these cloud services, work that often still requires strong Linux and scripting skills for automation, monitoring, and interaction with the cloud platforms' APIs. The challenge for Linux professionals is to adapt and acquire skills relevant to these evolving cloud-native architectures.

Understanding cloud concepts is increasingly important for Linux professionals.

Frequently Asked Questions (Career Focus)

Is Linux certification necessary for cloud engineering roles?

While not always strictly mandatory, Linux certifications can be beneficial for aspiring cloud engineers. Cloud platforms like AWS, Azure, and GCP heavily rely on Linux for their underlying infrastructure and many of their services. Therefore, a strong understanding of Linux is fundamental for most cloud engineering roles. Certifications such as LPIC-1, CompTIA Linux+, or RHCSA can formally validate your Linux skills and demonstrate to potential employers that you have a foundational competency.

However, practical experience and demonstrable skills often carry more weight than certifications alone. Employers in the cloud space are typically looking for candidates who can manage Linux instances in the cloud, automate tasks using scripting (Bash, Python), understand networking in a cloud context, and work with containerization technologies like Docker and orchestration tools like Kubernetes, all of which run predominantly on Linux. If you have strong project experience, contributions to open-source projects, or a portfolio showcasing your abilities, these can be just as compelling as a certification, if not more so.

In summary, a Linux certification can be a valuable asset, especially for entry-level positions or when transitioning into a cloud role, as it can help your resume stand out and provide a structured learning path. However, it should be complemented by hands-on experience and a deep understanding of cloud-specific technologies. Many cloud provider certifications (e.g., AWS Certified Solutions Architect, Azure Administrator Associate) also assume or test Linux knowledge implicitly.

How does Linux experience impact software developer salaries?

Linux experience can positively impact a software developer's salary, particularly in certain domains. Many modern software development stacks, especially in web development, backend systems, cloud computing, and embedded systems, are built on or deployed to Linux environments. Developers proficient in Linux often possess a deeper understanding of the systems their code runs on, which can lead to more efficient, robust, and secure applications.

Skills such as navigating the command line, shell scripting for automation, understanding system calls, debugging on Linux, and working with Linux-based development tools (compilers, debuggers, version control) are highly valued. For roles in DevOps, backend development, or systems programming, Linux proficiency is often a core requirement and can command higher salaries. Salary surveys and job market analyses generally indicate that roles which explicitly require Linux skills, or which operate within Linux-heavy ecosystems, offer competitive compensation. Robert Half's salary guides, for instance, often indicate that specialized skills, including operating system expertise, can influence earning potential. U.S. Bureau of Labor Statistics (BLS) data on Computer and Information Technology Occupations also gives broad insight into earning potential in related fields, although it may not break figures down by specific OS skills.

Furthermore, experience with Linux-based technologies like Docker, Kubernetes, and cloud platforms often translates to higher earning potential, as these skills are in high demand. While the exact impact varies by location, experience level, and specific role, a strong foundation in Linux generally makes a software developer a more versatile and valuable asset, which is often reflected in compensation.

Can Linux skills transition to cybersecurity careers?

Absolutely. Linux skills are not only transferable but often essential for a career in cybersecurity. Many cybersecurity tools, platforms, and methodologies are built on or heavily utilize Linux. For example, Kali Linux, a popular distribution for penetration testing and digital forensics, is Debian-based and comes packed with security tools, many of which require command-line proficiency.

Cybersecurity professionals in roles such as Security Analyst, Penetration Tester, Security Engineer, or Digital Forensics Investigator frequently work with Linux systems. They need to understand Linux internals to analyze system logs for malicious activity, configure firewalls and intrusion detection systems, assess vulnerabilities in Linux servers and applications, and conduct security audits. Skills in shell scripting (Bash) and programming languages like Python are also crucial for automating security tasks, analyzing malware, and developing custom security tools on Linux platforms.
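As a rough illustration of the log analysis mentioned above, the following Python sketch counts failed SSH password attempts per source IP address. The log lines are made-up samples using documentation IP ranges, and the regular expression assumes a common sshd message format; real formats vary by distribution and OpenSSH version, so treat the pattern as an assumption to adapt.

```python
import re
from collections import Counter

# Assumed sshd failure format; adjust the pattern to match your own logs.
FAILED_LOGIN = re.compile(
    r"Failed password for (?:invalid user )?(?P<user>\S+) from (?P<ip>\S+)"
)

def count_failed_logins(lines):
    """Return a Counter mapping source IP -> number of failed password attempts."""
    counts = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group("ip")] += 1
    return counts

# Hypothetical sample data standing in for a real authentication log.
SAMPLE_LOG = [
    "Jan 10 03:12:01 host sshd[811]: Failed password for root from 203.0.113.5 port 4242 ssh2",
    "Jan 10 03:12:04 host sshd[811]: Failed password for invalid user admin from 203.0.113.5 port 4250 ssh2",
    "Jan 10 03:15:40 host sshd[902]: Accepted publickey for alice from 198.51.100.7 port 5022 ssh2",
    "Jan 10 03:20:11 host sshd[950]: Failed password for bob from 198.51.100.9 port 6000 ssh2",
]

if __name__ == "__main__":
    # List the noisiest sources first.
    for ip, attempts in count_failed_logins(SAMPLE_LOG).most_common():
        print(f"{ip}: {attempts} failed attempts")
```

In practice the same function could be fed lines read from an authentication log file, whose location and format depend on the distribution and logging setup.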

Moreover, understanding how Linux systems are secured (e.g., user permissions, process isolation, MAC frameworks like SELinux/AppArmor) provides a strong foundation for defending against attacks and implementing robust security measures. Many security incidents involve Linux servers, so being able to navigate and analyze these systems is critical. Therefore, a background in Linux system administration or development provides an excellent springboard into various cybersecurity specializations.
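To make the point about user permissions concrete, here is a minimal Python sketch of one small audit step: finding regular files that any user on the system may write to. The function name `find_world_writable` is illustrative, and a real audit would cover far more than this single check.

```python
import os
import stat

def find_world_writable(root):
    """Yield paths of regular files under root that any user may write to."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                # lstat does not follow symlinks, so links are skipped below.
                mode = os.lstat(path).st_mode
            except OSError:
                continue  # file vanished or is unreadable; skip it
            # S_IWOTH is the "others may write" permission bit.
            if stat.S_ISREG(mode) and mode & stat.S_IWOTH:
                yield path
```

Calling `list(find_world_writable(some_directory))` on a directory you are reviewing returns the files worth a closer look; whether a world-writable file is actually a problem depends on its purpose.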


What industries prioritize Linux expertise?

Linux expertise is prioritized across a wide range of industries due to its versatility, stability, security, and cost-effectiveness. Some key sectors where Linux skills are in particularly high demand include:

- Technology and Software Development: This is perhaps the most obvious. Companies developing software, web applications, mobile apps (Android is Linux-based), and cloud services heavily rely on Linux for their development, testing, and deployment environments.

- Cloud Computing: Major cloud providers (AWS, Azure, GCP) and companies using cloud services extensively need professionals with Linux skills to manage cloud infrastructure and deploy applications.

- Telecommunications: Telecom companies use Linux in network equipment, servers, and for managing their vast infrastructure.

- Finance and Banking: The financial industry values Linux for its stability and security in running trading platforms, backend systems, and data analytics. High-frequency trading systems often run on optimized Linux kernels.

- Scientific Research and Academia: As mentioned earlier, Linux dominates High-Performance Computing (HPC) used in scientific research and is a staple in university computer science programs.

- Government and Defense: Many government agencies and defense contractors use Linux for its security features and customizability.

- Manufacturing and Industrial Automation: Linux is increasingly used in embedded systems for industrial control, robotics, and IoT devices within manufacturing environments.

- Media and Entertainment: Animation studios and visual effects companies often use Linux-based render farms and workstations for their processing power and flexibility.

- Healthcare: Although the sector is subject to strict regulations like HIPAA, Linux is used in some medical devices, hospital information systems, and research databases.

Essentially, any industry that relies on robust server infrastructure, cloud computing, embedded systems, or requires significant data processing and analysis is likely to prioritize Linux expertise. The open-source nature of Linux also makes it attractive to startups and organizations looking for cost-effective and customizable solutions.

How can you demonstrate Linux skills without formal education?

Demonstrating Linux skills without a formal computer science degree is entirely possible and quite common in the tech industry, which often values practical abilities and experience. Here are several ways to showcase your Linux proficiency:

- Build a Portfolio of Projects: Create personal projects that utilize Linux. This could be setting up a home server (web server, NAS, media server on a Raspberry Pi), developing scripts to automate tasks, configuring complex network setups in a virtual lab, or building applications that run on Linux. Document these projects on a personal website, blog, or GitHub repository.

- Contribute to Open-Source Projects: As mentioned earlier, contributing to Linux distributions or other open-source software demonstrates real-world experience, collaboration skills, and technical competence. Even small contributions like bug fixes, documentation improvements, or testing can be valuable.

- Earn Certifications: Industry-recognized certifications like LPIC-1, CompTIA Linux+, or RHCSA can formally validate your skills and provide a credential that employers recognize. These often require hands-on lab work to pass.

- Create Online Content: Write blog posts, create video tutorials, or answer questions on forums like Stack Exchange or Reddit communities related to Linux. This not only helps others but also solidifies your own understanding and showcases your expertise.

- Participate in CTFs and Coding Challenges: Engaging in Capture The Flag (CTF) competitions (especially those focused on Linux security) or coding challenges that require Linux environments can demonstrate problem-solving abilities and technical depth.

- Home Lab Experimentation: Detail your home lab setup and the experiments you've conducted. Explain the challenges you faced and how you overcame them. This shows initiative and a passion for learning.

- Networking and Community Involvement: Attend local Linux User Group (LUG) meetings, conferences (many have virtual options), or online communities. Engaging with other professionals can lead to learning opportunities and connections that might vouch for your skills.
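As one example of the kind of small automation script that could go into such a portfolio, the Python sketch below reports how full a filesystem is and flags it when usage crosses a threshold. The 90% default and the function names are arbitrary choices for illustration, not a recommended standard.

```python
import shutil

def percent_used(total, used):
    """Return used space as a percentage of total, rounded to one decimal."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(used / total * 100, 1)

def check_disk(path="/", threshold=90.0):
    """Return (percent, over_threshold) for the filesystem containing path."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    percent = percent_used(usage.total, usage.used)
    return percent, percent >= threshold

if __name__ == "__main__":
    percent, alert = check_disk("/")
    status = "ALERT" if alert else "ok"
    print(f"/ is {percent}% full [{status}]")
```

A script like this becomes a better portfolio piece once it is scheduled (for example with cron or a systemd timer) and documented with the reasoning behind its design.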

When applying for jobs, tailor your resume to highlight these practical experiences and skills. During interviews, be prepared to discuss your projects in detail and solve technical problems, often involving a Linux command line. Enthusiasm and a demonstrable passion for learning can go a long way. Consider taking online courses to structure your learning and gain specific skills. Many platforms, like OpenCourser, list a wide variety of Linux courses.


Remote work opportunities for Linux professionals

Remote work opportunities for Linux professionals are abundant and have been steadily increasing, a trend accelerated by global shifts in work culture. The nature of Linux-related work, which often involves managing systems and software that can be accessed from anywhere with an internet connection, lends itself well to remote arrangements.