Computer Architecture

Navigating the World of Computer Architecture

Computer Architecture is, at its core, the conceptual design and fundamental operational structure of a computer system. Think of it as the detailed blueprint that dictates how a computer's hardware components are organized and how they interact to execute software. This intricate field serves as the critical bridge between the physical hardware and the software that runs on it, defining everything from the types of instructions a processor can understand to how data is managed and moved throughout the system. For anyone intrigued by what makes computers tick, understanding computer architecture offers a fascinating journey into the heart of modern technology.

Working in computer architecture can be deeply engaging. It involves solving complex puzzles to make computers faster, more efficient, and capable of tackling new challenges, from powering vast data centers to enabling the tiny, powerful chips in our smartphones. There's also the excitement of being at the forefront of innovation, constantly pushing the boundaries of what's possible in computing, whether that's designing processors for the next generation of artificial intelligence or creating ultra-low-power chips for new Internet of Things (IoT) devices. This field is not just about building computers; it's about shaping the future of how we interact with the digital world.

What is Computer Architecture?

Computer Architecture is the discipline that defines the functional behavior and organization of computer systems. It's the art and science of selecting and interconnecting hardware components to create computers that meet functional, performance, and cost goals. Essentially, it provides the 'blueprint' for a computer system, detailing how all the parts work together. This field is crucial because it directly impacts a computer's capabilities, speed, energy consumption, and overall efficiency. Whether you are a software developer, a hardware engineer, or simply a technology enthusiast, a foundational understanding of computer architecture can provide valuable insights into how these ubiquitous machines operate.

The Blueprint of a Computer System

Imagine you're building with LEGOs. Computer architecture is like the master plan that shows you which blocks you have, what each block can do, and how they should connect to build your desired creation, be it a race car or a spaceship. It doesn't necessarily specify the exact color or material of each LEGO brick (that's more like the physical implementation or microarchitecture), but it defines the overall structure and how the different parts function together. In a computer, these "blocks" are components like the central processing unit (CPU), memory, and input/output devices.

The architecture defines what these components are and how they communicate. For example, it specifies what kind of instructions the CPU can understand, how memory is accessed, and how data flows between different parts of the system. This blueprint ensures that all components can work harmoniously, allowing software programs to run effectively and efficiently.

Understanding this blueprint is vital because it determines the capabilities and limitations of a computer. A well-designed architecture can lead to a fast and efficient system, while a poorly designed one can result in bottlenecks and sluggish performance. It's the foundational plan that all software and hardware engineers rely on.

The Hardware-Software Interface: Instruction Set Architecture (ISA)

At the heart of computer architecture lies the Instruction Set Architecture, or ISA. You can think of the ISA as the official language that the hardware (the processor) and the software (programs) use to communicate. It's a contract, defining all the operations the processor can perform, the data types it can handle, how it manages memory, and how it deals with inputs and outputs. When a programmer writes code in a high-level language like Python or Java, that code is eventually translated (compiled or interpreted) into a series of these specific instructions that the processor can directly execute.

For example, an ISA will define an instruction for adding two numbers, another for moving data from memory to a temporary storage location within the processor (a register), and yet another for deciding what instruction to execute next based on a certain condition. Software developers write programs using these fundamental building blocks, and hardware designers build processors that can understand and execute these instructions efficiently.

The ISA is a critical abstraction layer. It allows software to run on different hardware implementations as long as they adhere to the same ISA. This means a program compiled for a specific ISA can, in theory, run on any processor that supports that ISA, regardless of the underlying physical design of that processor. This separation is what allows Intel and AMD to produce different processors that can still run the same Windows or Linux operating systems and applications.


Architecture vs. Microarchitecture

It's important to distinguish between computer architecture and microarchitecture, though the terms are sometimes used interchangeably. As we've discussed, architecture (often referring to the ISA) is the logical design – it's what the computer does from a programmer's perspective. It defines the instructions, registers, memory addressing modes, and the overall behavior of the system. It's the "what."

Microarchitecture, on the other hand, is the physical implementation of that architecture – it's how the computer actually does it. It involves the specific design of the circuits, the arrangement of components on a chip, the number of execution units, the size and organization of caches, and the techniques used to speed up instruction execution (like pipelining or out-of-order execution). It's the "how."

Think of it this way: the architecture of a car might specify that it has an engine, four wheels, a steering wheel, and can accelerate, brake, and turn. This is the functional definition. The microarchitecture would be the specific type of engine (V6, electric), the design of the transmission, the layout of the cylinders, and the materials used. Different car manufacturers can implement the same basic architecture (a functional car) with vastly different microarchitectures, leading to variations in performance, fuel efficiency, and cost. Similarly, two processors can implement the same ISA (e.g., x86) but have very different microarchitectures, resulting in different speeds and power consumption.
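To make the distinction concrete, here is a minimal Python sketch built around a hypothetical 32-bit `MUL` instruction in a toy ISA. The architecture only promises the result: the unsigned product, truncated to 32 bits. The two functions stand in for two microarchitectures, one with a dedicated multiplier and one that reuses an adder in a shift-and-add loop. The cycle counts are invented for illustration and are not measurements of any real processor.

```python
from typing import Tuple

# Two hypothetical microarchitectures for the same toy MUL instruction.
# Architectural contract (what software sees): unsigned 32-bit product,
# truncated to 32 bits. Cycle costs are invented for illustration.
MASK32 = 0xFFFFFFFF


def mul_hardware_multiplier(a: int, b: int) -> Tuple[int, int]:
    """Implementation A: a dedicated multiplier with a fixed (assumed) latency."""
    a, b = a & MASK32, b & MASK32
    return (a * b) & MASK32, 3  # (result, cycles)


def mul_shift_and_add(a: int, b: int) -> Tuple[int, int]:
    """Implementation B: reuse the adder, spending one cycle per bit of b."""
    a, b = a & MASK32, b & MASK32
    result, cycles = 0, 0
    while b:
        if b & 1:                      # add the shifted multiplicand for each 1 bit
            result = (result + a) & MASK32
        a = (a << 1) & MASK32
        b >>= 1
        cycles += 1
    return result, max(cycles, 1)      # even a multiply by 0 occupies one cycle


print(mul_hardware_multiplier(6, 200))  # (1200, 3)
print(mul_shift_and_add(6, 200))        # (1200, 8)
```

Software sees identical results from both functions; only the timing differs. That is exactly the freedom the split between architecture and microarchitecture gives hardware designers.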

Why Understanding Computer Architecture is Crucial

In our increasingly digital world, understanding computer architecture is more crucial than ever. For software developers, it helps in writing more efficient code by understanding how their programs interact with the underlying hardware. Knowing how memory is organized, how caches work, and how processors execute instructions can lead to significant performance improvements in applications. This is especially true for performance-critical software like games, operating systems, databases, and high-performance computing applications.

For hardware engineers, a deep understanding of architecture is fundamental to designing the next generation of processors, memory systems, and other components. It allows them to make informed trade-offs between performance, power consumption, cost, and area. As technology scales and new computing paradigms emerge (like AI accelerators and quantum computers), the role of the computer architect becomes even more vital in pushing the boundaries of what's possible.

Even for those not directly involved in hardware or software development, a basic grasp of computer architecture can demystify how computers work, enabling more informed decisions when choosing technology and a better appreciation for the complexity and ingenuity behind the devices we use every day. It underpins everything from the smartphones in our pockets to the supercomputers crunching vast datasets to solve global challenges.


Fundamental Concepts

To truly appreciate computer architecture, one must grasp several fundamental concepts that form its bedrock. These principles govern how computers are structured and how they carry out their tasks. From the overarching design philosophies to the step-by-step process of executing a single command, these concepts are essential for anyone looking to understand the inner workings of digital machines. They provide the vocabulary and the framework for discussing and designing computer systems, regardless of their size or application.

Exploring these fundamentals will illuminate how different design choices impact performance, efficiency, and complexity. We will touch upon foundational models of computation, the critical role of the instruction set, the basic operational cycle of a processor, key metrics used to evaluate performance, and an ingenious technique to make computers work faster.

Von Neumann and Harvard Architectures

Two early and influential models for computer architecture are the Von Neumann and Harvard architectures. They primarily differ in how they handle memory for instructions and data.

The Von Neumann architecture, named after mathematician John von Neumann, who described it in his 1945 report on the EDVAC, is characterized by a single address space for both instructions and data. This means that the CPU fetches both program instructions and the data those instructions operate on from the same memory unit over the same bus (the pathway for data transfer). Most general-purpose computers today, like your laptop or desktop, are based on this model because of its flexibility and simpler memory hardware. However, because instructions and data share the same pathway, the CPU can end up waiting for instructions or data to arrive; this limit on processing speed is known as the Von Neumann bottleneck.

The Harvard architecture, in contrast, uses physically separate storage and signal pathways for instructions and data. This allows the CPU to fetch an instruction and access data simultaneously, potentially leading to faster execution because instruction fetches can overlap with data operations. This architecture is commonly found in specialized systems like Digital Signal Processors (DSPs) and microcontrollers, where speed and predictability for specific tasks are paramount. Many modern high-performance processors actually use a modified Harvard architecture, especially within their cache systems, to gain the benefits of simultaneous access while still interacting with a unified main memory further down the hierarchy.
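The bottleneck can be illustrated with a deliberately simplified cycle-counting model. The sketch assumes every memory access takes one cycle, that the Von Neumann machine has a single shared memory port, and that the Harvard machine can fetch an instruction and access data in the same cycle; the opcode names are hypothetical. Real processors add caches, pipelines, and wider buses, so these numbers only show the direction of the effect.

```python
from typing import List

# Toy model: memory cycles needed by a program under two memory organizations.
# Assumptions: each memory access takes 1 cycle; Von Neumann has one shared
# port; Harvard has separate instruction and data ports usable in parallel.
DATA_OPS = ("LOAD", "STORE")


def von_neumann_cycles(program: List[str]) -> int:
    # Every instruction is fetched; loads/stores then need a second access
    # over the same shared path, so the two accesses cannot overlap.
    return sum(2 if op in DATA_OPS else 1 for op in program)


def harvard_cycles(program: List[str]) -> int:
    # The data access of a load/store overlaps with an instruction fetch on
    # the separate instruction path, so each instruction costs one memory cycle.
    return len(program)


program = ["LOAD", "ADD", "LOAD", "MUL", "STORE", "BRANCH"]
print(von_neumann_cycles(program), harvard_cycles(program))  # 9 6
```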

The Instruction Set Architecture (ISA) Revisited

As introduced earlier, the Instruction Set Architecture (ISA) is a critical concept, acting as the abstract interface between the hardware and the lowest-level software. It defines everything a programmer needs to know to write correct machine code for a processor, without needing to know the specific details of its internal workings (the microarchitecture).

An ISA specifies elements such as:

- The set of instructions: What operations can the processor perform? (e.g., arithmetic operations like add and subtract, logical operations like AND and OR, data movement operations like load and store, and control flow operations like branch and jump).

- Data types: What kinds of data can these instructions operate on? (e.g., integers, floating-point numbers, characters).

- Registers: Small, fast storage locations within the CPU that hold data temporarily during computations. The ISA defines the number and types of registers accessible to the programmer.

- Memory addressing modes: How does the processor specify the location of data in main memory?

- Input/Output (I/O) model: How does the processor communicate with peripheral devices?

The choice of ISA has profound implications for the design of both hardware and software. Different ISAs (like x86, ARM, RISC-V) are designed with different goals in mind, leading to variations in complexity, performance characteristics, and power efficiency, making them suitable for different types of devices and applications.
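The elements listed above can be sketched as a tiny encoding scheme. The 16-bit format below, its opcodes, and its base+offset addressing mode form a hypothetical toy ISA written for illustration, not the encoding of x86, ARM, or RISC-V. It shows how an ISA pins down the instruction set, the number of registers, and how operands are specified.

```python
from dataclasses import dataclass

# Hypothetical 16-bit toy ISA (not x86, ARM, or RISC-V):
#   bits [15:12] opcode | [11:9] rd | [8:6] rs | [5:0] imm (unsigned 0-63)
# The ISA fixes the opcode set, 8 registers (r0-r7), and two addressing modes:
# register-register for ALU ops and base+offset (rs + imm) for LOAD/STORE.
OPCODES = {"ADD": 0x1, "SUB": 0x2, "AND": 0x3, "OR": 0x4,
           "LOAD": 0x5, "STORE": 0x6, "BEQZ": 0x7}
NAMES = {code: name for name, code in OPCODES.items()}


@dataclass(frozen=True)
class Instr:
    op: str
    rd: int
    rs: int
    imm: int = 0


def encode(i: Instr) -> int:
    """Pack an instruction into a 16-bit machine word."""
    if not (0 <= i.rd < 8 and 0 <= i.rs < 8 and 0 <= i.imm < 64):
        raise ValueError(f"field out of range: {i}")
    return (OPCODES[i.op] << 12) | (i.rd << 9) | (i.rs << 6) | i.imm


def decode(word: int) -> Instr:
    """Unpack a 16-bit machine word back into its fields."""
    return Instr(NAMES[(word >> 12) & 0xF], (word >> 9) & 0x7,
                 (word >> 6) & 0x7, word & 0x3F)


# LOAD r1, 4(r2): base+offset addressing, meaning r1 <- memory[r2 + 4]
w = encode(Instr("LOAD", rd=1, rs=2, imm=4))
print(hex(w))     # 0x5284
print(decode(w))
```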


The Fetch-Decode-Execute Cycle

The fundamental operation of most CPUs is the fetch-decode-execute cycle. This is the sequence of steps the processor repeatedly performs to run a program. It's the heartbeat of the computer.

- Fetch: The processor fetches the next instruction from memory (or cache). The address of this instruction is typically stored in a special register called the Program Counter (PC). After fetching, the PC is updated to point to the next instruction.

- Decode: The fetched instruction, which is in binary machine code, is interpreted or decoded by the processor's control unit. The control unit determines what operation needs to be performed and what operands (data) are involved.

- Execute: The decoded instruction is carried out. This might involve performing an arithmetic or logical operation using the Arithmetic Logic Unit (ALU), moving data between registers and memory, or changing the program counter to jump to a different part of the program (in the case of a branch instruction). After execution, the cycle repeats with the fetching of the next instruction.

This cycle happens incredibly fast, millions or even billions of times per second in modern processors. While this is a simplified view (modern processors often have more complex cycles involving additional steps like operand fetching and result write-back, and use techniques like pipelining to overlap these stages for multiple instructions), the fetch-decode-execute sequence remains the core principle of how CPUs process instructions.
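The loop can be made concrete with a minimal interpreter for a hypothetical register machine. The instruction names (`LI`, `ADD`, `ADDI`, `BNEZ`) and the use of Python tuples as pre-encoded instructions are assumptions of this sketch; a real CPU decodes binary machine words in hardware. The comments mark where each phase of the cycle happens.

```python
# Minimal fetch-decode-execute loop for a hypothetical toy register machine.
# Instructions are pre-encoded as Python tuples (an assumption of this sketch).


def run(program, num_regs=4, max_steps=1000):
    regs = [0] * num_regs
    pc = 0                               # Program Counter: index of next instruction
    for _ in range(max_steps):           # guard against runaway loops
        if pc >= len(program):
            break                        # ran past the last instruction: halt
        instr = program[pc]              # FETCH the instruction the PC points to
        pc += 1                          # ...and advance the PC
        op, *args = instr                # DECODE: separate opcode from operands
        if op == "LI":                   # EXECUTE: rd <- immediate
            rd, imm = args
            regs[rd] = imm
        elif op == "ADD":                # rd <- rs + rt (an ALU operation)
            rd, rs, rt = args
            regs[rd] = regs[rs] + regs[rt]
        elif op == "ADDI":               # rd <- rs + immediate
            rd, rs, imm = args
            regs[rd] = regs[rs] + imm
        elif op == "BNEZ":               # branch: overwrite the PC if rs != 0
            rs, target = args
            if regs[rs] != 0:
                pc = target
        else:
            raise ValueError(f"unknown opcode {op!r}")
    return regs


# Sum 5 + 4 + 3 + 2 + 1 into r0, using r1 as a down-counter.
program = [
    ("LI", 0, 0),        # 0: r0 <- 0
    ("LI", 1, 5),        # 1: r1 <- 5
    ("ADD", 0, 0, 1),    # 2: r0 <- r0 + r1
    ("ADDI", 1, 1, -1),  # 3: r1 <- r1 - 1
    ("BNEZ", 1, 2),      # 4: if r1 != 0, jump back to instruction 2
]
print(run(program)[0])  # 15
```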


Key Performance Metrics

Evaluating the performance of a computer or its components requires specific metrics. Some of the key ones include:

- Clock Speed (Clock Rate): Measured in Hertz (Hz), typically gigahertz (GHz) for modern processors. It indicates how many cycles the processor's internal clock completes per second. A higher clock speed generally means more instructions can be processed per unit of time, but it is not the sole determinant of performance.

- CPI (Cycles Per Instruction): This is the average number of clock cycles required to execute one instruction. Different instructions can take different numbers of cycles. A lower CPI is generally better, indicating more efficient instruction processing.

- IPC (Instructions Per Cycle): This is the inverse of CPI, representing the average number of instructions completed per clock cycle. A higher IPC is better. Modern processors often achieve an IPC greater than 1 by executing multiple instructions in parallel.

- Latency: The time it takes for an operation to complete, from start to finish. For example, memory latency is the time taken to retrieve data from memory. Lower latency is desirable.

- Throughput: The total amount of work done in a given amount of time. For instance, memory throughput (or bandwidth) is the rate at which data can be read from or written to memory, often measured in Gigabytes per second (GB/s). Higher throughput is better.

These metrics are interrelated, and optimizing for one can hurt another. For instance, raising the clock speed can increase CPI if the microarchitecture cannot keep up: a main-memory access that takes a fixed number of nanoseconds costs more clock cycles at a higher clock rate. A higher clock speed can also significantly increase power consumption. A holistic view is necessary when evaluating and designing for performance.
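The interplay between these metrics is often summarized by the classic CPU performance equation, execution time = instruction count × CPI / clock rate. The sketch below applies it to two hypothetical designs running the same program; the instruction count, CPIs, and clock rates are made-up numbers chosen to show that a faster clock does not guarantee a faster program.

```python
# Classic CPU performance equation:
#   execution time = instruction count x CPI x clock period
#                  = instruction count x CPI / clock rate
# All workload and design numbers below are hypothetical.


def exec_time_s(instructions: float, cpi: float, clock_hz: float) -> float:
    return instructions * cpi / clock_hz


IC = 1e9  # instructions executed by the program (same ISA, same binary)

design_a = exec_time_s(IC, cpi=1.0, clock_hz=3.0e9)  # 3 GHz, CPI 1.0 (IPC 1.0)
design_b = exec_time_s(IC, cpi=1.5, clock_hz=4.0e9)  # 4 GHz, CPI 1.5 (IPC ~0.67)

print(f"A: {design_a:.3f} s, B: {design_b:.3f} s")  # A: 0.333 s, B: 0.375 s
```

Despite its 33% higher clock rate, design B finishes later because its CPI rose by 50%.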


Pipelining: Speeding Up Execution

Pipelining is a powerful technique used in modern processors to improve performance by overlapping the execution of multiple instructions. It's analogous to an assembly line in a factory. Instead of processing one instruction completely (fetch, decode, execute, etc.) before starting the next, pipelining breaks instruction processing into a sequence of stages. Each stage handles a different part of an instruction, and multiple instructions can be in different stages of execution simultaneously.

For example, while one instruction is being executed, the next instruction can be decoded, and the one after that can be fetched. If an instruction takes, say, 5 clock cycles to complete without pipelining, and you have a 5-stage pipeline, then after an initial fill-up period, the processor can ideally complete one instruction every clock cycle, effectively increasing the throughput by up to 5 times (though practical limits and hazards reduce this ideal speedup).

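Pipelining significantly enhances instruction throughput (the number of instructions completed per unit time) without necessarily decreasing the latency of individual instructions. However, it introduces complexities such as pipeline hazards, situations where the next instruction cannot execute in the following clock cycle. Hazards arise when two instructions need the same hardware resource (structural hazards), when an instruction needs a result that an earlier instruction has not yet produced (data hazards), or when the next instruction's address depends on a branch that has not yet been resolved (control hazards). Architects must design mechanisms such as forwarding, stalling, and branch prediction to handle them. Despite these challenges, pipelining is a cornerstone of high-performance processor design.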
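The ideal speedup can be checked with a little arithmetic. Assuming every stage takes exactly one cycle, n instructions need n × k cycles without pipelining but only k + (n - 1) cycles on a k-stage pipeline, because once the first instruction has filled the pipeline, one instruction completes per cycle. The sketch below treats hazard stalls as a single extra cycle count rather than modeling them.

```python
# Idealized cycle counts for n instructions on a k-stage pipeline, assuming
# every stage takes exactly one cycle. Hazard stalls are passed in as a total
# number of extra cycles rather than modeled in detail.


def unpipelined_cycles(n: int, k: int) -> int:
    return n * k                  # each instruction runs start to finish alone


def pipelined_cycles(n: int, k: int, stalls: int = 0) -> int:
    return k + (n - 1) + stalls   # k cycles to fill, then one completion per cycle


n, k = 100, 5
print(unpipelined_cycles(n, k))              # 500
print(pipelined_cycles(n, k))                # 104
print(pipelined_cycles(n, k, stalls=20))     # 124
print(unpipelined_cycles(n, k) / pipelined_cycles(n, k))
```

For 100 instructions on a 5-stage pipeline the ratio is about 4.8, approaching k as n grows; every stall cycle pushes it further below that ideal.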

Historical Evolution and Milestones

The field of computer architecture is not static; it has a rich history marked by groundbreaking innovations and significant shifts in design philosophy. Understanding this evolution provides context for current technologies and offers insights into the enduring challenges and trade-offs that architects have grappled with for decades. From the room-sized behemoths of the mid-20th century to the incredibly powerful and compact microprocessors of today, the journey of computer architecture is a story of relentless progress and ingenuity.

Tracing these developments helps us appreciate how theoretical concepts were translated into working machines, how limitations spurred new inventions, and how the interplay between hardware capabilities and software demands has driven the field forward. It's a narrative that includes iconic machines, transformative ideas, and the ever-present influence of technological scaling.

From ENIAC to Microprocessors

The dawn of electronic computing saw machines like the ENIAC (Electronic Numerical Integrator and Computer), completed in 1945. ENIAC was a colossal machine that filled a large room, used thousands of vacuum tubes, and was programmed by manually setting switches and plugging cables. It was a decimal machine, not binary, and lacked stored program capability initially. Its successor, EDVAC (Electronic Discrete Variable Automatic Computer), designed with John von Neumann's contributions, was one of the first to incorporate the stored-program concept, where instructions were stored in memory alongside data, a fundamental tenet of the Von Neumann architecture.

The invention of the transistor at Bell Labs in 1947, and later of the integrated circuit (IC) in the late 1950s, revolutionized electronics, leading to smaller, faster, more reliable, and less power-hungry computers. Mainframes dominated the 1960s, with IBM's System/360 family being a landmark. It introduced the concept of a compatible family of computers with different performance levels and prices, all running the same software, a crucial architectural innovation.

The 1970s witnessed the birth of the microprocessor, where an entire CPU was integrated onto a single chip. Intel's 4004, released in 1971, is widely regarded as the first commercially available microprocessor. This paved the way for microcomputers and, eventually, personal computers, transforming computing from a centralized, specialized activity into something accessible to individuals and businesses.

The Impact of Moore's Law

No discussion of computer architecture's evolution is complete without mentioning Moore's Law. In 1965, Gordon Moore, who would later co-found Intel, observed that the number of transistors on an integrated circuit was doubling every year; in 1975 he revised the estimate to roughly every two years. This observation, which became a self-fulfilling prophecy for the semiconductor industry, has been a primary driver of architectural evolution for decades.

Moore's Law meant that architects could count on having exponentially more transistors to work with in each new generation of chips. This abundance allowed for increasingly complex architectures, larger caches, more sophisticated prediction mechanisms, and the integration of more features onto a single chip (like memory controllers and graphics units). It fueled incredible performance gains and cost reductions, making computers more powerful and affordable over time.
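The exponential character of this growth is easy to underestimate. The sketch below implements a simple projection, N(t) = N0 × 2^(t / T), with a doubling period T of two years, starting from the roughly 2,300 transistors of the Intel 4004. It is a back-of-the-envelope model of the trend, not a record of actual chips' transistor counts.

```python
# Back-of-the-envelope Moore's Law projection: N(t) = N0 * 2 ** (t / T).
# This models the trend only; it is not a list of real chips' transistor counts.


def moore_projection(n0: float, years: float, doubling_period: float = 2.0) -> float:
    """Projected transistor count after `years`, doubling every `doubling_period` years."""
    return n0 * 2 ** (years / doubling_period)


start = 2300  # approximate transistor count of the Intel 4004 (1971)
print(round(moore_projection(start, 20)))                       # 2355200
print(round(moore_projection(start, 20, doubling_period=1.0)))  # 2411724800
```

Twenty years at a two-year doubling period is ten doublings, a factor of 1,024; with the original one-year period, the same twenty years would give a factor of over a million, which is why the revision mattered.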

However, in recent years, the traditional scaling predicted by Moore's Law has slowed due to physical limitations (transistors approaching atomic sizes) and economic challenges (the rising cost of manufacturing facilities for advanced nodes). This slowdown is forcing architects to explore new avenues for performance improvement beyond simply shrinking transistors, such as specialized architectures and novel computing paradigms.

Significant Architectural Shifts

Several key architectural ideas and shifts have profoundly impacted computer design:

- Caches: The introduction of small, fast memory (cache) located closer to the CPU to store frequently accessed data and instructions dramatically reduced the effective memory access time, mitigating the Von Neumann bottleneck. Modern systems use multiple levels of cache (L1, L2, L3).

- Pipelining: As discussed earlier, this technique of overlapping instruction execution stages significantly increased instruction throughput.

- RISC vs. CISC Debate: In the 1980s, a major debate emerged between two design philosophies for Instruction Set Architectures:

  - CISC (Complex Instruction Set Computer): Characterized by a large number of complex instructions, some of which could perform multiple low-level operations (e.g., a single instruction to load from memory, add to a register, and store back to memory). The idea was to make assembly language programming easier and to reduce the number of instructions per program. Intel's x86 architecture is a prime example.

  - RISC (Reduced Instruction Set Computer): Advocated for a smaller set of simple, fixed-length instructions, each executable in a single clock cycle (ideally). This simplicity allows for faster decoding, easier pipelining, and potentially higher clock speeds. Compilers play a more significant role in translating high-level language constructs into these simpler instructions. ARM and MIPS are well-known RISC architectures.

- Superscalar Execution and Out-of-Order Execution: To further boost performance, processors began incorporating multiple execution units, allowing them to execute more than one instruction per clock cycle (superscalar). Out-of-order execution allows the processor to execute instructions based on data availability rather than their original program order, hiding latencies and improving efficiency.
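The difference between the two philosophies shows up in how a simple memory update is expressed. In this sketch, the mnemonics `ADDM`, `LOAD`, `ADD`, and `STORE`, the register numbering, and the scratch register are hypothetical; real x86 and ARM syntax differ. The CISC-style version does the read-modify-write in one instruction, while the RISC-style version uses a separate load, a register-to-register add, and a store.

```python
# Hypothetical mnemonics for illustration; real x86 and ARM syntax differ.
# Memory is a dict from address to value; registers are a list r0-r7.


def cisc_add_mem(mem, regs, addr, rs):
    """ADDM [addr], rs  ->  mem[addr] <- mem[addr] + regs[rs], in one instruction."""
    mem[addr] = mem[addr] + regs[rs]
    return 1  # instructions executed


def risc_add_mem(mem, regs, addr, rs, tmp=7):
    """LOAD tmp,[addr]; ADD tmp,tmp,rs; STORE tmp,[addr]  ->  three instructions."""
    regs[tmp] = mem[addr]             # LOAD: only loads/stores touch memory
    regs[tmp] = regs[tmp] + regs[rs]  # ADD: register-to-register arithmetic
    mem[addr] = regs[tmp]             # STORE
    return 3


mem_a, regs_a = {0x10: 40}, [0, 2, 0, 0, 0, 0, 0, 0]
mem_b, regs_b = {0x10: 40}, [0, 2, 0, 0, 0, 0, 0, 0]
print(cisc_add_mem(mem_a, regs_a, 0x10, 1), mem_a[0x10])  # 1 42
print(risc_add_mem(mem_b, regs_b, 0x10, 1), mem_b[0x10])  # 3 42
```

Both leave memory in the same state. The RISC version executes more instructions, but each one is simpler to decode and pipeline, which is the trade-off at the heart of the debate.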

Influential Architectures and Machines

Certain computer architectures and machines have left an indelible mark on the field:

- IBM System/360 (1964): Revolutionized the industry by introducing a family of compatible computers, establishing the concept of a stable architecture across different hardware implementations. It was a CISC architecture.

- DEC PDP-11 (1970): A highly influential 16-bit minicomputer with a clean and elegant architecture. It played a significant role in the development of the C programming language and the UNIX operating system.

- Intel x86 (starting with the 8086 in 1978): This CISC architecture became dominant in personal computers and servers. It has evolved significantly over decades, incorporating numerous extensions while maintaining backward compatibility.

- ARM (Acorn RISC Machine, now Advanced RISC Machines): A RISC architecture that has become ubiquitous in mobile and embedded devices due to its low power consumption and good performance per watt. Its influence is rapidly expanding into other areas, including servers and laptops.

- MIPS (Microprocessor without Interlocked Pipeline Stages): Another influential RISC architecture, widely used in embedded systems, networking equipment, and early workstations. It was also a popular choice for teaching computer architecture in universities.

- RISC-V (2010 onwards): An open-standard ISA based on RISC principles. Its open nature allows anyone to design, manufacture, and sell RISC-V chips and software, fostering innovation and competition. It is gaining significant traction in various application domains.

These examples highlight how architectural choices and innovations have shaped the computing landscape we know today. Learning about these historical milestones provides valuable lessons for future designs.


Key Components and Design Principles

Understanding computer architecture involves dissecting a system into its core components and grasping the fundamental principles that guide their design and interaction. These components are the essential hardware building blocks, each performing specific tasks, while the design principles reflect the constant balancing act architects must perform to meet diverse and often conflicting goals. Delving into these aspects reveals the intricate machinery that powers our digital experiences and the clever trade-offs made to achieve optimal performance, efficiency, and cost-effectiveness.

Main CPU Components: CU, ALU, Registers

The Central Processing Unit (CPU), often called the "brain" of the computer, is where most of the computation happens. It consists of several key sub-components:

- Control Unit (CU): The CU directs and coordinates most of the operations within the CPU and, by extension, the entire computer. It fetches instructions from memory, decodes them, and generates control signals that tell other components (like the ALU, registers, and memory) what to do and when. Think of it as the conductor of an orchestra, ensuring every section plays its part at the right time.

- Arithmetic Logic Unit (ALU): The ALU performs arithmetic operations (like addition, subtraction, multiplication, division) and logical operations (like AND, OR, NOT, XOR). It takes data from registers, performs the specified operation, and stores the result back into a register or memory. This is where the actual "number crunching" and decision-making (based on comparisons) take place.

- Registers: Registers are small, extremely fast storage locations directly within the CPU. They are used to hold data that is currently being processed, instructions that are about to be executed, intermediate results, and control information. Because they are so close to the CU and ALU, accessing data in registers is much faster than accessing data in main memory. Common types of registers include general-purpose registers (for holding data), the program counter (PC, which holds the address of the next instruction to be fetched), and the instruction register (IR, which holds the currently executing instruction).

These components work in tight coordination, driven by the fetch-decode-execute cycle, to process instructions and manipulate data.
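As a small illustration of the ALU's role, the sketch below models an 8-bit ALU that returns a result plus two status flags, Zero (Z) and Carry (C). The operation names, the 8-bit width, and the choice to set C on a subtraction borrow are assumptions of this sketch; flag conventions differ between real ISAs. Flags like these are what later branch instructions test, which is how comparisons feed decision-making.

```python
from typing import Dict, Tuple

# Sketch of an 8-bit ALU returning a result plus Zero (Z) and Carry (C) flags.
# Operation set, width, and the "C = borrow on SUB" convention are assumptions.
WIDTH = 8
MASK = (1 << WIDTH) - 1


def alu(op: str, a: int, b: int) -> Tuple[int, Dict[str, bool]]:
    a, b = a & MASK, b & MASK
    if op == "ADD":
        raw = a + b
        carry = raw > MASK           # carry out of the top bit
    elif op == "SUB":
        raw = a - b
        carry = raw < 0              # borrow, in this sketch's convention
    elif op == "AND":
        raw, carry = a & b, False
    elif op == "OR":
        raw, carry = a | b, False
    elif op == "XOR":
        raw, carry = a ^ b, False
    else:
        raise ValueError(f"unsupported op {op!r}")
    result = raw & MASK               # keep only WIDTH bits, as a register would
    return result, {"Z": result == 0, "C": carry}


print(alu("ADD", 200, 100))  # (44, {'Z': False, 'C': True})
print(alu("SUB", 5, 5))      # (0, {'Z': True, 'C': False})
```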

For learners interested in the practical aspects of CPU design, this course offers a hands-on approach.

This book provides in-depth coverage of digital design principles which are foundational to understanding CPU components.

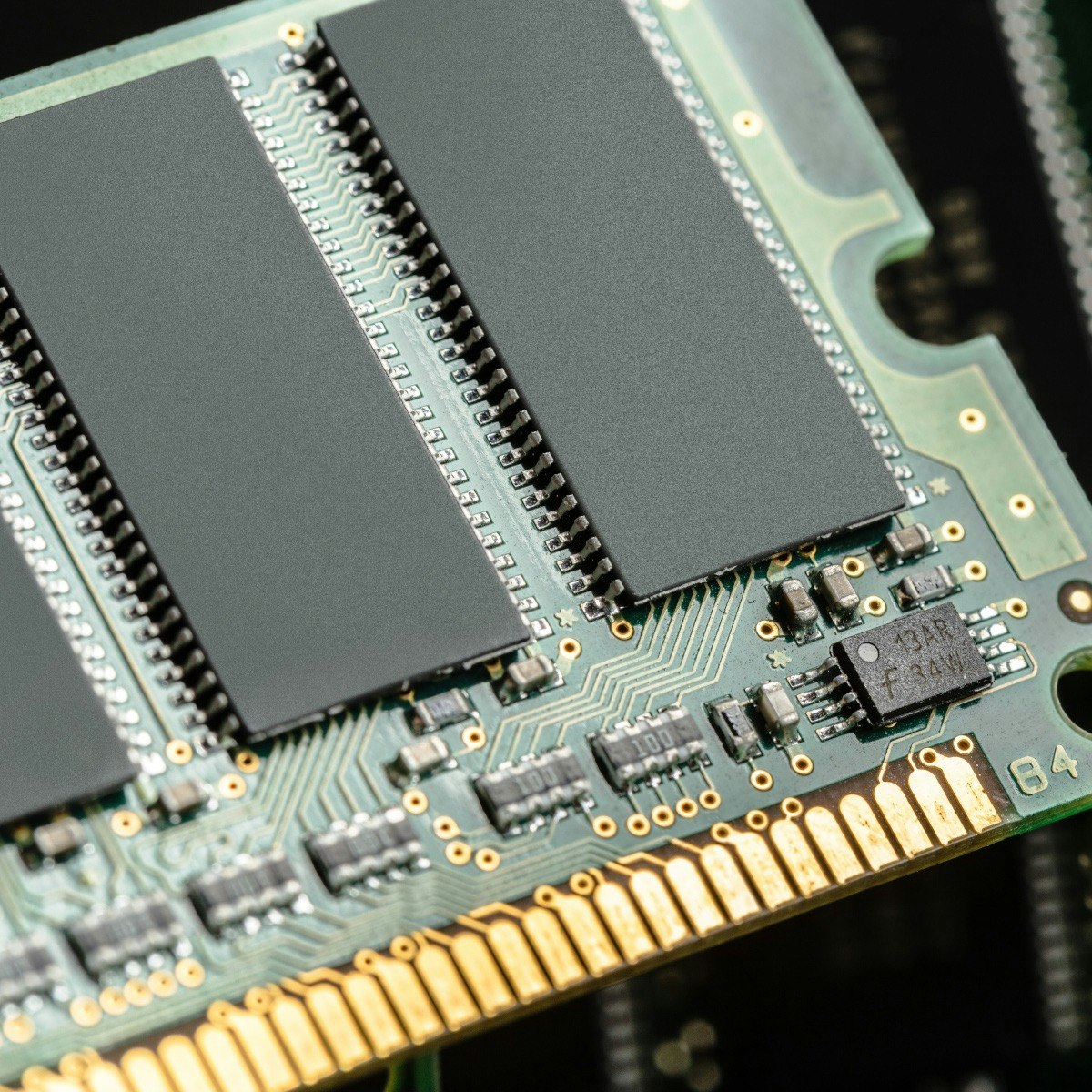

The Memory Hierarchy

Computers use a variety of memory types, organized in a hierarchy based on speed, cost, and capacity. The goal of the memory hierarchy is to provide the CPU with fast access to frequently used data and instructions, while also offering large, cost-effective storage for less frequently accessed information. It exploits the principle of locality: programs tend to reuse data and instructions they accessed recently (temporal locality) and to access items stored near recently accessed ones (spatial locality).

The typical levels in a memory hierarchy, from fastest and smallest to slowest and largest, are:

- Registers: As mentioned, these are inside the CPU, offering the fastest access but having very limited capacity.

- Cache: A small, fast memory located on or very close to the CPU chip. Caches store copies of frequently used data and instructions from main memory. Accessing data in cache is much faster than going to main memory. Modern CPUs typically have multiple levels of cache:

  - L1 Cache (Level 1): Smallest and fastest, often split into separate caches for instructions (I-cache) and data (D-cache).

  - L2 Cache (Level 2): Larger and slightly slower than L1, usually unified (stores both instructions and data).

  - L3 Cache (Level 3): Even larger and slower, often shared among multiple CPU cores. Some systems may have even more levels.

- Main Memory (RAM - Random Access Memory): This is the primary working memory of the computer, where programs and data are stored while the computer is running. It's much larger than cache but significantly slower. It is typically volatile, meaning its contents are lost when the power is turned off. DRAM (Dynamic RAM) is the most common technology used for main memory.

- Secondary Storage: This provides long-term, non-volatile storage for programs and data. Examples include Hard Disk Drives (HDDs) and Solid-State Drives (SSDs). Secondary storage is much larger in capacity than main memory but also much slower to access. It's where your operating system, applications, and files are stored permanently.

- Tertiary Storage (Optional): Used for very large-scale data archiving, such as tape libraries. It offers massive capacity at a very low cost per bit but has very slow access times.

The memory hierarchy is managed by a combination of hardware and operating system software to ensure that the CPU has the data it needs as quickly as possible, minimizing stalls and maximizing performance.
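The effect of locality on a cache can be seen with a minimal direct-mapped cache model. The sketch assumes 64 lines of 64 bytes each (a hypothetical 4 KiB cache), tracks only hits and misses, and ignores writes and more elaborate placement policies. It also computes average memory access time (AMAT) with the standard formula AMAT = hit time + miss rate × miss penalty, using made-up latencies.

```python
from typing import List, Optional

# Minimal direct-mapped cache model: tracks hits and misses only (no data,
# no writes). 64 lines x 64 bytes = a hypothetical 4 KiB cache.


def hit_rate_direct_mapped(addresses: List[int], num_lines: int = 64,
                           line_bytes: int = 64) -> float:
    tags: List[Optional[int]] = [None] * num_lines  # None marks an empty line
    hits = 0
    for addr in addresses:
        block = addr // line_bytes   # memory block containing this byte
        index = block % num_lines    # the single line this block may occupy
        tag = block // num_lines     # distinguishes blocks sharing that line
        if tags[index] == tag:
            hits += 1
        else:
            tags[index] = tag        # miss: bring the block in, evicting the old one
    return hits / len(addresses)


def amat(hit_time_ns: float, miss_rate: float, miss_penalty_ns: float) -> float:
    """Average memory access time = hit time + miss rate x miss penalty."""
    return hit_time_ns + miss_rate * miss_penalty_ns


sequential = list(range(0, 64 * 1024, 4))   # 4-byte reads walking through 64 KiB
strided = [i * 4096 for i in range(4096)]   # stride equals the cache size

seq_hits = hit_rate_direct_mapped(sequential)
print(seq_hits)                              # 0.9375
print(hit_rate_direct_mapped(strided))       # 0.0
print(amat(1.0, 1 - seq_hits, 100.0))        # 7.25
```

Sequential reads miss only once per 64-byte line, giving a hit rate of 15/16, while a stride equal to the cache size makes every access collide in the same line. With a 1 ns hit time and a 100 ns miss penalty, the sequential pattern's 6.25% miss rate yields an average access time of 7.25 ns.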


Input/Output (I/O) Mechanisms and Buses

Input/Output (I/O) mechanisms allow the computer to interact with the outside world and with peripheral devices such as keyboards, mice, displays, printers, network adapters, and storage drives. Managing I/O is a critical function of computer architecture.

Key aspects of I/O include:

- I/O Modules (or Controllers): These are specialized hardware components that interface between the CPU/memory and peripheral devices. Each device type typically has its own controller (e.g., a graphics card is a sophisticated I/O controller for the display). Controllers handle the specifics of device operation, translating commands from the CPU into signals the device understands, and vice versa.

- I/O Addressing: The CPU needs a way to select and communicate with specific I/O devices. This can be done through memory-mapped I/O (where I/O device registers are treated as memory locations) or port-mapped I/O (using special I/O instructions and a separate address space for I/O ports).

- Data Transfer Methods:

  - Programmed I/O (PIO): The CPU directly controls the data transfer, repeatedly checking the status of the I/O device. This is simple but can be inefficient as it keeps the CPU busy.

  - Interrupt-driven I/O: The I/O device notifies the CPU via an interrupt signal when it is ready for data transfer or has completed an operation. This allows the CPU to perform other tasks while waiting, improving efficiency.

  - Direct Memory Access (DMA): For large data transfers, a DMA controller can transfer data directly between an I/O device and main memory without involving the CPU in every byte transfer. This significantly offloads the CPU.

- Buses: Buses are communication pathways that transfer data and control signals between different components of the computer (CPU, memory, I/O controllers). A system typically has several types of buses, such as a processor-memory bus (for high-speed communication between CPU and main memory) and various I/O buses (e.g., PCIe, USB) for connecting peripheral devices. Buses are characterized by their width (number of bits transferred simultaneously) and speed.
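One rough way to see why interrupts and DMA free up the CPU is to count the processor cycles each method spends moving a block of data, using the classic textbook model: polling before every word for PIO, one interrupt per word for interrupt-driven I/O, and one setup plus one completion interrupt for DMA. The cycle costs below are hypothetical placeholders that only illustrate the shape of the trade-off. Real costs depend on the device, the bus, and the operating system.

```python
def cpu_cycles_pio(words: int, poll_cycles_per_word: int, copy_cycles: int) -> int:
    """Programmed I/O: the CPU spins on the status register, then copies each word."""
    return words * (poll_cycles_per_word + copy_cycles)


def cpu_cycles_interrupt(words: int, interrupt_overhead: int, copy_cycles: int) -> int:
    """Interrupt-driven I/O: no polling, but one interrupt is taken per word."""
    return words * (interrupt_overhead + copy_cycles)


def cpu_cycles_dma(setup_cycles: int, interrupt_overhead: int) -> int:
    """DMA: the CPU programs the controller once and handles one completion interrupt."""
    return setup_cycles + interrupt_overhead


# Hypothetical costs for moving a 4096-word block.
WORDS = 4096
print("PIO      :", cpu_cycles_pio(WORDS, poll_cycles_per_word=50, copy_cycles=2))
print("Interrupt:", cpu_cycles_interrupt(WORDS, interrupt_overhead=20, copy_cycles=2))
print("DMA      :", cpu_cycles_dma(setup_cycles=100, interrupt_overhead=20))
```

Interrupt-driven I/O beats polling only when the device is slow enough that the CPU would otherwise spend many cycles spinning. For fast devices, the per-interrupt overhead can dominate, which is one reason bulk transfers are handed to a DMA controller.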

Fundamental Design Trade-offs

Designing a computer architecture is a complex process that involves balancing numerous, often conflicting, design goals. Architects constantly make trade-offs to achieve the best possible system for a given set of constraints and target applications. Some of the most fundamental trade-offs include:

- Performance vs. Power Consumption: Increasing performance (e.g., by using higher clock speeds, more execution units, or more aggressive techniques like out-of-order execution) often leads to increased power consumption and heat dissipation. This is a critical concern for mobile devices (battery life) and large data centers (electricity costs and cooling).

- Performance vs. Cost: Higher performance components (faster processors, larger caches, faster memory) generally cost more to manufacture. Architects must balance the desire for maximum performance with the target cost of the system.

- Performance vs. Area: On an integrated circuit, more complex features and larger caches consume more silicon area. Larger chips are more expensive to produce and can have lower manufacturing yields. Architects must decide how to best utilize the available chip area.

- Flexibility/Generality vs. Specialization: General-purpose processors (like CPUs) are designed to run a wide variety of applications reasonably well. Specialized processors (like GPUs for graphics, or TPUs for AI) are designed to perform specific tasks extremely efficiently but may be inefficient or incapable of performing other tasks. There's a trade-off between the versatility of a general-purpose architecture and the high performance/efficiency of a specialized one.

- Backward Compatibility vs. Innovation: Maintaining compatibility with older software can constrain architectural innovation, as new designs must support legacy features. However, breaking compatibility can alienate users and disrupt existing software ecosystems.

These trade-offs are at the heart of computer architecture. There is rarely a single "best" solution; instead, architects strive for an optimal design that meets the specific requirements of the target market and application domain.
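The performance vs. area trade-off can be made concrete with the simple Poisson yield model. In this model, the fraction of dies with no defects falls exponentially with die area: Y = exp(-D0 × A), where D0 is the defect density and A is the die area. The defect density below is an assumed value, not data for any real manufacturing process.

```python
import math


def poisson_yield(defects_per_cm2: float, die_area_cm2: float) -> float:
    """Fraction of dies with zero defects under a Poisson defect model."""
    return math.exp(-defects_per_cm2 * die_area_cm2)


D0 = 0.1  # assumed defect density, in defects per cm^2
for area in (1.0, 2.0, 4.0):
    print(f"die area {area:.1f} cm^2 -> yield {poisson_yield(D0, area):.1%}")
```

Under this assumption, quadrupling the die area drops the yield from about 90% to about 67%. Because there are also fewer dies per wafer, each working large die becomes considerably more expensive.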

Instruction Set Architectures (ISAs)

The Instruction Set Architecture (ISA) is a pivotal concept in computer architecture, forming the crucial link between the software that runs on a computer and the hardware that executes it. It defines the set of all instructions that a processor can understand and execute. Think of it as the vocabulary and grammar of a language that hardware and software must both adhere to for successful communication. The choice and design of an ISA have far-reaching implications, influencing everything from processor complexity and performance to power efficiency and the ease of software development.

ISA as the Contract Between Hardware and Software

The ISA serves as a formal specification and an abstract model of a computer from the perspective of a machine language programmer or a compiler writer. It precisely defines what the processor is capable of doing, but not necessarily how it does it internally. This abstraction is powerful because it decouples software development from specific hardware implementations.

This "contract" includes details about:

- Instruction Repertoire: The complete list of available machine instructions (e.g., arithmetic, logical, data transfer, control flow).

- Instruction Formats: How instructions are encoded in binary, including the opcode (operation code) and operand fields.

- Data Types and Sizes: The types of data (integers, floating-point numbers, etc.) and their representations (e.g., 32-bit integers, 64-bit floating-point).

- Registers: The number, size, and purpose of processor registers that are visible to the programmer.

- Memory Addressing: How memory locations are specified and accessed (e.g., direct addressing, indirect addressing, indexed addressing).

- Interrupts and Exception Handling: How the processor deals with external events and errors.

Software written and compiled for a specific ISA can run on any processor that implements that ISA, regardless of differences in their underlying microarchitecture (the internal hardware design). This allows for innovation in hardware design without breaking software compatibility, and vice-versa. For instance, multiple generations of Intel processors, despite having different microarchitectures, can all run software compiled for the x86 ISA.
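To make "instruction format" concrete, the sketch below packs the fields of a RISC-V R-type (register-register) instruction into a 32-bit word and unpacks them again. The field layout (funct7, rs2, rs1, funct3, rd, opcode, from the most significant bit down) follows the RISC-V base integer ISA. The helper function names are hypothetical, and only the `add` and `sub` encodings are covered.

```python
from __future__ import annotations


def encode_r_type(opcode: int, rd: int, funct3: int, rs1: int, rs2: int, funct7: int) -> int:
    """Pack RISC-V R-type fields into a 32-bit instruction word."""
    for name, value, bits in (("opcode", opcode, 7), ("rd", rd, 5), ("funct3", funct3, 3),
                              ("rs1", rs1, 5), ("rs2", rs2, 5), ("funct7", funct7, 7)):
        if not 0 <= value < (1 << bits):
            raise ValueError(f"{name}={value} does not fit in {bits} bits")
    return (funct7 << 25) | (rs2 << 20) | (rs1 << 15) | (funct3 << 12) | (rd << 7) | opcode


def decode_r_type(word: int) -> dict[str, int]:
    """Split a 32-bit word back into its R-type fields."""
    return {
        "opcode": word & 0x7F,
        "rd": (word >> 7) & 0x1F,
        "funct3": (word >> 12) & 0x7,
        "rs1": (word >> 15) & 0x1F,
        "rs2": (word >> 20) & 0x1F,
        "funct7": (word >> 25) & 0x7F,
    }


OP = 0b0110011  # the "OP" major opcode used by register-register ALU instructions

# add x3, x1, x2  (x3 = x1 + x2)
word = encode_r_type(OP, rd=3, funct3=0b000, rs1=1, rs2=2, funct7=0b0000000)
print(f"add x3, x1, x2 -> 0x{word:08x}")
print(decode_r_type(word))
```

Every R-type instruction uses these same field positions. For example, `sub` differs from `add` only in its funct7 value (0b0100000). Uniform layouts like this are part of what makes RISC instructions quick to decode.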

RISC vs. CISC Philosophies

Two major contrasting philosophies have shaped the design of ISAs: RISC (Reduced Instruction Set Computer) and CISC (Complex Instruction Set Computer).

CISC (Complex Instruction Set Computer):

- Goal: To make assembly language programming easier and to reduce the semantic gap between high-level programming languages and machine instructions. The idea was that complex operations could be performed with a single machine instruction.

- Characteristics:

  - A large number of instructions, often with variable lengths.

  - Instructions can perform complex operations, sometimes combining memory access with arithmetic (e.g., "add the contents of a memory location to a register").

  - Multiple addressing modes for accessing memory.

  - Instructions may take varying numbers of clock cycles to execute.

- Examples: Intel x86 (used in most desktops, laptops, and servers), IBM System/360.

- Advantages (Historical): Potentially smaller code size (fewer instructions needed for a task), simpler compiler design (initially).

- Disadvantages: More complex hardware to decode and execute instructions, can make pipelining and other performance enhancements harder to implement efficiently.

RISC (Reduced Instruction Set Computer):

- Goal: To simplify the hardware by having a small set of simple, highly optimized instructions. The emphasis is on instructions that can be executed quickly, typically within a single clock cycle in a pipelined processor.

- Characteristics:

  - A small number of instruction formats, typically with a fixed instruction length.

  - Instructions perform simple, well-defined operations (e.g., arithmetic operations are typically register-to-register; separate load/store instructions are used for memory access).

  - Fewer addressing modes.

  - Designed for efficient pipelining.

- Examples: ARM (dominant in mobile and embedded devices), MIPS, PowerPC, SPARC, and the open-standard RISC-V.

- Advantages: Simpler hardware design, potentially faster clock speeds, easier to pipeline efficiently, often more power-efficient. Compilers play a crucial role in optimizing code by combining simple instructions.

- Disadvantages: Potentially larger code size (more instructions needed for a complex task), greater reliance on compiler technology.

In modern practice, the lines between RISC and CISC have blurred. Many CISC processors (like x86) internally translate complex instructions into simpler, RISC-like micro-operations that are then executed by a RISC-like core. Conversely, some RISC ISAs have incorporated more complex instructions over time. However, the fundamental design philosophies still influence the overall approach to ISA and processor design.
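The contrast between a CISC memory-to-register instruction and the RISC load/store style, and the way modern x86 cores bridge the two, can be sketched with a toy machine. The instruction names (`ADD_MEM`, `LOAD`, `ADD`) and the decoder are hypothetical. They model the general idea of cracking a complex instruction into micro-operations, not the internals of any real processor.

```python
from __future__ import annotations


def decode(instruction: tuple) -> list[tuple]:
    """Crack one architectural instruction into simple, RISC-like micro-ops.

    ("ADD_MEM", reg, addr): CISC-style, reg = reg + memory[addr]
    ("LOAD", reg, addr):    RISC-style, reg = memory[addr]
    ("ADD", rd, rs1, rs2):  RISC-style, rd = rs1 + rs2 (registers only)
    """
    op = instruction[0]
    if op == "ADD_MEM":
        _, reg, addr = instruction
        # One complex instruction becomes a load into a hidden temporary plus a register add.
        return [("LOAD", "tmp", addr), ("ADD", reg, reg, "tmp")]
    if op in ("LOAD", "ADD"):
        return [instruction]  # already simple: passes through unchanged
    raise ValueError(f"unknown instruction {op!r}")


def execute(program: list[tuple], regs: dict, mem: dict) -> int:
    """Run a program on a core that only understands LOAD and register ADD.

    Returns the number of micro-ops executed.
    """
    executed = 0
    for instruction in program:
        for uop in decode(instruction):
            if uop[0] == "LOAD":
                _, dst, addr = uop
                regs[dst] = mem[addr]
            else:  # "ADD"
                _, dst, src1, src2 = uop
                regs[dst] = regs[src1] + regs[src2]
            executed += 1
    return executed


memory = {0x100: 5}
cisc_regs = {"r1": 10}
risc_regs = {"r1": 10}

# CISC style: one instruction reads memory and adds in a single step.
cisc_uops = execute([("ADD_MEM", "r1", 0x100)], cisc_regs, memory)
# RISC style: an explicit load, then a register-to-register add.
risc_uops = execute([("LOAD", "r2", 0x100), ("ADD", "r1", "r1", "r2")], risc_regs, memory)

print(cisc_regs["r1"], cisc_uops)  # both reach r1 = 15 using two micro-ops
print(risc_regs["r1"], risc_uops)
```

The CISC version of the program is one instruction shorter, but the core executes the same two micro-ops either way. This is the sense in which the RISC/CISC distinction has blurred inside modern processors.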

Dominant ISAs and Their Applications

Several ISAs have achieved widespread adoption, each finding its niche in different segments of the computing market:

- x86 (and its 64-bit extension, x86-64 or AMD64): Originally developed by Intel, with the 64-bit extension introduced by AMD, this CISC architecture dominates the desktop, laptop, and server markets. Its long history and vast software ecosystem are major strengths. Intel and AMD are the primary manufacturers.

- ARM (Advanced RISC Machines): This RISC architecture is the de facto standard for mobile devices (smartphones, tablets) and a vast array of embedded systems due to its excellent power efficiency and performance per watt. ARM Holdings licenses its architecture to many chip manufacturers (e.g., Qualcomm, Apple, Samsung). It is also making significant inroads into laptops, servers, and high-performance computing.

- RISC-V: An open-standard ISA based on RISC principles. Its key advantage is that it is free to use, modify, and distribute, allowing for custom processor designs without licensing fees. RISC-V is gaining traction across various applications, from embedded controllers and IoT devices to specialized accelerators and even general-purpose computing. The open nature is fostering a rapidly growing ecosystem.

- MIPS (Microprocessor without Interlocked Pipeline Stages): Historically significant in workstations, embedded systems, and networking equipment. While its market share has declined relative to ARM and x86 in some areas, it remains relevant in certain embedded applications.

- Power Architecture (formerly PowerPC): Developed by the AIM alliance (Apple, IBM, Motorola), it has been used in Apple Macintosh computers (before their switch to Intel and then ARM), servers, embedded systems, and automotive applications. IBM's POWER processors are used in high-performance computing and enterprise servers.

The choice of ISA for a particular device or system depends on factors like performance requirements, power constraints, cost, available software ecosystem, and licensing considerations.

Implications of ISA Choice

The selection of an ISA has significant consequences for both software development and hardware performance:

- Software Development:

  - Compiler Complexity: CISC ISAs might initially seem to simplify compiler design as high-level constructs can map to fewer, more complex instructions. However, optimizing code for complex CISC instructions can be challenging. RISC ISAs often rely more heavily on sophisticated compiler technology to schedule simple instructions optimally and manage resources like registers effectively.

  - Code Density: CISC programs can be more compact, often needing fewer instructions and, thanks to variable-length encodings, fewer bytes. This can improve instruction-cache utilization and reduce memory bandwidth usage. RISC ISAs trade some of this density for fixed-length instructions that are simpler to decode, and compressed instruction extensions (such as ARM's Thumb and the RISC-V "C" extension) narrow the gap.

  - Portability: Software compiled for one ISA will not run directly on a processor with a different ISA (e.g., x86 software on an ARM processor, or vice-versa) without recompilation or emulation. This is a major consideration when developing software for multiple platforms.

- Hardware Performance and Design:

  - Processor Complexity: RISC ISAs generally lead to simpler processor designs, which can be faster to design, easier to verify, and potentially have higher clock speeds or lower power consumption for a given task. CISC processors require more complex decoding and control logic.

  - Pipelining: The uniform instruction format and simpler operations of RISC ISAs make them inherently easier to pipeline efficiently compared to variable-length, complex CISC instructions.

  - Power Efficiency: RISC architectures, with their emphasis on simplicity and single-cycle execution (ideally), often achieve better power efficiency, a key reason for ARM's dominance in mobile devices.

  - Specialization: The openness of ISAs like RISC-V allows for the addition of custom instructions tailored for specific applications (e.g., AI, cryptography), enabling highly optimized domain-specific accelerators.
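The pipelining advantage can be quantified with an idealized timing model. On a k-stage pipeline with no stalls, n instructions finish in k + (n - 1) cycles, compared with n × k cycles without pipelining. The sketch assumes every stage takes exactly one cycle and ignores hazards. Uniform, fixed-format instructions make this idealization easier to approach, but no real pipeline reaches it.

```python
def unpipelined_cycles(n_instructions: int, stages: int) -> int:
    """Each instruction passes through all stages before the next one starts."""
    return n_instructions * stages


def pipelined_cycles(n_instructions: int, stages: int) -> int:
    """Ideal pipeline: fill it once, then complete one instruction per cycle."""
    if n_instructions == 0:
        return 0
    return stages + (n_instructions - 1)


n, k = 1000, 5  # hypothetical workload size and a classic 5-stage pipeline
speedup = unpipelined_cycles(n, k) / pipelined_cycles(n, k)
print(f"{unpipelined_cycles(n, k)} vs {pipelined_cycles(n, k)} cycles, speedup {speedup:.2f}x")
```

As n grows, the ideal speedup approaches the number of stages k. In practice, stalls from data and control hazards, cache misses, and variable-latency instructions pull it back down.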

Ultimately, the "best" ISA depends on the specific goals and constraints of a project. The ongoing evolution of both RISC and CISC approaches, along with the rise of open ISAs, continues to shape the landscape of processor design and computer architecture.

Modern Trends in Computer Architecture

The field of computer architecture is in a constant state of flux, driven by insatiable demands for higher performance, better energy efficiency, and new computing capabilities. As traditional scaling methods like Moore's Law slow down, architects are increasingly looking towards innovative solutions and paradigm shifts. These modern trends are reshaping how processors and computer systems are designed, leading to more diverse and specialized hardware tailored for a wide range of applications, from massive data centers to tiny embedded devices.

Multi-core and Many-core Processors

For many years, performance gains came primarily from increasing the clock speed of single processors. However, by the mid-2000s, this approach hit a "power wall": higher clock speeds led to unmanageable power consumption and heat dissipation. The industry's response was to shift towards multi-core processors. Instead of making one core faster, chip designers began putting multiple, often simpler, processing cores onto a single chip.

A multi-core processor contains two or more independent processing units (cores), each capable of executing its own sequence of instructions. This allows for parallel execution of multiple tasks (task-level parallelism) or breaking down a single task into parts that can run concurrently on different cores (thread-level parallelism). Most modern CPUs in desktops, laptops, and servers are multi-core (e.g., dual-core, quad-core, octa-core, and even more).

Many-core processors take this concept further, integrating tens, hundreds, or even thousands of cores. Graphics Processing Units (GPUs) are a prime example of many-core architectures, originally designed for parallel processing of graphics rendering but now widely used for general-purpose parallel computation (GPGPU), especially in scientific computing and machine learning. The challenge with multi-core and many-core systems lies in effectively programming them to exploit the available parallelism and managing communication and synchronization between cores.
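Amdahl's law is a standard way to express why extra cores do not automatically translate into speed. If only a fraction p of a program's runtime can run in parallel, the speedup on n cores is at most 1 / ((1 - p) + p / n). The parallel fraction below is an assumed example value.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup when only part of the work can be parallelized."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


p = 0.9  # assume 90% of the runtime parallelizes perfectly
for n in (2, 8, 64, 1024):
    print(f"{n:5d} cores -> {amdahl_speedup(p, n):.2f}x")
```

Even with 1,024 cores, the speedup in this example stays below 10x because the 10% serial portion never shrinks. This is why reducing serial work and synchronization overhead matters as much as adding cores.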

Specialized Accelerators (GPUs, TPUs, FPGAs) and Heterogeneous Computing

As the benefits from general-purpose CPU scaling diminish, there's a growing trend towards using specialized hardware accelerators designed to perform specific tasks much more efficiently than a CPU. This has led to the rise of heterogeneous computing, where systems combine different types of processing units (CPUs, GPUs, and other accelerators) to optimize for diverse workloads.

- GPUs (Graphics Processing Units): Initially for graphics, their massively parallel architecture is highly effective for data-parallel tasks like scientific simulations, financial modeling, and, crucially, training and inference in deep learning (AI).

- TPUs (Tensor Processing Units): Developed by Google, TPUs are Application-Specific Integrated Circuits (ASICs) designed specifically for neural network machine learning, initially to accelerate workloads written in Google's TensorFlow framework. They offer very high performance and energy efficiency for AI workloads.

- FPGAs (Field-Programmable Gate Arrays): These are integrated circuits that can be configured by a customer or a designer after manufacturing – hence "field-programmable." FPGAs offer a balance between the flexibility of software and the performance of custom hardware. They can be programmed to implement specialized logic for tasks like network processing, signal processing, and accelerating specific algorithms. They are often used for prototyping ASICs or for applications where the hardware function might need to change over time.

The idea behind heterogeneous computing is to use the right processor for the right job: the CPU for general control and serial tasks, and specialized accelerators for computationally intensive parallel tasks or specific functions. Managing these diverse resources and programming them effectively are key challenges in modern system design.
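A recurring question in heterogeneous systems is whether a task is worth sending to an accelerator at all, since data usually has to be copied to and from the device. The sketch below compares an estimated CPU time against accelerator compute time plus transfer time over the link between them. All throughput and bandwidth figures are hypothetical, and real runtimes use much richer cost models.

```python
def choose_device(ops: float, bytes_in: float, bytes_out: float,
                  cpu_ops_per_s: float, acc_ops_per_s: float,
                  link_bytes_per_s: float) -> str:
    """Return "cpu" or "accelerator", whichever has the lower estimated time."""
    cpu_time = ops / cpu_ops_per_s
    # Offloading pays for copying inputs to the device and results back.
    acc_time = ops / acc_ops_per_s + (bytes_in + bytes_out) / link_bytes_per_s
    return "accelerator" if acc_time < cpu_time else "cpu"


# Hypothetical figures: a CPU at 1e11 ops/s, an accelerator at 1e13 ops/s,
# and a 1e10 bytes/s link between them.
CPU, ACC, LINK = 1e11, 1e13, 1e10

# Compute-heavy task: a lot of arithmetic per byte moved.
print(choose_device(ops=1e12, bytes_in=1e8, bytes_out=1e6,
                    cpu_ops_per_s=CPU, acc_ops_per_s=ACC, link_bytes_per_s=LINK))
# Data-heavy task: little arithmetic per byte moved.
print(choose_device(ops=1e9, bytes_in=1e9, bytes_out=1e9,
                    cpu_ops_per_s=CPU, acc_ops_per_s=ACC, link_bytes_per_s=LINK))
```

The accelerator wins the compute-heavy task easily. For the data-heavy task, however, the transfer time alone exceeds the CPU's compute time, so the work stays on the CPU. This is the "right processor for the right job" reasoning expressed as arithmetic.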

Architectural Techniques for Energy Efficiency

Power consumption is a critical constraint in almost all computing systems, from battery-powered mobile devices to large-scale data centers where energy costs can be substantial. Architects employ various techniques to improve energy efficiency (performance per watt):

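- Clock Gating: Turning off the clock signal to idle parts of the processor. Every clock edge toggles the clock distribution network and the flip-flops it drives, consuming dynamic power even when a unit does no useful work. Stopping the clock to inactive units therefore saves significant power.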

- Power Gating: Completely shutting off the power supply to idle blocks or cores. This provides greater power savings than clock gating but typically has a longer latency to wake up the block.

- Dynamic Voltage and Frequency Scaling (DVFS): Adjusting the processor's voltage and clock frequency dynamically based on the current workload. Lowering the voltage and frequency during periods of low activity reduces power consumption. Most modern CPUs and GPUs implement DVFS.

- Specialized Low-Power Cores: Some architectures, like ARM's big.LITTLE, combine high-performance "big" cores with energy-efficient "LITTLE" cores. The system can switch tasks between these cores based on demand, using LITTLE cores for background or less intensive tasks to save power, and big cores for demanding applications.

- Architectural Optimizations for Specific Workloads: Designing hardware to perform common tasks with fewer operations or less data movement can also lead to significant energy savings.
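The payoff from these techniques follows from the usual first-order model of dynamic (switching) power, P ≈ α × C × V² × f. Here α is the activity factor, C is the switched capacitance, V is the supply voltage, and f is the clock frequency. Clock gating effectively drives α toward zero for idle units, while DVFS lowers V and f together. The constants below are arbitrary illustrative values. The model also ignores leakage (static) power, which is what power gating targets.

```python
def dynamic_power(activity: float, capacitance: float, voltage: float, frequency: float) -> float:
    """First-order dynamic power model: P = activity * C * V^2 * f (watts)."""
    return activity * capacitance * voltage ** 2 * frequency


def energy_for_work(cycles: float, activity: float, capacitance: float,
                    voltage: float, frequency: float) -> float:
    """Energy (joules) to run a fixed number of cycles: power * (cycles / f)."""
    runtime = cycles / frequency
    return dynamic_power(activity, capacitance, voltage, frequency) * runtime


# Illustrative operating points (not taken from any real chip).
ALPHA, CAP = 0.2, 1e-9                    # activity factor, switched capacitance in farads
high = dict(voltage=1.0, frequency=3.0e9)
low = dict(voltage=0.8, frequency=2.4e9)  # 80% of the voltage and of the frequency

p_ratio = dynamic_power(ALPHA, CAP, **low) / dynamic_power(ALPHA, CAP, **high)
e_ratio = (energy_for_work(1e9, ALPHA, CAP, **low)
           / energy_for_work(1e9, ALPHA, CAP, **high))
print(f"power ratio {p_ratio:.3f}, energy ratio for the same work {e_ratio:.3f}")
```

In this model, scaling both voltage and frequency down by 20% cuts dynamic power to about half (0.8³ ≈ 0.51). The energy needed for a fixed amount of work drops to 0.64 of the original (0.8²), at the cost of a 25% longer runtime.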

Energy efficiency is no longer an afterthought but a primary design goal in modern computer architecture.

Security Considerations at the Architectural Level

Security has become a paramount concern, and vulnerabilities can exist not just in software but also at the hardware and architectural levels. Events like the Spectre and Meltdown vulnerabilities, which exploited side effects of speculative execution in modern processors to leak sensitive data, highlighted the need for architects to consider security implications more deeply during the design process.

Architectural approaches to enhancing security include:

- Hardware-based Isolation: Creating secure enclaves or trusted execution environments (TEEs) within the processor where sensitive code and data can be protected even from a compromised operating system.

- Memory Encryption and Integrity Checking: Protecting data in main memory from unauthorized access or modification through encryption and integrity verification mechanisms implemented in hardware.

- Mitigations for Side-Channel Attacks: Designing hardware to be more resistant to attacks that exploit information leaked through physical characteristics like power consumption, timing, or electromagnetic radiation. This includes architectural changes to reduce or mask the information leakage from techniques like speculative execution.

- Secure Boot and Hardware Roots of Trust: Establishing a chain of trust starting from immutable hardware components to ensure that the system boots with authentic software and that cryptographic keys are securely managed.

- Formal Verification: Using mathematical methods to prove the correctness of hardware designs with respect to security properties, helping to identify and eliminate vulnerabilities before manufacturing.
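The "chain of trust" idea behind secure boot can be sketched with cryptographic hashes. Each stage carries the expected digest of the next stage and refuses to hand over control if the measured image differs. This is a hypothetical, simplified model that uses SHA-256 from Python's standard library, and the stage names are invented. Real hardware roots of trust typically verify digital signatures with keys anchored in ROM or fuses, rather than comparing raw hashes.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    code: bytes
    next_digest: str = ""  # expected SHA-256 of the next stage ("" for the last stage)

    def measure(self) -> str:
        # The next stage's expected digest is part of what gets measured,
        # so tampering with it is caught by the previous stage.
        return hashlib.sha256(self.code + self.next_digest.encode()).hexdigest()


def boot(stages: list[Stage], root_of_trust_digest: str) -> list[str]:
    """Run stages in order, halting at the first one whose measurement is wrong."""
    booted = []
    expected = root_of_trust_digest  # stands in for a value fixed in immutable hardware
    for stage in stages:
        if stage.measure() != expected:
            break  # refuse to hand control to an unverified stage
        booted.append(stage.name)
        expected = stage.next_digest
    return booted


def build_chain(images: list[tuple[str, bytes]]) -> tuple[list[Stage], str]:
    """Build stages back to front so each one embeds the next stage's digest."""
    stages: list[Stage] = []
    next_digest = ""
    for name, code in reversed(images):
        stage = Stage(name, code, next_digest)
        stages.insert(0, stage)
        next_digest = stage.measure()
    return stages, next_digest  # the final digest is the root-of-trust value


stages, root = build_chain([("bootloader", b"stage-1 code"),
                            ("os_loader", b"stage-2 code"),
                            ("kernel", b"kernel code")])
print(boot(stages, root))  # all three stages verify

stages[2].code = b"tampered kernel"  # modify the last image after the chain was built
print(boot(stages, root))  # boot halts before the kernel runs
```

Because each digest covers the next stage's expected digest, changing any image anywhere in the chain is detected by the stage before it. Trust therefore ultimately rests on the single root value held in immutable hardware.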

Integrating security features directly into the architecture is crucial for building more resilient and trustworthy computing systems.

Memory and Storage Innovations

The performance of memory and storage systems often lags behind processor performance, creating what's known as the "memory wall." Innovations in memory and storage technology are crucial for feeding data to powerful processors efficiently.

- High Bandwidth Memory (HBM): A type of 3D-stacked DRAM that offers significantly higher bandwidth (data transfer rate) and lower power consumption per bit compared to traditional DDR SDRAM. HBM is often used in high-performance GPUs and other accelerators where memory bandwidth is critical.

- Non-Volatile Memory (NVM): Technologies that retain data even when power is removed. Flash memory, the NVM used in SSDs, is well established, but newer NVM technologies like 3D XPoint (e.g., Intel's Optane, since discontinued) offer performance characteristics that bridge the gap between DRAM (fast, volatile) and NAND flash (slower, non-volatile). These can be used as fast storage, persistent memory (appearing like RAM but retaining data), or even as an extension to main memory.

- Near-Data Processing (Processing-in-Memory - PIM): This involves moving computation closer to where data is stored (in memory or even within storage devices) to reduce the energy and latency costs of data movement. Instead of bringing vast amounts of data to the CPU, simple operations can be performed on the data in place. This is an active area of research with significant potential for data-intensive applications.

- Storage Class Memory (SCM): A category of NVM technologies that aim to provide performance close to DRAM but with the persistence and density of storage.

- Computational Storage: Integrating processing capabilities directly into storage devices (like SSDs) to offload tasks from the host CPU, such as data compression, encryption, or database queries.

These advancements are reshaping the memory hierarchy and how data is managed and accessed in modern computer systems, aiming to alleviate bottlenecks and improve overall system performance and efficiency.
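To first order, peak memory bandwidth is interface width times transfer rate, which is why HBM's very wide stacked interface helps with the memory wall. The figures below are nominal values for a single DDR4-3200 channel (64 bits at 3,200 MT/s) and a single HBM2 stack (1,024 bits at 2 Gb/s per pin). Sustained bandwidth in real systems is lower than these peaks.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, transfers_per_second: float) -> float:
    """Peak bandwidth in GB/s (10^9 bytes/s): bytes per transfer times transfers per second."""
    return bus_width_bits / 8 * transfers_per_second / 1e9


ddr4_channel = peak_bandwidth_gb_s(64, 3.2e9)  # DDR4-3200: 3,200 MT/s on a 64-bit channel
hbm2_stack = peak_bandwidth_gb_s(1024, 2.0e9)  # HBM2: 2 Gb/s per pin on a 1,024-bit interface
print(f"DDR4-3200 channel: {ddr4_channel:.1f} GB/s")
print(f"HBM2 stack:        {hbm2_stack:.1f} GB/s")
```

The roughly tenfold gap (25.6 vs. 256 GB/s) comes from width rather than per-pin speed. Each HBM2 pin runs slower than a DDR4-3200 pin, but the stack has sixteen times as many data lines.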

Formal Education Pathways

Embarking on a career in computer architecture typically involves a structured educational journey, often beginning with foundational knowledge in mathematics and science, and progressing through specialized university coursework and potentially advanced research. This path equips aspiring architects with the theoretical understanding and practical skills necessary to design and analyze complex computer systems. While the specific requirements and course names may vary between institutions, the core competencies developed are generally consistent.

For those in high school with an early interest, focusing on strong grades in math (especially algebra and calculus) and physics can provide a solid base. Early exposure to programming can also be highly beneficial, not just for the coding skills themselves, but for developing logical thinking and problem-solving abilities.

Pre-University Foundations

While formal computer architecture education begins at the university level, a strong foundation in high school can significantly ease the transition and enhance success. Students should focus on:

- Mathematics: A strong grasp of algebra, trigonometry, and pre-calculus is essential. Calculus will be a cornerstone of engineering and computer science programs. Discrete mathematics, if available, is also highly relevant as it deals with logic, set theory, and graph theory, which are fundamental to computing.

- Physics: Understanding basic physics, particularly concepts related to electricity and magnetism, provides context for how electronic components in computers work.

- Computer Science/Programming: If available, introductory computer science courses that teach programming fundamentals (e.g., in Python, Java, or C++) are invaluable. These courses help develop algorithmic thinking and problem-solving skills. Even self-study through online resources or coding clubs can be very beneficial.

- Electronics (if available): Some high schools may offer basic electronics courses or clubs. Hands-on experience with circuits, even simple ones, can provide a tangible link to the hardware concepts encountered later.

Developing good study habits, critical thinking skills, and a curiosity for how things work are also crucial at this stage. Participating in science fairs, math competitions, or robotics clubs can further nurture these interests.

Undergraduate Studies: The Core Curriculum

A bachelor's degree is typically the minimum educational requirement for entry-level roles related to computer architecture. Relevant degree programs include:

- Computer Engineering: This discipline often provides the most direct pathway, blending electrical engineering and computer science. Coursework typically covers digital logic design, circuit theory, electronics, computer organization, computer architecture, microprocessors, embedded systems, and operating systems.

- Electrical Engineering (with a computer specialization): Similar to computer engineering, but may have a broader focus on electronics, signal processing, and communication systems, with options to specialize in computer hardware and architecture.

- Computer Science: While often more software-focused, many computer science programs offer tracks or elective courses in computer organization, computer architecture, systems programming, and operating systems. A strong CS background provides an excellent understanding of the software that runs on the hardware.

Key courses that build the foundation for computer architecture include:

- Digital Logic Design: Teaches the fundamentals of Boolean algebra, logic gates, combinational and sequential circuits, and how these are used to build basic digital components like adders, multiplexers, and memory elements. This is the bedrock of hardware design.

- Computer Organization: Bridges the gap between digital logic and computer architecture. It explores how the basic components (CPU, memory, I/O) are structured and interact at a functional level. Topics often include instruction set basics, datapath design, control unit design, and memory systems.

- Computer Architecture: This course (or series of courses) delves deeper into the design of processors and computer systems. It covers topics like pipelining, instruction-level parallelism, memory hierarchies (caches, virtual memory), I/O systems, and often explores different architectural styles (RISC/CISC, multi-core).

- Operating Systems: Provides understanding of how the OS manages hardware resources (CPU, memory, I/O devices) and provides services to applications. This perspective is crucial for architects designing hardware that interacts efficiently with system software.

- Programming and Data Structures: Strong programming skills (often in C/C++ and assembly language for systems-level work) are essential for understanding how software utilizes hardware and for tasks like simulation and verification.
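As a taste of what a digital logic course builds, the sketch below models a 1-bit full adder using only Boolean gate operations (XOR, AND, OR) and chains copies of it into a ripple-carry adder. It is a behavioral Python model for illustration only. In coursework, the same circuit would normally be described in an HDL such as Verilog or VHDL.

```python
from __future__ import annotations


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """One-bit full adder built from XOR, AND, and OR gates."""
    partial = a ^ b
    total = partial ^ carry_in
    carry_out = (a & b) | (partial & carry_in)
    return total, carry_out


def ripple_carry_add(x: int, y: int, width: int = 8) -> tuple[int, int]:
    """Add two unsigned integers bit by bit; return (sum modulo 2**width, carry out)."""
    carry = 0
    result = 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i  # the carry ripples from each bit position to the next
    return result, carry


print(ripple_carry_add(100, 27))   # fits in 8 bits
print(ripple_carry_add(200, 100))  # overflows: carry out is 1
```

Because each bit position must wait for the carry from the position below, a ripple-carry adder's delay grows with its width. Faster designs such as carry-lookahead adders address exactly this limitation.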

Many undergraduate programs also include lab components where students get hands-on experience designing and simulating digital circuits, programming microcontrollers, or analyzing system performance.

Graduate Studies: Specialization and Research

For those wishing to pursue research, teach at the university level, or work on cutting-edge architectural designs in industry, graduate studies (Master's or PhD) are often necessary. Graduate programs allow for deeper specialization and engagement with advanced topics.

Master's Degree (M.S. or M.Eng.): A Master's program typically takes 1-2 years and offers advanced coursework in areas such as:

- Advanced Computer Architecture (e.g., multi-core/many-core systems, GPUs, domain-specific architectures)

- High-Performance Computing

- Parallel Processing

- VLSI (Very Large Scale Integration) Design

- Embedded Systems Design

- Network-on-Chip (NoC) Architectures

- Computer Security from a Hardware Perspective

- Fault-Tolerant Computing

Master's programs may be course-based or include a research thesis/project component, providing an opportunity to contribute to a specific research area or solve a challenging design problem.

Doctoral Degree (Ph.D.): A Ph.D. is a research-intensive degree that typically takes 4-6 years (or more) beyond a bachelor's or master's. It involves extensive coursework in a chosen specialization, followed by original research culminating in a doctoral dissertation that makes a significant contribution to the field. Ph.D. students work closely with faculty advisors in research labs, publish their findings in academic journals and conferences, and often present their work to the wider research community.

Specialization areas for Ph.D. research in computer architecture are diverse and evolving, including but not limited to:

- Novel processor microarchitectures

- Memory system innovations (e.g., new cache designs, non-volatile memory architectures)

- Architectures for artificial intelligence and machine learning

- Quantum computing architectures (a more nascent but growing field)

- Neuromorphic computing (brain-inspired architectures)

- Secure and reliable computer architectures

- Energy-efficient computing

- Reconfigurable computing

The Role of Research Labs and Publications

Academic research labs at universities and research institutions, as well as industrial research labs (e.g., at companies like Intel, NVIDIA, IBM, Google, Microsoft), are at the forefront of computer architecture innovation. These labs conduct pioneering research, develop new architectural concepts, build prototypes, and evaluate their performance.

The dissemination of research findings is crucial for the advancement of the field. This occurs primarily through:

- Academic Conferences: Highly prestigious conferences like ISCA (International Symposium on Computer Architecture), MICRO (International Symposium on Microarchitecture), HPCA (High-Performance Computer Architecture), and ASPLOS (Architectural Support for Programming Languages and Operating Systems) are primary venues for presenting cutting-edge research. Papers submitted to these conferences undergo rigorous peer review.

- Journals: Archival journals such as IEEE Transactions on Computers, ACM Transactions on Architecture and Code Optimization (TACO), and IEEE Micro publish in-depth research articles.

Staying abreast of the latest publications and attending conferences are essential for researchers and practitioners to keep up with the rapid pace of innovation in computer architecture. These forums also provide valuable opportunities for networking and collaboration.

Independent Learning and Online Resources

While formal education provides a structured path into computer architecture, the wealth of online resources and the possibility of self-directed study have opened up alternative avenues for learning and skill development. Whether you're a student looking to supplement your coursework, a professional aiming to upskill or pivot careers, or simply a curious learner, independent learning can be a powerful tool. The key is to approach it with discipline, a clear plan, and a focus on practical application.

The digital age offers unprecedented access to courses, textbooks, simulators, and communities, making it more feasible than ever to delve into the intricacies of how computers work from the comfort of your own home. OpenCourser itself is a testament to this, providing a vast catalog to help learners navigate these options.

Online Courses: From Overviews to Deep Dives

Online learning platforms offer a vast array of courses on topics relevant to computer architecture, catering to different levels of expertise. These courses can be broadly categorized:

- Conceptual Overviews: Many courses provide high-level introductions to computer organization and architecture, explaining fundamental concepts like the Von Neumann model, ISAs, the fetch-decode-execute cycle, and memory hierarchies. These are excellent starting points for beginners or those needing a refresher. They often use analogies and simplified explanations to make complex topics accessible.

- Digital Logic and Basic Electronics: For those without a formal engineering background, online courses on digital logic design (Boolean algebra, logic gates, circuit design) and basic electronics are crucial prerequisites for understanding computer hardware.

- Specific Technology Deep Dives: More advanced courses might focus on particular ISAs (like ARM or RISC-V), specific processor design techniques (pipelining, superscalar design), memory technologies (cache design, DRAM, non-volatile memory), or specialized architectures (GPUs, FPGAs).

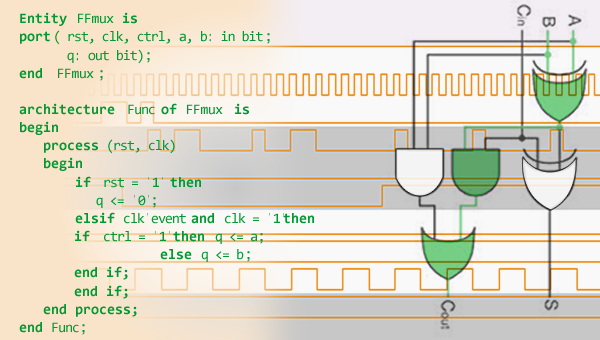

- Hardware Description Languages (HDLs): Courses teaching Verilog or VHDL are essential for anyone wanting to design and simulate digital hardware. These languages are used to describe the structure and behavior of electronic circuits.

- System Software: Courses on operating systems and compilers can provide a valuable software perspective that complements hardware knowledge, helping learners understand the hardware-software interface more deeply.
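To make the fetch-decode-execute cycle and the Von Neumann model concrete, here is a minimal Python sketch of a toy machine whose program and data share a single memory. The instruction format and opcodes (`LOAD`, `ADD`, `STORE`, `HALT`, operating on one accumulator) and the function name `run` are hypothetical, chosen only for illustration; they do not belong to any real ISA.

```python
# Toy von Neumann machine: instructions and data share one memory list.
# The ISA (LOAD/ADD/STORE/HALT on a single accumulator) is hypothetical.

def run(memory, max_steps=1000):
    """Execute the program in `memory` starting at address 0; return memory."""
    pc = 0    # program counter: address of the next instruction
    acc = 0   # accumulator register
    for _ in range(max_steps):
        instr = memory[pc]           # FETCH the instruction the PC points to
        opcode, operand = instr      # DECODE into opcode and operand address
        pc += 1                      # advance the PC to the next instruction
        if opcode == "LOAD":         # EXECUTE the decoded operation
            acc = memory[operand]
        elif opcode == "ADD":
            acc += memory[operand]
        elif opcode == "STORE":
            memory[operand] = acc
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode!r} at address {pc - 1}")
    raise RuntimeError("step limit reached without HALT")


# Addresses 0-3 hold instructions; addresses 4-6 hold data.
mem = [("LOAD", 4), ("ADD", 5), ("STORE", 6), ("HALT", 0), 2, 3, 0]
run(mem)
print(mem[6])  # 5
```

Real ISAs encode instructions as binary words and offer many more registers and instruction types, but the loop structure (fetch at the program counter, decode, execute, update the program counter) is the idea that introductory courses build on.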

Platforms like Coursera, edX, Udacity, and Udemy host courses from universities and industry experts. Many are self-paced, offering flexibility, while others are part of structured specializations or MicroMasters programs. OpenCourser can help you find and compare these courses, often highlighting reviews and enrollment numbers to gauge popularity and perceived quality.

Self-Studying Core Concepts

Self-studying core computer architecture concepts is definitely feasible with dedication and the right resources. Beyond online courses, consider:

- Textbooks: Classic textbooks like "Computer Organization and Design" by Patterson and Hennessy, and "Structured Computer Organization" by Tanenbaum, are comprehensive resources. Older editions can often be found at lower cost or borrowed from university libraries. Lecture slides or notes from university courses that use these textbooks are sometimes available online.

- Online Materials: Many universities make course materials (lecture notes, assignments, past exams) publicly available. Websites of professors specializing in computer architecture can also be goldmines of information.

- Simulators and Emulators: Software tools that simulate the behavior of processors and memory systems are invaluable for understanding concepts. Examples include:

  - Logisim or Digital: For designing and simulating digital logic circuits.

  - MARS (MIPS Assembler and Runtime Simulator) or RARS (RISC-V Assembler and Runtime Simulator): For writing and running assembly language programs for MIPS and RISC-V ISAs, respectively.

  - CPU Simulators (e.g., gem5, SimpleScalar): More advanced research-grade simulators that allow for detailed modeling and performance analysis of various microarchitectures. These have a steeper learning curve but offer powerful capabilities.

- Academic Papers and Articles: For deeper understanding or exploring specific topics, reading seminal papers or survey articles from conferences and journals can be enlightening, though often challenging for beginners.

A structured approach is helpful: start with fundamentals (digital logic, basic organization) before moving to more complex architectural concepts. Don't be afraid to revisit topics or seek explanations from multiple sources if something isn't clear.

Pathways for Independent Learners

For an independent learner, a possible pathway could be:

- Start with Digital Logic: Understand Boolean algebra, logic gates, flip-flops, and how to build basic combinational and sequential circuits. Use a simulator like Logisim to experiment.

- Move to Computer Organization: Learn about the basic components of a computer (CPU, memory, I/O), how they are interconnected (buses), and the basic Von Neumann cycle. Study a simple ISA (like a subset of MIPS or RISC-V) and try some assembly language programming using a simulator like MARS or RARS. The "Nand to Tetris" course/book is an excellent project-based approach that covers this ground up to building a basic computer and assembler.

- Dive into Computer Architecture: Explore more advanced topics like pipelining, memory hierarchies (caches, virtual memory), instruction-level parallelism, and an introduction to multi-core concepts. At this stage, more advanced textbooks and simulators become relevant.

- Specialize (Optional): Based on interest, delve into specific areas like GPU architecture, embedded systems, FPGA design, or even the architectural implications of operating systems or compilers.
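The first step of the pathway above, building combinational circuits from logic gates, can also be tried in a few lines of Python before moving to Logisim or an HDL. This is a behavioral sketch under simplifying assumptions: bits are Python integers 0 or 1, multi-bit values are lists with the least significant bit first, and gate delays are ignored. The helper names (`full_adder`, `ripple_carry_add`, `to_bits`, `from_bits`) are hypothetical.

```python
# Behavioral gate-level model: every signal is an int, 0 or 1.
def and_gate(a, b):
    return a & b

def or_gate(a, b):
    return a | b

def xor_gate(a, b):
    return a ^ b

def full_adder(a, b, cin):
    """Combinational full adder built only from the gates above."""
    partial = xor_gate(a, b)
    total = xor_gate(partial, cin)
    carry = or_gate(and_gate(a, b), and_gate(partial, cin))
    return total, carry

def ripple_carry_add(a_bits, b_bits):
    """Add two equal-length bit lists (LSB first); return (sum_bits, carry_out).

    The carry out of each full adder 'ripples' into the next stage.
    """
    carry = 0
    sum_bits = []
    for a, b in zip(a_bits, b_bits):
        s, carry = full_adder(a, b, carry)
        sum_bits.append(s)
    return sum_bits, carry

def to_bits(n, width):
    """Unsigned integer -> list of `width` bits, LSB first."""
    return [(n >> i) & 1 for i in range(width)]

def from_bits(bits):
    """List of bits, LSB first -> unsigned integer."""
    return sum(bit << i for i, bit in enumerate(bits))

# 4-bit example: 6 + 7 = 13 fits in 4 bits, so there is no carry out.
s, cout = ripple_carry_add(to_bits(6, 4), to_bits(7, 4))
print(from_bits(s), cout)  # 13 0
```

Because each stage waits for the carry from the previous one, the delay of a ripple-carry adder grows with its width; this model captures only the logic, not that timing.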

Throughout this journey, consistency and active learning (doing exercises, working on projects) are more important than speed. Joining online forums or communities related to computer architecture or specific tools can also provide support and opportunities for discussion.

The Importance of Hands-on Projects

Theoretical knowledge is essential, but hands-on experience solidifies understanding and develops practical skills. For computer architecture, projects can take many forms:

- Designing Digital Circuits: Use a logic simulator to design and test components like ALUs, memory units, or even a simple control unit.

- Assembly Language Programming: Write programs in assembly for an ISA like MIPS or RISC-V. This provides deep insight into how the processor works at a low level. Try implementing common algorithms or simple I/O routines.

- Building a Simple CPU (in simulation): More ambitious projects involve designing a simplified CPU datapath and control unit using an HDL like Verilog or VHDL and simulating its operation. The "Nand to Tetris" project is a well-guided example of building a computer system from the ground up in simulation.

- Using FPGAs (if accessible): For those with access to FPGA development boards (some universities provide them, or they can be purchased), implementing digital designs on actual hardware is an incredibly rewarding experience. This allows you to see your designs come to life.

- Performance Analysis with Simulators: Use architectural simulators (like gem5 for advanced users) to model different cache configurations or pipeline designs and analyze their impact on performance for benchmark programs.

- Contributing to Open Source Projects: There are open-source hardware projects (e.g., around RISC-V) or simulator projects where one might be able to contribute, even in small ways, after gaining sufficient expertise.
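Before tackling a research-grade simulator like gem5, the idea behind the performance-analysis project above can be tried at toy scale. The sketch below is a deliberately simplified, hypothetical direct-mapped cache model (no associativity, no write policy, no timing) that counts hits and misses for a trace of byte addresses, so two configurations can be compared on the same access pattern. The function name, trace, and cache sizes are illustrative assumptions, not benchmark data.

```python
def simulate_direct_mapped(trace, num_lines, block_size):
    """Return (hits, misses) for a direct-mapped cache.

    trace:      sequence of byte addresses
    num_lines:  number of cache lines, each holding one block
    block_size: bytes per block
    """
    tags = [None] * num_lines        # stored tag per line; None means invalid
    hits = misses = 0
    for addr in trace:
        block = addr // block_size   # memory block containing this byte
        index = block % num_lines    # the only line this block may occupy
        tag = block // num_lines     # high-order bits identify which block it is
        if tags[index] == tag:
            hits += 1
        else:
            misses += 1
            tags[index] = tag        # fill or replace the line on a miss
    return hits, misses


trace = list(range(0, 64, 4)) * 2    # two sequential passes over 64 bytes
for lines, block in [(4, 16), (16, 4)]:  # both configurations hold 64 bytes
    hits, misses = simulate_direct_mapped(trace, lines, block)
    print(f"{lines} lines x {block} B: {hits} hits, {misses} misses")
# 4 lines x 16 B: 28 hits, 4 misses
# 16 lines x 4 B: 16 hits, 16 misses
```

With the same 64-byte capacity, the 16-byte blocks win on this purely sequential trace because each miss also brings in neighboring words (spatial locality). A realistic study would vary associativity and replacement policy and use real benchmark traces, which is where tools such as gem5 come in.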

Projects not only reinforce learning but also provide tangible evidence of your skills, which can be valuable for academic progression or job applications. They help bridge the gap between theory and practice.

Supplementing Formal Education or Facilitating Career Pivots

Online resources and independent study are not just for those learning from scratch. They can also be incredibly valuable for:

- University Students: To supplement formal coursework, get alternative explanations of difficult concepts, explore topics not covered in their curriculum, or prepare for specific internships or jobs.

- Industry Professionals: To stay updated with the latest architectural trends, learn about new technologies (e.g., RISC-V, AI accelerators), acquire new skills for career advancement (e.g., learning an HDL), or upskill for a career pivot into hardware-related roles from a software background (or vice-versa).

- Career Changers: For individuals looking to transition into computer architecture from other fields, online courses and self-study, coupled with strong project work, can provide a viable pathway, though it requires significant dedication. It might be a steeper climb without a foundational engineering or CS degree, but passion and persistence can make a big difference. Grounding oneself in the fundamentals is especially critical.

For those considering a career change, it's important to be realistic. While online learning can provide knowledge, breaking into specialized hardware roles often benefits from formal credentials and demonstrable project experience. However, understanding architecture can also enhance roles in software performance, embedded systems, or technical support, providing alternative entry points or skill enhancements. OpenCourser's Learner's Guide offers tips on how to structure self-learning and make the most of online educational materials.

Career Paths and Industry Roles

A background in computer architecture opens doors to a variety of specialized and impactful roles within the technology industry. Professionals in this field are responsible for designing the very heart of computing systems, from the microprocessors in our everyday devices to the massive computing engines that power data centers and supercomputers. The career paths are typically challenging yet rewarding, offering opportunities to work on cutting-edge technology and solve complex problems. Understanding these roles, the skills required, and the typical progression can help aspiring architects chart their course.

The industry for computer architects is dynamic, with continuous innovation driven by demands for higher performance, lower power consumption, and new functionalities, especially in areas like artificial intelligence and the Internet of Things. Companies ranging from semiconductor giants to tech titans and specialized research labs are constantly seeking talent in this domain.

Common Job Titles

While specific titles can vary by company and region, some common job titles for professionals working in computer architecture and related areas include:

- CPU Architect / SoC (System-on-Chip) Architect: Designs the high-level architecture of central processing units or entire systems on a chip, which may include CPUs, GPUs, memory controllers, and other components. They define features, performance targets, and power budgets.

- Microarchitect: Focuses on the detailed implementation of an ISA. They design the internal structure of the processor, including the pipeline, execution units, caches, and control logic, to meet the architectural specifications efficiently.

- Hardware Design Engineer / Digital Design Engineer: Involved in the design, implementation, and testing of digital circuits and hardware components using HDLs like Verilog or VHDL. They translate architectural or microarchitectural specifications into detailed logic designs.

- Verification Engineer: Responsible for ensuring that hardware designs are correct and function as intended before they are manufactured. This involves creating test plans, developing testbenches, and using simulation and formal verification techniques to find bugs. This is a critical role, as errors in hardware can be extremely costly to fix after fabrication.

- Performance Engineer / Performance Analyst: Analyzes and models the performance of computer architectures and microarchitectures. They use simulators and performance counters to identify bottlenecks, evaluate design trade-offs, and propose improvements to enhance speed and efficiency.

- Physical Design Engineer: Takes the logic design (often in the form of a netlist) and works on the physical layout of the chip, including placement of components and routing of wires, to meet timing, power, and area constraints.

- FPGA Design Engineer: Specializes in designing and implementing digital systems using Field-Programmable Gate Arrays.

- Research Scientist (Computer Architecture): Typically found in academic institutions or corporate research labs, these roles involve exploring novel architectural concepts, publishing research, and pushing the boundaries of the field.

These roles often require a strong foundation in the principles discussed throughout this article.

Key Skills Required

Success in computer architecture roles demands a blend of strong technical skills, analytical abilities, and, often, good communication and teamwork skills. Essential technical competencies include:

- Deep Understanding of Digital Logic and Circuit Design: The foundation of all hardware.

- Proficiency in Computer Organization and Architecture Principles: Including ISAs, pipelining, memory hierarchies, I/O systems.

- Hardware Description Languages (HDLs): Fluency in Verilog or VHDL is crucial for design and verification roles.

- Simulation and Modeling Tools: Experience with logic simulators, architectural simulators (e.g., gem5, SimpleScalar), and performance modeling tools.

- Performance Analysis and Bottleneck Identification: The ability to analyze complex systems, identify performance limitations, and propose solutions.

- Programming Skills: Often C/C++ for system-level programming, simulation, and tool development. Assembly language understanding is also vital. Scripting languages like Python are widely used for automation and data analysis.