Linear Transformations

Introduction to Linear Transformations

Linear transformations are a fundamental concept in mathematics, particularly in the field of linear algebra. At a high level, a linear transformation is a function between two vector spaces that preserves the operations of vector addition and scalar multiplication. This means that if you take two vectors, add them together, and then apply the transformation, you get the same result as if you transformed each vector individually and then added the results. Similarly, if you scale a vector by a number and then apply the transformation, it's the same as transforming the vector first and then scaling it. This elegant property makes linear transformations incredibly powerful for understanding and manipulating data in a structured way.

Working with linear transformations can be quite engaging. Imagine being able to rotate, scale, or shear shapes in a 2D or 3D space with mathematical precision – this is one of the visual and intuitive aspects of linear transformations. Beyond geometry, these transformations are the quiet engines behind many technologies we use daily, from the stunning graphics in video games to the complex algorithms that power search engines and machine learning. Understanding how these transformations work allows you to peek under the hood of these systems and appreciate the mathematical beauty that makes them possible.

What are Linear Transformations?

To truly grasp linear transformations, let's break down the definition and explore some of its core properties. This will lay the groundwork for understanding their broader applications and significance.

Defining Linear Transformations and Their Basic Properties

A linear transformation, often denoted by T, is a mapping from a vector space V to a vector space W (T: V → W) that satisfies two key conditions for all vectors u and v in V and any scalar c. The first condition is additivity: T(u + v) = T(u) + T(v). This means the transformation of a sum of vectors is the sum of their individual transformations. The second condition is homogeneity (or scalar multiplication preservation): T(cu) = cT(u). This means the transformation of a scaled vector is the scaled version of the transformed vector. Essentially, linear transformations respect the linear structure of vector spaces.

One important consequence of these properties is that a linear transformation always maps the zero vector in V to the zero vector in W, i.e., T(0_V) = 0_W. To see why, apply homogeneity with c = 0: T(0_V) = T(0 · 0_V) = 0 · T(0_V) = 0_W. This gives a quick way to rule linearity out: if a transformation does not send the zero vector to the zero vector, it is not linear. Passing this test does not prove linearity, however; additivity and homogeneity still have to be checked. Another key aspect is that, between finite-dimensional vector spaces, every linear transformation can be represented by a matrix once a basis is chosen for each space. This matrix representation turns abstract transformations into concrete arrays of numbers that computers can process efficiently, forming a bridge between theory and computation.
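
The definition can be spot-checked numerically. The plain-Python sketch below is only an illustration: the function `looks_linear` and the three sample maps are hypothetical names chosen for this example. Because it tests finitely many random vectors, a failure disproves linearity, while a pass is merely evidence.

```python
import random

def looks_linear(f, dim, trials=100, tol=1e-9):
    """Spot-check T(0) = 0, additivity, and homogeneity on random inputs.

    Returning False proves f is not linear; returning True is only evidence.
    """
    rng = random.Random(0)  # fixed seed so the check is reproducible

    def close(a, b):
        return all(abs(x - y) <= tol * (1 + abs(x) + abs(y)) for x, y in zip(a, b))

    if any(abs(out) > tol for out in f([0.0] * dim)):
        return False  # a linear map must send the zero vector to the zero vector
    for _ in range(trials):
        u = [rng.uniform(-10, 10) for _ in range(dim)]
        v = [rng.uniform(-10, 10) for _ in range(dim)]
        c = rng.uniform(-10, 10)
        # Additivity: T(u + v) == T(u) + T(v)
        lhs = f([a + b for a, b in zip(u, v)])
        rhs = [a + b for a, b in zip(f(u), f(v))]
        if not close(lhs, rhs):
            return False
        # Homogeneity: T(c u) == c T(u)
        if not close(f([c * a for a in u]), [c * a for a in f(u)]):
            return False
    return True

def shear(p):   # (x, y) -> (x + 2y, y): linear
    return [p[0] + 2 * p[1], p[1]]

def shift(p):   # (x, y) -> (x + 1, y): moves the origin
    return [p[0] + 1, p[1]]

def square(p):  # (x, y) -> (x^2, y): fixes the origin but is not additive
    return [p[0] ** 2, p[1]]

print(looks_linear(shear, 2), looks_linear(shift, 2), looks_linear(square, 2))
# True False False
```

Note that `square` passes the zero-vector test and is still caught by the additivity check.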

Understanding these basic properties is crucial because they form the foundation upon which more complex concepts in linear algebra are built. They ensure that linear transformations behave predictably and consistently, which is why they are so widely applicable in various fields.

Visualizing Linear Transformations: Examples in 2D and 3D Geometry

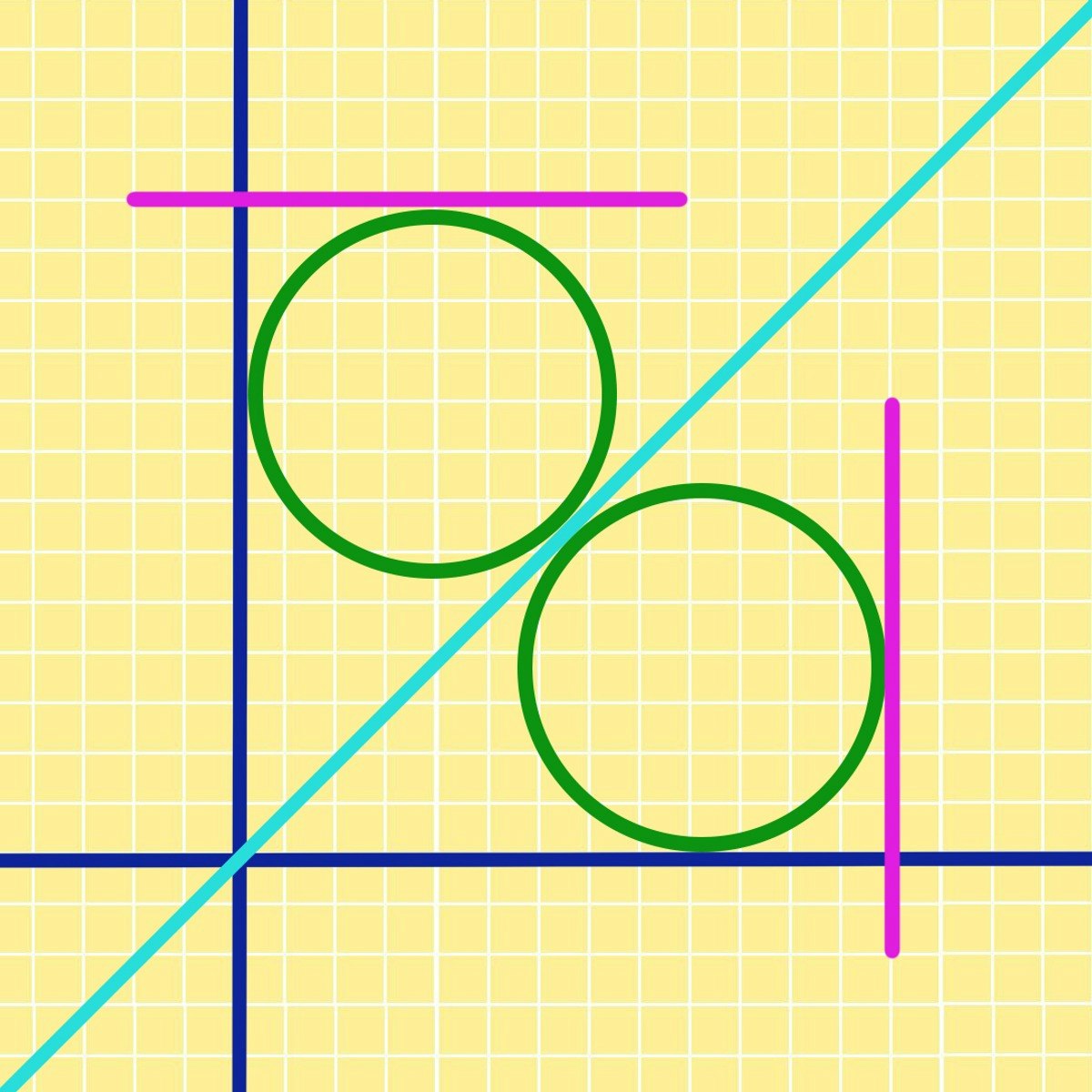

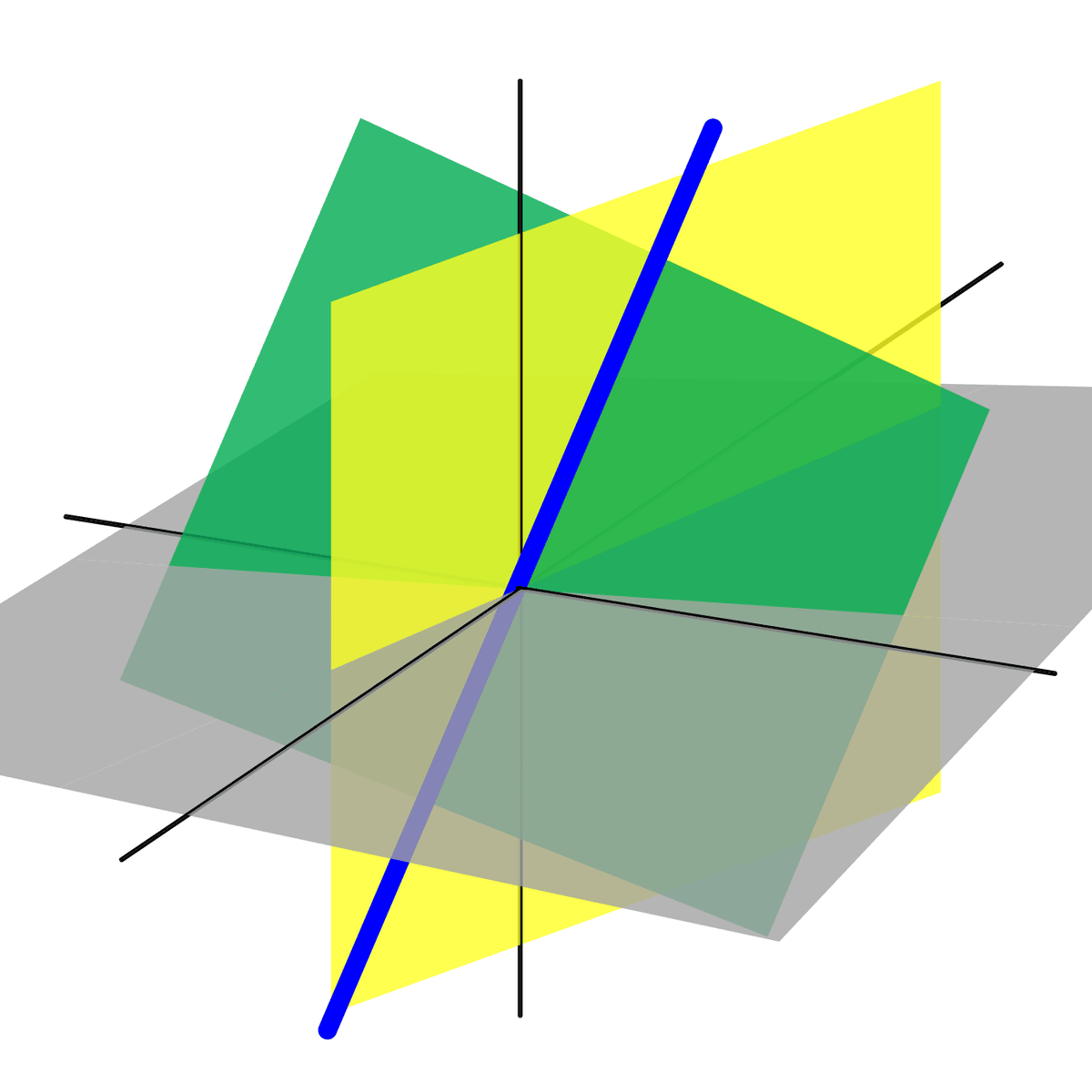

One of the most intuitive ways to understand linear transformations is by visualizing their effects on geometric shapes in two or three dimensions. Common examples include rotations, scaling, and shearing. Imagine a square in a 2D plane. A rotation transformation would spin this square around the origin by a certain angle. A scaling transformation could make the square larger or smaller, or stretch it along one axis more than another. A shearing transformation would slant the square, turning it into a parallelogram, as if you pushed the top edge horizontally while keeping the bottom edge fixed.
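
A minimal sketch of these three transformations follows. It uses plain Python lists as 2 × 2 matrices, and the helper names (`apply`, `rotation`, `scaling`, `shear_x`) are illustrative, not from any library. Each matrix is applied to the corners of the unit square.

```python
import math

def apply(matrix, point):
    """Multiply a 2x2 matrix (list of rows) by a 2D point."""
    (a, b), (c, d) = matrix
    x, y = point
    return (a * x + b * y, c * x + d * y)

def rotation(theta):
    """Counterclockwise rotation about the origin by theta radians."""
    return [[math.cos(theta), -math.sin(theta)],
            [math.sin(theta),  math.cos(theta)]]

def scaling(sx, sy):
    """Stretch by sx horizontally and sy vertically."""
    return [[sx, 0], [0, sy]]

def shear_x(k):
    """Horizontal shear: x shifts by k * y, so the bottom edge (y = 0) stays fixed."""
    return [[1, k], [0, 1]]

unit_square = [(0, 0), (1, 0), (1, 1), (0, 1)]

for name, m in [("rotate 90 deg", rotation(math.pi / 2)),
                ("scale (2, 0.5)", scaling(2, 0.5)),
                ("shear k=1", shear_x(1))]:
    # Rounding hides tiny floating-point residue from cos/sin.
    corners = [tuple(round(v, 10) for v in apply(m, p)) for p in unit_square]
    print(name, corners)
```

The shear sends (1, 1) to (2, 1) and (0, 1) to (1, 1), turning the square into a parallelogram whose bottom edge has not moved.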

In 3D space, these concepts extend similarly. A cube can be rotated around any axis, scaled uniformly to become a bigger or smaller cube, or scaled non-uniformly to become a rectangular prism. Shearing in 3D can also occur along different planes. These geometric manipulations are fundamental in computer graphics, where they are used to position, orient, and animate objects in a virtual scene. For instance, every time you see an object move or change shape in a video game or animated movie, linear transformations (often represented by matrices) are likely at play, calculating the new positions of all the points that make up that object.

Thinking about these visual examples helps build intuition for what linear transformations "do." They take an input (a set of points or vectors) and produce an output (a transformed set of points or vectors) while keeping straight lines straight and the origin fixed. A translation, which shifts every point by the same amount, moves the origin and so is not linear. Combining a linear transformation with a translation gives an affine transformation, and the two are routinely used together.

The Link to Linear Algebra Fundamentals

Linear transformations are not an isolated topic; they are deeply intertwined with the core concepts of linear algebra. Understanding vector spaces, basis vectors, dimension, matrices, determinants, eigenvalues, and eigenvectors is crucial for a comprehensive grasp of linear transformations. Vector spaces provide the stage where linear transformations act. Basis vectors are like the fundamental building blocks of a vector space, and how a linear transformation affects these basis vectors completely determines how it affects any other vector in that space.

Matrices provide a concrete way to represent linear transformations in finite-dimensional spaces. Once a basis is fixed for the domain and for the codomain, each linear transformation between such spaces corresponds to a unique matrix, and conversely, every matrix defines a linear transformation. Operations on transformations, like composition (applying one transformation after another), correspond to matrix multiplication. The determinant of a square matrix tells us how the associated transformation scales areas or volumes: its absolute value is the scale factor, and a negative sign means orientation is reversed. Eigenvalues and eigenvectors reveal special directions along which the transformation acts simply by scaling (flipping the direction when the eigenvalue is negative). These concepts are foundational for analyzing the properties and behavior of linear transformations.
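
The determinant's geometric meaning can be checked on a small case. The sketch below assumes two example matrices chosen for illustration. It maps the unit square (area 1) through each matrix and measures the signed area of the image with the shoelace formula. The result equals the determinant, including its sign.

```python
def det2(m):
    """Determinant of a 2x2 matrix given as a list of rows."""
    (a, b), (c, d) = m
    return a * d - b * c

def apply(m, p):
    (a, b), (c, d) = m
    x, y = p
    return (a * x + b * y, c * x + d * y)

def polygon_area(vertices):
    """Signed area via the shoelace formula (positive for counterclockwise order)."""
    n = len(vertices)
    s = 0
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        s += x1 * y2 - x2 * y1
    return s / 2

unit_square = [(0, 0), (1, 0), (1, 1), (0, 1)]   # area 1, counterclockwise

A = [[3, 1], [1, 2]]   # det = 5: areas grow fivefold
R = [[0, 1], [1, 0]]   # reflection across y = x: det = -1, orientation flips

for m in (A, R):
    image = [apply(m, p) for p in unit_square]
    print(det2(m), polygon_area(image))   # the two numbers agree
```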

For those aspiring to delve deeper into fields like data science, computer graphics, physics, or engineering, a solid understanding of these linear algebra fundamentals is indispensable, as linear transformations appear in various guises across these disciplines.

A Glimpse into History and Modern Significance

The concepts underpinning linear transformations and linear algebra have a rich history, with roots tracing back to methods for solving systems of linear equations developed by ancient civilizations. The Babylonians, around 4000 years ago, knew how to solve simple 2x2 systems, and ancient Chinese mathematicians, around 200 BC, demonstrated the ability to solve 3x3 systems. However, the more formal development of linear algebra, including the theory of determinants and matrices, began much later, in the 17th and 18th centuries, with contributions from mathematicians like Gottfried Wilhelm Leibniz and Gabriel Cramer.

The 19th century saw significant advancements with the work of Carl Friedrich Gauss (Gaussian elimination), Hermann Grassmann (foundations of vector spaces), James Joseph Sylvester (who coined the term "matrix"), and Arthur Cayley (matrix algebra and the connection between matrices and linear transformations). Giuseppe Peano provided the first modern definition of a vector space in 1888. Linear algebra took its modern, more abstract form in the first half of the 20th century.

Today, linear transformations and linear algebra are more relevant than ever. They are fundamental tools in numerous fields, including computer science (especially computer graphics, machine learning, and data analysis), engineering, physics, economics, and statistics. The ability to represent and manipulate data efficiently using linear transformations is crucial for handling the large datasets and complex models prevalent in modern technology and research. From rendering realistic 3D worlds in games to enabling sophisticated AI algorithms, the legacy of those early mathematicians continues to shape our world in profound ways.

Formal Education Pathways

For those looking to build a career or deepen their expertise in areas that heavily rely on linear transformations, a formal education often provides a structured path. This typically involves university-level coursework and, for some, graduate studies.

Integration into Typical Undergraduate Curricula

Linear algebra, the branch of mathematics that extensively covers linear transformations, is a standard component of most undergraduate STEM (Science, Technology, Engineering, and Mathematics) curricula. It's often introduced in the first or second year of university, sometimes concurrently with or shortly after calculus. Courses typically cover fundamental concepts such as systems of linear equations, vector spaces, matrices, determinants, eigenvalues, eigenvectors, and, of course, linear transformations themselves. These topics are usually presented with a mix of theoretical explanations, proofs, and computational exercises.

In computer science programs, linear algebra is foundational for courses in computer graphics, machine learning, data mining, and algorithm design. For engineering students, it's essential for understanding systems analysis, control theory, signal processing, and structural mechanics. Physics majors use linear algebra extensively in classical mechanics, quantum mechanics, and electromagnetism. Even in fields like economics and business analytics, linear algebra underpins optimization techniques and econometric modeling.

The goal of these undergraduate courses is to provide students with a working knowledge of the tools and concepts of linear algebra, enabling them to apply these ideas in their respective fields and to prepare them for more advanced studies if they choose to pursue them.

Applications in Graduate-Level Research

At the graduate level, particularly in fields like applied mathematics, computer science, physics, and engineering, linear transformations and advanced linear algebra become indispensable research tools. In computer science, for instance, research in machine learning and artificial intelligence often involves developing new algorithms for dimensionality reduction, feature extraction, and model optimization, many of which rely on sophisticated applications of linear transformations, such as Singular Value Decomposition (SVD) or Principal Component Analysis (PCA). Researchers might explore transformations in very high-dimensional spaces or work with large-scale matrices that require specialized numerical methods.

In physics, quantum mechanics is fundamentally described using the language of linear algebra, where quantum states are vectors in a Hilbert space and physical observables are represented by linear operators (a type of linear transformation). Research in quantum computing heavily involves manipulating these transformations, known as quantum gates. Engineers working on complex systems, such as in aerospace or robotics, use linear algebra for modeling, simulation, and control. Graduate research might involve developing more efficient numerical algorithms for solving large systems of linear equations that arise from these models or exploring the stability of dynamical systems using eigenvalue analysis.
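
As a small illustration, assume a single qubit is represented by a length-2 vector of amplitudes. The Hadamard gate is one standard quantum gate, and its matrix happens to be real, which keeps this plain-Python sketch simple. General gates are complex unitary matrices, which this example does not cover.

```python
import math

def apply(gate, state):
    """Apply a 2x2 gate (list of rows) to a single-qubit state vector."""
    return [sum(gate[i][j] * state[j] for j in range(2)) for i in range(2)]

h = 1 / math.sqrt(2)
HADAMARD = [[h,  h],
            [h, -h]]

ket0 = [1, 0]                    # the basis state |0>
plus = apply(HADAMARD, ket0)     # equal superposition (|0> + |1>) / sqrt(2)
back = apply(HADAMARD, plus)     # H is its own inverse, so this returns |0>

print(plus)                      # both amplitudes approximately 0.7071
print([a * a for a in plus])     # measurement probabilities, each approximately 0.5
print(back)                      # approximately [1.0, 0.0]
```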

The depth and abstraction of linear algebra encountered at the graduate level often go far beyond typical undergraduate coursework, focusing on theoretical properties, advanced numerical techniques, and their application to cutting-edge research problems.

Prerequisites for Advanced Study

To successfully engage with advanced topics involving linear transformations, a solid foundation in several prerequisite areas is generally required. First and foremost is a strong understanding of introductory linear algebra itself, as covered in a typical undergraduate course. This includes proficiency with matrix operations (addition, multiplication, inversion, transpose), solving systems of linear equations, vector spaces, subspaces, basis, dimension, determinants, eigenvalues, and eigenvectors. Familiarity with the definition and properties of linear transformations is, naturally, key.

Beyond linear algebra, a good grasp of calculus, particularly multivariable calculus, is often beneficial, as many applications involve functions of multiple variables and concepts like gradients and Hessians, which can be represented using vectors and matrices. Some exposure to abstract mathematics and proof techniques, often gained through courses in discrete mathematics or an introductory course on proofs, can be very helpful, especially for more theoretical advanced study. This helps in understanding the rigorous definitions and theorems that characterize linear algebra at a deeper level.

For certain specialized areas, additional prerequisites might be necessary. For instance, those interested in numerical linear algebra will benefit from knowledge of numerical analysis and programming. Those heading into quantum mechanics will need a background in classical mechanics and differential equations. Essentially, the more advanced the study, the broader and deeper the required mathematical maturity becomes.

Interdisciplinary Connections in Physics and Computer Science

Linear transformations and the broader field of linear algebra serve as a powerful bridge connecting various disciplines, most notably physics and computer science. In physics, linear algebra is the bedrock of quantum mechanics. Quantum states are represented as vectors in complex vector spaces, and physical operations or measurements are described by linear operators (transformations). Concepts like superposition and entanglement have direct analogs in the linear combinations of vectors and tensor products of vector spaces. Eigenvalue problems are central to finding the possible outcomes of measurements.

In computer science, the applications are equally profound and widespread. Computer graphics relies heavily on matrices for rendering 2D and 3D scenes, including operations like rotation, scaling, translation, and projection; rotation and scaling are linear, while translation and perspective projection are expressed as matrices acting on homogeneous coordinates. Machine learning algorithms frequently use linear algebra to represent data (e.g., as vectors or matrices) and to perform transformations for tasks like dimensionality reduction (e.g., PCA), feature engineering, and model training (e.g., solving systems of linear equations in linear regression or updating weights in neural networks). Fields like data mining, image processing, and cryptography also extensively employ linear algebraic tools.

This interdisciplinary nature means that advancements in one field can often spur progress in another. For example, the development of efficient algorithms for matrix computations in computer science can benefit physicists performing large-scale simulations, while theoretical insights from physics can sometimes inspire new computational approaches. The common language of linear algebra facilitates this cross-pollination of ideas.

Core Concepts and Properties

Delving deeper into linear transformations requires understanding their fundamental building blocks and behaviors. These core concepts provide the analytical tools to work with and understand transformations in a more rigorous mathematical setting.

Matrix Representation of Linear Transformations

A cornerstone in the study of linear transformations, especially in finite-dimensional vector spaces, is their representation using matrices. For any linear transformation T that maps vectors from an n-dimensional space R^n to an m-dimensional space R^m, there exists a unique m × n matrix A such that T(x) = Ax for every vector x in R^n. This matrix A is called the standard matrix of the linear transformation T.

The columns of this matrix A are, in fact, the transformations of the standard basis vectors of R^n. For example, if {e_1, e_2, ..., e_n} is the standard basis for R^n, then the j-th column of A is the vector T(e_j). This provides a straightforward way to construct the matrix representation of a given linear transformation. Conversely, any m × n matrix A defines a linear transformation from R^n to R^m via matrix multiplication. This one-to-one correspondence between linear transformations and matrices is incredibly powerful, as it allows us to use the well-developed tools of matrix algebra to analyze and compute linear transformations.
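
The column rule translates directly into code. In the sketch below, `standard_matrix` and the map `T` are hypothetical examples, not taken from the text. The function feeds each standard basis vector e_j to T and stores T(e_j) as column j. It then confirms that multiplying by the resulting matrix reproduces T.

```python
def standard_matrix(T, n):
    """Return the m x n matrix (list of rows) whose j-th column is T(e_j)."""
    columns = []
    for j in range(n):
        e_j = [1 if i == j else 0 for i in range(n)]   # j-th standard basis vector
        columns.append(T(e_j))
    m = len(columns[0])
    return [[columns[j][i] for j in range(n)] for i in range(m)]  # columns -> rows

def mat_vec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]

# Hypothetical example map T: R^3 -> R^2
def T(v):
    x, y, z = v
    return [x + 2 * y, 3 * z - y]

A = standard_matrix(T, 3)
print(A)                     # [[1, 2, 0], [0, -1, 3]]
x = [4, -1, 2]
print(mat_vec(A, x), T(x))   # both give [2, 7]
```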

Operations on linear transformations also have corresponding matrix operations. For instance, the composition of two linear transformations corresponds to the product of their matrices: if S has standard matrix B and T has standard matrix A, then the composition T ∘ S (apply S first, then T) has standard matrix AB. Because matrix multiplication is not commutative, the order matters. The identity transformation corresponds to the identity matrix. This matrix representation simplifies many abstract concepts and makes computations feasible, especially with the aid of computers.
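
A short sketch shows composition as multiplication and why order matters. It uses plain lists as matrices, and the two example matrices are chosen for illustration. "Stretch, then rotate" is a single matrix with the first-applied factor on the right. Swapping the factors gives a different map.

```python
def mat_mul(A, B):
    """Product AB of matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def mat_vec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]

ROT90 = [[0, -1], [1, 0]]      # rotate 90 degrees counterclockwise
STRETCH_X = [[2, 0], [0, 1]]   # stretch horizontally by 2

x = [1, 1]

# Stretch first, then rotate: the matrix applied first sits on the right.
stretch_then_rotate = mat_mul(ROT90, STRETCH_X)
print(mat_vec(stretch_then_rotate, x))         # [-1, 2]
print(mat_vec(ROT90, mat_vec(STRETCH_X, x)))   # [-1, 2], same result in two steps

# Reversing the order gives a different transformation.
rotate_then_stretch = mat_mul(STRETCH_X, ROT90)
print(mat_vec(rotate_then_stretch, x))         # [-2, 1]
```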

Kernel and Image (Range) Subspaces

Two fundamental subspaces associated with any linear transformation T: V → W are its kernel (or null space) and its image (or range). The kernel of T, denoted as Ker(T) or Nul(T), is the set of all vectors v in the domain V such that T(v) = 0W (the zero vector in the codomain W). In simpler terms, the kernel consists of all vectors that are "squashed" or mapped to zero by the transformation. The kernel is always a subspace of the domain V.

The image of T, denoted as Im(T) or Range(T), is the set of all vectors w in the codomain W for which there exists at least one vector v in V such that T(v) = w. Essentially, the image is the set of all possible outputs of the transformation. The image is always a subspace of the codomain W.

These two subspaces provide crucial information about the transformation. For instance, a linear transformation is injective (one-to-one) if and only if its kernel consists only of the zero vector (Ker(T) = {0V}). The dimension of the kernel is called the nullity of T, and the dimension of the image is called the rank of T. A fundamental result, known as the Rank-Nullity Theorem, states that for a linear transformation T: V → W where V is finite-dimensional, the sum of the rank and the nullity of T equals the dimension of the domain V: rank(T) + nullity(T) = dim(V).
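
The Rank-Nullity Theorem can be illustrated on a small matrix. The sketch below is a plain-Python illustration, not a library routine. It computes the rank by Gaussian elimination over exact fractions and obtains the nullity from the theorem. It then confirms that a specific non-zero vector lies in the kernel, which is consistent with the nullity being positive. The example matrix is chosen so that its third column is the sum of the first two.

```python
from fractions import Fraction

def rank(rows):
    """Rank of a matrix via Gaussian elimination over exact fractions."""
    M = [[Fraction(v) for v in row] for row in rows]
    n_rows, n_cols = len(M), len(M[0])
    r = 0  # number of pivots found so far
    for c in range(n_cols):
        pivot = next((i for i in range(r, n_rows) if M[i][c] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(r + 1, n_rows):
            factor = M[i][c] / M[r][c]
            M[i] = [a - factor * b for a, b in zip(M[i], M[r])]
        r += 1
        if r == n_rows:
            break
    return r

# T: R^3 -> R^3 whose third column is the sum of the first two.
A = [[1, 0, 1],
     [0, 1, 1],
     [1, 1, 2]]

n = len(A[0])          # dim of the domain
rk = rank(A)           # dim of the image
nullity = n - rk       # dim of the kernel, by rank-nullity
print(rk, nullity)     # 2 1

# (1, 1, -1) is in the kernel: T sends it to the zero vector.
v = [1, 1, -1]
print([sum(a * b for a, b in zip(row, v)) for row in A])   # [0, 0, 0]
```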

Interpreting Eigenvalues and Eigenvectors

Eigenvalues and eigenvectors are special scalars and vectors associated with a linear transformation (or its matrix representation) that reveal deep insights into the transformation's behavior. For a given square matrix A representing a linear transformation T, an eigenvector v is a non-zero vector such that when T acts on v (or when A multiplies v), the result is simply a scaled version of v. That is, T(v) = λv (or Av = λv), where λ (lambda) is a scalar called the eigenvalue corresponding to the eigenvector v.

Geometrically, for a real eigenvalue λ, the eigenvector v spans a line through the origin that the transformation maps into itself: vectors on that line stay on it and are only scaled. The eigenvalue λ is the scale factor along that line. If λ > 1, the vector is stretched. If 0 < λ < 1, it's shrunk. If λ < 0, its direction is reversed and its length is scaled by |λ|. If λ = 1, vectors in that direction are unchanged. If λ = 0, vectors in that direction are mapped to the zero vector (meaning they lie in the kernel).
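
For a 2 × 2 symmetric matrix, the eigenvalues can be found directly from the characteristic polynomial. The sketch below is limited to that case and uses a helper name invented for this example. It then checks the stretching, unchanged, and reversed cases described above on two example matrices.

```python
import math

def eigenvalues_symmetric_2x2(a, b, d):
    """Eigenvalues of the real symmetric matrix [[a, b], [b, d]], largest first.

    They are the roots of the characteristic polynomial
    lambda^2 - (a + d) * lambda + (a * d - b * b); for a symmetric matrix
    the discriminant (a - d)^2 + 4b^2 is never negative, so both roots are real.
    """
    trace, det = a + d, a * d - b * b
    disc = math.sqrt(trace * trace - 4 * det)
    return (trace + disc) / 2, (trace - disc) / 2

def mat_vec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

A = [[2, 1], [1, 2]]
print(eigenvalues_symmetric_2x2(2, 1, 2))   # (3.0, 1.0)
print(mat_vec(A, [1, 1]))    # [3, 3]: (1, 1) is stretched by 3
print(mat_vec(A, [1, -1]))   # [1, -1]: (1, -1) is unchanged (eigenvalue 1)

R = [[0, 1], [1, 0]]         # reflection across the line y = x
print(eigenvalues_symmetric_2x2(0, 1, 0))   # (1.0, -1.0)
print(mat_vec(R, [1, -1]))   # [-1, 1]: direction reversed (eigenvalue -1)
```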

Eigenvalues and eigenvectors have numerous applications. In physics, they are used to find principal axes of rotation and vibrational modes of systems. In data analysis, techniques like Principal Component Analysis (PCA) use eigenvectors of a covariance matrix to find the directions of maximum variance in data. In studying dynamical systems, eigenvalues can determine the stability of equilibrium points. Understanding eigenvalues and eigenvectors allows for a more profound comprehension of how a linear transformation acts on its vector space.

Isomorphisms and Invertibility

An isomorphism is a special type of linear transformation that establishes a perfect structural correspondence between two vector spaces. A linear transformation T: V → W is called an isomorphism if it is both injective (one-to-one) and surjective (onto). If such an isomorphism exists, the vector spaces V and W are said to be isomorphic, meaning they are essentially the same from a linear algebra perspective, even if their elements represent different kinds of objects. For finite-dimensional vector spaces, an isomorphism exists between V and W if and only if they have the same dimension.

Invertibility is closely related to isomorphisms. A linear transformation T: V → W is invertible if there exists another linear transformation S: W → V such that applying T and then S, and applying S and then T, both give the identity transformation. That is, S(T(v)) = v for all v in V, and T(S(w)) = w for all w in W. The transformation S is called the inverse of T, denoted T^(-1). A linear transformation is invertible if and only if it is an isomorphism. This implies that, when V and W are finite-dimensional, T can be invertible only if V and W have the same dimension, and an invertible T maps every basis of V to a basis of W.

When a linear transformation is represented by a square matrix A, the transformation is invertible if and only if the matrix A is invertible (i.e., its determinant is non-zero). The inverse transformation T^(-1) is then represented by the inverse matrix A^(-1). Invertible transformations are crucial in many applications, such as solving systems of linear equations (if Ax = b and A is invertible, then x = A^(-1)b) or "undoing" a transformation to recover the original state.
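
The 2 × 2 case has a closed-form inverse. The sketch below uses exact fractions, a hypothetical helper name, and an example system. It solves Ax = b as x = A^(-1)b and then applies A to confirm that it undoes A^(-1). For larger systems, practical code would call a library solver rather than form the inverse explicitly.

```python
from fractions import Fraction

def inverse_2x2(A):
    """Inverse of a 2x2 matrix; raises ValueError if the determinant is zero."""
    (a, b), (c, d) = A
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("matrix is singular: the transformation is not invertible")
    return [[d / det, -b / det],
            [-c / det, a / det]]

def mat_vec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

A = [[2, 1],
     [5, 3]]            # det = 1, so A is invertible
b = [4, 11]

A_inv = inverse_2x2(A)
x = mat_vec(A_inv, b)   # x = A^(-1) b
print(x)                # [Fraction(1, 1), Fraction(2, 1)]
print(mat_vec(A, x) == b)   # True: applying A recovers b
```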

ELI5: Linear Transformations Explained Simply

Imagine you have a bunch of stretchy, bendy grid paper, and you're drawing shapes on it. Linear transformations are like special ways you're allowed to stretch, shrink, rotate, or tilt this grid paper, and whatever shapes you've drawn on it will change in the same way.

Think of a simple square you drew. If you stretch the grid paper to be twice as wide, your square also becomes a rectangle twice as wide. If you rotate the grid paper, your square rotates too. The key rules are that straight lines on your grid paper must stay straight lines after you change it, and the center point (the origin) doesn't move. So you can't bend a straight line into a curve, and you can't slide the whole sheet sideways, because that would move the center point.

Let's say you have two commands: "stretch horizontally by 2 times" and "rotate by 90 degrees." A linear transformation tells you exactly where each point (like a corner of your square) ends up after you do the stretch or rotation. Now pick two points and find the point halfway between them (add them together and halve the result). If you transform that halfway point, you land in the same spot as if you had transformed the two original points first and then found the halfway point between the new ones. That's what "preserving addition and scalar multiplication" means in simple terms: the relationships between points stay consistent even after the transformation.

Applications in Modern Industries

Linear transformations are not just abstract mathematical concepts; they are the workhorses behind many modern technologies and analytical methods across a diverse range of industries. Their ability to model and manipulate relationships between variables in a structured way makes them invaluable.

Powering Computer Graphics and Game Development

In the realm of computer graphics and game development, linear transformations are absolutely fundamental. Every time an object moves, rotates, scales, or is viewed from a different perspective on your screen, linear transformations (primarily represented by matrices) are performing the necessary calculations. 3D models of characters, environments, and objects are typically defined by a collection of vertices (points in 3D space). Linear transformations are applied to these vertices to achieve various effects.

For example, a rotation matrix spins an object around an axis, and a scaling matrix makes an object larger or smaller. Translation, which moves an object from one position to another, is not a linear transformation on its own because it moves the origin. Graphics systems handle it by working in homogeneous coordinates, where each 3D point gets a fourth coordinate and a single 4 × 4 matrix can rotate, scale, and translate at once. Other transformations, like shearing or reflection, are also achieved using specific matrices. Furthermore, to display a 3D scene on a 2D screen, a projection transformation is applied, which effectively "flattens" the 3D coordinates onto a 2D plane, mimicking how a camera captures an image. Game engines and graphics libraries perform millions of these matrix operations per second to render dynamic and interactive scenes. The efficiency of these transformations is crucial for achieving smooth frame rates and realistic visuals.

The ability to combine multiple transformations by simply multiplying their corresponding matrices is another powerful feature extensively used in graphics. This allows for complex sequences of operations to be represented and applied efficiently.
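
The 2D analogue of the graphics convention can be sketched compactly. Points (x, y) become (x, y, 1), and 3 × 3 matrices can then encode translation as well as rotation. The helper names are illustrative, and the 3D case works the same way with 4 × 4 matrices. In the example, a rotation and a translation are multiplied into one combined matrix.

```python
import math

def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def translation(tx, ty):
    return [[1, 0, tx],
            [0, 1, ty],
            [0, 0, 1]]

def rotation(theta):
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0],
            [s,  c, 0],
            [0,  0, 1]]

def transform_point(M, x, y):
    """Apply a 3x3 homogeneous matrix to the 2D point (x, y)."""
    hx, hy, w = (sum(M[i][j] * v for j, v in enumerate((x, y, 1))) for i in range(3))
    return hx / w, hy / w

# Rotate 90 degrees about the origin, then move right by 5: one combined matrix.
M = mat_mul(translation(5, 0), rotation(math.pi / 2))
print([tuple(round(v, 9) for v in transform_point(M, *p)) for p in [(0, 0), (1, 0)]])
# The origin now lands at (5, 0), which no linear map of 2D points could do.
```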

Transforming Features in Machine Learning

Linear transformations play a critical role in machine learning for data preprocessing, feature engineering, and model building. Data in machine learning is often represented as vectors or matrices, where rows typically correspond to samples and columns to features. Linear transformations can be applied to this data to change its representation into a more suitable form for learning algorithms.

One of the most well-known applications is dimensionality reduction. Techniques like Principal Component Analysis (PCA) use linear transformations to project high-dimensional data onto a lower-dimensional subspace while retaining as much of the original variance as possible. This can help in visualizing data, reducing computational complexity, and mitigating the "curse of dimensionality." The new dimensions (principal components) are linear combinations of the original features.
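
A teaching sketch of the idea follows, using four made-up 2D points. It builds the sample covariance matrix and finds its top eigenvector (the first principal component) by power iteration. It then projects each point onto that direction, reducing 2D data to 1D. Power iteration is swapped in here for simplicity. Real code would normally rely on an SVD or a library routine such as `numpy.linalg.eigh` or scikit-learn's `PCA`.

```python
import math

def covariance_matrix(points):
    """Sample covariance (divide by n - 1) of 2D points."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    centered = [(x - mx, y - my) for x, y in points]
    sxx = sum(x * x for x, _ in centered) / (n - 1)
    syy = sum(y * y for _, y in centered) / (n - 1)
    sxy = sum(x * y for x, y in centered) / (n - 1)
    return [[sxx, sxy], [sxy, syy]]

def top_eigenvector(C, iterations=100):
    """Power iteration: repeatedly apply C and renormalize to unit length."""
    v = [1.0, 0.0]
    for _ in range(iterations):
        w = [C[0][0] * v[0] + C[0][1] * v[1],
             C[1][0] * v[0] + C[1][1] * v[1]]
        norm = math.hypot(*w)
        v = [w[0] / norm, w[1] / norm]
    return v

data = [(2.0, 1.9), (0.5, 0.6), (-1.0, -1.1), (-1.5, -1.4)]   # already centered
pc1 = top_eigenvector(covariance_matrix(data))
print(pc1)   # both components near 0.7: the data lie close to the line y = x

# Project each point onto the first principal component: 2D -> 1D.
scores = [x * pc1[0] + y * pc1[1] for x, y in data]
print(scores)
```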

Linear transformations are also inherent in many machine learning models themselves. For example, linear regression models directly use a linear transformation (a weighted sum of input features) to make predictions. In neural networks, each layer typically multiplies its input by a weight matrix and adds a bias vector (strictly speaking an affine map), then applies a non-linear activation function. Understanding how these transformations affect the data is key to designing and interpreting machine learning models effectively.
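
A single fully connected layer can be sketched in a few lines. The weights, bias, and input below are hypothetical values chosen for illustration. The code computes the matrix product plus bias and then applies ReLU as the non-linear activation.

```python
def dense_layer(W, b, x):
    """One fully connected layer: relu(W x + b)."""
    z = [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]
    return [max(0.0, zi) for zi in z]   # ReLU: the non-linear step

# Hypothetical weights for a layer mapping 3 inputs to 2 outputs.
W = [[0.5, -1.0,  2.0],
     [1.0,  1.0, -1.0]]
b = [0.0, -0.5]
x = [1.0, 2.0, 0.5]
print(dense_layer(W, b, x))   # [0.0, 2.0]
```

The first output's pre-activation value is -0.5, which ReLU clips to 0.0. Without that non-linear step, stacking layers would only compose affine maps into another affine map.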

Applications in Economic Modeling

In economics, linear transformations and systems of linear equations are foundational tools for modeling various economic phenomena and relationships. Economists use these mathematical structures to analyze complex systems, understand interdependencies, and make predictions. For instance, input-output models, pioneered by Wassily Leontief, use matrices to represent the flow of goods and services between different sectors of an economy. These models can be used to analyze how changes in demand in one sector ripple through the entire economy. Solving these models often involves matrix inversion, a concept directly related to linear transformations.
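
A minimal two-sector version of an input-output model follows, with made-up coefficients. Here A[i][j] is assumed to be the amount of sector i's output needed to produce one unit of sector j's output. Total output x must cover both intermediate use Ax and final demand d, so (I - A)x = d. For two sectors, Cramer's rule solves this directly.

```python
from fractions import Fraction as F

def leontief_output(A, d):
    """Solve (I - A) x = d for a 2-sector economy using Cramer's rule."""
    (a11, a12), (a21, a22) = A
    m11, m12, m21, m22 = 1 - a11, -a12, -a21, 1 - a22   # entries of I - A
    det = m11 * m22 - m12 * m21
    if det == 0:
        raise ValueError("I - A is singular")
    x1 = (d[0] * m22 - m12 * d[1]) / det
    x2 = (m11 * d[1] - d[0] * m21) / det
    return [x1, x2]

# Hypothetical technical coefficients (exact fractions avoid rounding).
A = [[F(1, 5), F(3, 10)],
     [F(2, 5), F(1, 10)]]
demand = [100, 50]   # final (external) demand for each sector

x = leontief_output(A, demand)
print(x)   # [Fraction(175, 1), Fraction(400, 3)]
```

Both sectors must produce more than their final demand because each also supplies inputs to the other.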

Linear programming, a technique for optimizing a linear objective function subject to linear equality and inequality constraints, also relies heavily on linear algebra. It's used in resource allocation, production planning, and logistics. Furthermore, econometric models, which use statistical methods to analyze economic data, often employ linear regression and its variants. These regression techniques are based on finding the best linear relationship (a linear transformation) between dependent and independent variables. Concepts like eigenvalues and eigenvectors also appear in dynamic economic models to analyze stability and long-term behavior.
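
The regression idea can be sketched for the simplest case of one explanatory variable. Fitting y ≈ a + bx by least squares leads to a 2 × 2 linear system, the normal equations, which the sketch below solves with Cramer's rule. The function name and data are illustrative only. Practical econometric work would use a statistics package.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = a + b*x via the 2x2 normal equations."""
    n = len(xs)
    sx, sy = sum(xs), sum(ys)
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    # Normal equations: [[n, sx], [sx, sxx]] @ [a, b] = [sy, sxy]
    det = n * sxx - sx * sx
    if det == 0:
        raise ValueError("need at least two distinct x values")
    a = (sy * sxx - sx * sxy) / det
    b = (n * sxy - sx * sy) / det
    return a, b

print(fit_line([0, 1, 2, 3], [1, 3, 5, 7]))   # (1.0, 2.0): the data lie exactly on y = 1 + 2x
```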

Analyzing Engineering Systems

Engineering disciplines extensively use linear transformations and linear algebra for the analysis, design, and control of various systems. In electrical engineering, circuit analysis often involves solving systems of linear equations where variables represent currents and voltages. Signal processing, a subfield of electrical and computer engineering, relies heavily on the Fourier transform to analyze signals in the frequency domain. The Fourier transform is linear, and its discrete version, the Discrete Fourier Transform (DFT), amounts to multiplying a length-n signal vector by a fixed n × n matrix.
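
The matrix view of the DFT can be shown on a small example. The sketch below builds the n × n DFT matrix with entries exp(-2πi·jk/n), using the common sign convention, and applies it to two length-4 test signals. Production code would use a Fast Fourier Transform, which computes the same product in O(n log n) time.

```python
import cmath

def dft_matrix(n):
    """n x n DFT matrix with entries exp(-2*pi*i*r*c/n)."""
    return [[cmath.exp(-2j * cmath.pi * r * c / n) for c in range(n)] for r in range(n)]

def mat_vec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

F4 = dft_matrix(4)
constant = [1, 1, 1, 1]
alternating = [1, -1, 1, -1]

# A constant signal has energy only at frequency 0; an alternating one only at n/2.
print([round(abs(c), 9) for c in mat_vec(F4, constant)])      # [4.0, 0.0, 0.0, 0.0]
print([round(abs(c), 9) for c in mat_vec(F4, alternating)])   # [0.0, 0.0, 4.0, 0.0]
```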

Mechanical and civil engineers use linear algebra for structural analysis, determining stresses and strains in buildings, bridges, and other structures under various loads. Finite Element Analysis (FEA), a powerful computational technique, breaks down complex structures into smaller elements and uses linear algebra to solve the resulting systems of equations. In control systems engineering, state-space representations, which use matrices and vectors to describe the behavior of dynamic systems, are fundamental. Linear transformations are used to analyze system stability, controllability, and observability, and to design controllers that ensure desired performance. Robotics also makes extensive use of linear transformations for describing the position and orientation of robot arms and for planning movements.

Career Progression and Opportunities

Understanding linear transformations can open doors to a variety of career paths and provides a valuable skillset that is transferable across many industries. Whether you are starting out or looking to pivot, a grasp of these concepts can be a significant asset.

Academic vs. Industry Career Paths

Careers utilizing knowledge of linear transformations can broadly be categorized into academic and industry paths, though there's often overlap. An academic career typically involves teaching and research at a university or research institution. This path usually requires a Ph.D. in mathematics, computer science, physics, engineering, or a related field where linear algebra plays a crucial role. Academics might focus on advancing the theoretical understanding of linear algebra itself, developing new algorithms, or applying these concepts to solve problems in their specific domain of research. They publish papers, attend conferences, and mentor students.

An industry career involves applying linear algebra and transformation concepts to solve real-world problems in various sectors. This could be in tech companies (software engineering, data science, machine learning, computer graphics), finance (quantitative analysis, risk management), engineering firms (aerospace, mechanical, electrical), a wide array of roles in IT, and even in fields like animation, game development, or operations research. The educational requirements can vary from a bachelor's degree for some entry-level roles to master's or Ph.D. degrees for more specialized research and development positions. Industry roles often emphasize practical application, software development skills, and the ability to work in teams to deliver products or solutions.

For those considering a career transition, both paths offer opportunities. The key is to identify which environment—the scholarly pursuit of knowledge or the application-driven world of industry—aligns best with your interests and goals. It's also becoming increasingly common for individuals to move between academia and industry, bringing valuable perspectives from both worlds.

Entry-Level Roles Requiring Linear Algebra

Many entry-level positions, particularly in technology and engineering, list linear algebra as a required or desired skill. For instance, aspiring software engineers, especially those working on graphics, simulations, or backend systems that handle large datasets, will find linear algebra useful. Data analyst or junior data scientist roles often require an understanding of linear algebra for tasks like data manipulation, understanding machine learning models, and performing statistical analyses.

In engineering fields, entry-level roles in areas like control systems, signal processing, robotics, and structural analysis will likely expect a working knowledge of linear algebra. Even in fields like game development, junior programmers working on game physics or rendering engines will benefit from these mathematical foundations. While a deep theoretical mastery might not always be required at the entry level, the ability to understand and apply core concepts like matrix operations, vector manipulations, and the geometric interpretation of transformations is often expected. Employers value candidates who can think analytically and use these mathematical tools to solve problems.

If you're new to these careers or transitioning, highlighting relevant coursework, projects (even personal or academic ones), and any practical experience with tools like MATLAB, Python (with libraries like NumPy), or R can demonstrate your foundational knowledge in linear algebra. Don't be discouraged if you feel your knowledge isn't expert-level; many employers are looking for a solid base and a willingness to learn and grow.

Advanced Research Positions

For those with advanced degrees (typically a Ph.D., but sometimes a Master's with significant research experience) in fields heavily reliant on linear algebra, specialized research positions become accessible. These roles are found in both academia and industry research labs (e.g., at large tech companies, government research facilities, or specialized R&D firms). Advanced research positions often involve pushing the boundaries of knowledge or developing novel applications of linear transformations and related concepts.

In machine learning and AI, researchers might develop new algorithms for deep learning, reinforcement learning, or natural language processing that leverage sophisticated linear algebraic techniques for handling high-dimensional data, optimizing complex functions, or improving computational efficiency. In quantum computing, researchers work on designing new quantum algorithms (many of which are based on unitary transformations, a type of linear transformation) and understanding the fundamental mathematical structures of quantum information. Physicists and engineers in advanced research roles might use linear algebra to model complex physical phenomena, design next-generation materials, or develop innovative control systems. These positions demand a deep theoretical understanding, strong problem-solving skills, and often, the ability to lead research projects and publish findings.

Skill Transferability Analysis

One of the significant advantages of gaining proficiency in linear transformations and linear algebra is the high degree of skill transferability. The core concepts—vector spaces, matrix manipulations, understanding systems of equations, eigenvalues/eigenvectors—are not confined to a single discipline. This means that skills developed in one context can often be applied in another, opening up a wider range of career options and making pivots more feasible.

For example, someone with a strong background in linear algebra from a physics degree might find their skills valuable in data science, where similar mathematical tools are used for data analysis and machine learning. An engineer who used linear algebra for control systems might transition into robotics or even quantitative finance. The analytical thinking and problem-solving abilities honed through studying linear algebra are universally valued by employers across many technical and scientific fields.

Furthermore, the ability to work with abstract mathematical structures and then apply them to concrete problems is a meta-skill that is highly sought after. If you're considering a career change, focus on identifying the fundamental mathematical and computational skills you've acquired through your study of linear transformations and articulate how these can be applied to the challenges in your target field. This can be a powerful way to bridge experience gaps and demonstrate your potential to contribute in a new area.

Many roles that utilize linear algebra also benefit from strong programming skills and familiarity with data analysis tools. OpenCourser offers a wide range of courses in Programming and Data Science that can complement your mathematical knowledge.

Common Challenges and Pitfalls

Learning linear transformations and the broader subject of linear algebra can be a rewarding experience, but it also comes with its share of challenges. Being aware of these common hurdles can help learners navigate them more effectively.

Difficulties in Visualizing Abstract Concepts

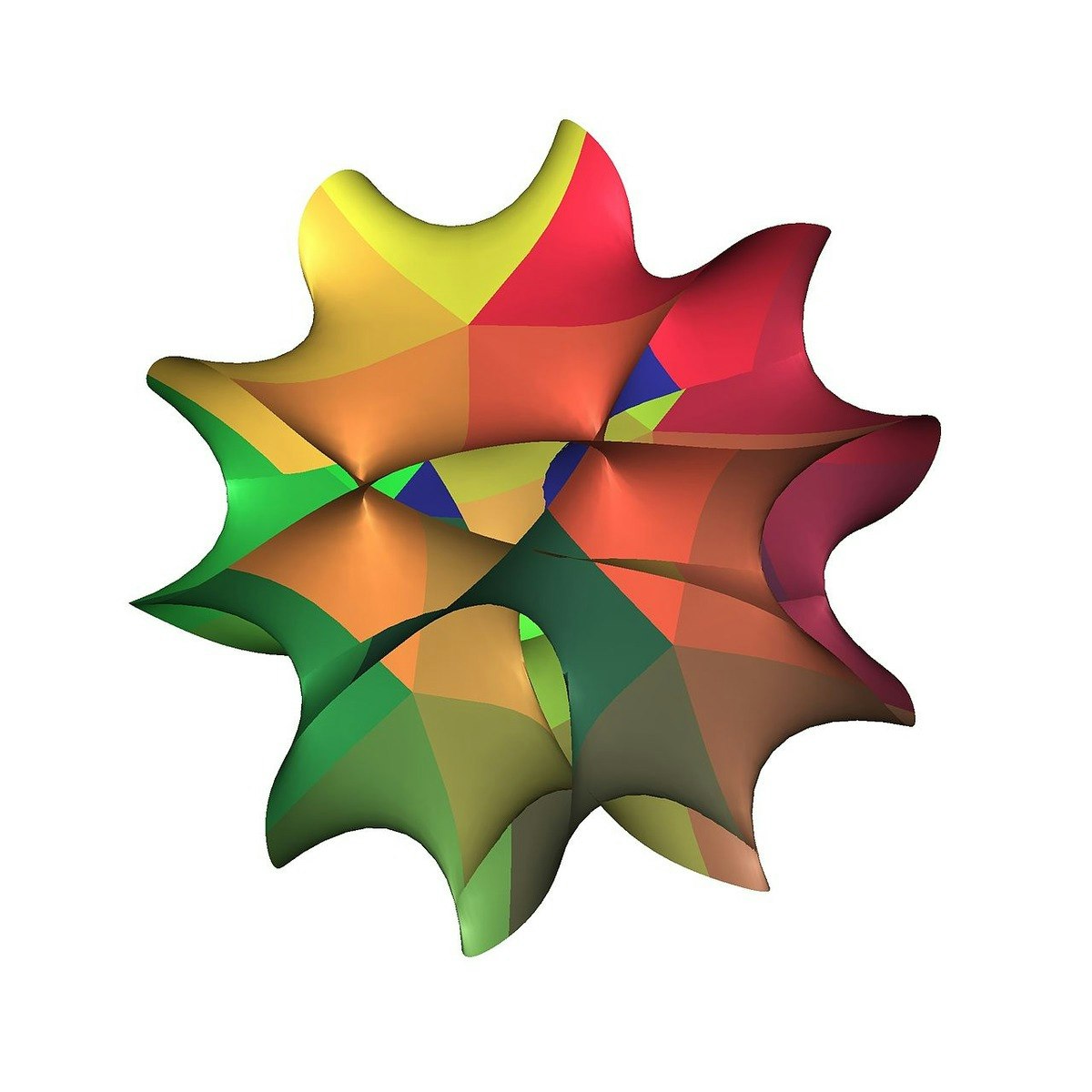

Linear algebra often involves a shift from concrete calculations to more abstract concepts like vector spaces, subspaces, and transformations in higher dimensions. While transformations in 2D or 3D can be visualized geometrically (e.g., rotations, scaling), it becomes challenging to form a mental picture of what's happening in spaces with four or more dimensions. This abstraction can be a significant hurdle for students who are more accustomed to concrete, visualizable mathematics.

Terms like "kernel," "image," "span," and "basis" can feel very abstract without a strong geometric intuition. The jump from manipulating numbers in matrices to understanding what those manipulations mean in terms of transforming spaces requires a different way of thinking. Some students find it difficult to connect the algebraic manipulations (e.g., row reducing a matrix) to the underlying geometric or structural changes they represent.
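
One way to make "kernel" and "image" concrete is to compute them. The sketch below, assuming NumPy, uses the singular value decomposition to find the rank of a matrix (the dimension of its image) and an orthonormal basis for its kernel, then checks the rank-nullity theorem. The example matrix is arbitrary; its third column is the sum of the first two, so it has a one-dimensional kernel.

```python
import numpy as np

def rank_and_kernel(A: np.ndarray, tol: float = 1e-10):
    """Return (rank, kernel_basis) for A; kernel_basis has orthonormal columns."""
    _, s, Vt = np.linalg.svd(A)
    rank = int(np.sum(s > tol))
    # Rows of Vt beyond the rank span the null space (kernel) of A.
    kernel_basis = Vt[rank:].T
    return rank, kernel_basis

# Third column = first column + second column, so A is not injective.
A = np.array([
    [1.0, 0.0, 1.0],
    [0.0, 1.0, 1.0],
    [2.0, 1.0, 3.0],
])

rank, K = rank_and_kernel(A)
print("rank (dim of image):", rank)              # 2
print("nullity (dim of kernel):", K.shape[1])    # 1
print("A @ kernel vector ~ 0:", np.allclose(A @ K, 0))

# Rank-nullity: rank + nullity = number of columns (dimension of the domain).
assert rank + K.shape[1] == A.shape[1]
```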

To overcome this, it's often helpful to spend extra time on the geometric interpretations in 2D and 3D, as these can serve as analogies for higher-dimensional concepts. Using interactive software that allows visualization of transformations can also be beneficial. Relating abstract concepts back to concrete examples or simpler cases can help build understanding gradually.
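
A quick way to build that 2D intuition: the columns of a 2×2 matrix are the images of the standard basis vectors, and the absolute value of its determinant is the factor by which the transformation scales areas. The sketch below, assuming NumPy, applies a matrix (an arbitrary shear-plus-scaling chosen for illustration) to the corners of the unit square and compares the new area, computed with the shoelace formula, against the determinant.

```python
import numpy as np

def polygon_area(points: np.ndarray) -> float:
    """Area of a simple polygon from an (n, 2) array of vertices (shoelace formula)."""
    x, y = points[:, 0], points[:, 1]
    return 0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))

# A shear combined with scaling (arbitrary example).
M = np.array([
    [2.0, 1.0],
    [0.0, 1.5],
])

# Column j of M is where the j-th standard basis vector lands.
print("image of e1:", M @ np.array([1.0, 0.0]))
print("image of e2:", M @ np.array([0.0, 1.0]))

# Corners of the unit square, one per row, in counterclockwise order.
square = np.array([[0, 0], [1, 0], [1, 1], [0, 1]], dtype=float)

# Apply M to every corner at once: row p becomes (M @ p), i.e. square @ M.T.
image = square @ M.T

print("transformed corners:\n", image)
print("area before:", polygon_area(square))   # 1.0
print("area after: ", polygon_area(image))    # 3.0
print("|det(M)|:   ", abs(np.linalg.det(M)))
```

Plotting `square` and `image` with any plotting library turns this into the kind of interactive visualization described above.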

Computational vs. Conceptual Understanding

Another common challenge is striking the right balance between computational proficiency and conceptual understanding. Linear algebra involves many computational procedures: solving systems of linear equations, multiplying matrices, finding determinants, calculating eigenvalues and eigenvectors, etc. It's possible to become adept at these calculations without fully grasping the underlying concepts or why these procedures work.

For instance, a student might be able to mechanically find the eigenvalues of a matrix but not understand what eigenvalues represent in terms of the transformation's behavior. Conversely, a student might grasp the abstract idea of a vector space but struggle with the concrete calculations needed to find a basis for a given subspace. Both computational skill and conceptual insight are important for truly mastering linear algebra and applying it effectively.
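
To connect the computation to its meaning: an eigenvector v of A is a nonzero vector whose direction the transformation preserves, so Av = λv, and the eigenvalue λ is the stretch factor. The sketch below, assuming NumPy, checks this for a symmetric 2×2 matrix chosen for illustration, and contrasts it with a vector that the same matrix does rotate.

```python
import numpy as np

# Symmetric example matrix (arbitrary choice); its eigenvalues are 1 and 3.
A = np.array([
    [2.0, 1.0],
    [1.0, 2.0],
])

# eigh is meant for symmetric matrices; eigenvalues come back in ascending order.
eigenvalues, eigenvectors = np.linalg.eigh(A)

for lam, v in zip(eigenvalues, eigenvectors.T):
    # A only stretches v by lam; v stays on the same line through the origin.
    print(f"lambda = {lam:.1f}, v = {v}, A @ v = {A @ v}")
    assert np.allclose(A @ v, lam * v)

# A generic vector is not an eigenvector: its direction changes.
w = np.array([1.0, 0.0])
Aw = A @ w
cos_angle = Aw @ w / (np.linalg.norm(Aw) * np.linalg.norm(w))
print("angle between w and A @ w (degrees):", np.degrees(np.arccos(cos_angle)))
```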

A common pitfall is "rote learning"—memorizing formulas and procedures without understanding their derivation or meaning. This approach often fails when faced with problems that require adapting known methods or applying concepts in novel situations. To bridge this gap, it's crucial to always ask "why?" not just "how?" Working through proofs (even if not required to reproduce them) and trying to explain concepts in one's own words can deepen conceptual understanding.

Common Errors in Matrix Operations

Matrix operations, while seemingly straightforward, are a common source of errors, especially for beginners. One of the most frequent mistakes is incorrect matrix multiplication. Unlike scalar multiplication, matrix multiplication is not commutative (AB ≠ BA, in general), and the dimensions of the matrices must be compatible (the number of columns in the first matrix must equal the number of rows in the second). Students often struggle with the row-by-column multiplication process itself, leading to arithmetic errors.
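
Both pitfalls are easy to see numerically. The sketch below, assuming NumPy, multiplies a 90° rotation and a horizontal stretch in both orders to show that AB and BA differ, and then shows NumPy rejecting a product whose inner dimensions do not match. The particular matrices are illustrative choices.

```python
import numpy as np

rotate_90 = np.array([[0.0, -1.0],
                      [1.0,  0.0]])   # rotate counterclockwise by 90 degrees
stretch_x = np.array([[2.0, 0.0],
                      [0.0, 1.0]])    # double the x-coordinate

# (A @ B) applies B first, then A; changing the order changes the result.
rotate_then_stretch = stretch_x @ rotate_90
stretch_then_rotate = rotate_90 @ stretch_x
print(rotate_then_stretch)
print(stretch_then_rotate)
print("commute?", np.allclose(rotate_then_stretch, stretch_then_rotate))  # False

# Shape rule: (m x n) @ (n x p) -> (m x p); the inner dimensions must agree.
A = np.ones((2, 3))
B = np.ones((3, 4))
print((A @ B).shape)  # (2, 4)
try:
    B @ A  # (3 x 4) @ (2 x 3): inner dimensions 4 and 2 differ
except ValueError as err:
    print("incompatible shapes:", err)
```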

Calculating determinants, especially for matrices larger than 3x3, can be tedious and prone to sign errors or mistakes in cofactor expansion. Finding matrix inverses is another complex procedure where errors can easily creep in, whether using Gaussian elimination (row reduction) or the adjugate matrix formula. Even basic operations like matrix addition or scalar multiplication can lead to errors if care is not taken with corresponding entries.

Careful, methodical work and double-checking calculations are essential. Understanding the properties of these operations (e.g., associativity, distributivity) can also help avoid conceptual errors. Practice is key to building accuracy and speed with matrix computations. Using software tools to verify hand calculations can also be a useful learning aid, but it's important to first master the manual procedures to understand what the software is doing.
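
As an example of using software to check hand work, the sketch below implements the by-hand procedure, cofactor (Laplace) expansion along the first row, and compares it with NumPy's `np.linalg.det`, which uses an LU factorization instead. It also checks a computed inverse by multiplying back to the identity. The 4×4 matrix is arbitrary. Cofactor expansion takes factorial time, so it only makes sense for small matrices like this one.

```python
import numpy as np

def det_cofactor(M: np.ndarray) -> float:
    """Determinant by cofactor expansion along the first row (recursive)."""
    n = M.shape[0]
    if n == 1:
        return float(M[0, 0])
    total = 0.0
    for j in range(n):
        # Minor: delete row 0 and column j.
        minor = np.delete(np.delete(M, 0, axis=0), j, axis=1)
        sign = (-1) ** j  # checkerboard sign for entry (0, j)
        total += sign * M[0, j] * det_cofactor(minor)
    return total

M = np.array([
    [2.0, -1.0, 0.0,  3.0],
    [1.0,  4.0, 2.0,  0.0],
    [0.0,  1.0, 5.0, -2.0],
    [3.0,  0.0, 1.0,  1.0],
])

by_hand = det_cofactor(M)
by_library = np.linalg.det(M)
print(by_hand, by_library)
assert np.isclose(by_hand, by_library)

# Check an inverse by multiplying back: M @ M^{-1} should be the identity.
M_inv = np.linalg.inv(M)
assert np.allclose(M @ M_inv, np.eye(4))
```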

Bridging Theory to Application Gaps

Many students find it challenging to connect the theoretical concepts learned in a linear algebra course to real-world applications. They might understand the definition of an eigenvector but not see how it's used in Principal Component Analysis or in analyzing the stability of a bridge. This gap can make the subject feel dry or irrelevant to some, hindering motivation and deeper understanding.

Textbooks and courses that heavily emphasize abstract theory without sufficient examples of applications can exacerbate this problem. Students often ask, "When will I ever use this?" If the connections to practical problems in engineering, computer science, physics, or economics are not made clear, the motivation to grapple with challenging theoretical material can wane.

To bridge this gap, learners should actively seek out applications relevant to their interests. Reading articles, watching videos, or working on projects that use linear algebra in a practical context can be very illuminating. For educators, incorporating real-world examples and case studies into the curriculum is crucial. Understanding how linear transformations are used in computer graphics, how systems of equations model electrical circuits, or how eigenvalues describe vibrational frequencies can make the theory come alive and demonstrate its power and utility. Exploring applications through project-based learning can be particularly effective.
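
For instance, the claim that eigenvalues describe vibrational frequencies can be checked on a textbook model: two equal masses joined by three equal springs between two fixed walls. For this undamped system, Mx'' = -Kx, and the squared natural frequencies are the eigenvalues of M⁻¹K. The sketch below assumes NumPy, unit masses, and unit spring constants; with those values the frequencies come out as 1 and √3.

```python
import numpy as np

m = 1.0  # mass of each block (assumed)
k = 1.0  # stiffness of each spring (assumed)

# Layout: wall -k- mass1 -k- mass2 -k- wall
M = np.diag([m, m])
K = np.array([
    [2 * k, -k],
    [-k, 2 * k],
])

# Solutions of M x'' = -K x of the form x(t) = v cos(omega t) require
# (M^{-1} K) v = omega^2 v, so the squared frequencies are eigenvalues.
omega_squared, modes = np.linalg.eig(np.linalg.solve(M, K))
order = np.argsort(omega_squared)
frequencies = np.sqrt(omega_squared[order])
modes = modes[:, order]

print("natural frequencies:", frequencies)
print("mode shapes (columns):\n", modes)
```

The slower mode has the two masses moving together; the faster mode has them moving in opposite directions.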

Emerging Trends in Linear Transformations

The field of linear algebra, and with it the study and application of linear transformations, is not static. New research and technological advancements continually push the boundaries, leading to emerging trends and novel applications.

Applications in High-Dimensional Data

The modern era is characterized by an explosion of data, often referred to as "big data." Much of this data is high-dimensional, meaning each data point is described by a large number of features or attributes. Analyzing and extracting meaningful insights from such high-dimensional data presents significant challenges. Linear transformations play a crucial role in developing techniques to handle these challenges.

Techniques like Principal Component Analysis (PCA) and Singular Value Decomposition (SVD), which are fundamentally based on linear transformations, are widely used for dimensionality reduction. These methods aim to find lower-dimensional representations of the data that capture the most important variations, making it easier to visualize, process, and model. Researchers are continuously working on scaling these techniques to handle massive datasets and developing new variations that are robust to noise or can capture non-linear structures when combined with other methods (e.g., kernel PCA).
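
A minimal PCA sketch, assuming NumPy: center the data, take the SVD of the centered matrix, and project onto the leading right singular vectors. The synthetic data (points scattered near a line in 3D) is generated only for illustration, and `pca` is a hypothetical helper name, not a library API.

```python
import numpy as np

def pca(X: np.ndarray, n_components: int):
    """Project rows of X onto the top principal components.

    Returns (scores, components, explained_variance_ratio).
    """
    X_centered = X - X.mean(axis=0)
    # Right singular vectors of the centered data are the principal directions.
    _, s, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]          # shape (k, n_features)
    scores = X_centered @ components.T      # the linear map R^n -> R^k
    explained = s**2 / np.sum(s**2)
    return scores, components, explained[:n_components]

rng = np.random.default_rng(0)
# 200 points close to the line spanned by (1, 2, 2), plus a little noise.
t = rng.normal(size=(200, 1))
X = t * np.array([1.0, 2.0, 2.0]) + 0.05 * rng.normal(size=(200, 3))

scores, components, ratio = pca(X, n_components=1)
print("first principal direction:", components[0])  # close to +/-(1, 2, 2)/3
print("share of variance explained:", ratio[0])      # close to 1
```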

Furthermore, areas like compressed sensing leverage linear algebra to reconstruct signals or images from a surprisingly small number of measurements, provided the signal is sparse (has only a few nonzero coefficients) in some basis. This has applications in medical imaging, signal processing, and data compression. The study of random matrices and their properties is also an active area of research with implications for understanding the behavior of linear transformations in high-dimensional settings.

Implications for Quantum Computing

Quantum computing is a rapidly emerging field that promises to revolutionize computation by harnessing the principles of quantum mechanics. Linear algebra, and specifically linear transformations, are at the very heart of quantum computing. In the quantum model, the state of a quantum system (composed of qubits) is represented by a vector in a complex Hilbert space. Quantum operations, or "quantum gates," which are the building blocks of quantum algorithms, are described by unitary linear transformations acting on these state vectors.
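
In the state-vector picture described above, a single qubit is a unit vector in ℂ² and a gate is a 2×2 unitary matrix. The sketch below, assuming NumPy and the usual convention |0⟩ = (1, 0), applies the Hadamard gate to |0⟩, checks that the gate is unitary (U†U = I), and reads off measurement probabilities as squared magnitudes of the amplitudes. It is a classical simulation for illustration, not code for quantum hardware.

```python
import numpy as np

ket0 = np.array([1.0, 0.0], dtype=complex)   # basis state |0>

# Hadamard gate.
H = np.array([[1, 1],
              [1, -1]], dtype=complex) / np.sqrt(2)

# Unitary: H^dagger H = I, so the total probability (vector length) is preserved.
assert np.allclose(H.conj().T @ H, np.eye(2))

state = H @ ket0                     # equal superposition (|0> + |1>) / sqrt(2)
probabilities = np.abs(state) ** 2   # measurement probabilities are squared magnitudes
print("amplitudes:", state)
print("P(0), P(1):", probabilities)  # both 0.5 up to rounding

# H is its own inverse, so applying it twice returns |0>.
print("H H |0> == |0>:", np.allclose(H @ (H @ ket0), ket0))
```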

Many famous quantum algorithms, such as Shor's algorithm for factoring large numbers and Grover's algorithm for searching unsorted databases, are essentially sequences of carefully designed unitary transformations. The Quantum Fourier Transform (QFT), a quantum analogue of the classical Discrete Fourier Transform, is a key linear transformation used in several quantum algorithms. Researchers are actively exploring new quantum algorithms, developing more efficient ways to implement these transformations on quantum hardware, and investigating the mathematical properties of quantum systems using the tools of linear algebra. The interplay between linear algebra and quantum mechanics is driving innovation in both fields.
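
On N basis states, the QFT is the N×N matrix with entries F[j, k] = ω^(jk)/√N, where ω = e^(2πi/N). The sketch below, assuming NumPy, builds that matrix classically for 3 qubits, checks that it is unitary, and compares it with NumPy's FFT routines. Because `np.fft.fft` uses the opposite sign in the exponent, this matrix matches √N · `np.fft.ifft`. A dense simulation like this costs O(N²) work and says nothing about the efficient gate-level circuits used on actual quantum hardware.

```python
import numpy as np

def qft_matrix(n_qubits: int) -> np.ndarray:
    """Dense QFT matrix on N = 2**n_qubits states: F[j, k] = exp(2*pi*i*j*k/N) / sqrt(N)."""
    N = 2 ** n_qubits
    rows, cols = np.meshgrid(np.arange(N), np.arange(N), indexing="ij")
    return np.exp(2j * np.pi * rows * cols / N) / np.sqrt(N)

F = qft_matrix(3)   # 8 x 8 matrix acting on 3 qubits
N = F.shape[0]

# Unitary: the conjugate transpose is the inverse.
assert np.allclose(F.conj().T @ F, np.eye(N))

# NumPy's forward FFT uses exp(-2*pi*i*j*k/N), so the QFT lines up with the inverse FFT.
rng = np.random.default_rng(1)
state = rng.normal(size=N) + 1j * rng.normal(size=N)
state /= np.linalg.norm(state)          # normalize so it is a valid quantum state
assert np.allclose(F @ state, np.sqrt(N) * np.fft.ifft(state))
print("QFT output norm:", np.linalg.norm(F @ state))  # 1 up to rounding: unitaries preserve length
```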

AI-Driven Automated Theorem Proving

Automated Theorem Proving (ATP) is a subfield of artificial intelligence and mathematical logic concerned with developing computer programs that can prove mathematical theorems. While traditionally relying on logical deduction and search algorithms, there's growing interest in leveraging machine learning and AI techniques, including those based on linear algebra, to enhance ATP systems.

For instance, machine learning models can be trained to guide the search for proofs, predict useful lemmas, or select promising inference rules. Representing mathematical expressions, logical formulas, or even entire proof states as vectors in a high-dimensional space allows for the application of techniques like neural networks. These networks often involve linear transformations (weight matrices) in their layers. The goal is to build AI systems that can not only verify proofs but also discover new mathematical knowledge. While still an evolving area, the integration of AI, with its underpinnings in linear algebra, into the domain of automated reasoning and theorem proving holds significant potential for advancing mathematics and other scientific disciplines.
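
The "linear transformations (weight matrices)" inside such networks are ordinary matrix-vector products. The sketch below shows one dense layer, assuming NumPy: the linear map Wx, a bias shift b, then a ReLU nonlinearity. The "proof-state" vectors are random stand-ins for whatever encoding a real system would use, and the layer sizes are arbitrary; this is not the architecture of any particular theorem prover.

```python
import numpy as np

rng = np.random.default_rng(42)

embedding_dim, hidden_dim = 16, 8  # arbitrary sizes for illustration
W = rng.normal(scale=0.1, size=(hidden_dim, embedding_dim))  # weight matrix
b = np.zeros(hidden_dim)                                      # bias vector

def dense_layer(x: np.ndarray) -> np.ndarray:
    """One fully connected layer: linear map W x, shift by b, then ReLU."""
    return np.maximum(W @ x + b, 0.0)

# Hypothetical stand-ins for two encoded proof states.
x = rng.normal(size=embedding_dim)
y = rng.normal(size=embedding_dim)

# The W-part alone is linear: W(2x + 3y) = 2 W x + 3 W y.
assert np.allclose(W @ (2 * x + 3 * y), 2 * (W @ x) + 3 * (W @ y))

h = dense_layer(x)
print("hidden representation shape:", h.shape)  # (8,)
```

The nonlinearity matters: without it, a stack of such layers would collapse into a single affine map, since composing affine maps gives another affine map.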

Advancements in Numerical Analysis

Numerical linear algebra is a branch of numerical analysis focused on developing and analyzing algorithms for solving linear algebra problems on computers. This field is crucial because many real-world applications involve very large matrices and vectors, where direct analytical solutions are infeasible, and computational efficiency and numerical stability are paramount.

Emerging trends in this area include the development of algorithms that can effectively utilize modern parallel computing architectures, such as multi-core CPUs and GPUs. Iterative methods for solving large sparse systems of linear equations (systems where most matrix entries are zero) continue to be refined. Randomized algorithms for matrix computations (e.g., randomized SVD, randomized least squares) are gaining traction because they can often provide approximate solutions much faster than deterministic methods for massive datasets. There's also ongoing research into mixed-precision algorithms that use lower-precision arithmetic for speed while maintaining accuracy, and into developing robust software libraries that implement these advanced techniques, making them accessible to a broader range of scientists and engineers. These advancements directly impact how effectively linear transformations can be applied to solve large-scale problems in science and engineering.
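
To give a flavor of the randomized algorithms mentioned above, here is a minimal randomized SVD built on a random range finder: multiply A by a random Gaussian matrix to sample its range, orthonormalize the result, and take an exact SVD of a much smaller projected matrix. It assumes NumPy; the oversampling amount and the synthetic low-rank test matrix are illustrative choices, and production libraries add refinements (such as power iterations for slowly decaying spectra) that this sketch omits.

```python
import numpy as np

def randomized_svd(A: np.ndarray, rank: int, oversample: int = 10, seed: int = 0):
    """Approximate the top `rank` singular triplets of A from a random sketch."""
    rng = np.random.default_rng(seed)
    m, n = A.shape
    # 1. Sample the range of A: Y = A @ Omega with a Gaussian test matrix.
    Omega = rng.normal(size=(n, rank + oversample))
    Y = A @ Omega
    # 2. Orthonormal basis Q for the sampled range.
    Q, _ = np.linalg.qr(Y)
    # 3. Project A onto that subspace and take a small exact SVD.
    B = Q.T @ A                                  # (rank + oversample) x n
    U_small, s, Vt = np.linalg.svd(B, full_matrices=False)
    U = Q @ U_small
    return U[:, :rank], s[:rank], Vt[:rank]

# Synthetic 2000 x 500 matrix of exact rank 5 (for illustration only).
rng = np.random.default_rng(3)
A = rng.normal(size=(2000, 5)) @ rng.normal(size=(5, 500))

U, s, Vt = randomized_svd(A, rank=5)
s_exact = np.linalg.svd(A, compute_uv=False)[:5]
print("randomized:", s)
print("exact:     ", s_exact)
print("relative reconstruction error:",
      np.linalg.norm(A - (U * s) @ Vt) / np.linalg.norm(A))
```

The expensive step is the product A @ Omega, which touches A with only rank + oversample vectors; the full SVD is applied only to the small matrix B.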

Ethical Considerations in Applications

While linear transformations are powerful mathematical tools with widespread beneficial applications, their use, particularly in areas like machine learning and data analysis, also raises important ethical considerations that practitioners and policymakers must address.

Bias in Machine Learning Transformations

Machine learning models, many of which rely on linear transformations for feature processing or as part of their architecture, can inadvertently learn and even amplify biases present in the training data. If historical data reflects societal biases related to race, gender, age, or other protected characteristics, models trained on this data may perpetuate or exacerbate these biases in their predictions or decisions. For example, a loan application model trained on biased data might unfairly disadvantage certain demographic groups, even if the sensitive attributes themselves are not explicitly used as input features.

Linear transformations used in dimensionality reduction or feature selection can also play a role. If these transformations are optimized solely for predictive accuracy without considering fairness, they might inadvertently discard information crucial for equitable outcomes or create new features that correlate strongly with sensitive attributes in unintended ways. Addressing algorithmic bias requires careful attention to data collection, preprocessing techniques (which may involve specific transformations designed to mitigate bias), model design, and post-hoc auditing. Researchers are actively developing "fairness-aware" machine learning techniques that aim to balance accuracy with equity, sometimes by modifying or constraining the transformations used.

It is crucial for developers and data scientists to be aware of these potential pitfalls and to proactively work towards building fairer and more equitable AI systems. Transparency in how data is transformed and how models make decisions is also a key aspect of responsible AI development.

Debate on Military Applications

Linear algebra and linear transformations are fundamental to many technologies used in modern military applications. This includes areas like target tracking, missile guidance systems, signal processing for radar and sonar, cryptography for secure communications, and the development of autonomous weapons systems. The mathematical precision and computational efficiency offered by linear algebraic methods make them invaluable for these purposes.

However, the use of such technologies in warfare raises profound ethical debates. Concerns exist around the development of lethal autonomous weapons systems (LAWS) that could make life-or-death decisions without human intervention. The potential for errors in complex systems, the accountability for actions taken by autonomous systems, and the risk of escalating conflicts are all serious issues. While linear transformations themselves are neutral mathematical tools, their application in this context requires careful ethical scrutiny and public discourse regarding international treaties, rules of engagement, and the principles of humanitarian law.

Engineers and scientists working in these areas face ethical dilemmas regarding the potential uses of their research and innovations. There is an ongoing discussion within the scientific community and among policymakers about the responsible development and deployment of military technologies that rely on advanced mathematical and computational methods.

Implications for Data Privacy

Linear transformations are often used in data analysis and machine learning to process and extract insights from datasets that may contain sensitive personal information. While techniques like dimensionality reduction (e.g., PCA) can help in anonymizing data to some extent by transforming the original features, they do not always guarantee privacy. It might still be possible to re-identify individuals or infer sensitive attributes from the transformed data, especially if combined with other datasets or auxiliary information.

Furthermore, the process of data transformation itself, if not handled securely, can create vulnerabilities. If the transformation parameters (e.g., the projection matrix in PCA) are compromised, it might be possible to reverse the transformation or gain insights into the original data. Cryptographic techniques, some of which also rely on linear algebraic concepts (like matrix operations in certain encryption schemes), are used to protect data privacy. However, the interplay between data utility (how useful the data is after transformation) and privacy preservation is a delicate balance.
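
The reversal risk can be made concrete. In the sketch below, assuming NumPy and synthetic data, anyone who holds the PCA-reduced records together with the projection matrix and the feature means can map the records back to close approximations of the originals, because reconstruction is just the transposed map followed by adding back the mean. The data and the number of components are illustrative; how close the reconstruction gets depends on how much variance the kept components capture.

```python
import numpy as np

rng = np.random.default_rng(7)

# Synthetic "sensitive" records: 500 rows, 6 correlated features (illustrative only).
latent = rng.normal(size=(500, 2))
mixing = rng.normal(size=(2, 6))
X = latent @ mixing + 0.01 * rng.normal(size=(500, 6))

# Data owner: fit PCA with 2 components and release only the reduced data.
mean = X.mean(axis=0)
_, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
W = Vt[:2].T                    # 6 x 2 projection matrix (orthonormal columns)
released = (X - mean) @ W       # 500 x 2 reduced records

# Anyone who also obtains W and the mean can approximately invert the map.
X_recovered = released @ W.T + mean
relative_error = np.linalg.norm(X - X_recovered) / np.linalg.norm(X)
print("relative reconstruction error:", relative_error)  # small: 2 components capture nearly all variance
```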

Emerging privacy-enhancing technologies (PETs) like homomorphic encryption (which allows computations on encrypted data) and federated learning (which trains models on decentralized data without sharing raw data) are being developed to address these concerns. Understanding the privacy implications of data transformations is crucial for responsible data science and for complying with data protection regulations like GDPR or CCPA.

Frameworks for Responsible Innovation

Given the potential societal impacts of technologies built upon linear transformations and other advanced mathematical concepts, there is a growing call for frameworks that promote responsible innovation. This involves integrating ethical considerations throughout the entire lifecycle of technology development, from initial research and design to deployment and monitoring.

Such frameworks often emphasize principles like fairness, accountability, transparency (FAT), safety, security, and privacy. They may involve conducting ethical impact assessments, engaging with diverse stakeholders (including affected communities), establishing clear lines of responsibility, and implementing mechanisms for oversight and redress. For technologies like AI and machine learning, this includes efforts to ensure that models are explainable (to the extent possible), that biases are identified and mitigated, and that systems are robust and reliable.

Organizations like the World Economic Forum and various academic institutions are actively working on developing and promoting guidelines and best practices for responsible AI and data governance. For individuals working in these fields, a commitment to ethical principles and continuous learning about the societal implications of their work is becoming increasingly important. This includes understanding not just the "how" of applying mathematical tools like linear transformations, but also the "why" and "what if."

Online Learning Strategies for Linear Transformations

For individuals who prefer self-directed study, wish to supplement formal education, or are looking to enhance their professional skills, online learning offers a wealth of resources for mastering linear transformations. A structured approach can make this journey more effective and rewarding.

OpenCourser is an excellent platform to begin your search for online courses, offering a vast catalog of options. You can browse mathematics courses or search specifically for "linear algebra" to find resources tailored to your learning style and goals. Don't forget to check the deals page for potential savings on course enrollments.

Designing Your Self-Study Curriculum

When embarking on self-study for linear transformations, creating a well-thought-out curriculum is key. Start by identifying your learning goals: Are you aiming for a basic understanding, or do you need a deep theoretical grasp for advanced applications? This will help determine the depth and breadth of topics to cover.

A typical curriculum should begin with the fundamentals of linear algebra: vectors, matrices, systems of linear equations, determinants. Once these are established, move into vector spaces, subspaces, basis, and dimension. Then, you can formally introduce linear transformations, their properties (kernel, image, rank-nullity theorem), and their matrix representations. Finally, cover eigenvalues, eigenvectors, and their applications. For a more applied focus, include topics like orthogonality, least squares, and specific transformations used in your field of interest (e.g., Fourier transforms for signal processing, geometric transformations for graphics).
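
As a worked example of matrix representations and the rank-nullity theorem, consider differentiation on polynomials of degree at most 3. It is a linear transformation, and in the basis (1, x, x², x³) its matrix is built by writing the derivative of each basis polynomial as a column of coordinates. The sketch below, assuming NumPy, builds that matrix, differentiates one polynomial, and checks that rank + nullity equals the dimension 4 (the kernel is exactly the constant polynomials).

```python
import numpy as np

degree = 3
n = degree + 1  # dimension of the space of polynomials of degree <= 3

# Column j holds the coordinates of d/dx (x^j) = j * x^(j-1) in the basis 1, x, x^2, x^3.
D = np.zeros((n, n))
for j in range(1, n):
    D[j - 1, j] = j

print(D)

# p(x) = 5 + 3x - 2x^2 + x^3, stored as coefficients of 1, x, x^2, x^3.
p = np.array([5.0, 3.0, -2.0, 1.0])
print("p'(x) coefficients:", D @ p)  # 3 - 4x + 3x^2

# The kernel contains the constants: the derivative of 1 is 0.
constant = np.array([1.0, 0.0, 0.0, 0.0])
assert np.allclose(D @ constant, 0)

rank = np.linalg.matrix_rank(D)
print("rank:", rank)  # 3, so nullity = 4 - 3 = 1 (just the constants)
```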

Select primary learning resources, such as a good textbook or a comprehensive online course series. Supplement these with other materials like video lectures, interactive tutorials, and problem sets. Set a realistic schedule, breaking down topics into manageable weekly or daily goals. Regularly review and test your understanding to ensure concepts are sinking in. The OpenCourser Learner's Guide offers valuable tips on creating a self-study plan and staying disciplined.

Adopting Project-Based Learning Approaches

Project-based learning is an incredibly effective way to solidify your understanding of linear transformations and see their practical relevance. Instead of just solving textbook problems, working on projects allows you to apply concepts in a more integrated and meaningful way. This approach helps bridge the gap between theory and application.

Consider projects that align with your interests. If you're into computer graphics, try implementing basic 2D or 3D transformations (rotation, scaling, translation) on simple shapes using a programming language like Python with a graphics library. Note that translation on its own is not a linear transformation; graphics code usually expresses it as a matrix multiplication by working in homogeneous coordinates. If machine learning is your focus, you could implement Principal Component Analysis (PCA) from scratch or apply it to a dataset to reduce its dimensionality. Other project ideas include building a simple recommendation system using matrix factorization, simulating a physical system using systems of linear equations, or even creating a program to encrypt/decrypt messages using matrix transformations.
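
For the encryption idea, one classic choice is the Hill cipher, which encrypts pairs of letters by multiplying them by a key matrix modulo 26. The sketch below is a teaching toy only; the Hill cipher is easily broken and should not protect real data. It assumes NumPy, Python 3.8+ (for `pow` with a negative exponent and a modulus), uppercase messages using only A to Z, and an even message length. The key matrix is an arbitrary assumption that works because its determinant, 9, is invertible modulo 26.

```python
import numpy as np

# Hypothetical 2x2 key; det = 3*5 - 3*2 = 9, and gcd(9, 26) = 1, so it is invertible mod 26.
KEY = np.array([[3, 3],
                [2, 5]])

def inverse_mod26(K: np.ndarray) -> np.ndarray:
    """Inverse of a 2x2 integer matrix modulo 26, via the adjugate formula."""
    a, b = int(K[0, 0]), int(K[0, 1])
    c, d = int(K[1, 0]), int(K[1, 1])
    # pow(x, -1, m) computes a modular inverse; it raises ValueError if none exists.
    det_inv = pow((a * d - b * c) % 26, -1, 26)
    adjugate = np.array([[d, -b],
                         [-c, a]])
    return (det_inv * adjugate) % 26

def hill_transform(K: np.ndarray, text: str) -> str:
    """Map A-Z to 0-25, multiply each consecutive letter pair by K mod 26, map back."""
    if len(text) % 2 != 0:
        raise ValueError("text length must be even (pad with an extra letter)")
    nums = np.array([ord(ch) - ord("A") for ch in text])
    pairs = nums.reshape(-1, 2).T          # each column is one letter pair
    out = (K @ pairs) % 26
    return "".join(chr(int(v) + ord("A")) for v in out.T.flatten())

message = "HELPME"
ciphertext = hill_transform(KEY, message)
decrypted = hill_transform(inverse_mod26(KEY), ciphertext)
print(ciphertext, decrypted)
```

Decryption is the same function with the inverse key, which is the point of the exercise: undoing a linear transformation means applying its inverse, here computed in modular arithmetic rather than over the real numbers.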

Start with small, manageable projects and gradually increase complexity as your skills grow. The process of figuring out how to apply the mathematical concepts to a tangible problem, debugging your code, and interpreting the results will deepen your understanding far more than passive learning alone. Don't be afraid to consult online resources or forums if you get stuck; the problem-solving process itself is a valuable learning experience. Some universities also publish example projects as part of their free course materials (MIT OpenCourseWare is a well-known source), and these can serve as inspiration.

Supplementing Formal Education with Online Resources

Online courses and resources can be a fantastic supplement to formal university education in linear algebra. University courses often move at a fixed pace and may not cater to every individual's learning style. Online platforms can offer alternative explanations, interactive visualizations, and additional practice problems that can help clarify difficult concepts.

If you find a particular topic in your university course challenging, you can search for online lectures or tutorials specifically addressing that concept. Platforms like Khan Academy, Coursera, edX, and Udemy host numerous linear algebra courses taught by different instructors, some of whom might present the material in a way that resonates better with you. For example, courses listed on OpenCourser, such as those from Georgia Tech or UT Austin, offer different perspectives. Many online courses also feature auto-graded quizzes and assignments, providing immediate feedback that can be very helpful for learning.

Furthermore, online resources can provide exposure to applications of linear algebra that might not be covered in depth in a theoretical university course. You can find courses or tutorials on how linear algebra is used in specific software (like MATLAB or Python's NumPy/SciPy libraries) or in particular domains (like machine learning or finance). This can help you connect the abstract concepts to practical skills valued in the job market. Using OpenCourser's "Save to list" feature can help you curate a collection of supplementary resources.

Leveraging Community-Driven Learning Resources

Learning, especially a subject as abstract as linear algebra, doesn't have to be a solitary endeavor. Engaging with community-driven learning resources can provide support, motivation, and diverse perspectives. Online forums like Stack Exchange (Mathematics and Cross Validated sections), Reddit (e.g., r/learnmath, r/linearalgebra), or specific discussion boards associated with online courses can be invaluable. You can ask questions when you're stuck, help others (which reinforces your own understanding), and see how different people approach problems.

Many online learning platforms have built-in communities where students can interact. Participating in these discussions, sharing insights, and collaborating on challenging problems can enhance the learning experience. There are also numerous blogs, YouTube channels, and open-source projects dedicated to mathematics education where you can find explanations, tutorials, and discussions. Some educators and enthusiasts create interactive visualizations or coding examples that they share freely, offering alternative ways to engage with the material.

Consider forming or joining a study group, either online or in person if possible. Discussing concepts with peers, working through problems together, and explaining ideas to each other can significantly improve comprehension and retention. The collective intelligence and mutual support of a learning community can make the journey of mastering linear transformations more enjoyable and successful.

Frequently Asked Questions (Career Focus)

For those exploring careers related to linear transformations, several common questions arise regarding their importance, industry demand, and skill development.

Is knowledge of linear transformations essential for data science careers?

Yes, a solid understanding of linear transformations and broader linear algebra concepts is generally considered essential for a successful career in data science. Data scientists frequently work with high-dimensional datasets, which are often represented as vectors and matrices. Linear transformations are at the heart of many fundamental data science techniques. For example, Principal Component Analysis (PCA), a widely used dimensionality reduction method, is based on finding an orthogonal linear transformation that maps data to a new coordinate system where the greatest variances lie on the first few axes.

Many machine learning algorithms, a central part of a data scientist's toolkit, are built on linear algebra. Linear regression, logistic regression, support vector machines (SVMs), and the foundational operations within neural networks all involve linear transformations (often in the form of matrix multiplications). Understanding these transformations helps data scientists not only to use these algorithms effectively but also to interpret their results, diagnose problems, and even develop custom solutions. While some high-level libraries might abstract away the direct manipulation of matrices, a conceptual understanding of what's happening "under the hood" is crucial for effective modeling and problem-solving. For aspiring data scientists, exploring data science courses on OpenCourser can provide a structured learning path.
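To see where the linear transformation sits inside a neural network, here is a minimal sketch of a single fully connected layer. The weights and inputs are randomly generated placeholders, and `linear_part` and `dense_layer` are hypothetical names for illustration. The matrix product satisfies the two defining properties (additivity and homogeneity), while the bias term makes the full layer affine rather than strictly linear, and the ReLU adds a nonlinearity on top.

```python
import numpy as np

rng = np.random.default_rng(42)
W = rng.normal(size=(4, 3))   # hypothetical weights: a map from R^3 to R^4
b = rng.normal(size=4)        # hypothetical bias vector

def linear_part(x):
    # The matrix-vector product W @ x is a linear transformation.
    return W @ x

def dense_layer(x):
    # A typical fully connected layer: affine map (W @ x + b) followed by ReLU.
    return np.maximum(0.0, W @ x + b)

u = rng.normal(size=3)
v = rng.normal(size=3)
c = 2.5

# Additivity and homogeneity hold for the matrix part.
print(np.allclose(linear_part(u + v), linear_part(u) + linear_part(v)))  # True
print(np.allclose(linear_part(c * u), c * linear_part(u)))               # True
# The bias makes W @ x + b affine: it does not send the zero vector to zero.
print(np.allclose(W @ np.zeros(3) + b, np.zeros(4)))                    # False
```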

Which industries value expertise in linear transformations the most?

Expertise in linear transformations and linear algebra is highly valued across a diverse range of industries. The technology sector is a major employer, with roles in software engineering (especially for graphics, simulation, and scientific computing), machine learning, artificial intelligence, and data science all requiring these skills. Companies developing everything from search engines and social media platforms to self-driving cars and virtual reality systems rely on linear algebra.

The engineering disciplines (aerospace, mechanical, electrical, civil, biomedical) extensively use linear algebra for modeling, analysis, and design. This includes applications in control systems, signal processing, structural analysis, fluid dynamics, and robotics. The finance industry, particularly in quantitative finance ("quant") roles, uses linear algebra for portfolio optimization, risk management, and algorithmic trading. Other notable industries include game development (for graphics and physics engines), animation, telecommunications (for signal processing and network optimization), and scientific research in fields like physics, chemistry, and biology (for modeling and data analysis). Even areas like operations research and logistics use linear programming and optimization techniques rooted in linear algebra.

How does knowledge of linear transformations impact salary potential?

While it's difficult to isolate the direct salary impact of knowing "linear transformations" specifically, this knowledge is a core component of broader skill sets (like those in data science, machine learning engineering, or specialized software development) that are in high demand and often command competitive salaries. Roles that require a strong mathematical background, including a deep understanding of linear algebra, are typically more specialized and harder to fill, which can drive up compensation. For example, according to ZipRecruiter, salaries for jobs explicitly mentioning "Linear Algebra" vary widely, but specialized roles like "Linear Algebra Primitives Product Manager" at companies like NVIDIA can have base salary ranges well into six figures. Positions like Data Scientist or Machine Learning Engineer, where linear algebra is a foundational skill, are also known for their high earning potential, as noted by various industry salary surveys from sources like Robert Half or Glassdoor.

Generally, the more advanced and specialized the application of linear algebra (e.g., in cutting-edge AI research, quantum computing, or complex financial modeling), the higher the potential salary. However, salary is also influenced by factors like years of experience, level of education (B.S., M.S., Ph.D.), geographic location, the specific industry, and the size and type of the employing organization. Possessing a strong grasp of linear transformations enhances your analytical capabilities and problem-solving skills, making you a more valuable asset in many technical and quantitative roles, which in turn positively influences earning potential.

Can self-taught professionals compete with degree holders in fields requiring linear transformations?

Yes, self-taught professionals can certainly compete with degree holders in fields requiring knowledge of linear transformations, especially in the technology sector. While a formal degree provides structured learning and a recognized credential, many employers, particularly in areas like software development, data science, and machine learning, increasingly value demonstrated skills, practical experience, and a strong portfolio over specific academic qualifications alone. The key for self-taught individuals is to rigorously learn the material, build tangible projects that showcase their understanding and application of linear transformations, and be able to articulate their knowledge effectively during interviews.

Online learning platforms, open-source textbooks, and community forums provide ample resources for dedicated individuals to master linear algebra to a high level. Building a portfolio of projects (e.g., on GitHub) where you've implemented algorithms using linear algebra, contributed to open-source software, or solved real-world (or realistic) problems can be very compelling to employers. Certifications from reputable online course providers can also help validate skills. The ability to pass technical interviews, which often include problem-solving sessions related to linear algebra concepts (especially for roles in AI/ML or graphics), is a critical factor where self-taught individuals can prove their competence. It requires discipline and initiative, but the path is certainly viable. Many companies are more focused on what you can do than solely on how you learned to do it.

What adjacent skills enhance career prospects in areas utilizing linear transformations?

Beyond a solid understanding of linear transformations and core linear algebra, several adjacent skills significantly enhance career prospects. Strong programming skills are paramount, particularly in languages commonly used for numerical computation and data analysis, such as Python (with libraries like NumPy, SciPy, pandas, and scikit-learn), R, MATLAB, or C++. Familiarity with data structures and algorithms is also crucial, especially for roles involving software development or efficient data processing.

For data-centric roles, skills in statistics, probability, and data visualization are highly complementary. Experience with databases (SQL and NoSQL) and data manipulation tools is also beneficial. In machine learning, understanding various ML algorithms, model evaluation techniques, and deep learning frameworks (like TensorFlow or PyTorch) is important. For computer graphics or game development, knowledge of graphics APIs (like OpenGL or DirectX), shaders, and 3D modeling software can be advantageous. More broadly, strong problem-solving abilities, analytical thinking, good communication skills (to explain complex technical concepts to non-technical audiences), and the ability to work effectively in a team are soft skills that are universally valued and can greatly boost career progression.

How can one demonstrate competency in linear transformations during job applications and interviews?

Demonstrating competency in linear transformations during job applications and interviews requires a multi-faceted approach. On your resume and cover letter, highlight relevant coursework (mentioning specific topics like vector spaces, matrix theory, eigenvalues, etc.), academic projects, or any personal projects where you applied linear algebra concepts. If you've used tools like Python/NumPy, MATLAB, or R for linear algebra tasks, be sure to list them. Quantify your achievements whenever possible (e.g., "Developed a Python script using NumPy to perform PCA on a dataset of X dimensions, reducing dimensionality by Y% while retaining Z% of variance").
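If you use a resume line like the PCA example above, it helps to know exactly how the "Y%" and "Z%" figures would be computed. The following is a minimal sketch under stated assumptions: the dataset is synthetic (random features with hypothetical per-feature scales), the 95% threshold is an arbitrary example, and `components_for_variance` is a hypothetical helper, not a library function. It picks the smallest number of principal components whose cumulative explained variance reaches the threshold.

```python
import numpy as np

def components_for_variance(X, threshold=0.95):
    """Smallest number of principal components whose cumulative
    explained variance is at least `threshold`, plus the fraction retained."""
    X_centered = X - X.mean(axis=0)
    # Singular values only; their squares are proportional to per-component variance.
    S = np.linalg.svd(X_centered, compute_uv=False)
    cumulative = np.cumsum(S**2) / np.sum(S**2)
    # First index where the cumulative fraction reaches the threshold.
    k = int(np.searchsorted(cumulative, threshold)) + 1
    k = min(k, len(S))  # guard against floating-point round-off near 1.0
    return k, cumulative[k - 1]

rng = np.random.default_rng(1)
scales = np.array([10.0, 5.0, 2.0, 1.0, 0.5, 0.3, 0.2, 0.1, 0.1, 0.1])
X = rng.normal(size=(500, 10)) * scales   # hypothetical 10-feature dataset

k, retained = components_for_variance(X, threshold=0.95)
reduction = 100 * (1 - k / X.shape[1])
print(f"kept {k} of {X.shape[1]} dimensions "
      f"({reduction:.0f}% reduction), retaining {100 * retained:.1f}% of variance")
```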

During interviews, especially technical ones, be prepared to solve problems that test your understanding of linear transformations. This might involve whiteboard coding to implement a matrix operation, explaining the geometric interpretation of a transformation, discussing eigenvalues/eigenvectors, or applying concepts to a hypothetical scenario related to the job role. Practice explaining concepts clearly and concisely. For example, be ready to explain what a linear transformation is, why it's useful, and provide examples. If you have a portfolio (e.g., on GitHub), be prepared to walk through relevant projects, explaining your design choices and how linear algebra played a role. Showing enthusiasm for the subject and an ability to connect theoretical knowledge to practical applications will make a strong impression.
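As an illustration of the kind of whiteboard exercise described here, the sketch below implements matrix-vector multiplication in plain Python and uses it to check two geometric interpretations. The function names are hypothetical, and the specific exercise is an assumed example rather than a question any particular employer asks. A 90° counterclockwise rotation should send the x-axis onto the y-axis, and a horizontal shear leaves the vector (1, 0) unchanged, which makes it an eigenvector with eigenvalue 1.

```python
import math

def mat_vec(A, x):
    """Multiply an m-by-n matrix A (given as a list of rows) by a length-n vector x."""
    if any(len(row) != len(x) for row in A):
        raise ValueError("number of columns in A must equal len(x)")
    # Each output entry is the dot product of one row of A with x.
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def rotation(theta):
    """2-D matrix for a counterclockwise rotation by theta radians."""
    return [[math.cos(theta), -math.sin(theta)],
            [math.sin(theta),  math.cos(theta)]]

R = rotation(math.pi / 2)
print(mat_vec(R, [1.0, 0.0]))  # approximately [0.0, 1.0]: x-axis rotated onto y-axis

S = [[1.0, 1.0],
     [0.0, 1.0]]               # horizontal shear
print(mat_vec(S, [1.0, 0.0]))  # [1.0, 0.0]: unchanged, an eigenvector with eigenvalue 1
print(mat_vec(S, [0.0, 1.0]))  # [1.0, 1.0]: the y-axis is tilted
```

Writing the loop by hand (rather than calling NumPy) is deliberate here: in an interview it shows you understand that each output coordinate is a dot product of a matrix row with the input vector.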

Understanding and applying linear transformations is a journey that combines abstract reasoning with practical problem-solving. Whether you are just starting to explore this fascinating area of mathematics or are looking to apply its principles in your career, the subject offers continuous opportunities for growth and discovery. With dedication and the right resources, mastering linear transformations can unlock a deeper understanding of the mathematical structures that underpin much of our technological world.