Command Line Tools: A Comprehensive Guide

Command line tools are programs run through a command line interface (CLI), a powerful and enduring method of interacting with computers. At its core, a CLI is a text-based interface used for running programs, managing computer files, and interacting with the operating system. Users type commands into a terminal or console window, and the computer executes them. This direct line of communication with the machine offers a level of control and efficiency that graphical user interfaces (GUIs) sometimes cannot match, especially for complex or repetitive tasks. For those new to the concept, imagine it as having a direct conversation with your computer, telling it precisely what to do, step by step, using a specific language of commands.

Working with command line tools can be an engaging and empowering experience. For many, the thrill comes from the ability to automate complex workflows with simple scripts, manage remote servers seamlessly, or delve deep into the workings of an operating system. The precision and speed offered by the CLI can transform tedious manual processes into swift, automated actions. Furthermore, proficiency in command line tools is often a gateway to a deeper understanding of computer systems and a fundamental skill in numerous technology fields, from software development and system administration to data science and cybersecurity. This direct interaction fosters a problem-solving mindset and provides a tangible sense of mastery over the digital environment.

Introduction to Command Line Tools

This section will explore the foundational aspects of command line tools, their historical context, and their enduring relevance in the modern technological landscape. Understanding these elements is crucial for anyone looking to harness the power of the CLI, whether for professional development or personal enrichment.

Defining the Command Line Interface (CLI)

A Command Line Interface (CLI) is a means of interacting with a computer program where the user issues commands to the program in the form of successive lines of text. This interaction typically occurs in a terminal or console window. Unlike Graphical User Interfaces (GUIs), which rely on visual elements like icons and menus, CLIs are entirely text-driven. The user types a command, presses Enter, and the system responds, often by displaying text output or performing an action.

The basic purpose of a CLI is to provide a direct and efficient way to control a computer's operating system or specific applications. It allows for precise instructions and can handle tasks that might be cumbersome or impossible through a GUI. For instance, running a program with specific parameters, manipulating files in bulk, or automating sequences of operations are tasks well-suited to the CLI.

While the initial learning curve might seem steeper compared to a GUI, the power and flexibility gained are substantial. Many advanced operations and system configurations are only accessible through the command line, making it an indispensable tool for developers, system administrators, and power users.

A Brief History: From Teletypes to Terminals

The origins of command line interfaces are deeply intertwined with the history of computing itself. In the early days of computing, before graphical displays became common, teletypewriters (TTYs) served as the primary means of input and output. Users would type commands, and the computer's response would be printed on paper. These early systems laid the groundwork for the CLI paradigm.

As video display terminals (VDTs) replaced teletypes, the command line moved from paper to screen, but the fundamental interaction remained text-based. Operating systems like Unix, developed in the late 1960s and early 1970s, heavily relied on the CLI and introduced many of the foundational command line tools and concepts still in use today, such as pipes and redirection. MS-DOS, popular on early personal computers, also featured a command line interface (command.com).

Even with the rise of GUIs, initiated by systems like the Xerox Alto and popularized by Apple's Macintosh and Microsoft Windows, the CLI has not only survived but thrived. Modern operating systems like Linux, macOS (which is Unix-based), and Windows (with PowerShell and Windows Terminal) all feature powerful CLIs. This endurance speaks to the CLI's efficiency, power, and importance in the world of computing.

Advantages Over Graphical User Interfaces (GUIs)

While GUIs are often praised for their ease of use and visual appeal, CLIs offer distinct advantages in many scenarios. One primary benefit is resource efficiency. CLIs typically consume far fewer system resources (CPU, memory) than GUIs, making them ideal for servers or resource-constrained environments.

Another significant advantage is scriptability and automation. Repetitive tasks can be easily automated by writing scripts – sequences of commands – that the CLI can execute. This is immensely powerful for system administration, software development (e.g., build processes, deployments), and data processing. GUIs, while sometimes offering macro capabilities, rarely match the flexibility and power of CLI scripting.

Furthermore, CLIs often provide more granular control over system functions and applications. Many tools offer a vast array of options and flags that can be combined in complex ways, allowing for highly specific operations not easily exposed in a GUI. For remote access and management, CLIs are often preferred due to their lower bandwidth requirements and ease of use over SSH (Secure Shell).

Ubiquitous Use Cases Across Industries

Command line tools are not confined to a niche corner of the tech world; their applications span a multitude of industries. In software development, CLIs are indispensable for version control (e.g., Git), compiling code, running tests, and managing dependencies with package managers. Web developers routinely use CLIs for tasks like managing front-end build processes or interacting with cloud services.

System administrators and DevOps engineers live in the command line, using it to manage servers, configure networks, monitor system health, and deploy applications. The ability to remotely manage hundreds or even thousands of servers efficiently is only practical through CLI tools and automation scripts. You can explore many technical skills related to these roles on OpenCourser.

In data science and research, CLIs are used for manipulating large datasets, running analytical programs, and managing high-performance computing clusters. Bioinformatics, for example, heavily relies on command line tools for genomic sequence analysis. Even in fields like cybersecurity, penetration testers and security analysts use specialized CLI tools for network scanning, vulnerability assessment, and forensic analysis. The versatility of command line tools makes them a cornerstone of modern technology infrastructure.

Core Concepts and Terminology

To effectively wield command line tools, a grasp of fundamental concepts and terminology is essential. This section breaks down the building blocks of CLI interaction, providing a clear understanding for those newer to this environment, particularly university students and early-career professionals looking to solidify their technical foundations.

Understanding Shell Environments

A shell is a program that takes commands typed by the user and gives them to the operating system to perform. It's the primary interface between the user and the operating system's kernel. When you open a terminal or command prompt, you are interacting with a shell. Different operating systems and user preferences have led to various shell programs.

Some of the most common shells include Bash (Bourne Again SHell), which is the default on most Linux distributions and was the default on macOS until macOS Catalina (10.15) switched to Zsh. Zsh (Z Shell) is known for its extensive customization capabilities and features like improved tab completion. For Windows users, PowerShell is a powerful, object-oriented shell and scripting language, while the traditional Command Prompt (cmd.exe) offers more basic functionality. Each shell has its own syntax nuances and built-in commands, but many core principles are shared.

Choosing a shell can depend on the operating system you are using and the specific tasks you need to perform. For many, Bash provides a robust and widely available starting point. Learning the basics of your chosen shell is the first step towards CLI mastery.

For those specifically interested in Windows, learning about its command line environments is also valuable.

Grasping Command Syntax and Structure

Commands in a CLI typically follow a common structure: `command [options] [arguments]`. The command itself is the name of the program you want to run (e.g., `ls` for listing files, `cp` for copying files). Options (often called flags or switches) modify the behavior of the command. They usually start with a hyphen (`-`) for short options (e.g., `-a`) or two hyphens (`--`) for long options (e.g., `--all`, which GNU `ls` accepts as the long form of `-a`).

Arguments specify what the command should operate on, such as filenames, directories, or URLs. For example, in the command `cp source.txt destination.txt`, `cp` is the command, and `source.txt` and `destination.txt` are arguments. Some commands can take multiple options and multiple arguments.

Understanding this basic syntax is crucial for interpreting documentation and constructing effective commands. Most commands provide help documentation, often accessible with `--help` (or `-h` for some tools, though `-h` can mean something else, as in `ls -h`), or through the `man` (manual) command (e.g., `man ls`). Learning to read these manual pages is a key skill for exploring the full capabilities of any command line tool.
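As a rough illustration of the `command [options] [arguments]` structure, the sketch below runs the same command with and without an option. The directory and the file `fruits.txt` are hypothetical and created only for this example.

```shell
# Hypothetical sample file in a throwaway directory.
workdir=$(mktemp -d)
printf 'pear\napple\nfig\n' > "$workdir/fruits.txt"

# command + argument: sort the lines of the file alphabetically.
sort "$workdir/fruits.txt"

# command + option + argument: -r reverses the sort order.
sort -r "$workdir/fruits.txt"

# Short options can usually be given separately or combined:
# "ls -l -a" and "ls -la" ask for the same long listing with hidden files.
ls -l -a "$workdir"
ls -la "$workdir"

# Capture the first line of the reversed output for later use.
first_reversed=$(sort -r "$workdir/fruits.txt" | head -n 1)
echo "First line when reversed: $first_reversed"
```

The file name is passed as an argument in every call; only the options change what the command does with it.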

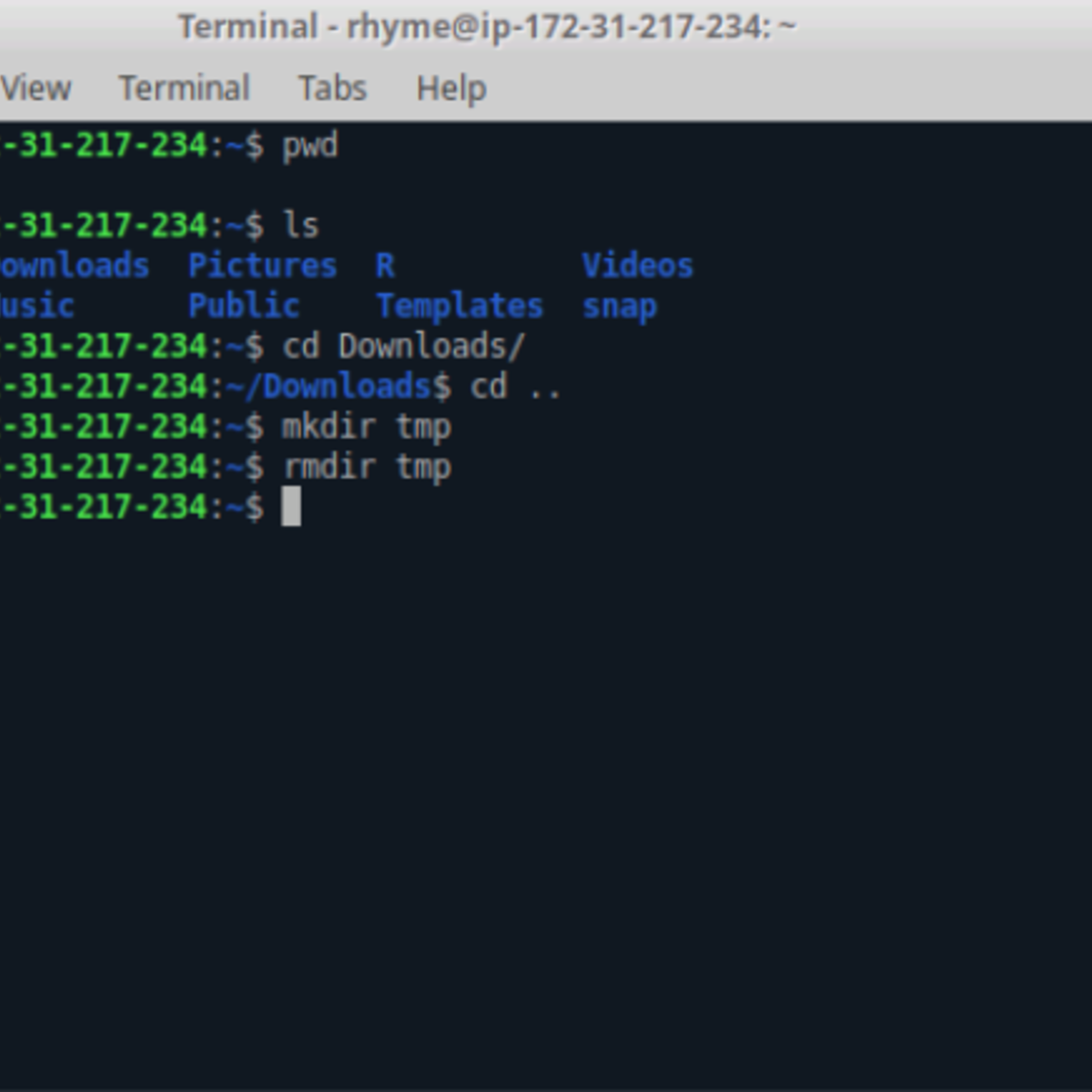

Navigating the File System

One of the most frequent uses of the command line is navigating and managing the file system. Commands like `pwd` (print working directory) show your current location. `ls` (list) displays the contents of the current directory. To change directories, you use the `cd` (change directory) command, followed by the path to the desired directory (e.g., `cd /home/user/documents`).

Paths can be absolute (starting from the root directory, e.g., `/usr/local/bin`) or relative (starting from the current directory, e.g., `../project_files`). In a relative path, `..` means the parent directory and `.` means the current directory. Other essential file system commands include `mkdir` (make directory), `rmdir` (remove an empty directory), `cp` (copy files/directories), `mv` (move or rename files/directories), and `rm` (remove files, or directories when used with `-r`).

Mastering these navigation and file manipulation commands forms the bedrock of CLI usage. It allows you to efficiently locate, organize, and manage your files and projects directly from the terminal.
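The commands above can be tried safely in a scratch directory. The following is a minimal sketch; the directory and file names (`projects`, `demo`, `notes.txt`, and so on) are hypothetical and chosen only for illustration.

```shell
# Work inside a throwaway directory so nothing important is touched.
playground=$(mktemp -d)
cd "$playground"

mkdir -p projects/demo             # create nested directories
cd projects/demo                   # relative path from the current directory
pwd                                # print the current location

printf 'hello\n' > notes.txt       # create a small file to work with
cp notes.txt notes_copy.txt        # copy it
mv notes_copy.txt ../archive.txt   # move and rename into the parent (..)

cd ..                              # go up one level, into projects/
ls                                 # shows archive.txt and demo
rm demo/notes.txt                  # remove a file
rmdir demo                         # remove the now-empty directory

remaining=$(ls)                    # only archive.txt is left
echo "Remaining: $remaining"
```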

Many introductory courses cover file system navigation extensively.

Input/Output Redirection and Piping

A powerful feature of many shells is the ability to redirect input and output (I/O). By default, commands take input from the keyboard (standard input, stdin) and send output to the screen (standard output, stdout). Errors are typically sent to another stream called standard error (stderr). Redirection allows you to change these defaults.

The `>` operator redirects stdout to a file, overwriting the file if it exists (e.g., `ls -l > file_list.txt`). The `>>` operator appends stdout to a file. The `<` operator redirects stdin from a file (e.g., `sort < unsorted_names.txt`). You can also redirect stderr, often using `2>`.

Piping, represented by the `|` operator, allows you to send the stdout of one command directly to the stdin of another command. This enables you to chain commands together to perform complex operations. For example, `ls -l | grep '\.txt'` lists all files in long format, and `grep` then filters that output to show only lines containing ".txt". The backslash matters: in a regular expression an unescaped `.` matches any character, so `grep ".txt"` would also match a name like `mytxt`. This concept of building "pipelines" is a cornerstone of the Unix philosophy and CLI productivity.
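The redirection operators and a pipe can be seen together in one short session. This is a sketch under assumed names: the `data` and `out` directories and the files in them are hypothetical.

```shell
workdir=$(mktemp -d)
mkdir "$workdir/data" "$workdir/out"
touch "$workdir/data/report.txt" "$workdir/data/notes.txt" "$workdir/data/image.png"

# > overwrites the target file with the command's stdout.
ls "$workdir/data" > "$workdir/out/listing.txt"

# >> appends instead of overwriting.
echo "appended line" >> "$workdir/out/listing.txt"

# < feeds a file to the command's stdin.
sort < "$workdir/out/listing.txt"

# 2> captures stderr. This ls fails because the path does not exist;
# "|| true" keeps a strict (set -e) script from aborting here.
ls "$workdir/data/missing" 2> "$workdir/out/errors.txt" || true

# | connects the stdout of ls to the stdin of grep; -c counts matching lines.
txt_count=$(ls "$workdir/data" | grep -c '\.txt$')
echo "Text files: $txt_count"
```

Writing the output files to a separate directory keeps them from showing up in the listing being captured.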

Environment Variables and Configuration

Environment variables are dynamic named values that can affect the way running processes will behave on a computer. They are part of the environment in which a process runs. For example, the `PATH` environment variable tells the shell which directories to search for executable programs. The `HOME` variable typically points to the current user's home directory.

You can view environment variables (e.g., with `env` or `printenv`), set them for the current session (e.g., `export MY_VARIABLE="value"` in Bash), or set them permanently by modifying shell configuration files. These files, such as `.bashrc` or `.zshrc` for Bash and Zsh respectively, or profile settings for PowerShell, are scripts that run when the shell starts. They allow users to customize their shell environment, set aliases (shortcuts for longer commands), define functions, and modify the prompt.

Understanding how to inspect and modify environment variables and configure your shell environment is crucial for tailoring the CLI to your workflow and ensuring that tools and scripts run correctly.
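One way to see the difference between an exported variable and a plain shell variable is to ask a child process what it can see. In this sketch, `GREETING` and `LOCAL_ONLY` are hypothetical variable names.

```shell
# Exported variables are inherited by child processes.
export GREETING="hello"          # hypothetical variable name
printenv GREETING

# A child shell sees the exported value...
child_view=$(sh -c 'printf "%s" "$GREETING"')

# ...but not a plain shell variable that was never exported.
LOCAL_ONLY="not exported"        # hypothetical variable name
child_local=$(sh -c 'printf "%s" "${LOCAL_ONLY:-unset}"')

# PATH is a colon-separated list of directories; command -v shows
# which executable the shell resolves for a given name.
first_path_dir=${PATH%%:*}
sort_location=$(command -v sort)

echo "child sees GREETING=$child_view, LOCAL_ONLY=$child_local"
echo "first PATH entry: $first_path_dir; sort resolves to: $sort_location"
```

Placing the `export` line in a startup file such as `.bashrc` or `.zshrc` would make the variable available in every new session rather than only the current one.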

Essential Command Line Tools

Beyond the basic shell commands, a vast ecosystem of specialized command line tools exists to perform a wide array of tasks. This section introduces some of the most essential and widely used utilities that practitioners and learners will encounter. Focusing on cross-platform availability where possible, these tools demonstrate the CLI's versatility and power in real-world applications.

Text Processing Titans: grep, awk, and sed

Manipulating text is a common task in computing, and the command line offers powerful utilities for this. `grep` (Global Regular Expression Print) is used to search for patterns in text. It can scan files or standard input and print lines that match a given regular expression. For example, `grep "error" logfile.txt` prints every line in `logfile.txt` that contains the string "error" (matching is case-sensitive unless `-i` is given).

`awk` is a versatile programming language designed for text processing and data extraction. It processes files line by line and can perform actions based on patterns found in each line. It's particularly adept at handling structured text data, like CSV files or log files, allowing for complex data manipulation and reporting directly from the command line.

`sed` (Stream EDitor) is used for performing basic text transformations on an input stream (a file or input from a pipeline). It can perform operations like search and replace, deletion, and insertion. For instance, `sed 's/old_text/new_text/g' input.txt > output.txt` replaces all occurrences of "old_text" with "new_text" in `input.txt` and saves the result to `output.txt`. Mastering these tools, often in combination, provides incredible power for text manipulation.
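To make the three tools concrete, the sketch below runs each of them against the same small log. The log contents, the file name `app.log`, and the column layout (date, level, component, number) are invented for illustration.

```shell
workdir=$(mktemp -d)

# Hypothetical log: date, level, component, and a numeric value.
cat > "$workdir/app.log" <<'EOF'
2024-01-01 INFO start 12
2024-01-01 error disk 30
2024-01-02 INFO sync 7
2024-01-02 error net 5
EOF

# grep: count the lines that contain the string "error".
error_lines=$(grep -c "error" "$workdir/app.log")

# awk: for lines whose second field is "error", add up the fourth field.
error_total=$(awk '$2 == "error" { sum += $4 } END { print sum }' "$workdir/app.log")

# sed: replace every "error" with "ERROR" and write the result to a new file.
sed 's/error/ERROR/g' "$workdir/app.log" > "$workdir/app_upper.log"

echo "error lines: $error_lines, summed value: $error_total"
```

Note that `sed` leaves `app.log` untouched here; the transformed text goes to a separate file through redirection.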

System Monitoring Sentinels: top, htop, ps

Understanding what your system is doing is crucial for troubleshooting and performance tuning. `top` (Table Of Processes) provides a dynamic real-time view of a running system. It displays system summary information and a list of tasks currently being managed by the kernel, ordered by CPU usage by default. It's an invaluable tool for quickly identifying resource-hungry processes.

`htop` is an interactive process viewer and system monitor that is often considered a more user-friendly alternative to `top`. It provides a colorized display, allows scrolling through the process list, and makes it easier to send signals to processes (e.g., to kill or renice them). While `top` is almost universally available, `htop` might need to be installed separately on some systems.

The `ps` (Process Status) command displays information about currently running processes. It can show a snapshot of the processes at a specific moment. With various options, `ps` can provide detailed information, including process IDs (PIDs), user ownership, CPU and memory usage, and the command that started the process (e.g., `ps aux` on Linux systems shows all processes with detailed information).

Network Navigators: curl, wget, netstat

Interacting with networks and the internet is another common CLI task. `curl` (Client URL) is a versatile tool for transferring data with URLs. It supports a wide range of protocols, including HTTP, HTTPS, FTP, and more. It can be used to download files, test APIs, send form data, and much more, making it a favorite among developers and system administrators for network-related scripting and debugging.

`wget` is a non-interactive network downloader. It's primarily used for retrieving files from the web using HTTP, HTTPS, and FTP. `wget` can download files recursively, resume aborted downloads, and work in the background, making it suitable for downloading large files or entire websites.

`netstat` (Network Statistics) displays network connections (both incoming and outgoing), routing tables, and a number of network interface statistics. It can help identify which services are listening on which ports, active connections, and troubleshoot network connectivity issues. On modern Linux systems, `ss` (Socket Statistics) is often recommended as a replacement for `netstat` as it's generally faster and provides more information.

Learning to use these tools effectively is crucial for anyone working with networked systems.

Version Control Vanguard: Git CLI

Version control is essential for modern software development, and Git is the de facto standard. While graphical Git clients exist, the Git Command Line Interface offers the most power and control. Commands like `git clone`, `git add`, `git commit`, `git push`, `git pull`, `git branch`, and `git merge` are fundamental to the daily workflow of millions of developers.

The Git CLI allows for precise control over every aspect of the version control process, from managing complex branching strategies to resolving merge conflicts and inspecting repository history. Understanding the Git CLI is often a prerequisite for effective collaboration on software projects and for contributing to open-source projects. Many cloud platforms and CI/CD systems also integrate directly with Git repositories, often configured and managed via command line interactions.
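A basic local workflow with these commands might look like the sketch below. It assumes `git` is installed, works in a throwaway repository, and uses a hypothetical identity and file name; `git clone`, `git push`, and `git pull` are left out because they need a remote.

```shell
repo=$(mktemp -d)
cd "$repo"

git init -q
# Hypothetical identity, stored only in this repository's configuration.
git config user.name "Example User"
git config user.email "user@example.com"

echo "first draft" > README.txt
git add README.txt                    # stage the new file
git commit -q -m "Add README"         # record it in history

# Remember the default branch name (it varies between installations).
main_branch=$(git symbolic-ref --short HEAD)

git checkout -q -b feature            # create and switch to a new branch
echo "more detail" >> README.txt
git commit -q -a -m "Expand README"   # commit the change to the tracked file

git checkout -q "$main_branch"        # return to the original branch
git merge -q feature                  # bring in the feature branch's commit

commit_count=$(git rev-list --count HEAD)
echo "Commits on $main_branch: $commit_count"
```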

Proficiency with the Git CLI is a highly sought-after skill. You can find official documentation and resources at git-scm.com/doc.

Package Powerhouses: apt, brew, pip

Package managers automate the process of installing, upgrading, configuring, and removing software packages. They simplify software management significantly. On Debian-based Linux distributions (like Ubuntu), `apt` (Advanced Package Tool) is the primary command line package manager (e.g., `sudo apt update`, `sudo apt install package-name`). Other Linux distributions have their own, like `yum` or `dnf` for Fedora/RHEL.

For macOS, Homebrew (`brew`) is a popular open-source package manager that allows users to easily install software that Apple doesn’t provide. It simplifies the installation of thousands of command line tools and applications (e.g., `brew install wget`).

For programming languages, language-specific package managers are common. For Python, `pip` (Pip Installs Packages) is used to install and manage software packages written in Python (e.g., `pip install requests`). Similarly, Node.js has `npm` or `yarn`, Ruby has `gem`, etc. Understanding the relevant package managers for your operating system and development stack is crucial for an efficient workflow.

Advanced CLI Techniques

Once comfortable with the essentials, users can explore advanced techniques to unlock even greater productivity and control. These methods are particularly relevant for technical professionals, researchers, and anyone looking to automate complex tasks or manage sophisticated systems. This section delves into scripting, regular expressions, remote management, containerization, and performance optimization using the command line.

The Art of Scripting and Automation

Scripting is the process of writing a sequence of commands in a file that can be executed by the shell. This allows for the automation of repetitive or complex tasks. Shell scripts (e.g., Bash scripts with a `.sh` extension) can include variables, loops, conditional logic, and functions, making them powerful tools for system administration, build automation, data processing, and more.

Beyond shell scripting, many programming languages like Python, Perl, or Ruby are also excellent for writing more complex command line tools and automation scripts. These languages often provide robust libraries for interacting with the operating system, network, and various data formats. Learning to write effective scripts can save enormous amounts of time and reduce the potential for human error in routine operations.
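As a small example of the building blocks mentioned above (variables, a loop, a conditional, and a function), the following sketch moves log files into an archive folder. The function name `archive_logs` and the file names are hypothetical.

```shell
workdir=$(mktemp -d)
touch "$workdir/a.log" "$workdir/b.log" "$workdir/notes.txt"

# Move every *.log file in the given directory into an "archive"
# subdirectory and print how many files were moved.
archive_logs() {
    src_dir=$1
    archive_dir="$src_dir/archive"
    mkdir -p "$archive_dir"
    moved=0
    for file in "$src_dir"/*.log; do
        # If no .log file exists, the pattern stays unexpanded and this
        # check skips it.
        if [ -f "$file" ]; then
            mv "$file" "$archive_dir/"
            moved=$((moved + 1))
        fi
    done
    echo "$moved"
}

moved_count=$(archive_logs "$workdir")
echo "Archived $moved_count log file(s)"
```

Saved to a file (for example with a `.sh` extension) and made executable, the same lines could be rerun whenever the task comes up again.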

A solid understanding of programming principles can be beneficial for advanced scripting.

Harnessing Regular Expressions for Pattern Matching

Regular expressions (regex or regexp) are powerful sequences of characters that define a search pattern. They are used by many command line tools (like `grep`, `sed`, `awk`) and programming languages to find, match, and manage text. While the syntax can seem cryptic at first, mastering regular expressions unlocks a sophisticated level of text manipulation and data extraction.

With regular expressions, you can search for complex patterns, validate input formats (like email addresses or phone numbers), extract specific pieces of information from unstructured text, and perform intricate search-and-replace operations. For example, a regex could find all IP addresses in a log file or identify all lines that start with a specific date format. Online regex testers and debuggers can be very helpful when learning and crafting complex expressions.
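The two examples just mentioned, IP addresses and lines starting with a date, can be sketched with `grep -E`. The log contents are invented, and the IP pattern is deliberately loose: it accepts any four dot-separated groups of one to three digits, so it would also match out-of-range values such as `999.1.1.1`.

```shell
workdir=$(mktemp -d)

# Hypothetical log file for illustration.
cat > "$workdir/access.log" <<'EOF'
2024-03-01 connection from 192.168.1.10
note: maintenance window
2024-03-02 connection from 10.0.0.7 and 10.0.0.8
EOF

# Three groups of "1-3 digits plus a dot", then a final 1-3 digit group.
ip_pattern='([0-9]{1,3}\.){3}[0-9]{1,3}'

# -E enables extended regular expressions; -o prints only the matched text.
ips=$(grep -Eo "$ip_pattern" "$workdir/access.log")
ip_count=$(printf '%s\n' "$ips" | wc -l | tr -d ' ')

# ^ anchors the match to the start of the line: YYYY-MM-DD then a space.
date_lines=$(grep -Ec '^[0-9]{4}-[0-9]{2}-[0-9]{2} ' "$workdir/access.log")

echo "Found $ip_count IP-like strings on $date_lines dated lines"
```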

Many programming and scripting courses will touch upon regular expressions, as they are a fundamental tool in text processing.

Secure Connections: SSH and Remote Server Management

SSH (Secure Shell) is a cryptographic network protocol for operating network services securely over an unsecured network. Its most notable application is for remote login to computer systems by users. Using the `ssh` command line tool, administrators and developers can access and manage servers located anywhere in the world as if they were sitting right in front of them.

Beyond interactive shell access, SSH is also used for securely transferring files (using tools like `scp` - secure copy, or `sftp` - SSH File Transfer Protocol) and for tunneling other network protocols. SSH keys provide a more secure and often more convenient way to authenticate than passwords. Proficiency in using SSH is essential for anyone involved in server administration, cloud computing, or distributed software development.

Managing multiple remote servers often involves scripting SSH commands or using configuration management tools that leverage SSH for secure communication.

Working with Containers: The Docker CLI

Containerization, with Docker being the most prominent platform, has revolutionized how applications are developed, shipped, and run. The Docker CLI is the primary way to interact with the Docker daemon to build, manage, and run containers. Commands like `docker build`, `docker run`, `docker ps`, `docker images`, and `docker compose up` (or the older standalone `docker-compose up`) are central to the container workflow.

The Docker CLI allows developers to define application environments in Dockerfiles, build consistent container images, and run these images across different machines and cloud platforms. This ensures that applications behave the same way regardless of the underlying infrastructure. For microservices architectures and CI/CD pipelines, Docker and its CLI are foundational technologies.

Understanding the Docker CLI is increasingly important for software developers, DevOps engineers, and system administrators.

Optimizing Performance with CLI Tools

Command line tools are not just for managing systems; they are also critical for diagnosing and optimizing performance. Tools like `vmstat`, `iostat`, and `mpstat` provide detailed statistics about virtual memory, I/O activity, and CPU usage, respectively. Profiling tools like `perf` on Linux can help identify performance bottlenecks in applications at a very granular level.

Network performance can be analyzed using tools like `ping`, `traceroute` (or `mtr`), and `iperf`. For web servers, tools like `ab` (Apache Benchmark) or `wrk` can be used for load testing. Many databases also offer command line utilities for monitoring performance and optimizing queries.

Effectively using these tools requires understanding the metrics they provide and how those metrics relate to system and application behavior. This knowledge is vital for ensuring systems run efficiently and reliably, especially under heavy load.

Formal Education Pathways

For those considering an academic route to mastering command line tools and related technologies, several formal education pathways can provide a strong theoretical and practical foundation. University programs in computer science and related fields often integrate command line usage extensively into their curricula.

The Role in Computer Science Degrees

A Bachelor's or Master's degree in Computer Science typically provides a comprehensive understanding of the principles underlying command line interfaces and the operating systems they interact with. Core courses often require students to work in Unix-like environments, compile code from the command line, and use CLI-based development tools.

Students learn about process management, file systems, inter-process communication, and shell scripting, all of which are concepts directly applicable to proficient CLI usage. While not always a dedicated "CLI course," the practical application of these tools is woven throughout many subjects, from software engineering to database management.

These programs aim to develop problem-solving skills and a deep understanding of how computers work, with the CLI being a fundamental tool in that exploration.

Operating Systems Coursework as a Foundation

Courses specifically focused on Operating Systems (OS) are particularly relevant. These courses delve into the architecture and design of operating systems, including the role of the kernel, system calls, memory management, concurrency, and security. A significant portion of practical OS coursework often involves interacting with the system at a low level, frequently through a command line interface.

Students in OS courses might build their own simple shells, implement system utilities, or work with kernel modules, all of which heavily rely on CLI tools for compilation, debugging, and execution. This hands-on experience provides a robust understanding of what happens "under the hood" when a command is typed and executed.

Research Applications: HPC and Data Science

In academic research, particularly in fields requiring significant computational power like High-Performance Computing (HPC) and Data Science, command line tools are indispensable. Researchers often work with remote supercomputing clusters, which are almost exclusively accessed and managed via SSH and command line interfaces. Job submission scripts, data management, and software environment configuration on these clusters are all handled through CLI commands.

In data science, while tools like Jupyter notebooks provide interactive environments, much of the underlying data processing, model training pipeline automation, and interaction with big data frameworks (like Hadoop or Spark) often involves command line operations. Many data science libraries and tools also offer CLI versions for scripting and batch processing.

A strong command of the CLI is therefore crucial for researchers who need to manage large datasets and complex computational workflows efficiently.

University Lab Environments and Supercomputing

University lab environments, especially in engineering, physics, bioinformatics, and computer science departments, frequently rely on Linux or Unix-based systems where command line interaction is the norm. Students gain practical experience using scientific software, compilers, debuggers, and data analysis tools directly from the terminal.

Access to university supercomputing resources further necessitates CLI proficiency. These powerful machines are shared resources, and interacting with their job schedulers (e.g., Slurm, PBS) to submit, monitor, and manage computational tasks is done entirely through command line commands and scripts. This exposure prepares students for research careers and roles in industries that utilize HPC.

This practical experience in academic settings often forms the basis for a lifelong reliance on the power and flexibility of the command line.

CLI in Academic Conferences and Workflows

Even in the context of academic conferences and scholarly publishing, command line tools play a role. For example, typesetting systems like LaTeX, widely used for writing scientific papers and dissertations, are often compiled and managed using command line tools. Version control systems like Git, accessed via its CLI, are invaluable for collaborating on research papers and managing code for experiments.

Furthermore, automating tasks like data visualization generation for papers, managing bibliographies, or preparing presentation materials can be streamlined using scripts and CLI tools. While not always explicitly taught as "conference tools," the underlying CLI skills enhance productivity and efficiency in academic research workflows.

Many of these skills are transferable to any research-intensive career path.

Self-Directed Learning Strategies

For individuals looking to pivot careers, upskill, or learn independently, mastering command line tools is an achievable goal through self-directed learning. A wealth of online resources, communities, and project-based opportunities can guide your journey. This section explores effective strategies for those forging their own path.

Crafting Your Learning Roadmap

Starting your journey into command line tools requires a plan. Begin by identifying your goals: Are you aiming for a specific tech role, looking to automate personal tasks, or simply curious? A clear objective will help you choose the right tools and topics to focus on. For instance, aspiring web developers might prioritize learning Git CLI and tools for managing Node.js environments, while future system administrators would focus on Bash scripting, system monitoring tools, and network utilities on Linux.

Break down your learning into manageable chunks. Start with the basics: understanding the shell, file system navigation, and common commands. Gradually move to more complex topics like scripting, text processing, and specific tools relevant to your goals. Many online platforms offer structured courses, which can serve as a good foundation for your roadmap. OpenCourser is an excellent resource for finding such courses across various providers.

Remember that consistency is key. Dedicate regular time to learning and practice, even if it's just for short periods each day. Track your progress and adjust your roadmap as you discover new interests or encounter challenges.

Contributing to Open-Source Projects

Once you have a basic understanding, contributing to open-source projects is an excellent way to gain practical experience and learn from others. Many open-source projects rely heavily on command line tools for development, testing, and deployment. Start by finding projects that interest you on platforms like GitHub or GitLab.

You can begin with small contributions, such as fixing bugs, improving documentation (which often involves understanding how the software is built and run from the command line), or writing simple scripts. This not only hones your CLI skills but also allows you to collaborate with experienced developers, understand real-world coding practices, and build a portfolio of work. Many projects have contributor guidelines and welcoming communities to help newcomers get started.

Engaging with open source can be a powerful motivator and provide tangible evidence of your growing skills to potential employers.

Setting Up Your Local Development Playground

Practice is paramount. Set up a local environment where you can experiment freely without fear of breaking a critical system. If you're on Windows, consider installing the Windows Subsystem for Linux (WSL) to get a Linux environment. Alternatively, use a virtual machine (VM) with a Linux distribution like Ubuntu or Fedora. macOS users already have a Unix-based terminal.

Once your environment is ready, start using the command line for everyday tasks. Manage your files, install software, and write small scripts to automate things you do regularly. The more you use it, the more comfortable and proficient you'll become. Don't be afraid to experiment with different commands and options – that's how you learn. If you work in a VM, take a snapshot before risky experiments so you can roll back any change you regret.
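
One low-risk way to experiment is to work inside a throwaway scratch directory. The sketch below assumes a system where `mktemp -d` works without arguments (true of current Linux distributions and macOS); the helper name `make_sandbox` and the sample file names are made up for illustration.

```shell
#!/bin/sh
# make_sandbox is a hypothetical helper name, not a standard command.
# It creates a uniquely named practice directory and prints its path.
make_sandbox() {
    dir=$(mktemp -d) || return 1
    # Populate the directory with a few small files to practice on
    mkdir "$dir/notes" "$dir/logs"
    printf 'first line\nsecond line\n' > "$dir/notes/todo.txt"
    printf 'INFO start\nERROR disk full\n' > "$dir/logs/app.log"
    printf '%s\n' "$dir"
}

sandbox=$(make_sandbox)
ls -R "$sandbox"                 # explore the layout
cat "$sandbox/notes/todo.txt"    # read a file
rm -r "$sandbox"                 # clean up when finished
```

Because everything lives under a temporary path, a mistyped `rm` or `mv` inside the sandbox cannot damage your real files.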

This hands-on approach will solidify your understanding far more effectively than passive learning alone.

Exploring CLI-Focused Certification Programs

While not always necessary, certifications can provide a structured learning path and a credential to showcase your skills. Several organizations offer certifications related to Linux administration (such as LPIC or CompTIA Linux+), cloud platforms (such as AWS Certified Cloud Practitioner or Azure Fundamentals, whose material touches on CLI usage), or specific tools. These programs typically have defined curricula and exams that cover command line knowledge to varying degrees.

Before pursuing a certification, research its relevance to your career goals and its recognition in your target industry. Certifications can be particularly helpful for career changers as they provide a formal validation of acquired skills. Many online courses on platforms discoverable through OpenCourser align with certification exam objectives or offer their own certificates of completion, which can be added to your professional profiles. The OpenCourser Learner's Guide offers tips on how to best leverage such certificates.

Even if you don't take the exam, studying the material for a certification can be a valuable learning experience.

Leveraging Community Resources and Documentation

The command line community is vast and supportive. Online forums like Stack Exchange (Stack Overflow, Super User, Unix & Linux), Reddit communities (e.g., r/linux, r/commandline), and dedicated Discord servers or Slack channels offer places to ask questions, share knowledge, and learn from peers. Don't hesitate to seek help when you're stuck, but also try to solve problems yourself first – the process of troubleshooting is a great learning opportunity.

Official documentation is your best friend. Most command line tools come with manual pages (`man` command) or help flags (`--help`). Websites for specific tools (like the Git documentation or Docker docs) provide comprehensive guides and tutorials. Learning to read and understand technical documentation is a crucial skill in itself. Many open-source projects also have extensive wikis and community-contributed documentation.
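
As a small illustration of the two lookup paths just mentioned, the sketch below tries the manual page first and falls back to `--help`. The function name `show_help` is hypothetical, and not every tool supports `--help`, so treat this as a convenience wrapper rather than a guaranteed lookup.

```shell
# show_help is a hypothetical helper, not a standard command.
# It prefers the manual page and falls back to the tool's own --help output.
show_help() {
    if man "$1" >/dev/null 2>&1; then
        man "$1"
    else
        "$1" --help
    fi
}

# Example calls (left commented out so the snippet has no side effects):
# show_help grep
# show_help git
```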

Engaging with these resources will not only help you overcome specific challenges but also keep you updated on new tools and techniques.

Career Progression and Opportunities

Proficiency in command line tools is not just a technical skill; it's a gateway to a wide array of career opportunities and progression paths in the technology sector. From entry-level support roles to senior leadership positions, the ability to effectively use and automate via the CLI is highly valued.

Starting with Entry-Level Technical Support

Many individuals begin their tech careers in technical support roles. Even in these positions, basic command line skills can be beneficial, especially when troubleshooting network issues (using `ping`, `ipconfig` on Windows or `ifconfig`/`ip` on Unix-like systems, and `traceroute` or its Windows counterpart `tracert`), diagnosing software problems, or remotely accessing user machines. As support roles become more specialized, such as supporting Linux systems or specific server applications, CLI proficiency becomes increasingly important.

These roles provide valuable experience in problem-solving, customer interaction, and understanding how systems work in real-world scenarios. They can serve as a stepping stone to more advanced technical positions as you continue to build your CLI and other technical skills. Ambition and a willingness to learn can quickly differentiate you in these roles.

For those starting out, building a solid foundation is key. Even short, focused courses can provide an immediate boost.

Advancing to Mid-Career DevOps/SRE Positions

For mid-career professionals, strong command line skills are fundamental for roles like DevOps Engineer or Site Reliability Engineer (SRE). These roles focus on automating and streamlining the software development lifecycle, managing infrastructure as code, and ensuring the reliability and scalability of systems. The CLI is the primary interface for interacting with cloud platforms, container orchestration tools (like Kubernetes), configuration management systems (Ansible, Chef, Puppet), and CI/CD pipelines.

Scripting in languages like Bash, Python, or Go to automate operational tasks is a daily activity. Monitoring system health, deploying updates, and responding to incidents often involve intricate command line work. The demand for skilled DevOps and SRE professionals is high, and robust CLI capabilities are a core requirement.
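
A typical small automation of this kind is a disk usage check. The sketch below is one possible shape, assuming the POSIX `df -P` column layout (capacity in the fifth column, mount point in the sixth) and mount points without spaces; the name `check_disk` is made up for illustration.

```shell
# check_disk is a hypothetical helper. It reads `df -P` output on stdin and
# prints every filesystem whose usage is at or above the given percentage.
check_disk() {
    threshold=$1
    awk -v limit="$threshold" 'NR > 1 {
        use = $5
        sub(/%/, "", use)    # strip the percent sign: "91%" becomes "91"
        if (use + 0 >= limit + 0) print $6 " is at " $5
    }'
}
```

It would typically be run as `df -P | check_disk 90`, for example from a scheduled job that sends the output to whoever is on call.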

Continuous learning is vital in these evolving fields. Consider exploring topics related to cloud computing and automation.

Meeting Cloud Engineering Requirements

Cloud Engineers, who design, implement, and manage solutions on cloud platforms like AWS, Azure, or Google Cloud, rely heavily on command line interfaces. Each major cloud provider offers its own powerful CLI (e.g., AWS CLI, Azure CLI, gcloud CLI) for managing all aspects of their services, from provisioning virtual machines and storage to configuring networks and security policies.

While cloud consoles provide graphical interfaces, CLIs are essential for automation, scripting complex deployments, and integrating cloud services into DevOps workflows. Infrastructure as Code (IaC) tools are likewise applied from the command line: Terraform through its own `terraform` CLI, and CloudFormation through the AWS CLI. A deep understanding of the relevant cloud CLI is non-negotiable for serious cloud engineering roles.

Specialized courses can help you master cloud-specific CLIs.

Cybersecurity Applications and CLI Prowess

In the field of Cybersecurity, command line tools are indispensable for various specializations. Penetration testers use a plethora of CLI tools for network scanning (e.g., Nmap), vulnerability exploitation (e.g., the Metasploit Framework, commonly driven from its `msfconsole` interface), and password cracking. Digital forensics analysts use command line utilities for disk imaging, log analysis, and data recovery.

Security administrators use CLIs to configure firewalls, manage intrusion detection systems, and script security audits. Even tasks like analyzing malware or monitoring network traffic often involve specialized command line tools. The precision and control offered by the CLI are critical when dealing with sensitive security operations. Information about career paths in cybersecurity can often be found through resources like the U.S. Bureau of Labor Statistics.

Pathways to Leadership in Infrastructure Teams

For those with deep technical expertise in command line tools, scripting, and system architecture, leadership roles within infrastructure teams become attainable. Positions like Infrastructure Manager, Director of IT Operations, or Chief Technology Officer (in smaller organizations) often require a strong understanding of the technologies their teams are working with, including the command line tools that underpin much of modern IT infrastructure.

While these roles involve more strategic planning and people management, the ability to understand technical discussions, guide architectural decisions, and troubleshoot complex issues often draws upon a solid foundation in CLI and systems engineering. A leader who has "been in the trenches" with command line tools can often command greater respect and make more informed decisions. Salary benchmarks and detailed skill demand metrics for such roles can be researched on professional networking sites and through industry salary surveys.

Consider roles such as System Administrator as a foundational step towards these leadership positions.

The Role of Command Line Tools in Modern Automation

Command line tools are not relics of the past; they are central to modern automation across various technological domains. From software delivery to managing vast cloud infrastructures and deploying sophisticated AI models, the CLI is often the engine driving efficiency, consistency, and speed.

Powering CI/CD Pipelines

Continuous Integration and Continuous Deployment/Delivery (CI/CD) pipelines are the backbone of modern software development, enabling teams to build, test, and release software rapidly and reliably. Command line tools are fundamental to nearly every stage of a CI/CD pipeline.

Version control systems like Git are accessed via their CLIs to fetch the latest code. Build tools (e.g., Maven, Gradle, npm scripts) are executed as command line commands to compile code and package applications. Testing frameworks are often invoked from the command line. Deployment tools and scripts, whether deploying to on-premises servers or cloud environments, heavily utilize CLIs to orchestrate the release process. Tools like Jenkins, GitLab CI, GitHub Actions, and others primarily operate by executing sequences of command line instructions.
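
The "sequence of command line instructions" idea can be sketched in a few lines of shell. This is not how any particular CI product is implemented; it is a minimal illustration in which `run_step` and `pipeline` are hypothetical names and `echo` stands in for the real fetch, build, test, and deploy commands.

```shell
# run_step is a hypothetical helper: announce a stage, run its command,
# and report failure so that later stages can be skipped.
run_step() {
    name=$1
    shift
    printf '==> %s\n' "$name"
    if ! "$@"; then
        printf 'FAILED: %s\n' "$name" >&2
        return 1
    fi
}

# Stages are chained with && so the first failure stops the pipeline.
# The echo commands are placeholders for real tools.
pipeline() {
    run_step "fetch"  echo "version control fetch would run here" &&
    run_step "build"  echo "build tool would run here" &&
    run_step "test"   echo "test suite would run here" &&
    run_step "deploy" echo "deployment script would run here"
}

pipeline
```

Chaining with `&&` mirrors the fail-fast behavior most pipelines rely on: a broken build never reaches the deploy stage.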

The ability to script and automate these steps via the CLI is what makes the speed and reliability of CI/CD possible.

Driving Infrastructure as Code (IaC)

Infrastructure as Code (IaC) is the practice of managing and provisioning computing infrastructure through machine-readable definition files, rather than physical hardware configuration or interactive configuration tools. Popular IaC tools like Terraform, Ansible, Chef, Puppet, and Pulumi all have powerful command line interfaces.

Using these CLIs, engineers can define their entire infrastructure – servers, networks, databases, load balancers – in code. This code can then be versioned, tested, and applied to create or update environments consistently and repeatedly. The CLI is used to plan changes, apply configurations, and manage the state of the infrastructure. This approach significantly reduces manual effort, minimizes configuration drift, and enables scalable and reproducible environments, especially in the cloud.
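
With Terraform as the example, the plan-then-apply cycle might look like the sketch below. The `run` wrapper and `DRY_RUN` variable are assumptions added here so the snippet only prints the commands by default and can be tried on a machine without Terraform installed; `tfplan` is an arbitrary file name.

```shell
# DRY_RUN=1 (the default in this sketch) prints each command instead of
# executing it. Set DRY_RUN=0 to run the commands for real.
DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# init prepares the working directory, plan saves a proposed change set,
# and apply executes exactly the change set that was saved.
provision() {
    run terraform init &&
    run terraform plan -out=tfplan &&
    run terraform apply tfplan
}

provision
```

Saving the plan to a file and applying that file means the reviewed change set is the one that is executed, which is part of what makes the workflow repeatable.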

Many cloud automation courses focus heavily on the CLI aspects of IaC tools.

Facilitating AI/ML Model Deployment

The deployment of Artificial Intelligence (AI) and Machine Learning (ML) models into production environments often involves sophisticated pipelines where command line tools play a crucial role. Data preprocessing scripts, model training jobs, and model packaging are frequently executed as command line tasks. Tools for MLOps (Machine Learning Operations) often provide CLIs for versioning models, managing experiments, and deploying models as APIs.

Containerization tools like Docker, managed via its CLI, are widely used to package ML models and their dependencies for consistent deployment. Orchestration platforms like Kubernetes, also often managed via its `kubectl` CLI, are used to scale and manage these containerized ML services. Even interacting with cloud-based AI/ML platforms for training or inference typically involves using their respective CLIs for automation and integration into larger workflows. The ability to script these interactions is essential for robust MLOps practices. The Linux Foundation hosts many projects and initiatives relevant to AI/ML infrastructure.

Optimizing Costs Through Automation

Command line driven automation can lead to significant cost optimizations. By scripting routine administrative tasks, organizations reduce the manual hours spent on maintenance, deployment, and monitoring. This frees up skilled engineers to focus on higher-value work. Automated scaling, often configured and triggered via CLI commands or scripts interacting with cloud provider APIs, ensures that resources are provisioned only when needed and de-provisioned when not, optimizing cloud spend.

Automated testing and deployment pipelines, orchestrated by CLI tools, reduce the cost of errors by catching bugs earlier in the development cycle and ensuring more reliable releases. Furthermore, efficient resource utilization, achieved through CLI-based monitoring and optimization of systems, can lower hardware and energy costs. In essence, the precision and repeatability offered by command line automation translate directly into operational efficiencies and financial savings.

Market trends, such as those reported by firms like Gartner or Forrester, consistently highlight the growing importance of automation and DevOps tooling, where CLI skills are paramount.

Tracking Market Trends in DevOps Tooling

The market for DevOps tooling is dynamic and constantly evolving, with a strong emphasis on automation, cloud-native technologies, and developer productivity. Many of the leading and emerging tools in this space are either primarily CLI-driven or have robust CLIs as a core component for integration and automation. This includes tools for version control, CI/CD, containerization, orchestration, configuration management, monitoring, and security.

Trends indicate a continued shift towards "everything as code," where not just infrastructure but also security policies, monitoring configurations, and even compliance are defined and managed through code, often applied and audited using command line interfaces. The rise of serverless computing and edge computing also brings new CLI tools and workflows for deploying and managing functions and applications in these environments.

Staying abreast of these trends, often by following industry publications, attending conferences, and experimenting with new tools (many of which can be found via OpenCourser's programming section), is important for professionals who rely on command line skills to remain effective and competitive.

Frequently Asked Questions (Career Focus)

Navigating a career in technology often brings up questions about skill relevance and future prospects. This section addresses common queries related to command line proficiency, aiming to provide clarity for those considering or advancing careers where CLI skills are pertinent.

Is CLI proficiency required for cloud engineering roles?

Yes, unequivocally. While cloud platforms offer sophisticated graphical user interfaces (GUIs) through their web consoles, true operational efficiency, automation, and scalability in cloud engineering are achieved through their Command Line Interfaces (CLIs) and Infrastructure as Code (IaC) tools, which are themselves CLI-driven. Tasks like scripting bulk operations, integrating cloud services into CI/CD pipelines, managing complex deployments, and performing deep troubleshooting often necessitate using the AWS CLI, Azure CLI, or Google Cloud SDK's gcloud commands. Employers in cloud engineering expect candidates to be comfortable and proficient with these tools. Without strong CLI skills, a cloud engineer's ability to automate and manage at scale is severely limited.

Many cloud-focused courses emphasize CLI usage.

Can CLI skills compensate for a lack of a formal degree?

In many parts of the tech industry, practical skills and demonstrable experience can indeed carry significant weight, sometimes compensating for the lack of a traditional four-year computer science degree. Strong CLI skills, especially when combined with scripting abilities (e.g., Python, Bash), knowledge of version control (Git), and experience with relevant tools (Docker, Kubernetes, cloud CLIs), can make a candidate highly attractive to employers, particularly for roles in DevOps, system administration, and cloud operations.

A portfolio of projects (e.g., on GitHub), contributions to open-source, or relevant certifications can further showcase your capabilities. While a formal degree provides a broad theoretical foundation, many companies prioritize proven ability to perform the job. If you can demonstrate through projects and technical interviews that you possess the necessary CLI prowess and problem-solving skills, the absence of a degree becomes less of a barrier, especially in fast-moving fields where practical, up-to-date knowledge is paramount. However, for some research-oriented or very large traditional enterprises, a degree might still be a firm requirement.

It's about proving you can do the work, and CLI mastery is a very tangible way to do that.

How can I demonstrate CLI expertise in job interviews?

Demonstrating CLI expertise in interviews goes beyond simply listing tools on your resume. Be prepared for practical, hands-on technical assessments where you might be asked to perform tasks in a live terminal environment. This could involve navigating a file system, manipulating text files using `grep`, `sed`, or `awk`, writing a short shell script to automate a task, troubleshooting a (simulated) system issue, or using Git commands to manage a repository.
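
To give a feel for the kind of text manipulation task that may come up, here is a short sketch using `grep`, `sed`, and `awk` on an invented log excerpt. The log lines and variable names are made up for illustration.

```shell
# A made-up log excerpt to practice on
sample_log='2024-01-01 10:00:01 INFO  login user=alice
2024-01-01 10:00:05 ERROR timeout user=bob
2024-01-01 10:00:09 INFO  logout user=alice
2024-01-01 10:00:12 ERROR denied user=bob'

# grep: count the lines that contain ERROR
error_count=$(printf '%s\n' "$sample_log" | grep -c 'ERROR')

# sed: replace each user name with a placeholder
redacted=$(printf '%s\n' "$sample_log" | sed 's/user=[a-z]*/user=<redacted>/')

# awk: tally events per user, taking the name from the last field
per_user=$(printf '%s\n' "$sample_log" |
    awk '{ split($NF, kv, "="); n[kv[2]]++ }
         END { for (u in n) print u, n[u] }' | sort)

printf 'errors: %s\n' "$error_count"
printf '%s\n' "$per_user"
```

Being able to explain why each tool was chosen (filtering, substitution, field-based aggregation) tends to matter as much as getting the command right.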

During verbal discussions, clearly articulate how you've used CLI tools to solve specific problems or improve processes in past projects or roles. Provide concrete examples. If you have a portfolio on GitHub with scripts you've written or projects where you've automated tasks using the CLI, make sure to highlight it. Discussing your preferred shell, customizations (`.bashrc`, `.zshrc` aliases and functions), and favorite "power user" commands can also subtly indicate your comfort level and depth of knowledge.
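
For readers who have not customized a shell yet, the lines below show the sort of thing that typically lives in `~/.bashrc` or `~/.zshrc`. The alias and function names (`ll`, `mkcd`, `biggest`) are common conventions or illustrative choices, not built-in commands.

```shell
# Alias: a short name for a longer command
alias ll='ls -alF'

# Function: create a directory (including parents) and move into it
mkcd() {
    mkdir -p "$1" && cd "$1"
}

# Function: list the N largest entries in the current directory (default 5),
# with sizes in kilobytes
biggest() {
    du -sk ./* 2>/dev/null | sort -rn | head -n "${1:-5}"
}
```

Aliases suit simple renaming, while functions are the better fit as soon as arguments or more than one command are involved.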

Confidence, clarity, and the ability to explain your thought process while working through a CLI problem are key.

How can I future-proof my CLI skills against AI advancements?

Rather than seeing AI as a threat to CLI skills, view it as a potential enhancement. AI tools are emerging that can help generate CLI commands or scripts based on natural language prompts, or assist in debugging. However, understanding the underlying principles of how shells, commands, and systems work remains crucial to effectively use, validate, and troubleshoot what AI generates. AI might help you write a complex `awk` command faster, but you still need to understand `awk` to know if the command is correct and efficient for your specific problem.
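
One practical habit is to check a generated command against a tiny input whose answer you already know. The sketch below assumes a hypothetical request ("sum the third column of a CSV, skipping the header") and a hypothetical `awk` command returned for it; the function name and sample data are made up.

```shell
# Suppose this awk command was suggested for summing the third CSV column.
sum_third_column() {
    awk -F',' 'NR > 1 { total += $3 } END { print total + 0 }'
}

# Feed it a small sample where the correct answer is easy to work out by hand:
# 10 + 20 + 30 = 60
expected=60
actual=$(printf 'name,qty,price\na,1,10\nb,2,20\nc,3,30\n' | sum_third_column)

if [ "$actual" = "$expected" ]; then
    echo "check passed: $actual"
else
    echo "check failed: expected $expected, got $actual" >&2
fi
```

Knowing enough `awk` to read `-F','`, `NR > 1`, and the `END` block is what lets you design a meaningful check in the first place.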

Future-proofing involves focusing on the fundamental concepts: problem-solving, automation logic, system architecture, and security principles. Learn to use AI as a productivity multiplier, not a replacement for understanding. Moreover, many AI/MLOps roles themselves require strong CLI skills for model deployment, data pipeline management, and interacting with AI infrastructure. The ability to integrate CLI workflows with AI tools will likely become a valuable skill in itself.

Continuous learning and adapting to new tools, including AI-assisted ones, while maintaining a strong grasp of fundamentals is the best approach.

Is there a place for CLI usage in non-technical careers?

While CLI proficiency is a hallmark of technical roles, its applications are gradually spreading into less traditionally technical fields. For example, in digital humanities, researchers might use command line tools for text analysis of large corpora, managing digital archives, or converting file formats. Data journalists may use CLI tools for data wrangling and preliminary analysis. Even some marketing roles involving data analytics or automation might touch upon CLI tools for specific tasks.
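
As a flavor of what such text analysis can look like, the sketch below counts the most frequent words in whatever text is piped into it. The function name `word_freq` is hypothetical, and the approach is deliberately simple: it treats any run of letters as a word and ignores everything else.

```shell
# word_freq is a hypothetical helper: print the N most frequent words
# read from stdin (default 10), one "count word" pair per line.
# Steps: split into one word per line, lowercase, drop empty lines,
# count duplicates, sort by count, keep the top N, tidy the spacing.
word_freq() {
    tr -cs '[:alpha:]' '\n' |
        tr '[:upper:]' '[:lower:]' |
        sed '/^$/d' |
        sort | uniq -c | sort -rn |
        head -n "${1:-10}" |
        awk '{ print $1, $2 }'
}
```

A call such as `word_freq 20 < novel.txt` (with `novel.txt` standing in for any plain-text file) would list the twenty most common words.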

However, for most purely non-technical careers, deep CLI expertise is unlikely to be a primary requirement. Basic familiarity might be helpful in some niche scenarios, but GUI-based tools will generally suffice. The benefit for non-technical professionals is more about understanding the capabilities and being able to communicate effectively with technical teams who do rely on the CLI. If your role involves managing technical projects or products, a conceptual understanding of what's possible with the CLI can be advantageous.

For those interested in a gentle introduction from a less technical starting point, simplified courses can be helpful.

How should I balance GUI and CLI skills in modern workflows?

The most effective approach in modern workflows is often a pragmatic balance between GUI and CLI skills. Neither is universally superior; they excel in different areas. GUIs are generally better for tasks requiring visual feedback, exploration, or infrequent complex operations where memorizing commands is impractical (e.g., image editing, complex UI design, browsing data visually).

CLIs shine for automation, repetition, precise control, managing remote systems, and resource-constrained environments. Many modern tools offer both. For example, cloud platforms have web consoles (GUI) and powerful CLIs. Integrated Development Environments (IDEs) are GUIs but often have built-in terminals for CLI tasks. The key is to understand the strengths and weaknesses of each and use the most appropriate tool for the job. Often, a hybrid approach is best: using a GUI for an overview or initial setup, then diving into the CLI for automation, scripting, or fine-grained control. Being proficient in both makes you a more versatile and effective professional.

Mastering command line tools is a journey that offers significant rewards in terms of efficiency, control, and career opportunities. Whether you are just starting or looking to deepen your expertise, the path involves continuous learning and practical application. The resources and pathways discussed here provide a solid foundation for anyone aspiring to harness the power of the command line. As technology evolves, the principles of direct system interaction and automation that the CLI embodies will remain profoundly relevant.