Functions

Introduction to Functions

Functions are a fundamental concept that appears across numerous fields, most notably mathematics and computer science. At its core, a function describes a relationship between a set of inputs and a set of permissible outputs, with the property that each input is related to exactly one output. Think of it as a rule or a machine: you put something specific in, and you get something specific out, consistently every time for the same input. This simple idea provides a powerful way to model relationships, processes, and transformations in the world around us.

The utility of functions extends far beyond abstract mathematics. In programming, functions allow developers to break down complex problems into smaller, manageable, and reusable pieces of code. In engineering, functions model how systems behave in response to different conditions. Even in everyday life, we encounter relationships that behave approximately like functions, such as the relationship between the amount of fuel put into a car (input) and the distance it can travel (output), or the time spent studying (input) and the grade received on a test (output). These are idealizations, since real outcomes also depend on other factors, but treating them as functions is often a useful first model. Understanding functions unlocks a way of thinking that is precise, analytical, and versatile, forming a cornerstone for logical reasoning and problem-solving in many domains.

What are Functions?

Definition and Core Purpose

A function is formally defined as a relation between a set of inputs, called the domain, and a set of permissible outputs, called the codomain, such that each input is associated with exactly one output. This unique output is often referred to as the value of the function for that specific input. The core purpose of a function is to encapsulate a specific rule or process that transforms inputs into outputs in a predictable and consistent manner. This predictability is key; for any given input from the domain, the function must produce the same single output every time.

Imagine a vending machine. The set of buttons you can press represents the domain (inputs). The set of all possible items inside the machine represents the codomain (potential outputs). When you press a specific button (input), the machine dispenses a specific item (output). This relationship is functional because pressing the 'Soda A' button always gets you 'Soda A', never 'Chips B' or nothing at all (assuming the machine is working correctly and stocked). It wouldn't be a function if pressing one button could randomly give you one of several different items, or if one button corresponded to two different items simultaneously.

This concept allows us to model deterministic processes where an action or state reliably leads to a specific outcome. By defining the input set, the output set, and the rule connecting them, functions provide a precise language for describing dependencies and transformations. This is crucial in fields that require logical rigor and predictability, such as mathematics, physics, engineering, economics, and computer science.

A Brief Historical Overview

The concept of a function, though formalized relatively recently, has roots stretching back to ancient mathematics. Early mathematicians implicitly used functional relationships when studying geometric curves or dependencies between quantities, but without a formal definition. For example, Babylonian astronomers developed tables that related the position of celestial bodies over time, essentially creating tabular functions.

The modern understanding began to take shape in the 17th century with the development of calculus by Isaac Newton and Gottfried Wilhelm Leibniz. They studied continuously changing quantities and the relationships between them, laying the groundwork for analyzing functions describing motion, rates of change, and accumulation. Leibniz introduced the term "function" around 1673 to describe quantities related to curves, like their slope or coordinates.

Over the following centuries, mathematicians like Leonhard Euler, Joseph Fourier, and Augustin-Louis Cauchy refined the concept. Euler introduced the familiar f(x) notation. The need for greater rigor in calculus led to more precise definitions. Peter Gustav Lejeune Dirichlet is often credited with the modern "formal" definition of a function in 1837, emphasizing the arbitrary nature of the rule mapping inputs to outputs, as long as it assigns exactly one output to each input. Later, the development of set theory in the late 19th and early 20th centuries provided the language (domain, codomain, mapping) to express this definition with full generality, cementing the function as a central object in mathematics and logic.

Functions Across Disciplines

The power of the function concept lies in its universality. While rooted in mathematics, its applications permeate virtually every quantitative and logical field. In mathematics itself, functions are ubiquitous, appearing in algebra, calculus, topology, analysis, and more. They describe everything from simple linear relationships (y = 2x + 3) to complex transformations in geometry or the behavior of abstract structures.

In computer science, functions (often called procedures, subroutines, or methods) are the building blocks of programs. They allow programmers to write modular, reusable code, making software development more organized and efficient. Functional programming paradigms even treat computation primarily as the evaluation of mathematical functions. Data structures like hash tables rely heavily on functions (hash functions) to map keys to storage locations.

In engineering and physics, functions model physical laws and system behaviors. For instance, the position of a falling object can be expressed as a function of time, or the voltage across a resistor can be described as a function of current (Ohm's Law). Engineers use functions extensively in simulations, control systems design, and signal processing. Economics uses functions to model supply and demand, cost, revenue, and utility. Even fields like biology use functions to describe population growth or the rate of enzyme reactions. This widespread applicability highlights the function as a fundamental tool for describing and analyzing the world.

ELI5: Functions as Input-Output Machines

Imagine you have a special machine, let's call it the "Doubler Machine." Whatever number you put into this machine, it spits out double that number. If you put in 2, it gives you 4. If you put in 5, it gives you 10. If you put in 100, it gives you 200. This machine is like a function. It takes an input (the number you put in) and gives you a specific output (the doubled number) based on a rule (multiply by 2).

A key thing about function machines is that they are reliable. If you put in the number 5 today, you get 10. If you put the number 5 in tomorrow, you still get 10. It always gives the same output for the same input. Also, it only gives you one output for each input. The "Doubler Machine" won't give you both 10 and 12 when you put in 5; it only gives you 10.

Now, imagine another machine, the "Animal Sound Machine." You tell it an animal (input), and it makes that animal's sound (output). If you say "Cow," it says "Moo." If you say "Cat," it says "Meow." This is also like a function. The input is the animal name, the output is the sound, and the rule is "make the sound associated with this animal." Just like the Doubler Machine, it's reliable (a cat always meows) and gives only one primary sound per animal. Functions are just these reliable input-output rules or machines.

Mathematical Foundations of Functions

The Language of Sets: Domain, Codomain, and Range

To speak precisely about functions in mathematics, we use the language of set theory. A function, formally denoted as f: A → B, involves three key components: two sets, A and B, and a rule, f, that assigns to each element of A exactly one element of B.

The set A is called the domain of the function. It represents the complete collection of all possible valid inputs for the function. The set B is called the codomain. It represents the set of all potential outputs. It's important to note that not every element in the codomain necessarily has to be an output for some input.

A related concept is the range (or image) of the function. The range is the subset of the codomain B that consists of all the actual outputs produced by the function when applied to every element in the domain A. So, while the codomain is the set of possible outputs, the range is the set of achieved outputs. For example, if we define a function f(x) = x² with domain as all real numbers and codomain as all real numbers, the range is only the non-negative real numbers (0 and positive numbers), because squaring a real number never results in a negative number.
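As a small illustration of the difference between codomain and range, the sketch below applies f(x) = x² to a finite sample of integers and collects the outputs that are actually produced. The sample sets and the name `square` are assumptions chosen for the example; the function in the text is defined on all real numbers.

```python
def square(x: int) -> int:
    """The rule f(x) = x**2."""
    return x * x

# Finite stand-ins for the domain and codomain (assumed for illustration).
domain = range(-3, 4)            # -3, -2, ..., 3
codomain = set(range(-9, 10))    # -9, -8, ..., 9

# The range (image) is the set of outputs actually produced.
image = {square(x) for x in domain}

print(sorted(image))               # [0, 1, 4, 9]
print(image <= codomain)           # True: the range is a subset of the codomain
print(any(y < 0 for y in image))   # False: no negative output is ever produced
```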

Types of Functions: One-to-One, Onto, and Bijections

Functions can be classified based on how their inputs map to their outputs, leading to important distinctions. An injective function, also known as a one-to-one function, is one where every distinct input in the domain maps to a distinct output in the range. In other words, no two different inputs produce the same output. If f(a) = f(b), then it must be that a = b. Our "Doubler Machine" (f(x) = 2x) is injective because different numbers always double to different numbers.

A surjective function, also known as an onto function, is one where every element in the codomain is mapped to by at least one element in the domain. This means the range of the function is equal to its entire codomain; every possible output value is actually achieved for some input. Consider a function g(x) = x³ with both domain and codomain as all real numbers. This function is surjective because for any real number y, there exists a real number x (the cube root of y) such that g(x) = y.

A function that is both injective and surjective is called a bijective function, or a one-to-one correspondence. In a bijection, every element in the domain maps to a unique element in the codomain, and every element in the codomain is mapped to by exactly one element from the domain. Bijections establish a perfect pairing between the elements of the domain and the codomain. These classifications are crucial in areas like abstract algebra, cryptography, and proving properties about the sizes of infinite sets.
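For functions on small finite sets, these three properties can be checked mechanically. The following is a minimal sketch that represents a function as a Python dictionary from inputs to outputs; the helper names (`is_injective`, `is_surjective`, `is_bijective`) and the sample mappings are hypothetical and chosen only for this example.

```python
def is_injective(mapping: dict) -> bool:
    """True if no two inputs share the same output."""
    outputs = list(mapping.values())
    return len(outputs) == len(set(outputs))

def is_surjective(mapping: dict, codomain: set) -> bool:
    """True if every element of the codomain is produced by some input."""
    return codomain <= set(mapping.values())

def is_bijective(mapping: dict, codomain: set) -> bool:
    """True if the mapping is both injective and surjective."""
    return is_injective(mapping) and is_surjective(mapping, codomain)

doubler = {x: 2 * x for x in range(4)}            # 0->0, 1->2, 2->4, 3->6
squares = {x: x * x for x in (-2, -1, 0, 1, 2)}   # -2 and 2 both map to 4

print(is_injective(doubler))                   # True
print(is_injective(squares))                   # False
print(is_surjective(doubler, {0, 2, 4, 6}))    # True
print(is_surjective(doubler, set(range(7))))   # False: 1, 3, 5 are never produced
print(is_bijective(doubler, {0, 2, 4, 6}))     # True
```

Note that surjectivity depends on the chosen codomain: the same rule is onto {0, 2, 4, 6} but not onto {0, 1, ..., 6}.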

Combining Functions: Composition and Inverses

Functions can be combined in various ways, with function composition being particularly important. If we have two functions, f: A → B and g: B → C, where the codomain of f is the domain of g, we can form a new function called the composite function, denoted g ∘ f (read as "g composed with f"). This composite function maps elements from A directly to C by first applying f and then applying g to the result: (g ∘ f)(x) = g(f(x)). Think of it as chaining two function machines together: the output of the first machine becomes the input for the second.

For certain functions, specifically bijective functions, we can define an inverse function. If f: A → B is a bijection, its inverse function, denoted f⁻¹: B → A, "undoes" the operation of f. This means that if f maps an element 'a' in A to an element 'b' in B (i.e., f(a) = b), then the inverse function f⁻¹ maps 'b' back to 'a' (i.e., f⁻¹(b) = a). Applying a function and then its inverse (or vice versa) returns the original input: (f⁻¹ ∘ f)(x) = x for all x in A, and (f ∘ f⁻¹)(y) = y for all y in B.

Inverse functions are fundamental in solving equations and are widely used in fields like cryptography and signal processing. For example, the logarithm function is the inverse of the exponential function, allowing us to solve equations where the unknown is in the exponent. Understanding composition and inverses allows us to build complex functional relationships from simpler ones and to reverse processes.
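Composition and inverses can be sketched in a few lines of Python. The helper `compose` and the functions `double` and `add_three` are illustrative names, not standard library functions; `math.exp`, `math.log`, and `math.log2` are from Python's standard `math` module.

```python
import math
from typing import Callable

def compose(g: Callable, f: Callable) -> Callable:
    """Return g ∘ f, the function that maps x to g(f(x))."""
    return lambda x: g(f(x))

def double(x):
    return 2 * x

def add_three(x):
    return x + 3

# Apply add_three first, then double: 2 * (4 + 3).
add_then_double = compose(double, add_three)
print(add_then_double(4))                    # 14

# The natural logarithm undoes the exponential (up to floating-point rounding).
round_trip = compose(math.log, math.exp)
print(math.isclose(round_trip(2.5), 2.5))    # True

# Solving 2**x = 32 by applying the inverse of x -> 2**x.
print(math.log2(32))                         # 5.0
```

The order matters: `compose(add_three, double)` would compute 2 * 4 + 3 = 11 instead, which reflects the fact that composition is generally not commutative.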

Visualizing Functions: Graphs and Mapping Diagrams

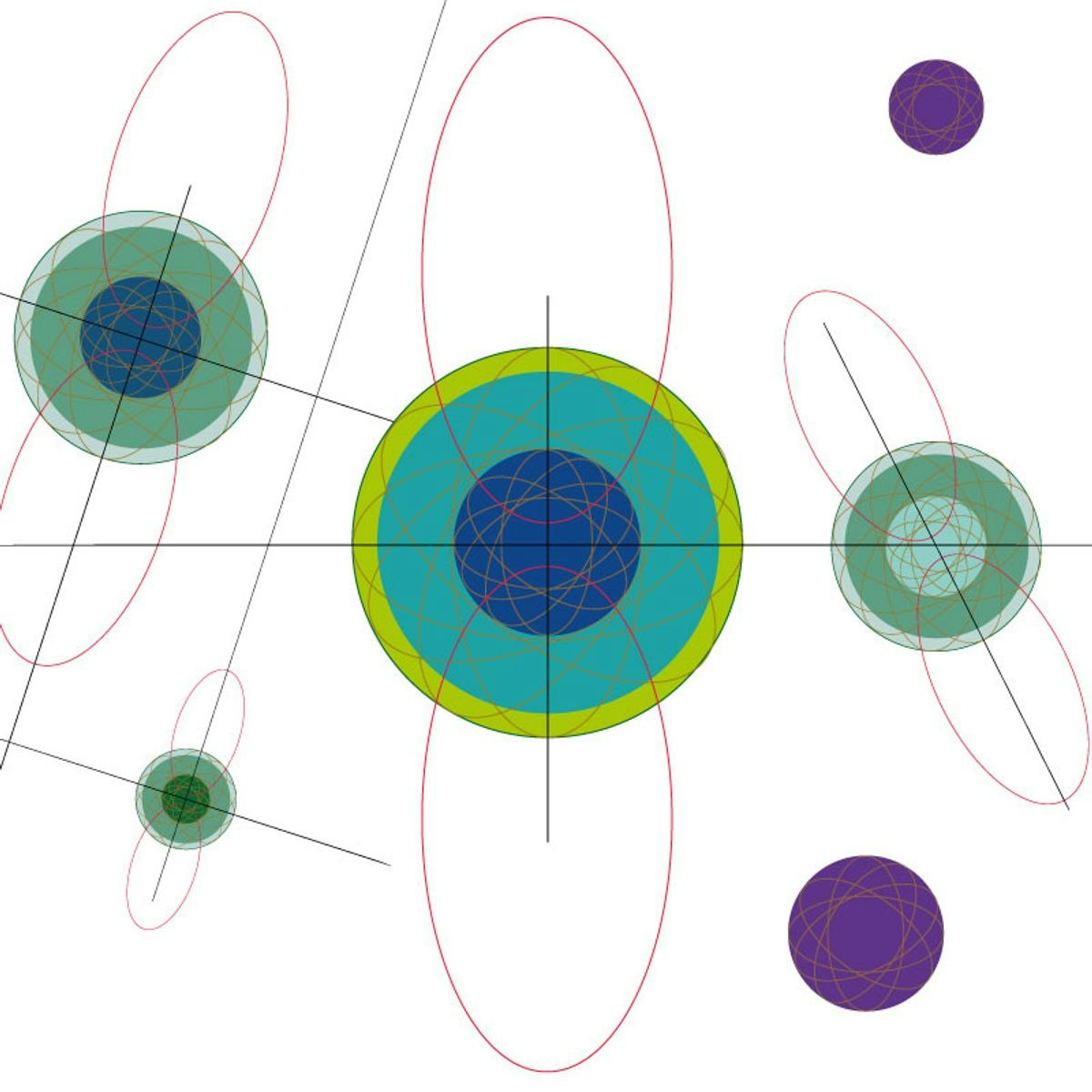

Visual representations are powerful tools for understanding the behavior of functions. The most common method is the Cartesian graph. For a function f: A → B where A and B are subsets of the real numbers, the graph consists of all points (x, y) in the Cartesian plane such that x is in the domain A and y = f(x). This visual plot allows us to see patterns, trends, rates of change (slope), maxima, minima, and discontinuities at a glance.

The shape of the graph reveals key properties. A horizontal line intersecting the graph more than once indicates the function is not injective (one-to-one). If a horizontal line corresponding to a value y in the codomain never intersects the graph, then y is not in the range; if this happens for even one y in the codomain, the function is not surjective (onto). Properties like continuity, differentiability, and integrability are often intuitively understood through their graphical interpretations.

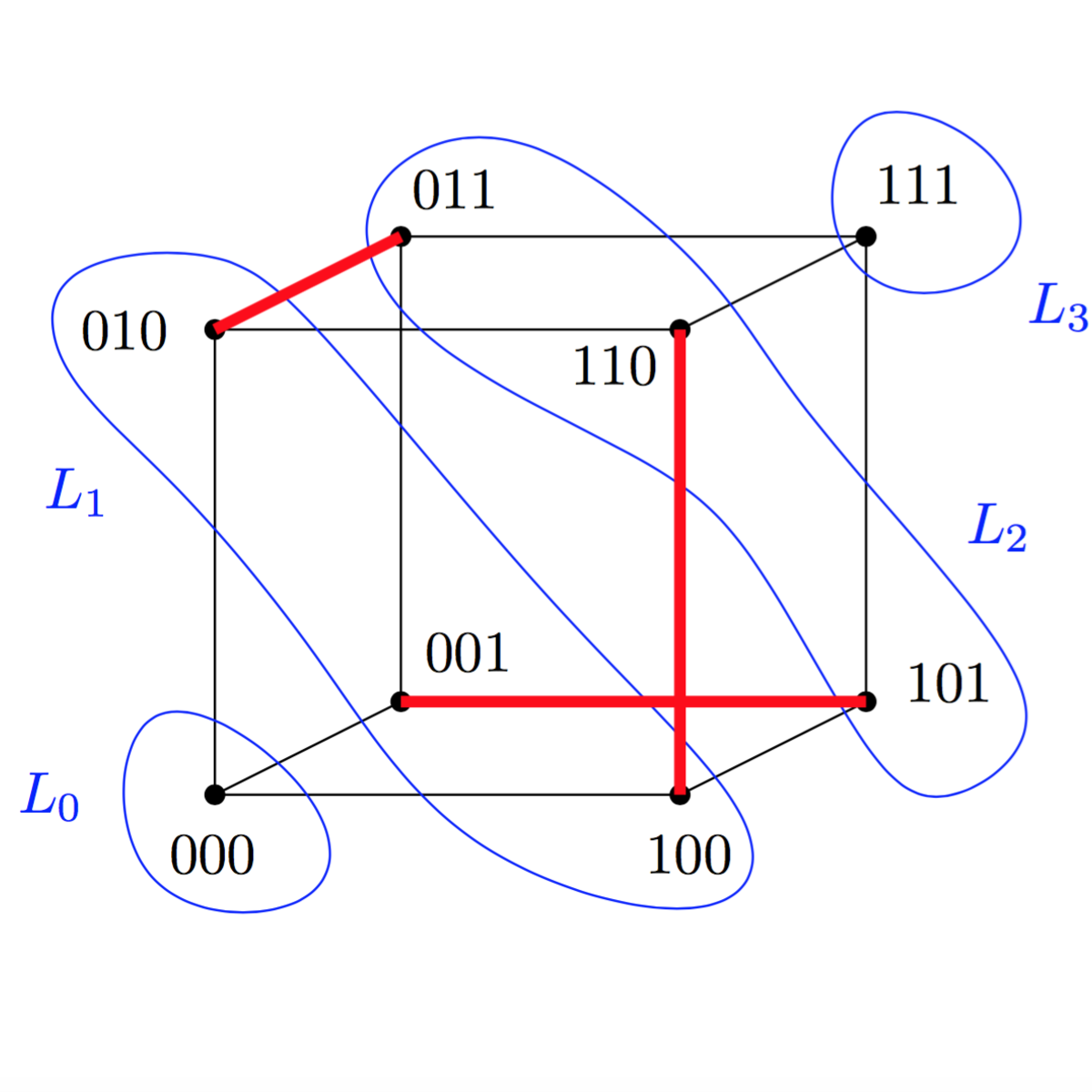

Another visualization tool, particularly useful for functions with finite or discrete domains and codomains, is a mapping diagram. This involves drawing two sets (often as ovals or rectangles) representing the domain and codomain, and drawing arrows from each element in the domain to its corresponding element in the codomain. This clearly illustrates the mapping rule and makes it easy to check properties like injectivity (no two arrows point to the same output element) and surjectivity (every element in the codomain has at least one arrow pointing to it).

Functions in Programming

The Building Blocks of Code: Modularity and Reusability

In computer programming, functions (also known as methods, procedures, or subroutines, depending on the language and context) are fundamental units of organization. They allow programmers to group a sequence of instructions together, give that group a name, and then execute that entire sequence simply by calling the name. This serves several critical purposes, primarily modularity and reusability.

Modularity refers to breaking down a large, complex program into smaller, self-contained, and manageable parts. Each function ideally performs a single, well-defined task. This makes the overall program structure easier to understand, develop, and maintain. If a problem arises, it's often easier to isolate it within a specific function rather than searching through an entire monolithic block of code.

Reusability means that once a function is written to perform a specific task (e.g., calculate the square root of a number, sort a list, connect to a database), it can be called multiple times from different parts of the program, or even used in entirely different programs, without rewriting the code. This saves development time, reduces redundancy, and promotes consistency. Libraries and frameworks in programming are essentially collections of pre-written functions designed for reuse.
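As a rough sketch of modularity and reuse, the example below defines a small `mean` function once and then calls it from a second function. The function names and the sample data are assumptions made for illustration.

```python
def mean(values):
    """Average of a non-empty list of numbers."""
    return sum(values) / len(values)

def variance(values):
    """Population variance, built by reusing mean() twice."""
    m = mean(values)
    return mean([(v - m) ** 2 for v in values])

data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
print(mean(data))       # 5.0
print(variance(data))   # 4.0
```

Because `variance` delegates the averaging step to `mean`, a fix or improvement to `mean` automatically benefits every caller.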

Inputs and Outputs: Parameters and Return Values

Just like mathematical functions operate on inputs to produce outputs, programming functions often work with data. Inputs are provided to a function through parameters. When defining a function, you specify the parameters it expects to receive; these act as placeholders for the data the function will operate on. When you call the function, you provide the actual values, known as arguments, which are assigned to the corresponding parameters. (In casual usage the two terms are often used interchangeably.)

For example, a function designed to add two numbers might be defined with two parameters, say num1 and num2. When you call this function, you might provide the arguments 5 and 3. Inside the function, num1 would take the value 5, and num2 would take the value 3.

Functions often produce an output, which is sent back to the part of the code that called the function. This output is known as the return value. A function typically uses a specific keyword (like return) to specify the value being sent back. Not all functions need to return a value; some might perform an action, like printing text to the screen or modifying a global data structure, without explicitly returning anything (sometimes called void functions).
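The addition example and the idea of a function without an explicit return value might look like this in Python. The names `add` and `greet` are illustrative; other languages express the same ideas with different syntax, for instance a `void` return type.

```python
def add(num1, num2):
    """num1 and num2 are parameters: placeholders for the caller's values."""
    return num1 + num2       # the return value is sent back to the caller

def greet(name):
    """Performs an action (printing) without an explicit return statement."""
    print(f"Hello, {name}!")

total = add(5, 3)            # 5 and 3 are the arguments
print(total)                 # 8

result = greet("Ada")        # prints: Hello, Ada!
print(result)                # None: Python returns None when nothing is returned
```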

Recursion: Functions Calling Themselves

Recursion is a powerful programming technique where a function calls itself within its own definition. This might sound circular, but it works because each recursive call typically operates on a smaller or simpler version of the original problem. Crucially, a recursive function must have a base case – a condition under which the function stops calling itself and returns a value directly. Without a base case, the function would call itself indefinitely, leading to a stack overflow error.

Consider calculating the factorial of a non-negative integer n (denoted n!), which is the product of all positive integers up to n (e.g., 5! = 5 × 4 × 3 × 2 × 1 = 120). This can be defined recursively: the factorial of n is n multiplied by the factorial of (n-1). The base case is when n is 0, where 0! is defined as 1. A recursive function for factorial would check if n is 0; if so, it returns 1. Otherwise, it returns n multiplied by the result of calling itself with (n-1).

Recursion often leads to elegant and concise solutions for problems that can be broken down into smaller, self-similar subproblems, such as traversing tree data structures or certain sorting algorithms (like Merge Sort). However, it can sometimes be less efficient than iterative solutions (using loops) due to the overhead of function calls building up on the program's call stack.
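The factorial definition above translates almost directly into code. The sketch below shows a recursive version alongside an iterative one for comparison; the function names and the negative-input check are additions made for this example.

```python
def factorial(n: int) -> int:
    """Recursive factorial: n! = n * (n - 1)!, with base case 0! = 1."""
    if n < 0:
        raise ValueError("factorial is not defined for negative integers")
    if n == 0:                       # base case: stop recursing
        return 1
    return n * factorial(n - 1)      # recursive case: a smaller subproblem

def factorial_iterative(n: int) -> int:
    """Same result using a loop, so the call stack does not grow."""
    if n < 0:
        raise ValueError("factorial is not defined for negative integers")
    result = 1
    for k in range(2, n + 1):
        result *= k
    return result

print(factorial(5))              # 120
print(factorial(0))              # 1
print(factorial_iterative(5))    # 120
```

In Python specifically, very deep recursion raises a `RecursionError` once the interpreter's recursion limit is reached, which is one practical reason to prefer the loop for large n.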

Pure vs. Impure Functions: Side Effects Matter

In programming, particularly in the context of functional programming paradigms, functions are often categorized as either pure or impure.

A pure function adheres strictly to the mathematical definition: its return value depends only on its input arguments, and it has no observable side effects. Side effects are actions that modify state outside the function's local scope, such as changing a global variable, writing to a file or database, or printing to the console. Given the same input, a pure function will always produce the same output and won't change anything else in the program's environment.

An impure function, conversely, is one that either depends on factors other than its explicit inputs (e.g., global variables, system time) or causes side effects. Most functions that perform input/output operations (like reading from a file or user input, or writing to the screen) are inherently impure. While pure functions offer benefits like predictability, testability, and suitability for parallel execution, impure functions are necessary for programs to interact with the outside world and manage state changes over time.

Understanding the distinction helps in designing more predictable and maintainable software. Functional programming languages often encourage the use of pure functions as much as possible, isolating side effects to specific parts of the program to manage complexity.

Career Applications of Functions

Data Science and Machine Learning

Functions are indispensable in the field of data science and machine learning. Data transformation pipelines, a core part of preparing data for analysis or model training, often consist of a series of functions applied sequentially. Each function might perform a specific task like cleaning missing values, scaling features, encoding categorical variables, or extracting relevant information. This modular approach makes complex data preprocessing workflows manageable and reproducible.
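A preprocessing pipeline of this kind can be sketched as a list of functions applied in order. The step names (`fill_missing`, `min_max_scale`), the helper `run_pipeline`, and the sample data are assumptions for illustration; production code would more likely rely on a dedicated data library.

```python
from functools import reduce

def fill_missing(values):
    """Replace None entries with the mean of the known values."""
    known = [v for v in values if v is not None]
    mean = sum(known) / len(known)
    return [mean if v is None else v for v in values]

def min_max_scale(values):
    """Rescale values to the interval [0, 1]; assumes they are not all equal."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def run_pipeline(data, steps):
    """Apply each step to the output of the previous one."""
    return reduce(lambda acc, step: step(acc), steps, data)

raw = [10.0, None, 30.0, 20.0]
clean = run_pipeline(raw, [fill_missing, min_max_scale])
print(clean)   # [0.0, 0.5, 1.0, 0.5]
```

Each step returns a new list rather than modifying its input, so `raw` is left untouched and the pipeline can be rerun reproducibly.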

In machine learning, models themselves can often be viewed as complex functions that map input features to predicted outputs (e.g., predicting house prices based on features like size and location). Activation functions within neural networks determine the output of a neuron based on its input, introducing non-linearity essential for learning complex patterns. Loss functions quantify the difference between predicted and actual values, guiding the model's learning process. Expertise in defining, applying, and optimizing functions is crucial for data scientists and machine learning engineers.
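To make these roles concrete, here is a minimal sketch of two common activation functions (ReLU and the sigmoid) and one common loss function (mean squared error) in plain Python. These specific choices are examples picked for illustration; the text does not single out any particular activation or loss.

```python
import math

def relu(x: float) -> float:
    """Rectified linear unit: passes positive inputs, zeroes out the rest."""
    return max(0.0, x)

def sigmoid(x: float) -> float:
    """Squashes any real input into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def mean_squared_error(predicted, actual):
    """Average squared difference between predictions and targets."""
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)

print(relu(-2.0), relu(3.5))                          # 0.0 3.5
print(sigmoid(0.0))                                   # 0.5
print(mean_squared_error([2.0, 4.0], [1.0, 6.0]))     # 2.5
```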

Engineering and System Modeling

Engineers across various disciplines rely heavily on functions to model and analyze physical systems and processes. In electrical engineering, functions describe the relationship between voltage, current, and resistance (Ohm's Law) or the behavior of signals over time (e.g., sine waves). Mechanical engineers use functions to model stress-strain relationships in materials, the motion of objects under forces, or the efficiency of engines under varying conditions.
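Two of the electrical examples can be written as short functions. This is only a sketch under idealized assumptions (an ideal resistor and a pure sine wave); the function and parameter names are chosen for the example.

```python
import math

def voltage(current_amps: float, resistance_ohms: float) -> float:
    """Ohm's Law: V = I * R for an ideal resistor."""
    return current_amps * resistance_ohms

def sine_signal(t: float, amplitude: float = 1.0, frequency_hz: float = 1.0) -> float:
    """Value of a sine wave at time t (in seconds)."""
    return amplitude * math.sin(2 * math.pi * frequency_hz * t)

print(voltage(2.0, 5.0))               # 10.0
print(round(sine_signal(0.25), 6))     # 1.0, the peak of a 1 Hz wave at a quarter period
```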

Control systems engineering is fundamentally about designing functions (controllers) that manipulate system inputs to achieve desired output behavior, often represented using transfer functions in the frequency domain. Chemical engineers use functions to model reaction rates, fluid dynamics, and heat transfer. Civil engineers might use functions to model traffic flow or the load-bearing capacity of structures.

Simulation software, a vital tool in modern engineering, uses numerical methods to approximate the behavior of systems described by complex mathematical functions, often involving differential equations. A solid grasp of functions and calculus is therefore essential for modeling, analyzing, and designing engineered systems.

Finance and Economics

The fields of finance and economics extensively use functions to model relationships between economic variables and financial instruments. Demand and supply curves, fundamental concepts in microeconomics, are typically represented as functions relating price and quantity. Cost functions describe the total cost of production as a function of output level, while revenue functions model income based on sales.

In finance, functions are used to model the value of investments over time (compound interest functions), calculate the present or future value of cash flows, and price financial derivatives like options (e.g., the Black-Scholes model is based on solving a partial differential equation). Portfolio theory uses functions to relate risk and expected return. Econometric models often involve estimating functional relationships between variables using statistical techniques to make predictions or test economic theories.
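Compound interest and present value are a natural pair to write as functions, since one undoes the other. The sketch below uses the standard textbook formulas with periodic compounding; the function and parameter names are illustrative.

```python
def future_value(principal: float, annual_rate: float, years: float,
                 periods_per_year: int = 1) -> float:
    """Compound interest: FV = P * (1 + r/n) ** (n * t)."""
    n = periods_per_year
    return principal * (1 + annual_rate / n) ** (n * years)

def present_value(future_amount: float, annual_rate: float, years: float) -> float:
    """Discount a future cash flow back to today (annual compounding)."""
    return future_amount / (1 + annual_rate) ** years

fv = future_value(1000.0, 0.05, 2)
print(round(fv, 2))                              # 1102.5
print(round(present_value(fv, 0.05, 2), 2))      # 1000.0
```

With annual compounding, `present_value` is the inverse of `future_value` in the principal, which is why discounting the result recovers the original 1000.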

Quantitative analysts ("quants") in the financial industry spend much of their time developing and implementing complex mathematical functions to model market behavior, assess risk, and develop trading strategies. A strong understanding of calculus, probability, and statistics, all built upon the concept of functions, is critical for these roles.

Transferable Skills for Diverse Careers

Beyond specific domains, a deep understanding of functions cultivates highly transferable skills valuable across a vast range of careers. Thinking in terms of functions promotes logical reasoning, analytical thinking, and precise communication. The ability to identify inputs, outputs, and the rules governing their relationship is fundamental to problem-solving in any context.

Breaking down complex problems into smaller, manageable functional units (a skill honed in programming) is applicable to project management, process design, and strategic planning. Understanding concepts like domain (valid inputs) and range (the outputs that can actually occur) helps in defining constraints and managing expectations. Familiarity with different types of functions (linear, exponential, logarithmic, periodic) provides a mental toolkit for recognizing and modeling various patterns of behavior or growth.

Whether you are designing a user interface, planning a marketing campaign, analyzing social trends, or optimizing a logistics network, the ability to think functionally – to map causes to effects, inputs to outputs, actions to consequences in a structured way – is a powerful asset. It provides a framework for clarity, rigor, and effective analysis, making it a core component of quantitative literacy and critical thinking applicable far beyond purely technical roles.

Historical Evolution of Functional Thinking

Early Seeds: Ancient and Medieval Insights

While the formal definition is modern, the intuitive idea of a functional relationship, in which one quantity depends on another, is ancient. Babylonian astronomers compiled extensive tables predicting celestial events, implicitly defining functions relating time to position. Ancient Greek mathematicians, particularly in geometry, studied curves defined by geometric relationships between magnitudes, like conic sections (circles, ellipses, parabolas, hyperbolas), effectively exploring geometric functions.

Thinkers like Archimedes used methods anticipating calculus to find areas and volumes, implicitly dealing with functional relationships between geometric dimensions and measures. Although they lacked algebraic notation and the formal concept of a function, their work explored dependencies between variable quantities.

During the medieval period, scholars like Nicole Oresme in the 14th century explored representing changing quantities graphically, plotting dependent variables against independent ones (like velocity against time), foreshadowing the graphical representation of functions. These early efforts, while not using modern terminology, laid the conceptual groundwork by grappling with the idea of variable quantities and their deterministic interdependencies.

The Calculus Revolution: Newton and Leibniz

The 17th century marked a pivotal moment with the independent development of calculus by Isaac Newton and Gottfried Wilhelm Leibniz. Their work was fundamentally concerned with change and motion, requiring a way to describe how one continuously varying quantity depended on another. This necessitated a more dynamic concept than the static relationships often studied previously.

Newton thought in terms of "fluents" (changing quantities, like distance) and "fluxions" (their rates of change, like velocity), implicitly using functions to describe motion and physical laws. Leibniz explicitly introduced the term "function" to denote quantities associated with points on a curve, such as coordinates or slope. He also developed much of the notation still used today, like dy/dx for derivatives and the integral sign.

Calculus provided powerful tools – differentiation and integration – to analyze functions, find their rates of change, calculate areas under curves, and solve optimization problems. While their initial understanding of functions was often tied to geometric curves or analytic expressions (formulas), their work revolutionized mathematics and physics, making the study of functional relationships central to scientific inquiry.

Formalization and Computation Theory

The 18th and 19th centuries saw increasing efforts to place calculus and the function concept on a more rigorous logical foundation. Mathematicians like Euler, Lagrange, Cauchy, and Weierstrass worked towards precise definitions of limits, continuity, and the function itself. Dirichlet's 1837 definition, emphasizing the arbitrary mapping rule between input and output sets, freed the concept from the requirement of being expressible by a single analytic formula.

The late 19th and early 20th centuries brought the development of set theory by Cantor, Dedekind, and others, providing the formal language of domains, codomains, and mappings used today. Simultaneously, the foundations of computation were being explored. Logicians like Alonzo Church developed lambda calculus in the 1930s, a formal system based entirely on functions and function application, proving foundational for theoretical computer science.

Alan Turing's work on Turing machines provided a different, mechanical model of computation. The two models were proved equivalent: lambda calculus and Turing machines define exactly the same class of functions. The Church-Turing thesis posits that this class coincides with the intuitive notion of "computable function," linking the mathematical concept of function directly to the theoretical limits of what computers can do.

Modern Functional Programming

The theoretical work on functions and computability, particularly lambda calculus, directly inspired the development of functional programming languages. The lineage starts in the mid-20th century with Lisp and continues through languages like ML, Haskell, F#, and Scala, as well as functional features incorporated into mainstream languages like Python, Java, and JavaScript.

Functional programming emphasizes computation as the evaluation of pure mathematical functions, avoiding changing state and mutable data. Key concepts include treating functions as "first-class citizens" (meaning they can be passed as arguments, returned from other functions, and assigned to variables), immutability (data cannot be changed after creation), and recursion over iteration. These principles often lead to code that is more predictable, easier to reason about, test, and parallelize.

Modern software development increasingly incorporates functional programming concepts, even in languages not strictly designated as functional. Features like lambda expressions (anonymous functions), map/filter/reduce operations on collections, and a focus on immutability are becoming common, demonstrating the enduring influence of mathematical functional thinking on practical software engineering.
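These features are available in Python, for example. The sketch below uses the built-in `map` and `filter`, `functools.reduce` from the standard library, and lambda expressions; the helper `apply_twice` and the sample data are assumptions made for illustration.

```python
from functools import reduce

numbers = [1, 2, 3, 4, 5, 6]

# filter: keep only the elements for which the function returns True.
evens = list(filter(lambda n: n % 2 == 0, numbers))

# map: apply a function to every element.
squares = list(map(lambda n: n * n, evens))

# reduce: fold a collection into a single value.
total = reduce(lambda acc, n: acc + n, squares, 0)

print(evens)     # [2, 4, 6]
print(squares)   # [4, 16, 36]
print(total)     # 56

def apply_twice(f, x):
    """Functions are first-class values: f is passed in like any other argument."""
    return f(f(x))

print(apply_twice(lambda n: n + 3, 10))   # 16
print(numbers)                            # [1, 2, 3, 4, 5, 6], the input is unchanged
```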

Formal Education Pathways

High School Foundations

A solid foundation for understanding functions typically begins in high school mathematics, particularly in algebra and pre-calculus courses. Students are introduced to the basic definition of a function, the concepts of domain and range, and function notation (f(x)). They learn to evaluate functions for specific inputs and explore various types of functions, including linear, quadratic, polynomial, rational, exponential, and logarithmic functions.

Graphing functions on the Cartesian plane is a major component, teaching students to visualize function behavior and understand concepts like intercepts, slope, asymptotes, and transformations (shifts, stretches, reflections). Solving equations often involves understanding inverse functions. Word problems require students to model real-world situations using appropriate function types. Success in these courses provides the essential algebraic manipulation skills and conceptual understanding needed for more advanced study.

Undergraduate Core Courses

At the university level, the study of functions deepens significantly, particularly in mathematics and computer science programs. Calculus sequences (typically Calculus I, II, and III) extensively analyze functions of one and several variables, focusing on limits, continuity, differentiation, integration, and series representations (like Taylor series). These courses develop the tools to understand rates of change, optimization, and accumulation related to functional models.

Discrete Mathematics courses formalize the set-theoretic definition of functions and explore properties like injectivity, surjectivity, and bijectivity. Function composition, inverses, and recursive definitions are often covered in detail. This course is foundational for computer science, providing tools for algorithm analysis, logic, and data structures.
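
For functions on small finite sets, these properties can be checked by brute force. The following is a minimal sketch; the helper names are hypothetical, and it assumes the domain is given as a list of distinct elements and that the function maps into the stated codomain.

```python
def is_injective(f, domain):
    """True if no two distinct inputs share an output."""
    images = [f(x) for x in domain]
    return len(set(images)) == len(images)

def is_surjective(f, domain, codomain):
    """True if every element of the codomain is hit by some input."""
    return set(codomain) <= {f(x) for x in domain}

def is_bijective(f, domain, codomain):
    """True if f is both injective and surjective."""
    return is_injective(f, domain) and is_surjective(f, domain, codomain)

def compose(g, f):
    """Return the composition g after f, i.e. x -> g(f(x))."""
    return lambda x: g(f(x))

def square(x):
    return x * x

def double(x):
    return 2 * x

print(is_injective(square, [-2, -1, 0, 1, 2]))      # squares collide for x and -x
print(is_bijective(double, [0, 1, 2], [0, 2, 4]))
print(compose(square, double)(3))
```

Note that the same rule can be injective on one domain and not on another: `square` is injective on `[0, 1, 2]` but not on `[-2, -1, 0, 1, 2]`.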

Linear Algebra focuses on a specific type of function, the linear transformation between vector spaces, which in finite dimensions can be represented by a matrix. Understanding these transformations is crucial in fields ranging from computer graphics to quantum mechanics. Abstract Algebra studies algebraic structures, where structure-preserving functions (homomorphisms) play a central role.
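
To make the idea of a matrix acting as a function concrete, here is a small sketch in plain Python. The helper names and the choice of a 90-degree rotation are assumptions for illustration; real projects would typically use a library such as NumPy.

```python
def apply(matrix, vector):
    """Apply a matrix (list of rows) to a vector (list of numbers)."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]

def add(u, v):
    """Component-wise sum of two vectors."""
    return [a + b for a, b in zip(u, v)]

def scale(c, v):
    """Multiply every component of v by the scalar c."""
    return [c * a for a in v]

# Counter-clockwise rotation of the plane by 90 degrees.
ROTATE_90 = [[0, -1],
             [1, 0]]

u, v = [1, 2], [3, -1]

# The two defining properties of a linear transformation T:
# T(u + v) = T(u) + T(v)  and  T(c * u) = c * T(u).
additive = apply(ROTATE_90, add(u, v)) == add(apply(ROTATE_90, u), apply(ROTATE_90, v))
homogeneous = apply(ROTATE_90, scale(3, u)) == scale(3, apply(ROTATE_90, u))
print(additive, homogeneous)
```

Checking the two properties on a few vectors does not prove linearity, but it shows what the definition demands of the function.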

Graduate Studies and Research

Graduate studies in mathematics, computer science, physics, engineering, and economics often involve highly specialized and abstract applications of functions. In pure mathematics, fields like Real Analysis and Complex Analysis rigorously study the properties of functions (continuity, differentiability, integrability) on real and complex domains. Functional Analysis studies infinite-dimensional vector spaces of functions, essential for understanding differential equations and quantum mechanics. Topology studies continuous functions and uses homeomorphisms (continuous bijections whose inverses are also continuous) to classify spaces.

In theoretical computer science, computability theory investigates the limits of what functions can be computed algorithmically. Complexity theory classifies computable functions based on the resources (time, memory) required to compute them. Advanced algorithm design often involves sophisticated functional techniques and data structures.

Research across many scientific and engineering disciplines involves developing new functional models, analyzing their properties, or finding efficient ways to compute or approximate them. This often requires a deep understanding of advanced mathematical concepts built upon the foundations of functions, sets, and logic.

Self-Directed Learning Strategies

Leveraging Online Courses and Platforms

The digital age offers unprecedented opportunities for self-directed learning, especially for foundational concepts like functions. Platforms like OpenCourser aggregate thousands of courses from various providers, covering mathematics and programming from introductory to advanced levels. Learners can find courses tailored to their specific needs, whether refreshing high school algebra, learning calculus for a career pivot, or exploring functional programming paradigms.

Online courses often provide flexibility, allowing learners to study at their own pace and schedule. Many include video lectures, interactive exercises, quizzes, and peer forums, offering a structured learning experience. Look for courses with clear prerequisites, syllabi outlining the topics covered, and positive reviews from fellow learners. OpenCourser's features, such as summarized reviews and course comparisons, can help you find the right course to build a solid understanding of functions in mathematics or their application in programming.

Project-Based Learning

Applying concepts is crucial for solidifying understanding. Project-based learning is an effective strategy for mastering functions. Instead of just completing textbook exercises, try building something tangible that utilizes functional concepts. For aspiring programmers, this could mean writing a simple calculator application (using functions for arithmetic operations), developing a small game (using functions to handle player input, movement, and game logic), or creating a data analysis script (using functions to clean, transform, and visualize data).

For those focusing on mathematics, projects could involve using software like MATLAB, Python with libraries like NumPy and Matplotlib, or graphing tools like GeoGebra to model a real-world phenomenon using functions. For example, model population growth using exponential functions, simulate projectile motion using quadratic functions, or analyze financial data using logarithmic or power functions. Choose projects that align with your interests and career goals.
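
Two of the example models above can be sketched in a few lines of Python. The parameter values (initial population, growth rate, launch speed) are made-up assumptions, and the projectile model ignores air resistance.

```python
import math

def population(t, p0=1000.0, rate=0.03):
    """Exponential growth: population after t time units."""
    return p0 * math.exp(rate * t)

def doubling_time(rate):
    """Time for an exponentially growing quantity to double."""
    return math.log(2) / rate

def projectile_height(t, v0=20.0, h0=0.0, g=9.81):
    """Quadratic model: height (m) at time t (s) for vertical launch speed v0 (m/s)."""
    return h0 + v0 * t - 0.5 * g * t ** 2

def flight_time(v0, g=9.81):
    """Time to return to launch height when starting from h0 = 0."""
    return 2 * v0 / g

print(population(doubling_time(0.03)))
print(projectile_height(flight_time(20.0)))
```

Feeding these functions into a plotting library such as Matplotlib is a natural next step for a project of this kind.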

Building a personal portfolio of projects demonstrates practical skills to potential employers or academic programs. It forces you to integrate different concepts and troubleshoot problems, leading to deeper learning than passive consumption of material. OpenCourser's Learner's Guide offers tips on structuring self-study and incorporating projects effectively.

Community and Collaboration

Learning doesn't have to be a solitary activity. Engaging with online communities can significantly enhance the self-directed learning experience. Platforms like Stack Overflow, Reddit (e.g., r/learnprogramming, r/math), GitHub, and specialized forums provide spaces to ask questions, share solutions, and collaborate on projects.

Participating in coding challenges (e.g., on sites like LeetCode, HackerRank, or Kaggle for data science) exposes you to diverse problems and solutions, often involving clever applications of functions and algorithms. Contributing to open-source projects allows you to read code written by experienced developers, learn best practices, and gain practical experience working in a collaborative environment. Explaining concepts to others or trying to solve their problems is also an excellent way to test and deepen your own understanding.

Don't hesitate to seek help when you're stuck, but also try to contribute back to the community by sharing what you've learned. This collaborative aspect can provide motivation, different perspectives, and valuable feedback, making the learning journey more effective and enjoyable.

Ethical Considerations in Functional Modeling

Bias in Algorithmic Functions

When functions are used to model real-world processes involving people, especially in machine learning algorithms for tasks like loan approvals, hiring screenings, or facial recognition, they can inadvertently encode or even amplify existing societal biases present in the training data. An algorithm is essentially a complex function mapping inputs (e.g., applicant data) to outputs (e.g., loan approved/denied). If the historical data used to train this function reflects biased decision-making, the resulting function will learn and perpetuate those biases.

This can lead to unfair or discriminatory outcomes for certain demographic groups. For example, a facial recognition function trained predominantly on images of one ethnicity may perform poorly on others. A loan approval function trained on historical data where certain neighborhoods were unfairly redlined might continue to disadvantage applicants from those areas. Recognizing that algorithmic functions are not inherently objective, but rather reflect the data they are built upon, is crucial.

Addressing algorithmic bias requires careful consideration of data sources, feature selection, model evaluation metrics (beyond simple accuracy), and potentially implementing fairness constraints during model training. It's an active area of research and requires interdisciplinary collaboration between computer scientists, ethicists, social scientists, and policymakers. Resources from organizations like the Pew Research Center often explore public and expert opinions on AI ethics.
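
One simple example of an evaluation metric beyond accuracy is the difference in selection rates between groups, sometimes called the demographic parity difference. The sketch below uses invented decisions and hypothetical group labels; it is one of several competing fairness measures, not a complete audit.

```python
def selection_rate(decisions):
    """Fraction of positive decisions (1 = approved, 0 = denied)."""
    return sum(decisions) / len(decisions)

def demographic_parity_difference(decisions_by_group):
    """Gap between the highest and lowest selection rate across groups."""
    rates = {group: selection_rate(d) for group, d in decisions_by_group.items()}
    return max(rates.values()) - min(rates.values()), rates

# Hypothetical model outputs, purely for illustration.
decisions = {
    "group_a": [1, 1, 1, 0],
    "group_b": [1, 0, 0, 0],
}

gap, rates = demographic_parity_difference(decisions)
print(rates, gap)
```

A gap of zero means every group is approved at the same rate. Whether that is the right notion of fairness depends on the application.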

Transparency and Explainability

Many modern functions used in AI and machine learning, particularly deep neural networks, operate as "black boxes." While they might achieve high accuracy in mapping inputs to outputs, their internal decision-making processes can be incredibly complex and difficult for humans to understand or interpret. This lack of transparency poses significant ethical challenges, especially when these functions are used in high-stakes domains like healthcare, finance, or criminal justice.

If a function denies someone a loan or makes a critical medical diagnosis recommendation, the inability to explain why the function arrived at that decision raises serious concerns about accountability, fairness, and the potential for hidden errors or biases. There is a growing demand for "Explainable AI" (XAI) – techniques and models that allow developers and users to understand the reasoning behind an AI's output.

Developing functions that are not only accurate but also interpretable is a key ethical consideration. This involves trade-offs, as sometimes the most accurate models are the least transparent. Balancing performance with explainability is crucial for building trust and ensuring responsible deployment of functional models in society.

Data Privacy and Security

Functions often operate on sensitive input data. For example, a function used in personalized medicine might take a patient's genetic information and medical history as input. A function powering a recommendation engine might use a user's browsing history and personal preferences. This raises significant concerns about data privacy and security.

How is the input data collected, stored, and protected? Who has access to the function and the data it processes? Could the function's outputs inadvertently reveal sensitive information about the inputs? Techniques like differential privacy aim to design functions that provide useful aggregate results while mathematically guaranteeing a level of privacy for individual data points.
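
A common textbook construction for differential privacy is the Laplace mechanism, which adds calibrated random noise to a query result. The sketch below applies it to a simple count; the data, function names, and choice of epsilon are assumptions for illustration, and production systems should rely on vetted libraries.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise as the difference of two exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(records, predicate, epsilon, rng):
    """Noisy count of the records that satisfy `predicate`.

    Adding or removing one record changes a count by at most 1,
    so the sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)

# Hypothetical ages, purely for illustration.
ages = [23, 35, 41, 29, 52, 38, 61, 27]
rng = random.Random(0)  # seeded only to make the example repeatable
noisy = private_count(ages, lambda age: age >= 40, epsilon=1.0, rng=rng)
print(noisy)
```

Smaller values of `epsilon` add more noise, which means stronger privacy but less accurate answers.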

Securely implementing and deploying functions, especially those handling personal data, requires robust security practices to prevent unauthorized access, data breaches, or malicious manipulation of the function's behavior. Ethical considerations demand that data privacy is a primary concern throughout the lifecycle of designing, building, and using functional models.

Career Progression with Functions Expertise

Entry-Level Opportunities

A strong grasp of functions, both mathematical and computational, opens doors to numerous entry-level roles across technology, finance, engineering, and science. Positions like Junior Software Developer, Data Analyst, QA Engineer, or Research Assistant often require the ability to understand and implement functional logic. Software developers use functions daily to structure code. Data analysts use spreadsheet functions (Excel, Google Sheets) and programming functions (Python, R) to manipulate and analyze data.

In engineering fields, entry-level roles might involve using software like MATLAB or simulation tools based on functional models. Even roles in quantitative marketing or business analysis benefit from the ability to model relationships functionally. The key at this stage is demonstrating a solid understanding of core concepts and the ability to apply them to practical problems, often showcased through academic coursework, projects, or internships.

Starting salaries vary significantly by role, industry, and location, but roles requiring strong analytical and programming skills typically offer competitive compensation. Resources like the U.S. Bureau of Labor Statistics Occupational Outlook Handbook provide general information on various occupations, including typical duties, education requirements, and salary ranges, though they do not report data specific to "function expertise."

Mid-Career Specialization

As professionals gain experience, their understanding and application of functions often become more specialized. A software developer might specialize in functional programming languages or backend development involving complex API design (functions exposed for other systems to call). A data analyst might transition into a Data Scientist role, developing sophisticated machine learning models (complex functions) or specializing in data engineering, building robust data pipelines (sequences of functions).

Engineers might specialize in control systems design, computational fluid dynamics, or finite element analysis, all requiring deep expertise in specific types of mathematical functions and numerical methods. Financial professionals could become Quantitative Analysts ("quants"), specializing in derivative pricing models or algorithmic trading strategies heavily reliant on advanced mathematical functions.

Mid-career progression often involves leading small teams, mentoring junior members, and tackling more complex technical challenges. Continuous learning is crucial to stay updated with new techniques, tools, and theoretical advancements related to functional modeling in one's chosen specialization. Salary potential generally increases significantly with specialization and experience.

Leadership and Architectural Roles

With extensive experience, individuals with deep expertise in functions and their applications can move into leadership and architectural roles. A Principal Software Engineer or Software Architect designs the overall structure of complex software systems, making high-level decisions about modularity, component interaction (often via function calls or APIs), and technology choices. Their understanding of functional decomposition and abstraction is critical.

In data science, a Lead Data Scientist or AI Architect oversees the development and deployment of large-scale machine learning systems, ensuring models (functions) are robust, scalable, and ethically sound. Engineering Managers or Technical Leads guide teams, set technical direction, and ensure the rigorous application of modeling and analysis techniques based on functional understanding.

These roles require not only deep technical expertise but also strong communication, strategic thinking, and leadership skills. They involve translating business requirements into technical specifications, managing complexity, and making decisions with long-term impact. Compensation at this level is typically substantial, reflecting the high level of responsibility and expertise required.

Emerging Fields and Future Demand

The importance of functions is likely to grow as technology advances. Fields like Artificial Intelligence and Machine Learning are fundamentally built on mathematical functions, from the activation functions in neural networks to the loss functions optimized during training. As AI becomes more pervasive, the demand for professionals who can design, implement, and analyze these complex functional systems will increase.
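
The activation and loss functions mentioned above are ordinary mathematical functions. Here is a minimal sketch of two common activations and one common loss in plain Python; the single-input `neuron` helper is a hypothetical simplification for illustration.

```python
import math

def sigmoid(z):
    """Squash any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Rectified linear unit: pass positive values, zero out the rest."""
    return max(0.0, z)

def mean_squared_error(predictions, targets):
    """Average squared difference between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)

def neuron(x, weight, bias, activation):
    """A one-input neuron: an affine function followed by an activation."""
    return activation(weight * x + bias)

print(sigmoid(0.0), relu(-3.0), relu(2.0))
print(mean_squared_error([1.0, 2.0], [1.0, 4.0]))
print(neuron(3.0, weight=1.0, bias=-1.0, activation=relu))
```

Training a network amounts to adjusting values like `weight` and `bias` so that the loss function becomes as small as possible.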

Quantum computing relies on linear algebra and functional analysis to describe quantum states and operations (which are essentially functions acting on those states). Advances in scientific computing, bioinformatics, and computational modeling across all sciences continue to push the boundaries of what can be simulated and analyzed, demanding ever more sophisticated functional models and numerical methods.

The rise of big data necessitates efficient functions for processing and extracting insights from massive datasets. Even areas like cybersecurity increasingly use function-based modeling and machine learning for anomaly detection and threat modeling. Staying adaptable and continuously learning new applications of functional concepts will be key to remaining relevant in these rapidly evolving fields.

Frequently Asked Questions

What are the essential math prerequisites for function-heavy roles?

The required math background varies depending on the specific role, but a strong foundation is generally crucial. For most programming roles applying functions, a solid grasp of Algebra (manipulating expressions, solving equations) and potentially Discrete Mathematics (sets, logic, basic proofs) is essential. Pre-calculus concepts (function types, graphs, transformations) are also highly beneficial.

For roles in data science, machine learning, engineering, physics, and quantitative finance, the requirements are typically more extensive. Calculus (differentiation, integration, series) and Linear Algebra (vectors, matrices, transformations) are almost always mandatory. Probability and Statistics are also critical, particularly for data-driven fields. For more theoretical or research-oriented roles, advanced topics like Real Analysis, Complex Analysis, Differential Equations, or Functional Analysis might be necessary.

Focus on building a conceptual understanding alongside computational proficiency. Knowing why a certain mathematical tool works is often as important as knowing how to apply it. Online courses covering college algebra, pre-calculus, calculus, linear algebra, and discrete math can provide these prerequisites.

Can strong function skills compensate for a lack of a formal degree?

In some fields, particularly software development and certain data analysis roles, demonstrated skills and a strong portfolio can sometimes compensate for the lack of a traditional four-year degree, including one from a prestigious institution. If you can prove through projects, coding challenge results, open-source contributions, or certifications that you possess the necessary programming skills (including effective use of functions, algorithms, and data structures) and problem-solving abilities, some employers may prioritize practical ability over formal credentials.

However, for many roles, especially in engineering, research, quantitative finance, or positions requiring licensed practice, a formal degree (often Bachelor's or higher) remains a standard requirement due to the depth of theoretical knowledge needed or regulatory prerequisites. Fields requiring advanced mathematical modeling typically demand rigorous academic training.

For career changers or those without a relevant degree, building a compelling portfolio, networking effectively, and potentially acquiring targeted certifications become even more critical. Online courses can be invaluable for acquiring the necessary skills, but translating those skills into demonstrable achievements is key. Be realistic; while possible, breaking into certain function-heavy fields without a degree can be more challenging and may limit initial opportunities or advancement tracks compared to degree holders.

Which industries have the highest demand for function expertise?

Demand for expertise involving functions is widespread, but particularly high in several key industries. The technology sector (software development, cloud computing, AI/machine learning) heavily relies on programming functions and, increasingly, sophisticated mathematical functions for algorithms. Companies developing software, web platforms, mobile apps, and AI solutions constantly seek individuals proficient in functional decomposition and implementation.

The finance industry, particularly in areas like quantitative analysis, algorithmic trading, risk management, and financial modeling, has a strong demand for individuals with deep mathematical and statistical function expertise. Engineering disciplines (aerospace, electrical, mechanical, chemical, civil) require engineers who can model physical systems using mathematical functions and simulations. The data science and analytics field, spanning across various industries (tech, finance, healthcare, retail, consulting), needs professionals skilled in using functions for data manipulation, statistical modeling, and machine learning.

Research and development sectors, both in academia and private industry (e.g., pharmaceuticals, biotechnology, materials science), also require individuals capable of advanced functional modeling and analysis. Consulting firms often hire individuals with strong analytical skills, where functional thinking is a core competency.

How can I maintain relevance as AI increasingly automates tasks?

While AI may automate certain routine tasks involving basic function application, it also creates new opportunities and increases the demand for higher-level skills. Instead of fearing obsolescence, focus on developing skills that complement AI. This includes understanding the underlying principles of AI models (which are often complex functions), enabling you to use these tools effectively, interpret their outputs critically, and identify their limitations or potential biases.

Focus on problem formulation, critical thinking, and domain expertise. AI tools are powerful, but they need humans to define the right problems, choose appropriate models (functions), prepare relevant data, and interpret results in context. Develop skills in areas requiring creativity, complex problem-solving, ethical judgment, and effective communication – areas where human intelligence currently excels over AI.

Continuous learning is essential. Stay updated on AI advancements and learn how to leverage new tools. Deepen your understanding of the mathematical and computational foundations (including functions) that underpin these technologies. Rather than just applying functions, focus on designing, analyzing, and validating the complex functional systems that AI represents. Adaptability and a commitment to lifelong learning are the best strategies for maintaining relevance.

What portfolio projects best demonstrate function mastery?

Effective portfolio projects showcase your ability to apply functional concepts to solve meaningful problems. For programming-focused roles, consider:

- A modular application: Build a non-trivial application (e.g., a task manager, a simple e-commerce site backend, a data visualization tool) clearly structured using well-defined functions, demonstrating modularity and reusability.

- An algorithmic implementation: Implement a known algorithm that relies on functions, such as a sorting algorithm (Merge Sort is naturally written as a recursive function), a pathfinding algorithm (like A*, which is guided by a heuristic function), or a data structure built around a function (like a hash map, which depends on a hash function).

- A small interpreter or compiler: Implementing a parser or interpreter for a simple language heavily involves defining functions for lexical analysis, parsing syntax trees, and evaluation.

- A data processing pipeline: Write a script that takes raw data, cleans it, transforms it, and generates insights or visualizations, using distinct functions for each step.
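
As a small-scale sketch of the last idea in the list above, the pipeline below uses one function per step. The input data, the step names, and the minutes-to-seconds transformation are hypothetical, and the cleaning step assumes plain ASCII digits.

```python
def clean(raw_values):
    """Strip whitespace and drop entries that are not non-negative integers."""
    stripped = (value.strip() for value in raw_values)
    return [int(value) for value in stripped if value.isdigit()]

def to_seconds(minutes):
    """Transform durations from minutes to seconds."""
    return [m * 60 for m in minutes]

def summarize(values):
    """Reduce a list of numbers to a few headline statistics."""
    return {"count": len(values), "total": sum(values), "max": max(values)}

def run_pipeline(raw_values):
    """Compose the steps: clean, then transform, then summarize."""
    return summarize(to_seconds(clean(raw_values)))

# Hypothetical raw input with blanks and junk entries.
raw = [" 12", "7", "", "abc", "30 "]
report = run_pipeline(raw)
print(report)
```

Because each step is a separate function with a single job, every stage can be tested on its own and swapped out without touching the others.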

For math-focused roles:

- Modeling and simulation: Use mathematical software or programming to model a physical or economic system using relevant functions (e.g., differential equations for population dynamics, objective functions for resource allocation). Document your model assumptions and analysis clearly.

- Implementation of a numerical method: Code a numerical method for solving equations, performing integration, or optimizing functions (e.g., Newton's method, Runge-Kutta methods).

- Interactive visualization: Create a tool (e.g., using Python libraries like Matplotlib/Plotly or tools like GeoGebra) that allows users to explore the behavior of a family of functions by changing parameters.
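
As one possible starting point for the numerical-method project above, here is a minimal sketch of Newton's method for finding a root of a function. The tolerance, iteration limit, and error handling are illustrative choices rather than a definitive implementation.

```python
def newton(f, df, x0, tol=1e-12, max_iter=50):
    """Find x with f(x) close to 0, starting from x0 and using the derivative df."""
    x = x0
    for _ in range(max_iter):
        fx = f(x)
        if abs(fx) < tol:
            return x
        slope = df(x)
        if slope == 0:
            raise ZeroDivisionError("derivative is zero; try another starting point")
        x = x - fx / slope  # follow the tangent line to where it crosses zero
    raise RuntimeError("Newton's method did not converge")

# Approximate the square root of 2 as the positive root of x**2 - 2.
root = newton(lambda x: x * x - 2, lambda x: 2 * x, x0=1.0)
print(root)
```

Note that both the function and its derivative are passed in as arguments, so the same `newton` routine works for any differentiable function you can write down.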

Whatever the project, ensure the code is clean, well-documented, and available (e.g., on GitHub). Explain the problem, your approach, the functional concepts used, and the results achieved.

This article provides a broad overview of functions, their mathematical underpinnings, programming applications, and relevance across various career paths. Whether you are just beginning your exploration or seeking to deepen your expertise, understanding functions is a valuable endeavor that enhances analytical thinking and problem-solving abilities applicable in countless domains. We encourage you to explore the resources mentioned and continue your learning journey on OpenCourser.