Data Manipulation

Data manipulation refers to the process of changing or altering data to make it more organized, readable, or useful for analysis. It involves a range of operations performed on datasets to transform raw data into a structured format suitable for processing, interpretation, and deriving insights. Think of it as shaping raw clay into a finished sculpture; data manipulation molds raw information into a form that reveals its underlying patterns and meaning.

Working with data manipulation can be quite engaging. It often feels like solving a puzzle, where you take jumbled pieces of information and arrange them into a coherent picture. The process allows for creativity in finding efficient ways to clean, transform, and structure data. Furthermore, mastering data manipulation skills opens doors to various data-centric roles, empowering individuals to unlock valuable insights from complex datasets across numerous industries.

Introduction to Data Manipulation

This section provides a foundational understanding of data manipulation, placing it within the broader context of data-related fields and illustrating its core ideas through simple analogies.

What is Data Manipulation?

At its core, data manipulation encompasses any process that modifies data. This broad definition includes operations like cleaning (removing errors or inconsistencies), transforming (changing structure or format), enriching (adding related information), and aggregating (summarizing) data. The primary goal is typically to prepare data for analysis, visualization, reporting, or input into machine learning models. Without effective manipulation, raw data often remains unusable due to its inherent messiness, incompleteness, or unsuitable format.

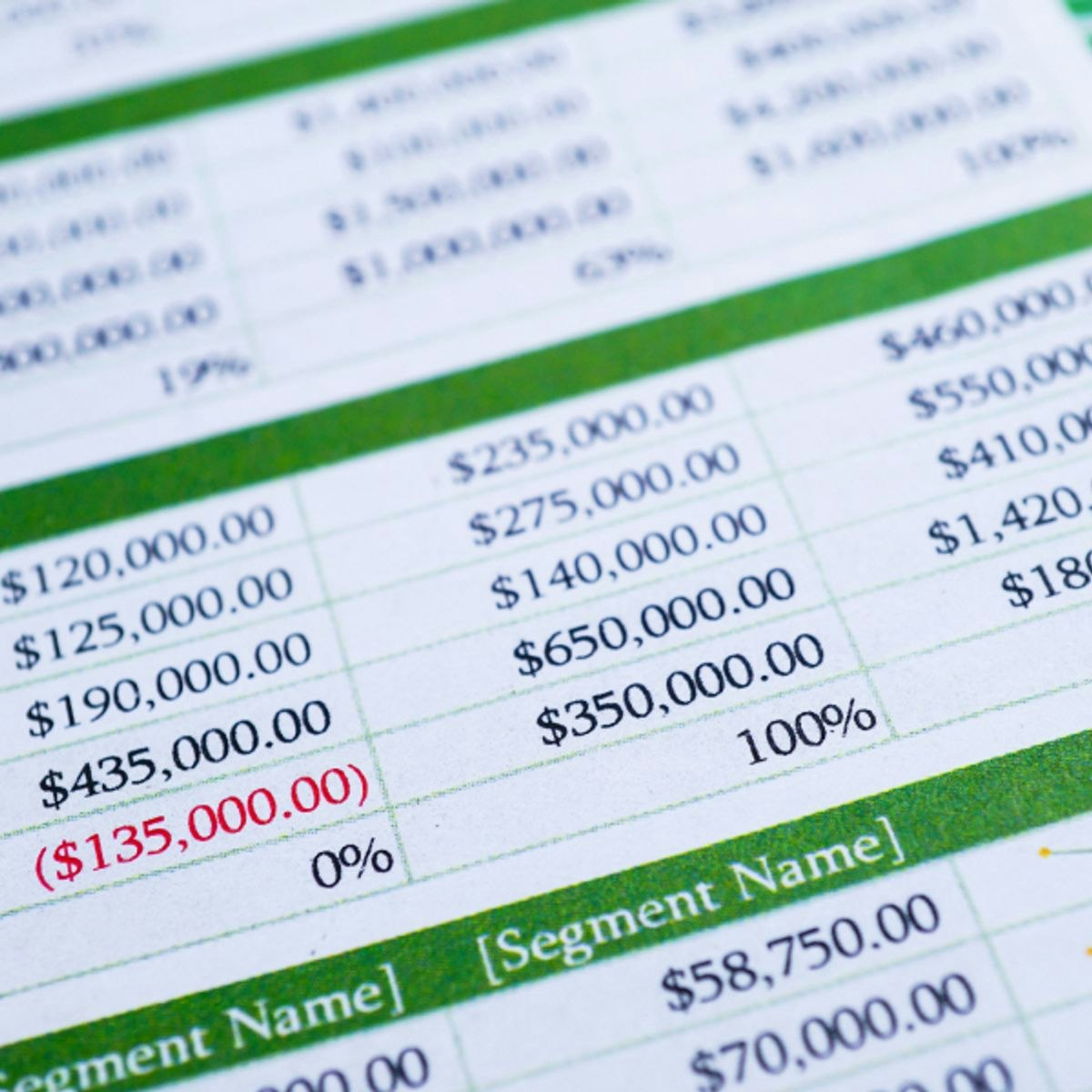

The scope of data manipulation extends from simple tasks performed in spreadsheets, like sorting columns or filtering rows, to complex transformations involving multiple datasets using specialized programming languages and tools. It bridges the gap between raw data collection and meaningful data analysis. Effectively manipulating data requires understanding both the data itself (its structure, meaning, and limitations) and the desired outcome or analysis goal.

Consider data stored in different systems, formats, or with varying levels of quality. Data manipulation techniques allow professionals to harmonize this disparate information, correct inaccuracies, handle missing values appropriately, and ultimately create a reliable dataset that can be trusted for decision-making. It is a fundamental step in nearly every data workflow.

A Brief History and Evolution

The concept of manipulating data is as old as data collection itself. Early forms involved manual sorting and calculation on paper ledgers. The advent of punched cards and mechanical tabulators in the late 19th and early 20th centuries, pioneered by Herman Hollerith for the US Census, represented a significant leap, allowing for automated sorting and aggregation.

The digital computer revolution dramatically accelerated the evolution of data manipulation. Early programming languages and database systems provided rudimentary tools. The development of relational databases and Structured Query Language (SQL) in the 1970s provided a powerful, standardized way to query and modify structured data, becoming a cornerstone of data manipulation that remains vital today.

More recently, the rise of "Big Data" and the increasing complexity and volume of unstructured or semi-structured data (like text, images, and sensor data) have spurred the development of new tools and techniques. Programming languages like Python and R, with powerful libraries such as Pandas and dplyr, offer flexible ways to handle diverse data formats. Extract, Transform, Load (ETL) processes and tools became standard for moving and reshaping data between systems, particularly for data warehousing. Today, cloud platforms and specialized data engineering tools continue to push the boundaries of what's possible in manipulating vast and varied datasets.

Connecting Data Manipulation to Related Fields

Data manipulation is a crucial component within several related fields, though its emphasis and specific techniques may vary. In Data Science, manipulation (often called "data wrangling" or "data munging") is a foundational step, preparing data for modeling and analysis. Data scientists spend a significant portion of their time cleaning and transforming data before they can build predictive models or extract insights.

Similarly, Data Analysis relies heavily on manipulation to structure data for exploration, visualization, and statistical testing. Analysts use manipulation techniques to filter, aggregate, and reshape data to answer specific business questions. Business Intelligence (BI) professionals also perform data manipulation, often within specialized BI tools or during the ETL process, to create dashboards and reports for decision-makers.

Database Management focuses on the efficient storage, retrieval, and modification of data within database systems. While database administrators (DBAs) are concerned with the overall health and performance of the database, they use data manipulation languages (like SQL) extensively for tasks like updates, deletions, and data migration. Data Engineering focuses specifically on building robust pipelines for moving, transforming, and storing data at scale, which makes data manipulation a central part of a data engineer's role.

Making Sense of Data Manipulation: Simple Analogies

To grasp the essence of data manipulation without technical jargon, consider everyday analogies. Imagine you're cooking. Raw ingredients (raw data) might come in various forms – some need washing (cleaning), peeling or chopping (transforming), while others might be missing (handling missing data). Combining ingredients in specific proportions (aggregation) and following a recipe (workflow/script) results in a finished dish (analyzable data). Data manipulation is the kitchen prep work essential for a successful meal.

Another analogy is organizing a messy closet. You might start by taking everything out (extraction). Then, you sort items by type – shirts, pants, shoes (structuring). You might fold clothes neatly (formatting), discard items you no longer need (filtering/cleaning), and group outfits together (joining/enriching). The end result is an organized closet where items are easy to find and use (analysis-ready data).

Think also of a librarian organizing books arriving at a library. They check for damage (quality control), assign a unique code and category (structuring/transformation), record the author and title consistently (standardization), and place the book on the correct shelf (loading). This process makes the library's collection (data) usable for patrons (analysts/users).

Core Principles of Data Manipulation

This section delves into the fundamental techniques and concepts that underpin effective data manipulation, targeting those with a more technical interest.

Cleaning and Preprocessing Data

Data cleaning and preprocessing form the bedrock of reliable data analysis. Raw data is often imperfect, containing errors, inconsistencies, or missing values that can skew results if not addressed. Cleaning involves identifying and correcting these issues. This might include fixing typos, standardizing formats (e.g., ensuring all dates are in YYYY-MM-DD format), removing duplicate records, and handling outliers – extreme values that might be errors or genuinely unusual occurrences.
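As a minimal sketch of the cleaning steps just described, the following example standardizes names and dates and removes duplicates using only the Python standard library. The records, the `KNOWN_FORMATS` list, and the function names are hypothetical and chosen for illustration; real data will usually need more formats and more rules.

```python
from datetime import datetime

# Hypothetical raw records with stray whitespace, mixed case, and mixed date layouts.
raw_records = [
    {"name": " Alice ", "signup": "2023-01-15"},
    {"name": "alice", "signup": "15/01/2023"},
    {"name": "Bob", "signup": "2023-02-03"},
]

# Date layouts assumed to appear in the source; extend as needed.
KNOWN_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def to_iso_date(text):
    """Return the date as YYYY-MM-DD, trying each known layout in turn."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).strftime("%Y-%m-%d")
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {text!r}")


def clean(records):
    """Standardize names and dates, then keep the first copy of each record."""
    seen = set()
    cleaned = []
    for rec in records:
        row = (rec["name"].strip().title(), to_iso_date(rec["signup"]))
        if row not in seen:
            seen.add(row)
            cleaned.append({"name": row[0], "signup": row[1]})
    return cleaned


cleaned_records = clean(raw_records)
```

Here the two "Alice" rows turn out to be the same record once names and dates share one format, which is why standardization usually comes before duplicate removal.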

Preprocessing encompasses a broader set of preparatory steps. Beyond cleaning, it might involve feature scaling (adjusting the range of numerical features, often necessary for certain machine learning algorithms), encoding categorical variables (converting text labels like "Red", "Green", "Blue" into numerical representations), and feature engineering (creating new, potentially more informative features from existing ones). The specific cleaning and preprocessing steps depend heavily on the data source, the intended analysis, and the tools being used.
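Two of the preprocessing steps mentioned above, feature scaling and categorical encoding, can be sketched in a few lines. The function names and sample values are illustrative assumptions; libraries such as scikit-learn provide production-ready equivalents.

```python
def min_max_scale(values):
    """Rescale numbers linearly so the smallest maps to 0.0 and the largest to 1.0."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant column: nothing to spread out
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot(labels):
    """Turn text labels into one 0/1 column per distinct category."""
    categories = sorted(set(labels))
    rows = [[1 if label == c else 0 for c in categories] for label in labels]
    return categories, rows


# Hypothetical feature columns.
heights_cm = [150, 160, 170, 200]
colors = ["Red", "Green", "Blue", "Green"]

scaled = min_max_scale(heights_cm)
categories, encoded = one_hot(colors)
```

In this sketch `categories` fixes the column order (alphabetical), so each row of `encoded` has exactly one 1 in the position of its label.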

Thorough data cleaning requires domain knowledge to understand what constitutes an error or an outlier in a specific context. It's often an iterative process; initial cleaning might reveal deeper issues requiring further investigation and refinement. Documenting the cleaning steps taken is crucial for reproducibility and understanding the final dataset's provenance.

Transforming Data for Insight

Data transformation involves changing the structure, format, or values of data to better suit analytical needs. Raw data might not be organized in a way that facilitates easy analysis or visualization. Transformation techniques help reshape the data into a more useful form. Common transformations include normalization and standardization. Normalization typically rescales numerical data to a common range such as 0 to 1, while standardization rescales it to zero mean and unit variance; both often improve the performance of algorithms sensitive to feature scales.
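For illustration, z-score standardization can be written with the standard library's `statistics` module. This is a sketch under the assumption that the population standard deviation is the right divisor; the `incomes` values are made up.

```python
from statistics import mean, pstdev


def standardize(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:  # constant column: every z-score is zero
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


incomes = [30_000, 40_000, 50_000, 60_000, 70_000]  # hypothetical data
z_scores = standardize(incomes)
```

After this step the middle income maps to 0 and the others are expressed as distances from the mean in units of standard deviation, so features measured on very different scales become comparable.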

Aggregation is another key transformation, involving summarizing data by grouping records based on certain attributes and calculating metrics like counts, sums, averages, or maximums for each group. For example, daily sales records can be aggregated into monthly summaries per product category. Pivoting is a specific type of restructuring, often used to convert data from a "long" format (where each observation has its own row) to a "wide" format (where variables are spread across columns), or vice versa, depending on what's best for analysis or visualization.
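The daily-to-monthly example and the long-to-wide pivot can both be sketched with plain dictionaries. The sales tuples below are invented sample data, and the assumption that dates are ISO strings is what makes `day[:7]` a valid month key.

```python
from collections import defaultdict

# Hypothetical daily sales in long format: (date, category, amount).
daily_sales = [
    ("2024-01-05", "Toys", 120.0),
    ("2024-01-20", "Toys", 80.0),
    ("2024-01-11", "Books", 50.0),
    ("2024-02-02", "Toys", 200.0),
    ("2024-02-14", "Books", 75.0),
]

# Aggregation: group by (month, category) and sum the amounts.
monthly = defaultdict(float)
for day, category, amount in daily_sales:
    month = day[:7]  # "YYYY-MM" prefix of an ISO date string
    monthly[(month, category)] += amount

# Pivot long -> wide: one entry per month, one key per category.
wide = defaultdict(dict)
for (month, category), total in monthly.items():
    wide[month][category] = total
```

`monthly` is still in long format (one entry per month and category), while `wide` holds one row per month with a column per category.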

Other transformations might involve creating derived variables (e.g., calculating age from a date of birth), binning continuous variables into discrete categories (e.g., grouping customers by age ranges), or applying mathematical functions (like logarithms) to change data distributions. The choice of transformation depends on the analytical question and the characteristics of the data.
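A short sketch of these three transformations follows. The age bands, the fixed `reference_day`, and the revenue figure are assumptions made for the example, not recommended cut-offs.

```python
import math
from datetime import date


def age_on(birth, today):
    """Derived variable: whole years elapsed between birth and today."""
    had_birthday = (today.month, today.day) >= (birth.month, birth.day)
    return today.year - birth.year - (0 if had_birthday else 1)


def age_band(age):
    """Binning: map a continuous age onto illustrative discrete ranges."""
    if age < 18:
        return "under 18"
    if age < 35:
        return "18-34"
    if age < 65:
        return "35-64"
    return "65+"


reference_day = date(2024, 6, 1)  # fixed so the example is repeatable
age = age_on(date(1990, 9, 15), reference_day)  # birthday not yet reached in 2024
band = age_band(age)

# Mathematical function: a log transform compresses large, skewed values.
log_revenue = math.log10(1000.0)
```

Fixing the reference date rather than calling `date.today()` keeps a derived age reproducible across runs.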

Addressing Missing and Inconsistent Data

Missing data is a common challenge in real-world datasets. Values might be missing because they were never recorded, lost during transfer, or intentionally omitted. How missing data is handled can significantly impact analysis results. Common strategies include deletion (removing rows or columns with missing values, which is reasonable only if the proportion is small and the values are missing at random), imputation (filling in missing values using simple statistics such as the mean, median, or mode, or more sophisticated techniques like regression imputation), or using algorithms that can inherently handle missing values.
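To make the deletion and imputation options concrete, here is a minimal sketch using `None` as the missing-value marker. The `ages` list is invented, and median imputation is only one of the strategies named above.

```python
from statistics import median

ages = [34, None, 29, 41, None, 38]  # hypothetical column with gaps

# Option 1: deletion keeps only the observed values.
observed = [a for a in ages if a is not None]

# Option 2: median imputation fills each gap with the median of observed values.
fill_value = median(observed)
imputed = [fill_value if a is None else a for a in ages]

# Keeping a flag for imputed positions preserves the fact that they were missing.
was_missing = [a is None for a in ages]
```

Recording `was_missing` alongside the imputed column lets later analysis check whether conclusions change when the filled-in values are excluded.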

Inconsistent data refers to contradictions or discrepancies within the dataset. This could manifest as the same entity being recorded with slightly different names (e.g., "IBM" vs. "International Business Machines"), conflicting values for the same attribute (e.g., a customer listed as living in two different cities simultaneously), or violations of known constraints (e.g., an order date occurring after the shipping date). Identifying and resolving inconsistencies often requires careful investigation, potentially involving cross-referencing with other data sources or applying business rules.
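Two of these checks, name harmonization and a date constraint, can be sketched as follows. The `CANONICAL_NAMES` alias table and the order records are hypothetical; in practice the aliases would come from a reference source and the rule from documented business logic.

```python
from datetime import date

# Hypothetical alias table mapping known spellings to one canonical name.
CANONICAL_NAMES = {
    "ibm": "IBM",
    "international business machines": "IBM",
}


def canonical_name(name):
    """Normalize case and whitespace, then look the name up in the alias table."""
    key = " ".join(name.lower().split())
    return CANONICAL_NAMES.get(key, name.strip())


orders = [
    {"id": 1, "customer": "IBM",
     "ordered": date(2024, 3, 1), "shipped": date(2024, 3, 4)},
    {"id": 2, "customer": "International  Business Machines",
     "ordered": date(2024, 3, 9), "shipped": date(2024, 3, 7)},
]

for order in orders:
    order["customer"] = canonical_name(order["customer"])

# Constraint check: an order cannot be placed after it was shipped.
violations = [o["id"] for o in orders if o["ordered"] > o["shipped"]]
```

The sketch only flags the violating order rather than correcting it, since deciding which of the two dates is wrong usually requires investigation.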

Both missing and inconsistent data require careful consideration. The chosen handling method should be documented, and its potential impact on the analysis understood. Blindly deleting data or using simple imputation methods without understanding the underlying reasons for missingness or inconsistency can lead to biased or inaccurate conclusions.

Working with Time and Space

Manipulating temporal (time-based) and spatial (location-based) data presents unique challenges and requires specialized techniques. Temporal data often involves timestamps or time intervals, requiring operations like extracting date components (year, month, day), calculating durations, resampling data to different time frequencies (e.g., converting daily data to weekly), and handling time zones correctly. Analyzing trends over time often involves techniques like calculating rolling averages or identifying seasonality.
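The temporal operations above can be sketched with the standard library. The daily series is synthetic, and the fixed UTC-5 offset is an assumption for the example; named time zones with daylight-saving rules would normally be handled with `zoneinfo` or a similar library.

```python
from collections import defaultdict
from datetime import date, datetime, timedelta, timezone

# Synthetic daily series: 14 days starting Monday 2024-01-01, values 1.0 .. 14.0.
daily = {date(2024, 1, 1) + timedelta(days=i): float(i + 1) for i in range(14)}

# Resampling: sum daily values into ISO (year, week) buckets.
weekly = defaultdict(float)
for day, value in daily.items():
    iso = day.isocalendar()
    weekly[(iso[0], iso[1])] += value


def rolling_mean(values, window):
    """Average of each run of `window` consecutive values."""
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


ordered_values = [daily[d] for d in sorted(daily)]
smoothed = rolling_mean(ordered_values, 3)

# Time zones: convert a timestamp with a fixed UTC-5 offset to UTC.
local = datetime(2024, 1, 1, 9, 0, tzinfo=timezone(timedelta(hours=-5)))
as_utc = local.astimezone(timezone.utc)
```

Storing timestamps as timezone-aware values and converting to UTC before comparing them avoids the most common class of time zone errors.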

Spatial data involves geographic locations, often represented as coordinates (latitude, longitude) or geometric shapes (points, lines, polygons). Manipulating spatial data might include calculating distances between points, determining if a point falls within a specific area (point-in-polygon analysis), buffering (creating zones around spatial features), or overlaying different spatial layers to find intersections or unions. Geographic Information Systems (GIS) software and specialized libraries in programming languages are commonly used for these tasks.
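Two of these operations, distance between points and point-in-polygon testing, can be sketched without a GIS library. This example assumes a spherical Earth (the haversine formula) and a simple polygon in planar coordinates tested by ray casting; dedicated libraries handle projections and edge cases more carefully.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean radius; a spherical approximation


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def point_in_polygon(x, y, polygon):
    """Ray casting: count how many polygon edges a ray to the right of (x, y) crosses."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal line through y
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


square = [(0, 0), (4, 0), (4, 4), (0, 4)]  # hypothetical area of interest
one_degree_km = haversine_km(0.0, 0.0, 0.0, 1.0)
```

An odd number of crossings means the point is inside. Points lying exactly on an edge are a known ambiguity of this method and are not treated specially here.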

Both temporal and spatial data often require careful handling of formats and projections. Ensuring consistency in time zones or spatial reference systems is crucial for accurate analysis. Combining temporal and spatial data (spatio-temporal data) allows for analyzing how phenomena change over both time and space, common in fields like environmental science, epidemiology, and urban planning.

Industry Applications

Data manipulation is not just a technical exercise; it drives value across diverse industries by enabling better decision-making, optimizing processes, and uncovering hidden opportunities.

Data Manipulation in Finance

The financial sector heavily relies on data manipulation for various critical functions. In fraud detection, analysts manipulate transaction data to identify unusual patterns or anomalies that might indicate fraudulent activity. This involves cleaning transaction logs, enriching them with customer information, and structuring the data for input into fraud detection algorithms.

Risk modeling requires manipulating historical market data, customer financial data, and macroeconomic indicators. Data must be cleaned, time-aligned, and transformed (e.g., calculating returns, volatility) to build models that assess credit risk, market risk, or operational risk. Portfolio management involves manipulating asset price data, performance metrics, and client information to optimize investment strategies and generate client reports.
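As an illustration of the return and volatility transformations mentioned above, the following sketch computes simple period-over-period returns and their sample standard deviation. The price series is invented, and the result is a per-period figure, not an annualized one.

```python
from statistics import stdev

# Hypothetical closing prices for four consecutive periods.
prices = [100.0, 102.0, 99.96, 104.958]

# Simple return for each period: (current - previous) / previous.
returns = [(p1 - p0) / p0 for p0, p1 in zip(prices, prices[1:])]

# Volatility, taken here as the sample standard deviation of the returns.
volatility = stdev(returns)
```

The returns here are approximately +2%, -2%, and +5%. Real risk models would first align the series on a common calendar, which this sketch assumes has already been done.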

Regulatory compliance also necessitates extensive data manipulation. Financial institutions must process and structure vast amounts of data to meet reporting requirements for bodies like the SEC or central banks. This involves aggregating data from various systems, ensuring consistency, and formatting it according to specific regulatory standards.

Navigating Healthcare Data Challenges

Healthcare data is notoriously complex, fragmented, and subject to strict privacy regulations like HIPAA. Data manipulation plays a vital role in making this data usable for improving patient care, research, and operational efficiency. Electronic Health Records (EHR) often contain unstructured text (doctor's notes), varied coding systems (ICD, SNOMED), and data quality issues. Manipulation is needed to extract structured information from notes, standardize medical codes, and clean patient records.

Integrating data from different sources – EHRs, lab results, imaging systems, insurance claims – is a major challenge requiring sophisticated manipulation techniques. Data must be de-identified for research purposes while maintaining linkages for longitudinal studies. Standardization is key to comparing outcomes across different providers or populations.

Clinical research relies on manipulating trial data to prepare it for statistical analysis, ensuring data integrity and adherence to protocols. Hospital operations use manipulated data to track resource utilization, patient flow, and quality metrics. Public health organizations manipulate population-level data for disease surveillance and monitoring health trends.

Optimizing Retail and Supply Chains

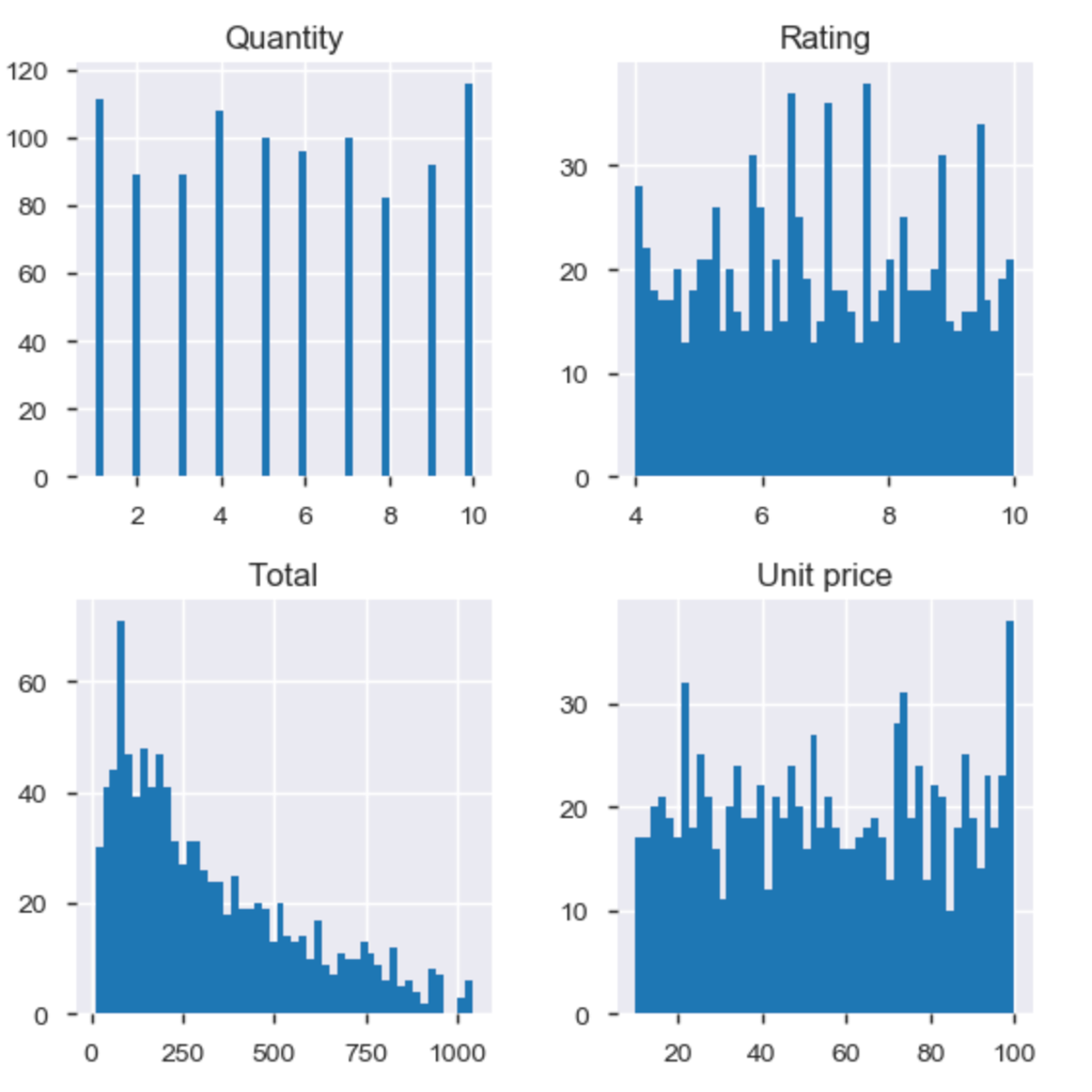

In retail, data manipulation helps understand customer behavior and optimize operations. Sales data, customer loyalty program information, website clickstream data, and social media sentiment are manipulated to perform market basket analysis (identifying items frequently bought together), customer segmentation, and personalized marketing campaigns. Cleaning and integrating these diverse data sources is crucial.
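The core data manipulation behind market basket analysis is counting how often items appear together. A minimal sketch, using made-up baskets and pair support (the share of baskets containing both items) as the only metric:

```python
from collections import Counter
from itertools import combinations

# Hypothetical transactions, each a set of purchased items.
baskets = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"milk", "eggs"},
    {"bread", "milk"},
]

# Count every unordered pair of items that occurs within the same basket.
pair_counts = Counter()
for basket in baskets:
    for pair in combinations(sorted(basket), 2):
        pair_counts[pair] += 1

# Support: fraction of all baskets in which the pair appears.
support = {pair: n / len(baskets) for pair, n in pair_counts.items()}
```

Sorting each basket before pairing ensures that ("bread", "milk") and ("milk", "bread") are counted as the same pair.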

Supply chain optimization involves manipulating data related to inventory levels, supplier performance, shipping logistics, and demand forecasts. By cleaning and integrating data from warehouse management systems, transportation logs, and point-of-sale systems, companies can improve inventory management, reduce transportation costs, predict demand more accurately, and identify bottlenecks in the supply chain. For example, manipulating historical sales and weather data can help predict demand for seasonal items.

Pricing strategies are often informed by manipulating competitor pricing data and internal sales data to understand price elasticity and optimize pricing for maximum revenue or profit. Store layout and product placement decisions can also be guided by analyzing manipulated transaction data linked to store maps.

Fueling Artificial Intelligence and Machine Learning

Data manipulation is arguably the most time-consuming, yet critical, part of building Artificial Intelligence (AI) and Machine Learning (ML) models. Raw data is rarely suitable for direct input into algorithms. Extensive preprocessing and feature engineering are required.

This involves cleaning data, handling missing values, encoding categorical features into numerical formats (e.g., one-hot encoding), scaling numerical features, and potentially reducing dimensionality (selecting the most important features or creating composite features using techniques like Principal Component Analysis). Feature engineering, the art of creating new input variables from existing data, often requires significant domain expertise and data manipulation skill to craft features that improve model performance.

For specific ML tasks, unique manipulations are needed. Natural Language Processing (NLP) requires manipulating text data through tokenization, stemming, and vectorization. Computer Vision involves manipulating image data through resizing, normalization, and data augmentation (creating modified copies of images to increase training data). Preparing data correctly ensures that ML models learn effectively and generalize well to new, unseen data.
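For the NLP case, tokenization and vectorization can be sketched as a simple bag-of-words count. The regular expression, the two sample sentences, and the decision to skip stemming are simplifying assumptions; dedicated NLP libraries offer far more robust tokenizers.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into runs of letters, digits, or apostrophes."""
    return re.findall(r"[a-z0-9']+", text.lower())


docs = ["The cat sat.", "The cat saw the dog!"]  # hypothetical corpus
tokenized = [tokenize(d) for d in docs]

# Vocabulary: every distinct token, in a fixed (alphabetical) order.
vocabulary = sorted({tok for toks in tokenized for tok in toks})

# Vectorization: one count per vocabulary word for each document.
vectors = [[Counter(toks)[word] for word in vocabulary] for toks in tokenized]
```

Each document becomes a numeric vector of equal length, which is the form most ML algorithms expect as input.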

Essential Tools and Technologies

A variety of tools and technologies are available for data manipulation, ranging from ubiquitous spreadsheets to powerful programming languages and specialized platforms.

SQL: The Language of Relational Data

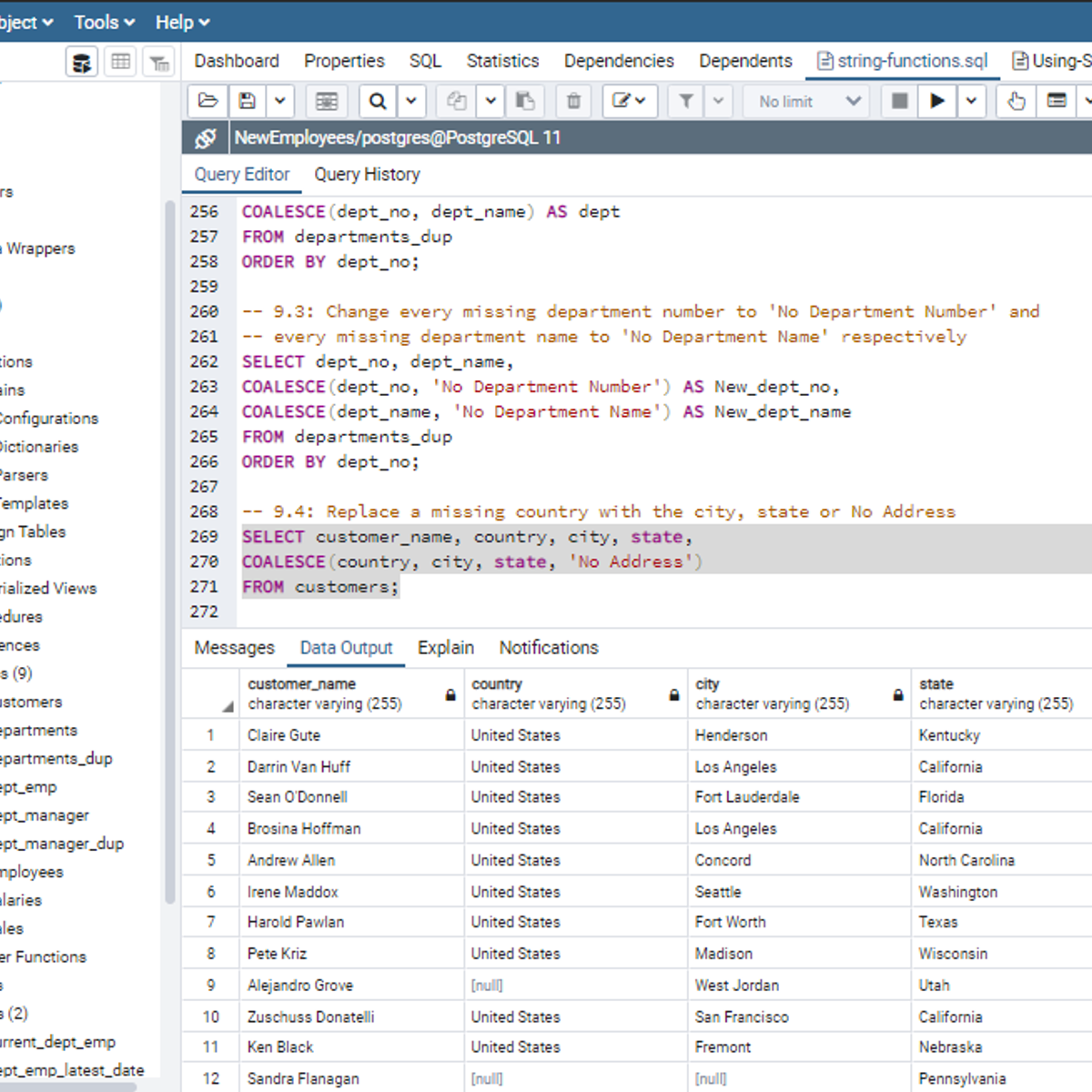

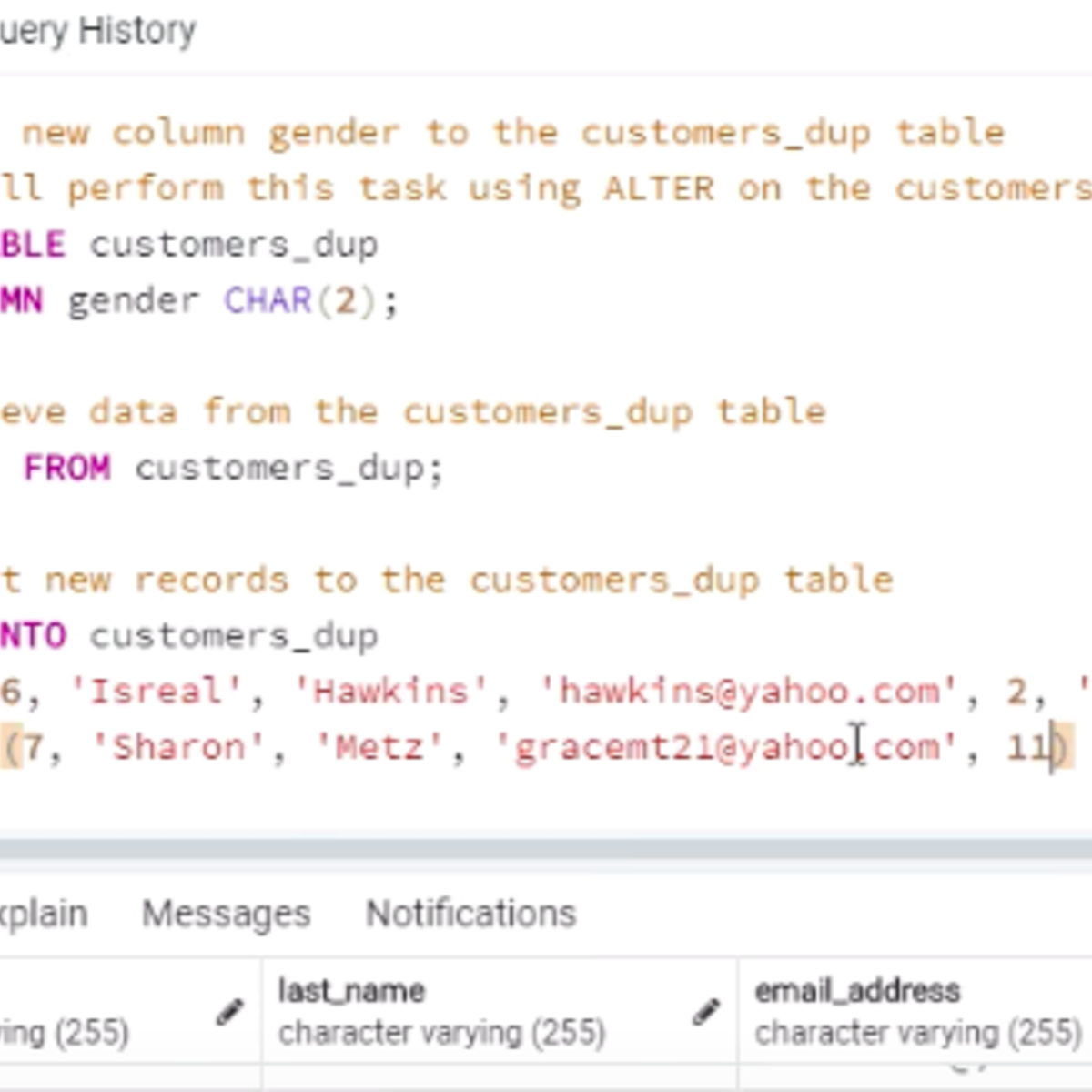

SQL (Structured Query Language) remains the standard language for interacting with relational databases. It provides a declarative way to define, query, and manipulate structured data stored in tables. Core SQL commands for manipulation include INSERT (to add new rows), UPDATE (to modify existing rows), and DELETE (to remove rows). The SELECT statement, while primarily for querying, often incorporates manipulation functions for data transformation directly within the query (e.g., calculating new values, formatting dates, concatenating strings).

SQL is powerful for filtering data (WHERE clause), sorting results (ORDER BY), grouping and aggregating data (GROUP BY with aggregate functions like COUNT, SUM, AVG), and combining data from multiple tables (JOIN operations). Its widespread adoption means SQL skills are essential for anyone working directly with relational databases, including data analysts, database administrators, and backend developers.
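The statements and clauses described above can be tried out with Python's built-in `sqlite3` module and an in-memory database. The `customers` and `orders` tables and their contents are hypothetical, and other database systems may differ slightly in syntax.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway in-memory database
cur = conn.cursor()
cur.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, city TEXT)")
cur.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")

# INSERT: add new rows.
cur.executemany(
    "INSERT INTO customers (id, name, city) VALUES (?, ?, ?)",
    [(1, "Ada", "London"), (2, "Grace", "New York"), (3, "Linus", "Helsinki")],
)
cur.executemany(
    "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
    [(1, 50.0), (1, 25.0), (2, 40.0), (3, 5.0)],
)

# UPDATE: modify existing rows. DELETE: remove rows.
cur.execute("UPDATE customers SET city = 'Cambridge' WHERE name = 'Ada'")
cur.execute("DELETE FROM orders WHERE amount < 10")

# SELECT with JOIN, WHERE, GROUP BY, aggregates, and ORDER BY.
cur.execute(
    """
    SELECT c.name, COUNT(o.id) AS n_orders, SUM(o.amount) AS total
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    WHERE o.amount >= 10
    GROUP BY c.name
    ORDER BY total DESC
    """
)
summary = cur.fetchall()
conn.close()
```

Because the join is an inner join, a customer whose only order was deleted no longer appears in `summary`.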

While different database systems (like MySQL, PostgreSQL, SQL Server, Oracle) might have minor variations in syntax or additional proprietary functions, the core SQL principles are largely universal, making it a highly transferable skill.

Python and R Libraries for Data Wrangling

For more complex data manipulation tasks, especially involving diverse data formats or requiring integration with statistical analysis and machine learning, programming languages like Python and R are preferred. Both offer rich ecosystems of libraries specifically designed for data wrangling.

In Python, the Pandas library is the de facto standard. It introduces DataFrame and Series objects, providing intuitive and high-performance data structures and tools for reading various file formats (CSV, Excel, JSON, SQL databases), cleaning data, handling missing values, merging and joining datasets, reshaping data (pivoting, melting), time series manipulation, and much more. NumPy is another fundamental library, providing efficient array operations often used in conjunction with Pandas.
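A brief sketch of several of these Pandas operations follows: filling missing values, merging, pivoting, and melting. It assumes `pandas` is installed, and the `sales` and `products` tables are invented for the example.

```python
import pandas as pd

# Hypothetical sales facts with one missing value, plus a product lookup table.
sales = pd.DataFrame(
    {
        "month": ["2024-01", "2024-01", "2024-02", "2024-02"],
        "product_id": [1, 2, 1, 2],
        "units": [10.0, None, 7.0, 3.0],
    }
)
products = pd.DataFrame({"product_id": [1, 2], "category": ["Toys", "Books"]})

# Handle missing values: treat a missing unit count as zero (an assumption).
sales["units"] = sales["units"].fillna(0)

# Merge: attach each product's category to its sales rows.
merged = sales.merge(products, on="product_id", how="left")

# Pivot long -> wide: one row per month, one column per category.
wide = merged.pivot(index="month", columns="category", values="units")

# Melt wide -> long again.
long_again = wide.reset_index().melt(
    id_vars="month", var_name="category", value_name="units"
)
```

Whether zero is a sensible fill value depends on why the value is missing, so that line in particular should be adapted to the dataset at hand.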

In R, the "Tidyverse" collection of packages, particularly dplyr and tidyr, provides a cohesive framework for data manipulation based on the concept of "tidy data". The dplyr package offers verbs like filter(), select(), mutate(), arrange(), and summarise() for common manipulation tasks and is known for its expressive, readable syntax. The tidyr package focuses on reshaping data layouts. Other R packages handle specific needs like date/time manipulation (lubridate) or string processing (stringr).

Leveraging Spreadsheets for Data Tasks

Spreadsheet software like Microsoft Excel or Google Sheets remains a widely accessible and often underestimated tool for basic to intermediate data manipulation. They offer a visual, interactive environment suitable for smaller datasets. Common manipulation tasks achievable in spreadsheets include sorting data based on one or more columns, filtering data to show only rows meeting specific criteria, finding and replacing values, removing duplicates, and splitting text into columns.

Spreadsheets provide a vast array of built-in functions for calculations and transformations. These range from simple arithmetic and statistical functions (SUM, AVERAGE, COUNT) to more complex lookup functions (VLOOKUP, INDEX/MATCH), conditional logic (IF, COUNTIF, SUMIF), text manipulation functions (LEFT, RIGHT, MID, CONCATENATE), and date/time functions. Pivot Tables are a particularly powerful feature for interactively summarizing and aggregating large amounts of data without writing formulas.

While spreadsheets have limitations regarding dataset size, performance, reproducibility (tracking changes can be difficult), and handling complex transformations, they are excellent for quick exploratory analysis, simple cleaning tasks, and situations where programming expertise is not available or necessary. Many data professionals use spreadsheets for initial data inspection or final report formatting, even if the core manipulation happens elsewhere.

ETL Tools and Workflow Automation

Extract, Transform, Load (ETL) tools are specialized software designed to automate the process of moving and reshaping data between different systems, often from operational databases into data warehouses or data lakes. They provide graphical interfaces for building data pipelines, allowing users to define data sources (Extract), specify a series of transformations (Transform), and define the destination (Load) without extensive coding.

ETL tools typically offer a wide range of pre-built connectors for various databases, file formats, and APIs, simplifying the extraction process. They provide numerous transformation components for tasks like data cleaning, filtering, joining, aggregation, and applying business rules. These tools are designed for handling large volumes of data efficiently and often include features for scheduling pipeline runs, monitoring execution, and handling errors.
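Although ETL tools are typically graphical, the three stages can be sketched in code to show what such a pipeline does. In this example the CSV text, the `orders` table, and the rule of skipping rows without an amount are all assumptions made for illustration.

```python
import csv
import io
import sqlite3

# Hypothetical source extract with untidy regions and one missing amount.
SOURCE_CSV = """order_id,region,amount
1, north ,10.50
2,South,
3,north,4.25
"""


def extract(text):
    """Extract: read CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: tidy regions, convert types, skip rows with no amount."""
    out = []
    for row in rows:
        if not row["amount"].strip():
            continue  # business rule assumed for this sketch
        out.append(
            (int(row["order_id"]), row["region"].strip().lower(), float(row["amount"]))
        )
    return out


def load(rows, conn):
    """Load: write the transformed rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER, region TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(SOURCE_CSV)), conn)
totals = conn.execute(
    "SELECT region, SUM(amount) FROM orders GROUP BY region"
).fetchall()
```

Keeping each stage in its own function mirrors how ETL tools separate connectors, transformation components, and destinations, and makes each stage testable on its own.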

Workflow automation tools, which may overlap with ETL tools or complement them, help orchestrate complex sequences of tasks that might involve data manipulation alongside other processes (like running scripts, sending notifications, or triggering other applications). Tools like Apache Airflow or platforms like UiPath (for Robotic Process Automation, which can include data manipulation steps) allow users to define dependencies between tasks, schedule workflows, and manage complex data processing routines systematically.

Ethical Considerations in Data Manipulation

While data manipulation is a technical process, it carries significant ethical responsibilities. Decisions made during data cleaning and transformation can have real-world consequences, potentially leading to biased outcomes or privacy violations if not handled carefully.

The Risk of Introducing Bias

Data manipulation, particularly during cleaning and preprocessing, can inadvertently introduce or amplify biases present in the original data or the process itself. For example, the method chosen for handling missing data can introduce bias. If data is missing non-randomly (e.g., certain demographic groups are less likely to respond to a survey question), simply deleting those records or imputing values based on the overall average can skew the dataset, leading to models or analyses that underrepresent or mischaracterize those groups.

Decisions about outlier removal can also introduce bias. Removing extreme values might seem statistically sound, but if those outliers represent valid, albeit rare, cases or belong disproportionately to a specific subgroup, their removal can distort the understanding of the full population distribution. Similarly, choices made during feature engineering or variable encoding can reflect the biases of the person performing the manipulation.

Awareness of potential biases is crucial. Practitioners should critically examine their data sources, understand why data might be missing or inconsistent, and choose manipulation techniques that minimize the introduction or amplification of unfairness. Transparency about the methods used is essential for others to assess potential biases.

Ensuring Data Provenance and Audit Trails

Data provenance refers to the history or origin of data, including all the transformations and manipulations it has undergone. Maintaining clear provenance is ethically important because it ensures transparency and accountability. Knowing how data was changed allows others to understand its limitations, reproduce the results, and identify potential sources of error or bias.

An audit trail involves logging the specific steps taken during data manipulation. This includes recording which data sources were used, what cleaning rules were applied, how missing values were handled, which transformations were performed, and the versions of software or scripts used. Good data manipulation practices involve documenting these steps meticulously, often through commented code, workflow diagrams in ETL tools, or dedicated metadata management systems.
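One lightweight way to keep such a record is to wrap every manipulation step in a helper that logs what happened. The sketch below is an illustration, not a standard; the `apply_step` helper, the log fields, and the sample rows are all hypothetical.

```python
import hashlib
import json

audit_log = []


def fingerprint(rows):
    """Stable SHA-256 digest of the data, used to detect unrecorded changes."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def apply_step(rows, description, func):
    """Run one manipulation step and append a record of it to the audit log."""
    result = func(rows)
    audit_log.append(
        {
            "step": description,
            "rows_in": len(rows),
            "rows_out": len(result),
            "input_sha256": fingerprint(rows),
            "output_sha256": fingerprint(result),
        }
    )
    return result


def drop_missing_scores(rows):
    return [r for r in rows if r["score"] is not None]


def drop_duplicates(rows):
    # dict.fromkeys keeps the first occurrence of each row, in order.
    unique = dict.fromkeys(tuple(sorted(r.items())) for r in rows)
    return [dict(items) for items in unique]


data = [{"id": 1, "score": 10}, {"id": 2, "score": None}, {"id": 1, "score": 10}]
data = apply_step(data, "drop rows with missing score", drop_missing_scores)
data = apply_step(data, "remove exact duplicates", drop_duplicates)
```

Because each step's output digest should equal the next step's input digest, a mismatch in the log would indicate that the data was changed outside the recorded pipeline.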

Without proper provenance and audit trails, it becomes difficult to trust the results of data analysis or machine learning models. If errors are discovered later, tracing them back to their source is challenging without a clear record of the manipulation process. This lack of traceability undermines the reliability and ethical standing of data-driven conclusions.

Navigating Regulations and Compliance

Data manipulation activities are often subject to legal and regulatory frameworks designed to protect privacy and ensure data security. Regulations like the General Data Protection Regulation (GDPR) in the European Union and the Health Insurance Portability and Accountability Act (HIPAA) in the US healthcare sector impose strict rules on how personal data can be collected, processed, stored, and shared.

Data manipulation techniques must comply with these regulations. For instance, processes involving personal data often require anonymization or pseudonymization techniques to remove or obscure personally identifiable information (PII) before analysis or sharing. Consent management is also critical; data manipulation pipelines must respect individuals' consent choices regarding the use of their data.
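As a rough sketch of pseudonymization, a direct identifier can be replaced with a keyed hash so that records for the same person remain linkable without exposing the identifier. The key, field names, and records below are placeholders, and this technique alone should not be assumed to satisfy any particular regulation.

```python
import hashlib
import hmac

# Placeholder only: a real key would be generated randomly and stored securely.
SECRET_KEY = b"replace-with-a-securely-stored-key"


def pseudonymize(value, key=SECRET_KEY):
    """Replace an identifier with a keyed SHA-256 token (truncated for readability)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


# Hypothetical records containing a direct identifier.
visits = [
    {"email": "a@example.com", "visit": "2024-01-03"},
    {"email": "a@example.com", "visit": "2024-02-10"},
    {"email": "b@example.com", "visit": "2024-02-11"},
]

# The shared dataset keeps the token and drops the original identifier.
shared = [
    {"person_token": pseudonymize(v["email"]), "visit": v["visit"]} for v in visits
]
```

The same input always yields the same token, which preserves linkage across records. Anyone holding the key can recompute tokens from known identifiers, so pseudonymized data of this kind is generally still treated as personal data.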

Compliance requires careful design of data manipulation workflows, ensuring appropriate security measures are in place, access controls are enforced, and data retention policies are followed. Failure to comply can result in significant legal penalties, reputational damage, and loss of public trust. Data professionals must stay informed about relevant regulations in their industry and jurisdiction.

The Importance of Reproducibility

Reproducibility is a cornerstone of scientific integrity and ethical data practice. It means that others should be able to achieve the same results using the original data and the documented manipulation and analysis steps. Data manipulation plays a critical role in reproducibility; if the cleaning and transformation steps are not clearly documented or automated, reproducing the final dataset and subsequent analysis becomes difficult or even impossible.

Achieving reproducibility often involves using scripts (e.g., in Python or R) or documented workflows (in ETL tools) for all manipulation steps, rather than performing manual changes in spreadsheets without tracking. Version control systems (like Git) are essential for managing code changes and ensuring that the exact version used for a specific analysis can be retrieved.

When data manipulation is not reproducible, it hinders scientific validation, makes it difficult to debug errors, and erodes trust in the findings. Ethical data practice demands that manipulation processes be transparent and repeatable, allowing for scrutiny and verification by the broader community or regulatory bodies.

Formal Education Pathways

For those seeking a structured approach to learning data manipulation and related skills, formal education offers established pathways through universities and colleges.

Relevant Academic Programs

Several undergraduate majors provide a strong foundation for roles involving data manipulation. Computer Science programs offer essential programming skills, understanding of algorithms, and database fundamentals, which are directly applicable. Statistics degrees provide the mathematical and analytical grounding needed to understand data distributions, statistical transformations, and methods for handling missing data or outliers.

Information Systems or Management Information Systems (MIS) programs often bridge business and technology, covering database management, data warehousing, and business intelligence tools, all heavily reliant on data manipulation. Mathematics degrees also build strong analytical reasoning skills applicable to complex data problems. Increasingly, specialized undergraduate programs in Data Science or Data Analytics are emerging, offering curricula specifically focused on the entire data lifecycle, including manipulation.

Regardless of the specific major, coursework in databases (especially SQL), programming (Python or R), statistics, and potentially machine learning provides the core competencies needed for data manipulation tasks.

Consider exploring programs in these areas via OpenCourser's browse feature, particularly within Computer Science, Data Science, and Mathematics.

Advancing Knowledge Through Graduate Studies

Graduate programs (Master's or PhD) offer opportunities for deeper specialization and research involving advanced data manipulation techniques. Master's programs in Data Science, Business Analytics, Statistics, or Computer Science often include advanced courses on database systems, distributed computing (relevant for large-scale manipulation), machine learning pipelines (which heavily involve preprocessing), and specialized data types (like text or spatial data).

PhD programs in these fields allow students to conduct original research that may involve developing new algorithms for data cleaning, transformation, or integration, or applying sophisticated manipulation techniques to complex research problems in various domains (e.g., bioinformatics, econometrics, social sciences). Research often pushes the boundaries of handling challenging data characteristics like high dimensionality, sparsity, or streaming data.

Graduate studies provide not only advanced technical skills but also a deeper theoretical understanding of the underlying principles, enabling graduates to tackle more complex data challenges and potentially lead data teams or research initiatives.

Demonstrating Skills with Capstone Projects

Many academic programs, particularly at the undergraduate and master's levels, culminate in a capstone project. These projects provide an invaluable opportunity to apply learned concepts, including data manipulation, to a real-world or simulated problem. Students typically work with messy, complex datasets, requiring them to perform significant cleaning, transformation, and integration before analysis or model building.

A successful capstone project demonstrates practical proficiency in the entire data workflow. It showcases a student's ability to identify data quality issues, select appropriate manipulation techniques, implement them using relevant tools (SQL, Python, R, etc.), and document the process clearly. These projects often become key items in a graduate's portfolio when seeking employment.

Best practices for capstone projects involving data manipulation include starting with a clear research question or objective, thoroughly exploring the raw data to understand its challenges, systematically applying and documenting cleaning and transformation steps, and using version control to manage code and track changes.

Staying Current with Academic Research

The field of data management and analysis is constantly evolving, with new techniques and tools emerging from academic research. Staying connected with the academic community through conferences and journals helps practitioners and researchers remain aware of the latest developments in data manipulation.

Major conferences in database systems (e.g., SIGMOD, VLDB), data mining (e.g., KDD), and machine learning (e.g., NeurIPS, ICML) often feature papers presenting novel approaches to data cleaning, integration, transformation for specific algorithms, or handling new data types. Leading journals in these fields publish in-depth research articles.

While many practitioners have limited opportunity to attend academic conferences in person, following key researchers, reading influential papers, and paying attention to trends discussed in academic circles can provide insights into future directions and potentially more effective ways to handle data manipulation challenges.

Self-Directed Learning Strategies

Formal education isn't the only path. Many successful data professionals build their skills through self-directed learning, leveraging online resources, practical projects, and community engagement. This route requires discipline and initiative but offers flexibility.

Building Statistical Foundations

A solid understanding of basic statistical concepts is highly beneficial for effective data manipulation. Statistics helps you understand data distributions, identify potential outliers, choose appropriate methods for handling missing data, and grasp the purpose behind transformations like normalization or standardization. Knowing concepts like mean, median, standard deviation, probability distributions, and hypothesis testing provides context for many manipulation tasks.

Numerous online resources can help build this foundation. Online courses covering introductory statistics, probability, and statistical inference are widely available. Many focus specifically on statistics for data science, applying concepts directly to data analysis scenarios. Textbooks and free online materials from universities also offer comprehensive coverage.

Focus on understanding the concepts rather than just memorizing formulas. Apply what you learn by calculating descriptive statistics on datasets you encounter, helping to solidify your understanding and see the practical relevance of statistical principles in data manipulation.
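
A small exercise of this kind might look like the following sketch, which uses only Python's standard `statistics` module. The sample values are made up for illustration; the point is to see how one unusually large value affects the mean, the median, and the two rescaling methods mentioned above.

```python
import statistics

# Hypothetical sample: daily order counts with one unusually large value
values = [12, 15, 11, 14, 13, 95]

mean = statistics.mean(values)
median = statistics.median(values)
stdev = statistics.stdev(values)  # sample standard deviation

# Standardization (z-scores): subtract the mean, divide by the standard deviation
z_scores = [(v - mean) / stdev for v in values]

# Min-max normalization: rescale values into the range [0, 1]
lowest, highest = min(values), max(values)
normalized = [(v - lowest) / (highest - lowest) for v in values]

print(f"mean={mean:.2f} median={median} stdev={stdev:.2f}")
print([round(z, 2) for z in z_scores])
print([round(n, 2) for n in normalized])
```

Here the single large value pulls the mean well above the median, and after min-max normalization the remaining values are squeezed close to zero. Observations like these help explain why outliers are usually examined before a transformation is chosen.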

Practicing with Real-World Data

Theoretical knowledge is important, but practical experience is paramount in mastering data manipulation. Seek out publicly available datasets from sources like Kaggle, government open data portals (e.g., data.gov), UCI Machine Learning Repository, or specialized repositories relevant to your interests (e.g., healthcare, finance). These datasets often mimic the messiness of real-world data, providing excellent practice opportunities.

Challenge yourself to take a raw dataset and prepare it for a specific analysis or visualization goal. Practice common tasks: identify and handle missing values, detect and address inconsistencies, convert data types, merge data from multiple files, reshape data layouts, and create new features. Document your steps as you go, perhaps in a Jupyter Notebook or R Markdown file.
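
The practice tasks above can be sketched on a tiny example. The snippet below uses plain Python dictionaries so that each step is visible; in practice a library such as Pandas would usually do this work. All records, field names, and the alias table are hypothetical, and median imputation is just one possible choice for handling the missing value.

```python
from statistics import median

# Hypothetical raw records, as they might arrive from a CSV export
orders = [
    {"order_id": "1", "customer_id": "a1", "amount": "19.99", "country": "US"},
    {"order_id": "2", "customer_id": "b2", "amount": "", "country": "usa"},
    {"order_id": "3", "customer_id": "a1", "amount": "5.00", "country": " U.S. "},
]
customers = {"a1": {"name": "Alice"}, "b2": {"name": "Bob"}}

# Mapping of inconsistent labels to one canonical value (assumed for this example)
COUNTRY_ALIASES = {"us": "US", "usa": "US", "u.s.": "US"}

def parse_amount(text):
    """Convert a string to float, returning None for blanks."""
    text = text.strip()
    return float(text) if text else None

# 1. Convert data types and standardize inconsistent labels
for row in orders:
    row["order_id"] = int(row["order_id"])
    row["amount"] = parse_amount(row["amount"])
    key = row["country"].strip().lower()
    row["country"] = COUNTRY_ALIASES.get(key, row["country"].strip())

# 2. Impute missing amounts with the median of the observed ones
observed = [r["amount"] for r in orders if r["amount"] is not None]
fill_value = median(observed)
for row in orders:
    row["amount_was_missing"] = row["amount"] is None  # keep a flag for transparency
    if row["amount"] is None:
        row["amount"] = fill_value

# 3. Merge in customer names (a left join on customer_id)
for row in orders:
    row["customer_name"] = customers.get(row["customer_id"], {}).get("name")

# 4. Create a new feature
for row in orders:
    row["is_large_order"] = row["amount"] > 10

for row in orders:
    print(row)
```

Recording which values were imputed, as the `amount_was_missing` flag does, keeps the manipulation transparent to whoever analyzes the data later.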

Start with smaller, simpler datasets and gradually tackle more complex ones. Don't be afraid to experiment with different manipulation techniques and tools. The more hands-on practice you get, the more intuitive and efficient you will become.

Explore the vast library of online courses on OpenCourser to find guided projects and tutorials using various datasets.

Creating a Compelling Portfolio

For self-directed learners, a portfolio showcasing practical skills is crucial for demonstrating competence to potential employers. Document your data manipulation projects clearly, explaining the problem, the raw data's challenges, the steps you took to clean and transform it, and the tools you used. Include code snippets (e.g., via GitHub) and potentially visualizations of the data before and after manipulation.

Focus on projects that demonstrate a range of manipulation skills and tackle realistic data problems. Explain your reasoning for choosing specific techniques (e.g., why you imputed missing values in a certain way). If possible, connect the manipulation work to a meaningful outcome, like enabling a specific analysis or improving the performance of a simple model.

Your portfolio serves as tangible evidence of your abilities. Platforms like GitHub, personal blogs, or dedicated portfolio websites are excellent ways to present your work professionally. Ensure your code is clean, well-commented, and reflects good practices.

The OpenCourser Learner's Guide offers tips on building a portfolio and leveraging online learning for career advancement.

Learning from the Community

Engaging with the data community can significantly accelerate your learning. Online forums like Stack Overflow, Reddit communities (e.g., r/datascience, r/learnpython), and specialized communities for tools like R or specific Python libraries are invaluable resources for asking questions, finding solutions to common problems, and learning from others' experiences.

Participate in online challenges or competitions (like those on Kaggle) – even if you don't aim to win, working on shared problems and seeing how others approach data cleaning and manipulation is highly educational. Follow influential data professionals and educators on platforms like Twitter or LinkedIn to stay updated on new tools, techniques, and best practices.

Consider joining local data science or programming meetups (many now have virtual options) to network with peers, learn from presentations, and discuss challenges. Mentorship, whether formal or informal, can also provide guidance and support. Don't hesitate to share your own work (e.g., portfolio projects) and solicit feedback from the community.

Career Progression and Roles

Proficiency in data manipulation opens doors to various roles and offers pathways for career growth within the data field.

Starting Your Career in Data Manipulation

Entry-level roles often involve significant data manipulation tasks. Titles like Data Technician, Junior Data Analyst, or Business Intelligence Analyst frequently require individuals to gather data from various sources, perform cleaning and basic transformations (often using SQL or spreadsheets), and prepare data for reporting or analysis by more senior team members. These roles provide practical experience with real-world data challenges and foundational tools.

Essential skills for these positions typically include proficiency in SQL and spreadsheet software (like Excel), attention to detail, problem-solving abilities, and a basic understanding of data structures and types. Some roles might also require introductory knowledge of a programming language like Python or R. According to the U.S. Bureau of Labor Statistics, fields related to data analysis show strong growth prospects, making these entry-level positions a solid starting point.

Building a portfolio demonstrating practical SQL and spreadsheet skills, possibly supplemented by introductory online courses, can significantly enhance competitiveness for these roles. Emphasize projects where you successfully cleaned and prepared messy data.

Specializing and Growing Mid-Career

As professionals gain experience, they often specialize. A Data Analyst might deepen their expertise in a specific domain (e.g., marketing analytics, financial analysis) or toolset (e.g., becoming an expert in a particular BI platform or advanced Python/R techniques). They take on more complex analytical tasks, where sophisticated data manipulation is required to answer nuanced business questions.

Some may transition into Data Science roles, focusing more on predictive modeling and machine learning, which demands advanced data preprocessing and feature engineering skills. Others might move towards Data Engineering, designing and building scalable data pipelines, which requires expertise in ETL tools, cloud platforms, and distributed computing frameworks like Spark. Roles in Data Governance are another option at this stage; they focus on establishing policies and processes for data quality, security, and compliance, and often draw on data manipulation knowledge to implement data quality checks.

Mid-career growth involves not only deepening technical skills but also developing stronger communication, project management, and domain expertise. Certifications in specific tools (like cloud platforms or ETL software) or domains can be beneficial, complementing practical experience.

Leading Data Governance and Strategy

Senior roles often involve less hands-on manipulation but require a deep understanding of its principles to guide strategy and governance. Data Architects design the overall structure of an organization's data assets, making decisions about database technologies, data models, and integration strategies, all influenced by manipulation requirements. Data Governance Managers establish standards for data quality, metadata management, security, and compliance, overseeing the processes that ensure data is handled responsibly – including setting rules for cleaning and transformation.

Chief Data Officers (CDOs) or Heads of Analytics set the overall data strategy for the organization, aligning data initiatives with business goals. Their understanding of data manipulation challenges helps them advocate for necessary resources, tools, and talent. These leadership roles require strong technical foundations combined with strategic thinking, leadership skills, and the ability to communicate the value of data across the organization.

Progression to these roles typically requires extensive experience across various data functions, a proven track record of delivering data projects, and strong leadership capabilities.

Exploring Freelance and Consulting Paths

Strong data manipulation skills are highly valuable in the freelance and consulting market. Many businesses, particularly small and medium-sized ones, need help cleaning, integrating, and preparing their data for analysis but may not have the resources for a full-time data professional. Freelancers can offer services ranging from specific data cleaning projects to building automated ETL pipelines or preparing datasets for BI tools.

Consultants often work on larger strategic projects, advising companies on data architecture, choosing appropriate tools, establishing data governance frameworks, or solving complex data integration challenges. Success in these roles requires not only technical expertise in data manipulation tools and techniques but also strong client communication, project management skills, and the ability to quickly understand diverse business contexts.

Building a strong portfolio, networking effectively, and potentially specializing in a niche industry or technology can help establish a successful freelance or consulting career focused on data manipulation and related services.

Emerging Trends and Challenges

The field of data manipulation is continuously evolving, driven by technological advancements and the ever-increasing volume and complexity of data.

The Rise of Automated Data Wrangling

A significant trend is the development of tools aiming to automate aspects of data wrangling. These tools leverage AI and machine learning techniques to automatically detect data types, identify potential errors or inconsistencies, suggest cleaning transformations, and even generate code (e.g., Python/Pandas) to perform these operations. The goal is to reduce the manual effort and time spent on routine manipulation tasks.

While promising, these automated tools are not yet a complete replacement for human expertise. They often work best as assistants, suggesting potential actions that still require human review and validation. Complex data issues or transformations requiring deep domain knowledge often still necessitate manual intervention. However, these tools are expected to become more sophisticated, potentially shifting the focus of data professionals towards more complex challenges and oversight rather than low-level cleaning.
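
To make the idea of automatic type detection concrete, here is a deliberately simplified heuristic in Python. It is an illustrative sketch only and does not represent how any particular wrangling tool works; the `infer_type` function, the single accepted date format, and the sample columns are all assumptions.

```python
from datetime import datetime

def infer_type(values):
    """Guess a column type from string values; blank entries are ignored."""
    non_blank = [v.strip() for v in values if v.strip()]
    if not non_blank:
        return "empty"

    def all_parse(parser):
        # True only if every non-blank value is accepted by the parser
        for v in non_blank:
            try:
                parser(v)
            except ValueError:
                return False
        return True

    if all_parse(int):
        return "integer"
    if all_parse(float):
        return "float"
    if all_parse(lambda v: datetime.strptime(v, "%Y-%m-%d")):
        return "date"
    return "text"

columns = {
    "age": ["34", "27", "", "41"],
    "price": ["9.99", "12", "7.5"],
    "signup": ["2023-01-15", "2023-02-30"],  # second value is not a real calendar date
    "city": ["Lyon", "Osaka"],
}

for name, values in columns.items():
    print(name, "->", infer_type(values))
```

The `signup` column falls back to `text` because one entry is not a valid date. A tool can flag that kind of result, but deciding whether the value is a typo, a different format, or a genuine error still calls for human review.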

Handling Real-Time Data Streams

Increasingly, data is generated continuously in real-time streams – from IoT devices, website interactions, financial markets, or social media feeds. Manipulating streaming data presents unique challenges compared to batch processing of static datasets. Transformations and cleaning must often happen on the fly, with limited time and computational resources per data point.

Several technologies target streaming workloads: Apache Kafka is commonly used to transport and store event streams, while Apache Flink and Spark Streaming are designed for processing them. Data manipulation in this context involves techniques for windowing (analyzing data over specific time intervals), stateful processing (maintaining information across multiple data points), and handling out-of-order or delayed data. Ensuring data quality and consistency in a high-velocity streaming environment requires robust error handling and monitoring.
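
The windowing, state, and late-data ideas above can be illustrated without any streaming framework. The following Python sketch sums values per fixed-size (tumbling) window based on event time and discards records that arrive too far behind the newest event seen. The window size, the lateness threshold, and the sample stream are assumptions for illustration, and production systems handle these concerns with considerably more care.

```python
from collections import defaultdict

WINDOW_SECONDS = 60    # tumbling window size (assumed)
ALLOWED_LATENESS = 30  # how far behind the newest event a record may arrive (assumed)

def window_start(timestamp):
    """Map an event timestamp to the start of its tumbling window."""
    return timestamp - (timestamp % WINDOW_SECONDS)

def process(events):
    """Sum values per window; set aside events that arrive too late.

    `events` is an iterable of (event_time_seconds, value) in arrival order.
    """
    totals = defaultdict(float)  # state maintained across events
    max_seen = 0                 # highest event time observed so far
    dropped = []
    for event_time, value in events:
        max_seen = max(max_seen, event_time)
        watermark = max_seen - ALLOWED_LATENESS
        if event_time < watermark:
            dropped.append((event_time, value))  # too late to include
            continue
        totals[window_start(event_time)] += value
    return dict(totals), dropped

# Arrival order differs from event-time order
stream = [(5, 1.0), (62, 2.0), (50, 3.0), (130, 4.0), (70, 5.0)]
totals, dropped = process(stream)
print(totals)   # {0: 4.0, 60: 2.0, 120: 4.0}
print(dropped)  # [(70, 5.0)]
```

The event at time 50 arrives after the one at time 62 but is still within the allowed lateness, so it is counted in its original window. The event at time 70 arrives after time 130 has been seen and is set aside instead.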

The demand for skills in manipulating real-time data is growing as more businesses seek immediate insights and automated responses based on live data feeds.

Integrating Data Across Platforms

Modern organizations often use a multitude of software applications, databases, and cloud services, leading to data being siloed across different platforms. Integrating this disparate data effectively remains a major challenge. Data manipulation is central to bridging these silos, requiring techniques to handle different data formats, resolve schema inconsistencies, and manage data movement between on-premises systems and various cloud environments (e.g., AWS, Azure, GCP).

The rise of data lakes and lakehouses aims to provide unified repositories, but the complexity of ingesting, cleaning, and transforming data from diverse sources into these platforms persists. Cross-platform data integration requires expertise in APIs, various database technologies (SQL and NoSQL), data serialization formats (JSON, Avro, Parquet), and potentially cloud-specific data integration services.
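
Resolving schema inconsistencies often starts with an explicit mapping from each source's field names and formats to one shared target schema. The Python sketch below shows the idea for two hypothetical sources, `crm` and `shop`. The field names, date formats, and sample JSON payloads are invented for illustration.

```python
import json
from datetime import datetime

# Per-source mapping from source field names to a shared target schema (assumed)
FIELD_MAPS = {
    "crm": {"CustomerID": "customer_id", "FullName": "name", "SignupDate": "signup_date"},
    "shop": {"cust_id": "customer_id", "name": "name", "created": "signup_date"},
}
# Each source writes dates differently (assumed)
DATE_FORMATS = {"crm": "%Y-%m-%d", "shop": "%d/%m/%Y"}

def harmonize(source, record):
    """Rename fields, then coerce types so every source yields the same shape."""
    out = {target: record.get(src) for src, target in FIELD_MAPS[source].items()}
    out["customer_id"] = int(out["customer_id"])
    parsed = datetime.strptime(out["signup_date"], DATE_FORMATS[source])
    out["signup_date"] = parsed.date().isoformat()
    out["source"] = source  # keep track of where the record came from
    return out

crm_record = json.loads(
    '{"CustomerID": "17", "FullName": "Alice Example", "SignupDate": "2024-03-01"}'
)
shop_record = json.loads(
    '{"cust_id": 42, "name": "Bob Example", "created": "01/04/2024"}'
)

unified = [harmonize("crm", crm_record), harmonize("shop", shop_record)]
print(json.dumps(unified, indent=2))
```

Keeping the mappings in data structures rather than scattered through the code makes it easier to add a new source or audit how a field was derived.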

Ensuring data consistency, security, and governance across these distributed environments adds further layers of complexity to the manipulation process.

Evolving Skill Requirements

As tools and technologies evolve, the required skillset for data manipulation also changes. While foundational skills in SQL and spreadsheets remain valuable, proficiency in programming languages like Python or R, along with key libraries (Pandas, dplyr), is becoming increasingly essential for tackling complex tasks and larger datasets. Familiarity with cloud platforms and their data services is also growing in importance.

Beyond technical skills, the ability to understand business context, think critically about data quality, communicate findings effectively, and collaborate with domain experts is crucial. As automation handles more routine tasks, the value shifts towards strategic thinking, problem-solving complex data issues, and ensuring ethical and responsible data handling.

Lifelong learning is key. Data professionals need to continuously update their skills, experimenting with new tools and techniques to remain effective in a rapidly changing landscape. Platforms like OpenCourser provide resources for staying current through access to a wide range of online courses.

Frequently Asked Questions

Here are answers to some common questions about pursuing skills and careers related to data manipulation.

What are the essential skills for an entry-level data manipulation role?

Foundational skills typically include strong proficiency in SQL for querying and modifying data in relational databases, and advanced capabilities in spreadsheet software like Microsoft Excel (including functions, pivot tables, and basic cleaning techniques). Attention to detail is critical for identifying inconsistencies. Basic analytical thinking and problem-solving skills are needed to understand data issues and apply appropriate solutions. Familiarity with core data concepts (data types, structures) is also expected.

How valuable are certifications compared to practical experience?

Practical experience, often demonstrated through a portfolio of projects working with real (or realistic) messy data, is generally valued more highly by employers than certifications alone. However, certifications from reputable providers (e.g., cloud platforms like AWS/Azure/GCP, software vendors like SAS, or recognized training institutions) can validate specific tool knowledge and demonstrate commitment to learning. They can be particularly helpful for career changers or those targeting roles requiring expertise in a specific technology. Ultimately, a combination of demonstrated practical skills and relevant certifications often presents the strongest profile.

Should I specialize in a particular industry?

While core data manipulation skills are transferable across industries, specializing can be advantageous. Different industries have unique data types, challenges, regulations, and analytical needs (e.g., healthcare data privacy under HIPAA, financial market time-series data, retail customer transaction patterns). Developing domain expertise alongside technical skills allows you to understand the context better, perform more relevant manipulations, and communicate more effectively with stakeholders. Specialization can make you a more valuable candidate within that specific sector, though it might slightly narrow the range of initial opportunities compared to a generalist approach.

Are remote work opportunities common?

Yes, roles involving significant data manipulation are often well-suited for remote work. The tasks primarily involve working with digital data and tools, requiring less physical presence than many other professions. Many companies, particularly in the tech sector, have embraced remote or hybrid work models for data analysts, scientists, and engineers. The availability of remote positions depends on company policy, team structure, and the specific nature of the role (e.g., roles requiring access to highly sensitive on-premises data might be less likely to be fully remote). Searching job boards reveals numerous remote opportunities in data-related fields.

Will automation make data manipulation skills obsolete?

While automation tools are improving and will likely handle more routine cleaning and transformation tasks in the future, they are unlikely to make data manipulation skills obsolete entirely. Human oversight, critical thinking, and domain expertise remain crucial for validating automated suggestions, handling complex or ambiguous data issues, ensuring ethical considerations are met, and designing appropriate data strategies. The focus may shift from manual execution of simple tasks to overseeing automated processes, solving more complex problems, and ensuring the quality and integrity of the entire data pipeline. Core skills like SQL, programming logic, and understanding data structures will likely remain relevant.

How can I transition into this field from an unrelated background?

Transitioning requires demonstrating relevant skills, even without direct prior job experience. Start by building foundational knowledge through online courses focusing on SQL, Excel, and perhaps an introduction to Python/R with Pandas/dplyr. Practice extensively with public datasets, focusing on cleaning and transforming data. Build a portfolio showcasing these projects clearly. Highlight any quantitative or analytical aspects of your previous roles on your resume. Network with people in the field through online communities or local meetups. Consider entry-level data roles (like Data Technician or Junior Analyst) as a stepping stone. Be prepared to explain how your skills from your previous field (e.g., problem-solving, attention to detail) are transferable.

Embarking on a path involving data manipulation requires dedication and continuous learning, but it offers intellectually stimulating challenges and rewarding career opportunities. By building a solid foundation, practicing consistently, and staying adaptable, individuals can successfully navigate the dynamic world of data.