Statistical Modeling: A Comprehensive Guide

Statistical modeling is a powerful and essential discipline that involves using mathematical frameworks and statistical assumptions to understand data, identify relationships between variables, and make predictions about real-world phenomena. At its core, it's about creating simplified representations of complex processes to extract meaningful insights. This field allows analysts and scientists to move beyond raw observations, enabling them to test hypotheses, quantify uncertainty, and inform decision-making across a vast array of domains. Whether it's forecasting economic trends, evaluating the effectiveness of a new medical treatment, or personalizing customer experiences, statistical modeling provides the tools to navigate and interpret the data-rich world around us.

Working with statistical models can be an engaging and intellectually stimulating endeavor. It offers the thrill of discovery, allowing practitioners to uncover hidden patterns and relationships within data that might not be immediately obvious. Furthermore, the ability to build models that can predict future outcomes with a degree of certainty is a powerful asset in any field, offering a tangible way to impact strategies and results. The process is often iterative and creative, involving not just mathematical rigor but also critical thinking and problem-solving skills to choose the right approach for the data and the question at hand. For those who enjoy a blend of analytical thinking, mathematical application, and real-world problem-solving, statistical modeling offers a compelling path.

Introduction to Statistical Modeling

This section aims to lay a solid groundwork for understanding what statistical modeling is, why it's important, and the basic ideas that underpin it. We'll explore its purpose, its diverse applications, and how it empowers data-driven decisions. Finally, we'll touch upon some fundamental terms that will be useful as you delve deeper into this fascinating field.

Defining Statistical Modeling and Its Purpose

Statistical modeling is the process of developing a mathematical description of a real-world process or phenomenon based on data. It's a formal way to represent the relationships between different variables, often distinguishing between those that are random (unpredictable) and those that are non-random or deterministic. The primary purpose of statistical modeling is to analyze and interpret complex datasets, allowing us to understand underlying patterns, make inferences about larger populations from samples, predict future outcomes, and test specific hypotheses about how things work.

Think of a statistical model as a simplified, mathematical approximation of reality. Because real-world systems are often incredibly complex, models help us focus on the most important factors and their interactions. For example, a model might try to explain how a student's study hours, previous grades, and attendance affect their final exam score. The "purpose" here is to quantify these relationships and perhaps predict future scores or identify students at risk.

Ultimately, statistical models serve as a bridge between raw data and actionable insights. They provide a structured framework for learning from data and for using that knowledge to make more informed and objective decisions in various contexts, from scientific research to business strategy.

Where is Statistical Modeling Used?

Statistical modeling is not confined to a single industry or academic discipline; its applications are widespread and incredibly diverse, reflecting its fundamental utility in understanding data. In business and finance, statistical models are the backbone of risk assessment, helping banks determine creditworthiness or insurance companies set premiums. They are used for market trend analysis, forecasting sales, and optimizing investment strategies. For instance, retailers leverage statistical models to predict future demand by examining historical purchasing patterns, seasonality, and other influencing factors, which aids in inventory management and targeted marketing.

The healthcare sector relies heavily on statistical modeling for everything from clinical trials, where models assess the efficacy of new drugs, to epidemiology, where they track and predict disease outbreaks. Patient outcomes can be predicted, treatment effectiveness evaluated, and healthcare resources managed more efficiently using these analytical tools. In engineering and manufacturing, statistical models are crucial for quality control, ensuring products meet specific standards, and for reliability testing, predicting the lifespan of a product or system.

Beyond these, statistical modeling is essential in the social sciences for analyzing survey data and understanding societal trends, in environmental science for modeling climate change and ecological systems, and even in sports for analyzing player performance and game strategies. Government agencies use models for economic planning, resource allocation, and policy-making based on census and public health data. The pervasive nature of data in the modern world means that statistical modeling is an increasingly vital tool across nearly every field imaginable.

The Role of Statistical Modeling in Data-Driven Decisions

In an era where data is generated at an unprecedented rate, the ability to make sense of this information is paramount. Statistical modeling plays a critical role in transforming raw data into the kind of actionable insights that fuel data-driven decision-making. Essentially, models act as interpreters, translating complex and often noisy data into a clearer understanding of underlying trends, relationships, and potential future outcomes.

Data-driven decision-making means relying on evidence and analysis rather than solely on intuition or anecdotal experience. Statistical models provide the rigorous, mathematical framework needed to support this approach. For example, a marketing team might use a statistical model to understand which advertising channels yield the highest return on investment, allowing them to allocate their budget more effectively. A city planner might use models to predict population growth and its impact on infrastructure, informing decisions about future development. These are just a few examples of how statistical analysis helps organizations make strategic choices.

Moreover, statistical models help quantify uncertainty. No prediction about the future is ever perfect, but models can provide a measure of confidence or a range of likely outcomes. This allows decision-makers to understand the potential risks and rewards associated with different choices. By providing a structured way to evaluate evidence and predict consequences, statistical modeling empowers individuals and organizations to make more objective, efficient, and ultimately, more successful decisions.

Fundamental Concepts and Terminology

To embark on a journey into statistical modeling, it's helpful to become familiar with some of its basic building blocks. These terms will appear frequently as you learn more about different types of models and techniques.

Imagine you're a detective trying to solve a case (the "phenomenon"). The clues you gather are your data. The people or items you're investigating form your population (the entire group of interest). Since it's often impossible to examine everyone or everything, you'll take a sample, which is a smaller, manageable subset of that population. Your goal is to use this sample to understand the bigger picture.

Within your data, you'll find variables – these are characteristics or attributes that can change or vary. For instance, if you're studying students, variables could be 'hours spent studying', 'exam score', or 'age'. Variables that you think influence other variables are often called independent variables (or predictors, features). The variable you're trying to predict or explain is the dependent variable (or outcome, response). A parameter is a numerical characteristic of the whole population (like the true average height of all adults in a country), which we often try to estimate using our sample data. A statistic is a numerical summary calculated from a sample (like the average height of adults in your sample).

A hypothesis is like a detective's hunch – an educated guess or a claim about the population that you want to test using your data (e.g., "Students who study more get higher exam scores"). Statistical modeling often involves building a mathematical equation (the model) that describes how these variables relate to each other. The process of figuring out the best numbers for this equation, based on your sample data, is called parameter estimation. Finally, statistical significance describes whether an observed effect in your sample is larger than would plausibly arise from random chance alone if no real effect existed in the population.

Understanding these core concepts provides a crucial foundation for grasping the more complex aspects of statistical modeling discussed in subsequent sections.

Core Principles of Statistical Models

Building upon the introductory concepts, this section delves into the fundamental principles that govern statistical models. We will explore the different categories of models, the critical assumptions that underpin their validity, how variables are handled and parameters are estimated, and the criteria used to select the most appropriate model for a given task. A solid grasp of these principles is essential for anyone looking to build, interpret, or critically evaluate statistical models.

Exploring Different Types of Statistical Models

Statistical models come in various flavors, each designed to address different types of questions and data. Broadly, they can be categorized into descriptive, inferential, and predictive models, although some models can serve multiple purposes.

Descriptive models aim to summarize and highlight key features or patterns within a dataset. They don't necessarily try to make predictions or infer beyond the observed data. Think of calculating the average income in a neighborhood, creating a chart showing the distribution of customer ages, or identifying common themes in survey responses. These models help in understanding the data at hand. An example is using clustering algorithms to group similar customers based on their purchasing behavior.

Inferential models go a step further. They use data from a sample to draw conclusions or make inferences about a larger population from which the sample was drawn. This often involves hypothesis testing – for example, testing whether a new drug is more effective than an existing one, or if there's a statistically significant difference in exam scores between two teaching methods. The goal is to generalize findings from the sample to the broader context. These models rely heavily on probability theory to quantify the uncertainty associated with these inferences.

Predictive models, as the name suggests, are focused on forecasting future outcomes or predicting unknown values. By learning patterns from historical data, these models develop a framework to make educated guesses about new, unseen data. Examples include forecasting stock prices, predicting which customers are likely to churn, or identifying patients at high risk for a particular disease. Many machine learning techniques fall under this category. A separate, cross-cutting distinction classifies models as parametric, nonparametric, or semiparametric, according to how their parameters are defined and estimated.

It's important to recognize that the lines between these types can blur. A regression model, for instance, can be used descriptively to understand relationships, inferentially to test hypotheses about those relationships in a population, and predictively to forecast future values. The specific application and the questions being asked will often determine how a model is categorized and used.

Model Assumptions and Ensuring Validity

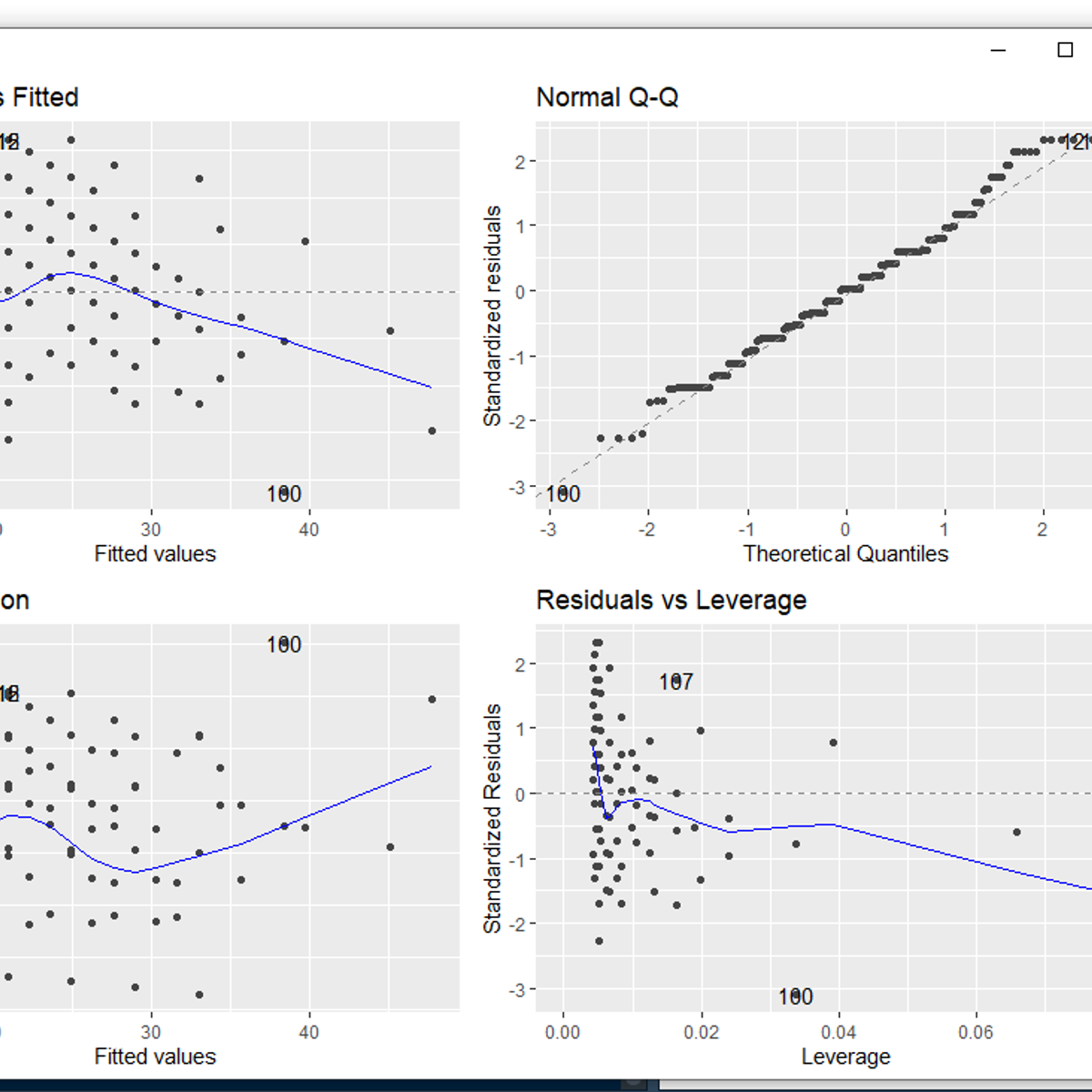

Every statistical model is built upon a set of assumptions about the data and the process that generated it. These assumptions are like the ground rules the model needs to operate correctly and produce reliable results. Common assumptions might include ideas about the distribution of the data (e.g., that errors are normally distributed), the independence of observations (e.g., that one data point doesn't influence another), or the nature of the relationship between variables (e.g., that the relationship is linear).

For a model's conclusions or predictions to be considered valid, its underlying assumptions must hold reasonably true for the data being analyzed. If these assumptions are violated significantly, the model's output can be misleading or entirely incorrect. For example, if a linear regression model is used when the true relationship between variables is strongly curved, the model will provide a poor fit and inaccurate predictions. Therefore, a crucial part of the modeling process involves checking these assumptions. This can be done through various diagnostic tests and graphical methods.

Ensuring model validity also extends to how well the model generalizes to new, unseen data. A model might perform exceptionally well on the data it was trained on but poorly on new data – a phenomenon known as overfitting. Techniques like cross-validation, which will be discussed later, are essential for assessing a model's predictive performance and guarding against overfitting. Ultimately, a valid model is one whose assumptions are met, provides a good fit to the data, and yields meaningful and generalizable results.

Understanding Variables and Parameter Estimation

Variables are the fundamental pieces of information that statistical models work with. They represent measurable characteristics that can differ across individuals or observations. Broadly, variables can be classified as either categorical (representing distinct groups or categories, like gender, color, or type of product) or continuous (representing numerical values that can fall anywhere along a scale, like height, temperature, or income).

In many models, especially in predictive and inferential contexts, we distinguish between dependent variables and independent variables. The dependent variable (often denoted as 'Y') is the outcome or phenomenon we are trying to understand or predict. Independent variables (often denoted as 'X's) are the factors we believe influence the dependent variable. For instance, in a model predicting crop yield (dependent variable), independent variables might include rainfall, temperature, and fertilizer amount.

Parameters are numerical values that define the specific form of the relationship between variables within a model. In a simple linear regression equation like Y = β₀ + β₁X + ε, β₀ (the intercept) and β₁ (the slope) are parameters, and ε is the random error term. These parameters are typically unknown for the entire population and must be estimated from the sample data. Parameter estimation is the process of using statistical methods to find the values of these parameters that best fit the observed data. Common estimation techniques include Ordinary Least Squares (OLS) for linear regression, which minimizes the sum of the squared differences between observed and predicted values, and Maximum Likelihood Estimation (MLE), which finds the parameter values that maximize the likelihood, that is, the probability (or probability density) of the observed data under the model. The quality of these estimates is crucial for the model's accuracy and interpretability.
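
As a minimal sketch of OLS for the simple linear regression equation above, the closed-form estimates of the intercept and slope can be computed directly. The function name `fit_simple_ols` and the hours/scores data are illustrative assumptions, not taken from any real dataset or library.

```python
def fit_simple_ols(x, y):
    """Estimate intercept (b0) and slope (b1) of y = b0 + b1*x by least squares."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Slope: sum of cross-deviations divided by sum of squared x-deviations.
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    b1 = sxy / sxx
    # Intercept: the fitted line passes through the point of means.
    b0 = mean_y - b1 * mean_x
    return b0, b1


# Hypothetical data: hours studied vs. exam score.
hours = [1, 2, 3, 4, 5]
scores = [52, 58, 61, 67, 72]

b0, b1 = fit_simple_ols(hours, scores)
# Residuals are the observed values minus the fitted values.
residuals = [yi - (b0 + b1 * xi) for xi, yi in zip(hours, scores)]
print(f"intercept={b0:.2f}, slope={b1:.2f}")
```

With an intercept in the model, the OLS residuals sum to zero (up to floating-point error), which is a quick sanity check on any implementation.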

Criteria for Selecting the Right Model

Choosing the most appropriate statistical model from a pool of potential candidates is a critical step in the analysis process. It's not always about finding the most complex model; often, a simpler model that adequately explains the data is preferable, a principle known as Occam's Razor or parsimony. Model selection involves balancing goodness-of-fit (how well the model describes the observed data) with model complexity (the number of parameters or variables included).

Several statistical criteria have been developed to help guide this selection process. Two of the most widely used are the Akaike Information Criterion (AIC) and the Bayesian Information Criterion (BIC). Both reward goodness-of-fit but penalize each additional parameter, thereby discouraging overfitting. BIC's penalty grows with the sample size, so on all but very small datasets it tends to favor simpler models than AIC does. When comparing multiple models fitted to the same data, the model with the lower AIC or BIC value is generally preferred. These criteria attempt to find a model that will perform well not just on the current data, but also on new, unseen data.
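
To make the two criteria concrete, the sketch below uses their standard definitions, AIC = 2k - 2 ln(L) and BIC = k ln(n) - 2 ln(L), where k is the number of estimated parameters, n the number of observations, and L the maximized likelihood. The two candidate models and their log-likelihood values are hypothetical numbers chosen only for illustration.

```python
import math


def aic(log_likelihood, k):
    """Akaike Information Criterion: 2k - 2*ln(L)."""
    return 2 * k - 2 * log_likelihood


def bic(log_likelihood, k, n):
    """Bayesian Information Criterion: k*ln(n) - 2*ln(L)."""
    return k * math.log(n) - 2 * log_likelihood


# Hypothetical results from fitting two models to the same n = 100 observations.
n = 100
candidates = {
    "simple": {"log_likelihood": -120.0, "k": 3},
    "complex": {"log_likelihood": -118.5, "k": 6},
}

scores = {
    name: {
        "aic": aic(m["log_likelihood"], m["k"]),
        "bic": bic(m["log_likelihood"], m["k"], n),
    }
    for name, m in candidates.items()
}

# Lower is better for both criteria.
best_by_aic = min(scores, key=lambda name: scores[name]["aic"])
best_by_bic = min(scores, key=lambda name: scores[name]["bic"])
print(scores, best_by_aic, best_by_bic)
```

In this made-up comparison the more complex model fits slightly better, but not by enough to offset its three extra parameters, so both criteria prefer the simpler one.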

Other factors also influence model selection. The theoretical underpinnings of the problem, the specific research question, the nature of the data (e.g., its distribution, the presence of outliers), and the interpretability of the model are all important considerations. Sometimes, a slightly less "optimal" model according to AIC/BIC might be chosen if it is much easier to understand and explain, or if it aligns better with existing domain knowledge. The process is often iterative, involving fitting several models, evaluating them based on these criteria and diagnostic checks, and then refining the choices.

Common Statistical Modeling Techniques

Having covered the core principles, we now turn to some of the specific tools in the statistical modeler's toolkit. This section will introduce several widely used statistical modeling techniques. We'll explore how regression analysis helps us understand relationships, how time series analysis allows us to forecast trends, the unique perspective Bayesian methods offer, and how statistical modeling principles are increasingly integrated with machine learning.

Unraveling Relationships with Regression Analysis

Regression analysis is a cornerstone of statistical modeling, used to examine and quantify the relationship between a dependent variable and one or more independent variables. It helps us understand how changes in the independent variables are associated with changes in the dependent variable, and it can also be used for prediction.

The most fundamental type is linear regression. Imagine you want to predict a house's price (dependent variable) based on its size (independent variable). Linear regression would try to fit a straight line through a scatter plot of house prices versus sizes. The equation of this line (e.g., Price = Intercept + Slope * Size) allows you to estimate the price for a new house given its size and understand how much the price tends to increase for each additional square foot. When multiple independent variables are used (e.g., size, number of bedrooms, age of the house), it's called multiple linear regression.

When the dependent variable is categorical rather than continuous (e.g., predicting whether a customer will click on an ad – 'yes' or 'no', or whether a loan applicant will default – 'yes' or 'no'), logistic regression is often used. Instead of predicting a numerical value directly, logistic regression predicts the probability of a particular outcome occurring. For example, it could estimate the probability that a patient with certain characteristics has a specific disease. These techniques are foundational for understanding associations and making predictions in many fields.
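
The way logistic regression turns inputs into a probability can be sketched as follows: a linear combination of the predictors is passed through the logistic (sigmoid) function, which maps any real number into the interval (0, 1). The coefficients below are hypothetical stand-ins for values that would normally be estimated from data, and the loan-default predictors are assumptions made for the example.

```python
import math


def predict_probability(intercept, coefficients, features):
    """Return P(outcome = 'yes') as the sigmoid of the linear predictor."""
    linear = intercept + sum(c * f for c, f in zip(coefficients, features))
    return 1.0 / (1.0 + math.exp(-linear))


# Hypothetical fitted model for loan default.
# Predictors: number of missed payments, years of credit history.
intercept = -3.0
coefficients = [0.9, -0.1]

applicant = [2, 5]  # 2 missed payments, 5 years of history
p_default = predict_probability(intercept, coefficients, applicant)

# Each coefficient is a change in log-odds; exponentiating gives an odds ratio.
odds_ratio_missed_payment = math.exp(coefficients[0])
print(f"P(default) = {p_default:.3f}")
```

A positive coefficient raises the predicted probability as its predictor increases, and a negative one lowers it, which is why coefficients are usually interpreted through odds ratios.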

Forecasting the Future with Time Series Analysis

Time series analysis deals with data points that are collected sequentially over time. Think of stock prices recorded daily, monthly sales figures, hourly temperature readings, or annual GDP growth. The defining characteristic is that the order of the data matters, as observations are often dependent on previous observations. The primary goal of time series analysis is often to understand the underlying patterns in the data and to forecast future values.

Several key components are often analyzed in time series data. Trend refers to the long-term direction of the series (e.g., a steady increase in sales over several years). Seasonality describes patterns that repeat at regular intervals (e.g., higher ice cream sales in the summer). Cyclical patterns are longer-term fluctuations that are not of a fixed period, often related to economic or business cycles. Finally, there's usually some random variation or noise.

Models like ARIMA (Autoregressive Integrated Moving Average) and exponential smoothing methods are commonly used to capture these components and make forecasts. For example, a company might use time series analysis to forecast next quarter's demand for its products, or an economist might use it to predict inflation rates. Understanding and modeling the temporal dependencies in data is crucial for accurate forecasting in many domains, from finance to meteorology.
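
As a small illustration of the exponential smoothing family, the sketch below implements its simplest member, simple exponential smoothing, which tracks only the level of a series and does not model trend or seasonality. The sales figures and the smoothing weight `alpha` are illustrative assumptions.

```python
def simple_exponential_smoothing(series, alpha):
    """Return the smoothed level after each observation.

    Each level is a weighted average of the newest observation and the
    previous level; the final level serves as the one-step-ahead forecast.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    levels = [series[0]]  # initialize the level with the first observation
    for value in series[1:]:
        levels.append(alpha * value + (1 - alpha) * levels[-1])
    return levels


# Hypothetical quarterly sales.
sales = [100, 110, 105, 120]
levels = simple_exponential_smoothing(sales, alpha=0.5)
forecast_next_quarter = levels[-1]
print(levels, forecast_next_quarter)
```

A larger `alpha` makes the forecast react faster to recent observations, while a smaller one smooths out more of the noise.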

Embracing Uncertainty with Bayesian Methods

Bayesian methods offer a distinct approach to statistical inference and modeling, differing fundamentally from the more traditional "frequentist" techniques. The core idea of Bayesian statistics is the updating of beliefs in light of new evidence. It starts with a prior probability distribution (or simply "prior"), which represents our initial beliefs about a parameter before observing any data. Then, as data is collected, this prior belief is combined with the likelihood of the data (how probable the observed data is, given certain parameter values) to produce a posterior probability distribution (or "posterior"). This posterior distribution reflects our updated beliefs about the parameter after considering the data.

This framework is particularly intuitive for many real-world problems. For example, a doctor might have an initial belief about the likelihood of a patient having a certain disease (prior). When new test results come in (data/likelihood), the doctor updates their belief about the diagnosis (posterior). Bayesian models inherently quantify uncertainty through these probability distributions for parameters, rather than just point estimates and confidence intervals as often seen in frequentist approaches.
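
The doctor example can be worked through numerically with Bayes' theorem. This is a sketch under stated assumptions: the 1% prior, the 95% sensitivity, and the 90% specificity are made-up numbers, and the second update assumes the two test results are independent given the patient's true status.

```python
def posterior_probability(prior, sensitivity, specificity):
    """P(disease | positive test) via Bayes' theorem."""
    true_positive = sensitivity * prior                # P(positive and disease)
    false_positive = (1 - specificity) * (1 - prior)   # P(positive and no disease)
    return true_positive / (true_positive + false_positive)


# Hypothetical numbers: 1% of patients have the disease (the prior).
prior = 0.01
posterior = posterior_probability(prior, sensitivity=0.95, specificity=0.90)

# A second positive result: yesterday's posterior becomes today's prior.
second_posterior = posterior_probability(posterior, sensitivity=0.95, specificity=0.90)
print(f"after one positive test:  {posterior:.3f}")
print(f"after two positive tests: {second_posterior:.3f}")
```

Even with a fairly accurate test, a single positive result leaves the probability of disease below 10% here because the disease is rare to begin with; the belief only becomes substantial as more evidence accumulates.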

Bayesian methods are used in a wide range of applications, including spam filtering (updating the probability that an email is spam based on its words), medical diagnosis, A/B testing, and complex hierarchical modeling. While computationally more intensive in the past, modern algorithms like Markov Chain Monte Carlo (MCMC) and increased computing power have made Bayesian techniques much more accessible and widely used.

For those interested in advanced experimental methods often complemented by Bayesian approaches:

[course] Statistical Inference and Modeling for High-throughput Experiments

The Convergence of Statistical Modeling and Machine Learning

While sometimes presented as distinct fields, statistical modeling and machine learning (ML) share a deep historical connection and significant overlap. Many machine learning algorithms are, at their core, sophisticated statistical models designed for prediction, pattern recognition, and learning from data. Statistical modeling provides the theoretical underpinnings for understanding why these algorithms work, how to evaluate them, and the assumptions they make.

Techniques like linear and logistic regression, which are staples of statistical modeling, are also considered fundamental machine learning algorithms for supervised learning tasks. Decision trees, another popular ML method, have roots in statistical classification techniques. More advanced ML models, such as support vector machines, random forests, and even neural networks, build upon statistical concepts like optimization, probability distributions, and variance reduction, although their mathematical complexity can sometimes obscure these foundations.

The key difference often lies in the primary goal: statistical modeling frequently emphasizes inference and understanding the relationships between variables, while machine learning often prioritizes predictive accuracy, sometimes at the cost of interpretability. However, this distinction is blurring. There's a growing movement towards "explainable AI" which seeks to make complex ML models more transparent, drawing on principles from statistical inference. As data becomes more abundant and complex, the synergy between robust statistical principles and powerful machine learning algorithms will continue to drive innovation in how we extract knowledge and make predictions from data.

The Crucial Steps of Data Preparation and Cleaning

Before any sophisticated statistical model can be built, the raw data must undergo a critical phase of preparation and cleaning. This stage is often the most time-consuming part of the analytical process but is absolutely essential for producing reliable and meaningful results. "Garbage in, garbage out" is a common adage in data work, highlighting that even the most advanced model will fail if built on poor-quality data. This section covers key aspects of data preparation, including handling missing values, dealing with outliers, creating new informative features, and standardizing data.

Addressing Missing Data: Strategies and Implications

Missing data is a common problem in real-world datasets. Values might be missing for various reasons: a respondent skipped a survey question, a sensor malfunctioned, or data was simply not recorded. How these missing values are handled can significantly impact the subsequent analysis and model performance.

Several strategies exist for dealing with missing data. One simple approach is **deletion**, where either entire records (listwise deletion) or specific variables with too many missing values are removed. However, this can lead to a loss of valuable information and potentially biased results if the missingness is not completely random. Another common set of techniques falls under **imputation**, which involves filling in the missing values with estimated ones. Simple imputation methods include replacing missing values with the mean, median (for numerical data), or mode (for categorical data) of the observed values for that variable. More sophisticated imputation methods use regression models or machine learning algorithms (like k-nearest neighbors) to predict the missing values based on other variables in the dataset.

The choice of strategy depends on the extent of missingness, the nature of the data, and the reasons why the data are missing (e.g., missing completely at random, missing at random, or missing not at random). Failing to appropriately address missing data can lead to biased parameter estimates, reduced statistical power, and ultimately, incorrect conclusions from your statistical model.
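
The deletion and simple imputation strategies described above can be sketched with Python's standard library. Here `None` marks a missing value, and the helper names `listwise_delete` and `impute`, along with the sample values, are assumptions made for the example.

```python
import statistics


def listwise_delete(rows):
    """Drop every record that has at least one missing (None) field."""
    return [row for row in rows if None not in row.values()]


def impute(values, strategy="mean"):
    """Fill missing entries with the mean, median, or mode of the observed ones."""
    observed = [v for v in values if v is not None]
    if strategy == "mean":
        fill = statistics.mean(observed)
    elif strategy == "median":
        fill = statistics.median(observed)
    elif strategy == "mode":
        fill = statistics.mode(observed)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [fill if v is None else v for v in values]


# Hypothetical survey data with gaps.
ages = [23, None, 31, 27, None, 95]
colors = ["red", None, "blue", "red"]
rows = [{"age": 23, "color": "red"}, {"age": None, "color": "blue"}]

ages_mean = impute(ages, "mean")        # pulled upward by the value 95
ages_median = impute(ages, "median")    # less affected by the extreme value
colors_mode = impute(colors, "mode")    # most frequent category
complete_rows = listwise_delete(rows)
print(ages_mean, ages_median, colors_mode, complete_rows)
```

The gap between the mean-filled and median-filled results shows why the median is often the safer default for skewed numerical variables.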

Identifying and Managing Outliers

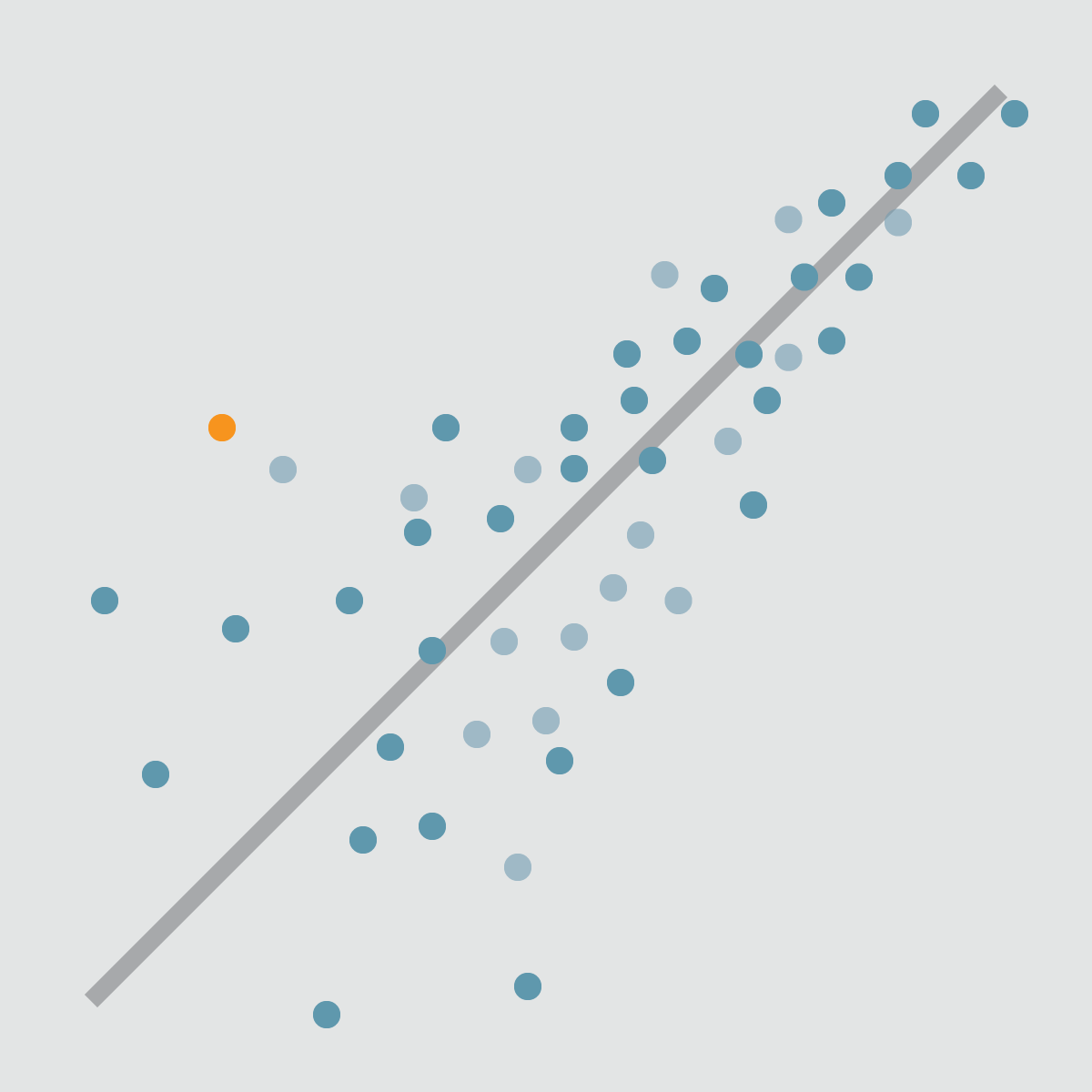

Outliers are data points that deviate markedly from other observations in a dataset. They can arise from various sources, including measurement errors, data entry mistakes, or genuinely unusual events. Identifying and appropriately managing outliers is crucial because they can disproportionately influence statistical analyses and model parameters, leading to skewed results and poor predictive performance.

Outlier detection can be performed using several methods. Visual techniques, such as box plots, scatter plots, and histograms, often make outliers apparent. Statistical methods include using z-scores (how many standard deviations a point is from the mean) or interquartile range (IQR) based rules. For example, data points falling below Q1 - 1.5*IQR or above Q3 + 1.5*IQR are often flagged as potential outliers in a box plot.
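
The IQR rule above can be sketched in a few lines. The measurements are hypothetical, and the quartiles use the "inclusive" method of `statistics.quantiles`; other quartile conventions can shift the fences slightly.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Flag points below Q1 - k*IQR or above Q3 + k*IQR."""
    q1, _median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower = q1 - k * iqr
    upper = q3 + k * iqr
    flagged = [v for v in values if v < lower or v > upper]
    return flagged, lower, upper


# Hypothetical measurements with one suspicious entry.
measurements = [10, 11, 12, 12, 13, 13, 14, 15, 102]
outliers, lower_fence, upper_fence = iqr_outliers(measurements)
print(outliers, lower_fence, upper_fence)
```

Flagged points are candidates for review rather than automatic deletion; whether 102 is a typo or a genuine extreme value is a judgment that depends on the context.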

Once identified, the approach to managing outliers depends on their cause and context. If an outlier is confirmed to be an error (e.g., a typo like an age of 200 years), it should be corrected if possible or removed. If the outlier represents a genuine but rare event, the decision is more complex. Options include removing the outlier (if it's believed to be unrepresentative or overly influential), transforming the data (e.g., using a log transformation to reduce the impact of extreme values), or using robust statistical methods that are less sensitive to outliers. Ignoring outliers or mishandling them can significantly distort model estimates and reduce the reliability of your findings.

Crafting Informative Features through Feature Engineering

Feature engineering is the process of creating new input variables (features) from existing raw data to improve the performance of statistical models and machine learning algorithms. It's often described as more of an art than a science, requiring domain knowledge, creativity, and an understanding of how models work. Well-crafted features can significantly enhance a model's ability to detect patterns and make accurate predictions.

There are many ways to engineer features. This can involve transforming existing variables, such as taking the logarithm of a skewed variable to make its distribution more symmetrical, or converting a continuous variable into categorical bins (e.g., grouping ages into 'young', 'middle-aged', 'senior'). It can also mean creating new features by combining existing ones – for example, calculating a debt-to-income ratio from separate debt and income variables, or creating interaction terms that capture the combined effect of two or more variables (e.g., the effect of a drug might depend on both dosage and patient age).

Other feature engineering techniques include extracting relevant information from text data (like word counts or sentiment scores), decomposing date/time variables into components (like hour of day, day of week, month), or using dimensionality reduction techniques like Principal Component Analysis (PCA) to create a smaller set of uncorrelated features from a larger set of correlated ones. Effective feature engineering is often a key differentiator between a mediocre model and a highly effective one, as it helps the model to better capture the underlying structure of the data.
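
Several of the techniques just described (a ratio feature, a log transformation, binning, and date/time decomposition) can be sketched on a single record. The field names, the age cut points, and the sample loan application are all hypothetical.

```python
import math
from datetime import datetime


def age_group(age):
    """Bin a continuous age into categories (cut points are illustrative)."""
    if age < 30:
        return "young"
    if age < 60:
        return "middle-aged"
    return "senior"


def engineer_features(record):
    """Derive new model inputs from the raw fields of one record."""
    applied_at = datetime.fromisoformat(record["applied_at"])
    return {
        # Combine two raw variables into one ratio.
        "debt_to_income": record["debt"] / record["income"],
        # Log transform to compress a right-skewed variable (income must be > 0).
        "log_income": math.log(record["income"]),
        # Convert a continuous variable into categorical bins.
        "age_group": age_group(record["age"]),
        # Decompose a timestamp into components.
        "hour": applied_at.hour,
        "day_of_week": applied_at.weekday(),  # Monday = 0
        "month": applied_at.month,
    }


# Hypothetical raw record for a loan application.
record = {"debt": 12000, "income": 48000, "age": 42,
          "applied_at": "2024-03-15T14:30:00"}
features = engineer_features(record)
print(features)
```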

Standardizing Your Data: Normalization and Transformation

Many statistical models and machine learning algorithms perform better or converge faster when the input numerical variables are on a similar scale. This is where data standardization in the broad sense comes into play: it covers rescaling techniques such as normalization and z-score standardization, as well as transformations that change the shape of a variable's distribution.

Normalization typically refers to rescaling numeric data to a fixed range, usually between 0 and 1. One common method is min-max scaling, where each value is transformed by subtracting the minimum value of the feature and dividing by the range (maximum minus minimum). Standardization (or z-score normalization) transforms data to have a mean of 0 and a standard deviation of 1. This is achieved by subtracting the mean of the feature from each value and then dividing by the standard deviation. Algorithms that use distance measures (like k-nearest neighbors or support vector machines) or gradient descent-based optimization (like many neural networks) are often sensitive to feature scales, so standardization can be particularly important for them.
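
Both rescaling methods can be sketched as follows. The height values are hypothetical, the z-score version uses the population standard deviation (`statistics.pstdev`), although the sample standard deviation is also common, and both functions assume the feature is not constant, since a constant feature would cause a division by zero.

```python
import statistics


def min_max_scale(values):
    """Rescale to [0, 1]: (x - min) / (max - min)."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def z_score_scale(values):
    """Rescale to mean 0 and standard deviation 1: (x - mean) / sd."""
    mean = statistics.mean(values)
    sd = statistics.pstdev(values)  # population standard deviation
    return [(v - mean) / sd for v in values]


# Hypothetical feature: heights in centimeters.
heights_cm = [150, 160, 170, 180, 190]
normalized = min_max_scale(heights_cm)
standardized = z_score_scale(heights_cm)
print(normalized)
print(standardized)
```

In a predictive workflow, the minimum, maximum, mean, and standard deviation are usually computed on the training data only and then reused to scale new data, so that no information leaks from the data used for evaluation.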

Data transformation involves applying a mathematical function to each data point to change its distribution. This is often done to make the data conform more closely to the assumptions of a statistical model, such as normality. For example, if a variable is highly skewed, applying a logarithm, square root, or Box-Cox transformation can often make its distribution more symmetric and bell-shaped. Choosing the right transformation or scaling technique depends on the specific algorithm being used and the characteristics of the data itself. These preprocessing steps are vital for optimizing model performance and ensuring that all features contribute appropriately to the model training process.
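As a rough illustration of how a logarithm can reduce skew, the sketch below compares the skewness of a made-up, strongly right-skewed sample before and after a base-10 log transform. The skewness helper and the data are assumptions for the example; real data will rarely become perfectly symmetric.

```python
import math
import statistics


def skewness(values):
    """Population skewness: the average cubed z-score."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return statistics.fmean([((v - mean) / sd) ** 3 for v in values])


# Hypothetical incomes spanning several orders of magnitude.
incomes = [1.0, 10.0, 100.0, 1000.0, 10000.0]
logged = [math.log10(v) for v in incomes]

raw_skew = skewness(incomes)   # clearly positive (long right tail)
log_skew = skewness(logged)    # approximately zero for this sample
```

A logarithm requires strictly positive values; for data containing zeros, a shifted log or a square root is a common alternative.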

Ensuring Model Robustness: Validation and Interpretation

Building a statistical model is only part of the journey. Once a model is constructed, it's crucial to assess its performance, understand its limitations, and interpret its findings in a meaningful way. This section focuses on the vital processes of model validation, the inherent trade-off between bias and variance, the balance between model complexity and interpretability, and the art of communicating model results effectively to various audiences.

Testing Your Model: Cross-Validation Techniques

A critical aspect of building reliable statistical models is ensuring they generalize well to new, unseen data. A model that performs perfectly on the data it was trained on but fails on new data is said to be "overfit" and isn't very useful in practice. Cross-validation is a set of techniques used to evaluate how the results of a statistical analysis will generalize to an independent dataset.

The most common approach involves splitting the available data into two or three sets: a training set, a validation set (optional, but good practice for tuning model hyperparameters), and a test set. The model is built using the training set. If a validation set is used, it helps in selecting the best model or tuning its parameters without "peeking" at the final test set. Finally, the test set, which the model has never seen before, is used to provide an unbiased estimate of its performance on new data.
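A three-way split of this kind might look like the sketch below. The function name, the 60/20/20 proportions, and the fixed seed are assumptions chosen for illustration; the right proportions depend on how much data is available.

```python
import random


def train_val_test_split(rows, val_frac=0.2, test_frac=0.2, seed=0):
    """Shuffle rows and split them into train, validation, and test lists."""
    rng = random.Random(seed)  # fixed seed so the split is repeatable
    shuffled = list(rows)
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_frac)
    n_val = int(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


train, val, test = train_val_test_split(range(100))
```

A purely random split assumes the rows are independent. Time series or grouped data (for example, several rows per patient) usually need a split that respects that structure.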

A popular and robust cross-validation method is k-fold cross-validation. Here, the original dataset is randomly partitioned into k roughly equal-sized subsamples (or "folds"). One fold is held out as the validation data for testing the model, and the remaining k-1 folds are used as training data. The process is then repeated k times, with each fold used exactly once as the validation data. The k results can then be averaged (or otherwise combined) to produce a single estimate. This approach provides a more reliable estimate of model performance than a single train-test split, especially when data is limited. Another variant, leave-one-out cross-validation (LOOCV), is the extreme case where k equals the number of data points, so each data point is used once as a single-observation validation set.
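The k-fold procedure can be sketched with the standard library alone. To keep the example short, the "model" here is deliberately trivial (it predicts the mean of the training targets) and the score is mean squared error; both are assumptions for illustration, as are the function names.

```python
import random
import statistics


def k_fold_indices(n, k, seed=0):
    """Yield (train_idx, val_idx); each index is in exactly one validation fold."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]  # k roughly equal-sized folds
    for i in range(k):
        val_idx = folds[i]
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, val_idx


def cross_validate_mean_model(y, k=5):
    """Return the average and per-fold MSE of a predict-the-mean baseline."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(y), k):
        prediction = statistics.fmean([y[j] for j in train_idx])
        mse = statistics.fmean([(y[j] - prediction) ** 2 for j in val_idx])
        scores.append(mse)
    return statistics.fmean(scores), scores


y = [float(v) for v in range(20)]
avg_mse, fold_mse = cross_validate_mean_model(y, k=5)
```

Calling k_fold_indices with k equal to n produces folds of size one, which corresponds to leave-one-out cross-validation.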

The Balancing Act: Bias-Variance Tradeoff

When evaluating statistical models, especially predictive models, two key sources of error are bias and variance. Understanding the bias-variance tradeoff is fundamental to building models that generalize well.

Bias refers to the error introduced by approximating a real-life problem, which may be very complicated, by a much simpler model. A model with high bias pays little attention to the training data and oversimplifies the underlying patterns. This often leads to underfitting, where the model performs poorly on both the training data and new, unseen data. For example, trying to fit a straight line (a simple linear model) to data that has a complex, curved relationship would result in high bias.

Variance, on the other hand, refers to the amount by which the model's prediction would change if we trained it on a different training dataset. A model with high variance pays too much attention to the training data, capturing not only the underlying patterns but also the noise and random fluctuations. This often leads to overfitting, where the model performs very well on the training data but poorly on new, unseen data because it has essentially memorized the training set rather than learned generalizable patterns. Complex models, like high-degree polynomials or deep neural networks, are prone to high variance if not regularized properly.

The tradeoff arises because models with low bias tend to have high variance, and vice versa. As you increase model complexity, bias typically decreases, but variance increases. The goal is to find a sweet spot: a model complexity that minimizes the total expected error (the sum of squared bias, variance, and irreducible error). This usually involves finding a balance where the model is complex enough to capture the true underlying relationships but not so complex that it learns the noise in the training data.
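For squared-error loss, the total error mentioned above has a standard decomposition. The notation is an assumption introduced here: the data are taken to follow y = f(x) + ε with noise of mean zero and variance σ², the fitted model is written as f̂, and the expectation is over random training sets and noise.

```latex
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\big[\big(\hat{f}(x) - \mathbb{E}[\hat{f}(x)]\big)^2\big]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible error}}
```

Only the first two terms depend on the choice of model; the noise term sets a floor that no model can go below.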

Interpretability Versus Complexity in Models

In statistical modeling, there's often a tension between a model's interpretability and its complexity (which often correlates with predictive power). Interpretability refers to the degree to which a human can understand the reasons behind a model's predictions or the relationships it has learned. Complexity refers to the intricacy of the model's mathematical form, often involving many parameters or non-linear relationships.

Simple models, like linear regression or decision trees with few branches, are generally highly interpretable. You can easily see which variables are important and how they affect the outcome. For example, in a linear regression model, the coefficients directly tell you the magnitude and direction of each variable's impact. This transparency is highly valued in fields where understanding the "why" is as important as, or even more important than, the "what." For instance, in medical diagnosis or credit scoring, regulators and users need to understand how decisions are made to ensure fairness and identify potential errors.
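To make the point about coefficients concrete, here is a minimal sketch of a one-predictor least-squares fit. The data are invented so that the relationship is exactly linear, and the variable names are assumptions for the example.

```python
import statistics


def fit_simple_ols(x, y):
    """Return (intercept, slope) of the least-squares line y = a + b * x."""
    x_bar = statistics.fmean(x)
    y_bar = statistics.fmean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope


# Hypothetical data: salary (in thousands) rises by 5 per year of experience.
years_experience = [1.0, 2.0, 3.0, 4.0, 5.0]
salary_k = [35.0, 40.0, 45.0, 50.0, 55.0]

intercept, slope = fit_simple_ols(years_experience, salary_k)
# slope is 5.0: each additional year is associated with 5 more units of salary.
# intercept is 30.0: the fitted salary at zero years of experience.
```

The slope can be read directly as "units of outcome per unit of predictor," which is what makes the model easy to explain. Its size depends on the units of measurement, and on its own it describes an association rather than a causal effect.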

More complex models, such as deep neural networks, ensemble methods like random forests, or support vector machines, can often achieve higher predictive accuracy, especially on large and intricate datasets. However, they often operate as "black boxes," making it difficult to understand precisely how they arrive at their predictions. While techniques for explaining black-box models (often grouped under "Explainable AI" or XAI) are advancing, they don't always provide the same level of intuitive clarity as simpler models. The choice between interpretability and complexity often depends on the specific application, the stakes involved, and whether the primary goal is understanding, prediction, or a balance of both.

Effectively Communicating Model Results to Stakeholders

The final, and arguably one of the most important, steps in the statistical modeling process is communicating the results to stakeholders. These stakeholders might range from technical peers to business executives or the general public, each with different levels of statistical understanding. The ability to translate complex statistical findings into clear, concise, and actionable insights is a critical skill for any modeler.

Effective communication begins with understanding your audience. For a technical audience, you might delve into model diagnostics, parameter estimates, and statistical significance. For a non-technical audience, the focus should be on the implications of the findings, the key takeaways, and how the results can inform decisions, avoiding jargon where possible. Visualizations, such as charts and graphs, are powerful tools for conveying patterns and results intuitively. Data visualization can make complex relationships much easier to grasp than tables of numbers or dense text.

It's also crucial to be transparent about the model's limitations and assumptions. No model is perfect, and communicating the degree of uncertainty associated with predictions or conclusions is essential for managing expectations and ensuring responsible use of the model. Clearly articulating what the model can and cannot do, the data it was based on, and any potential biases helps build trust and credibility. Ultimately, the goal is to empower stakeholders to use the model's insights to make better-informed decisions.

Ethical Dimensions of Statistical Modeling

As statistical models increasingly influence decisions that affect people's lives—from loan approvals and job applications to medical diagnoses and criminal justice—the ethical implications of their design and use have become a paramount concern. It is not enough for models to be statistically sound; they must also be fair, transparent, and accountable. This section explores some of the key ethical considerations in statistical modeling, including bias and fairness, privacy and data security, transparency and accountability, and the regulatory landscape.

Confronting Bias and Ensuring Fairness in Models

One of the most significant ethical challenges in statistical modeling is the potential for bias, which can lead to unfair or discriminatory outcomes. Bias can creep into models in several ways. It can originate from the data itself if historical data reflects existing societal biases or an unrepresentative sample. For example, if a hiring model is trained on past hiring data where certain demographic groups were underrepresented or unfairly evaluated, the model may learn and perpetuate these biases, even if the protected attributes (like race or gender) are not explicitly used as predictors.

Algorithmic bias can also arise from the choices made during model design, such as feature selection or the objective function the model is optimized for. The consequences of biased models can be severe, reinforcing systemic inequalities in areas like lending, employment, healthcare, and the justice system.

Addressing bias and ensuring fairness requires a multi-faceted approach. It involves careful examination of training data for potential biases, developing and using fairness metrics to evaluate model outcomes across different groups, and exploring bias mitigation techniques. These techniques can be applied at different stages: pre-processing (adjusting the data), in-processing (modifying the learning algorithm to be fairness-aware), or post-processing (adjusting the model's predictions). The definition of "fairness" itself can be complex and context-dependent, with various mathematical formalizations that may sometimes be in conflict. A continuous effort and critical evaluation are needed to build models that are not only accurate but also equitable.
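One of the simplest fairness metrics compares how often each group receives a positive prediction, a quantity often called the demographic parity difference. The sketch below is illustrative only: the predictions and group labels are made up, and demographic parity is just one of several competing definitions of fairness.

```python
def selection_rate(predictions):
    """Fraction of positive (1) predictions."""
    return sum(predictions) / len(predictions)


def demographic_parity_difference(predictions, groups):
    """Return (gap, rates): the largest gap in selection rate across groups."""
    by_group = {}
    for pred, group in zip(predictions, groups):
        by_group.setdefault(group, []).append(pred)
    rates = {g: selection_rate(p) for g, p in by_group.items()}
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical binary predictions (1 = approved) for two groups.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap, rates = demographic_parity_difference(preds, groups)
# rates == {"a": 0.75, "b": 0.25}, gap == 0.5
```

A gap of zero means both groups are selected at the same rate. Whether that is the right target depends on the context, since other criteria (such as equal error rates) can conflict with it.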

Safeguarding Privacy and Data Security

Statistical modeling often relies on large datasets, which may contain sensitive personal information. Protecting the privacy of individuals whose data is used and ensuring the security of that data are fundamental ethical obligations. Breaches of data privacy can lead to significant harm, including identity theft, financial loss, and reputational damage.

Data anonymization and de-identification techniques aim to remove or obscure personally identifiable information (PII) before data is used for modeling. However, perfect anonymization can be challenging, as re-identification might still be possible by linking anonymized datasets with other publicly available information. Therefore, robust data security measures are also crucial. This includes implementing access controls, encryption, secure storage, and protocols for data handling and disposal to prevent unauthorized access, use, or disclosure.

Emerging techniques like differential privacy offer a more formal mathematical framework for privacy protection. Differential privacy involves adding a carefully calibrated amount of noise to the data or the query results, such that the output of an analysis is not overly sensitive to any single individual's data. This provides a quantifiable guarantee of privacy while still allowing useful statistical insights to be drawn. Adhering to best practices in data privacy and security is essential for maintaining public trust and complying with legal regulations.
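A common way to add calibrated noise is the Laplace mechanism, sketched below for a counting query. The function names, the sample ages, and the choice of epsilon are assumptions for illustration, and this toy version omits details a real deployment would need, such as privacy-budget tracking and protection against floating-point attacks.

```python
import random


def laplace_noise(scale, rng):
    """Draw Laplace(0, scale) noise as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon, rng):
    """Return a noisy count of values satisfying predicate."""
    true_count = sum(1 for v in values if predicate(v))
    # Adding or removing one person changes a count by at most 1
    # (sensitivity 1), so the noise scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)


def is_senior(age):
    return age >= 65


ages = [23, 35, 67, 71, 45, 80, 52]  # hypothetical data; true count is 3
noisy = private_count(ages, is_senior, epsilon=1.0, rng=random.Random(42))
```

Smaller values of epsilon give stronger privacy but noisier answers; larger values do the opposite.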

The Imperatives of Transparency and Accountability

As statistical models become more complex and influential, the need for transparency in how they work and accountability for their outcomes grows stronger. Transparency, often linked with interpretability or explainability, refers to the degree to which the internal workings of a model and the basis for its decisions can be understood. For "black box" models like complex neural networks, achieving transparency can be a significant challenge, as their decision-making processes are not easily decipherable by humans.

Lack of transparency can erode trust and make it difficult to identify or rectify errors or biases in a model. If individuals are adversely affected by a model's decision (e.g., denied a loan), they have a right to understand the reasons. Efforts in Explainable AI (XAI) aim to develop methods that can provide insights into the predictions of complex models, making them more understandable.

Accountability means establishing clear lines of responsibility for the development, deployment, and impact of statistical models. Who is responsible if a model produces biased outcomes or causes harm? This involves having governance structures in place, conducting thorough testing and validation, and continuously monitoring models post-deployment. Ensuring that there are mechanisms for redress when models make mistakes or produce unfair results is a key component of accountability. Both transparency and accountability are crucial for fostering responsible innovation and ensuring that statistical models serve societal good.

Navigating Regulatory Landscapes

The increasing use of data and statistical models has led to the development of various legal and regulatory frameworks designed to protect individuals' rights and ensure ethical data practices. Organizations that develop or deploy statistical models must be aware of and comply with these regulations.

Prominent examples include the General Data Protection Regulation (GDPR) in the European Union, which sets strict rules for the collection, processing, and storage of personal data and grants individuals rights such as the right to access their data, along with safeguards around solely automated decision-making that are often described as a "right to explanation." In the United States, industry-specific regulations like the Health Insurance Portability and Accountability Act (HIPAA) govern the use and disclosure of protected health information. Other regulations may pertain to fair lending, equal employment opportunity, and consumer protection, all of which can have implications for how statistical models are built and used in those domains.

Compliance involves not only adhering to the letter of the law but also embracing its spirit by fostering a culture of ethical data handling and responsible AI. This may include conducting data protection impact assessments, implementing privacy-by-design principles, and ensuring that models are regularly audited for fairness and accuracy. Navigating this evolving regulatory landscape requires ongoing attention and often legal expertise, but it is an essential part of using statistical modeling responsibly in the modern world.

Career Pathways in Statistical Modeling

A strong foundation in statistical modeling opens doors to a wide array of exciting and in-demand career opportunities across diverse sectors. As organizations increasingly rely on data to drive decisions, professionals skilled in building, interpreting, and applying statistical models are highly valued. This section explores the different types of roles available, the essential technical skills required, educational routes to enter the field, and some emerging specializations for those looking to carve out a niche.

If you're considering a career in this area, OpenCourser offers a vast library of Data Science courses and Mathematics courses that can help you build the necessary skills. You can save courses to a list to curate your own learning path and compare syllabi to find the best fit for your goals.

Bridging Academia and Industry: Diverse Roles

Careers in statistical modeling span both academic and industry settings, each offering unique challenges and rewards. In academia, statisticians and data scientists often engage in cutting-edge research, developing new statistical methodologies, or applying existing techniques to solve complex problems in various scientific disciplines. They also play a crucial role in teaching the next generation of modelers and researchers.

In industry and government, the roles are incredibly varied. A Data Scientist typically works with large datasets, applying statistical modeling and machine learning techniques to extract insights, build predictive models, and solve business problems. A Statistician might focus more on experimental design, survey methodology, and rigorous inferential analysis, often in specialized fields like pharmaceuticals, finance, or government agencies like the Census Bureau or the Bureau of Labor Statistics. Data Analysts often focus on descriptive and diagnostic analytics, interpreting data and creating reports to help businesses understand trends and performance. Other related roles include Quantitative Analyst ("Quant") in finance, Actuary in insurance, Operations Research Analyst, and Market Researcher, all of whom utilize statistical modeling principles extensively in their work.

The day-to-day activities can differ significantly. An academic researcher might spend more time on theoretical development and writing papers, while an industry data scientist might focus on building and deploying models that directly impact business outcomes, often working in multidisciplinary teams. Regardless of the setting, a strong analytical mindset and problem-solving skills are key.

Essential Technical Skills for Aspiring Modelers

To succeed in the field of statistical modeling, a combination of theoretical knowledge and practical technical skills is essential. Proficiency in programming languages commonly used for data analysis is a primary requirement. Python, with its extensive libraries like Pandas, NumPy, Scikit-learn, and TensorFlow, has become a dominant language in data science and machine learning. R is another powerful language specifically designed for statistical computing and graphics, widely used by statisticians and researchers, and also boasting a vast ecosystem of packages.

Knowledge of SQL (Structured Query Language) is crucial for data retrieval and manipulation, as most data resides in relational databases. Familiarity with statistical software packages such as SAS or SPSS can also be beneficial, particularly in certain industries or research environments, though Python and R are increasingly prevalent.

Beyond specific tools, a strong understanding of statistical theory, probability, linear algebra, and calculus forms the mathematical foundation. Skills in data visualization, data wrangling (cleaning and transforming data), model evaluation, and effective communication of results are also highly important. For those leaning more towards machine learning applications, familiarity with cloud computing platforms (like AWS, Azure, or GCP) and big data technologies (like Spark) can be advantageous.

Educational Routes: Certifications and Advanced Degrees

There are multiple educational pathways to a career in statistical modeling. A bachelor's degree in statistics, mathematics, computer science, economics, or a related quantitative field often serves as a strong starting point. For more advanced roles, particularly in research or specialized areas of data science, a Master's degree or a Ph.D. is frequently preferred or required. These advanced degrees typically provide deeper theoretical understanding and more extensive research experience.

In today's rapidly evolving landscape, online courses and certifications have become invaluable resources for acquiring specific skills and demonstrating proficiency to employers. Platforms like OpenCourser aggregate thousands of courses from various providers, allowing learners to find programs that fit their specific needs, whether it's learning a new programming language like Python or R, mastering specific modeling techniques like time series analysis, or gaining an understanding of machine learning algorithms. Completing relevant online courses and earning certificates can be particularly beneficial for those transitioning from other fields or looking to upskill in specific areas. Many universities also offer online Master's degrees in data science or statistics.

Regardless of the formal educational path, continuous learning is key in this dynamic field. New techniques, tools, and ethical considerations are constantly emerging, so a commitment to ongoing professional development is essential for long-term success. Building a portfolio of projects, participating in data science competitions, and contributing to open-source projects can also be excellent ways to gain practical experience and showcase your abilities.

These comprehensive courses offer a solid grounding in data science principles, inference, and modeling, often forming part of broader specializations or degree programs.

[course] Data Science: Inference and Modeling
[course] Data Analysis: Statistical Modeling and Computation in Applications

Exploring Emerging Specializations

The field of statistical modeling is continually evolving, leading to the emergence of various exciting specializations. As organizations seek deeper insights and more sophisticated solutions, professionals with specialized expertise are in high demand.

Artificial Intelligence (AI) and Machine Learning (ML) Engineering represents a significant area of specialization. While data scientists often focus on developing models, ML engineers are typically responsible for deploying, scaling, monitoring, and maintaining these models in production environments. This requires a strong blend of software engineering skills and an understanding of ML operations (MLOps).

Biostatistics is a well-established specialization that applies statistical methods to biological and health-related data. Biostatisticians play a critical role in designing clinical trials, analyzing genetic data, conducting epidemiological studies, and improving public health outcomes. Similarly, Epidemiologists use statistical models to study the patterns, causes, and effects of health and disease conditions in defined populations. The COVID-19 pandemic highlighted the crucial role of these professionals.

Econometrics applies statistical methods to economic data to give empirical content to economic relationships. Econometricians build models to forecast economic trends, analyze policy impacts, and understand consumer behavior. Other emerging areas include causal inference, which focuses on determining cause-and-effect relationships from observational data, and specializations in areas like sports analytics, environmental statistics, or financial fraud detection. Pursuing a specialization can lead to deeper expertise and opportunities in niche domains.

Challenges and the Future of Statistical Modeling

The field of statistical modeling, while powerful and transformative, is not without its challenges. As we look to the future, several key trends and obstacles are shaping its trajectory. These include the demands of handling increasingly large and complex datasets, the ongoing integration with artificial intelligence, the critical need to ensure research reproducibility, and the application of modeling to pressing global issues. Navigating these challenges effectively will be crucial for the continued advancement and responsible application of statistical modeling.

Tackling Big Data and Computational Hurdles

The explosion of data in recent years, often termed "Big Data," presents both immense opportunities and significant challenges for statistical modeling. Datasets are not only larger in volume but also more varied in type (e.g., text, images, streaming data) and arrive at higher velocities. Traditional statistical methods and software were often designed for smaller, more structured datasets and can struggle with the scale and complexity of modern data.

Computational hurdles are a major concern. Processing and analyzing terabytes or even petabytes of data require substantial computing power and efficient algorithms. This has spurred the development of new statistical techniques and computational frameworks designed for distributed computing, where analyses are spread across multiple machines. Technologies like Apache Spark and cloud computing platforms (AWS, Azure, Google Cloud) provide the infrastructure and tools to manage and analyze Big Data, but they also require modelers to acquire new skills.

Furthermore, high-dimensional data (datasets with a very large number of variables or features relative to the number of observations) can lead to statistical challenges like the "curse of dimensionality," making it harder to find meaningful patterns and increasing the risk of overfitting. Techniques for dimensionality reduction and feature selection, as well as regularization methods, become even more critical in this context. The future will likely see continued innovation in scalable algorithms and distributed systems to effectively harness the power of Big Data.
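One symptom of the curse of dimensionality is that distances between random points become nearly indistinguishable as the number of features grows, which weakens distance-based methods. The sketch below illustrates this with uniformly random points; the function name, sample size, and dimensions are assumptions for the example.

```python
import math
import random


def relative_distance_spread(dim, n_points=200, seed=0):
    """Return (max - min) / min of distances from a random query point."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    dists = [
        math.dist(query, [rng.random() for _ in range(dim)])
        for _ in range(n_points)
    ]
    return (max(dists) - min(dists)) / min(dists)


spread_low = relative_distance_spread(2)      # large: near and far points differ a lot
spread_high = relative_distance_spread(1000)  # small: all points are about equally far
```

When the spread shrinks like this, the "nearest" neighbor is barely nearer than the farthest one, which is one reason dimensionality reduction and feature selection matter for high-dimensional data.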

Synergies with Artificial Intelligence and Automation

The relationship between statistical modeling and Artificial Intelligence (AI) is becoming increasingly intertwined, with each field influencing and benefiting the other. Many AI systems, particularly those in machine learning, are fundamentally based on statistical models that learn from data to make predictions or decisions. As AI continues to advance, the demand for robust statistical underpinnings for these systems will grow.

One significant trend is the rise of Automated Machine Learning (AutoML). AutoML tools aim to automate some of the more time-consuming aspects of the modeling pipeline, such as data preprocessing, feature selection, model selection, and hyperparameter tuning. While this can increase efficiency and make modeling more accessible to non-experts, it also raises concerns about the potential for misuse if the underlying statistical assumptions and limitations are not well understood. The role of the statistical modeler is evolving from solely building models to also understanding, validating, and interpreting the outputs of these automated systems, ensuring they are used responsibly and effectively.

The synergy also flows the other way, with AI techniques being used to enhance statistical modeling itself. For instance, AI can help in discovering complex patterns in data that might be missed by traditional methods, or in developing more sophisticated simulation environments. The future will likely see a deeper integration, where statistical rigor informs AI development and AI tools augment the capabilities of statistical modelers.

Addressing the Reproducibility Crisis in Research

A significant challenge facing many scientific disciplines, including those that rely heavily on statistical modeling, is the "reproducibility crisis." This refers to the concerning observation that many published research findings are difficult or impossible to replicate when other researchers attempt to repeat the studies using the same methods and data. This crisis undermines the credibility of scientific findings and can slow down scientific progress.

Several factors contribute to this problem, including publication bias (a tendency for journals to publish positive or novel results more readily than negative or null findings), questionable research practices (like p-hacking or HARKing – Hypothesizing After the Results are Known), inadequate statistical methods or their misapplication, and insufficient detail in reporting methods and data.
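A short calculation shows why practices like p-hacking are a problem. If a researcher runs many tests on data with no real effects, the chance that at least one comes out "significant" grows quickly. The sketch below assumes the tests are independent, which is a simplification, and the function name is hypothetical.

```python
def familywise_error_rate(alpha, n_tests):
    """Probability of at least one false positive among independent null tests."""
    return 1.0 - (1.0 - alpha) ** n_tests


one_test = familywise_error_rate(0.05, 1)      # about 0.05
twenty_tests = familywise_error_rate(0.05, 20)  # about 0.64
```

With twenty independent tests at the 5% level, there is roughly a 64% chance of at least one spurious "finding," even when nothing real is going on.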

Addressing the reproducibility crisis requires a concerted effort from researchers, journals, funding agencies, and institutions. For statistical modeling, this includes promoting transparency in methods and data, such as sharing code and datasets used for analysis. Encouraging pre-registration of studies (where hypotheses and analysis plans are registered before data collection) can help reduce bias. There's also a push for more rigorous statistical training, emphasizing the proper interpretation of results (e.g., p-values and confidence intervals) and the importance of replication studies. Tools and platforms that facilitate reproducible research workflows are also becoming more common. Ensuring that statistical modeling practices contribute to reliable and verifiable knowledge is crucial for maintaining scientific integrity.

Modeling for Global Impact: Climate and Beyond

Statistical modeling plays an increasingly vital role in addressing complex global challenges that affect societies worldwide. One of the most prominent examples is climate modeling. Scientists use sophisticated statistical models to understand past climate changes, project future climate scenarios under different greenhouse gas emission pathways, and assess the potential impacts on ecosystems and human societies. These models integrate vast amounts of data from satellites, weather stations, and ocean buoys, and they are essential for informing policy decisions related to climate change mitigation and adaptation.

Beyond climate change, statistical modeling is crucial in epidemiology for tracking disease outbreaks, predicting their spread, and evaluating the effectiveness of public health interventions, as was starkly demonstrated during the COVID-19 pandemic. In resource management, models help predict the availability of natural resources like water or fisheries and inform sustainable management practices. In humanitarian aid, statistical models can be used to forecast needs in disaster situations and optimize the allocation of relief supplies.

The application of statistical modeling to these global issues requires not only technical expertise but also a deep understanding of the context, ethical considerations, and the ability to communicate complex findings to diverse stakeholders, including policymakers and the public. The future will see an even greater need for skilled statistical modelers who can contribute to solving these pressing global problems and improving human welfare.

Frequently Asked Questions (FAQ)

This section addresses some common questions that individuals exploring statistical modeling, especially those considering it as a career path or new area of study, might have. The goal is to provide concise and helpful answers to guide your decision-making process.

What Industries Actively Hire Statistical Modelers?

Statistical modelers are in demand across a vast spectrum of industries because so many organizations rely on data to make informed decisions. The finance and insurance sectors are major employers, using modelers for risk assessment, fraud detection, algorithmic trading, and actuarial work. The healthcare and pharmaceutical industries hire statisticians and data scientists for clinical trial analysis, epidemiological studies, drug discovery, and personalized medicine.

Technology companies, from established giants to innovative startups, are constantly seeking talent to develop and improve search algorithms, recommendation systems, advertising targeting, and new AI-driven products. Marketing and retail firms employ modelers for customer segmentation, market research, sales forecasting, and optimizing marketing campaigns. Government agencies (e.g., Census Bureau, Bureau of Labor Statistics, environmental agencies, defense) rely on statistical modeling for policy analysis, economic forecasting, public health surveillance, and resource allocation.

Other significant sectors include consulting (providing statistical expertise to various clients), manufacturing (for quality control and process optimization), energy (for forecasting demand and optimizing production), and research and academia. Essentially, any field that collects and utilizes data to understand patterns, make predictions, or optimize processes is likely to have opportunities for skilled statistical modelers. The versatility of these skills gives statistical modeling broad applicability as a career path.

Is Strong Programming Knowledge Mandatory?

In contemporary statistical modeling and data science, strong programming knowledge is increasingly expected and is, at the very least, a significant advantage. While some statistical analyses can be performed with graphical user interface (GUI) software such as SPSS or JMP, the trend is heavily toward programming languages, which offer greater flexibility, reproducibility, power, and access to cutting-edge techniques.

The most common programming languages in this field are Python and R. Python is lauded for its versatility, extensive libraries for machine learning and data manipulation (e.g., pandas, scikit-learn), and integration into larger software systems. R is a language specifically designed for statistical computing and graphics, with a vast repository of packages covering almost any statistical technique imaginable. Proficiency in at least one of these is a baseline expectation for many roles.

Beyond Python and R, knowledge of SQL is generally essential for extracting and managing data from databases. Depending on the specialization, familiarity with other languages or tools (like Scala for Spark, or C++ for high-performance computing) might also be beneficial. While some entry-level analyst roles might rely more on Excel or GUI software, a career path aiming for advanced statistical modeling or data science will almost certainly require solid programming skills. Those new to the field should prioritize learning Python or R alongside statistical concepts.

How Does Statistical Modeling Differ from Data Science?

Statistical modeling and data science are closely related fields, often with significant overlap, but they are not entirely synonymous. Think of statistical modeling as a core, foundational component *within* the broader discipline of data science.

Statistical modeling primarily focuses on developing and applying mathematical models to understand relationships between variables, make inferences from data, and test hypotheses, often with an emphasis on quantifying uncertainty and validating assumptions. Statisticians typically have deep knowledge of probability theory, experimental design, and various inferential techniques.
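
As a rough illustration of what "quantifying uncertainty" can look like in practice, the Python sketch below fits a straight line to simulated data and uses a percentile bootstrap to put an interval around the estimated slope. The simulated data, the true slope of 2.0, and the helper names fit_line and bootstrap_slope_interval are all assumptions made for this example, and the bootstrap is only one of several ways to express uncertainty about an estimate.

```python
import random
import statistics


def fit_line(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    mean_x = statistics.fmean(xs)
    mean_y = statistics.fmean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def bootstrap_slope_interval(xs, ys, n_resamples=2000, level=0.95, seed=0):
    """Percentile bootstrap interval for the slope (hypothetical helper)."""
    rng = random.Random(seed)
    n = len(xs)
    slopes = []
    for _ in range(n_resamples):
        # Resample (x, y) pairs with replacement and refit the line.
        idx = [rng.randrange(n) for _ in range(n)]
        bx = [xs[i] for i in idx]
        by = [ys[i] for i in idx]
        if len(set(bx)) < 2:
            continue  # a slope is undefined when every x is identical
        slopes.append(fit_line(bx, by)[1])
    slopes.sort()
    m = len(slopes)
    lower = slopes[int((1 - level) / 2 * m)]
    upper = slopes[min(m - 1, int((1 + level) / 2 * m))]
    return lower, upper


# Simulated data (an assumption for illustration): y = 1 + 2x + Gaussian noise.
rng = random.Random(42)
xs = [0.2 * i for i in range(50)]
ys = [1.0 + 2.0 * x + rng.gauss(0.0, 1.0) for x in xs]

intercept_hat, slope_hat = fit_line(xs, ys)
lower, upper = bootstrap_slope_interval(xs, ys)
print(f"estimated slope: {slope_hat:.2f}")
print(f"95% bootstrap interval: ({lower:.2f}, {upper:.2f})")
```

The point estimate alone says little about how much to trust it. Reporting the interval alongside it is the kind of habit that distinguishes statistical modeling from simply fitting a curve.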

Data science is a more multidisciplinary field that encompasses a wider range of skills and processes. While it relies heavily on statistical modeling, it also draws on computer science (e.g., programming, software engineering, database management, algorithm development), machine learning (often with a stronger focus on predictive accuracy, sometimes using "black-box" models), and data engineering (e.g., building data pipelines, working with big data technologies). It further calls for domain expertise, data visualization, and the communication skills to translate findings into actionable business insights. Data scientists often work with larger, messier, and more diverse datasets (including unstructured data like text or images) than traditional statisticians typically do.

In essence, a data scientist is often expected to have strong statistical modeling skills, but also proficiency in programming, machine learning, and handling the entire data lifecycle. A statistician might specialize more deeply in the theoretical and applied aspects of statistical methods. However, the lines are increasingly blurred, and many professionals incorporate elements of both.

What Are the General Salary Expectations?

Salary expectations for roles involving statistical modeling can vary significantly based on factors such as geographic location, years of experience, level of education, specific industry, and the complexity of the role. However, generally, careers in this field are well-compensated due to the high demand for these skills.

According to the U.S. Bureau of Labor Statistics (BLS), the median annual wage for statisticians was $103,300 in May 2024. Data from May 2023 cited by Coursera indicates a median annual wage of $116,440 for statisticians. Experience plays a significant role: Glassdoor data cited by Coursera suggests entry-level (0-1 years) statisticians might earn around $95,336, while those with 15+ years of experience could earn $177,758 or more. ZipRecruiter reports average annual pay of $83,657 for a "Statistics" role in the United States as of April 2025, with a range typically between $57,000 and $116,000. For data scientists, salaries are often even higher, reflecting the broader skillset typically required. The BLS reported a median annual wage of $100,910 for data scientists in its 2021 survey, while other sources such as DataLemur suggest an average of $122,738 for data scientists as of 2024.

Industries also influence pay. For example, statisticians in pharmaceutical and medicine manufacturing tend to earn more than those in academic institutions. Roles in high-cost-of-living areas or major tech hubs often command higher salaries. The job outlook for mathematicians and statisticians is projected to grow 11% from 2023 to 2033, much faster than the average for all occupations, indicating continued strong demand. This positive outlook helps support competitive salary levels. For the most up-to-date and specific salary information, it's always recommended to consult resources like the BLS Occupational Outlook Handbook and reputable job/salary websites for your specific region and target role.

How is Artificial Intelligence Impacting Job Prospects for Statistical Modelers?

Artificial Intelligence (AI), particularly its subfield of machine learning, is having a profound impact on the field of statistical modeling and the job prospects for those within it. Rather than making statistical modelers obsolete, AI is largely transforming roles and creating new opportunities, while also demanding new skill sets.

AI and ML tools can automate some of the more routine aspects of statistical modeling, such as basic data cleaning, feature selection, or even running multiple models to find a good starting point (AutoML). This can free up modelers to focus on more complex problems, deeper interpretation, ethical considerations, and the crucial "last mile" of translating model outputs into actionable insights. The demand is shifting towards professionals who can not only build models but also understand the statistical underpinnings of AI algorithms, critically evaluate their outputs, and ensure they are used responsibly and ethically.

Furthermore, AI is generating vast amounts of new data and creating entirely new types of problems that require statistical expertise. For example, analyzing the performance of AI systems, developing AI for scientific discovery, or ensuring fairness and transparency in AI decision-making all require strong statistical skills. Job prospects are likely to be strongest for those who can bridge the gap between traditional statistical rigor and modern AI techniques, effectively leveraging AI tools while maintaining a critical, analytical perspective. Continuous learning and adaptation will be key, as the tools and techniques in this space are evolving rapidly.

What are the Best Resources for Self-Learning Statistical Modeling?

Self-learning statistical modeling is definitely achievable with dedication and the right resources. A multi-faceted approach often works best, combining theoretical learning with practical application.

Online Courses: Platforms like OpenCourser are invaluable for finding a wide range of courses. You can search for introductory statistics courses, specialized topics like regression or time series analysis, or comprehensive data science programs. Look for courses that include hands-on exercises using software like R or Python. OpenCourser's features, such as the ability to save courses to lists and compare syllabi, can help you structure your learning path. Many universities also offer Massive Open Online Courses (MOOCs) through various platforms, often taught by leading academics.

Textbooks: Several classic textbooks provide comprehensive coverage of statistical modeling. "An Introduction to Statistical Learning" (often with R or Python labs) is a highly recommended starting point, known for its accessible explanations and practical examples. For a more advanced and theoretical treatment, "The Elements of Statistical Learning" is a seminal work. Books focusing on specific areas, like Bayesian statistics or time series, can be added as your knowledge grows.

Projects and Practice: Theory alone isn't enough. Applying what you learn to real-world or simulated datasets is crucial. Websites like Kaggle host data science competitions and provide datasets that you can use to practice your modeling skills. You can also find public datasets from government agencies or research institutions. Start with simple projects and gradually tackle more complex ones. Document your projects, perhaps on GitHub, to build a portfolio.

Community and Documentation: Engage with online communities like Stack Overflow or Cross Validated for Q&A. The official documentation for programming languages (Python, R) and their statistical libraries (scikit-learn, statsmodels) is also an excellent resource. Reading blogs and articles from practitioners can provide practical insights and keep you updated on new trends. For guidance on making the most of online learning, OpenCourser's Learner's Guide offers articles on topics like creating a curriculum and staying disciplined.

Useful Links and Further Resources

To further your exploration of statistical modeling, several organizations and resources can provide valuable information, networking opportunities, and ongoing education.

One of the foremost organizations is the American Statistical Association (ASA). Founded in 1839, the ASA is the world's largest community of statisticians and serves professionals in industry, government, and academia. They publish numerous journals, host conferences, and provide resources for career development and statistical education. Their mission includes supporting excellence in statistical practice and promoting the proper application of statistics.

For government statistics and labor market information on statisticians and related occupations, the U.S. Bureau of Labor Statistics (BLS) Occupational Outlook Handbook is an excellent resource. It provides detailed information on job duties, education requirements, pay, and job outlook for various occupations, including mathematicians and statisticians.

Many universities with strong statistics or data science departments also share valuable resources, research papers, and course materials online. Exploring the websites of institutions known for their contributions to statistical theory and application can be very insightful. Additionally, platforms like OpenCourser's browse page can help you discover a wide range of topics and courses related to statistical modeling and its various specializations, allowing you to tailor your learning journey.

Statistical modeling is a dynamic and intellectually rewarding field that offers diverse opportunities to make a significant impact. It demands a blend of analytical rigor, technical skill, and creative problem-solving. While the path to mastering statistical modeling requires dedication and continuous learning, the ability to transform data into understanding and foresight is an invaluable asset in our increasingly data-driven world. Whether you are just starting to explore this domain or are looking to deepen your existing knowledge, the journey into statistical modeling is one of ongoing discovery and growth.