Data Wrangling

Navigating the World of Data Wrangling

Data wrangling, also known as data munging or data remediation, is the comprehensive process of taking raw data and transforming it into a more usable, structured, and reliable format. This crucial step occurs before any in-depth analysis can take place, ensuring that the insights derived are built upon a solid foundation of high-quality data. Think of it as preparing your ingredients before cooking a complex meal; without properly cleaned and organized components, the final dish (your analysis) is unlikely to be successful.

The world of data wrangling can be quite engaging for individuals who enjoy problem-solving and have a keen eye for detail. There's a certain satisfaction in taking a chaotic, messy dataset and systematically bringing order to it. Furthermore, the ability to unlock the stories hidden within data, making it ready for powerful analytical techniques and machine learning models, is a significant motivator for many in this field. The impact of this work is tangible, as well-wrangled data directly contributes to more accurate predictions, informed business strategies, and ultimately, better decision-making across various industries.

Introduction to Data Wrangling

This section will lay the groundwork for understanding the fundamental concepts of data wrangling and its significance in today's data-driven landscape.

Defining Data Wrangling and Its Scope

At its core, data wrangling encompasses a variety of processes designed to convert raw data inputs into more readily usable outputs. This involves several key activities such as identifying and handling errors, structuring data for consistency, and enriching it to enhance its analytical value. The scope of data wrangling is broad, touching upon any scenario where raw data needs to be prepared before it can be effectively utilized for analysis, reporting, or feeding into machine learning algorithms. It's the critical first step in turning vast amounts of often messy information into a valuable asset.

It's important to distinguish data wrangling from data cleaning, though the terms are sometimes used interchangeably. Data cleaning is a vital part of data wrangling, specifically focused on identifying and correcting inaccuracies, inconsistencies, and errors within a dataset. Data wrangling, however, is the overarching methodology that includes cleaning, but also extends to structuring, transforming, and enriching data to meet the specific needs of a project.

The ultimate aim of data wrangling is to ensure that the data you are working with is accurate, complete, and in the correct format for whatever analytical task comes next. This meticulous preparation is what allows for meaningful insights to be drawn and for data-driven decisions to be made with confidence.

The Role of Data Wrangling in Data Science and Analytics Workflows

Data wrangling is an indispensable component of both data science and analytics workflows. Analysts and data scientists often report that a large share of their time, sometimes estimated at anywhere from 45% to 80%, is spent preparing and transforming data before any actual analysis can begin. This highlights the critical nature of wrangling: without it, the subsequent steps of data exploration, model building, and insight generation would be severely hampered, if not impossible.

In a typical data science pipeline, data wrangling acts as the bridge between raw, often chaotic data sources and the sophisticated analytical techniques used to extract knowledge. It ensures that the data fed into machine learning models is clean, consistent, and relevant, which is paramount for the accuracy and reliability of these models. Similarly, in business analytics, well-wrangled data underpins the creation of accurate reports, dashboards, and visualizations that inform strategic decisions.

The quality of your analytical outcomes is directly tied to the quality of your input data. If data is incomplete, contains errors, or is poorly structured, any analysis performed on it will likely be flawed, leading to misguided conclusions and potentially costly mistakes. Therefore, data wrangling serves as a foundational pillar, ensuring the integrity and utility of data throughout the entire analytical lifecycle.

Key Objectives and Outcomes of Data Wrangling

The primary objective of data wrangling is to transform raw data into a high-quality, usable dataset that is fit for its intended analytical purpose. This involves several interconnected goals. One key objective is to improve data quality by identifying and rectifying errors, inconsistencies, missing values, and outliers. This ensures that the data is accurate and reliable.

Another crucial objective is to structure and organize data. Raw data often comes from disparate sources and in various formats. Data wrangling aims to standardize formats, reshape datasets, and integrate different data sources to create a cohesive and analyzable whole. This makes the data easier to work with and understand. Furthermore, data wrangling seeks to enrich the data by adding relevant information or deriving new features that can enhance the subsequent analysis.

The ultimate outcomes of effective data wrangling are numerous. Firstly, it leads to more accurate and reliable analytical results, enabling better-informed decision-making. Secondly, it increases the efficiency of the overall data analysis process by reducing the time analysts and data scientists spend on data preparation. Finally, well-wrangled data facilitates the use of advanced analytical techniques and machine learning models, unlocking deeper insights and predictive capabilities.

Common Industries Relying on Data Wrangling

Data wrangling is not confined to a single industry; its applications are widespread wherever data is collected and analyzed to drive decisions and innovation. The finance industry, for example, heavily relies on data wrangling to process vast amounts of transactional data, market data, and customer information for risk assessment, fraud detection, and regulatory compliance.

Healthcare is another sector where data wrangling is critical. Patient records, clinical trial data, and public health information need meticulous preparation to ensure accuracy for medical research, treatment efficacy analysis, and healthcare management. Similarly, the retail and e-commerce industries use data wrangling to clean and structure customer purchase histories, website interactions, and inventory data to personalize marketing, optimize supply chains, and improve customer experience.

Manufacturing companies leverage data wrangling to make sense of sensor data from production lines for quality control and predictive maintenance. Marketing departments across all industries wrangle data from various campaigns and customer touchpoints to measure effectiveness and understand consumer behavior. Even in fields like unscripted television production, data wranglers are essential for managing and backing up digital footage from location shoots. Essentially, any organization that aims to harness the power of its data will find data wrangling to be an indispensable part of its operations.

Core Techniques in Data Wrangling

This section delves into the practical methods and approaches that form the backbone of the data wrangling process. Understanding these techniques is crucial for anyone looking to effectively manipulate and prepare data for analysis.

Data Cleaning and Preprocessing Methods

Data cleaning and preprocessing are foundational to data wrangling, focusing on identifying and rectifying errors, inconsistencies, and inaccuracies within a dataset. This step is crucial because the quality of your analysis is directly dependent on the quality of your data. Common tasks in data cleaning include handling missing values, which might involve removing records with missing data, or imputing (filling in) missing values based on statistical methods or other information in the dataset.

Another critical aspect is dealing with erroneous data. This can include correcting typos, standardizing formats (e.g., ensuring all dates are in a consistent YYYY-MM-DD format), and removing duplicate entries that could skew analysis. Outlier detection and treatment are also important; outliers are data points that are significantly different from other observations and can distort statistical analyses. Depending on the context, outliers might be removed, corrected if they are errors, or investigated further to understand their cause.

Preprocessing also involves tasks like data type conversion (e.g., ensuring numerical data is stored as numbers and not text) and addressing inconsistencies in data representation (e.g., "New York", "NY", and "N.Y." all referring to the same location). The overall goal is to produce a dataset that is as accurate, consistent, and complete as possible, forming a reliable basis for subsequent transformation and analysis.
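
The cleaning tasks described above (removing duplicates, standardizing dates to YYYY-MM-DD, converting text to numbers, and unifying spellings such as "NY" and "N.Y.") can be sketched in a few lines. This is a minimal illustration using only Python's standard library; the records, the `CITY_ALIASES` table, and the list of date layouts are invented for the example, and a library such as Pandas offers equivalent operations.

```python
from datetime import datetime

# Hypothetical raw records: every value arrives as text, as it might from a CSV export.
raw_rows = [
    {"id": "1", "city": "New York", "signup": "2023-01-15", "spend": "120.50"},
    {"id": "2", "city": "NY", "signup": "01/20/2023", "spend": "80"},
    {"id": "2", "city": "NY", "signup": "01/20/2023", "spend": "80"},  # exact duplicate
    {"id": "3", "city": "N.Y.", "signup": "15 Feb 2023", "spend": "n/a"},
]

# Assumed lookup table mapping known spelling variants to one canonical label.
CITY_ALIASES = {"ny": "New York", "n.y.": "New York", "new york": "New York"}

# Assumed list of date layouts present in the data; extend it for real sources.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d %b %Y")


def parse_date(text):
    """Return the date as YYYY-MM-DD, or None if no known layout matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    return None


def parse_float(text):
    """Convert text to float, or None when the text is not numeric."""
    try:
        return float(text)
    except ValueError:
        return None


def clean(rows):
    """Drop exact duplicates, then standardize types and representations."""
    seen = set()
    cleaned = []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key in seen:  # an identical record was already kept
            continue
        seen.add(key)
        cleaned.append({
            "id": int(row["id"]),
            "city": CITY_ALIASES.get(row["city"].strip().lower(), row["city"]),
            "signup": parse_date(row["signup"]),
            "spend": parse_float(row["spend"]),
        })
    return cleaned


clean_rows = clean(raw_rows)
```

Values that cannot be parsed become `None` rather than raising an error, so they can be handled explicitly as missing data in a later step.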

Data Transformation and Normalization

Data transformation is the process of changing the structure, format, or values of data to make it suitable for analysis. This can involve a wide range of operations. For instance, you might need to aggregate data, such as calculating monthly sales totals from daily transaction records. Another common transformation is pivoting data, which involves converting rows into columns or vice versa to achieve a more analyzable structure.
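
As a rough sketch of the two operations just mentioned, the snippet below aggregates daily transactions into monthly totals and then pivots the result so that each region becomes a column. The transaction data and the variable names are made up for illustration.

```python
from collections import defaultdict

# Hypothetical daily transactions: (ISO date, region, amount).
daily_sales = [
    ("2024-01-05", "north", 100.0),
    ("2024-01-20", "north", 50.0),
    ("2024-01-11", "south", 70.0),
    ("2024-02-02", "north", 30.0),
    ("2024-02-14", "south", 40.0),
]

# Aggregate: sum amounts per (month, region). The month is the "YYYY-MM" prefix.
monthly = defaultdict(float)
for day, region, amount in daily_sales:
    monthly[(day[:7], region)] += amount

# Pivot: one row per month, one column per region (long format to wide format).
regions = sorted({region for _, region in monthly})
pivot = {}
for (month, region), total in monthly.items():
    pivot.setdefault(month, dict.fromkeys(regions, 0.0))[region] = total
```

After this runs, `pivot["2024-01"]` holds one total per region for January, which is the wide layout many reporting tools expect.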

Normalization, in the context of data wrangling, often refers to scaling numerical data to a standard range, such as 0 to 1 or -1 to 1. This is particularly important for certain machine learning algorithms that are sensitive to the scale of input features. For example, if one feature ranges from 0 to 1000 and another from 0 to 1, the algorithm might incorrectly give more weight to the feature with the larger range. Normalization helps to prevent this by putting all features on a similar scale.
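
One common way to do this scaling is min-max normalization, which maps the smallest value to the lower bound and the largest to the upper bound. The function below is a minimal sketch of that idea; the feature values are invented, and the handling of a constant column is one possible convention rather than a standard.

```python
def min_max_scale(values, low=0.0, high=1.0):
    """Linearly rescale values so the minimum maps to low and the maximum to high."""
    v_min, v_max = min(values), max(values)
    if v_max == v_min:
        # A constant feature has no spread; map everything to the lower bound.
        return [low for _ in values]
    span = v_max - v_min
    return [low + (v - v_min) * (high - low) / span for v in values]


income = [0, 250, 500, 1000]  # hypothetical feature ranging from 0 to 1000

income_scaled = min_max_scale(income)             # [0.0, 0.25, 0.5, 1.0]
income_signed = min_max_scale(income, -1.0, 1.0)  # [-1.0, -0.5, 0.0, 1.0]
```

Because the minimum and maximum are taken from the data, a single extreme value can compress all other values into a narrow band, so it is worth checking for outliers before scaling.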

Other transformation techniques include creating new features from existing ones (feature engineering), such as calculating an age from a birth date, or binning continuous data into discrete categories (e.g., grouping ages into "young," "middle-aged," and "senior"). The specific transformations applied will depend heavily on the analytical goals and the nature of the data itself. The objective is always to make the data more amenable to the planned analyses and to potentially uncover underlying patterns.
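
The two examples above, deriving an age from a birth date and binning ages into categories, might look like this in code. The cut-offs of 30 and 60 are arbitrary assumptions chosen for the illustration, as are the birth dates.

```python
from datetime import date


def age_on(birth_date, as_of):
    """Whole years elapsed between birth_date and as_of."""
    had_birthday = (as_of.month, as_of.day) >= (birth_date.month, birth_date.day)
    return as_of.year - birth_date.year - (0 if had_birthday else 1)


def age_group(age):
    """Bin an age into a category; the cut-offs 30 and 60 are illustrative assumptions."""
    if age < 30:
        return "young"
    if age < 60:
        return "middle-aged"
    return "senior"


as_of = date(2024, 6, 1)  # fixed reference date so results are reproducible
birth_dates = [date(2000, 6, 2), date(1980, 1, 15), date(1950, 6, 1)]

ages = [age_on(b, as_of) for b in birth_dates]  # [23, 44, 74]
groups = [age_group(a) for a in ages]           # ["young", "middle-aged", "senior"]
```

Using a fixed reference date instead of today's date keeps the derived feature stable when the wrangling step is re-run later.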

Handling Missing Data and Outliers

Missing data and outliers are common issues in raw datasets that can significantly impact the validity of analytical results if not handled appropriately. Missing data refers to the absence of values for one or more variables in an observation. There are various strategies for dealing with missing data. One simple approach is to delete the rows or columns containing missing values, but this can lead to a loss of valuable information, especially if the amount of missing data is substantial. Another common technique is imputation, where missing values are replaced with estimated values. These estimates can be simple, like the mean, median, or mode of the variable, or more sophisticated, derived using regression or machine learning techniques.
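
The difference between deletion and simple imputation can be seen on a small column. In this sketch `None` marks a missing value and the temperature readings are invented; regression-based or model-based imputation is not shown.

```python
from statistics import mean, median

# Hypothetical column with gaps; None marks a missing value.
temperatures = [21.0, None, 19.0, 35.0, None, 20.0]

observed = [t for t in temperatures if t is not None]

# Option 1: deletion keeps only the complete observations (4 of 6 here).
dropped = observed

# Option 2: impute with a summary statistic of the observed values.
fill_mean = mean(observed)      # 23.75, pulled upward by the extreme value 35.0
fill_median = median(observed)  # 20.5, less affected by extreme values

imputed_mean = [fill_mean if t is None else t for t in temperatures]
imputed_median = [fill_median if t is None else t for t in temperatures]
```

Deletion loses a third of the rows in this example, while the two imputations disagree by more than three degrees, which illustrates why the choice of method deserves a documented rationale.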

Outliers are data points that deviate markedly from other observations. They can be caused by measurement errors, data entry mistakes, or genuine, albeit rare, occurrences. Identifying outliers often involves statistical methods (e.g., Z-scores, interquartile range) or visualization techniques (e.g., box plots, scatter plots). Once identified, the approach to handling outliers depends on their nature. If an outlier is determined to be an error, it should be corrected or removed. If it represents a genuine extreme value, the decision to remove it or keep it depends on the specific analysis and its sensitivity to extreme values. Sometimes, transformation techniques like log transformation can reduce the impact of outliers.
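
Both statistical methods named above can be sketched with the standard library. The multiplier `k=1.5` for the interquartile range is the conventional choice; the Z-score `threshold=2.0` is an assumption picked for this tiny sample, and the readings are invented.

```python
from statistics import mean, pstdev, quantiles

readings = [10, 12, 11, 13, 12, 11, 95]  # hypothetical sensor values


def iqr_outliers(values, k=1.5):
    """Flag values lying more than k * IQR outside the first or third quartile."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


def z_score_outliers(values, threshold=2.0):
    """Flag values whose distance from the mean exceeds threshold standard deviations."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []  # all values identical, nothing can be an outlier
    return [v for v in values if abs(v - mu) / sigma > threshold]


flagged_iqr = iqr_outliers(readings)    # [95]
flagged_z = z_score_outliers(readings)  # [95]
```

With only seven points, the commonly quoted Z-score cut-off of 3 would not flag 95 here, because the extreme value itself inflates the standard deviation. That is one reason the quartile-based rule is often preferred for small samples.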

The choice of method for handling missing data and outliers is critical and should be guided by an understanding of the data and the objectives of the analysis. Careless handling can introduce bias or lead to misleading conclusions.

Integration of Disparate Data Sources

In many real-world scenarios, the data needed for analysis resides in multiple, disparate sources. These sources might include databases, spreadsheets, APIs, web pages, or flat files, each with its own structure, format, and level of quality. Data integration is the process of combining data from these different sources into a unified and consistent dataset that can be used for analysis. This is a challenging but essential part of data wrangling.

A key step in data integration is identifying common fields or keys that can be used to link records across different datasets (e.g., a customer ID, product SKU, or timestamp). Once these links are established, data can be merged or joined. However, this process is often complicated by inconsistencies in how data is represented across sources. For example, the same entity might have slightly different names or identifiers in different systems. Resolving these discrepancies, often referred to as entity resolution or record linkage, is a critical aspect of successful data integration.
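
A very simple form of this linking is to normalize the names on both sides into a shared key before joining. The sketch below assumes two hypothetical sources (`crm` and `billing`) and a deliberately crude `match_key` function; real entity resolution usually needs fuzzier matching and manual review.

```python
import re

# Two hypothetical sources describing the same customers with different spellings.
crm = [
    {"name": "Acme Corp.", "segment": "enterprise"},
    {"name": "Globex, Inc", "segment": "mid-market"},
]
billing = [
    {"customer": "ACME CORP", "balance": 1200},
    {"customer": "globex inc.", "balance": 300},
    {"customer": "Initech", "balance": 50},
]


def match_key(name):
    """Crude normalization: lowercase, drop punctuation, collapse whitespace."""
    stripped = re.sub(r"[^a-z0-9 ]", "", name.lower())
    return " ".join(stripped.split())


# Index one side by the normalized key, then look up the other side (a left join).
crm_by_key = {match_key(row["name"]): row for row in crm}

joined = []
for bill in billing:
    match = crm_by_key.get(match_key(bill["customer"]))
    joined.append({
        "customer": bill["customer"],
        "balance": bill["balance"],
        "segment": match["segment"] if match else None,  # None marks unmatched rows
    })
```

Keeping unmatched rows with an explicit `None` makes gaps in the linkage visible instead of silently dropping records.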

Furthermore, data integration involves harmonizing data schemas and formats. This might include standardizing column names, data types, and units of measurement. The goal is to create a single, comprehensive view of the data that allows for holistic analysis. Effective data integration can unlock significant value by enabling analysts to see relationships and patterns that would not be apparent from looking at individual data sources in isolation.
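
Harmonizing schemas often comes down to one small adapter per source that renames columns, converts types, and converts units into a shared target layout. The sources, column names, and target schema below are hypothetical.

```python
# Two hypothetical sources with different column names and units.
source_a = [{"SKU": "A1", "Weight_kg": "2.5"}]
source_b = [{"product_sku": "B7", "weight_lb": "11"}]

LB_TO_KG = 0.45359237  # kilograms per pound (exact by definition)


def from_a(row):
    """Source A already uses kilograms; only names and types change."""
    return {"sku": row["SKU"], "weight_kg": float(row["Weight_kg"])}


def from_b(row):
    """Source B reports pounds; convert to kilograms and round to grams."""
    kilograms = float(row["weight_lb"]) * LB_TO_KG
    return {"sku": row["product_sku"], "weight_kg": round(kilograms, 3)}


# Every record now shares the same column names, types, and units.
unified = [from_a(r) for r in source_a] + [from_b(r) for r in source_b]
```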

Tools and Technologies for Data Wrangling

A variety of tools and technologies are available to assist with the often complex tasks of data wrangling. The choice of tool often depends on the size and complexity of the data, the specific wrangling tasks required, and the user's technical proficiency.

Overview of Popular Tools (e.g., Python, R, SQL)

Several programming languages and specialized tools are widely used for data wrangling. Python, with its rich ecosystem of libraries, is a dominant force in the data science world, and by extension, in data wrangling. Libraries such as Pandas provide powerful and flexible data structures (like DataFrames) and functions for data manipulation, cleaning, transformation, and integration. NumPy is another fundamental Python library, essential for numerical computation and handling large, multi-dimensional arrays.

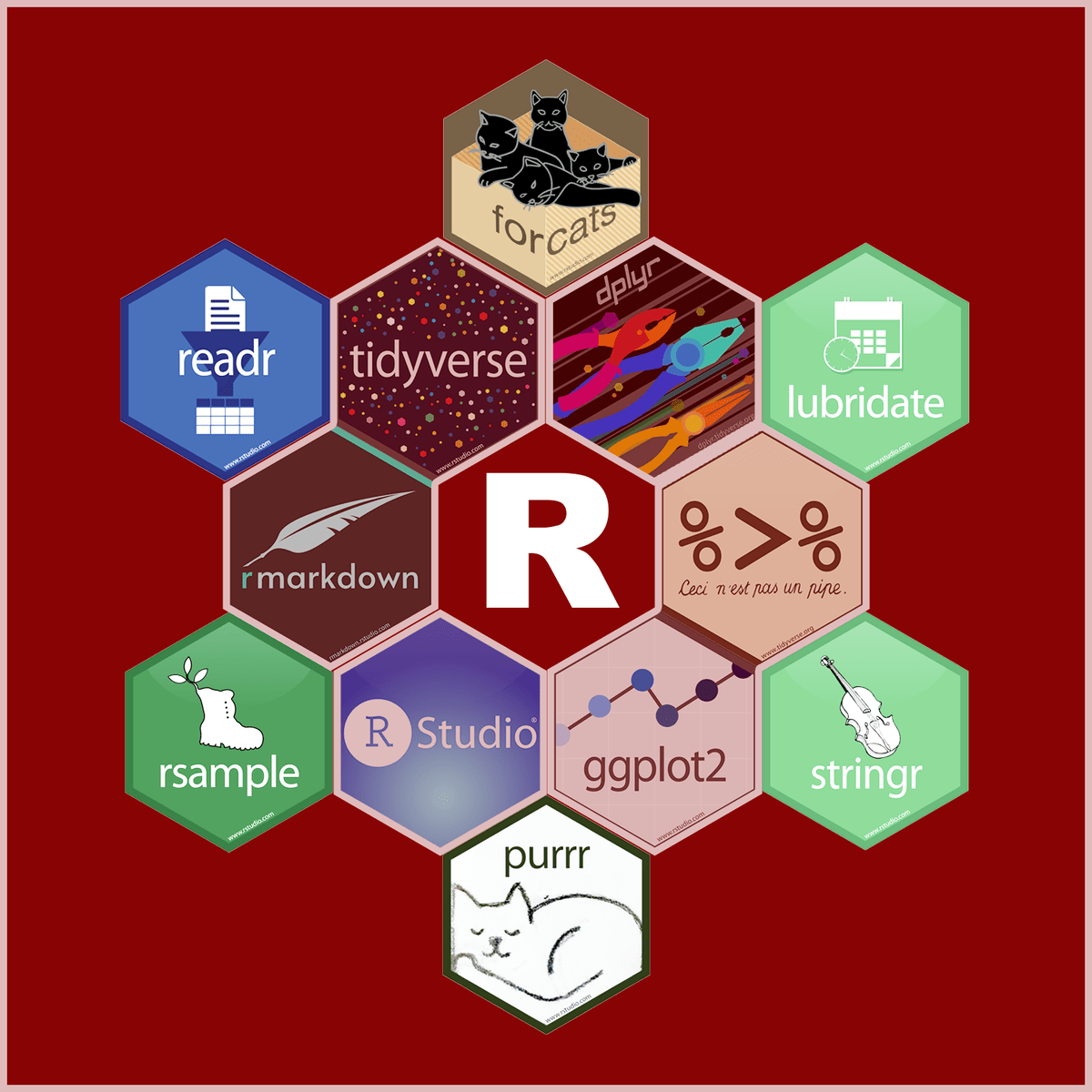

R is another programming language highly favored by statisticians and data analysts, particularly for its robust capabilities in statistical computing and data visualization. Packages within the Tidyverse, such as `dplyr` for data manipulation and `tidyr` for tidying data, offer an intuitive and powerful framework for data wrangling tasks.

SQL (Structured Query Language) is indispensable when working with relational databases. It is used to retrieve, filter, join, and aggregate data directly within the database, often serving as a first step in the data wrangling process before data is extracted for further manipulation in Python or R. Beyond these core programming languages, tools like Microsoft Excel with its Power Query feature, and specialized data preparation platforms like Alteryx, Trifacta, and OpenRefine, also play significant roles. Excel is often used for smaller datasets and by users who prefer a graphical interface, while dedicated platforms offer more advanced features and automation capabilities for complex wrangling workflows.
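
To illustrate how SQL can filter, join, and aggregate inside the database before anything is extracted, the sketch below runs a query against an in-memory SQLite database through Python's built-in `sqlite3` module. The tables, columns, and the threshold of 10 are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway in-memory database
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'north'), (2, 'south'), (3, 'north');
    INSERT INTO orders VALUES (1, 1, 40.0), (2, 1, 60.0), (3, 2, 25.0), (4, 3, 5.0);
""")

# Filter, join, and aggregate inside the database before extracting anything.
query = """
    SELECT c.region, COUNT(*) AS n_orders, SUM(o.amount) AS total
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    WHERE o.amount >= 10
    GROUP BY c.region
    ORDER BY c.region
"""
region_totals = conn.execute(query).fetchall()
conn.close()
```

Only the small summary in `region_totals` leaves the database, which is the main appeal of doing this first pass in SQL.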

Comparison of Open-Source vs. Proprietary Software

When selecting data wrangling tools, a key consideration is whether to use open-source software or proprietary (commercial) software. Open-source tools, such as Python and R along with their extensive libraries, offer significant advantages. They are typically free to use, which can be a major cost saving, especially for individuals and smaller organizations. They also benefit from large, active communities that contribute to their development, provide support through forums and documentation, and create a vast array of extension packages that enhance their functionality. The transparency of open-source code can also be a plus, allowing users to understand and even modify the underlying algorithms if needed.

Proprietary software, on the other hand, often comes with dedicated customer support, polished user interfaces, and integrated workflows that might be more user-friendly for non-programmers. Companies developing these tools invest in research and development to provide specialized features and ensure a certain level of reliability and performance. Examples include tools like Alteryx, Tableau Prep, or SAS Data Preparation. These often provide visual, drag-and-drop interfaces that can speed up common wrangling tasks without requiring coding.

The choice between open-source and proprietary tools often depends on factors like budget, the technical skills of the team, the scale and complexity of data wrangling tasks, and the need for specific features or enterprise-level support. Many organizations use a combination of both, leveraging the flexibility and power of open-source languages for complex tasks and the ease of use of proprietary tools for more standardized operations or for users with less programming experience.

Automation Tools and Frameworks

Given that data wrangling can be a time-consuming and repetitive process, automation plays a crucial role in improving efficiency and consistency. Automation tools and frameworks allow data professionals to define a sequence of wrangling steps (a workflow or pipeline) that can be executed automatically whenever new data arrives or updates are needed. This not only saves considerable time and manual effort but also reduces the risk of human error.

Programming languages like Python and R are inherently well-suited for automation, as scripts can be written to perform complex wrangling tasks programmatically. Libraries and functions within these languages can be chained together to create robust data processing pipelines. Tools like Apache Airflow or cloud-based workflow management services (e.g., AWS Glue, Azure Data Factory) provide more structured frameworks for scheduling, monitoring, and managing complex data pipelines that may involve multiple wrangling steps and dependencies.
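
In its simplest form, such a pipeline is an ordered list of functions applied one after another. The following sketch shows that chaining idea only; the step names and the sample batch are hypothetical, and scheduling, monitoring, and retries are what orchestration tools add on top.

```python
from functools import reduce


def strip_whitespace(rows):
    """Trim leading and trailing spaces from every text field."""
    return [{k: v.strip() for k, v in row.items()} for row in rows]


def drop_empty_email(rows):
    """Remove records whose email is empty after trimming."""
    return [row for row in rows if row["email"]]


def lowercase_email(rows):
    """Normalize email addresses to lowercase."""
    return [{**row, "email": row["email"].lower()} for row in rows]


# The pipeline is an ordered list of steps; the same list can be re-run on every new batch.
PIPELINE = [strip_whitespace, drop_empty_email, lowercase_email]


def run_pipeline(rows, steps=PIPELINE):
    """Apply each step in order, feeding the output of one into the next."""
    return reduce(lambda data, step: step(data), steps, rows)


batch = [
    {"name": " Ada ", "email": " ADA@Example.com "},
    {"name": "Bob", "email": "   "},
]
result = run_pipeline(batch)
```

Because each step takes rows and returns rows, steps can be tested individually and reordered without touching the runner.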

Many dedicated data wrangling platforms also offer automation features. These tools often allow users to build wrangling workflows visually and then schedule them to run automatically. The trend is towards more intelligent automation, where the tools not only execute predefined steps but also learn from past wrangling activities to suggest transformations or identify potential data quality issues proactively. By automating routine tasks, data professionals can focus more on the analytical aspects of their work and on deriving insights from the prepared data.

Emerging AI-Driven Solutions

The field of data wrangling is increasingly being influenced by advancements in Artificial Intelligence (AI) and Machine Learning (ML). AI-driven solutions are emerging that aim to automate and enhance various aspects of the data wrangling process, making it faster, more efficient, and more intelligent. These tools can learn from historical data and user interactions to suggest relevant data transformations, identify anomalies and errors, and even automatically clean and structure datasets with minimal human intervention.

For example, ML algorithms can in some cases predict missing values more accurately than simple statistical methods such as mean or median imputation, because they can draw on relationships between variables. AI can also assist in identifying complex patterns and relationships in data that might be indicative of quality issues or that could inform data transformation strategies. Some advanced tools are incorporating natural language processing (NLP) capabilities, allowing users to specify wrangling tasks in plain English rather than writing code or navigating complex interfaces.

The goal of these AI-driven solutions is not necessarily to replace human oversight entirely, but rather to augment the capabilities of data professionals, handling the more tedious and repetitive tasks and allowing humans to focus on more strategic and complex aspects of data preparation. As AI technology continues to mature, its role in automating and optimizing data wrangling workflows is expected to grow significantly, leading to more agile and insightful data analysis.

Challenges in Data Wrangling

Despite the availability of powerful tools and techniques, data wrangling is often fraught with challenges. These hurdles can range from the sheer scale of data to issues of privacy and the inherent complexities of unstructured information.

Scalability Issues with Large Datasets

One of the most significant challenges in modern data wrangling is dealing with the sheer volume, velocity, and variety of data, often referred to as Big Data. Traditional data wrangling tools and techniques that work well for smaller datasets may struggle or fail entirely when confronted with terabytes or even petabytes of information. Processing such large datasets can lead to performance bottlenecks, where operations take an unacceptably long time to complete, or even cause systems to run out of memory and crash.

Handling high-velocity data, such as streaming data from IoT devices or social media feeds, adds another layer of complexity. The wrangling process must be able to keep up with the incoming data flow in real-time or near real-time, which requires highly efficient and optimized workflows. The variety of data, including structured, semi-structured (like JSON or XML), and unstructured data (like text or images), also poses scalability challenges, as different types of data may require different wrangling approaches and tools.

To address these scalability issues, organizations often turn to distributed computing frameworks like Apache Spark or cloud-based data processing services. These platforms allow data wrangling tasks to be parallelized across multiple machines, significantly speeding up processing times for large datasets. However, effectively utilizing these distributed systems often requires specialized skills and careful design of wrangling pipelines.
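
On a single machine, the out-of-memory problem described above can sometimes be eased by streaming a file row by row and keeping only running aggregates. The sketch below illustrates that idea with the standard library; it is not a substitute for a distributed framework, and the `io.StringIO` object merely stands in for a large file with hypothetical `user` and `bytes` columns.

```python
import csv
import io
from collections import Counter

# Stand-in for a file too large to load at once; in practice this would be an open file.
big_file = io.StringIO("user,bytes\nu1,100\nu2,250\nu1,50\nu3,bad\nu2,10\n")


def stream_totals(handle):
    """Accumulate per-user totals one row at a time; memory grows with users, not rows."""
    totals = Counter()
    skipped = 0
    for row in csv.DictReader(handle):
        try:
            amount = int(row["bytes"])
        except ValueError:
            skipped += 1  # count malformed rows instead of aborting the whole run
            continue
        totals[row["user"]] += amount
    return totals, skipped


totals, skipped = stream_totals(big_file)
```

Reporting the number of skipped rows alongside the totals keeps data quality problems visible even when the raw file is never fully inspected.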

Data Privacy and Security Concerns

Data privacy and security are paramount concerns throughout the data lifecycle, and data wrangling is no exception. Datasets often contain sensitive information, such as personally identifiable information (PII), financial records, or health data. During the wrangling process, this data is accessed, manipulated, and potentially transformed, creating risks if not handled with extreme care. Unauthorized access, data breaches, or accidental exposure of sensitive information can have severe consequences, including legal penalties, reputational damage, and loss of customer trust.

Data wranglers must be acutely aware of and adhere to relevant data privacy regulations, such as the General Data Protection Regulation (GDPR) in the European Union, and the California Consumer Privacy Act (CCPA) and the Health Insurance Portability and Accountability Act (HIPAA) in the United States. This involves implementing appropriate security measures to protect data during wrangling, such as data encryption, access controls, and secure storage. Techniques like data anonymization or pseudonymization, where direct identifiers are removed or replaced, may be necessary to reduce privacy risks while still allowing for meaningful analysis.
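
One way to pseudonymize a direct identifier is to replace it with a keyed hash, so the same person always maps to the same token while the original value is not stored. The sketch below uses HMAC-SHA256 from the standard library; the key shown is a placeholder, the 16-character truncation is an arbitrary choice for readability, and the patient records are invented.

```python
import hashlib
import hmac

# Assumption: in real use the key comes from a secrets manager, never from source code.
SECRET_KEY = b"replace-with-a-managed-secret"


def pseudonymize(identifier, key=SECRET_KEY):
    """Replace a direct identifier with a keyed hash (HMAC-SHA256), truncated to 16 hex chars."""
    digest = hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


patients = [
    {"email": "ana@example.com", "visits": 3},
    {"email": "ben@example.com", "visits": 1},
    {"email": "ana@example.com", "visits": 2},
]

# The email column is dropped; records for the same person still share a token.
safe_rows = [
    {"patient_token": pseudonymize(p["email"]), "visits": p["visits"]}
    for p in patients
]
```

Anyone holding the key can recompute the tokens, so pseudonymized data generally still counts as personal data under GDPR and needs the same access controls as the key itself.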

Ensuring that data wrangling processes are compliant with these regulations often involves establishing clear data governance policies and procedures. This includes documenting data handling practices, tracking data lineage (where data comes from and how it's transformed), and conducting regular audits to ensure compliance. Balancing the need to thoroughly wrangle data for analytical insights with the imperative to protect individual privacy is a critical and ongoing challenge.

Handling Unstructured Data Formats

While structured data, neatly organized in rows and columns like in a relational database, is relatively straightforward to wrangle, unstructured data presents a significantly greater challenge. Unstructured data includes formats like plain text documents, emails, social media posts, images, audio, and video. This type of data does not have a predefined data model or organization, making it difficult to process using traditional wrangling techniques.

Extracting meaningful information from unstructured data often requires specialized tools and techniques from fields like Natural Language Processing (NLP) for text data, or computer vision for image data. For example, wrangling text data might involve tasks like tokenization (breaking text into words or phrases), stemming or lemmatization (reducing words to their root form), removing stop words (common words like "the" or "is"), and identifying named entities (like people, organizations, or locations).
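
Two of these steps, tokenization and stop-word removal, can be sketched without any NLP library. The regular expression and the tiny `STOP_WORDS` set are simplifying assumptions; stemming, lemmatization, and named-entity recognition normally rely on dedicated libraries and are not shown.

```python
import re

# A tiny illustrative stop-word list; real lists contain far more entries.
STOP_WORDS = {"the", "is", "a", "an", "of", "and", "to", "in"}


def tokenize(text):
    """Lowercase the text and split it into word tokens (letters and apostrophes)."""
    return re.findall(r"[a-z']+", text.lower())


def remove_stop_words(tokens):
    """Keep only tokens that are not in the stop-word list."""
    return [t for t in tokens if t not in STOP_WORDS]


review = "The delivery is fast, and the packaging is great!"
tokens = tokenize(review)
content_tokens = remove_stop_words(tokens)  # ["delivery", "fast", "packaging", "great"]
```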

Transforming unstructured data into a structured or semi-structured format that can be analyzed often involves creating numerical representations, such as vector embeddings for text or feature vectors for images. The sheer volume and complexity of unstructured data add to the difficulty. Developing effective strategies for wrangling diverse unstructured data formats is an ongoing area of development and a key skill for data professionals working with modern datasets.
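
A much simpler numerical representation than a learned embedding is a bag-of-words count vector, where each position counts how often one vocabulary word appears in a document. The sketch below uses that simpler technique to show the general idea of turning text into numbers; the two documents are invented.

```python
from collections import Counter

documents = [
    "cheap flights cheap hotels",
    "hotels near the beach",
]

# Vocabulary: every distinct word across the corpus, in a fixed (sorted) order.
vocabulary = sorted({word for doc in documents for word in doc.split()})


def to_count_vector(doc):
    """Count how often each vocabulary word occurs in the document."""
    counts = Counter(doc.split())
    return [counts[word] for word in vocabulary]


vectors = [to_count_vector(doc) for doc in documents]
```

Each document becomes a fixed-length list of integers aligned with `vocabulary`, which is a structured form that standard analytical tools can work with.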

MongoDB is a NoSQL database often used for managing unstructured and semi-structured data. Understanding its principles can be beneficial.

Time and Resource Constraints

Data wrangling is notoriously time-consuming, often cited as one of the most labor-intensive parts of any data project. This significant time investment can put a strain on project timelines and budgets. The process is iterative: data is explored, cleaned, transformed, and validated, often over multiple cycles, and this repetition adds to the time commitment. Identifying all potential data quality issues and determining the best transformations can be a complex and exploratory process.

Beyond the time commitment of data professionals, wrangling can also be resource-intensive in terms of computational power and storage, especially when dealing with large or complex datasets. Running complex transformations or integrations on massive datasets may require significant processing power and memory, potentially necessitating investment in more powerful hardware or cloud computing resources.

Organizations often face a trade-off between the thoroughness of data wrangling and the available time and resources. While meticulous wrangling leads to higher quality data and more reliable insights, practical constraints may force compromises. Finding the right balance, and leveraging automation and efficient tools to minimize the time and resource burden, are ongoing challenges in the field. Effective project management and clear communication about the importance and effort involved in data wrangling are also crucial for managing expectations and securing necessary resources.

Ethical Considerations in Data Wrangling

Beyond the technical challenges, data wrangling carries significant ethical responsibilities. The way data is cleaned, transformed, and prepared can have profound implications, particularly concerning bias, fairness, and privacy. Data professionals must approach their work with a strong ethical compass.

Bias Detection and Mitigation

Bias in data can arise from various sources, including historical prejudices reflected in the data, a non-representative sample, or even the choices made by data wranglers themselves during the cleaning and transformation process. If undetected and unaddressed, these biases can be perpetuated and even amplified in subsequent analyses and machine learning models, leading to unfair or discriminatory outcomes. For example, if historical hiring data reflects gender bias, a model trained on this data without proper mitigation might learn to unfairly favor one gender over another.

Detecting bias requires careful examination of the data, looking for skewed distributions, underrepresentation of certain groups, or correlations that might reflect societal biases. This often involves not just statistical analysis but also a deep understanding of the context in which the data was generated. Mitigation strategies can include re-sampling the data to create a more balanced representation, re-weighting certain data points, or modifying algorithms to be less sensitive to biased features. It's important to note that completely eliminating bias is often very difficult, but conscious effort must be made to identify and reduce it as much as possible.
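
A first check for underrepresentation and skewed outcomes can be as simple as counting. The sketch below computes each group's share of the data, the positive-outcome rate per group, and re-weighting factors that give every group the same total weight. The `applicants` records and group labels are hypothetical, and these numbers are a starting point for investigation, not a fairness verdict.

```python
from collections import Counter

# Hypothetical records with a group attribute and a binary outcome.
applicants = [
    {"group": "A", "hired": 1}, {"group": "A", "hired": 1},
    {"group": "A", "hired": 0}, {"group": "A", "hired": 1},
    {"group": "B", "hired": 0}, {"group": "B", "hired": 1},
]

counts = Counter(row["group"] for row in applicants)

# Representation: what fraction of the dataset each group makes up.
shares = {g: n / len(applicants) for g, n in counts.items()}

# Outcome rate: fraction of positive outcomes within each group.
hire_rate = {
    g: sum(r["hired"] for r in applicants if r["group"] == g) / counts[g]
    for g in counts
}

# Re-weighting: scale each record so every group carries the same total weight.
weights = {g: len(applicants) / (len(counts) * n) for g, n in counts.items()}
```

Here group B makes up a third of the records and has a lower outcome rate, and the weights (0.75 for A, 1.5 for B) equalize the groups' total influence without discarding any data.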

Transparency in how bias is detected and addressed is also crucial. Data wranglers and analysts should document the potential biases they've identified and the steps taken to mitigate them. This allows for scrutiny and helps build trust in the analytical outcomes. The ethical imperative is to strive for fairness and to ensure that data-driven decisions do not disproportionately harm vulnerable groups.

Compliance with Data Regulations (e.g., GDPR)

Adherence to data protection and privacy regulations is a critical ethical and legal responsibility in data wrangling. Regulations like the General Data Protection Regulation (GDPR) in the European Union, the California Consumer Privacy Act (CCPA), and others around the world impose strict rules on how personal data can be collected, processed, stored, and used. Data wranglers must be knowledgeable about these regulations and ensure that their practices are compliant.

Compliance involves several key aspects. Data minimization, a principle of GDPR, means collecting and processing only the data that is necessary for a specific, legitimate purpose. During wrangling, any data that is not relevant to the analytical goal should ideally be removed or anonymized. Secure handling of data is also vital, including implementing strong access controls, encryption, and measures to prevent data breaches.
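
In a wrangling script, data minimization can be enforced with an explicit allow-list of fields, so that anything not needed for the stated purpose never reaches the analysis dataset. The field names and records below are hypothetical.

```python
# Assumed allow-list: the only fields the (hypothetical) revenue analysis needs.
ALLOWED_FIELDS = ("order_id", "amount", "country")

raw_orders = [
    {"order_id": 1, "amount": 19.9, "country": "DE",
     "full_name": "Ana Example", "phone": "555-0100"},
    {"order_id": 2, "amount": 5.0, "country": "FR",
     "full_name": "Ben Example", "phone": "555-0101"},
]


def minimize(rows, allowed=ALLOWED_FIELDS):
    """Keep only allow-listed fields; everything else is dropped."""
    return [{field: row[field] for field in allowed} for row in rows]


minimal_orders = minimize(raw_orders)
```

An allow-list fails safe: a new sensitive column added upstream is excluded by default, whereas a block-list would let it through until someone noticed.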

Individuals often have rights regarding their data, such as the right to access, rectify, or erase their personal information. Data wrangling processes should be designed in a way that facilitates the fulfillment of these rights. Maintaining records of data processing activities, including wrangling steps, is also often a regulatory requirement and helps demonstrate accountability. Failure to comply with data regulations can result in significant fines and reputational damage, underscoring the importance of embedding compliance into every stage of the data wrangling workflow.

Transparency in Data Manipulation

Transparency in how data is manipulated during the wrangling process is a cornerstone of ethical data handling and scientific rigor. It is essential that the steps taken to clean, transform, and prepare data are well-documented and reproducible. This allows others to understand what has been done to the data, to scrutinize the methods used, and, if necessary, to replicate the wrangling process to verify the results.

Lack of transparency can obscure biases, errors, or questionable decisions made during wrangling, potentially leading to flawed or misleading analytical outcomes. For example, if outliers are removed without justification, or if missing data is imputed using a method that introduces bias, this should be clearly stated. Documentation should ideally include the rationale behind each wrangling decision, the specific tools or code used, and any assumptions made.

Version control systems (like Git) for code and detailed logs of transformations can be invaluable for maintaining transparency. When data and the code used to wrangle it are shared (where appropriate and respecting privacy), it fosters collaboration, allows for peer review, and builds trust in the data and the insights derived from it. Ultimately, transparency ensures accountability and upholds the integrity of the data analysis process.
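
One lightweight way to keep such a log is to have the wrangling code record each step as it runs. The sketch below is an assumption about how that might look, not a standard tool: every step carries a name and a written rationale, and the runner records how many rows went in and came out. The step functions and the `amount` field are hypothetical.

```python
import json

def run_pipeline(rows, steps):
    """Apply each (name, rationale, function) step and record what it did."""
    log = []
    for name, rationale, func in steps:
        rows_in = len(rows)
        rows = func(rows)
        log.append({
            "step": name,
            "rationale": rationale,
            "rows_in": rows_in,
            "rows_out": len(rows),
        })
    return rows, log

def drop_missing_amount(rows):
    """Remove rows where the amount is absent."""
    return [r for r in rows if r.get("amount") is not None]

def drop_negative_amount(rows):
    """Remove rows with a negative amount."""
    return [r for r in rows if r["amount"] >= 0]

steps = [
    ("drop_missing_amount", "amount is required for the analysis", drop_missing_amount),
    ("drop_negative_amount", "negative amounts assumed to be entry errors", drop_negative_amount),
]
rows = [{"amount": 10.0}, {"amount": None}, {"amount": -5.0}, {"amount": 7.5}]

cleaned, log = run_pipeline(rows, steps)
print(json.dumps(log, indent=2))  # the log can be committed alongside the code
```

Committing the printed log with the script gives reviewers both the rationale for each decision and its measurable effect on the data.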

Ethical Implications of Data Usage

The ethical considerations in data wrangling extend to the potential uses and impacts of the data once it has been prepared. Data wranglers, as those who shape and prepare the data, share a responsibility to consider how the data might be used and whether those uses are ethical. This involves thinking about potential harms or benefits that could arise from the insights generated from the wrangled data.

For instance, data wrangled for a marketing campaign could be used in ways that are manipulative or exploit vulnerable consumers. Data prepared for predictive policing models could, if biased, lead to unfair targeting of certain communities. While the data wrangler may not be the ultimate decision-maker on how data is used, they are often in a position to identify potential ethical red flags early in the process. This might involve raising concerns about data quality, potential biases, or privacy implications that could lead to unethical applications.

Promoting ethical data usage involves fostering a culture of responsible data handling within an organization. This includes advocating for data to be used in ways that are fair, just, and respect individual rights and societal values. It also means being mindful of the potential for data to be misinterpreted or misused, and taking steps during the wrangling process (such as clear documentation of limitations) to mitigate these risks.

Career Pathways in Data Wrangling

Proficiency in data wrangling is a highly sought-after skill that opens doors to a variety of career paths in the rapidly growing field of data. For those considering a career in this domain, or looking to pivot, understanding the roles, skill development, and market trends is crucial. It's a field that demands both technical acumen and a problem-solving mindset, but the rewards can be substantial, both professionally and intellectually.

Embarking on a data-focused career can be an exciting journey. While challenges are inherent in any meaningful pursuit, the ability to transform raw information into actionable insights is a powerful skill. Remember that every expert was once a beginner, and consistent effort in learning and practice will pave the way for growth and success. OpenCourser offers a vast array of Data Science courses to help you build a strong foundation and advance your skills.

Entry-Level Roles and Responsibilities

For individuals starting in the field, entry-level roles often involve supporting more senior data professionals in the tasks of collecting, cleaning, and preparing data. Titles might include Junior Data Analyst, Data Technician, or Data Wrangler. Responsibilities typically include performing initial data quality checks, identifying and handling missing values or obvious errors, and executing predefined data transformation scripts under supervision. You might also be involved in documenting data sources and wrangling processes.

These roles provide invaluable hands-on experience with real-world datasets and data wrangling tools. You'll learn to apply foundational techniques for data cleaning, structuring, and validation. A significant part of the learning curve involves understanding the nuances of different data types, the common pitfalls in data quality, and the importance of meticulous attention to detail. While the initial tasks might seem foundational, they are the bedrock upon which all subsequent data analysis is built, making this a critical learning phase.

Employers will look for a basic understanding of data concepts, familiarity with tools like Excel or SQL, and perhaps some exposure to programming languages like Python or R. Strong problem-solving skills and a willingness to learn are also highly valued. This stage is about building your technical toolkit and gaining practical experience that will serve as a springboard for more advanced roles. For those exploring options, browsing career development resources can provide additional guidance.

Skill Development for Career Advancement

Advancing in a data wrangling-focused career requires continuous skill development, moving beyond basic tasks to more complex data manipulation, automation, and strategic thinking. Mastering programming languages like Python (with libraries such as Pandas and NumPy) and R (with the Tidyverse) is crucial for handling more sophisticated wrangling challenges and automating repetitive tasks. Proficiency in SQL for database querying and manipulation also remains a core skill.

Beyond technical proficiency, developing a deeper understanding of data modeling, data governance, and data quality management principles becomes increasingly important. This includes learning how to design efficient data pipelines, ensure data integrity and security, and work with various data storage technologies, including relational databases, NoSQL databases, and data lakes. Familiarity with cloud computing platforms (like AWS, Azure, or GCP) and their data services is also becoming essential as more data and analytics workloads move to the cloud.

Soft skills also play a vital role in career advancement. Strong problem-solving abilities, critical thinking, attention to detail, and effective communication are highly valued. The ability to understand business requirements, translate them into data wrangling strategies, and explain complex data issues to non-technical stakeholders is key for moving into more senior or specialized roles. Consider exploring resources in Professional Development to hone these complementary skills.

Certifications and Credentials

While practical experience and a strong skill set are paramount, certifications and credentials can play a role in demonstrating your knowledge and commitment to the field of data wrangling and broader data science. Various organizations and technology vendors offer certifications related to data analysis, data engineering, and specific tools like Python, R, SQL, or cloud platforms. These can be particularly helpful for those transitioning into the field or seeking to validate their skills for potential employers.

Some certifications focus on foundational data literacy and analytical skills, while others are more specialized, covering advanced data manipulation techniques, database management, or big data technologies. Vendor-specific certifications (e.g., from Microsoft, AWS, Google Cloud) can demonstrate proficiency in using particular platforms and services that are widely used in the industry. Industry-recognized certifications from professional organizations can also add credibility to your profile.

It's important to research certifications carefully to ensure they align with your career goals and are well-regarded by employers in your target industry or role. While a certification alone won't guarantee a job, it can complement your resume, provide structured learning, and potentially give you an edge in a competitive job market. Often, the process of studying for a certification is as valuable as the credential itself, as it deepens your understanding of key concepts and best practices. Many online courses on platforms like OpenCourser, including those leading to a certificate of completion, can be a great way to build these credentials.

Industry Demand and Salary Trends

The demand for professionals with strong data wrangling skills is robust and expected to continue growing. As organizations across all sectors increasingly rely on data to drive decisions, innovate, and gain a competitive edge, the need for individuals who can effectively prepare and manage that data is paramount. Roles such as Data Analyst, Data Engineer, and Data Scientist, all of which require significant data wrangling expertise, consistently rank among the most in-demand jobs. According to the U.S. Bureau of Labor Statistics, employment for data scientists is projected to grow significantly faster than the average for all occupations.

Salary trends for roles involving data wrangling are generally positive, reflecting the high demand and the specialized skills required. Compensation can vary based on factors such as years of experience, level of education, specific technical skills (e.g., proficiency in Python, Spark, cloud platforms), industry, and geographic location. Entry-level positions will typically offer competitive starting salaries, with significant potential for growth as individuals gain experience and develop more advanced skills.

The data wrangling market itself is also predicted to expand, which indicates a sustained need for both the tools and the talent to perform these critical data preparation tasks. Staying updated with emerging technologies and continuously honing one's skills will be key to capitalizing on the opportunities in this dynamic and evolving field. For more specific salary information, resources like the U.S. Bureau of Labor Statistics Occupational Outlook Handbook can provide valuable insights into various data-related professions.

Educational Routes for Data Wrangling

Aspiring data wranglers have several educational pathways to acquire the necessary knowledge and skills. The best route often depends on individual learning preferences, career goals, and existing educational background. Whether through formal degree programs or more flexible self-learning options, a commitment to continuous learning is key in this evolving field.

University Degrees and Specialized Programs

A traditional route into data-focused careers, including those heavy on data wrangling, is through a university degree. Bachelor's degrees in fields like Computer Science, Statistics, Mathematics, Information Technology, or Data Science often provide a strong theoretical foundation and practical skills relevant to data wrangling. These programs typically cover fundamental concepts in programming, database management, statistical analysis, and data structures, all of which are crucial for effective data manipulation.

For those seeking more specialized knowledge or aiming for advanced roles, a Master's degree in Data Science, Business Analytics, Data Engineering, or a related field can be highly beneficial. These graduate programs often offer more in-depth coursework on advanced data wrangling techniques, machine learning, big data technologies, and data governance. Many universities are also developing specialized tracks or certificates within broader programs that focus specifically on data preparation and management.

University programs provide a structured learning environment, access to experienced faculty, and opportunities for research or capstone projects that can offer valuable real-world experience. They also offer networking opportunities with peers and industry professionals. While a degree is a significant time and financial commitment, it can provide a comprehensive and well-rounded education for a career in data. You can explore a wide range of university-level courses and programs related to Computer Science and Mathematics on OpenCourser.

Workshops and Bootcamps

For individuals seeking a more intensive and shorter-term educational experience, workshops and bootcamps focused on data science, data analytics, or data engineering can be an effective option. These programs are typically designed to be immersive and career-focused, aiming to equip students with practical, job-ready skills in a condensed timeframe, often ranging from a few weeks to several months.

Data wrangling is a core component of most data science and analytics bootcamps, with curricula often including hands-on training in tools like Python, R, SQL, and various data manipulation libraries. Students work on real-world projects and case studies, learning how to clean, transform, and prepare data for analysis and modeling. The emphasis is usually on practical application and building a portfolio of work that can be showcased to potential employers.

Bootcamps can be particularly attractive to career changers or those looking to quickly upskill in specific data-related technologies. They offer a focused learning path and often provide career services, such as resume workshops and interview preparation. However, the intensity and rapid pace of bootcamps require a significant time commitment and self-discipline. When considering a bootcamp, it's important to research the curriculum, instructor credentials, and job placement rates to ensure it aligns with your learning style and career aspirations. OpenCourser's Learner's Guide offers tips on how to choose and make the most of such programs.

Self-Taught Strategies and Resources

With the abundance of online resources available today, a self-taught path is a viable option for learning data wrangling. This route offers maximum flexibility, allowing learners to study at their own pace and focus on specific areas of interest. Numerous online learning platforms, including OpenCourser, offer a vast selection of courses, tutorials, and guided projects on data wrangling, programming languages like Python and R, SQL, and various data analysis tools.

Successful self-teaching requires discipline, motivation, and a structured approach. Creating a personal learning plan, setting achievable goals, and consistently dedicating time to study and practice are essential. Supplementing online courses with books, blogs, and participation in online communities (like forums or Q&A sites such as Stack Overflow) can provide additional support and learning opportunities. Working on personal projects using publicly available datasets is also crucial for applying learned concepts and building a practical portfolio.

While the self-taught route can be more challenging in terms of staying motivated and validating skills without formal credentials, it is a cost-effective way to gain valuable knowledge. Many successful data professionals have built their careers through self-learning, demonstrating that with dedication and the right resources, it is possible to master the art of data wrangling independently. For those starting out, exploring foundational topics in Programming can be a great first step.

Many learners find success by combining self-study with structured online courses. Books are also excellent resources for self-learners.

Integration with Data Science Curricula

Data wrangling is a fundamental and integral part of virtually all comprehensive data science curricula, whether in university degree programs, bootcamps, or online specializations. Recognizing its critical role in the data science workflow, educators ensure that students develop a strong foundation in data preparation techniques before moving on to more advanced topics like machine learning, statistical modeling, or data visualization.

In a typical data science curriculum, data wrangling modules will cover topics such as data cleaning (handling missing values, errors, duplicates), data transformation (reshaping, aggregating, normalizing data), data integration (merging datasets from different sources), and feature engineering (creating new variables from existing ones). Students learn to use key tools and programming languages like Python (with Pandas, NumPy), R (with dplyr, tidyr), and SQL for these tasks.
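
The four task families named above can be sketched in a few lines of pandas. The tables, column names, and the choice of median imputation below are hypothetical and chosen only to keep the example small.

```python
import pandas as pd

# Hypothetical toy tables.
orders = pd.DataFrame({
    "order_id": [1, 2, 2, 3, 4],
    "customer_id": [10, 11, 11, 10, 12],
    "amount": [20.0, 30.0, 30.0, None, 50.0],
})
customers = pd.DataFrame({
    "customer_id": [10, 11, 12],
    "region": ["north", "south", "north"],
})

# Cleaning: drop exact duplicate rows, then fill missing amounts with the median.
orders = orders.drop_duplicates()
orders["amount"] = orders["amount"].fillna(orders["amount"].median())

# Integration: attach customer attributes with a left join.
merged = orders.merge(customers, on="customer_id", how="left")

# Feature engineering: flag orders above the overall mean amount.
merged["above_mean"] = merged["amount"] > merged["amount"].mean()

# Transformation: aggregate to one row per region.
by_region = merged.groupby("region", as_index=False)["amount"].sum()
```

Median imputation is used here purely for brevity; whether it is appropriate depends on why the values are missing.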

The emphasis is often on hands-on practice, with students working on projects that require them to wrangle real-world, messy datasets to prepare them for analysis. This practical experience is crucial for developing the problem-solving skills and attention to detail necessary for effective data wrangling. By integrating data wrangling deeply into the curriculum, data science programs aim to produce graduates who are not only proficient in building models but also adept at the essential groundwork of preparing high-quality data, which is the foundation of any successful data science endeavor. Exploring related topics like Data Analysis and Machine Learning will often show data wrangling as a prerequisite or core component.

Online Learning and Self-Paced Study

Online learning has revolutionized access to education, and data wrangling is no exception. For individuals seeking to learn at their own pace, upskill for their current role, or transition into a data-focused career, online courses and resources offer a flexible and often cost-effective pathway. The key to success in self-paced study is discipline, a structured approach, and a commitment to practical application.

If you're embarking on this journey, remember that challenges are part of the learning process. Don't be discouraged if concepts seem difficult at first. Break down complex topics into smaller, manageable parts, celebrate small victories, and don't hesitate to seek help from online communities or mentors. The ability to learn and adapt independently is a valuable asset in the ever-evolving field of data.

Benefits of Online Courses

Online courses offer numerous benefits for learning data wrangling. One of the primary advantages is flexibility. Learners can access course materials and lectures at any time and from anywhere, allowing them to study at their own pace and fit learning around their existing commitments, such as work or family. This self-paced nature can be particularly beneficial for a topic like data wrangling, where understanding and mastering different techniques may require varying amounts of time for different individuals.

Cost-effectiveness is another significant benefit. Online courses are often more affordable than traditional university programs or in-person bootcamps. Many platforms offer a wide range of courses, from free introductory modules to more comprehensive paid specializations or certificate programs. This accessibility makes it possible for a broader audience to acquire valuable data wrangling skills. Furthermore, online courses frequently provide hands-on exercises, quizzes, and projects that allow learners to apply what they've learned in a practical context, which is crucial for skill development in a technical field like data wrangling. OpenCourser makes it easy to browse through thousands of courses to find the ones that best fit your learning needs and budget. You can even check the deals page for potential savings on courses.

Many online courses are taught by industry experts or academics from renowned institutions, providing access to high-quality instruction. They often include discussion forums or community features where learners can interact with peers and instructors, ask questions, and collaborate on problems. This sense of community can be very supportive, especially for self-learners. The ability to revisit course materials as needed also aids in reinforcing learning and understanding complex concepts.

Building a Portfolio Through Projects

For aspiring data wranglers, especially those who are self-taught or transitioning careers, building a portfolio of projects is critically important. A well-curated portfolio serves as tangible evidence of your skills and ability to apply data wrangling techniques to real-world (or realistic) problems. It allows potential employers to see what you can do, rather than just relying on a list of skills on a resume.

Projects can range in complexity, from cleaning and transforming a small, publicly available dataset to developing a more extensive data pipeline. The key is to choose projects that genuinely interest you and that allow you to showcase a variety of wrangling tasks, such as handling missing data, merging disparate sources, cleaning messy text, or restructuring data for a specific analytical purpose. Document your process thoroughly for each project: describe the data source, the challenges encountered, the wrangling steps you took (including code snippets if applicable), and the rationale behind your decisions. This documentation is as important as the final cleaned dataset.

You can find datasets for projects from various sources, including government open data portals, sites like Kaggle, or even by scraping data from websites (while respecting terms of service). Sharing your projects on platforms like GitHub not only makes them accessible to potential employers but also allows you to get feedback from the broader data community. A strong portfolio demonstrates initiative, practical skills, and a passion for working with data, which can significantly enhance your job prospects. OpenCourser's "Activities" section on many course pages suggests projects that can help you build these valuable portfolio pieces. You can also manage and share your completed projects and learning paths using the "Save to List" feature on OpenCourser and then publishing your lists from the manage list page.

Community and Peer Learning

Engaging with online communities and participating in peer learning can greatly enhance the self-paced study experience for data wrangling. Learning in isolation can sometimes be challenging, and communities provide a valuable support system, a source of motivation, and opportunities for collaborative problem-solving. Platforms like Stack Overflow, Reddit (e.g., r/datascience, r/learnpython), specialized forums for data analysis tools, and discussion boards within online courses are excellent places to ask questions, share knowledge, and learn from the experiences of others.

Peer learning can take various forms. You might collaborate with other learners on projects, participate in study groups, or review each other's code or wrangling approaches. Explaining a concept to someone else is often one of the best ways to solidify your own understanding. Seeing how others tackle similar problems can also expose you to new techniques or perspectives that you might not have considered on your own.

Many online learning platforms actively foster community interaction. Contributing to these communities, whether by asking thoughtful questions or offering help to others, can be a rewarding experience. It also helps you build a professional network, which can be beneficial for career development. Don't underestimate the power of learning with and from others, even when pursuing a self-paced study path. The shared journey can make the learning process more enjoyable and effective. OpenCourser's own blog, OpenCourser Notes, often features articles and discussions that can connect you with broader learning trends and insights.

Transitioning to Formal Roles Post-Certification

For individuals who have acquired data wrangling skills through online courses, self-study, or bootcamps, and perhaps earned certifications, the next step is often to transition into a formal data-related role. This transition requires more than just technical skills; it also involves effectively showcasing your abilities to potential employers and navigating the job market. Your portfolio of projects will be a key asset here, providing concrete proof of your capabilities.

Tailor your resume and cover letter to highlight your data wrangling skills and relevant projects, emphasizing the problems you solved and the tools you used. Practice articulating your wrangling process and the rationale behind your decisions, as this is often a topic in technical interviews. Networking can also be very beneficial; connect with professionals in the field through LinkedIn, attend virtual or local meetups (if available), and don't hesitate to reach out for informational interviews to learn more about specific roles or companies.

Be prepared to start in an entry-level or junior role, even if you have experience in a different field. This allows you to gain practical, on-the-job experience and further develop your skills within a professional setting. Emphasize your eagerness to learn and contribute. For guidance on how to present your online course certificates, you might find helpful articles in OpenCourser's Learner's Guide, such as "How to add a certificate to LinkedIn or your resume." The journey might require persistence, but with a solid foundation in data wrangling and a proactive approach to the job search, a fulfilling career in data is attainable.

Future Trends in Data Wrangling

The field of data wrangling is continuously evolving, driven by advancements in technology, the ever-increasing volume and complexity of data, and the growing demand for more sophisticated analytics. Staying abreast of these future trends is crucial for data professionals who want to remain effective and relevant in this dynamic landscape.

Impact of Machine Learning Automation

One of the most significant trends shaping the future of data wrangling is the increasing use of Machine Learning (ML) to automate and enhance various aspects of the process. ML algorithms are being developed and integrated into data wrangling tools to perform tasks that traditionally required significant manual effort. This includes automatically detecting and correcting errors, identifying and imputing missing values with greater accuracy, suggesting relevant data transformations, and even generating code for wrangling pipelines based on high-level user specifications.

AI-powered data wrangling aims to make the process faster, more efficient, and less prone to human error. For example, ML models can learn from historical data preparation activities to understand common data quality issues and how they were resolved, then apply this knowledge to new datasets. Anomaly detection algorithms can flag unusual patterns or outliers that might otherwise be missed. As these technologies mature, they have the potential to significantly reduce the manual burden of data wrangling, freeing up data professionals to focus on more strategic and analytical tasks.
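
To make the idea of automated outlier flagging concrete, here is a deliberately simple statistical stand-in rather than a learned model: the interquartile range (IQR) rule, which flags values far outside the middle half of the data. The sample readings and the conventional multiplier of 1.5 are assumptions for illustration.

```python
import statistics

def iqr_outliers(values, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

# Hypothetical sensor readings with one suspicious value.
readings = [10, 12, 11, 13, 12, 11, 95]
flagged = iqr_outliers(readings)
```

A flagged value is a candidate for review, not proof of an error, which is one reason human validation remains part of the workflow.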

However, it's important to note that ML automation is unlikely to completely replace human oversight in the near future. Human expertise will still be needed to guide the automation, validate the results, and handle ambiguous or novel data quality challenges. The future likely involves a collaborative approach, where ML tools augment human capabilities, leading to more intelligent and efficient data wrangling. You can explore advancements in this area by looking into Artificial Intelligence courses.

Evolution of Data Storage Technologies

The evolution of data storage technologies is another key trend influencing data wrangling practices. The rise of Big Data has pushed storage beyond traditional relational databases and on-premises data warehouses toward data lakes, NoSQL databases, and cloud data warehouses. Data lakes, for instance, can store vast amounts of raw data in its native format, including structured, semi-structured, and unstructured data. This provides flexibility but also means that much of the data wrangling may need to happen at read time (an approach often called schema-on-read) or as part of specific analytical queries, rather than upfront before data is loaded.
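
The wrangle-at-read-time pattern described above can be sketched as follows. Raw lines are stored untouched, and types are coerced only when the data is read, with malformed records set aside instead of silently dropped. The record layout (`id`, `temp_c`, `site`) and the sample lines are hypothetical.

```python
import json

# Hypothetical raw records as they might sit in a data lake.
RAW_LINES = [
    '{"id": 1, "temp_c": "21.5", "site": "A"}',
    '{"id": 2, "temp_c": null}',
    'not valid json',
]

def read_with_schema(lines):
    """Parse raw lines at read time, coercing types and keeping rejects."""
    good, rejected = [], []
    for line in lines:
        try:
            rec = json.loads(line)
            temp = rec.get("temp_c")
            good.append({
                "id": int(rec["id"]),
                "temp_c": float(temp) if temp is not None else None,
                "site": rec.get("site", "unknown"),
            })
        except (ValueError, KeyError, TypeError, AttributeError):
            # Keep the original line so the failure can be investigated later.
            rejected.append(line)
    return good, rejected

good, rejected = read_with_schema(RAW_LINES)
```

Because the raw lines are never modified, a different analysis can apply a different schema to the same stored data.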

Cloud-based data storage solutions (e.g., Amazon S3, Azure Blob Storage, Google Cloud Storage) have become increasingly popular due to their scalability, cost-effectiveness, and integration with cloud-based analytics and machine learning services. Data wrangling workflows are adapting to leverage these cloud platforms, with tools and techniques being developed to efficiently process data stored in the cloud. The concept of the "data lakehouse," which combines the benefits of data lakes and data warehouses, is also gaining traction, offering a more unified platform for data storage, wrangling, and analytics.

These evolving storage paradigms require data wranglers to be proficient with a broader range of tools and technologies. They need to understand how to access and process data from various storage systems, how to optimize wrangling pipelines for different data architectures, and how to manage data governance and security in these distributed environments. Familiarity with Cloud Computing concepts and platforms is becoming increasingly essential.

Cross-Industry Applications

The principles and techniques of data wrangling are not limited to a specific industry but are finding increasingly diverse applications across various sectors. As more industries recognize the value of data-driven decision-making, the need for effective data preparation becomes universal. We are seeing innovative uses of data wrangling in fields beyond the traditional tech and finance strongholds.

For example, in agriculture, data wrangling is used to process data from sensors, drones, and weather stations to optimize crop yields and manage resources more efficiently. In urban planning, wrangled data from traffic patterns, public transit, and demographic shifts helps in designing smarter cities. The sports industry uses data wrangling to prepare player performance data and fan engagement metrics for analysis. Even in arts and humanities, researchers are applying data wrangling techniques to analyze large textual corpora or digitized historical archives.

This cross-industry adoption means that data wrangling skills are becoming more transferable and valuable across a wider range of career opportunities. It also highlights the importance of domain knowledge in conjunction with technical wrangling skills. Understanding the specific context and nuances of the data in a particular industry can greatly enhance the effectiveness of the wrangling process and the relevance of the insights derived. This trend underscores the broad applicability and enduring importance of mastering data wrangling.

Predictions for Next-Decade Developments

Looking ahead, the next decade is likely to see further significant advancements in data wrangling. Automation, driven by AI and ML, will almost certainly become more sophisticated and pervasive, handling increasingly complex wrangling tasks with less human intervention. We may see the rise of "augmented data wrangling," where AI acts as an intelligent assistant to data professionals, proactively identifying issues and suggesting optimal solutions.

The integration of data wrangling tools with broader data management and analytics platforms will likely deepen, creating more seamless end-to-end data pipelines. There will be a continued emphasis on real-time data wrangling capabilities to support instantaneous decision-making in areas like fraud detection, personalized recommendations, and IoT analytics. The ethical dimensions of data wrangling, particularly around bias detection and mitigation, and ensuring data privacy, will also receive greater attention, with tools and best practices evolving to address these challenges more effectively.

Furthermore, as data literacy becomes more widespread, we may see the development of even more user-friendly, "no-code" or "low-code" data wrangling tools that empower a broader range of business users to prepare their own data for analysis, while still maintaining governance and quality standards. The ability to wrangle increasingly diverse data types, including complex unstructured data like video and audio, will also be a key area of development. Continuous learning and adaptability will be essential for data professionals to keep pace with these exciting future developments.

Frequently Asked Questions (Career Focus)

This section addresses common questions that individuals exploring a career in or related to data wrangling often have. The answers aim to provide concise and actionable information to help guide your career planning.

What entry-level jobs require data wrangling skills?

Many entry-level positions in the data field require foundational data wrangling skills. Roles such as Data Analyst, Junior Data Scientist, Business Intelligence Analyst, Data Technician, and even some marketing or research assistant roles will involve tasks like cleaning datasets, transforming data for reporting, and ensuring data quality. For example, a Junior Data Analyst might be responsible for gathering data from various sources, performing initial cleaning to remove errors and inconsistencies, and structuring it so that it is ready for analysis by senior team members. Note that the title "Data Wrangler" also exists in the media industry, where it refers to a more specialized role responsible for backing up and organizing raw footage from shoots.

Even if "data wrangling" isn't explicitly in the job title, the responsibilities often include many wrangling tasks. Employers look for candidates who can demonstrate an understanding of data cleaning principles, familiarity with tools like Excel or SQL, and ideally some exposure to programming languages like Python or R. These entry-level jobs provide excellent opportunities to build practical experience and further develop your wrangling expertise.

The key is to look for roles that involve working directly with raw data and preparing it for analysis or other downstream uses. These experiences form the bedrock of a career in data, as high-quality data is essential for any meaningful analysis or data-driven decision-making.


How to transition from another field into data wrangling?

Transitioning into data wrangling from another field is increasingly common and achievable with a focused approach. First, identify any existing skills that are transferable. For example, if your current role involves attention to detail, problem-solving, or working with spreadsheets, these are valuable assets. Next, focus on acquiring the core technical skills for data wrangling. This typically involves learning SQL for database interaction, and a programming language like Python (with libraries like Pandas) or R for data manipulation.

Online courses, bootcamps, and self-study are all viable paths for gaining these skills. Create a structured learning plan and dedicate consistent time to it. Crucially, work on hands-on projects using real or publicly available datasets. This will not only solidify your learning but also provide tangible evidence of your abilities for your portfolio. Document your projects thoroughly, explaining the challenges and your solutions. Networking is also important; connect with people in the data field, attend webinars or meetups, and consider seeking mentorship.

When applying for jobs, tailor your resume to highlight your new data wrangling skills and projects. Be prepared to explain how your experiences from your previous field can bring a unique perspective to a data role. It might be necessary to start with an entry-level position to gain practical experience, but with dedication and continuous learning, a successful transition is well within reach. Many find that the problem-solving and analytical thinking developed in other professions are highly applicable to the challenges of data wrangling.


Which certifications are most recognized by employers?

While practical skills and a strong portfolio are often weighed more heavily than certifications alone, certain credentials can help validate your knowledge and make your resume stand out, especially when transitioning fields or starting your career. Certifications from major technology vendors are often well-recognized because they demonstrate proficiency in widely used tools and platforms. Examples include Microsoft's certifications for data analysis (e.g., Power BI Data Analyst Associate) or Azure data engineering, AWS certifications related to data analytics or big data, and Google Cloud certifications for data engineers or data analysts.

Beyond vendor-specific credentials, certifications offered by reputable industry organizations or through well-regarded online learning platforms can also carry weight. Look for certifications that involve hands-on assessments or project work, as these tend to be better indicators of practical ability. Certifications focused on foundational data science or data analytics principles, or those that cover specific programming languages like Python or R in the context of data manipulation, can also be beneficial.

It's important to research which certifications are most relevant to the specific roles and industries you are targeting. Reading job descriptions for positions you're interested in can give you clues about which tools and, by extension, which certifications might be valued. Remember, a certification is a supplement to, not a replacement for, demonstrable skills and experience. The process of studying for a certification can be a valuable learning experience in itself. OpenCourser's Learner's Guide can offer insights on how to best leverage certifications in your career journey.

Is programming mandatory for data wrangling roles?

Some basic data wrangling can be done without writing code, using GUI-based tools like Microsoft Excel (especially with Power Query) or specialized data preparation software. However, programming skills are becoming increasingly essential for most data wrangling roles, especially as datasets become larger and more complex. Programming languages like Python (with its Pandas library) and R (with the tidyverse) offer far greater flexibility, power, and automation capabilities for sophisticated data manipulation, cleaning, and transformation tasks than most GUI tools.

Many job descriptions for Data Analysts, Data Engineers, and Data Scientists will list proficiency in Python or R, along with SQL, as required or highly desired skills. Even if a role primarily uses a no-code/low-code platform, understanding programming concepts can help you better grasp what the tool is doing under the hood and troubleshoot issues more effectively. For career advancement and tackling more challenging data wrangling problems, programming proficiency is generally considered a key differentiator.

If you are new to programming, don't be daunted. Many resources are available to learn Python or R, often geared specifically towards data analysis. Starting with the basics and gradually building up your skills through practice and projects is a manageable approach. While some entry-level tasks might be achievable without coding, investing in learning programming will significantly broaden your capabilities and career opportunities in the field of data wrangling.


How to demonstrate data wrangling skills in interviews?

Demonstrating data wrangling skills in an interview involves a combination of articulating your understanding of concepts, showcasing your practical experience through projects, and potentially solving technical challenges. Be prepared to discuss your data wrangling process. Interviewers may ask you to walk them through how you would approach cleaning a messy dataset, handling missing values, or integrating data from different sources. Explain your thought process, the tools you would use (e.g., Python/Pandas, SQL), and the rationale behind your decisions.
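As an illustration of the kind of walkthrough described above, here is a minimal Pandas sketch that cleans a small messy table, handles a missing value, and integrates a second source. The datasets, column names, and the choice of median imputation are all hypothetical, chosen only to make the steps concrete; in a real interview the right choices depend on the data and the question being asked.

```python
import pandas as pd

# Hypothetical raw data: stray whitespace, inconsistent casing,
# a duplicate row, amounts stored as text, and one missing amount.
orders = pd.DataFrame({
    "customer": [" Alice", "bob ", "bob ", "Carol"],
    "amount": ["10.5", "20", "20", None],
})

# Hypothetical second source to integrate with the orders.
regions = pd.DataFrame({
    "customer": ["alice", "bob", "dave"],
    "region": ["East", "West", "North"],
})


def clean_orders(df: pd.DataFrame) -> pd.DataFrame:
    """Normalize keys, fix types, drop duplicates, and impute missing amounts."""
    out = df.copy()
    # Normalize the join key so " Alice" and "alice" match.
    out["customer"] = out["customer"].str.strip().str.lower()
    # Convert text to numbers; unparseable or missing values become NaN.
    out["amount"] = pd.to_numeric(out["amount"], errors="coerce")
    # Remove exact duplicate rows.
    out = out.drop_duplicates()
    # Record which values were imputed before filling them in.
    out["amount_missing"] = out["amount"].isna()
    # Assumption: median imputation is acceptable for this column.
    out["amount"] = out["amount"].fillna(out["amount"].median())
    return out.reset_index(drop=True)


cleaned = clean_orders(orders)

# A left join keeps every order; indicator=True adds a "_merge" column
# showing which rows found no match in the second source.
merged = cleaned.merge(regions, on="customer", how="left", indicator=True)
print(merged)
```

When talking through code like this, it helps to explain why each step is there: for instance, that the flag column preserves the fact that a value was imputed, and that the merge indicator makes unmatched records visible rather than silently dropping them.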

Your portfolio will be invaluable. Be ready to talk in detail about specific projects where you performed significant data wrangling. Describe the initial state of the data, the challenges you faced, the wrangling techniques you applied, and the impact your work had on the subsequent analysis or outcome. Use specific examples and, if possible, quantify the results of your efforts (e.g., "reduced data errors by X%," "enabled the analysis of Y additional records").

Technical interviews often include coding challenges or case studies related to data manipulation. You might be given a sample dataset and asked to write Python or SQL code to clean, transform, or analyze it. Practice these types of problems beforehand. Focus not just on getting the right answer, but also on writing clean, efficient, and well-documented code. Clearly explain your approach as you work through the problem. Even if you don't arrive at the perfect solution, demonstrating strong problem-solving skills and a logical approach to data wrangling tasks will be viewed favorably.
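For a sense of what a SQL-flavored cleaning exercise can look like, the sketch below uses Python's built-in sqlite3 module with an in-memory database. The table name, columns, and sample rows are invented for illustration, and the rule of keeping the earliest signup per email is just one plausible deduplication policy.

```python
import sqlite3

# In-memory database with a hypothetical table of raw signups.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw_signups (email TEXT, signup_date TEXT)")
conn.executemany(
    "INSERT INTO raw_signups VALUES (?, ?)",
    [
        (" Ann@Example.com ", "2024-01-05"),  # whitespace and mixed case
        ("ann@example.com", "2024-01-07"),    # duplicate of the row above
        ("", "2024-02-01"),                   # empty email
        (None, "2024-02-03"),                 # missing email
        ("bo@example.com", "2024-03-10"),
    ],
)

# Normalize emails, discard missing or empty ones, and keep one row
# per email with its earliest signup date.
CLEAN_SQL = """
SELECT LOWER(TRIM(email)) AS clean_email,
       MIN(signup_date)   AS first_signup
FROM raw_signups
WHERE email IS NOT NULL
  AND TRIM(email) <> ''
GROUP BY LOWER(TRIM(email))
ORDER BY first_signup
"""

rows = conn.execute(CLEAN_SQL).fetchall()
for clean_email, first_signup in rows:
    print(clean_email, first_signup)

conn.close()
```

Practicing small, self-contained problems like this one makes it easier to narrate your reasoning during a live exercise, such as why empty strings are treated the same as missing values here.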

What industries offer the highest salaries for this expertise?

Salaries for roles involving data wrangling expertise can vary significantly based on several factors, including industry, geographic location, company size, years of experience, and the specific responsibilities of the role. However, certain industries tend to offer higher compensation due to the high demand for data skills and the significant value that data-driven insights bring to their operations. The technology sector, including software companies, e-commerce giants, and social media platforms, is often a top payer for data professionals, including those specializing in data wrangling, data engineering, and data science.

Finance and insurance are also industries where data expertise commands high salaries. These sectors deal with vast amounts of complex financial data, and accurate data wrangling is critical for risk management, fraud detection, algorithmic trading, and regulatory compliance. Consulting firms that provide data analytics and data strategy services to various clients also tend to offer competitive compensation packages. Furthermore, the healthcare and pharmaceutical industries are increasingly reliant on data for research, clinical trials, and personalized medicine, leading to growing demand and strong salaries for data professionals.

It's worth noting that while some industries may offer higher average salaries, opportunities for impactful work and competitive compensation exist across a wide range of sectors. Focusing on developing strong, in-demand skills and gaining relevant experience will generally lead to better earning potential regardless of the specific industry. Resources like the Occupational Employment and Wage Statistics (OEWS) program from the U.S. Bureau of Labor Statistics (BLS) can provide more detailed salary data for various occupations and locations.

Useful Links and Resources

To further your exploration of data wrangling and related fields, here are some helpful resources:

- OpenCourser - Data Science Category: https://opencourser.com/browse/data-science - Explore a wide variety of courses on data science, many of which cover data wrangling extensively.

- OpenCourser - Learner's Guide: https://opencourser.com/learners-guide - Find articles and tips on how to make the most of online learning, choose courses, and advance your career.

- OpenCourser - Official Blog (Notes): https://opencourser.com/notes - Stay updated with insights on online learning, data careers, and more.

- U.S. Bureau of Labor Statistics - Occupational Outlook Handbook: https://www.bls.gov/ooh/ - A valuable resource for information on various careers, including job duties, education requirements, and salary expectations in the United States.

The journey into data wrangling is one of continuous learning and problem-solving. By understanding its core principles, mastering its techniques and tools, and staying aware of its evolving landscape, you can build a rewarding and impactful career. Whether you are just starting or looking to deepen your expertise, the resources available through OpenCourser and beyond can support you every step of the way. Embrace the challenge of taming complex data, and you will unlock the power to derive meaningful insights and drive innovation.